U-Net-Embedded Gabor Kernel and Coaxial Correction Methods to Dorsal Hand Vein Image Projection System

Abstract

1. Introduction

- (1) Prior knowledge in the form of Gabor kernels is integrated into U-Net. Embedding Gabor kernels in the network enhances the feature extraction capability of its shallow layers, which improves image segmentation accuracy and lays the foundation for precise projection.

- (2) The proposed U-Net achieves a lightweight design by replacing conventional convolutions with inverted residual blocks. This substitution reduces the parameter count while mitigating the loss of semantic information caused by channel reduction, which makes the network suitable for real-time projection.

- (3) For the projection stage, this paper proposes a method that combines coaxial optical-path correction with homography matrix calibration. With this approach, the device needs to be calibrated only once before use, which improves the accuracy of the vein projection system and simplifies projection correction.

- (4) To validate the improved algorithms, we built a dorsal hand vein image database with manually annotated labels and constructed a dorsal hand vein projection system. The proposed vein segmentation and projection methods were trained and tested with this dataset. The segmentation accuracy, projection accuracy, and response time all meet the requirements of the application.

2. Related Research

3. Materials and Methods

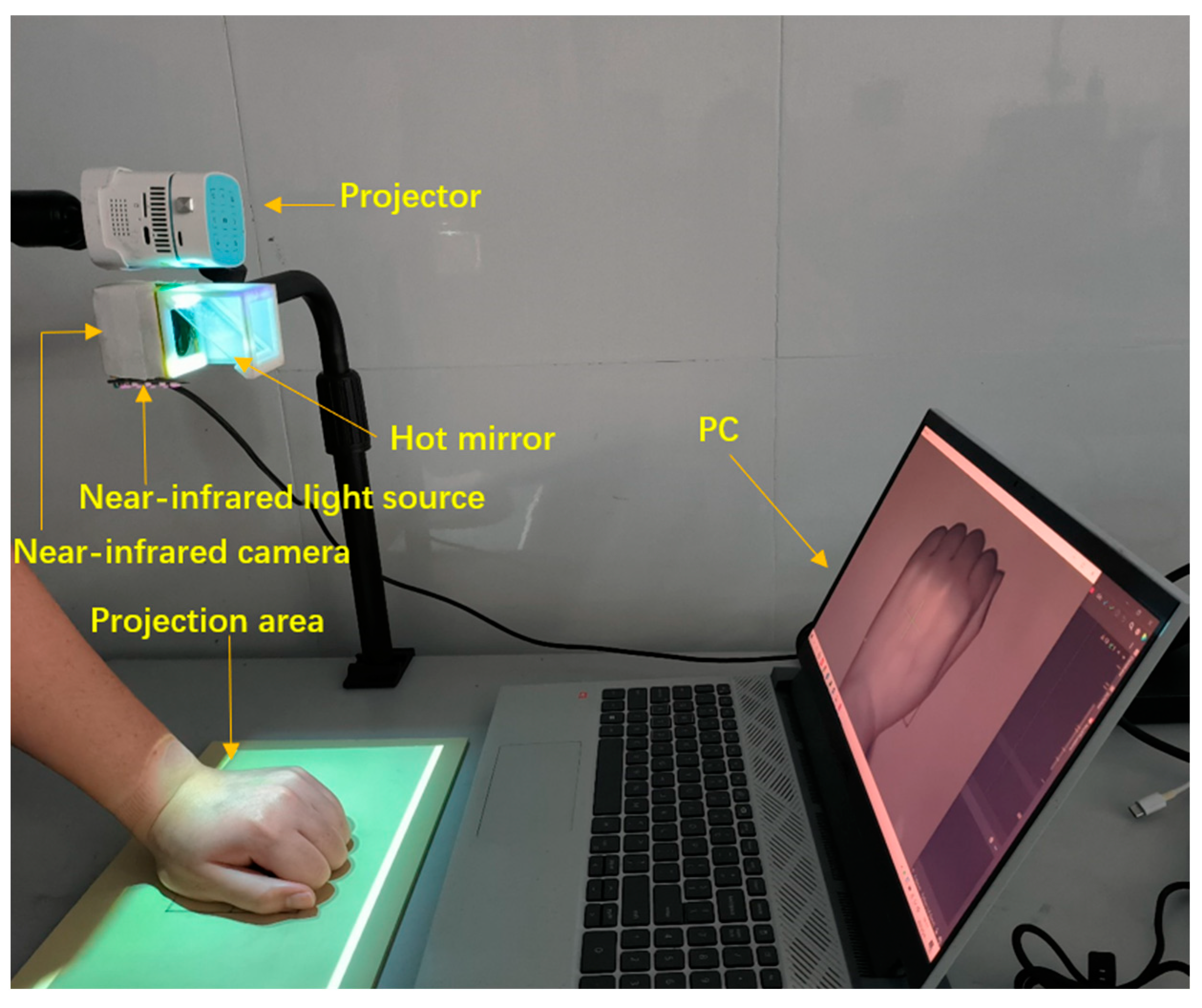

3.1. Image Acquisition

3.2. Lightweight U-Net Model Design with Embedded Gabor Kernel

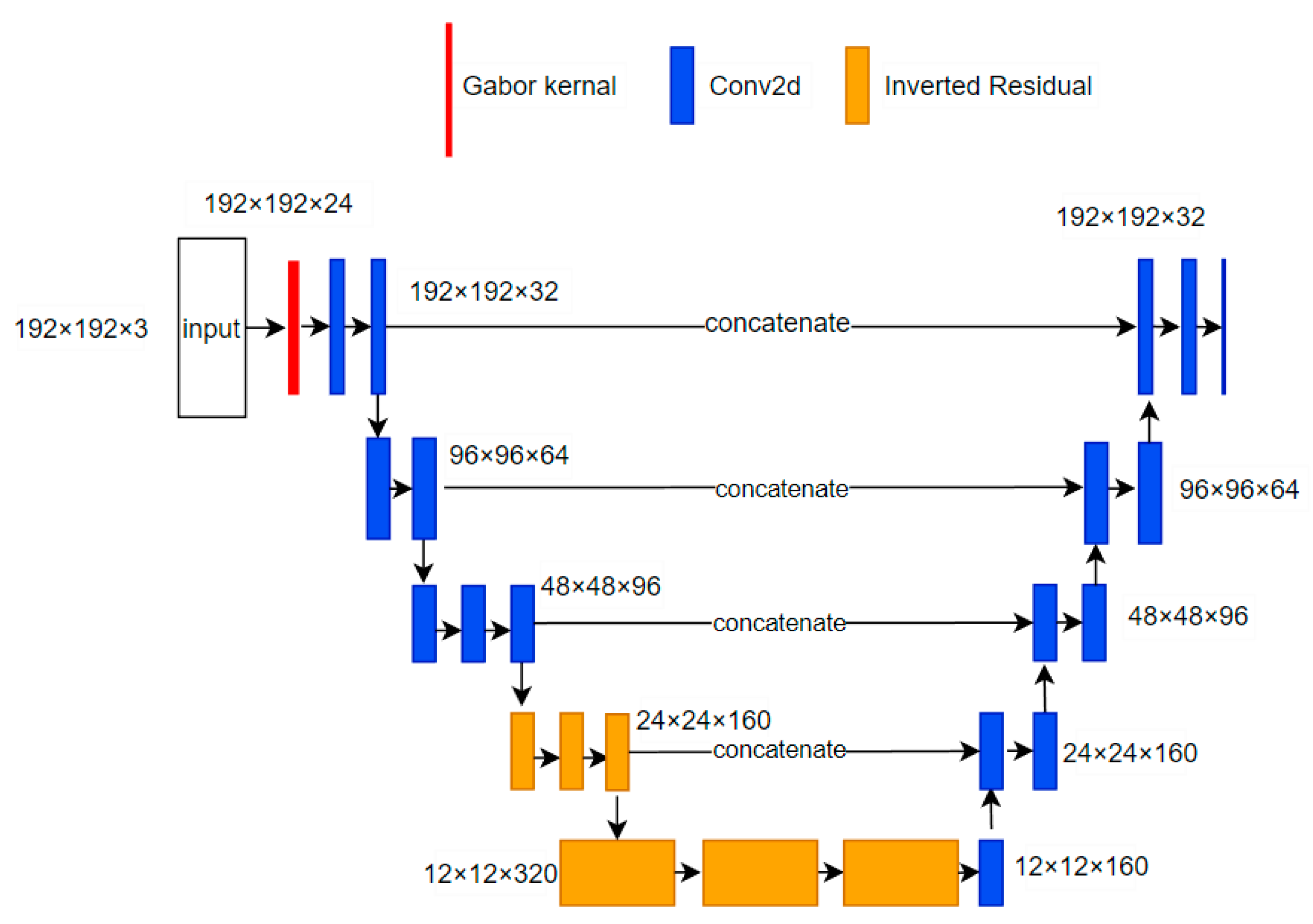

3.2.1. Improved U-Net Architecture

3.2.2. Embedding of Gabor Prior Knowledge
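
Contribution (1) in the Introduction embeds Gabor prior knowledge in the shallow layers of U-Net, but the kernel parameters are not given here. The following is a minimal NumPy sketch, assuming a bank of fixed, real-valued Gabor kernels at evenly spaced orientations; the function names, the kernel size, σ, λ, γ and the number of orientations are all hypothetical choices, and the parameter names only follow the convention of OpenCV's `getGaborKernel`. The optional mean subtraction (`remove_dc`) is one common way to remove a kernel's DC component, in the spirit of the with/without DC comparison in Section 4.1.1; it is not necessarily how the authors did it.

```python
import numpy as np


def gabor_kernel(ksize, sigma, theta, lambd, gamma=0.5, psi=0.0, remove_dc=True):
    """Real part of a 2D Gabor kernel with shape (ksize, ksize).

    ksize: odd kernel size; sigma: std of the Gaussian envelope;
    theta: orientation in radians; lambd: wavelength of the cosine carrier;
    gamma: spatial aspect ratio; psi: phase offset.
    """
    half = ksize // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate the sampling grid to the filter orientation.
    x_t = x * np.cos(theta) + y * np.sin(theta)
    y_t = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_t ** 2 + (gamma * y_t) ** 2) / (2.0 * sigma ** 2))
    kernel = envelope * np.cos(2.0 * np.pi * x_t / lambd + psi)
    if remove_dc:
        # Zero-mean kernel: uniform (flat) image regions produce no response.
        kernel = kernel - kernel.mean()
    return kernel


def gabor_bank(ksize=7, n_orientations=8, sigma=2.0, lambd=4.0):
    """Kernels at evenly spaced orientations in [0, pi); shape (n, ksize, ksize)."""
    thetas = np.arange(n_orientations) * np.pi / n_orientations
    return np.stack([gabor_kernel(ksize, sigma, t, lambd) for t in thetas])


def filter_valid(image, kernel):
    """Cross-correlate a 2D image with a kernel over the 'valid' region,
    i.e. the operation a CNN convolution layer performs (NumPy >= 1.20)."""
    k = kernel.shape[0]
    windows = np.lib.stride_tricks.sliding_window_view(image, (k, k))
    return np.einsum("ijkl,kl->ij", windows, kernel)


bank = gabor_bank()
flat = np.full((32, 32), 0.7)
print(bank.shape)                                         # (8, 7, 7)
print(np.abs(filter_valid(flat, bank[0])).max() < 1e-12)  # True: no DC response
```

To use such a bank as the fixed or initial weights of a first convolution layer (for example a PyTorch `Conv2d`, whose weight has shape `(out_channels, in_channels, k, k)`), the array would be reshaped to `bank[:, None]` for a single-channel near-infrared input. Whether the kernels stay frozen or are fine-tuned is a design choice this sketch does not settle.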

3.2.3. Lightweight Design of U-Net
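
Contribution (2) replaces conventional convolutions with inverted residual blocks. The channel widths and expansion factors of the proposed U-Net are not stated here, so the sketch below only counts weights for hypothetical settings. It assumes the MobileNetV2 layout (1×1 expansion, 3×3 depthwise convolution, 1×1 linear projection, with the expansion layer omitted when t = 1) and ignores biases and batch-normalization parameters; the function names are illustrative.

```python
def double_conv_params(c_in, c_out, k=3):
    """Weights in a standard U-Net stage: two k x k convolutions (no bias)."""
    return k * k * c_in * c_out + k * k * c_out * c_out


def inverted_residual_params(c_in, c_out, t=6, k=3):
    """Weights in a MobileNetV2-style inverted residual block (no bias, no BN).

    t is the expansion factor; with t == 1 the 1x1 expansion layer is skipped.
    """
    hidden = c_in * t
    expand = c_in * hidden if t != 1 else 0  # 1x1 pointwise expansion
    depthwise = k * k * hidden               # one k x k filter per channel
    project = hidden * c_out                 # 1x1 linear projection (no activation)
    return expand + depthwise + project


for c in (64, 128, 256):
    std = double_conv_params(c, c)
    ir = inverted_residual_params(c, c, t=6)
    print(f"{c:>4} ch: double conv {std:>9,}  inverted residual {ir:>9,}  ratio {ir / std:.2f}")
```

With t = 6 and 64 channels, the block has 52,608 weights against 73,728 for a double 3×3 convolution stage, roughly 0.7 times as many; a smaller t or narrower channels reduce it further. In MobileNetV2 the residual shortcut is used only when the stride is 1 and the input and output channel counts are equal.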

3.3. Coaxial Alignment Correction Algorithm Based on Optical Path

3.3.1. Coaxial Alignment of Optical Path
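
Contribution (3) relies on the camera and projector sharing an optical axis so that a single calibration suffices. A standard projective-geometry argument shows why: for a plane at depth d, the camera-to-projector pixel mapping is the plane-induced homography `H = K_p (R + t·nᵀ/d) K_c⁻¹`, which no longer depends on d when the optical centres coincide (t = 0). The NumPy sketch below illustrates this under simplifying assumptions of our own, not the paper's: ideal pinhole models with identical hypothetical intrinsics, a fronto-parallel hand plane, no lens distortion, and a purely lateral baseline in the non-coaxial case.

```python
import numpy as np


def project(K, R, t, X):
    """Pinhole projection of 3D points X (N, 3), given in the camera frame, to pixels (N, 2)."""
    Xd = X @ R.T + t  # transform into the device frame
    x = Xd @ K.T
    return x[:, :2] / x[:, 2:3]


def plane_homography(K_cam, K_proj, R, t, depth):
    """Camera-to-projector homography induced by the plane Z = depth (camera frame)."""
    n = np.array([0.0, 0.0, 1.0])
    return K_proj @ (R + np.outer(t, n) / depth) @ np.linalg.inv(K_cam)


def max_shift_px(baseline_mm, calib_depth, test_depth, K):
    """Max pixel error when the homography calibrated at calib_depth is reused at test_depth."""
    R, t = np.eye(3), np.array([baseline_mm, 0.0, 0.0])
    H = plane_homography(K, K, R, t, calib_depth)
    # Sample points on the test plane inside the camera's field of view.
    xs, ys = np.meshgrid(np.linspace(-40, 40, 5), np.linspace(-40, 40, 5))
    X = np.column_stack([xs.ravel(), ys.ravel(), np.full(xs.size, float(test_depth))])
    cam_px = project(K, np.eye(3), np.zeros(3), X)
    true_px = project(K, R, t, X)
    mapped = np.column_stack([cam_px, np.ones(len(cam_px))]) @ H.T
    mapped_px = mapped[:, :2] / mapped[:, 2:3]
    return np.abs(mapped_px - true_px).max()


K = np.array([[1000.0, 0.0, 960.0], [0.0, 1000.0, 540.0], [0.0, 0.0, 1.0]])  # hypothetical intrinsics
print(max_shift_px(0.0, 400, 250, K))   # coaxial: essentially 0 px
print(max_shift_px(20.0, 400, 250, K))  # 20 mm baseline: about 30 px
```

In this idealized model the error is f·b·|1/d − 1/d₀| for focal length f (in pixels), baseline b, calibration depth d₀ and working depth d, so it vanishes only when b = 0. A rotation between the two devices does not break the argument as long as their optical centres coincide. The depths, baseline and intrinsics above are illustrative and are not meant to reproduce the measured errors in the projection table.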

3.3.2. Homography Matrix Calibration
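
The homography calibration step is not detailed here. As a hedged illustration, the sketch below estimates a 3×3 homography from four or more point correspondences (for example, calibration marks detected in the camera image paired with their known projector pixel positions) using the normalized Direct Linear Transform. It performs no outlier rejection; OpenCV's `cv2.findHomography` with its RANSAC option is the usual production choice. All point values and `H_true` are made up for the usage example.

```python
import numpy as np


def apply_homography(H, pts):
    """Map (N, 2) points through a 3x3 homography, including the perspective division."""
    pts = np.asarray(pts, dtype=float)
    p = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return p[:, :2] / p[:, 2:3]


def _normalizer(pts):
    """Similarity T that centres the points and scales their mean distance to sqrt(2)."""
    c = pts.mean(axis=0)
    s = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - c, axis=1))
    return np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]])


def estimate_homography(src, dst):
    """Normalized Direct Linear Transform: H with dst ~ H @ [x, y, 1]^T.

    src, dst: (N, 2) corresponding points, N >= 4, no three of them collinear.
    """
    src, dst = np.asarray(src, dtype=float), np.asarray(dst, dtype=float)
    T_src, T_dst = _normalizer(src), _normalizer(dst)
    rows = []
    for (x, y), (u, v) in zip(apply_homography(T_src, src), apply_homography(T_dst, dst)):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.array(rows))
    H_norm = vt[-1].reshape(3, 3)
    # Undo the normalization: dst = T_dst^-1 H_norm T_src src.
    H = np.linalg.inv(T_dst) @ H_norm @ T_src
    return H / H[2, 2]


# Hypothetical calibration data: camera pixels -> projector pixels.
H_true = np.array([[1.02, 0.03, 15.0], [-0.02, 0.98, -8.0], [1e-5, 2e-5, 1.0]])
cam_pts = np.array([[100, 100], [500, 120], [480, 400], [120, 380], [300, 250]], dtype=float)
proj_pts = apply_homography(H_true, cam_pts)
H_est = estimate_homography(cam_pts, proj_pts)
print(np.allclose(H_est, H_true, atol=1e-6))  # True for noise-free correspondences
```

Normalizing both point sets before building the linear system keeps it well conditioned when pixel coordinates are in the hundreds or thousands. With real, noisy detections, more than four well-spread correspondences should be used.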

4. Experiment and Discussion

4.1. Image Segmentation

4.1.1. Comparison with and without DC Component

4.1.2. Comparison of Image Segmentation Results

4.2. Results of Projection Correction

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Specification |
|---|---|
| Brand | LRCP Luoke |
| Model Number | V1080P_PCBA |
| Focal Length | 3.8 mm |
| Resolution | 1920 × 1080 pixels |
| Light Source Power | 2 W |
| Sensor Type | CMOS |
| Compatible Operating Systems | Windows/Linux/macOS |

| Parameter | Specification |
|---|---|
| Brand | Vmai |
| Model Number | m100smart |
| Display Technology | DLP |
| Light Source Power | 20 W |
| Dimensions | 58 × 58 × 64 mm |
| Projection Size Range | 5–300 inches |
| Brightness | 400 ANSI lumens |
| Resolution | 1920 × 1080 pixels |
| RAM | 2 GB |
| Autofocus | Yes |

| Convolution Kernel | mIoU | Precision |
|---|---|---|
| With Gabor convolution kernel | 90.07% | 95.12% |
| With Sobel convolution kernel | 82.15% | 90.64% |
| Without convolution kernel | 80.38% | 87.55% |

| Downsampling Network | mIoU | Precision | Size (M) | Processing Time (s) |
|---|---|---|---|---|
| VGG16 | 89.82% | 94.33% | 95 | 0.1963 |
| ResNet50 | 88.62% | 93.02% | 168 | 0.2147 |
| DenseNet | 90.30% | 93.66% | 111 | 0.3092 |
| MobileNetV2 | 90.16% | 95.76% | 19 | 0.1513 |
| Proposed method | 90.01% | 95.25% | 15 | 0.0910 |
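
The kernel and backbone comparisons above report mIoU and precision without defining them here. The sketch below assumes the usual definitions for binary vein segmentation: mIoU is the mean of the per-class IoU, TP/(TP + FP + FN), over background and vein, and precision is TP/(TP + FP) for the vein class. Whether the reported precision is computed for the vein class only or averaged over classes is an assumption, and the function name and its parameters are hypothetical.

```python
import numpy as np


def segmentation_metrics(pred, target, num_classes=2, positive=1):
    """mIoU over all classes and precision of the `positive` (vein) class.

    pred, target: integer label maps of the same shape (0 = background, 1 = vein).
    """
    pred = np.asarray(pred, dtype=np.int64).ravel()
    target = np.asarray(target, dtype=np.int64).ravel()
    # Confusion matrix: rows = ground truth, columns = prediction.
    cm = np.bincount(target * num_classes + pred, minlength=num_classes ** 2)
    cm = cm.reshape(num_classes, num_classes).astype(float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    denom = tp + fp + fn
    # Classes absent from both maps get NaN and are ignored by nanmean.
    iou = np.divide(tp, denom, out=np.full(num_classes, np.nan), where=denom > 0)
    pos_pred = tp[positive] + fp[positive]
    precision = tp[positive] / pos_pred if pos_pred > 0 else float("nan")
    return float(np.nanmean(iou)), float(precision)


target = np.array([[0, 0, 1, 1], [0, 0, 1, 1]])
pred = np.array([[0, 1, 1, 1], [0, 0, 0, 1]])
print(segmentation_metrics(pred, target))  # (0.6, 0.75)
```

In practice the confusion matrix would be accumulated over the whole test set before computing IoU, rather than averaging per-image scores; which of the two the reported figures use is not stated in this section.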

| L (mm) | 0 | 370 | 340 | 310 | 280 | 250 |
|---|---|---|---|---|---|---|
| X (mm) | 0 | 0.12 | 0.26 | 0.36 | 0.45 | 0.53 |
| Y (mm) | 0 | 0 | 0.07 | 0.08 | 0.11 | 0.14 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, L.; Lv, M.; Cai, J.; Guo, Z.; Li, Z. U-Net-Embedded Gabor Kernel and Coaxial Correction Methods to Dorsal Hand Vein Image Projection System. Appl. Sci. 2023, 13, 11222. https://doi.org/10.3390/app132011222


Chicago/Turabian style: Chen, Liukui, Monan Lv, Junfeng Cai, Zhongyuan Guo, and Zuojin Li. 2023. "U-Net-Embedded Gabor Kernel and Coaxial Correction Methods to Dorsal Hand Vein Image Projection System" Applied Sciences 13, no. 20: 11222. https://doi.org/10.3390/app132011222