Real-Time Optical Detection of Artificial Coating Defects in PBF-LB/P Using a Low-Cost Camera Solution and Convolutional Neural Networks

Abstract

1. Introduction

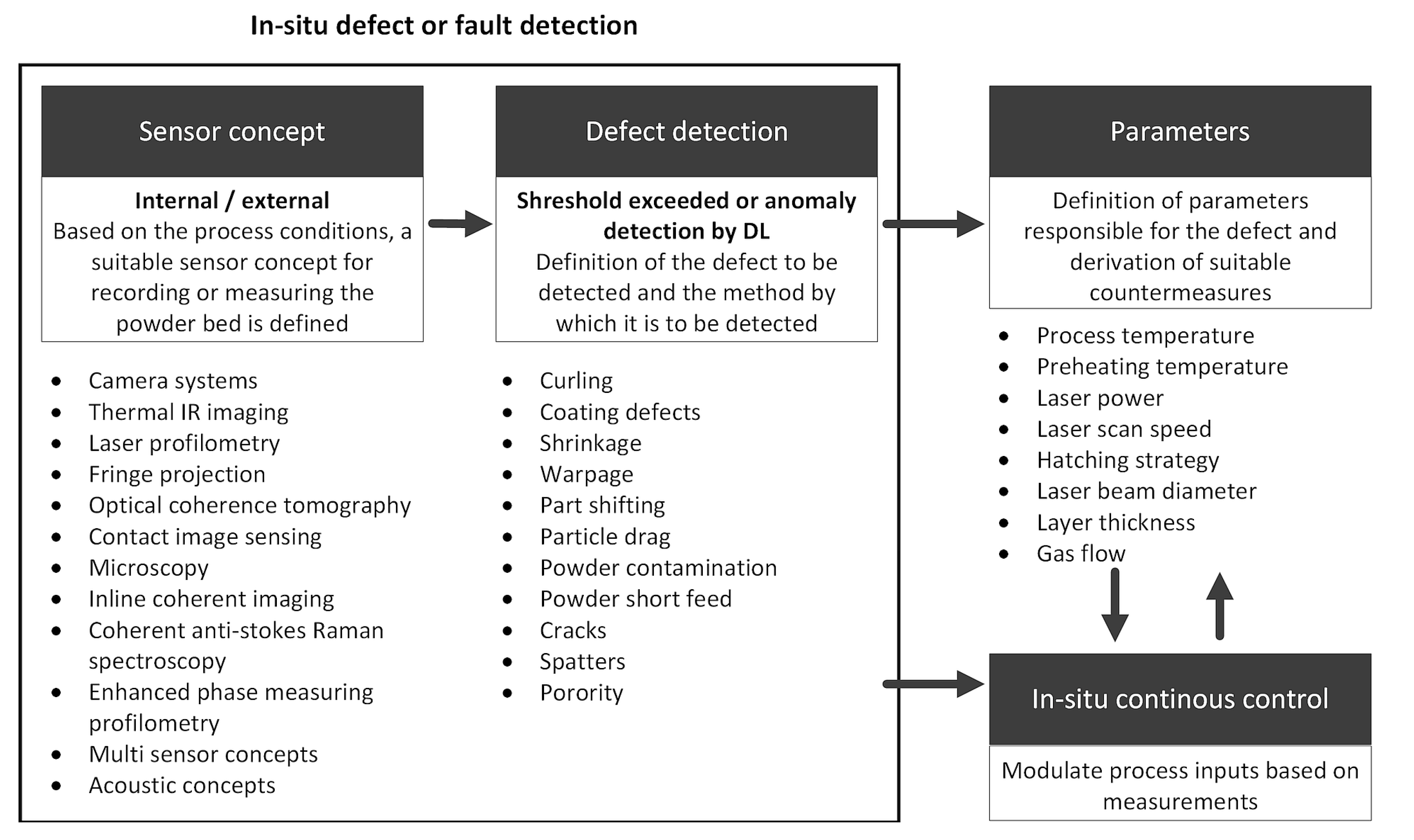

2. Background and Methodology

3. Materials and Methods

3.1. PBF-LB/P System

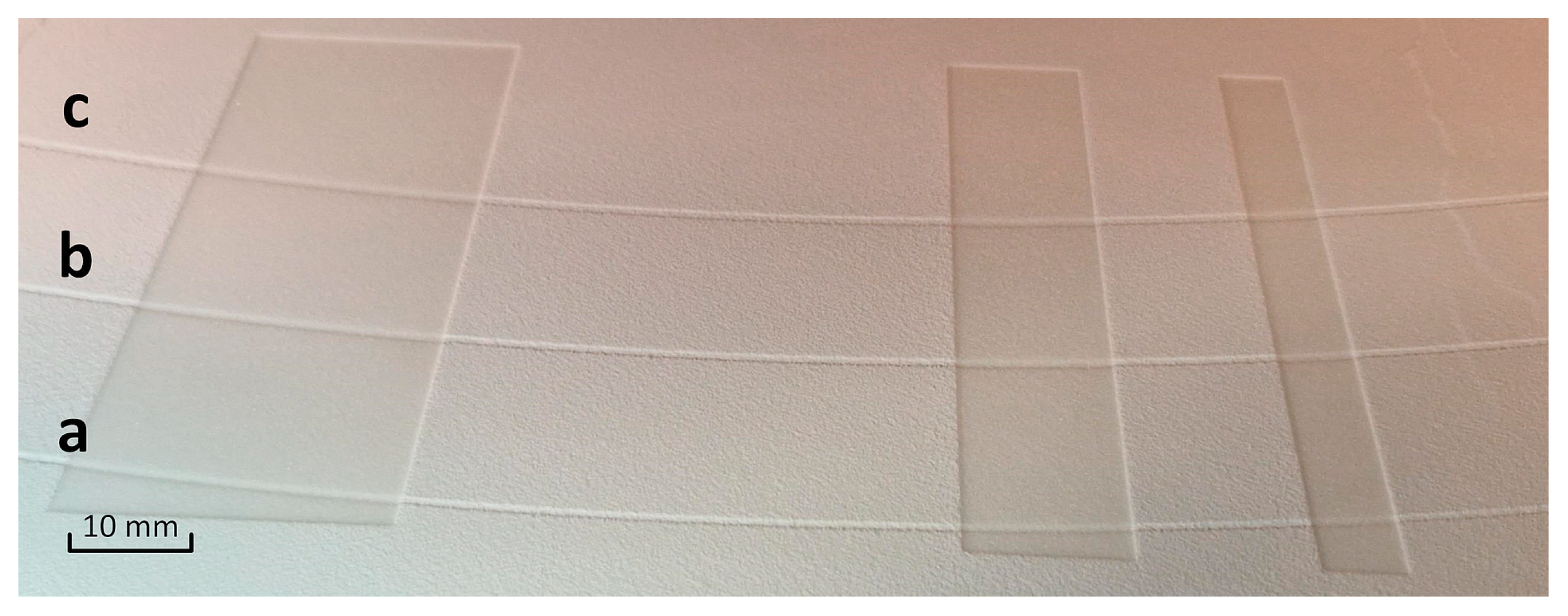

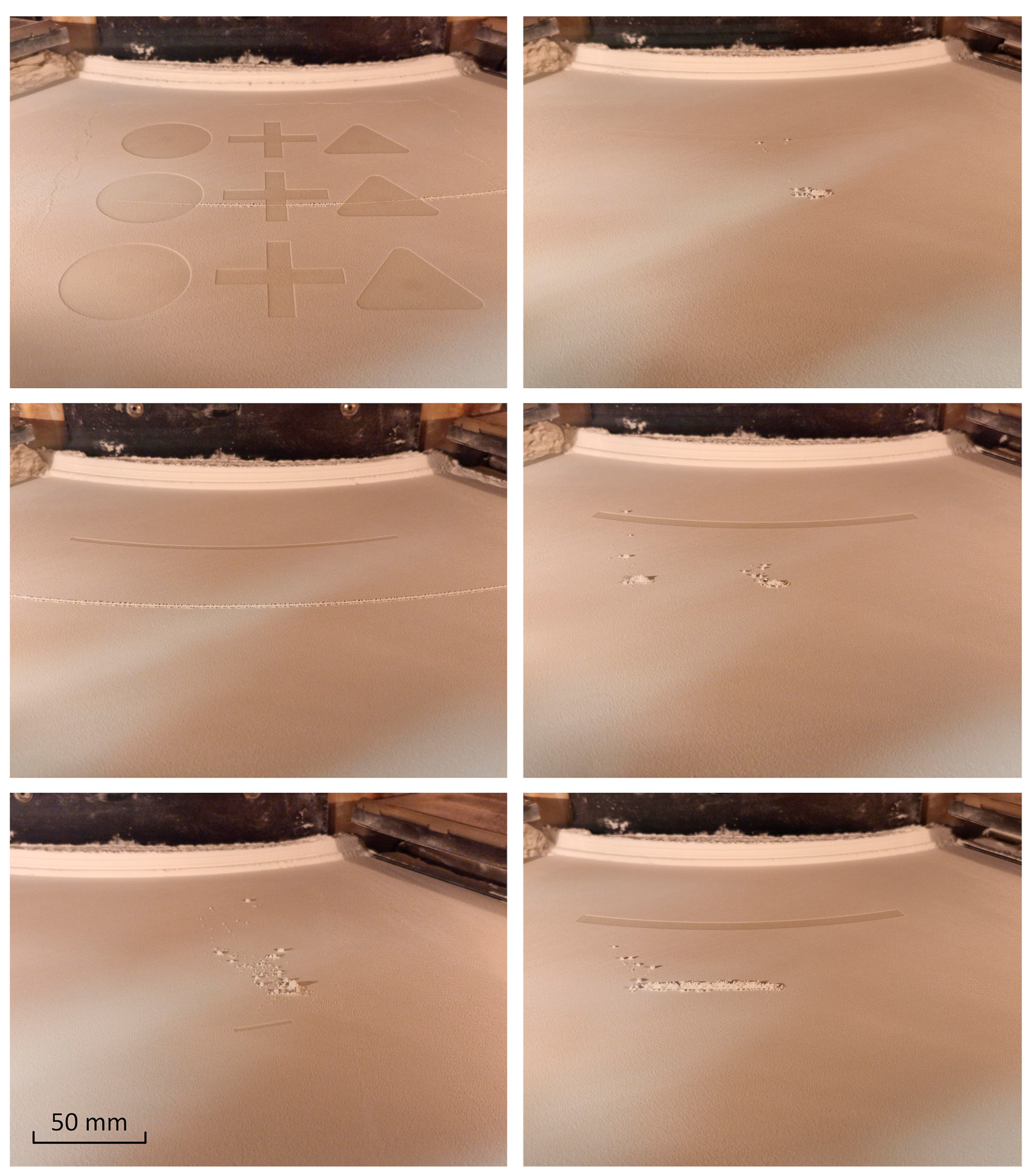

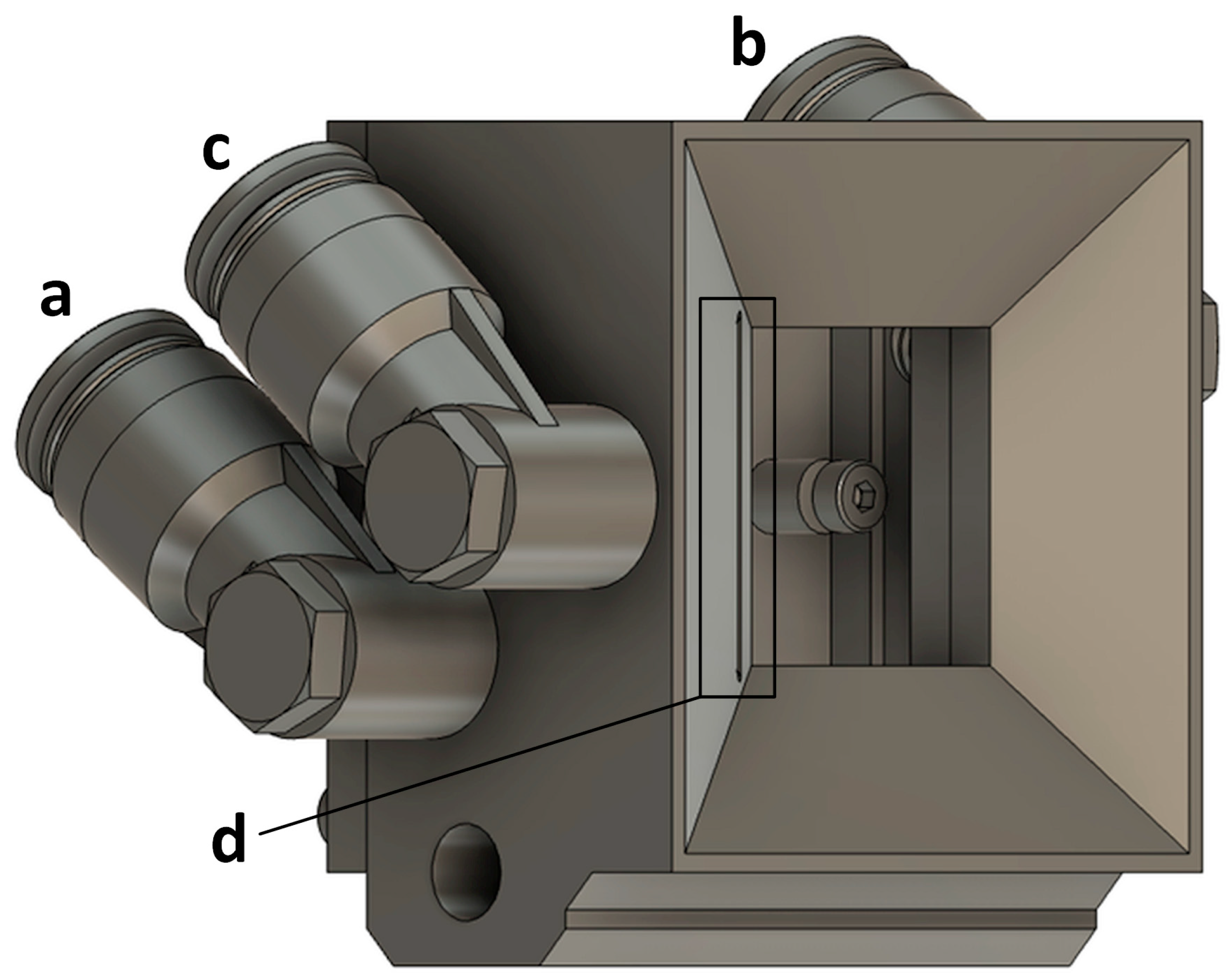

3.2. Simulation of Artificial Coating Defects as Part Shifting and Particle Drag

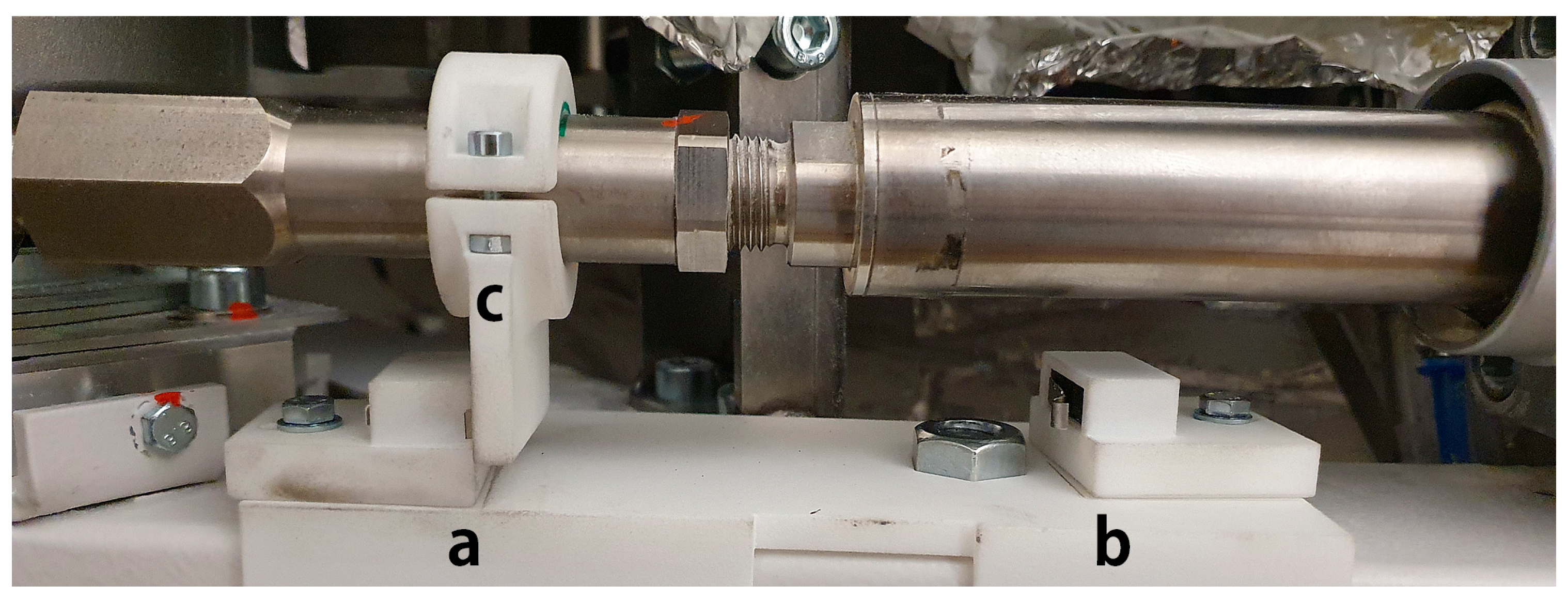

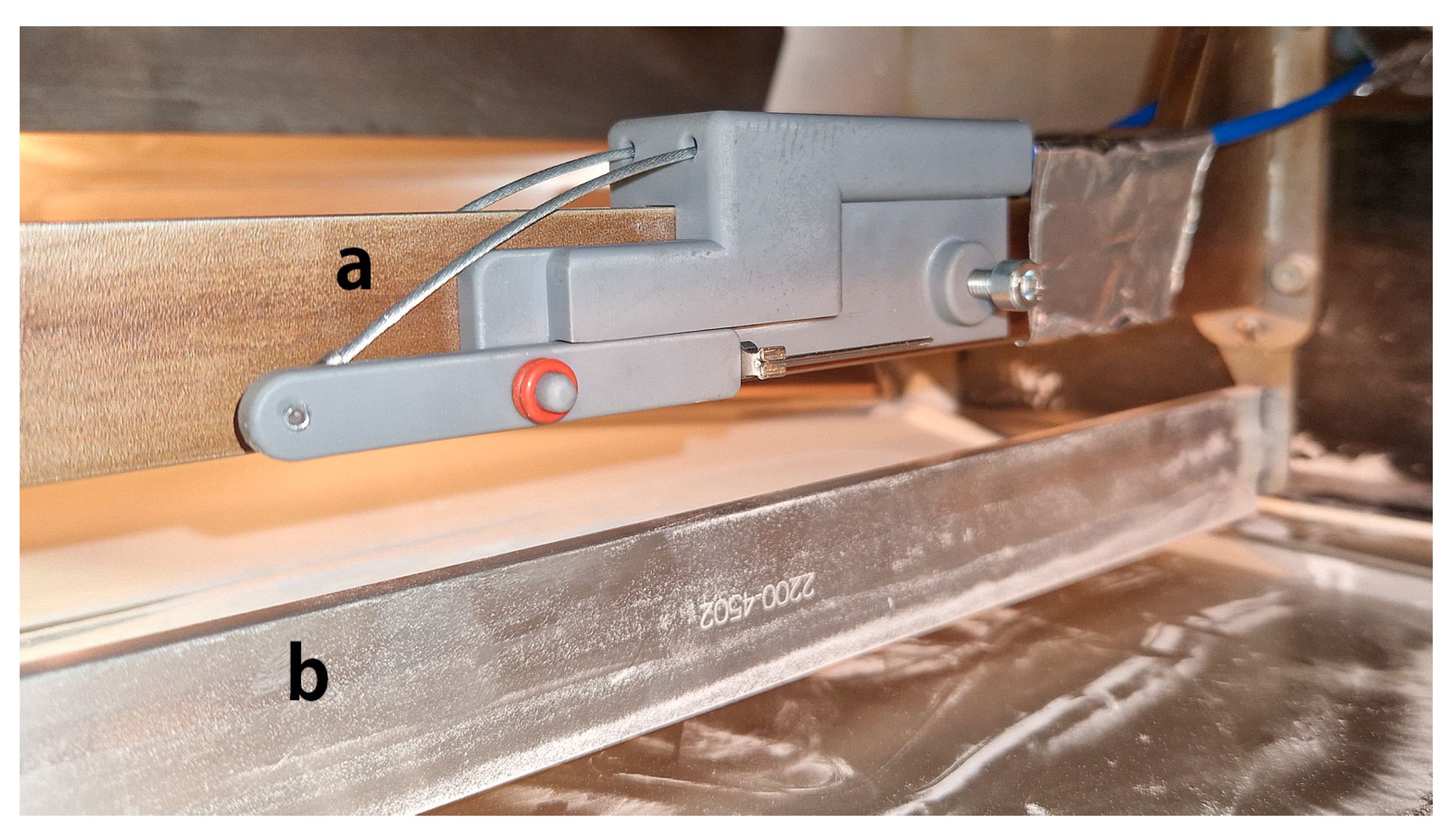

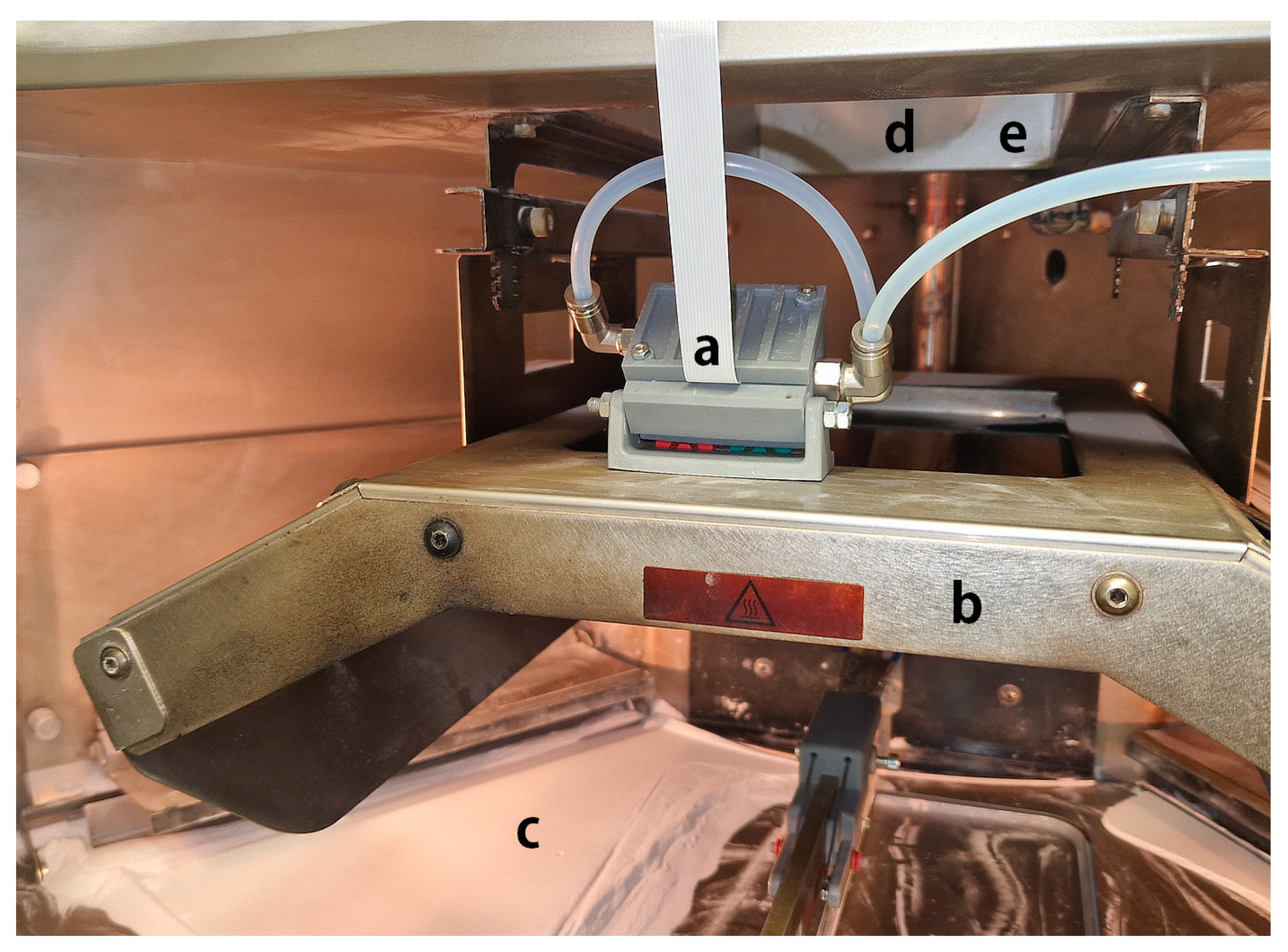

3.3. Camera Set-Up and Machine Integration

4. Design and Optimization of the CNN Architecture

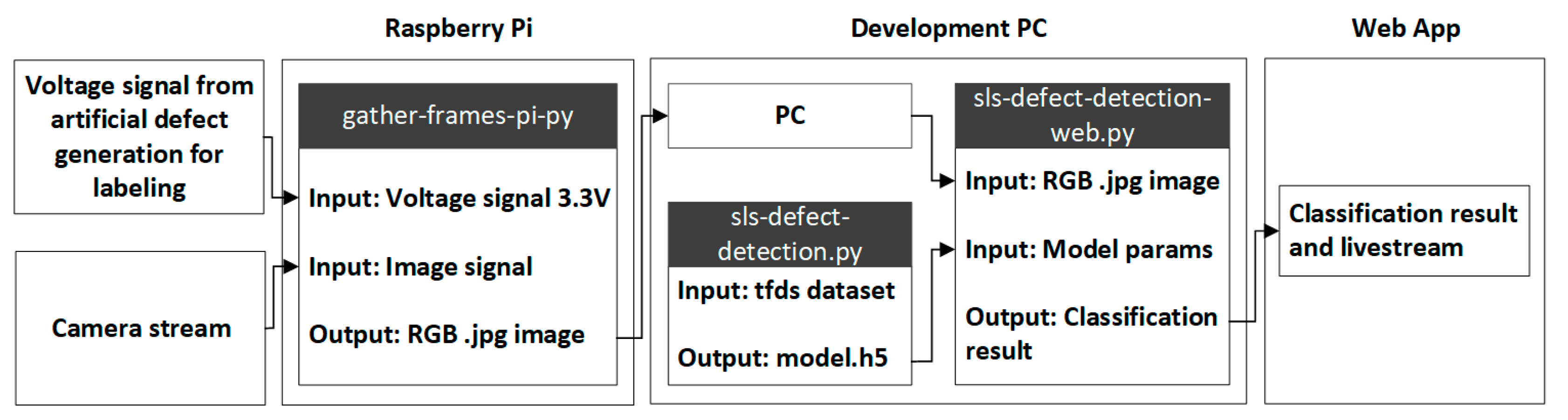

4.1. Data Quality and Interfaces

4.2. Initial Setup

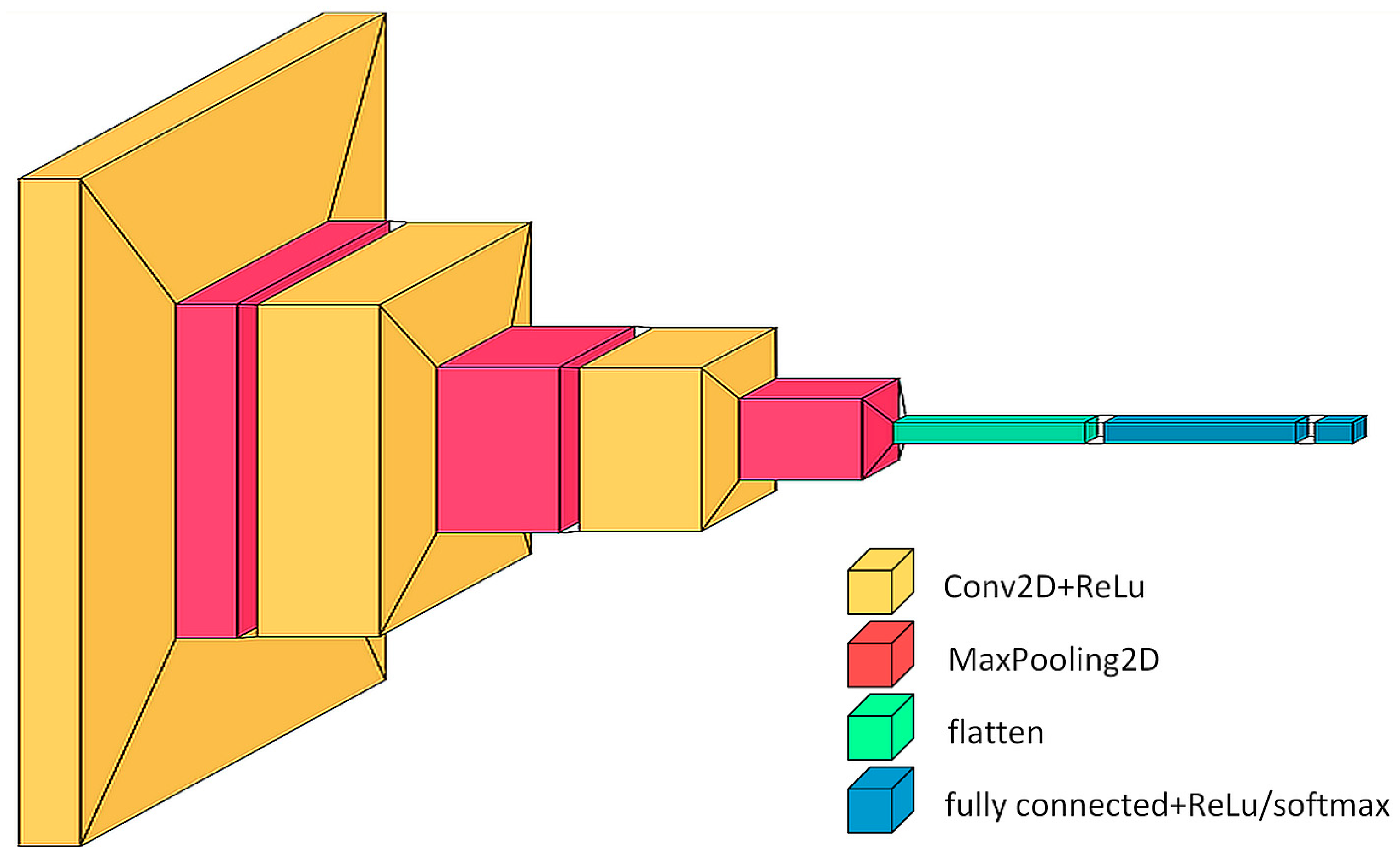

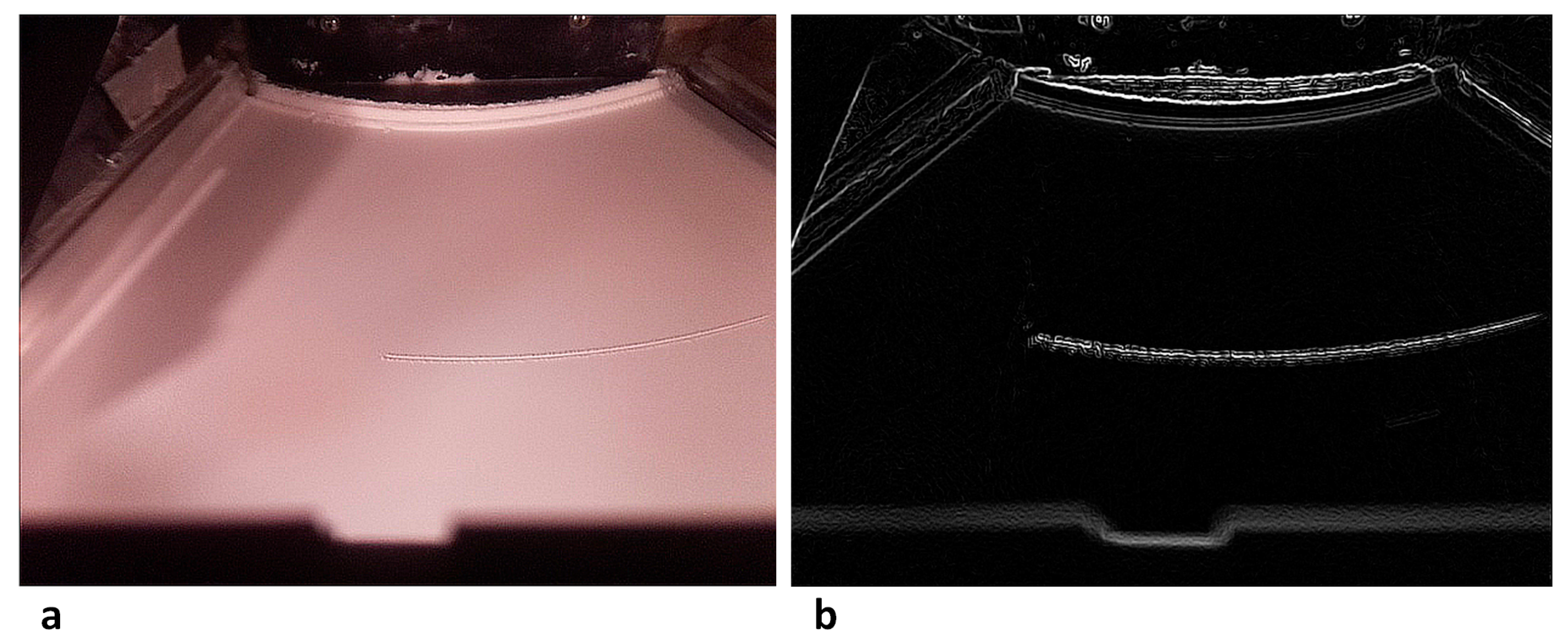

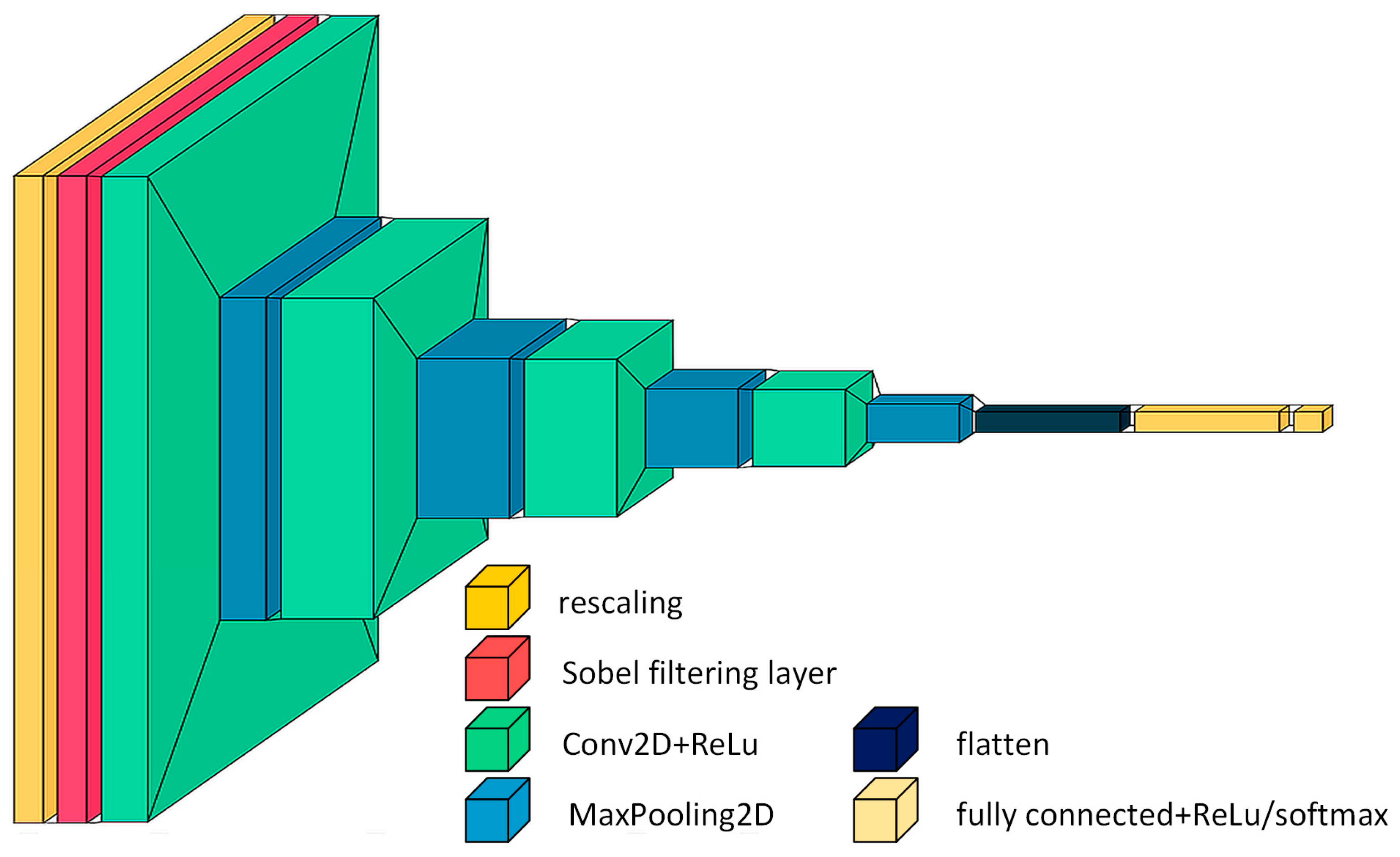

4.3. Model Architecture

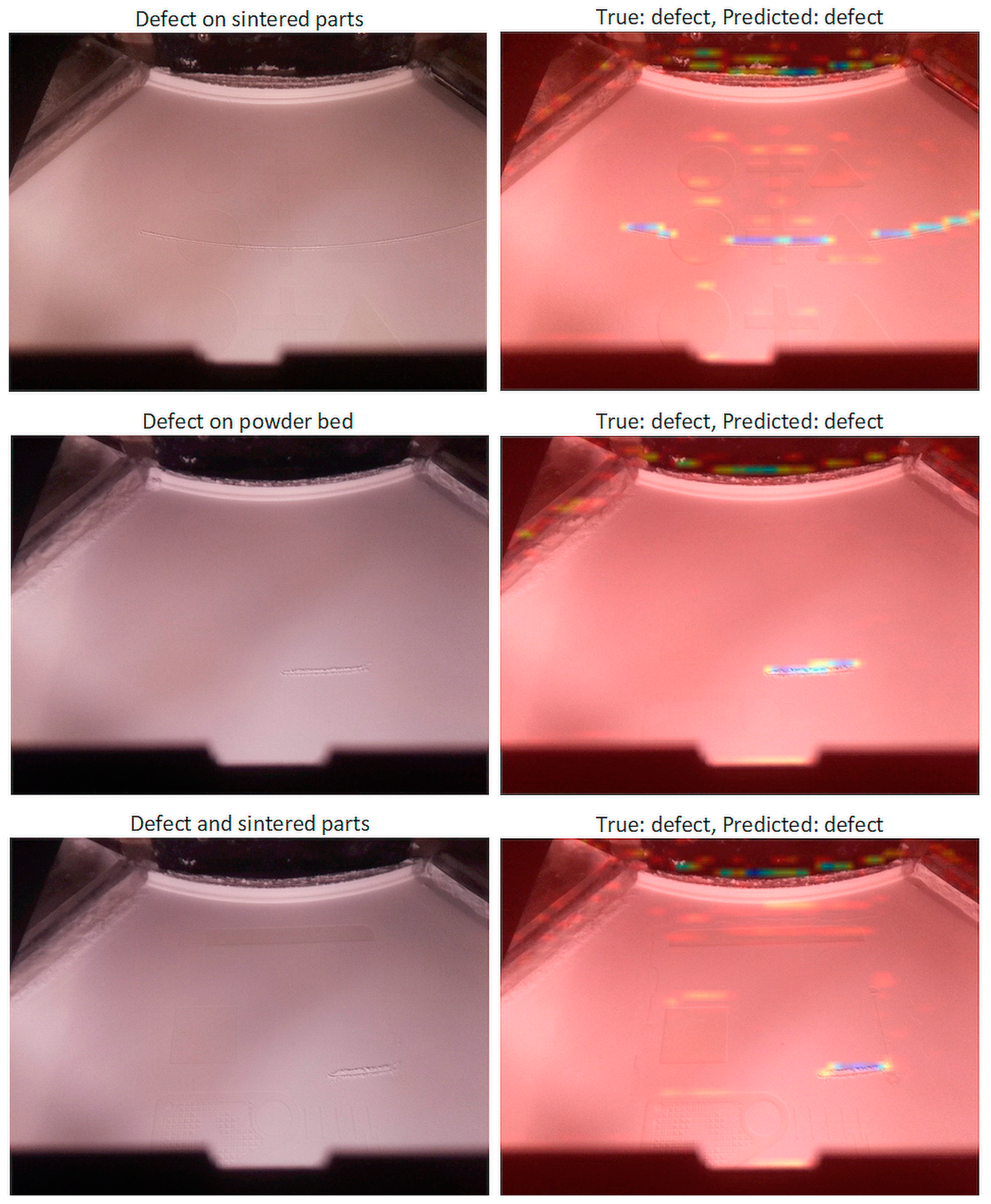

5. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| Laser type | CO₂ |
| Laser power | 30 W |
| Laser wavelength | 10.6 µm |
| Scanning speed | 5 m/s |
| Exposed area (xy) | 200 mm × 250 mm |
| Maximum part height (z) | 300 mm |
| Layer thickness | 50 µm–200 µm |
| Defined layer thickness | 100 µm |
| Powder type | PA2200 nylon |

| Dataset | OK Frames | Defective Frames | Resolution | Defect Info |
|---|---|---|---|---|
| 1 | 16,506 | 4,827 | 480 × 640 | Overheating |
| 2 | 3,007 | n.a. | 480 × 640 | Various defects |
| 3 | 22,449 | 2,356 | 480 × 640 | Various defects |
| 4 | 24,823 | 388 | 960 × 1280 | Various defects |
| 5 | 40,609 | 1,044 | 960 × 1280 | Various defects |
| 6 | 79,563 | 3,400 | mixed | Consolidated |
| 7 | 3,000 | 3,000 | mixed | Evaluation |
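The consolidated dataset (row 6) is strongly imbalanced, with roughly 23 OK frames per defective frame, which is the situation a weighted cross-entropy loss is meant to address. The sketch below is a minimal pure-Python illustration, not the authors' implementation: the inverse-frequency weighting rule, the function names, and the toy batch of probabilities are all assumptions made here for illustration.

```python
import math

# Frame counts for the consolidated dataset (row 6 of the dataset table).
OK_FRAMES = 79_563
DEFECTIVE_FRAMES = 3_400


def inverse_frequency_weights(counts):
    """Return one weight per class, w_c = N / (K * n_c) (assumed weighting rule)."""
    total = sum(counts)
    num_classes = len(counts)
    return [total / (num_classes * n) for n in counts]


def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean of -log p(true class), normalised by the summed weights."""
    weighted_losses = [weights[y] * -math.log(p[y]) for p, y in zip(probs, labels)]
    weight_sum = sum(weights[y] for y in labels)
    return sum(weighted_losses) / weight_sum


# Class 0 = OK, class 1 = defective.
weights = inverse_frequency_weights([OK_FRAMES, DEFECTIVE_FRAMES])

# Hypothetical toy batch: three OK frames and one poorly classified defective frame.
probs = [[0.9, 0.1], [0.8, 0.2], [0.95, 0.05], [0.6, 0.4]]
labels = [0, 0, 0, 1]
loss = weighted_cross_entropy(probs, labels, weights)
```

With these weights, the single misjudged defective frame dominates the batch loss, whereas an unweighted mean would be dominated by the three easy OK frames.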

| Model Architecture | Loss | Accuracy | Precision | F1 Score | Epochs |
|---|---|---|---|---|---|
| Basic | 0.0002 | 0.7233 | 0.7233 | 0.7233 | 15 |
| + Weighted cross-entropy | <0.0001 | 0.9838 | 0.9846 | 0.9835 | 10 |
| + Learning rate scheduling | <0.0001 | 0.9852 | 0.9886 | 0.9847 | 15 |
| + Sobel layer | <0.0001 | 0.9912 | 0.9910 | 0.9911 | 10 |
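The last row of the results table adds a Sobel layer. This excerpt does not describe how that layer is wired into the network, so the following is only a sketch of the underlying operation: filtering a frame with the standard fixed 3 × 3 Sobel kernels and taking the gradient magnitude. The function names and the toy 5 × 5 frame are hypothetical.

```python
import math

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def correlate3x3(image, kernel):
    """Slide a 3x3 kernel over the image without padding ('valid' mode)."""
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(rows - 2):
        out_row = []
        for c in range(cols - 2):
            acc = sum(
                kernel[i][j] * image[r + i][c + j]
                for i in range(3)
                for j in range(3)
            )
            out_row.append(acc)
        out.append(out_row)
    return out


def sobel_magnitude(image):
    """Per-pixel gradient magnitude sqrt(gx^2 + gy^2)."""
    gx = correlate3x3(image, SOBEL_X)
    gy = correlate3x3(image, SOBEL_Y)
    return [[math.hypot(x, y) for x, y in zip(rx, ry)] for rx, ry in zip(gx, gy)]


# Hypothetical toy frame: dark left side, bright right side (one vertical edge).
frame = [[0, 0, 0, 1, 1] for _ in range(5)]
edges = sobel_magnitude(frame)
```

The response is zero in the uniform region and non-zero only where the window overlaps the intensity step, which is why such a filter can emphasise sharp discontinuities in the powder bed before the learned convolutional layers see the frame.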

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Klamert, V.; Achsel, T.; Toker, E.; Bublin, M.; Otto, A. Real-Time Optical Detection of Artificial Coating Defects in PBF-LB/P Using a Low-Cost Camera Solution and Convolutional Neural Networks. Appl. Sci. 2023, 13, 11273. https://doi.org/10.3390/app132011273
