UAV Image Small Object Detection Based on RSAD Algorithm

Abstract

1. Introduction

2. Related Work

2.1. Object Detection in UAV Images

2.2. SSD Object Detection Framework

2.3. Datasets and Evaluation Metrics

2.4. Motivation and Contribution

- (1) By comparing backbone networks designed for practical applications and taking the resource constraints of UAVs into account, we chose ResNet-50 as the backbone network of RSAD and retained multiscale detection.

- (2) We designed a feature fusion module based on the self-attention mechanism. Unlike traditional attention mechanisms, it focuses on the weight relationships within the image. It reshapes and concatenates the features that the backbone network extracts from the image, which establishes correlations among the pieces of information inside the image and helps the network better understand UAV images.

- (3) We introduced the Focal Loss function to strengthen RSAD's focus on small targets. Its parameters are tuned through short experiments with a small number of iterations so that RSAD attends to small objects as effectively as possible.
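Contribution (2) describes reshaping backbone features and relating positions inside the image to one another. The dependency-free sketch below illustrates one common way to do this: scaled dot-product self-attention over spatial positions, followed by channel concatenation of the attended features with the input. The projection matrices `wq`, `wk`, `wv`, the 1/sqrt(d) scaling, and the concatenation step are assumptions for illustration, not the published RSAD module; all helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a (n x k) @ b (k x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Numerically stable softmax applied to each row independently."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out


def flatten_spatial(fmap: List[Matrix]) -> Matrix:
    """Reshape a C x H x W feature map into an (H*W) x C matrix, one row per pixel."""
    c, h, w = len(fmap), len(fmap[0]), len(fmap[0][0])
    return [[fmap[k][i][j] for k in range(c)] for i in range(h) for j in range(w)]


def unflatten_spatial(x: Matrix, h: int, w: int) -> List[Matrix]:
    """Inverse of flatten_spatial: (H*W) x C back to C x H x W."""
    c = len(x[0])
    return [[[x[i * w + j][k] for j in range(w)] for i in range(h)] for k in range(c)]


def self_attention_fuse(fmap: List[Matrix], wq: Matrix, wk: Matrix, wv: Matrix):
    """Hypothetical fusion step: every pixel attends to every other pixel, then the
    attended features are concatenated with the input along the channel axis."""
    h, w = len(fmap[0]), len(fmap[0][0])
    x = flatten_spatial(fmap)                        # N x C, N = H*W
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d = len(q[0])
    scores = matmul(q, transpose(k))                 # N x N pixel-to-pixel similarity
    scores = [[s / math.sqrt(d) for s in row] for row in scores]
    attn = softmax_rows(scores)                      # each row sums to 1
    attended = matmul(attn, v)                       # N x Cv
    fused = [xr + ar for xr, ar in zip(x, attended)]  # channel concatenation
    return unflatten_spatial(fused, h, w), attn


fmap = [[[1.0, 0.0], [0.0, 1.0]],   # channel 0 of a 2 x 2 map
        [[0.0, 1.0], [1.0, 0.0]]]   # channel 1
eye = [[1.0, 0.0], [0.0, 1.0]]      # identity projections keep the demo readable
fused, attn = self_attention_fuse(fmap, eye, eye, eye)
print(len(fused), "channels")       # input channels + attended channels
```

In the demo, pixels with identical feature vectors attend most strongly to each other, which is the "correlation between the information inside the image" that such a module is meant to capture.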
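Contribution (3) relies on Focal Loss, which down-weights well-classified examples so that training concentrates on hard ones such as small, easily missed objects. A minimal binary sketch of the standard formulation FL(p_t) = -α_t (1 - p_t)^γ log(p_t) follows. The defaults α = 0.25 and γ = 2 come from the original Focal Loss paper (Lin et al., ICCV 2017), not from RSAD, whose tuned values are not given here.

```python
import math


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0,
               eps: float = 1e-12) -> float:
    """Binary focal loss FL(p_t) = -alpha_t * (1 - p_t)**gamma * log(p_t).

    p is the predicted probability of the positive class; y in {0, 1} is the label.
    """
    p = min(max(p, eps), 1.0 - eps)            # keep log() finite
    p_t = p if y == 1 else 1.0 - p             # probability given to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def cross_entropy(p: float, y: int, eps: float = 1e-12) -> float:
    """Plain binary cross-entropy, for comparison."""
    p = min(max(p, eps), 1.0 - eps)
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


# An easy positive (p = 0.9) keeps about 0.25 * 0.1**2 = 0.0025 of its cross-entropy,
# while a hard positive (p = 0.1) keeps about 0.25 * 0.9**2 = 0.2025 of it.
easy_ratio = focal_loss(0.9, 1) / cross_entropy(0.9, 1)
hard_ratio = focal_loss(0.1, 1) / cross_entropy(0.1, 1)
print(f"easy: {easy_ratio:.4f}, hard: {hard_ratio:.4f}")
```

Raising γ increases this suppression of easy examples; setting γ = 0 reduces the loss to α-weighted cross-entropy, which is one reason γ and α are natural targets for the short tuning runs described in contribution (3).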

3. Proposed Method

3.1. Establishment of the Backbone Network

3.2. Self-Attention Mechanism Based Feature Fusion

3.3. Loss Function

4. Results

4.1. Experimental Setup

4.2. Ablation Experiments

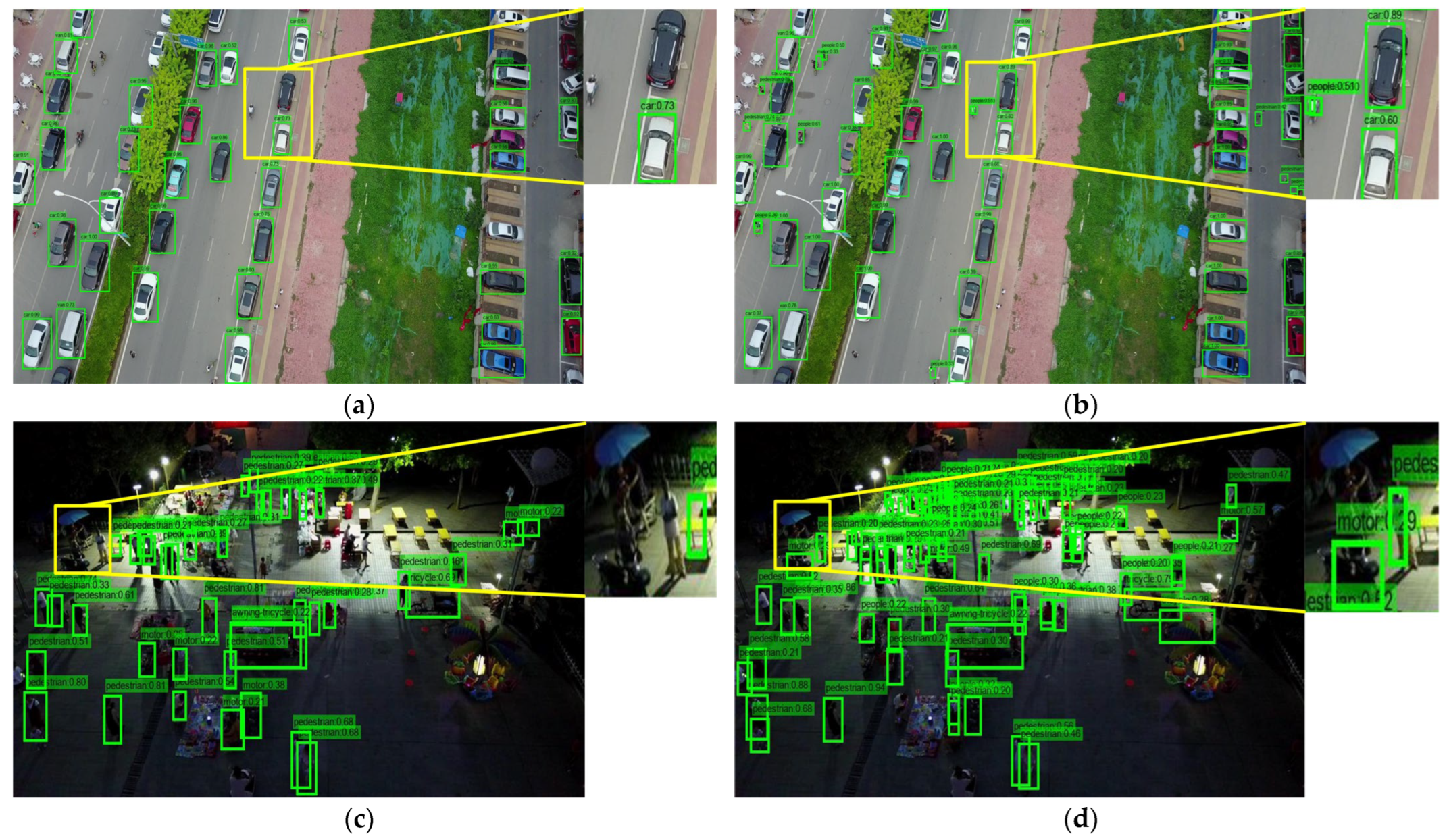

4.3. Comparison Experiment

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Backbone | A | B | C | D | E | F | G | H | I | J | K | L |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Parameters/M | 61.1 | 65 | 6.8 | 4.5 | 6.6 | 11.7 | 138.4 | 143.7 | 21.8 | 25.6 | 44.6 | 60.2 |
| FLOPs/G | 1.2 | 0.8 | 1.15 | 0.6 | 2.6 | 3.4 | 27.2 | 34.6 | 6.9 | 7.71 | 14.9 | 21.5 |

| VGG-16 | ResNet-50 | SAFM | Focal Loss | mAP/% | Parameters/B | FPS (3060 Ti) |
|---|---|---|---|---|---|---|
| ✔ | | | | 19.9 | 22.9 | 55 |
| | ✔ | | | 21.2 | 29.8 | 59 |
| | ✔ | ✔ | | 28.6 | 37.4 | 52 |
| | ✔ | ✔ | ✔ | 30.5 | 37.4 | 52 |
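Reading the ablation rows as cumulative steps (an interpretation of the table, not a statement from the authors), the short computation below derives the mAP gain each change contributes relative to the previous row and to the VGG-16 row. The values are copied from the table; the helper name is hypothetical.

```python
# (configuration, mAP/%, FPS on a 3060 Ti), copied from the ablation table.
rows = [
    ("VGG-16", 19.9, 55),
    ("ResNet-50", 21.2, 59),
    ("ResNet-50 + SAFM", 28.6, 52),
    ("ResNet-50 + SAFM + Focal Loss", 30.5, 52),
]


def incremental_gains(rows):
    """Return (configuration, gain over previous row, gain over first row) per step."""
    base = rows[0][1]
    out = []
    for (name, m, _), (_, prev, _) in zip(rows[1:], rows[:-1]):
        out.append((name, round(m - prev, 1), round(m - base, 1)))
    return out


for name, step, total in incremental_gains(rows):
    print(f"{name}: +{step} mAP vs previous row, +{total} vs VGG-16")
```

Under this reading, the self-attention fusion step accounts for most of the total gain, while Focal Loss adds accuracy at no cost in parameters or FPS.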

Columns P1 to M give per-class AP/%.

| Model | Backbone | P1 | P2 | B1 | C | V | T1 | T2 | A | B2 | M | mAP/% | FPS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | ResNet-50 | 21.4 | 15.6 | 6.7 | 51.7 | 29.5 | 19.0 | 13.1 | 7.7 | 31.4 | 20.7 | 21.7 | 9.3 |
| Cascade R-CNN | ResNet-50 | 22.2 | 14.8 | 7.6 | 54.6 | 31.5 | 21.6 | 14.8 | 8.6 | 34.9 | 21.4 | 23.2 | 12.1 |
| RetinaNet | ResNet-50 | 13.0 | 7.9 | 1.4 | 45.5 | 19.9 | 11.5 | 6.3 | 4.2 | 17.8 | 11.8 | 13.9 | 16.7 |
| CenterNet | Hourglass-104 | 14.8 | 13.2 | 5.6 | 50.2 | 24.0 | 21.3 | 20.1 | 17.4 | 37.9 | 23.7 | 22.8 | 14.0 |
| YOLOv5s | CSPDarknet53 | 19.7 | 13.7 | 3.84 | 62.0 | 27.2 | 22.4 | 15.7 | 6.9 | 40.3 | 19.8 | 23.2 | 57.2 |
| YOLOv8n | CSPNet | 13.6 | 11.6 | 1.8 | 55.9 | 21.0 | 18.3 | 10.6 | 5.63 | 30.1 | 15.4 | 18.4 | 61.5 |
| SSD300 | VGG-16 | 13.6 | 11.3 | 9.1 | 42.6 | 25.8 | 26.1 | 13.2 | 6.8 | 37.9 | 12.6 | 19.9 | 55.0 |
| RSAD(ours) | ResNet-50 | 15.1 | 14.0 | 10.5 | 51.8 | 43.0 | 46.1 | 25.5 | 19.1 | 59.5 | 19.9 | 30.5 | 52.0 |
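The mAP column is consistent with the unweighted mean of the ten per-class AP values. The check below, which assumes exactly that definition, recomputes the mean for every model from the numbers in the comparison table and compares it with the reported mAP to within rounding (0.05).

```python
from statistics import mean

# Per-class AP/% (columns P1 to M) and reported mAP/%, copied from the comparison table.
RESULTS = {
    "Faster R-CNN": ([21.4, 15.6, 6.7, 51.7, 29.5, 19.0, 13.1, 7.7, 31.4, 20.7], 21.7),
    "Cascade R-CNN": ([22.2, 14.8, 7.6, 54.6, 31.5, 21.6, 14.8, 8.6, 34.9, 21.4], 23.2),
    "RetinaNet": ([13.0, 7.9, 1.4, 45.5, 19.9, 11.5, 6.3, 4.2, 17.8, 11.8], 13.9),
    "CenterNet": ([14.8, 13.2, 5.6, 50.2, 24.0, 21.3, 20.1, 17.4, 37.9, 23.7], 22.8),
    "YOLOv5s": ([19.7, 13.7, 3.84, 62.0, 27.2, 22.4, 15.7, 6.9, 40.3, 19.8], 23.2),
    "YOLOv8n": ([13.6, 11.6, 1.8, 55.9, 21.0, 18.3, 10.6, 5.63, 30.1, 15.4], 18.4),
    "SSD300": ([13.6, 11.3, 9.1, 42.6, 25.8, 26.1, 13.2, 6.8, 37.9, 12.6], 19.9),
    "RSAD(ours)": ([15.1, 14.0, 10.5, 51.8, 43.0, 46.1, 25.5, 19.1, 59.5, 19.9], 30.5),
}


def check_map(results, tol=0.05 + 1e-6):
    """Return {model: (mean of per-class AP, reported mAP, consistent within tol?)}."""
    report = {}
    for model, (aps, reported) in results.items():
        m = mean(aps)
        report[model] = (m, reported, abs(m - reported) <= tol)
    return report


for model, (m, reported, ok) in check_map(RESULTS).items():
    print(f"{model:14s} mean AP = {m:5.2f}  reported mAP = {reported:4.1f}  consistent: {ok}")
```

Because mAP is an unweighted class mean, RSAD's large gains on the V, T1, T2, A and B2 columns raise its mAP above every baseline even though it trails some of them on P1, P2 and C.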

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Song, J.; Yu, Z.; Qi, G.; Su, Q.; Xie, J.; Liu, W. UAV Image Small Object Detection Based on RSAD Algorithm. Appl. Sci. 2023, 13, 11524. https://doi.org/10.3390/app132011524
