A System for Interviewing and Collecting Statements Based on Intent Classification and Named Entity Recognition Using Augmentation

Abstract

:1. Introduction

- The model is proposed for intent classification and NER for objective interviews using augmentation due to a limited statement dataset.

- The system produces objective questioning in accordance with both the proposed model and the NICHD protocol, which is able to elicit quality statements based on proven interview guidelines.

- The approach and results of this study show that it can be used for objective intent classification and NER even in special environments such as child sexual abuse cases, indicating that it can be used in various domains through transfer learning.

2. Materials and Methods

2.1. NICHD Protocol

2.2. Data

2.3. Data Augmentation

| Algorithm 1 Text augmentation with these parameters: SR = 0.7, RI = 0.7, RS = 0.3, RD = 0.1 = Korean dictionary of similar words, = FastText embedding model |

| Input: The trained dataset Output: A set of augmented sentences,

|

2.4. Word Embedding

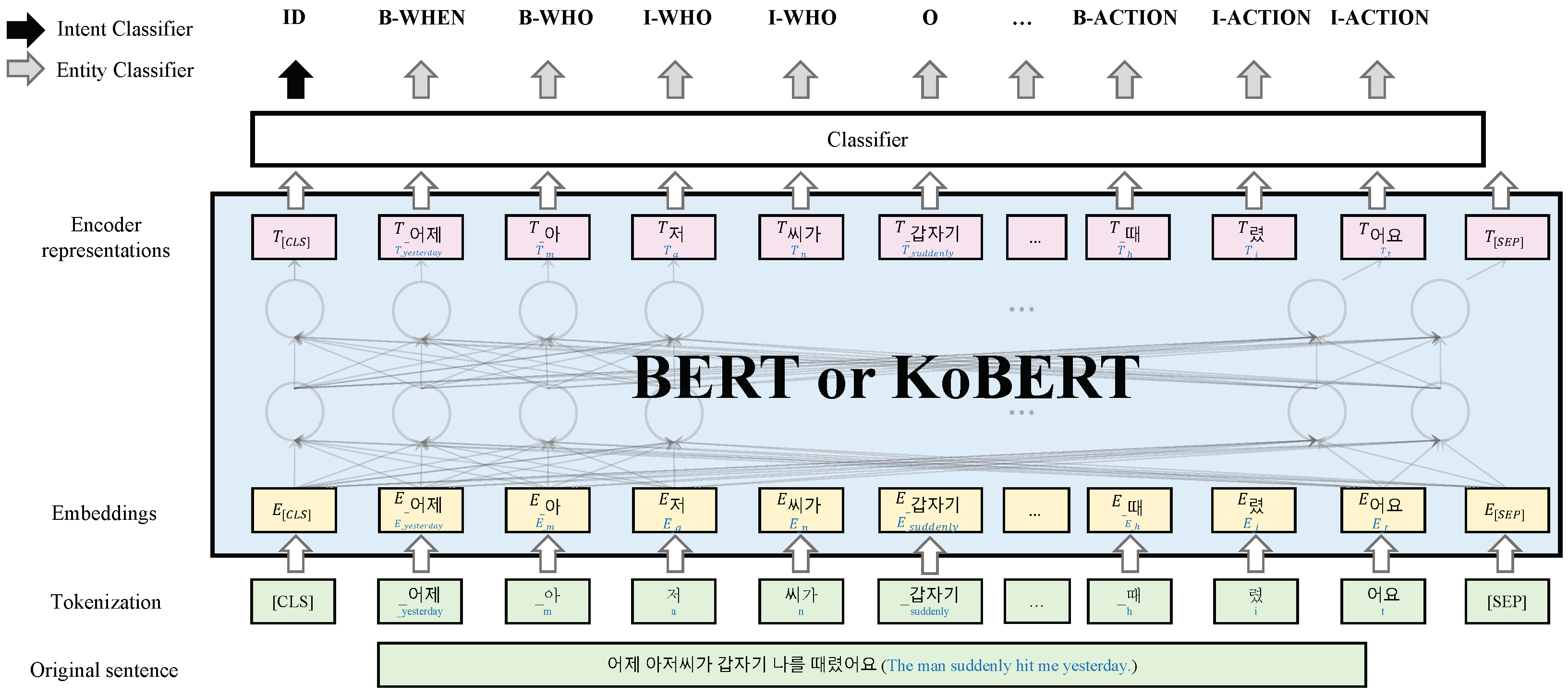

2.5. Language Model

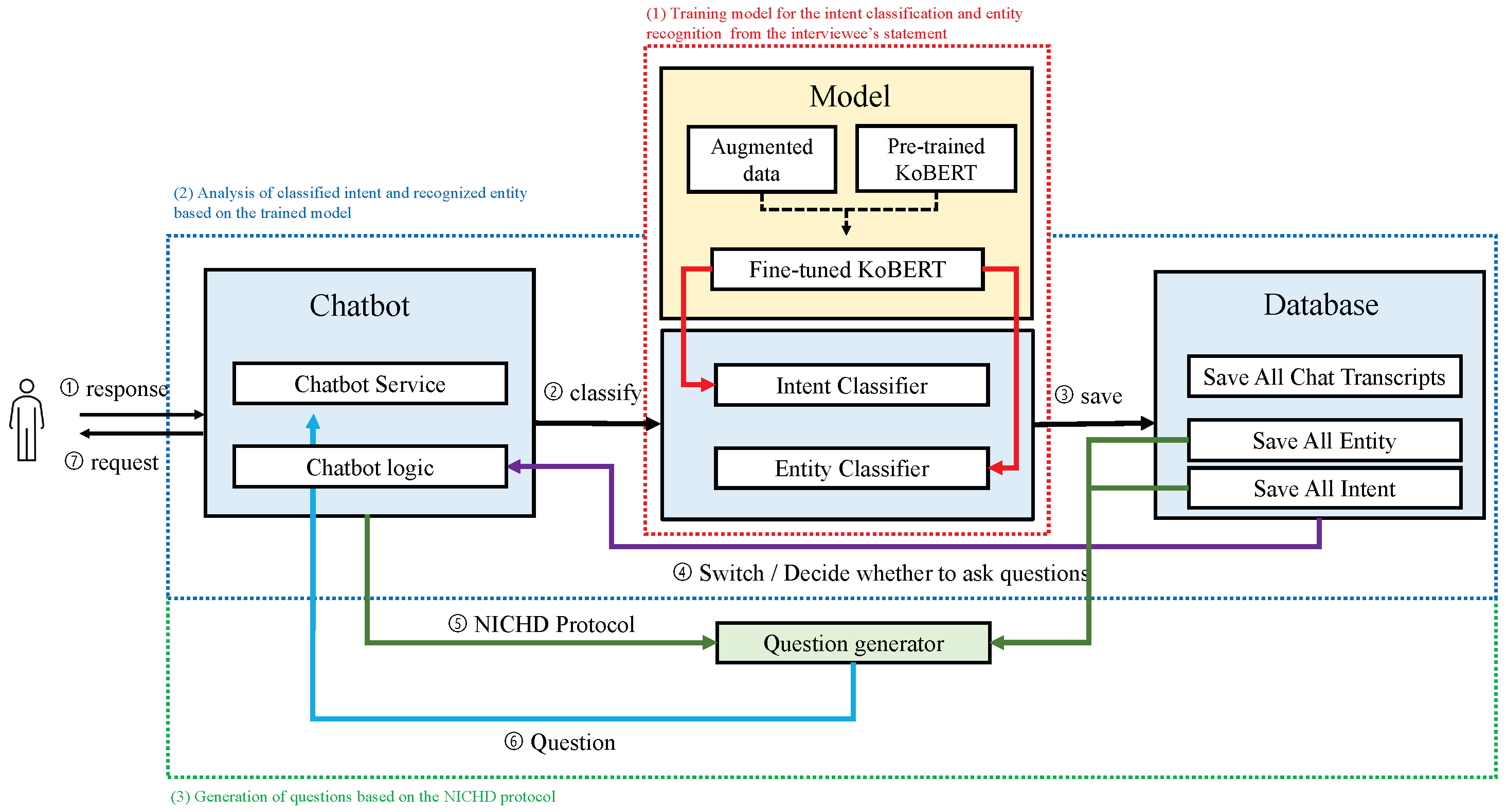

2.6. Configuring and Implementing the System

2.6.1. Training Model for Intent Classification and Entity Recognition from the Interviewee’s Statements

2.6.2. Analysis of the Classified Intent and Recognized Entity Based on the Trained Model

2.6.3. Generating Questions Based on the NICHD Protocol

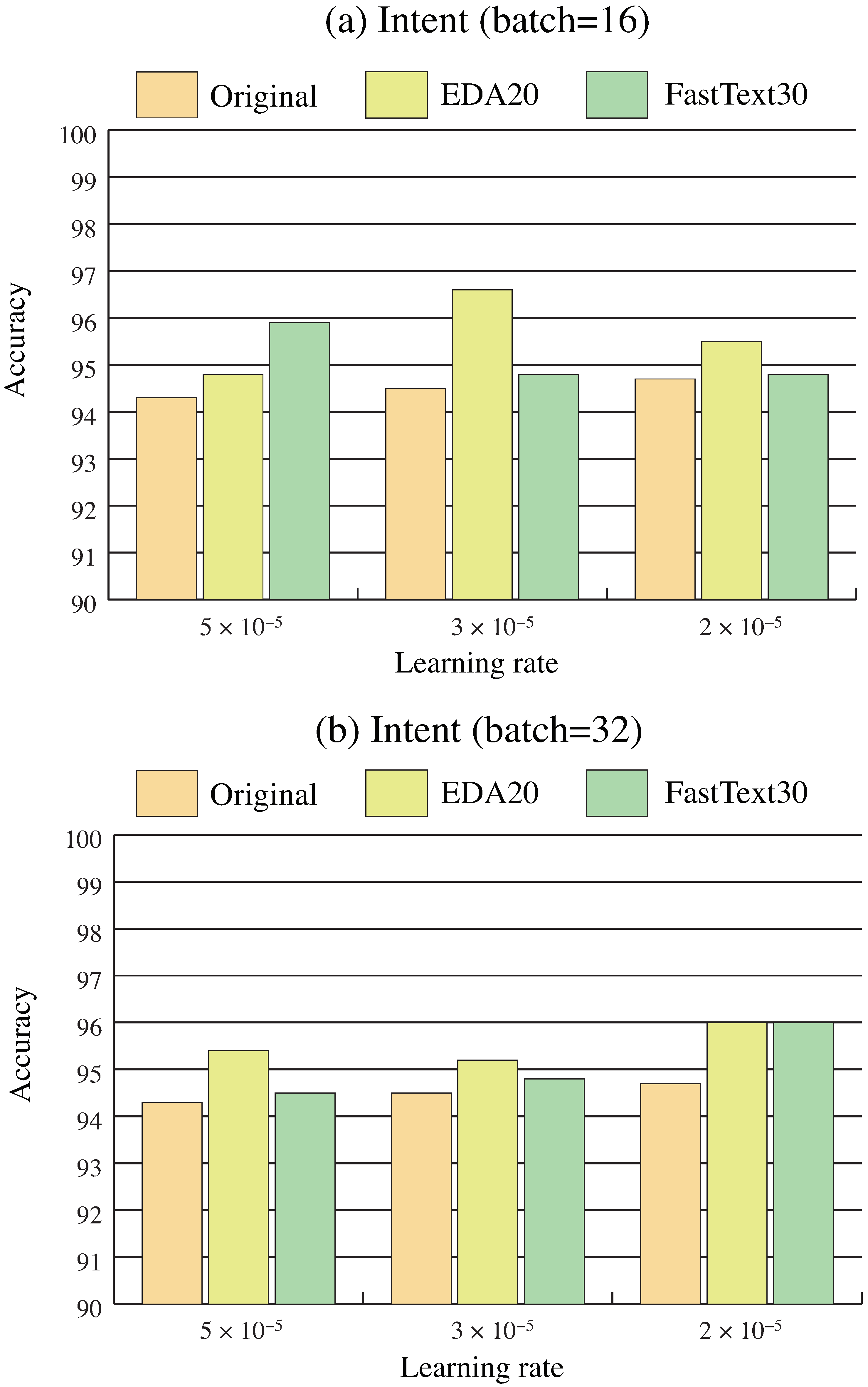

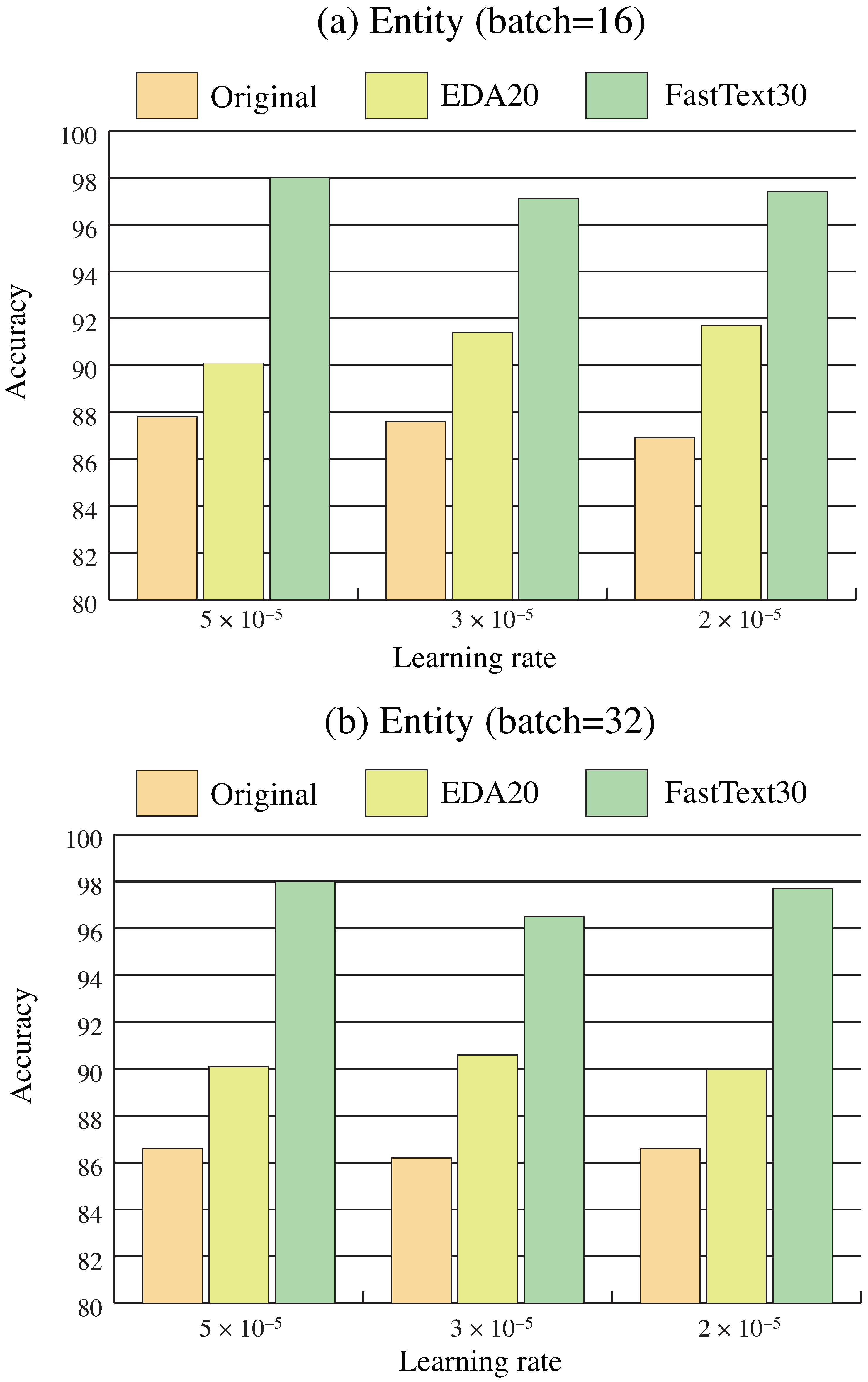

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| NER | Named Entity Recognition |

| NICHD | National Institute of Child Health and Development |

| BERT | Bidirectional Encoder Representations from Transformers |

| KoBERT | Korean BERT pre-trained cased |

| RI | Random Insertion |

| RS | Random Swap |

| RD | Random Deletion |

| SR | Synonym Replacement |

| EDA | Easy Data Augmentation |

| SPD | Subjective Psychological Description |

| ID | Interaction Description |

| ALM | Acknowledging Lack of Memory |

| BIO | Beginning, Inside, Outside |

| CLS Token | Classification Token |

References

- Orbach, Y.; Hershkowitz, I.; Lamb, M.E.; Sternberg, K.J.; Esplin, P.W.; Horowitz, D. Assessing the value of structured protocols for forensic interviews of alleged child abuse victims. Child Abus. Negl. 2000, 24, 733–752. [Google Scholar] [CrossRef] [PubMed]

- Lamb, M.E.; Orbach, Y.; Sternberg, K.J.; Aldridge, J.; Pearson, S.; Stewart, H.L.; Esplin, P.W.; Bowler, L. Use of a Structured Investigative Protocol Enhances the Quality of Investigative Interviews with Alleged Victims of Child Sexual Abuse in Britain. Appl. Cogn. Psychol. Off. J. Soc. Appl. Res. Mem. Cogn. 2009, 23, 449–467. [Google Scholar] [CrossRef]

- Sternberg, K.J.; Lamb, M.E.; Orbach, Y.; Esplin, P.W.; Mitchell, S. Use of a structured investigative protocol enhances young children’s responses to free-recall prompts in the course of forensic interviews. J. Appl. Psychol. 2001, 86, 997. [Google Scholar] [CrossRef] [PubMed]

- Lamb, M.; Brown, D.; Hershkowitz, I.; Orbach, Y.; Esplin, P. Tell Me What Happened: Questioning Children about Abuse; John Wiley & Sons: Hoboken, NJ, USA, 2018. [Google Scholar]

- Ettinger, T.R. Children’s needs during disclosures of abuse. SN Soc. Sci. 2022, 2, 101. [Google Scholar] [CrossRef] [PubMed]

- Fernandes, D.; Gomes, J.P.; Pedro, B. Albuquerque and Marlene Matos. Forensic Interview Techniques in Child Sexual Abuse Cases: A Scoping Review. Trauma Violence Abus. 2023. [Google Scholar] [CrossRef]

- Tidmarsh, P.; Sharman, S.; Hamilton, G. The Effect of Specialist Training on Sexual Assault Investigators’ Questioning and Use of Relationship Evidence. J. Police Crim. Psychol. 2023, 38, 318–327. [Google Scholar] [CrossRef]

- Minhas, R.; Elphick, C.; Shaw, J. Protecting victim and witness statement: Examining the effectiveness of a chatbot that uses artificial intelligence and a cognitive interview. AI Soc. 2022, 37, 265–281. [Google Scholar] [CrossRef]

- Weizenbaum, J. ELIZA—A computer program for the study of natural language communication between man and machine. Commun. ACM 1966, 9, 36–45. [Google Scholar] [CrossRef]

- Smutny, P.; Schreiberova, P. Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Comput. Educ. 2020, 151, 103862. [Google Scholar] [CrossRef]

- Blanc, C.; Bailly, A.; Francis, É.; Guillotin, T.; Jamal, F.; Wakim, B.; Roy, P. FlauBERT vs. CamemBERT: Understanding patient’s answers by a French medical chatbot. Artif. Intell. Med. 2022, 127, 102264. [Google Scholar] [CrossRef]

- Nadarzynski, T.; Miles, O.; Cowie, A.; Ridge, D. Acceptability of artificial intelligence (AI)-led chatbot services in healthcare: A mixed-methods study. Digit. Health 2019, 5, 2055207619871808. [Google Scholar] [CrossRef] [PubMed]

- Rapp, A.; Curti, L.; Boldi, A. The human side of human-chatbot interaction: A systematic literature review of ten years of research on text-based chatbots. Int. J. Hum.-Comput. Stud. 2021, 151, 102630. [Google Scholar] [CrossRef]

- Li, C.H.; Yeh, S.F.; Chang, T.J.; Tsai, M.H.; Chen, K.; Chang, Y.J. A Conversation Analysis of Non-Progress and Coping Strategies with a Banking Task-Oriented Chatbot. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI’20), Honolulu, HI, USA, 25–30 April 2020; pp. 1–12. [Google Scholar] [CrossRef]

- Sidaoui, K.; Jaakkola, M.; Burton, J. AI feel you: Customer experience assessment via chatbot interviews. J. Serv. Manag. 2020, 31, 745–766. [Google Scholar] [CrossRef]

- Ho, A.; Hancock, J.; Miner, A.S. Psychological, Relational, and Emotional Effects of Self-Disclosure after Conversations with a Chatbot. J. Commun. 2018, 68, 712–733. [Google Scholar] [CrossRef] [PubMed]

- Tsai, W.H.S.; Lun, D.; Carcioppolo, N.; Chuan, C.H. Human versus chatbot: Understanding the role of emotion in health marketing communication for vaccines. Psychol. Mark. 2021, 38, 2377–2392. [Google Scholar] [CrossRef]

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of Hallucination in Natural Language Generation. ACM Comput. Surv. 2023, 55, 1–38. [Google Scholar] [CrossRef]

- Hershkowitz, I.; Orbach, Y.; Lamb, M.E.; Sternberg, K.J.; Horowitz, D. Dynamics of Forensic Interviews with Suspected Abuse Victims who do not Disclose Abuse. Child Abus. Negl. 2006, 30, 753–769. [Google Scholar] [CrossRef]

- Yi, M.; Jo, E.; Lamb, M.E. Effects of the NICHD protocol training on child investigative interview quality in Korean police officers. J. Police Crim. Psychol. 2016, 31, 155–163. [Google Scholar] [CrossRef]

- Sternberg, K.J.; Lamb, M.E.; Hershkowitz, I.; Yudilevitch, L.; Orbach, Y.; Esplin, P.W.; Hovav, M. Effects of introductory style on children’s abilities to describe experiences of sexual abuse. Child Abus. Negl. 1997, 21, 1133–1146. [Google Scholar] [CrossRef]

- Yi, M.; Jo, E.; Lamb, M.E. Assessing the Effectiveness of NICHD Protocol Training Focused on Episodic Memory Training and Rapport-Building: A Study of Korean Police Officers. J. Police Crim. Psychol. 2017, 32, 279–288. [Google Scholar] [CrossRef]

- Saywitz, K.J.; Camparo, L.B. Contemporary child forensic interviewing: Evolving consensus and innovation over 25 years. In Children as Victims, Witnesses, and Offenders: Psychological Science and the Law; Guilford Press: New York, NY, USA, 2009; pp. 102–127. [Google Scholar]

- Malloy, L.C.; Brubacher, S.P.; Lamb, M.E. “Because She’s One Who Listens” Children Discuss Disclosure Recipients in Forensic Interviews. Child Maltreat. 2013, 18, 245–251. [Google Scholar] [CrossRef] [PubMed]

- Lamb, M.E.; Sternberg, K.J.; Orbach, Y.; Hershkowitz, I.; Horowitz, D.; Esplin, P.W. The Effects of Intensive Training and Ongoing Supervision on the Quality of Investigative Interviews with Alleged Sex Abuse Victims. Appl. Dev. Sci. 2002, 6, 114–125. [Google Scholar] [CrossRef]

- Lamb, M.E.; Orbach, Y.; Hershkowitz, I.; Esplin, P.W.; Horowitz, D. A structured forensic interview protocol improves the quality and informativeness of investigative interviews with children: A review of research using the NICHD Investigative Interview Protocol. Child Abus. Negl. 2007, 11–12, 1201–1231. [Google Scholar] [CrossRef] [PubMed]

- Steller, M.; Köhnken, G. Statement analysis: Credibility assessment of children’s testimonies in sexual abuse cases. In Psychological Methods in Criminal Investigation and Evidence; Springer: Berlin/Heidelberg, Germany, 1989; pp. 217–245. [Google Scholar]

- Wei, J.; Zou, K. EDA: Easy Data Augmentation Techniques for Boosting Performance on Text Classification Tasks. arXiv 2019, arXiv:1901.11196. [Google Scholar]

- Dhiman, A.; Toshniwal, D. An Enhanced Text Classification to Explore Health based Indian Government Policy Tweets. arXiv 2020, arXiv:2007.06511. [Google Scholar]

- Dai, X.; Adel, H. An Analysis of Simple Data Augmentation for Named Entity Recognition. arXiv 2020, arXiv:2010.11683. [Google Scholar]

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146. [Google Scholar] [CrossRef]

- Available online: https://bitbucket.org/eunjeon/mecab-ko-dic/ (accessed on 20 July 2018).

- AI-Hub. Available online: https://aihub.or.kr/aihubdata/data/view.do?currMenu=115&topMenu=100&aihubDataSe=realm&dataSetSn=117 (accessed on 1 October 2023).

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781. [Google Scholar]

- Jo, H.; Goo Lee, S. Korean Word Embedding Using FastText; The Korean Institute of Information Scientists and Engineers: Busan, Republic of Korea, 2017; pp. 705–707. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008. [Google Scholar]

- Cui, Y.; Che, W.; Liu, T.; Qin, B.; Wang, S.; Hu, G. Revisiting Pre-Trained Models for Chinese Natural Language Processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: Findings, Online, 16–20 November 2020; pp. 657–668. [Google Scholar]

- Kikuta, Y. BERT Pretrained Model Trained on Japanese Wikipedia Articles. 2019. Available online: https://github.com/yoheikikuta/bert-japanese (accessed on 20 October 2019).

- Amer, E.; Hazem, A.; Farouk, O.; Louca, A.; Mohamed, Y.; Ashraf, M. A Proposed Chatbot Framework for COVID-19. In Proceedings of the 2021 International Mobile, Intelligent, and Ubiquitous Computing Conference (MIUCC), Cairo, Egypt, 26–27 May 2021; pp. 263–268. [Google Scholar]

- Lee, J.H.; Wu, E.H.K.; Ou, Y.Y.; Lee, Y.C.; Lee, C.H.; Chung, C.R. Anti-Drugs Chatbot: Chinese BERT-Based Cognitive Intent Analysis. IEEE Trans. Comput. Soc. Syst. 2023, 1–8. [Google Scholar] [CrossRef]

- Fernández-Martínez, F.; Luna-Jiménez, C.; Kleinlein, R.; Griol, D.; Callejas, Z.; Montero, J.M. Fine-Tuning BERT Models for Intent Recognition Using a Frequency Cut-Off Strategy for Domain-Specific Vocabulary Extension. Appl. Sci. 2022, 12, 1610. [Google Scholar] [CrossRef]

- SKT-Brain. Korean BERT Pre-Trained Cased (KoBERT). 2021. Available online: https://github.com/SKTBrain/KoBERT (accessed on 20 August 2022).

- How multilingual is Multilingual BERT? arXiv 2019, arXiv:1906.01502.

- Schuster, M.; Nakajima, K. Japanese and Korean Voice Search. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 5149–5152. [Google Scholar]

- Kudo, T.; Richardson, J. Sentencepiece: A simple and language independent subword tokenizer and detokenizer for neural text processing. arXiv 2018, arXiv:1808.06226. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

- Available online: https://pypi.org/project/kochat/ (accessed on 1 October 2023).

| Type | Description |

|---|---|

| Invitation | Open-ended questions to help the child recall information about the incident |

| “Could you tell me everything that happened that day?” | |

| Facilitator | Non-suggestive prompt to elicit a continuous response |

| “I see.”, “You must have had a hard time.” | |

| Cued-invitation | Refocusing on the information already mentioned by the child to prompt free recall of information |

| “You mentioned ‘that guy.”, “Could you tell more about this person?” | |

| Directive | Refocusing on information already mentioned to extract more detailed information |

| “When did this happen?” | |

| Option-posing | Prompting the child to focus on aspects or details not mentioned. Confirmation, negation, or selection of interviewer’s words |

| “Did it hurt?”, “Did he touch you over or under your clothes?” |

| Original Sentence | Augmentation Techniques | Total Augmented Sentences | |||

|---|---|---|---|---|---|

| SR | RI | RS | RD | ||

| 1 | 5 | 1 | 2 | 1 | 10 |

| 1 | 10 | 3 | 4 | 2 | 20 |

| 1 | 15 | 5 | 6 | 3 | 30 |

| Augmentation Method | Augmented Ratio | INTENT | ENTITY | ||||||

|---|---|---|---|---|---|---|---|---|---|

| ALM | ID | SPD | WHO | WHEN | WHERE | ACTION | NO | ||

| Original Data | 557 | 1849 | 497 | 523 | 305 | 256 | 1059 | 445 | |

| EDA | 10 | 5570 | 18,490 | 4970 | 4737 | 2855 | 2403 | 9793 | 4205 |

| 20 | 11,140 | 36,980 | 9940 | 9106 | 5472 | 4553 | 18,716 | 8012 | |

| 30 | 16,710 | 55,470 | 14,910 | 13,455 | 8117 | 6739 | 27,541 | 11,860 | |

| FastText | 10 | 5570 | 18,490 | 4970 | 3307 | 2123 | 1639 | 6494 | 2849 |

| 20 | 11,140 | 36,980 | 9940 | 6189 | 4037 | 3031 | 12,215 | 5312 | |

| 30 | 16,710 | 55,470 | 14,910 | 9111 | 5828 | 4468 | 17,913 | 7895 | |

| Intent | Example |

|---|---|

| ALM | (1) 몰라요* |

| (2) _몰, 라, 요* | |

| (3) (B-NO*, I-NO*, I-NO*) | |

| (4) (I don’t know*.) | |

| ALM | (1) 뭘로 밀었는지 그게 기억이 안나네요* |

| (2) _, 뭘, 로, _밀, 었, 는, 지, _그, 게, _기억, 이, _안, 나, 네요* | |

| (3) (O O O O O O O O O O O B-NO* I-NO* I-NO*) | |

| (4) (I don’t remember* what he shoved me with.) | |

| SPD | (1) 되게 기분 나빴어요. |

| (2) _되, 게, _기분, _나, 빴, 어요 | |

| (3) (O O O O O O) | |

| (4) (I felt really bad.) | |

| SPD | (1) 그때 그래서 정말 화가 났어요. |

| (2) _그때, _그래서, _정말, _화, 가, _, 났, 어요 | |

| (3) (O O O O O O O O) | |

| (4) (So, I got really angry then.) | |

| ID | (1) 저를 학교√에서 오빠가⋄ 때렸어요∆ |

| (2) _저, 를, _학교√, 에서, _오빠, 가,⋄ _때, 렸, 어요∆ | |

| (3) (O O B-WHERE√ I-WHERE√ B-WHO⋄ I-WHO⋄ B-ACTION∆ I-ACTION∆ I-ACTION∆) | |

| (4) (My brother⋄ hit∆ me at√ school√.) | |

| ID | (1) 쌤이⋄ 어제♡ 괴롭혔어요∆ 아침에♡ |

| (2) _, 쌤, 이,⋄ _어제,♡ _괴, 롭, 혔, 어요,∆ _아침, 에♡ | |

| (3) (O B-WHO⋄ I-WHO⋄ B-WHEN ♡ B-ACTION∆ I-ACTION∆ I-ACTION∆ I-ACTION∆ B-WHEN♡ I-WHEN♡ | |

| (4) (The teacher⋄ bullied∆ me at yesterday♡ morning.♡) |

| BERT-Multilingual-Cased | KoBERT | |

|---|---|---|

| Trained languages | 104 languages | Korean |

| Number of layers | 12 | 12 |

| Hidden size | 768 | 768 |

| Maximum length | 512 | 512 |

| Attention heads | 12 | 12 |

| Vocabulary size | 30,522 | 8002 |

| Parameter size | 110 M | 92 M |

| Tokenizer method | Wordpiece [45] | Sentencepiece [46] |

| Line | Speaker | Text | Classified Intent, Recognized Entity | NHCID Adopted Question |

|---|---|---|---|---|

| 1 | Chatbot | As far as I know, something happened to you, can you tell me everything about what happened to you, from beginning to end? | Invitation | |

| 2 | Child | Yesterday, I was going home with my friend after school, and an old man came to me to teach me how to use my cell phone and touched my body. | ID, Yesterday(WHEN), old man(WHO), touched(ACTION) | |

| 3 | Chatbot | Please elaborate a little more on what you just told me. | Invitation | |

| 4 | Child | I was with a friend, and he came to me. That’s why he asked me to teach him how to capture it. So, he asked me to help him, so I helped him, but he suddenly grabbed my waist. | ID, old man(WHO), grabbed(ACTION) | |

| 5 | Chatbot | Then I’d like to ask you about the things that you told me in detail. | Invitation | |

| 6 | Chatbot | Please tell me more about that time. | Cued-invitation | |

| 7 | Child | It is probably 3 o’clock because it is time to go home after school. | 3 o’clock(WHEN) | |

| 8 | Chatbot | Please tell me more about the position you were in at the time. | Option-posing | |

| 9 | Child | At that time, I was turning my back on the apartment, and the old man came to us after sitting on a bench. | ID, At that time(WHEN) | |

| 10 | Chatbot | Please tell me everything you know about him. | Cued-invitation | |

| 11 | Child | He was wearing a hat, so I can’t remember his face in detail… He was wearing something like a coat, and he was lame. | ALM, he(WHO) | |

| 12 | Chatbot | Could you tell me what action you took in detail when that person "touched" you? | Cued-invitation | |

| 13 | Child | I felt that he was trying to touch my body with force. So, I was so embarrassed that I shook off his hand and ran away with my friend because I was scared. | SPD | |

| 14 | Chatbot | You told me that he “grabbed” it, but could you tell me in more detail about it? | Cued-invitation | |

| 15 | Child | He pushed his face closer to me asking for instructions on how to capture a cell phone and then I pretended to be natural and put my hands around my waist. | ID | |

| 16 | Chatbot | Could you tell me how you felt when it happened? | Directive |

| Model | Augmentation Method | Augmented Ratio | INTENT (F1-Score) | Accuracy | |||

|---|---|---|---|---|---|---|---|

| ALM | ID | SPD | Average | ||||

| BERT | Original | 95.5 | 94.4 | 79.2 | 89.7 | 91.9 | |

| EDA | 10 | 96.0 | 95.5 | 82.7 | 91.4 | 93.5 | |

| 20 | 94.2 | 95.5 | 82.8 | 90.8 | 93.1 | ||

| 30 | 92.0 | 95.4 | 81.2 | 89.5 | 92.4 | ||

| FastText | 10 | 95.5 | 95.9 | 83.9 | 91.8 | 93.8 | |

| 20 | 93.8 | 95.1 | 80.6 | 89.8 | 92.4 | ||

| 30 | 94.1 | 96.1 | 84.8 | 91.7 | 93.8 | ||

| KoBERT | Original | 95.2 | 96.9 | 85.1 | 92.4 | 94.5 | |

| EDA | 10 | 96.0 | 97.3 | 91.6 | 94.9 | 96.0 | |

| 20 | 97.3 | 97.7 | 91.5 | 95.5 | 96.6 | ||

| 30 | 94.5 | 96.0 | 84.4 | 91.6 | 93.6 | ||

| FastText | 10 | 96.4 | 96.9 | 87.6 | 93.6 | 95.2 | |

| 20 | 92.9 | 97.1 | 87.6 | 93.0 | 95.0 | ||

| 30 | 96.5 | 96.6 | 86.6 | 93.2 | 94.8 | ||

| Model | Augmentation Method | Augmented Ratio | ENTITY (F1-Score) | Accuracy | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| B-WHO | I-WHO | B-WHEN | I-WHEN | B-WHERE | I-WHERE | B-ACTION | I-ACTION | B-NO | I-NO | Average | ||||

| BERT | Original | 96.1 | 96.7 | 94.6 | 97.6 | 89.7 | 86.4 | 84.3 | 82.6 | 97.0 | 97.6 | 92.3 | 84.9 | |

| EDA | 10 | 98.6 | 98.4 | 97.9 | 98.8 | 95.6 | 95.2 | 90.6 | 92.8 | 92.7 | 97.6 | 95.8 | 81.7 | |

| 20 | 97.2 | 97.9 | 96.9 | 98.8 | 94.6 | 94.3 | 91.3 | 93.7 | 93.2 | 96.5 | 95.4 | 80.3 | ||

| 30 | 96.3 | 96.9 | 96.8 | 98.8 | 92.6 | 93.2 | 90.6 | 91.6 | 97.0 | 98.2 | 95.2 | 80.9 | ||

| FastText | 10 | 98.6 | 100 | 97.8 | 98.8 | 94.1 | 93.7 | 91.3 | 93.1 | 97.6 | 98.2 | 96.3 | 90.6 | |

| 20 | 98.1 | 98.9 | 97.8 | 98.8 | 95.2 | 94.9 | 90.9 | 94.0 | 98.2 | 98.8 | 96.6 | 91.0 | ||

| 30 | 99.0 | 99.5 | 97.9 | 97.6 | 94.0 | 94.9 | 92.9 | 94.3 | 98.2 | 98.8 | 96.7 | 93.3 | ||

| KoBERT | Original | 96.2 | 97.1 | 96.8 | 97.4 | 89.5 | 87.7 | 92.0 | 90.9 | 97.6 | 97.6 | 94.3 | 87.8 | |

| EDA | 10 | 98.1 | 97.2 | 99.0 | 98.7 | 95.7 | 93.2 | 96.0 | 95.9 | 97.1 | 97.1 | 96.8 | 92.2 | |

| 20 | 96.8 | 96.7 | 100 | 100 | 94.6 | 93.2 | 95.6 | 95.4 | 97.1 | 97.1 | 96.6 | 90.1 | ||

| 30 | 99.1 | 98.3 | 100 | 97.4 | 94.6 | 91.9 | 93.6 | 92.8 | 97.6 | 97.6 | 96.3 | 89.0 | ||

| FastText | 10 | 98.6 | 98.9 | 100 | 100 | 97.7 | 98.5 | 94.2 | 93.7 | 97.6 | 97.6 | 97.7 | 94.4 | |

| 20 | 99.5 | 100 | 98.9 | 100 | 96.6 | 95.5 | 95.3 | 94.8 | 98.2 | 98.2 | 97.8 | 97.7 | ||

| 30 | 99.0 | 98.9 | 98.9 | 100 | 97.7 | 97.0 | 95.0 | 94.5 | 98.2 | 98.2 | 97.8 | 98.0 | ||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shin, J.; Jo, E.; Yoon, Y.; Jung, J. A System for Interviewing and Collecting Statements Based on Intent Classification and Named Entity Recognition Using Augmentation. Appl. Sci. 2023, 13, 11545. https://doi.org/10.3390/app132011545

Shin J, Jo E, Yoon Y, Jung J. A System for Interviewing and Collecting Statements Based on Intent Classification and Named Entity Recognition Using Augmentation. Applied Sciences. 2023; 13(20):11545. https://doi.org/10.3390/app132011545

Chicago/Turabian StyleShin, Junho, Eunkyung Jo, Yeohoon Yoon, and Jaehee Jung. 2023. "A System for Interviewing and Collecting Statements Based on Intent Classification and Named Entity Recognition Using Augmentation" Applied Sciences 13, no. 20: 11545. https://doi.org/10.3390/app132011545