1. Introduction

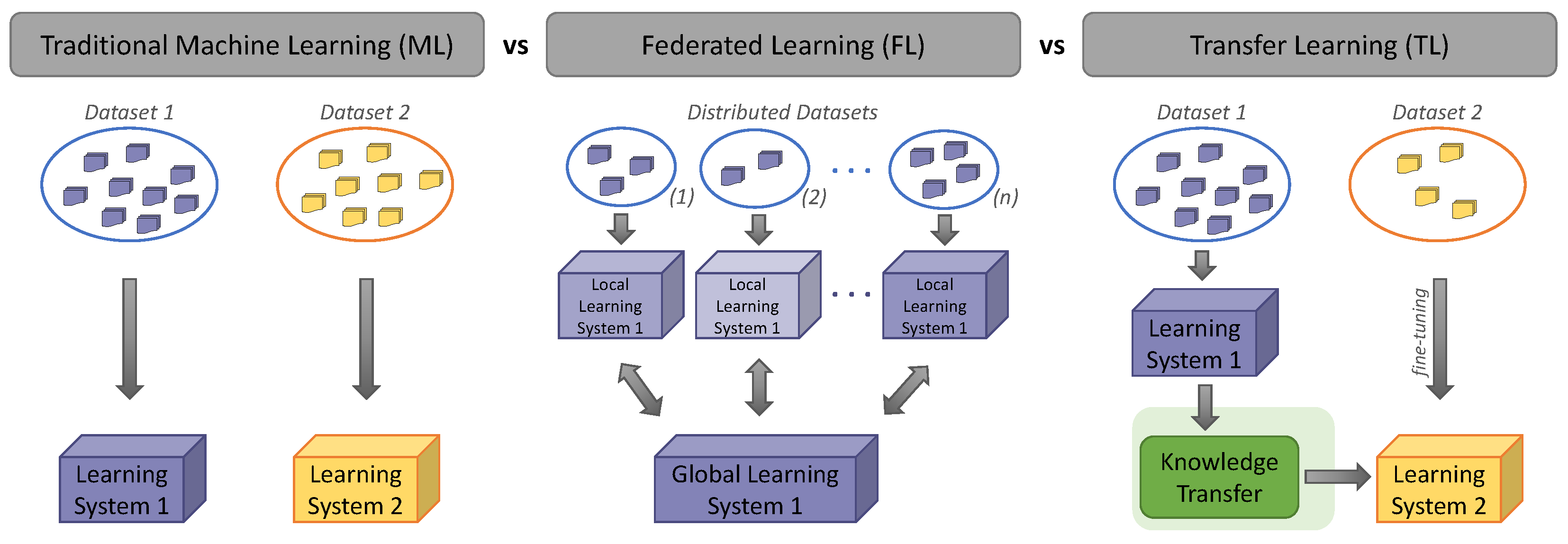

The classic paradigm of machine learning is isolated learning: a single model is trained for each task on a single dataset. Most deep learning methods require large amounts of labelled data, which limits their applicability in the many domains where such data are scarce. In these cases, models that can leverage information from unlabelled data, or from data that cannot be made publicly available for privacy and security reasons, offer a compelling alternative. Transfer learning and federated learning are two such approaches that have emerged in recent years. Transfer learning (TL) is a set of methods that leverage data from other domains or tasks to train a model with greater generalizability, and then typically use a smaller amount of labelled data (via fine-tuning) to specialize the model for a dedicated task. Federated learning (FL), in turn, is a learning paradigm that addresses data management and privacy by training a shared model collaboratively on distributed data, without transferring that data to a central entity. Figure 1 compares federated and transfer learning applications with traditional machine learning approaches.

Figure 1.

Comparison of federated and transfer learning applications with traditional machine learning.

With this in mind, this Special Issue of Applied Sciences on “Federated and Transfer Learning Applications” provides an overview of the latest developments in the field. Twenty-four papers were submitted, and eleven [1,2,3,4,5,6,7,8,9,10,11] were accepted (a 45.8% acceptance rate). On the federated learning side, the accepted papers address aggregation algorithms, effective training, cluster analysis, incentive mechanisms, the influence of unreliable participants, and security/privacy issues; on the transfer learning side, they cover Arabic handwriting recognition, literature-based drug–drug interaction classification, anomaly detection, and chat-based social engineering attack recognition.

2. Federated Learning Approaches

The federated learning approaches published in this Special Issue cover aggregation algorithms, layer regularization for effective training, cluster analysis, blockchain-based incentive mechanisms, the influence of unreliable participants, and security/privacy issues.

- FL aggregation algorithms: Xu et al. [1] proposed an FL framework called FedLA (Federated Lazy Aggregation), which reduces the aggregation frequency to obtain high-quality gradients and improve robustness to non-independent and identically distributed (non-IID) training data. The framework is designed specifically to handle data heterogeneity. Building on FedAvg, FedLA trains devices sampled in consecutive rounds sequentially, without requiring additional information about the devices. Thanks to this sequential training, it performs well on both homogeneous and heterogeneous data, simply by accumulating enough samples and aggregating them in a timely manner. To decide when to aggregate, the authors propose the weighted deviation rate (WDR), which measures the rate of change of the models, to monitor sampling. Furthermore, they propose FedLAM, an extension of FedLA with a Cross-Device Momentum (CDM) mechanism, which alleviates the instability of the vertical optimization, helps the local models escape local optima, and improves the performance of the global model. Compared with benchmark algorithms (e.g., FedAvg, FedProx, and FedDyn), FedLAM performs best in most scenarios, achieving a 2.4–3.7% increase in accuracy over FedAvg on three image classification datasets.
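FedLA and its FedLAM extension build on FedAvg, whose server step is a sample-weighted average of client parameters. The following minimal Python sketch shows only that FedAvg baseline, assuming each client model has been flattened into a list of floats; it does not reproduce the lazy aggregation, WDR monitoring, or CDM mechanism of [1].

```python
from typing import List, Sequence


def fedavg(client_params: Sequence[Sequence[float]],
           num_samples: Sequence[int]) -> List[float]:
    """FedAvg server step: average client parameter vectors, weighted by local sample counts."""
    if not client_params or len(client_params) != len(num_samples):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(num_samples)
    dim = len(client_params[0])
    global_params = [0.0] * dim
    for params, n in zip(client_params, num_samples):
        if len(params) != dim:
            raise ValueError("all clients must share the same parameter shape")
        weight = n / total  # clients holding more data contribute more
        for i, p in enumerate(params):
            global_params[i] += weight * p
    return global_params


if __name__ == "__main__":
    # Two clients; the second holds three times as much data as the first.
    print(fedavg([[1.0, 2.0], [3.0, 4.0]], [100, 300]))  # [2.5, 3.5]
```

Weighting by sample count is what distinguishes FedAvg from a plain arithmetic mean of the client models.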

Li et al. [10] presented FedGSA, a novel federated aggregation algorithm based on gradient similarity, for wireless traffic prediction. Such prediction supports critical aspects of cellular network optimization, including load balancing, congestion control, and the promotion of value-added services. The method combines three key elements: (i) a global data-sharing strategy that mitigates data heterogeneity in multi-client collaborative training, (ii) a sliding-window approach that constructs dual-channel training data, strengthening the model’s feature learning, and (iii) a two-layer aggregation scheme based on gradient similarity that improves the generalization of the global model. In this scheme, a personalized model is first generated for each client by comparing the gradient similarity of the client models, and these models are then aggregated at the central server to obtain the global model. In experiments on two real-world traffic datasets, FedGSA outperformed the commonly used Federated Averaging (FedAvg) algorithm, delivering better prediction results while preserving the privacy of client traffic data.
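The two-layer, gradient-similarity aggregation described for FedGSA can be illustrated with a small sketch. It assumes, hypothetically, that similarity is measured with cosine similarity between flattened client gradients, that negatively aligned clients receive zero weight, and that the server takes a plain mean of the personalized models; the actual weighting rules in [10] may differ.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity of two flattened gradients; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def personalized_models(grads: Sequence[Vector], models: Sequence[Vector]) -> List[List[float]]:
    """Layer 1 (hypothetical rule): each client's model becomes a similarity-weighted average."""
    result = []
    for k, g_k in enumerate(grads):
        # A client keeps full weight on itself; negatively aligned clients get zero weight.
        weights = [1.0 if j == k else max(cosine_similarity(g_k, g_j), 0.0)
                   for j, g_j in enumerate(grads)]
        total = sum(weights)
        dim = len(models[k])
        result.append([sum(w * m[i] for w, m in zip(weights, models)) / total
                       for i in range(dim)])
    return result


def global_model(personalized: Sequence[Vector]) -> List[float]:
    """Layer 2: the server averages the personalized models into one global model."""
    n = len(personalized)
    return [sum(p[i] for p in personalized) / n for i in range(len(personalized[0]))]


if __name__ == "__main__":
    grads = [[1.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]  # clients 0 and 1 agree, client 2 opposes
    models = [[1.0], [3.0], [10.0]]
    personal = personalized_models(grads, models)
    print(personal)  # [[2.0], [2.0], [10.0]]
    print(global_model(personal))  # roughly [4.67]
```

Clients whose gradients point in similar directions end up sharing knowledge, while a dissimilar client keeps a model closer to its own.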

- Layer regularization for effective training: Federated learning is widely used to train neural networks on distributed data, but its main limitation is the performance degradation that occurs when data are heterogeneously distributed. To address this, Son et al. [3] proposed FedCKA, which builds on a more up-to-date understanding of neural networks, namely that only certain important layers require regularization for effective training. The authors also show that Centred Kernel Alignment (CKA) is the most appropriate measure of representational similarity between layers of neural networks, in contrast with previous studies that used the L2 distance (FedProx) or cosine similarity (MOON). Experimental results show that FedCKA outperforms previous state-of-the-art methods on various deep learning tasks, while also improving efficiency and scalability.

- Federated clustering framework: Stallmann and Wilbik [5] presented, for the first time, a federated clustering framework that addresses three key challenges: (i) determining the optimal number of global clusters in a federated dataset, (ii) creating a data partition using a federated fuzzy c-means algorithm, and (iii) validating the quality of the clustering using a federated fuzzy Davies–Bouldin index. The framework is thoroughly evaluated through numerical experiments on both artificial and real-world datasets. The findings indicate that, in most cases, the results of the federated clustering framework are in line with those of its non-federated counterpart. The authors also incorporate an alternative federated fuzzy c-means formulation into the framework and find that it is more reliable on non-IID data, while performing equally well in the IID scenario.

- Blockchain-based incentive mechanism: To address the centralization and lack of incentives in conventional federated learning, Liu et al. [8] introduced BD-FL, a decentralized, blockchain-based federated learning method for edge computing environments. BD-FL integrates all edge servers into the blockchain network, and the edge server nodes that acquire bookkeeping rights collectively aggregate the global model. This addresses the centralization problem of conventional federated learning, whose central server is a single point of failure. An incentive mechanism encourages local devices to participate actively in model training. To minimize training cost, BD-FL employs a preference-based stable matching algorithm that associates local devices with suitable edge servers, effectively reducing communication overhead. Furthermore, the authors propose a reputation-based practical Byzantine fault tolerance (R-PBFT) algorithm to improve the consensus process for global model training within the blockchain. Experimental results show that BD-FL reduces model training time by up to 34.9% compared with baseline federated learning methods, and that the R-PBFT algorithm improves the training efficiency of BD-FL by a further 12.2%.

- Influence of unreliable participants and selective aggregation: Esteves et al. [9] introduced a multi-mobile, Android-based implementation of a federated learning system and investigated how unreliable participants and selective aggregation affect the feasibility of deploying mobile federated learning solutions in real-world scenarios. The authors also showed that a more sophisticated aggregation method, such as a weighted average instead of a simple arithmetic average, can substantially improve the overall performance of a federated learning solution. In light of ongoing challenges in the field, this research argues that imposing eligibility criteria on participants can be beneficial, particularly when dealing with unreliable participants.

- Survey on privacy and security in FL: Gosselin et al. [2] presented a comprehensive survey of privacy and security issues in FL. To this end, the authors first give an overview of FL applications, network topologies, and aggregation methods, and then discuss existing FL studies in terms of the security and privacy protection techniques that aim to mitigate FL vulnerabilities. The findings show that the major current security threats are poisoning, backdoor, and Generative Adversarial Network (GAN) attacks, while inference-based attacks are the most critical threat to FL privacy. Finally, the study outlines open issues in FL and suggests future research directions.
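FedCKA [3] compares layer representations with Centred Kernel Alignment. As a rough illustration, the sketch below computes linear CKA, CKA(X, Y) = ||YᵀX||²_F / (||XᵀX||_F · ||YᵀY||_F) on column-centred activations, for two activation matrices whose rows correspond to the same n inputs. The linear kernel is a common choice but is an assumption here, since the kernel used in [3] is not stated above; plain-Python matrix code is used for clarity rather than speed.

```python
import math
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def center_columns(x: Matrix) -> List[List[float]]:
    """Subtract each column's mean so that every feature has zero mean over the n inputs."""
    n = len(x)
    means = [sum(row[j] for row in x) / n for j in range(len(x[0]))]
    return [[v - means[j] for j, v in enumerate(row)] for row in x]


def cross_frobenius_sq(a: Matrix, b: Matrix) -> float:
    """Return ||A^T B||_F^2 for A of shape (n, p) and B of shape (n, q)."""
    total = 0.0
    for i in range(len(a[0])):
        for j in range(len(b[0])):
            entry = sum(a[r][i] * b[r][j] for r in range(len(a)))
            total += entry * entry
    return total


def linear_cka(x: Matrix, y: Matrix) -> float:
    """Linear CKA between two layers' activations on the same n inputs (rows must align)."""
    if len(x) != len(y):
        raise ValueError("x and y must contain activations for the same inputs")
    xc, yc = center_columns(x), center_columns(y)
    numerator = cross_frobenius_sq(xc, yc)
    denominator = math.sqrt(cross_frobenius_sq(xc, xc)) * math.sqrt(cross_frobenius_sq(yc, yc))
    return numerator / denominator if denominator > 0.0 else 0.0


if __name__ == "__main__":
    acts = [[1.0, 2.0], [3.0, 1.0], [0.0, 5.0], [4.0, 4.0]]
    scaled = [[2.0 * v for v in row] for row in acts]
    print(linear_cka(acts, scaled))  # 1.0 up to rounding: CKA ignores isotropic scaling
```

Unlike the L2 distance, CKA is invariant to isotropic scaling and orthogonal transformations of the features, so two layers that encode the same information in rotated or rescaled coordinates still score as similar.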
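For the federated fuzzy c-means step of Stallmann and Wilbik [5], one straightforward scheme is for each client to send only per-cluster weighted sums, from which the server recomputes the global centres. The sketch below assumes this sum-sharing scheme and the standard fuzzifier m = 2; it omits the selection of the number of clusters and the federated Davies–Bouldin index, and it is not necessarily the exact formulation used in [5].

```python
import math
from typing import List, Sequence, Tuple

Point = Sequence[float]
Stats = Tuple[List[List[float]], List[float]]


def memberships(x: Point, centers: Sequence[Point], m: float) -> List[float]:
    """Standard fuzzy c-means membership of point x in each cluster."""
    dists = [math.dist(x, c) for c in centers]
    if 0.0 in dists:
        # x coincides with one or more centres: share full membership among them.
        hits = [1.0 if d == 0.0 else 0.0 for d in dists]
        return [h / sum(hits) for h in hits]
    exponent = 2.0 / (m - 1.0)
    return [1.0 / sum((d_c / d_k) ** exponent for d_k in dists) for d_c in dists]


def local_statistics(data: Sequence[Point], centers: Sequence[Point], m: float = 2.0) -> Stats:
    """Client side: per-cluster sums of u^m * x and of u^m; raw points never leave the client."""
    dim = len(centers[0])
    sums = [[0.0] * dim for _ in centers]
    weights = [0.0] * len(centers)
    for x in data:
        for c, u in enumerate(memberships(x, centers, m)):
            w = u ** m
            weights[c] += w
            for i in range(dim):
                sums[c][i] += w * x[i]
    return sums, weights


def server_update(stats: Sequence[Stats]) -> List[List[float]]:
    """Server side: new global centres from the pooled client statistics."""
    n_clusters, dim = len(stats[0][1]), len(stats[0][0][0])
    centers = []
    for c in range(n_clusters):
        total_w = sum(weights[c] for _, weights in stats)
        centers.append([sum(sums[c][i] for sums, _ in stats) / total_w for i in range(dim)])
    return centers


if __name__ == "__main__":
    client_a = [[0.0, 0.0], [0.0, 1.0]]
    client_b = [[10.0, 10.0], [10.0, 11.0]]
    centers = [[1.0, 1.0], [9.0, 9.0]]
    for _ in range(10):
        centers = server_update([local_statistics(client_a, centers),
                                 local_statistics(client_b, centers)])
    print(centers)  # close to [[0, 0.5], [10, 10.5]]
```

Because the centre update is a ratio of sums, pooling the clients' sums gives the same centres as running the update on the combined data, which is one reason results can match the non-federated counterpart.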
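BD-FL [8] associates local devices with edge servers through preference-based stable matching. A standard way to compute such a many-to-one matching is device-proposing deferred acceptance (Gale–Shapley) with server capacities, sketched below. The device and server names, preference lists, and capacities are hypothetical (in practice, preferences might be derived from communication cost, for example), and this textbook algorithm is not necessarily the exact variant used in [8].

```python
from typing import Dict, List


def stable_match(device_prefs: Dict[str, List[str]],
                 server_prefs: Dict[str, List[str]],
                 capacity: Dict[str, int]) -> Dict[str, List[str]]:
    """Device-proposing deferred acceptance with per-server capacities."""
    # rank[s][d] = position of device d in server s's preference list (lower is better).
    rank = {s: {d: i for i, d in enumerate(prefs)} for s, prefs in server_prefs.items()}
    next_choice = {d: 0 for d in device_prefs}
    assigned: Dict[str, List[str]] = {s: [] for s in server_prefs}
    free = list(device_prefs)
    while free:
        d = free.pop()
        prefs = device_prefs[d]
        if next_choice[d] >= len(prefs):
            continue  # device has exhausted its list and stays unmatched
        s = prefs[next_choice[d]]
        next_choice[d] += 1
        if d not in rank[s]:
            free.append(d)  # server s does not accept this device; try the next server
            continue
        assigned[s].append(d)
        if len(assigned[s]) > capacity[s]:
            # Over capacity: reject the least preferred device currently held by s.
            worst = max(assigned[s], key=lambda x: rank[s][x])
            assigned[s].remove(worst)
            free.append(worst)
    return assigned


if __name__ == "__main__":
    devices = {"d1": ["s1", "s2"], "d2": ["s1", "s2"], "d3": ["s1", "s2"]}
    servers = {"s1": ["d2", "d1", "d3"], "s2": ["d1", "d2", "d3"]}
    # s1 (capacity 1) keeps its favourite, d2; d1 and d3 are matched to s2.
    print(stable_match(devices, servers, {"s1": 1, "s2": 2}))
```

The result is stable in the usual sense: no device and server would both prefer each other over their current assignment, so no pair has an incentive to deviate.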

3. Transfer Learning Approaches

The transfer learning approaches published in this Special Issue cover Arabic handwriting recognition, literature-based drug–drug interaction classification, anomaly detection, and chat-based social engineering attack recognition.

- Arabic handwriting recognition: Albattah and Albahli [4] proposed an approach to Arabic handwriting recognition that combines deep learning for feature extraction with machine learning for classification, yielding hybrid models. Among the stand-alone deep learning models trained on both datasets (Arabic MNIST and Arabic Character), the best performance was achieved by the transfer learning model on the Arabic MNIST dataset, with an accuracy of 99.67%. The hybrid models also performed strongly on the Arabic MNIST dataset, all of them exceeding 90% accuracy. However, the hybrid models performed particularly poorly on the Arabic Character dataset, which suggests a possible problem with the dataset itself.

- Drug–drug interaction based on the biomedical literature: Zaikis et al. [6] introduced a Knowledge Graph (KG)-based approach to classifying Drug–Drug Interactions (DDIs) in the biomedical literature. Their architecture integrates a KG schema with a unique graph structure and combines a Transformer-based Language Model (LM) with Graph Neural Networks (GNNs). The KG is carefully constructed to capture the knowledge in the DDI Extraction 2013 benchmark dataset without relying on external information sources. Each drug pair is classified based on the context of the sentence in which it appears, using knowledge transfer through semantic representations derived from domain-specific BioBERT weights, which serve as the initial KG states. Evaluated on the DDI classification task of the same dataset, the approach achieves an F1-score of 79.14% across the four positive classes, surpassing the current state-of-the-art methods.

- Anomaly detection for latency in 5G networks: Han et al. [7] proposed a novel approach for detecting latency anomalies and issuing early warnings, applied to abnormal driving scenarios of Automated Guided Vehicles (AGVs) in China Telecom’s private 5G networks. Their ConvAE-Latency model efficiently identifies high-latency cases. Building on this, the authors introduce the LstmAE-TL model, which exploits Long Short-Term Memory (LSTM) networks to issue early warnings of abnormal latency at 15 min intervals. They further employ transfer learning to achieve convergence when training the early-warning model on a limited number of samples. Experimental results show that both models perform well in anomaly detection and prediction, significantly outperforming existing work in this domain.

- Chat-based social engineering attack recognition: Tsinganos et al. [11] applied the terminology and methodologies of dialogue systems to simulate human-to-human dialogues in chat-based social engineering (CSE) attacks. Accurately discerning the genuine intentions of an interlocutor is crucial for an effective real-time defence against CSE attacks. The authors introduce ‘in-context dialogue acts’ that reveal an interlocutor’s intent and the specific information they aim to convey, which in turn enables real-time identification of CSE attacks. These dialogue acts are tailored to the CSE domain and built on a carefully designed ontology, and the authors create an annotated corpus that uses them as classification labels. In addition, they introduce SG-CSE BERT, a BERT-based model that follows the schema-guided approach to dialogue-state tracking, for zero-shot recognition of CSE attack scenarios. Preliminary evaluation results indicate satisfactory performance.
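The approach of Zaikis et al. [6] relies on GNN message passing over a graph whose initial node states come from BioBERT. The sketch below shows one generic mean-aggregation message-passing step followed by a ReLU; the node names, the two-dimensional toy states (standing in for BioBERT embeddings), and the identity weight matrices are all hypothetical, and the layer design is not the one specified in [6].

```python
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = Sequence[Sequence[float]]


def matvec(w: Matrix, v: Sequence[float]) -> Vector:
    """Multiply matrix w (list of rows) by vector v."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in w]


def message_passing_step(states: Dict[str, Vector],
                         edges: Sequence[Tuple[str, str]],
                         w_self: Matrix,
                         w_neigh: Matrix) -> Dict[str, Vector]:
    """One update h_v' = ReLU(W_self h_v + W_neigh * mean of neighbour states), edges undirected."""
    neighbours: Dict[str, List[str]] = {node: [] for node in states}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)
    new_states = {}
    for node, h in states.items():
        nbrs = neighbours[node]
        dim = len(h)
        if nbrs:
            agg = [sum(states[n][i] for n in nbrs) / len(nbrs) for i in range(dim)]
        else:
            agg = [0.0] * dim  # isolated node: no incoming messages
        combined = [s + m for s, m in zip(matvec(w_self, h), matvec(w_neigh, agg))]
        new_states[node] = [max(0.0, x) for x in combined]  # ReLU
    return new_states


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    # Hypothetical toy graph: two drug mentions linked to the sentence they co-occur in.
    states = {"drug_a": [1.0, 0.0], "drug_b": [0.0, 1.0], "sentence": [0.0, 0.0]}
    edges = [("drug_a", "sentence"), ("drug_b", "sentence")]
    print(message_passing_step(states, edges, identity, identity))
```

After one step, the sentence node's state mixes information from both drug mentions, which is the kind of context a pair classifier can then use.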
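Assuming, as their names suggest, that ConvAE-Latency and LstmAE-TL [7] are autoencoder-based, a common way to turn such a model into an anomaly detector is to threshold its reconstruction error. The sketch below assumes the autoencoder has already produced reconstructions and uses a hypothetical mean-plus-k-standard-deviations threshold fitted on normal data; the input values are made-up latency windows, and the actual decision rule in [7] may differ.

```python
import statistics
from typing import List, Sequence

Vector = Sequence[float]


def reconstruction_errors(inputs: Sequence[Vector],
                          reconstructions: Sequence[Vector]) -> List[float]:
    """Mean squared error between each input window and its autoencoder reconstruction."""
    return [statistics.fmean([(a - b) ** 2 for a, b in zip(x, r)])
            for x, r in zip(inputs, reconstructions)]


def fit_threshold(normal_errors: Sequence[float], k: float = 3.0) -> float:
    """Threshold = mean + k * (population) standard deviation of errors on normal data."""
    return statistics.fmean(normal_errors) + k * statistics.pstdev(normal_errors)


def flag_anomalies(errors: Sequence[float], threshold: float) -> List[bool]:
    """A window is flagged as anomalous (e.g. high latency) when its error exceeds the threshold."""
    return [e > threshold for e in errors]


if __name__ == "__main__":
    # Hypothetical latency windows (ms) and their reconstructions.
    normal = reconstruction_errors([[10.0, 11.0], [12.0, 12.0]], [[10.5, 11.0], [12.0, 11.5]])
    threshold = fit_threshold(normal, k=3.0)
    test_errors = reconstruction_errors([[11.0, 10.0], [40.0, 80.0]], [[11.0, 10.5], [12.0, 12.0]])
    print(flag_anomalies(test_errors, threshold))  # [False, True]
```

An autoencoder trained only on normal traffic reconstructs normal windows well, so an unusually large error is taken as evidence of an anomaly.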

Author Contributions

Conceptualization, G.D., P.S.E. and A.A.; writing—original draft preparation, G.D.; writing—review and editing, P.S.E. and A.A. All authors have read and agreed to the published version of the manuscript.

Acknowledgments

We would like to thank the authors of the eleven papers, the reviewers, and the editorial team of Applied Sciences for their valuable contributions to this Special Issue.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Xu, G.; Kong, D.L.; Chen, X.B.; Liu, X. Lazy Aggregation for Heterogeneous Federated Learning. Appl. Sci. 2022, 12, 8515. [Google Scholar] [CrossRef]

- Gosselin, R.; Vieu, L.; Loukil, F.; Benoit, A. Privacy and Security in Federated Learning: A Survey. Appl. Sci. 2022, 12, 9901. [Google Scholar] [CrossRef]

- Son, H.M.; Kim, M.H.; Chung, T.M. Comparisons Where It Matters: Using Layer-Wise Regularization to Improve Federated Learning on Heterogeneous Data. Appl. Sci. 2022, 12, 9943. [Google Scholar] [CrossRef]

- Albattah, W.; Albahli, S. Intelligent Arabic Handwriting Recognition Using Different Standalone and Hybrid CNN Architectures. Appl. Sci. 2022, 12, 10155. [Google Scholar] [CrossRef]

- Stallmann, M.; Wilbik, A. On a Framework for Federated Cluster Analysis. Appl. Sci. 2022, 12, 10455. [Google Scholar] [CrossRef]

- Zaikis, D.; Karalka, C.; Vlahavas, I. A Message Passing Approach to Biomedical Relation Classification for Drug–Drug Interactions. Appl. Sci. 2022, 12, 10987. [Google Scholar] [CrossRef]

- Han, J.; Liu, T.; Ma, J.; Zhou, Y.; Zeng, X.; Xu, Y. Anomaly Detection and Early Warning Model for Latency in Private 5G Networks. Appl. Sci. 2022, 12, 12472. [Google Scholar] [CrossRef]

- Liu, S.; Wang, X.; Hui, L.; Wu, W. Blockchain-Based Decentralized Federated Learning Method in Edge Computing Environment. Appl. Sci. 2023, 13, 1677. [Google Scholar] [CrossRef]

- Esteves, L.; Portugal, D.; Peixoto, P.; Falcao, G. Towards Mobile Federated Learning with Unreliable Participants and Selective Aggregation. Appl. Sci. 2023, 13, 3135. [Google Scholar] [CrossRef]

- Li, L.; Zhao, Y.; Wang, J.; Zhang, C. Wireless Traffic Prediction Based on a Gradient Similarity Federated Aggregation Algorithm. Appl. Sci. 2023, 13, 4036. [Google Scholar] [CrossRef]

- Tsinganos, N.; Fouliras, P.; Mavridis, I. Leveraging Dialogue State Tracking for Zero-Shot Chat-Based Social Engineering Attack Recognition. Appl. Sci. 2023, 13, 5110. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).