Anthropological Comparative Analysis of CCTV Footage in a 3D Virtual Environment

Abstract

1. Introduction

Related Works

2. Materials and Methods

Research Material

| No. | Thumbnail | Description | Purpose |
|---|---|---|---|
| 1. | | Two video recordings showing the crime scene of the incident and the figure of the perpetrator in different poses. They contain information about the anthropometric values of the perpetrator (an image of body proportions that cannot be correlated or read at this stage, because the video lacks scale and the proportions are distorted by the focal length of the lens used). | Evidence for comparative studies. Reconstruction of a virtual camera corresponding to the CCTV camera, from which the 3D scene will then be observed in real scale (item 2 of this table). |
| 2. | | The physical site of the incident. | Creation of a 3D scan, which serves as the scene for the virtually reconstructed CCTV camera (item 1 of this table), in order to recreate the figure of the perpetrator in the correct proportions and dimensions. |
| 3. | | The physical outline of the suspect’s body. | Creation of a 3D scan to study the correlation of spatial geometry in a 3D environment. |

Computer Software

| No. | Icon | Description | Application |
|---|---|---|---|
| 1. | | ADOBE PHOTOSHOP (v. 24.0): a comprehensive graphics program for creating and editing raster graphics. | Single image editing, descriptions, and metrics. |
| 2. | | ADOBE AFTER EFFECTS (v. 24.0): comprehensive video editing and animation software. | Video editing and frame extraction. |
| 3. | | AGISOFT METASHAPE (v. 1.5.0): a program for converting photographs into a textured 3D model (photogrammetry). | Creating a 3D scan of the crime scene and creating a 3D scan of the figure of the suspect. |
| 4. | | PIXEL FARM PFTRACK (v. 4.1.5): software that allows for the extraction of camera movement and spatial position based on video footage, as well as analysis and correction of lens distortions. | Reconstruction and correction of video lens distortion resulting from the short focal length of the CCTV camera. |
| 5. | | AUTODESK 3D STUDIO MAX (v. 2020): software offering a wide range of tools for creating, handling, and editing 3D models and animations in a scalar system. | Integration of all elements in 3D space, transforming them to the real scale, animation of poses of the figures, and spatial correlation of the human figures. |
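The lens distortion correction listed in the software table can be illustrated with a minimal sketch. It assumes a one-parameter radial model on normalized image coordinates, which is a common textbook simplification and not necessarily the model PFTrack applies; the function names and the coefficient `k1` are hypothetical and chosen only for illustration.

```python
def distort(x, y, k1):
    """Apply one-parameter radial distortion to normalized image coordinates.

    Assumed model (illustrative only): x_d = x_u * (1 + k1 * r_u^2).
    A negative k1 gives the barrel distortion typical of short focal lengths.
    """
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2
    return x * factor, y * factor


def undistort(xd, yd, k1, iterations=20):
    """Invert the model by fixed-point iteration, starting from the distorted point."""
    xu, yu = xd, yd
    for _ in range(iterations):
        r2 = xu * xu + yu * yu
        factor = 1.0 + k1 * r2
        xu, yu = xd / factor, yd / factor
    return xu, yu


# Hypothetical example: a point near the image corner under mild barrel distortion.
K1 = -0.2
xd, yd = distort(0.5, 0.3, K1)
xu, yu = undistort(xd, yd, K1)
print(f"distorted: ({xd:.4f}, {yd:.4f})  recovered: ({xu:.4f}, {yu:.4f})")
```

The iteration converges quickly for moderate distortion; strongly distorted footage may need a higher-order model or a different solver.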

- A—evidence, video footage of the incident with the figure of the perpetrator.

- B—photographic and measurement documentation of the crime scene after the incident.

- C—photographic and measurement documentation of the figure of the suspect (comparative material).

- A1—obtaining evidence from the case files—video sequence.

- A2—selection of critical fragments of the video sequence (radically different poses of the perpetrator in order to obtain a meaningful reading of the proportions) and export of selected frames.

- A2r—result of the A2 process, selected individual images (frames).

- A3—correction of the lens distortion (necessary for image interpretation in a 3D application scene) and reconstruction of camera positions and parameters.

- A3r—result of the A3 process, reconstructed 3D camera corresponding to the camera of the evidential video footage.

- B1—post-incident visit to the space of the crime scene.

- B2—placement of markers at the crime scene and taking control measurements with a laser rangefinder for the subsequent calibration of the point cloud of a photogrammetric 3D scan.

- B3—performance of a photo shoot, with a view to subsequent photogrammetric reconstruction.

- B3r—result of the B3 process, a sequence of images for photogrammetric reconstruction.

- B4—creating a 3D scan scale reconstruction based on markers and rangefinder measurements.

- B4r—result of the B4 process, a colored scalar point cloud.

- AB1—synthesis of the results of work on material A and material B; import of the reconstructed 3D camera to the 3D scan space.

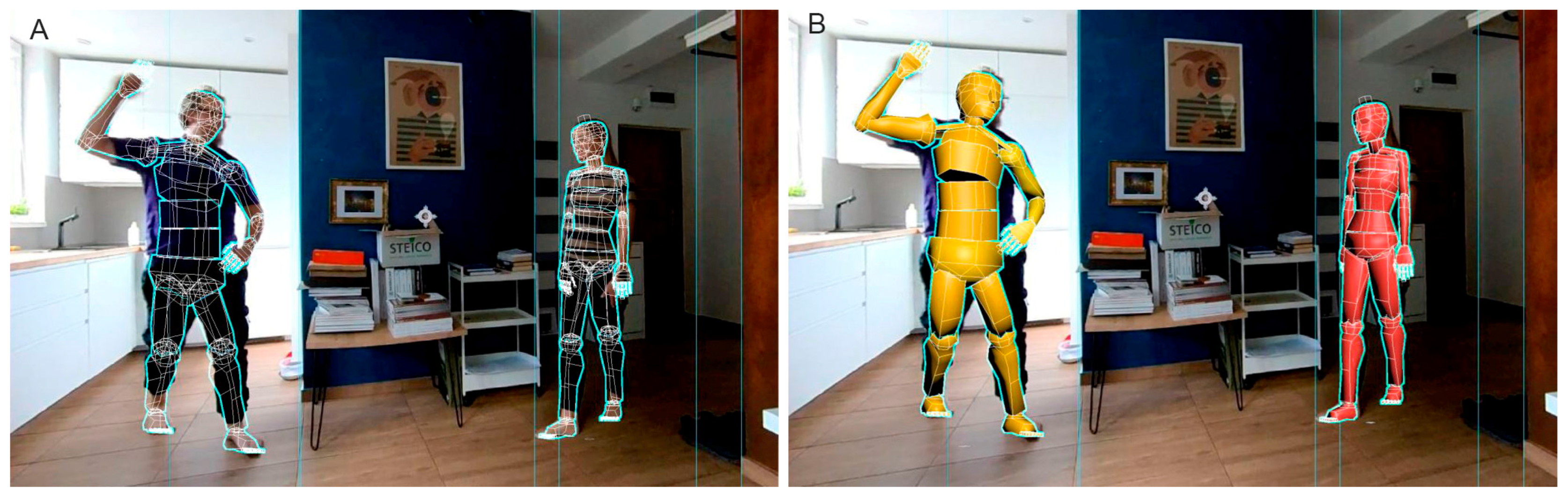

- AB1r—result of the AB1 process: the reconstructed 3D camera is placed in the point cloud, allowing the 3D scan to be examined from the viewpoint of the evidential footage camera. An editable human figure is inserted into the space of the 3D scene, positioned at the location of the figure seen in the footage, animated from the ‘zero pose’ to the pose corresponding to the footage, and adjusted in its anthropometric values and dimensions to the visible evidential image. The figure is then animated back to the zero pose for comparison with another human figure and exported for further work in the 3D environment.

- C1—visual examination of the suspect.

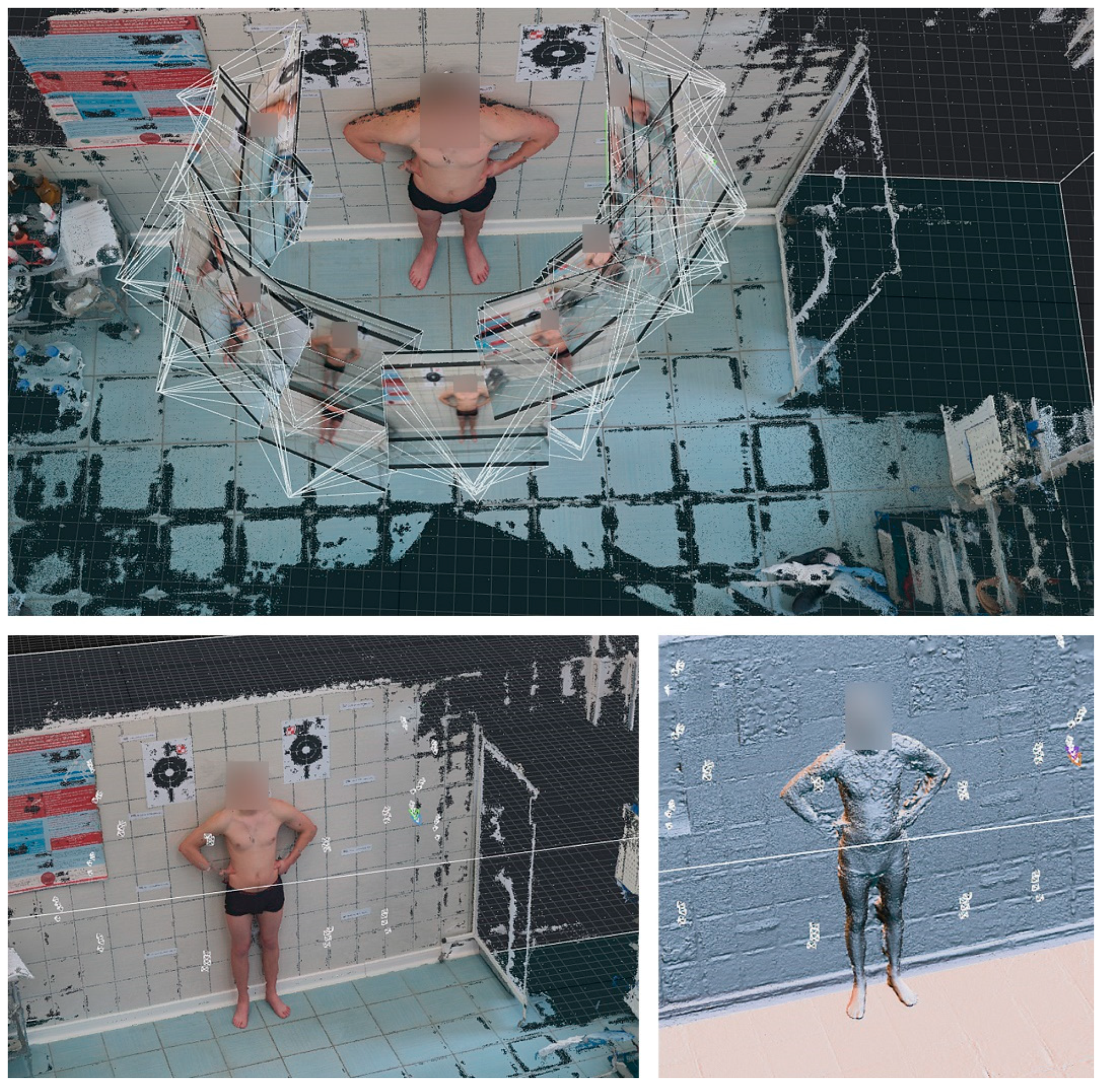

- C2—placement of markers at the site of scanning of the suspect and on their body, taking control measurements with a laser rangefinder for the subsequent calibration of the point cloud of a photogrammetric 3D scan.

- C3—performance of a photo shoot, with a view to subsequent photogrammetric reconstruction.

- C3r—result of the C3 process, a sequence of images for photogrammetric reconstruction.

- C4—creating a 3D scan scale reconstruction based on markers and rangefinder measurements.

- C4r—result of the C4 process, a colored scalar point cloud.

- C5—insertion of an editable human figure in the space of the 3D model of the figure of the suspect, animation from the zero pose to the pose corresponding to the pose from the 3D scan, and adjusting its anthropometric values and dimensions to this scan. Animation returning to the zero pose for comparison with another human figure. Export of the figure prepared in this way for further work in the 3D environment.

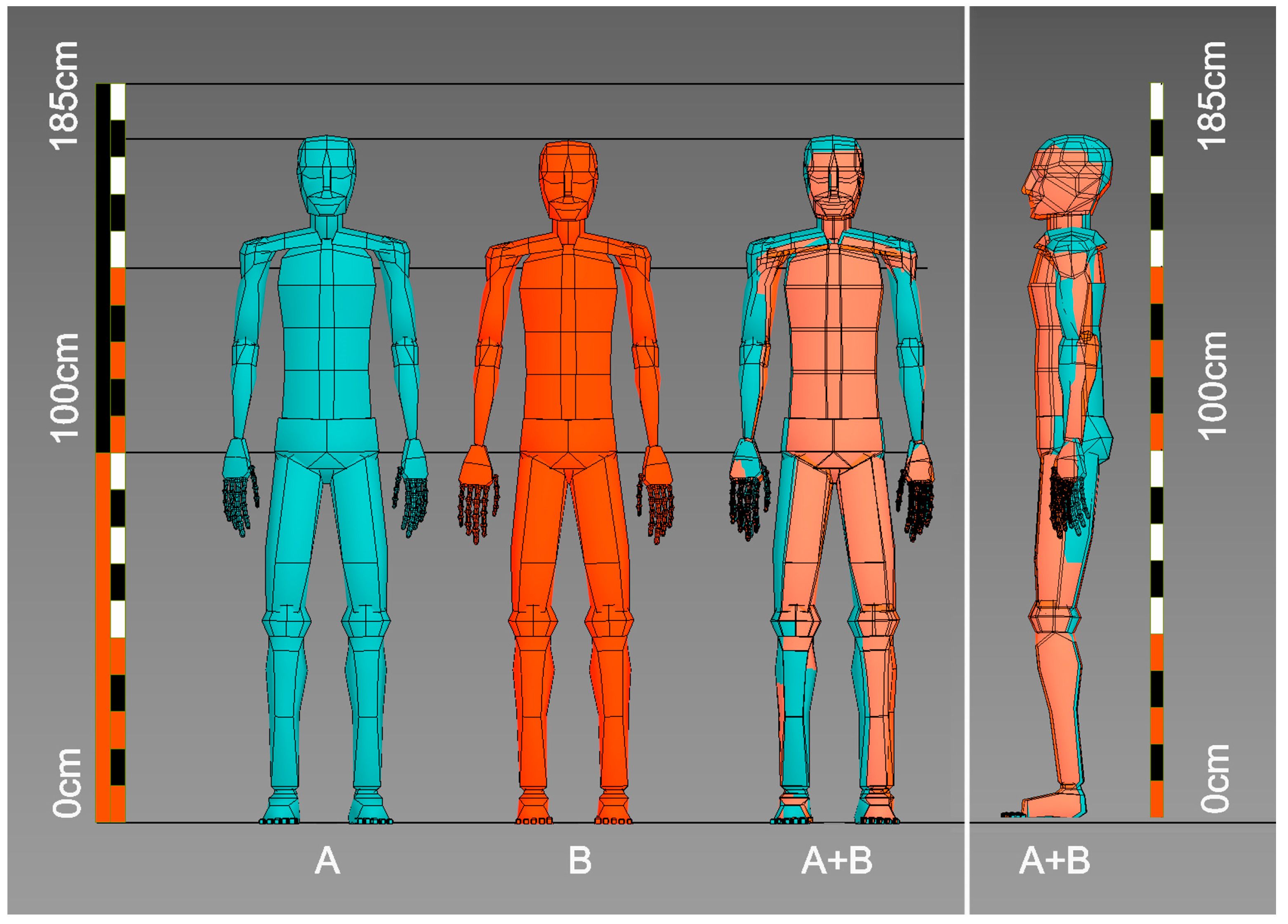

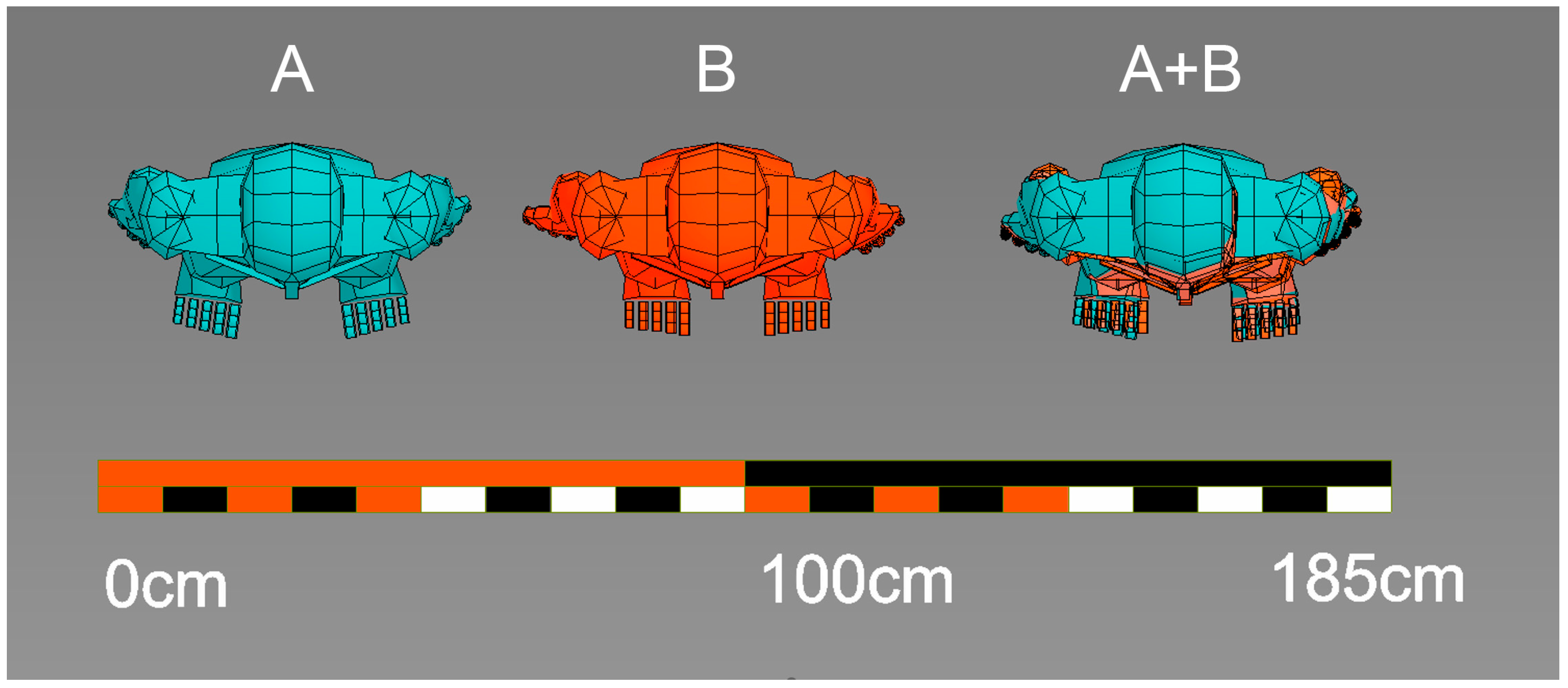

- ABC1—synthesis of the results of work on materials A, B, and C. Import of the reconstructed 3D models of two figures—that of the perpetrator (reconstructed to scale using the substituted 3D scan of the crime scene based on the video footage from the viewpoint of the reconstructed 3D camera) and that of the suspect (obtained via reconstruction based on a 3D body scan). Comparison of results, involving views of the figures of both models from various isometric viewpoints, free of perspective and lens distortions.
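Steps B2/B4 and C2/C4 above bring a photogrammetric point cloud to real scale using markers and laser rangefinder measurements. The following is a minimal sketch of one way such a calibration could be computed, assuming a single uniform scale factor fitted by least squares over marker pairs. The marker names, coordinates, and distances are hypothetical, and this is not presented as the procedure used by Agisoft Metashape or by the authors.

```python
import math


def scale_factor(model_points, measured_distances):
    """Least-squares scale s minimizing sum((s * d_model - d_real)^2) over marker pairs.

    model_points: marker name -> (x, y, z) in the unscaled reconstruction.
    measured_distances: (marker_a, marker_b) -> rangefinder distance in meters.
    """
    num = 0.0
    den = 0.0
    for (a, b), d_real in measured_distances.items():
        d_model = math.dist(model_points[a], model_points[b])
        num += d_model * d_real
        den += d_model * d_model
    return num / den


def apply_scale(points, s):
    """Scale every point of the cloud uniformly about the origin."""
    return [(s * x, s * y, s * z) for x, y, z in points]


# Hypothetical markers in arbitrary reconstruction units.
markers = {"M1": (0.0, 0.0, 0.0), "M2": (2.0, 0.0, 0.0), "M3": (0.0, 1.5, 0.0)}
# Hypothetical rangefinder readings in meters.
readings = {("M1", "M2"): 4.0, ("M1", "M3"): 3.0, ("M2", "M3"): 5.0}

s = scale_factor(markers, readings)
scaled = apply_scale(list(markers.values()), s)

# Residuals show how well one uniform scale explains all control measurements.
for (a, b), d_real in readings.items():
    d_scaled = s * math.dist(markers[a], markers[b])
    print(f"{a}-{b}: measured {d_real:.3f} m, scaled model {d_scaled:.3f} m")
```

Using several marker pairs rather than one lets the residuals serve as a check on the control measurements themselves.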

3. Results

4. Discussion

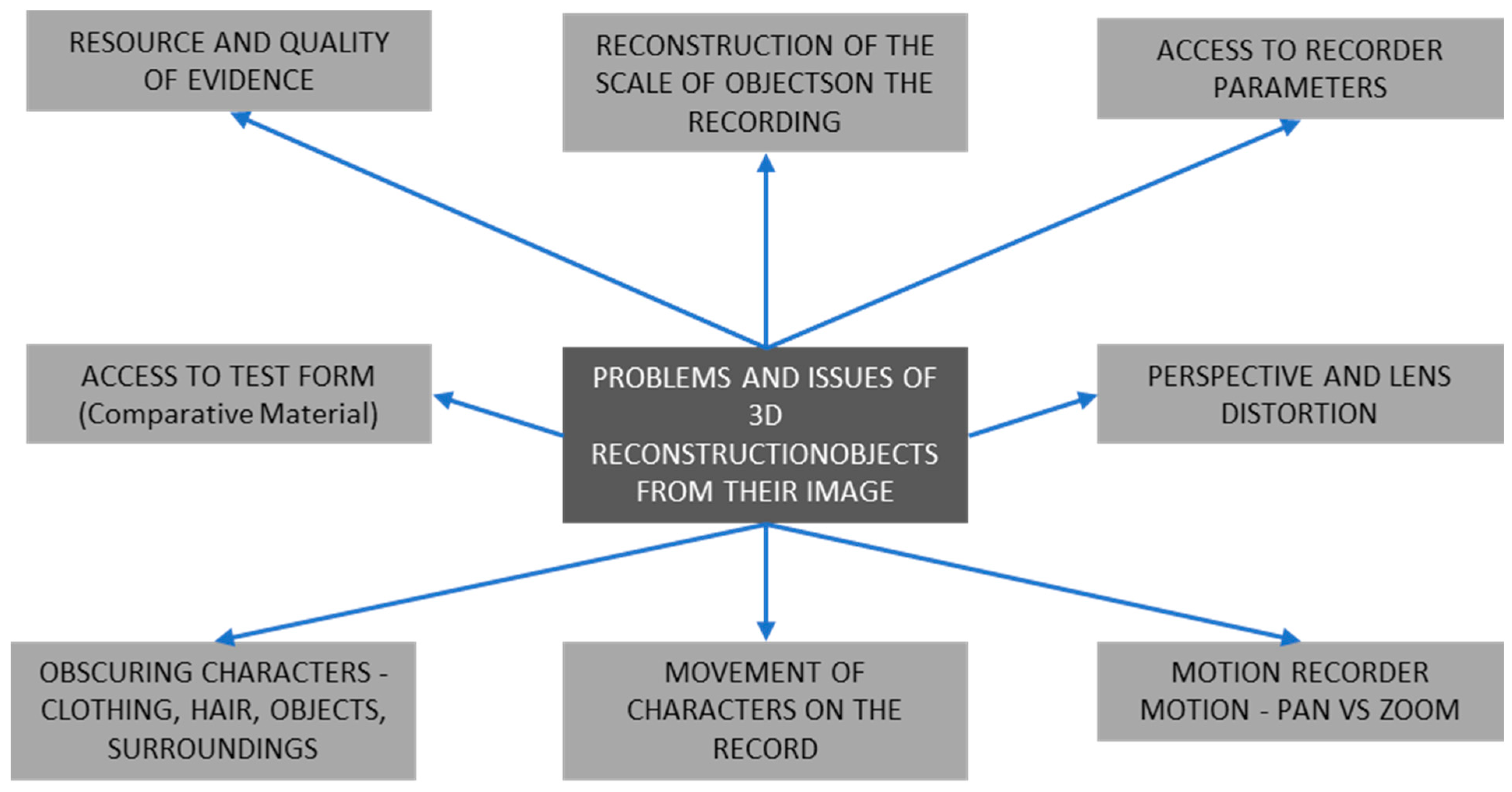

4.1. Problems and Issues Underlying 3D Reconstruction of Objects Based on Their Images

4.2. Resource and Quality of Evidence

4.3. Reconstruction of the Scale of Objects on the Recording

4.4. Access to Recorder Parameters

4.5. Access to Test Form (Comparative Material)

4.6. Perspective and Lens Distortion

4.7. Problem with Reading Character Proportions—Clothing, Goodness, and Hair

4.8. Character Movement in the Recording

4.9. Recorder Motion—Pan vs. Zoom

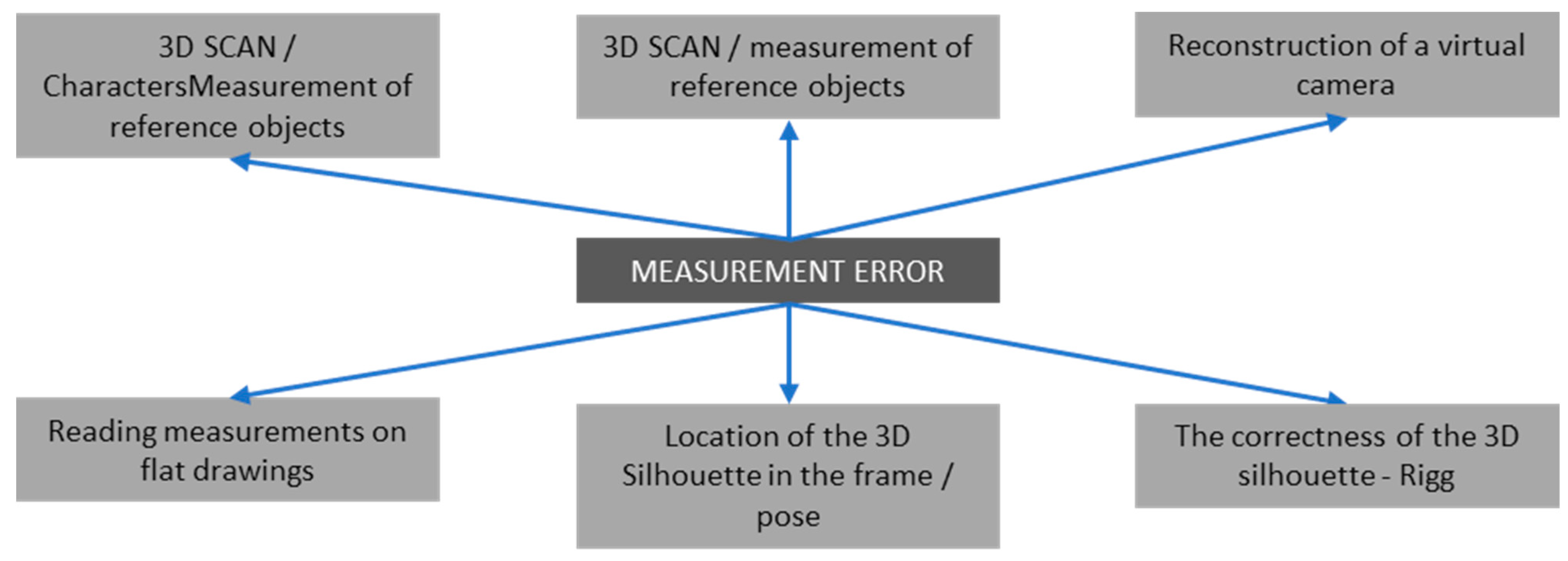

4.10. Measuring Error Analysis

4.10.1. 3D Scan/Measurement of Reference Objects

4.10.2. Reconstruction of a Virtual Camera

4.10.3. Location of the 3D Silhouette in the Frame/Pose

4.10.4. The Correctness of the 3D Silhouette—Rig

4.10.5. Reading Measurements on Flat Drawings

4.11. Additional/Other Notes

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Milliet, Q.; Delémont, O.; Margot, P. A forensic science perspective on the role of images in crime investigation and reconstruction. Sci. Justice 2014, 54, 470–480.

- Rahman, S.Z.A.; Abdullah, S.N.H.S.; Hao, L.E.; Abdulameer, M.H.; Zamani, N.A.; Darus, M.Z.A. Mapping 2D to 3D forensic facial recognition via bio-inspired active appearance model. J. Teknol. 2016, 78, 121–129.

- Han, I. Car speed estimation based on cross-ratio using video data of car-mounted camera (black box). Forensic Sci. Int. 2016, 269, 89–96.

- Arsié, D.; Schuller, B.; Rigoll, G. Multiple Camera Person Tracking in Multiple Layers Combining 2D and 3D Information. In Workshop on Multi-Camera and Multi-Modal Sensor Fusion Algorithms and Multiple Camera P; ECCV: Marseille, France, 2008.

- Johnson, M.; Liscio, E. Suspect Height Estimation Using the Faro Focus(3D) Laser Scanner. J. Forensic Sci. 2015, 60, 1582–1588.

- Mitzel, D.; Diesel, J.; Osep, A.; Rafi, U.; Leibe, B. A fixed-dimensional 3D shape representation for matching partially observed objects in street scenes. In Proceedings of the IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2015; Volume 2015, pp. 1336–1343.

- Buck, U.; Naether, S.; Räss, B.; Jackowski, C.; Thali, M.J. Accident or homicide—Virtual crime scene reconstruction using 3D methods. Forensic Sci. Int. 2013, 225, 75–84.

- Buck, U.; Naether, S.; Braun, M.; Bolliger, S.; Friederich, H.; Jackowski, C.; Aghayev, E.; Christe, A.; Vock, P.; Dirnhofer, R.; et al. Application of 3D documentation and geometric reconstruction methods in traffic accident analysis: With high resolution surface scanning, radiological MSCT/MRI scanning and real data based animation. Forensic Sci. Int. 2007, 170, 20–28.

- Russo, P.; Gualdi-Russo, E.; Pellegrinelli, A.; Balboni, J.; Furini, A. A new approach to obtain metric data from video surveillance: Preliminary evaluation of a low-cost stereo-photogrammetric system. Forensic Sci. Int. 2017, 271, 59–67.

- Xiao, J.; Li, S.; Xu, Q. Video-Based Evidence Analysis and Extraction in Digital Forensic Investigation. IEEE Access 2019, 7, 55432–55442.

- De Angelis, D.; Sala, R.; Cantatore, A.; Poppa, P.; Dufour, M.; Grandi, M.; Cattaneo, C. New method for height estimation of subjects represented in photograms taken from video surveillance systems. Int. J. Leg. Med. 2007, 121, 489–492.

- Liscio, E.; Guryn, H.; Le, Q.; Olver, A. A comparison of reverse projection and PhotoModeler for suspect height analysis. Forensic Sci. Int. 2021, 320, 110690.

- Nguyen, N.H.; Hartley, R. Height measurement for humans in motion using a camera: A comparison of different methods. In Proceedings of the 2012 International Conference on Digital Image Computing Techniques and Applications (DICTA), Fremantle, Australia, 3–5 December 2012.

- Seckiner, D.; Mallett, X.; Roux, C.; Meuwly, D.; Maynard, P. Forensic image analysis—CCTV distortion and artefacts. Forensic Sci. Int. 2018, 285, 77–85.

- Luhmann, T.; Robson, S.; Kyle, S.; Harley, I. Close Range Photogrammetry: Principles, Techniques and Applications; Whittles Publishing: Dunbeath, UK, 2011.

- Jameson, J.; Zamani, N.A.; Abdullah, S.N.S.H.; Ghazali, N.N.A.N. Multiple Frames Combination Versus Single Frame Super Resolution Methods for CCTV Forensic Interpretation. J. Inf. Assur. Secur. 2013, 8, 230–239.

- Bulut, Ö.; Sevim, A. The Efficiency of Anthropological Examinations in Forensic Facial Analysis. Turk. J. Police Stud./Polis Bilim. Derg. 2013, 15, 139–158.

- Lee, J.; Lee, E.D.; Tark, H.O.; Hwang, J.W.; Yoon, D.Y. Efficient height measurement method of surveillance camera image. Forensic Sci. Int. 2008, 177, 17–23.

- Hoogeboom, B.; Alberink, I.; Goos, M. Body height measurements in images. J. Forensic Sci. 2009, 54, 1365–1375.

- Buck, U.; Naether, S.; Kreutz, K.; Thali, M. Geometric facial comparisons in speed-check photographs. Int. J. Leg. Med. 2011, 125, 785–790.

- Bouchrika, I.; Goffredo, M.; Carter, J.; Nixon, M. On using gait in forensic biometrics. J. Forensic Sci. 2011, 56, 882–889.

- Criminisi, A.; Zisserman, A.; Van Gool, L.J.; Bramble, S.K.; Compton, D. New approach to obtain height measurements from video. Investig. Forensic Sci. Technol. 1999, 3576, 227–238.

- Tosti, F.; Nardinocchi, C.; Wahbeh, W.; Ciampini, C.; Marsella, M.; Lopes, P.; Giuliani, S. Human height estimation from highly distorted surveillance image. J. Forensic Sci. 2022, 67, 332–344.

| Paper or Publication Closest to the Field | Common Elements | Research Potential beyond the Analyzed Position |
|---|---|---|
| Johnson, M.; Liscio, E. Suspect Height Estimation Using the Faro Focus (3D) Laser Scanner. J. Forensic Sci. 2015, 60, 1582–1588, doi:10.1111/1556-4029.12829 [5] | 3D scaling of objects based on a 3D scan survey; a solution to the lens distortion issue. | |
| Xiao, J.; Li, S.; Xu, Q. Video-Based Evidence Analysis and Extraction in Digital Forensic Investigation. IEEE Access 2019, 7, 55432–55442, doi:10.1109/ACCESS.2019.2913648 [10] | Rich theoretical background of the field. | |
| De Angelis, D.; Sala, R.; Cantatore, A.; Poppa, P.; Dufour, M.; Grandi, M.; Cattaneo, C. New method for height estimation of subjects represented in photograms taken from video surveillance systems. Int. J. Legal Med. 2007, 121, 489–492, doi:10.1007/S00414-007-0176-4 [11] | New method of object measurement in pictures; no 3D scan data involved; no solution to the lens distortion issue. | |
| Liscio, E.; Guryn, H.; Le, Q.; Olver, A. A comparison of reverse projection and PhotoModeler for suspect height analysis. Forensic Sci. Int. 2021, 320, 110690, doi:10.1016/J.FORSCIINT.2021.110690 [12] | New method of object measurement in pictures; no 3D scan data involved; a solution to the lens distortion issue. | |
| Nguyen, N.H.; Hartley, R. Height measurement for humans in motion using a camera: A comparison of different methods. 2012 Int. Conf. Digit. Image Comput. Tech. Appl. DICTA 2012 2012, doi:10.1109/DICTA.2012.6411679 [13] | New method of object measurement in pictures; no 3D scan data involved; a solution to the lens distortion issue. | |

| Reconstructed 3D Model | 3D Model Color in Figures | Overall Height (cm) | Overall Measurement Error (%) |
|---|---|---|---|
| Man (3D scan) | (colored line, not reproduced) | 184 | 1.08 |
| Man (video) | (colored line, not reproduced) | 182 | |
| Woman (3D scan) | (colored line, not reproduced) | 165 | 1.80 |
| Woman (video) | (colored line, not reproduced) | 168 | |
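The error column in the table above can be related to the height columns with a short calculation. The sketch below assumes the error is the absolute height difference relative to the 3D scan height; the table does not state which height serves as the reference or how the values were rounded, so both are assumptions, and the function name is hypothetical.

```python
def relative_error_percent(reference_cm, estimate_cm):
    """Absolute difference between two heights as a percentage of the reference."""
    return abs(estimate_cm - reference_cm) / reference_cm * 100.0


# Heights taken from the table above: (3D scan, video reconstruction) in cm.
heights = {"Man": (184.0, 182.0), "Woman": (165.0, 168.0)}

for label, (scan_cm, video_cm) in heights.items():
    err = relative_error_percent(scan_cm, video_cm)
    print(f"{label}: |{video_cm:.0f} - {scan_cm:.0f}| / {scan_cm:.0f} = {err:.2f}%")
```

Under this assumption the results come out at roughly 1.1% and 1.8%, in line with the tabulated values; small differences may come from rounding or from unrounded heights behind the table.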

| Study stage | Video footage of the re-enactors in motion | 3D scan of the re-enactors | 3D scan of the surroundings | Reconstruction of the figures of the re-enactors based on a 3D scan | Reconstruction of the characters seen in the video footage | Correlation between the results and analysis of measurement error |
|---|---|---|---|---|---|---|
| Thumbnail | Figure 2 | Figure 3 | Figure 4 | Figure 5 | Figure 6 and Figure 7 | Figure 8, Figure 9 and Figure 11 |
| Method | Video footage | Photogrammetry | Lidar | 3D modeling and animation | 3D modeling and animation | 3D modeling and animation |
| Participants | Two, a man and a woman | Two, a man and a woman | None | None | None | None |
| Equipment | Insta 360 | Canon G7X | iPhone 14 Pro Max | Personal computer | Personal computer | Personal computer |
| Software | Insta Studio | Agisoft Metashape | iPhone 14 Pro Max | Autodesk 3D Studio Max | Autodesk 3D Studio Max | Autodesk 3D Studio Max |
| Goal | The video footage is to be the main basis of the reconstruction material (it is to simulate the evidence in analyses at the post-implementation stage of the method) | A 3D scan needed to create 3D models of the re-enactors for further correlation of 3D geometry with the figure in the video footage | A 3D scan needed to analyze the elimination of lens distortion and reconstruct the real scale of the video footage | A 3D reconstruction of the figures to correlate their shapes and dimensions with the subsequently obtained result of video sequence reconstruction | A 3D reconstruction of the figures to correlate their shapes and dimensions with the previously obtained result of video sequence reconstructions | Superimposition of the results in a 3D environment, without perspective distortions, in identical poses: a reliable and complementary comparison of the anthropometric values of the figures |
| Result | Video sequence | A three-dimensional real-scale point cloud | A three-dimensional real-scale point cloud | Animated 3D models | Animated 3D models | An interactive 3D scene + flat 2D images |
| Validation/comment | A 360 camera with an ultra-wide angle lens was used to create the real-life conditions of CCTV cameras | In order to validate the correctness of the 3D reconstruction of the re-enactors, markers with known set distances from each other were placed in the space of photographs used for subsequent photogrammetric reconstruction. These dimensions were determined with a laser rangefinder. | In order to validate the correctness of the 3D reconstruction of the re-enactors, markers with known set distances from each other were placed in the space of photographs used for subsequent photogrammetric reconstruction. These dimensions were determined with a laser rangefinder. | The correctness of joint locations and limb lengths of the 3D skeleton was validated based on scans of various poses of the re-enactors | The reconstruction involved a prepared 3D scene based on the recreated camera of the video footage. | The superimposed images were presented at real scale, and the measurement error was read. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Maksymowicz, K.; Kuzan, A.; Szleszkowski, Ł.; Tunikowski, W. Anthropological Comparative Analysis of CCTV Footage in a 3D Virtual Environment. Appl. Sci. 2023, 13, 11879. https://doi.org/10.3390/app132111879
