An Intelligent Facial Expression Recognition System Using a Hybrid Deep Convolutional Neural Network for Multimedia Applications

Abstract

1. Introduction

2. Literature Review

Deep-Learning-Based Face Recognition

3. Methodology

3.1. Image Resizing

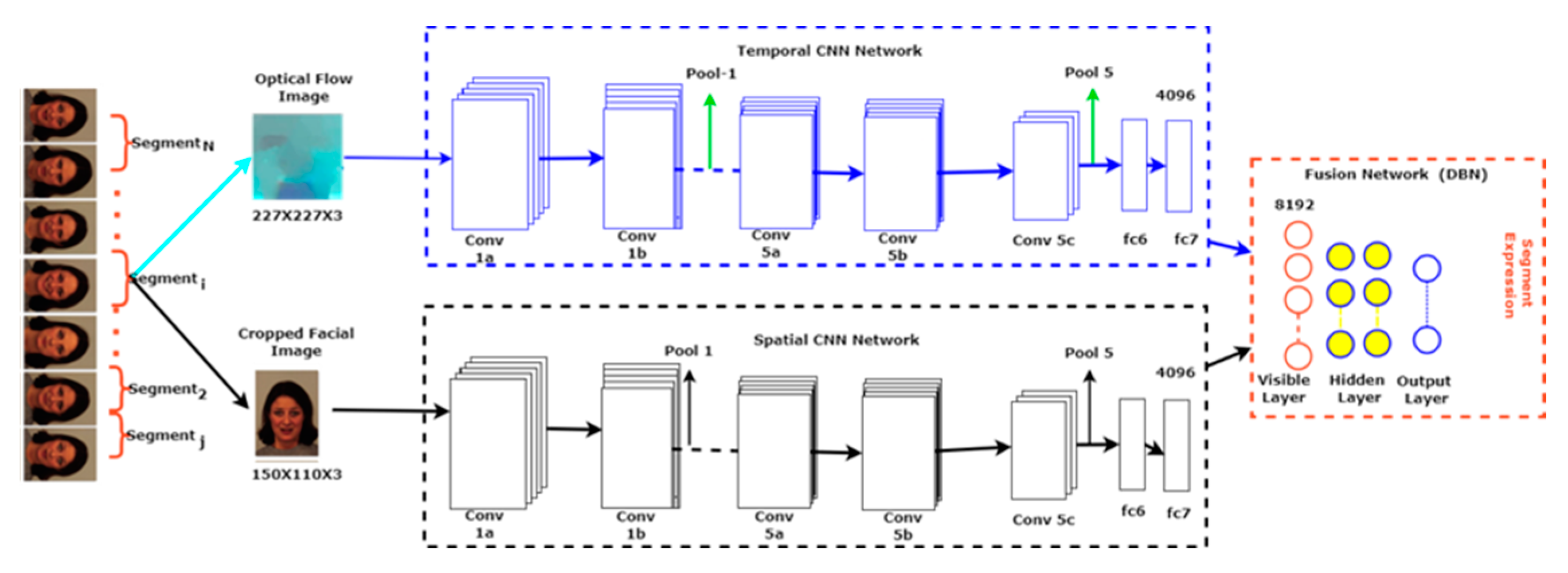

3.2. Spatiotemporal Feature Learning with CNNs

3.3. Spatiotemporal Fusion with DBNs

- Greedy layer-wise training is used as a bottom-up method of unsupervised pretraining. The weights of each restricted Boltzmann machine (RBM) model are updated using the derivative of the log probability of the training data with respect to those weights.

- Network parameters are then updated in a supervised fine-tuning stage with backpropagation. Specifically, fine-tuning compares the input data with the reconstructed data through a reconstruction loss function.
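
The two stages above can be sketched in code. The following is a minimal illustration, not the authors' implementation: it assumes binary units, uses one-step contrastive divergence (CD-1) as the usual approximation to the log-probability gradient, and reports a squared reconstruction error as a stand-in for the reconstruction loss. The function names (`cd1_step`, `train_rbm`), the toy data, the learning rate, and the layer sizes are all hypothetical.

```python
import math
import random


def sigmoid(x):
    """Logistic function used for the conditional unit probabilities."""
    return 1.0 / (1.0 + math.exp(-x))


def hidden_probs(v, W, c):
    """P(h_j = 1 | v) for every hidden unit j."""
    return [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(c))]


def visible_probs(h, W, b):
    """P(v_i = 1 | h) for every visible unit i."""
    return [sigmoid(b[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(b))]


def cd1_step(v0, W, b, c, lr=0.1, rng=random):
    """Apply one CD-1 update in place; return the squared reconstruction error."""
    ph0 = hidden_probs(v0, W, c)                           # positive phase
    h0 = [1.0 if rng.random() < p else 0.0 for p in ph0]   # sampled hidden states
    v1 = visible_probs(h0, W, b)                           # reconstruction (probabilities)
    ph1 = hidden_probs(v1, W, c)                           # negative phase
    for i in range(len(v0)):
        for j in range(len(c)):
            # <v_i h_j>_data - <v_i h_j>_recon approximates d log p(v) / d W_ij
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
    for i in range(len(b)):
        b[i] += lr * (v0[i] - v1[i])
    for j in range(len(c)):
        c[j] += lr * (ph0[j] - ph1[j])
    return sum((a - r) ** 2 for a, r in zip(v0, v1))


def train_rbm(data, n_hidden, epochs=50, lr=0.1, seed=0):
    """Train one RBM; return weights, biases, and mean reconstruction error per epoch."""
    rng = random.Random(seed)
    n_visible = len(data[0])
    W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)] for _ in range(n_visible)]
    b = [0.0] * n_visible
    c = [0.0] * n_hidden
    history = []
    for _ in range(epochs):
        err = sum(cd1_step(v, W, b, c, lr, rng) for v in data)
        history.append(err / len(data))
    return W, b, c, history


# Hypothetical toy data: two binary patterns, repeated.
toy = [[1, 1, 0, 0], [0, 0, 1, 1]] * 10
W1, b1, c1, hist1 = train_rbm(toy, n_hidden=3)

# Greedy stacking: the first RBM's hidden activations feed the next RBM.
layer2_input = [hidden_probs(v, W1, c1) for v in toy]
W2, b2, c2, hist2 = train_rbm(layer2_input, n_hidden=2)
```

In a full deep belief network, the stacked weights would then initialize a feed-forward network that is fine-tuned with backpropagation; that step is omitted here to keep the sketch short.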

3.4. Classification

4. Experiments Analysis

4.1. Dataset

4.2. Performance Metric

4.3. Analysis and Discussion of Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Shao, J.; Qian, Y. Three convolutional neural network models for facial expression recognition in the wild. Neurocomputing 2019, 355, 82–92.

- Joshi, D.; Datta, R.; Fedorovskaya, E.; Luong, Q.-T.; Wang, J.Z.; Li, J.; Luo, J. Aesthetics and emotions in images. IEEE Signal Process. Mag. 2011, 28, 94–115.

- Cootes, T.F.; Taylor, C.J.; Cooper, D.H.; Graham, J. Active Shape Models-Their Training and Application. Comput. Vis. Image Underst. 1995, 61, 38–59.

- Cootes, T.F.; Edwards, G.J.; Taylor, C.J. Active appearance models. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 681–685.

- Kähler, K.; Haber, J.; Seidel, H.-P. Geometry-based muscle modeling for facial animation. In Proceedings of the Graphics Interface, Ottawa, ON, Canada, 7–9 June 2001; Volume 2001, pp. 37–46.

- Fasel, B.; Luettin, J. Automatic facial expression analysis: A survey. Pattern Recognit. 2003, 36, 259–275.

- Li, S.; Deng, W. Deep Facial Expression Recognition: A Survey. IEEE Trans. Affect. Comput. 2022, 13, 1195–1215.

- Sandbach, G.; Zafeiriou, S.; Pantic, M.; Yin, L. Static and dynamic 3D facial expression recognition: A comprehensive survey. Image Vis. Comput. 2012, 30, 683–697.

- Carcagnì, P.; Del Coco, M.; Leo, M.; Distante, C. Facial expression recognition and histograms of oriented gradients: A comprehensive study. SpringerPlus 2015, 4, 1–25.

- Shan, C.; Gritti, T. Learning Discriminative LBP-Histogram Bins for Facial Expression Recognition. In Proceedings of the BMVC, Leeds, UK, 1–4 September 2008; pp. 1–10.

- Lajevardi, S.M.; Lech, M. Averaged Gabor filter features for facial expression recognition. In Proceedings of the 2008 Digital Image Computing: Techniques and Applications, Canberra, Australia, 1–3 December 2008; pp. 71–76.

- Kahou, S.E.; Froumenty, P.; Pal, C. Facial expression analysis based on high dimensional binary features. In Proceedings of the Computer Vision-ECCV 2014 Workshops, Zurich, Switzerland, 6–7 and 12 September 2014; Proceedings, Part II. Springer: Cham, Switzerland, 2015; pp. 135–147.

- Shan, C.; Gong, S.; McOwan, P.W. Facial expression recognition based on local binary patterns: A comprehensive study. Image Vis. Comput. 2009, 27, 803–816.

- Rani, P.; Verma, S.; Yadav, S.P.; Rai, B.K.; Naruka, M.S.; Kumar, D. Simulation of the Lightweight Blockchain Technique Based on Privacy and Security for Healthcare Data for the Cloud System. Int. J. E-Health Med. Commun. 2022, 13, 1–15.

- Rani, P.; Singh, P.N.; Verma, S.; Ali, N.; Shukla, P.K.; Alhassan, M. An Implementation of Modified Blowfish Technique with Honey Bee Behavior Optimization for Load Balancing in Cloud System Environment. Wirel. Commun. Mob. Comput. 2022, 2022, 3365392.

- Kahou, S.E.; Bouthillier, X.; Lamblin, P.; Gulcehre, C.; Michalski, V.; Konda, K.; Jean, S.; Froumenty, P.; Dauphin, Y.; Boulanger-Lewandowski, N. Emonets: Multimodal deep learning approaches for emotion recognition in video. J. Multimodal User Interfaces 2016, 10, 99–111.

- Kalchbrenner, N.; Grefenstette, E.; Blunsom, P. A convolutional neural network for modelling sentences. arXiv 2014, arXiv:1404.2188.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Shamsolmoali, P.; Zareapoor, M.; Yang, J. Convolutional neural network in network (CNNiN): Hyperspectral image classification and dimensionality reduction. IET Image Process. 2019, 13, 246–253.

- Zareapoor, M.; Shamsolmoali, P.; Yang, J. Learning depth super-resolution by using multi-scale convolutional neural network. J. Intell. Fuzzy Syst. 2019, 36, 1773–1783.

- Sariyanidi, E.; Gunes, H.; Cavallaro, A. Automatic analysis of facial affect: A survey of registration, representation, and recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1113–1133.

- Sebe, N.; Lew, M.S.; Sun, Y.; Cohen, I.; Gevers, T.; Huang, T.S. Authentic facial expression analysis. Image Vis. Comput. 2007, 25, 1856–1863.

- Zhao, G.; Pietikainen, M. Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 915–928.

- Gharavian, D.; Bejani, M.; Sheikhan, M. Audio-visual emotion recognition using FCBF feature selection method and particle swarm optimization for fuzzy ARTMAP neural networks. Multimed. Tools Appl. 2017, 76, 2331–2352.

- Jain, N.; Kumar, S.; Kumar, A.; Shamsolmoali, P.; Zareapoor, M. Hybrid deep neural networks for face emotion recognition. Pattern Recognit. Lett. 2018, 115, 101–106.

- Dellandrea, E.; Liu, N.; Chen, L. Classification of affective semantics in images based on discrete and dimensional models of emotions. In Proceedings of the 2010 International Workshop on Content Based Multimedia Indexing (CBMI), Grenoble, France, 23–25 June 2010; pp. 1–6.

- Sohail, A.S.M.; Bhattacharya, P. Classifying facial expressions using level set method based lip contour detection and multi-class support vector machines. Int. J. Pattern Recognit. Artif. Intell. 2011, 25, 835–862.

- Khan, S.A.; Hussain, A.; Usman, M.; Nazir, M.; Riaz, N.; Mirza, A.M. Robust face recognition using computationally efficient features. J. Intell. Fuzzy Syst. 2014, 27, 3131–3143.

- Chelali, F.Z.; Djeradi, A. Face Recognition Using MLP and RBF Neural Network with Gabor and Discrete Wavelet Transform Characterization: A Comparative Study. Math. Probl. Eng. 2015, 2015, e523603.

- Ryu, S.-J.; Kirchner, M.; Lee, M.-J.; Lee, H.-K. Rotation invariant localization of duplicated image regions based on Zernike moments. IEEE Trans. Inf. Forensics Secur. 2013, 8, 1355–1370.

- Kanade, T.; Cohn, J.F.; Tian, Y. Comprehensive database for facial expression analysis. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition (Cat. No. PR00580), Grenoble, France, 28–30 March 2000; pp. 46–53.

- Pantic, M.; Valstar, M.; Rademaker, R.; Maat, L. Web-based database for facial expression analysis. In Proceedings of the 2005 IEEE International Conference on Multimedia and Expo, Amsterdam, The Netherlands, 6 July 2005.

- Wang, S.; Wu, C.; He, M.; Wang, J.; Ji, Q. Posed and spontaneous expression recognition through modeling their spatial patterns. Mach. Vis. Appl. 2015, 26, 219–231.

- Gunes, H.; Hung, H. Is automatic facial expression recognition of emotions coming to a dead end? The rise of the new kids on the block. Image Vis. Comput. 2016, 55, 6–8.

- Sajjad, M.; Ullah, F.U.M.; Ullah, M.; Christodoulou, G.; Cheikh, F.A.; Hijji, M.; Muhammad, K.; Rodrigues, J.J. A comprehensive survey on deep facial expression recognition: Challenges, applications, and future guidelines. Alex. Eng. J. 2023, 68, 817–840.

- Ansari, G.; Rani, P.; Kumar, V. A Novel Technique of Mixed Gas Identification Based on the Group Method of Data Handling (GMDH) on Time-Dependent MOX Gas Sensor Data. In Proceedings of International Conference on Recent Trends in Computing; Mahapatra, R.P., Peddoju, S.K., Roy, S., Parwekar, P., Eds.; Lecture Notes in Networks and Systems; Springer Nature: Singapore, 2023; Volume 600, pp. 641–654. ISBN 978-981-19882-4-0.

- Visin, F.; Kastner, K.; Cho, K.; Matteucci, M.; Courville, A.; Bengio, Y. ReNet: A Recurrent Neural Network Based Alternative to Convolutional Networks. arXiv 2015, arXiv:1505.00393.

- Sulong, G.B.; Wimmer, M.A. Image hiding by using spatial domain steganography. Wasit J. Comput. Math. Sci. 2023, 2, 39–45.

- Zhang, T.; Zheng, W.; Cui, Z.; Zong, Y.; Li, Y. Spatial–temporal recurrent neural network for emotion recognition. IEEE Trans. Cybern. 2018, 49, 839–847.

- Bhola, B.; Kumar, R.; Rani, P.; Sharma, R.; Mohammed, M.A.; Yadav, K.; Alotaibi, S.D.; Alkwai, L.M. Quality-enabled decentralized dynamic IoT platform with scalable resources integration. IET Commun. 2022.

- Heidari, A.; Navimipour, N.J.; Jamali, M.A.J.; Akbarpour, S. A hybrid approach for latency and battery lifetime optimization in IoT devices through offloading and CNN learning. Sustain. Comput. Inform. Syst. 2023, 39, 100899.

- Alluhaidan, A.S.; Saidani, O.; Jahangir, R.; Nauman, M.A.; Neffati, O.S. Speech Emotion Recognition through Hybrid Features and Convolutional Neural Network. Appl. Sci. 2023, 13, 4750.

- Triki, N.; Karray, M.; Ksantini, M. A real-time traffic sign recognition method using a new attention-based deep convolutional neural network for smart vehicles. Appl. Sci. 2023, 13, 4793.

- Zhou, W.; Jia, J. Training convolutional neural network for sketch recognition on large-scale dataset. Int. Arab. J. Inf. Technol. 2020, 17, 82–89.

- Zouari, R.; Boubaker, H.; Kherallah, M. RNN-LSTM Based Beta-Elliptic Model for Online Handwriting Script Identification. Int. Arab. J. Inf. Technol. 2018, 15, 532–539.

- Barros, P.; Wermter, S. Developing crossmodal expression recognition based on a deep neural model. Adapt. Behav. 2016, 24, 373–396.

- Zhang, K.; Huang, Y.; Du, Y.; Wang, L. Facial expression recognition based on deep evolutional spatial-temporal networks. IEEE Trans. Image Process. 2017, 26, 4193–4203.

- Jeong, M.; Ko, B.C. Driver’s Facial Expression Recognition in Real-Time for Safe Driving. Sensors 2018, 18, 4270.

- Ullah, M.; Ullah, H.; Alseadonn, I.M. Human action recognition in videos using stable features. Signal Image Process. Int. J. 2017, 8, 1–10.

- Wang, S.-H.; Phillips, P.; Dong, Z.-C.; Zhang, Y.-D. Intelligent facial emotion recognition based on stationary wavelet entropy and Jaya algorithm. Neurocomputing 2018, 272, 668–676.

- Yan, H. Collaborative discriminative multi-metric learning for facial expression recognition in video. Pattern Recognit. 2018, 75, 33–40.

- Samadiani, N.; Huang, G.; Cai, B.; Luo, W.; Chi, C.-H.; Xiang, Y.; He, J. A Review on Automatic Facial Expression Recognition Systems Assisted by Multimodal Sensor Data. Sensors 2019, 19, 1863.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- Hinton, G.E. Training products of experts by minimizing contrastive divergence. Neural Comput. 2002, 14, 1771–1800.

- Hinton, G.E.; Osindero, S.; Teh, Y.-W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

- Jain, D.K.; Shamsolmoali, P.; Sehdev, P. Extended deep neural network for facial emotion recognition. Pattern Recognit. Lett. 2019, 120, 69–74.

- Yaddaden, Y.; Adda, M.; Bouzouane, A.; Gaboury, S.; Bouchard, B. User action and facial expression recognition for error detection system in an ambient assisted environment. Expert Syst. Appl. 2018, 112, 173–189.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Lyons, M.J.; Akamatsu, S.; Kamachi, M.; Gyoba, J.; Budynek, J. The Japanese female facial expression (JAFFE) database. In Proceedings of the Third IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 14–16 April 1998; pp. 14–16.

- Langner, O.; Dotsch, R.; Bijlstra, G.; Wigboldus, D.H.; Hawk, S.T.; Van Knippenberg, A.D. Presentation and validation of the Radboud Faces Database. Cogn. Emot. 2010, 24, 1377–1388.

- Tsalera, E.; Papadakis, A.; Samarakou, M.; Voyiatzis, I. Feature Extraction with Handcrafted Methods and Convolutional Neural Networks for Facial Emotion Recognition. Appl. Sci. 2022, 12, 8455.

- Lundqvist, D.; Flykt, A.; Öhman, A. Karolinska directed emotional faces. Cogn. Emot. 1998, 91.

- Rani, P.; Sharma, R. Intelligent transportation system for internet of vehicles based vehicular networks for smart cities. Comput. Electr. Eng. 2023, 105, 108543.

- Shah, J.H.; Sharif, M.; Yasmin, M.; Fernandes, S.L. Facial expressions classification and false label reduction using LDA and threefold SVM. Pattern Recognit. Lett. 2020, 139, 166–173.

- Barman, A.; Dutta, P. Facial expression recognition using distance and shape signature features. Pattern Recognit. Lett. 2021, 145, 254–261.

- Liang, D.; Yang, J.; Zheng, Z.; Chang, Y. A facial expression recognition system based on supervised locally linear embedding. Pattern Recognit. Lett. 2005, 26, 2374–2389.

- Zhao, X.; Zhang, S. Facial expression recognition using local binary patterns and discriminant kernel locally linear embedding. EURASIP J. Adv. Signal Process. 2012, 2012, 20.

- Kas, M.; Ruichek, Y.; Messoussi, R. New framework for person-independent facial expression recognition combining textural and shape analysis through new feature extraction approach. Inf. Sci. 2021, 549, 200–220.

- Eng, S.K.; Ali, H.; Cheah, A.Y.; Chong, Y.F. Facial expression recognition in JAFFE and KDEF Datasets using histogram of oriented gradients and support vector machine. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2019; Volume 705, p. 012031.

- Islam, B.; Mahmud, F.; Hossain, A.; Goala, P.B.; Mia, M.S. A facial region segmentation based approach to recognize human emotion using fusion of HOG & LBP features and artificial neural network. In Proceedings of the 2018 4th International Conference on Electrical Engineering and Information & Communication Technology (iCEEiCT), Dhaka, Bangladesh, 13–15 September 2018; pp. 642–646.

- Yaddaden, Y. An efficient facial expression recognition system with appearance-based fused descriptors. Intell. Syst. Appl. 2023, 17, 200166.

- Olivares-Mercado, J.; Toscano-Medina, K.; Sanchez-Perez, G.; Portillo-Portillo, J.; Perez-Meana, H.; Benitez-Garcia, G. Analysis of hand-crafted and learned feature extraction methods for real-time facial expression recognition. In Proceedings of the 2019 7th International Workshop on Biometrics and Forensics (IWBF), Cancun, Mexico, 2–3 May 2019; pp. 1–6.

- Yaddaden, Y.; Adda, M.; Bouzouane, A. Facial expression recognition using locally linear embedding with lbp and hog descriptors. In Proceedings of the 2020 2nd International Workshop on Human-Centric Smart Environments for Health and Well-Being (IHSH), Boumerdes, Algeria, 9–10 February 2021; pp. 221–226.

- Lekdioui, K.; Messoussi, R.; Ruichek, Y.; Chaabi, Y.; Touahni, R. Facial decomposition for expression recognition using texture/shape descriptors and SVM classifier. Signal Process. Image Commun. 2017, 58, 300–312.

- Li, R.; Liu, P.; Jia, K.; Wu, Q. Facial expression recognition under partial occlusion based on gabor filter and gray-level cooccurrence matrix. In Proceedings of the 2015 International Conference on Computational Intelligence and Communication Networks (CICN), Jabalpur, India, 12–14 December 2015; pp. 347–351.

| Dataset | FE | AN | DI | HA | SA | SU | NE | Total | Resolution |
|---|---|---|---|---|---|---|---|---|---|
| JAFFE | 30 | 30 | 28 | 29 | 30 | 30 | 30 | 207 | 256 × 256 |
| KDEF | 140 | 140 | 140 | 140 | 140 | 140 | 140 | 980 | 562 × 762 |
| RaFD | 67 | 67 | 67 | 67 | 67 | 67 | 67 | 469 | 681 × 1024 |

| Class | JAFFE Precision | JAFFE Recall | JAFFE F1-Score | KDEF Precision | KDEF Recall | KDEF F1-Score | RaFD Precision | RaFD Recall | RaFD F1-Score |
|---|---|---|---|---|---|---|---|---|---|
| NE | 97.49 | 98.1 | 98.97 | 95.88 | 96.3 | 96.23 | 97.14 | 99.0 | 98.32 |
| AN | 99.1 | 99.3 | 99.00 | 94.97 | 93.8 | 94.18 | 98.29 | 99.8 | 99.48 |
| DI | 97.73 | 97.2 | 97.43 | 94.11 | 95.4 | 94.97 | 95.97 | 96.9 | 96.90 |
| FE | 99.82 | 99.4 | 8.96 | 95.55 | 96.2 | 96.51 | 99.08 | 98.3 | 98.39 |
| HA | 96.39 | 97.9 | 97.11 | 95.80 | 94.2 | 95.12 | 98.95 | 99.2 | 99.56 |
| SA | 99.97 | 99.7 | 99.58 | 97.04 | 97.2 | 97.10 | 98.85 | 98.5 | 98.47 |
| SU | 95.79 | 95.6 | 95.71 | 95.27 | 95.8 | 95.83 | 99.50 | 99.1 | 98.84 |
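
Per-class precision, recall, and F1-score such as those tabulated above are conventionally derived from a confusion matrix. The sketch below shows that standard computation. The 3 × 3 matrix and the helper names `per_class_metrics` and `accuracy` are hypothetical and are not taken from the paper's experiments.

```python
def per_class_metrics(confusion, labels):
    """Return {label: (precision, recall, f1)} in percent.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(labels)
    results = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n)) - tp  # predicted k, true class differs
        fn = sum(confusion[k]) - tp                       # true k, predicted otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        results[label] = (100 * precision, 100 * recall, 100 * f1)
    return results


def accuracy(confusion):
    """Overall accuracy in percent: correct predictions over all samples."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(len(confusion)))
    return 100 * correct / total


# Hypothetical confusion matrix for three expression classes.
labels = ["HA", "SA", "SU"]
confusion = [
    [9, 1, 0],
    [0, 10, 0],
    [1, 0, 9],
]
metrics = per_class_metrics(confusion, labels)
overall = accuracy(confusion)
```

Because F1 is the harmonic mean of precision and recall, it always lies between the two, which gives a quick sanity check on tabulated values.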

| Authors | Reference Number | Year of Study | Accuracy (%) |
|---|---|---|---|
| Liang, D., et al. | [67] | 2005 | 95 |
| Zhao, X., and Zhang, S. | [68] | 2012 | 77.14 |
| Islam, et al. | [71] | 2018 | 93.51 |
| Eng, S.K., et al. | [70] | 2019 | 79.19 |
| Jain, et al. | [57] | 2019 | 95.23 |
| Shah, et al. | [65] | 2020 | 93.96 |
| Barman, A., and Dutta, P. | [66] | 2021 | 96.4 |
| Kas, et al. | [69] | 2021 | 56.67 |
| Yaddaden, Y. | [72] | 2023 | 96.17 |
| Obaid, A.J., and Alrammahi, H.K. | Proposed model | 2023 | 98.14 |

| Authors | Reference Number | Year of Study | Accuracy (%) |
|---|---|---|---|
| Lekdioui, K., et al. | [75] | 2017 | 88.25 |
| Olivares-Mercado, et al. | [73] | 2019 | 77.86 |
| Eng, S.K., et al. | [70] | 2019 | 80.95 |
| Kas, et al. | [69] | 2021 | 76.73 |
| Yaddaden, et al. | [74] | 2021 | 85 |
| Yaddaden, Y. | [72] | 2023 | 90.12 |
| Obaid, A.J., and Alrammahi, H.K. | Proposed model | 2023 | 95.29 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Obaid, A.J.; Alrammahi, H.K. An Intelligent Facial Expression Recognition System Using a Hybrid Deep Convolutional Neural Network for Multimedia Applications. Appl. Sci. 2023, 13, 12049. https://doi.org/10.3390/app132112049
