Autonomous Fever Detection, Medicine Delivery, and Environmental Disinfection for Pandemic Prevention

Abstract

1. Introduction

- Novel algorithms were developed for automatic fever detection and medicine-taking recognition that maintain both human safety and comfort, and for environmental disinfection that reaches blind spots.

- A quantitative assessment method was developed to evaluate the reduction in infection risk achieved by using robots, based on the duration and proximity of contact with confirmed cases.

- By integrating multiple sensors with a collision-free path planning strategy, an autonomous system capable of navigating the mobile robot manipulator among static obstacles and moving people was developed and applied in a field study in a hospital-like environment.

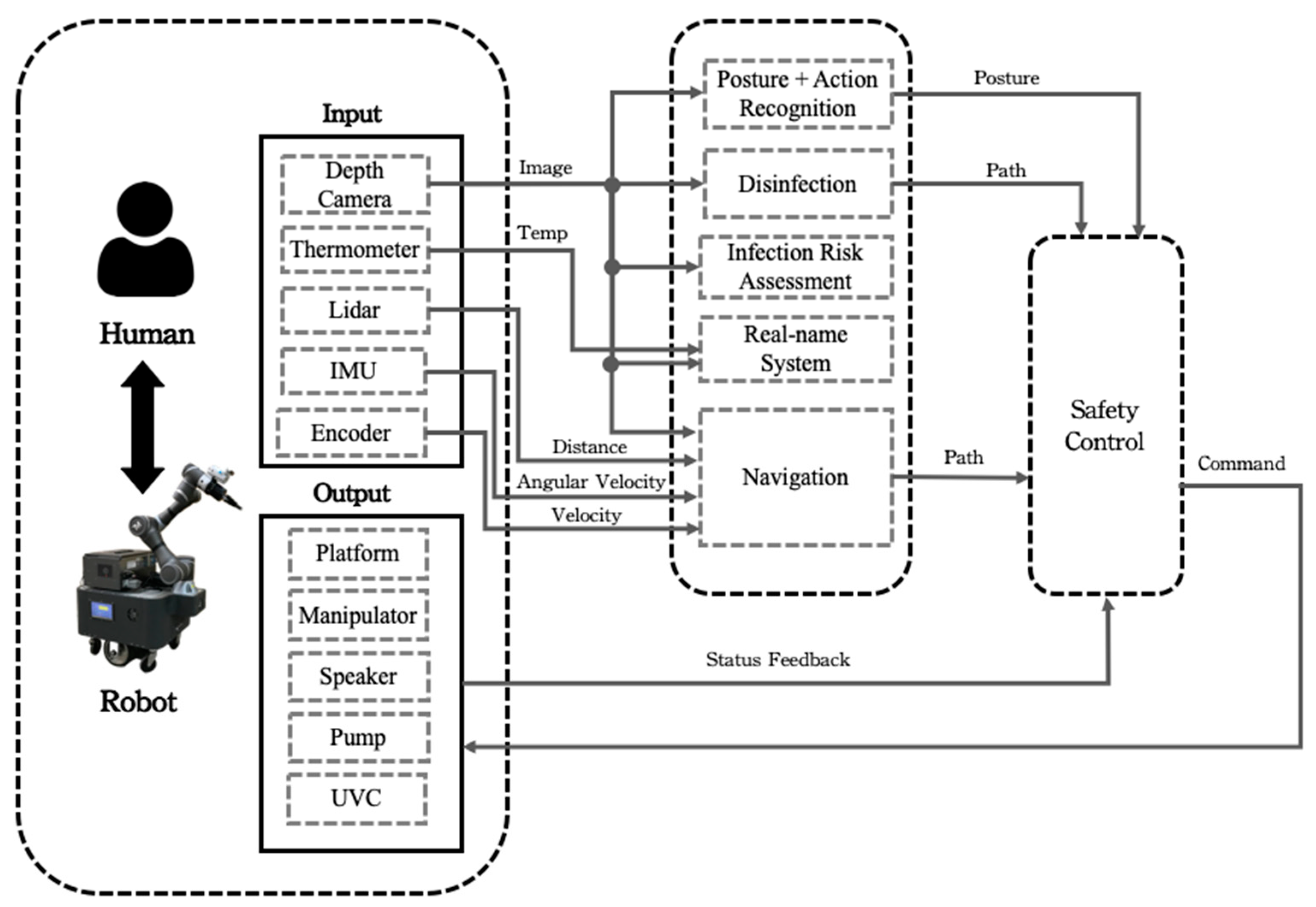

2. Proposed System

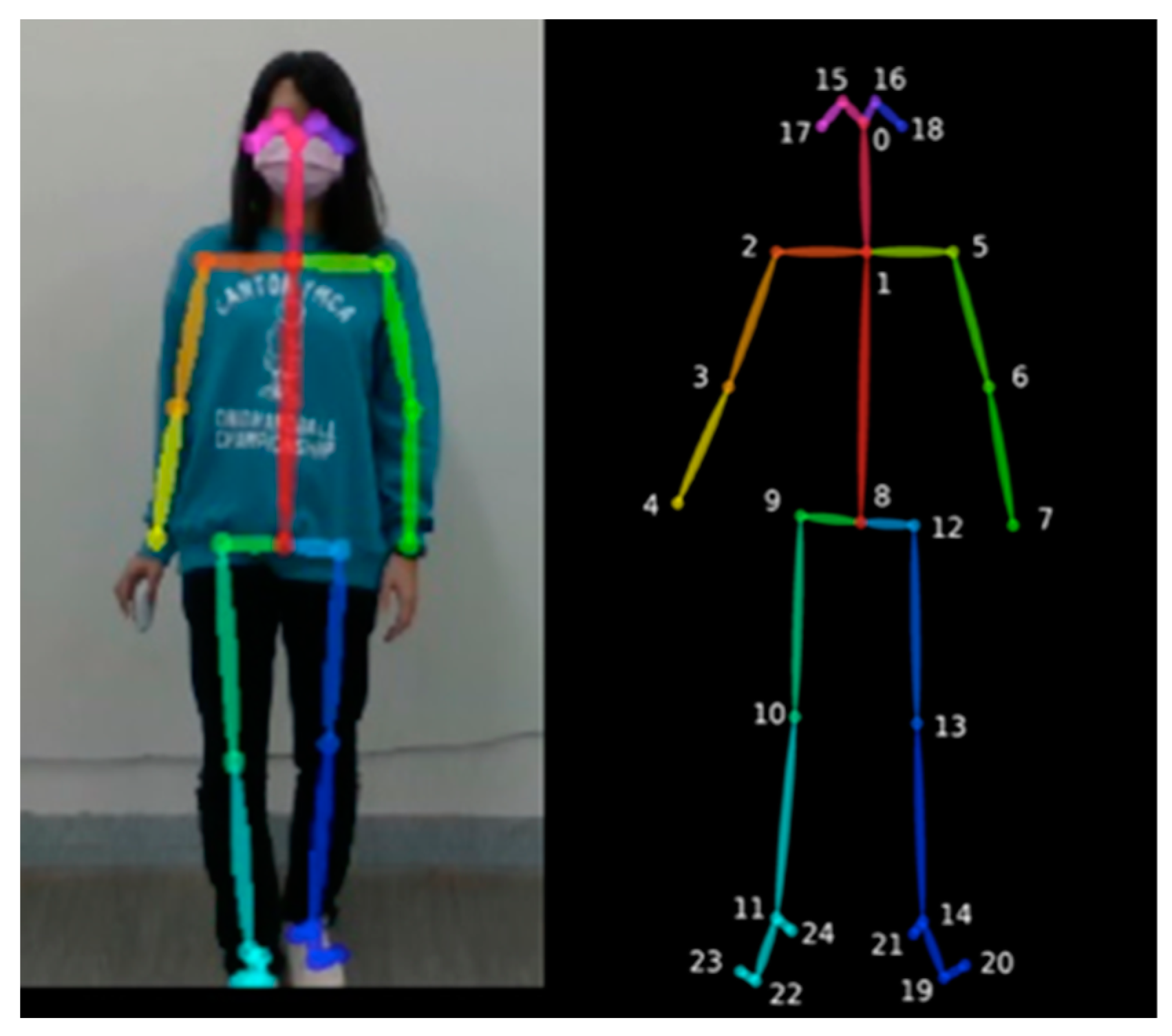

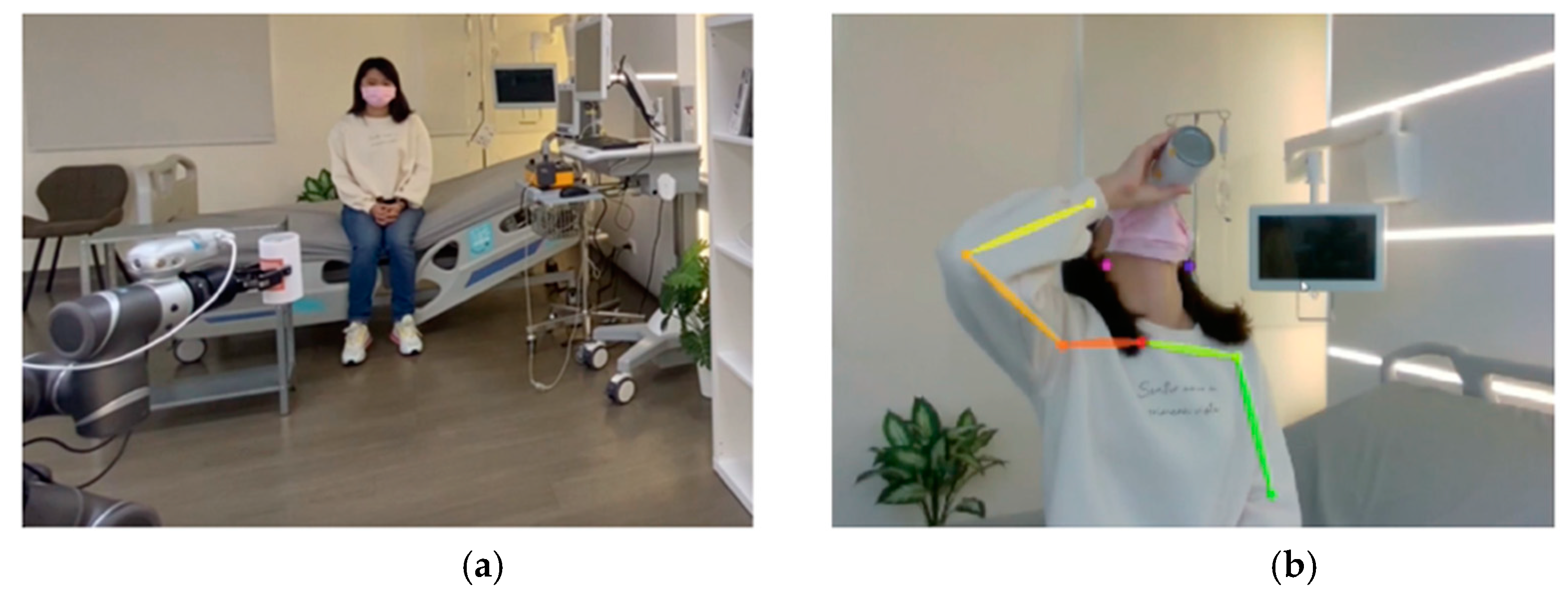

2.1. Posture and Action Recognition

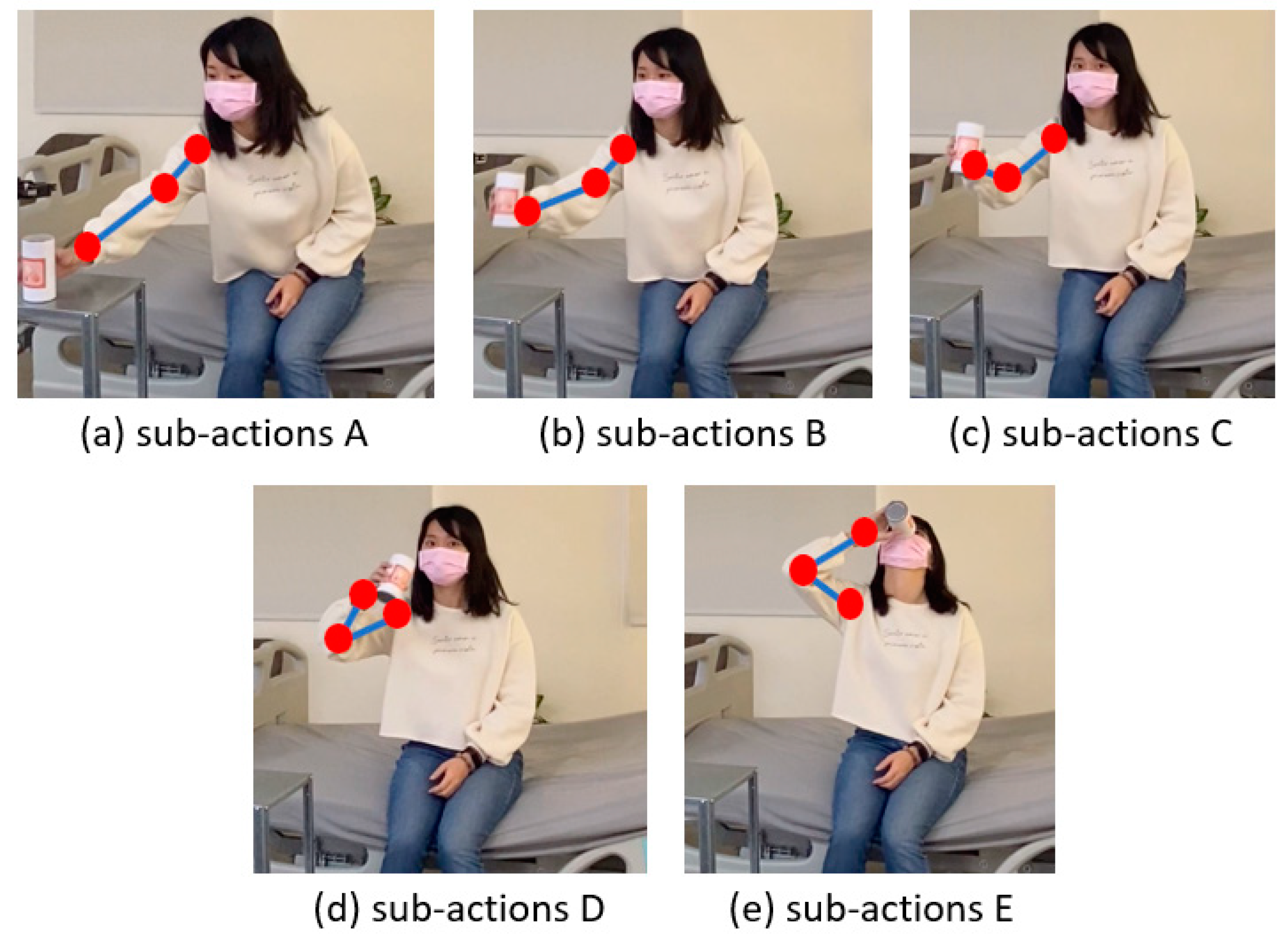

- Step 1:

- Take as input the patient’s medicine-taking time sequence, captured by the depth camera, and process it into the posture sequence O. Classify each posture into one of the sub-actions A–E using the CNN, and rename the labeled sequence Ol.

- Step 2:

- From a number of demonstrations of successful medicine taking, obtain the standard posture sequence R to serve as the reference for comparison.

- Step 3:

- Generate the matrix M for evaluating the similarity between R and Ol. Compute the dynamic time warping (DTW) distance for each element M(i,j) as the similarity measure.

- Step 4:

- If the similarity measure from Step 3 is smaller than a preset threshold, the patient is judged to have taken the medicine; if it is larger, the patient is judged not to have taken it.
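Steps 3 and 4 can be illustrated with a minimal sketch. It assumes that R and Ol are sequences of sub-action labels (A to E), that the local cost between two labels is 0 when they match and 1 otherwise, and that the threshold value is chosen by the user. The function names `dtw_distance` and `medicine_taken`, the 0/1 cost, and the example threshold are illustrative assumptions, not details taken from the paper.

```python
from typing import Sequence


def dtw_distance(ref: Sequence[str], obs: Sequence[str]) -> float:
    """Dynamic time warping distance between two label sequences.

    Assumption: the local cost is 0 for matching labels and 1 otherwise.
    """
    n, m = len(ref), len(obs)
    inf = float("inf")
    # M[i][j] holds the DTW distance between ref[:i] and obs[:j].
    M = [[inf] * (m + 1) for _ in range(n + 1)]
    M[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = 0.0 if ref[i - 1] == obs[j - 1] else 1.0
            # Extend the cheapest of the three admissible warping moves.
            M[i][j] = cost + min(M[i - 1][j], M[i][j - 1], M[i - 1][j - 1])
    return M[n][m]


def medicine_taken(ref: Sequence[str], obs: Sequence[str], threshold: float) -> bool:
    """Judge the medicine as taken when the DTW distance is below the threshold."""
    return dtw_distance(ref, obs) < threshold


if __name__ == "__main__":
    R = list("ABCDE")           # hypothetical reference sequence
    Ol = list("AABBCCDDE")      # same sub-actions, performed more slowly
    print(dtw_distance(R, Ol), medicine_taken(R, Ol, threshold=1.0))
```

Because DTW aligns sequences of different lengths, a patient who performs every sub-action more slowly than the demonstration still yields a small distance, whereas a sequence that skips sub-actions accumulates mismatch cost.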

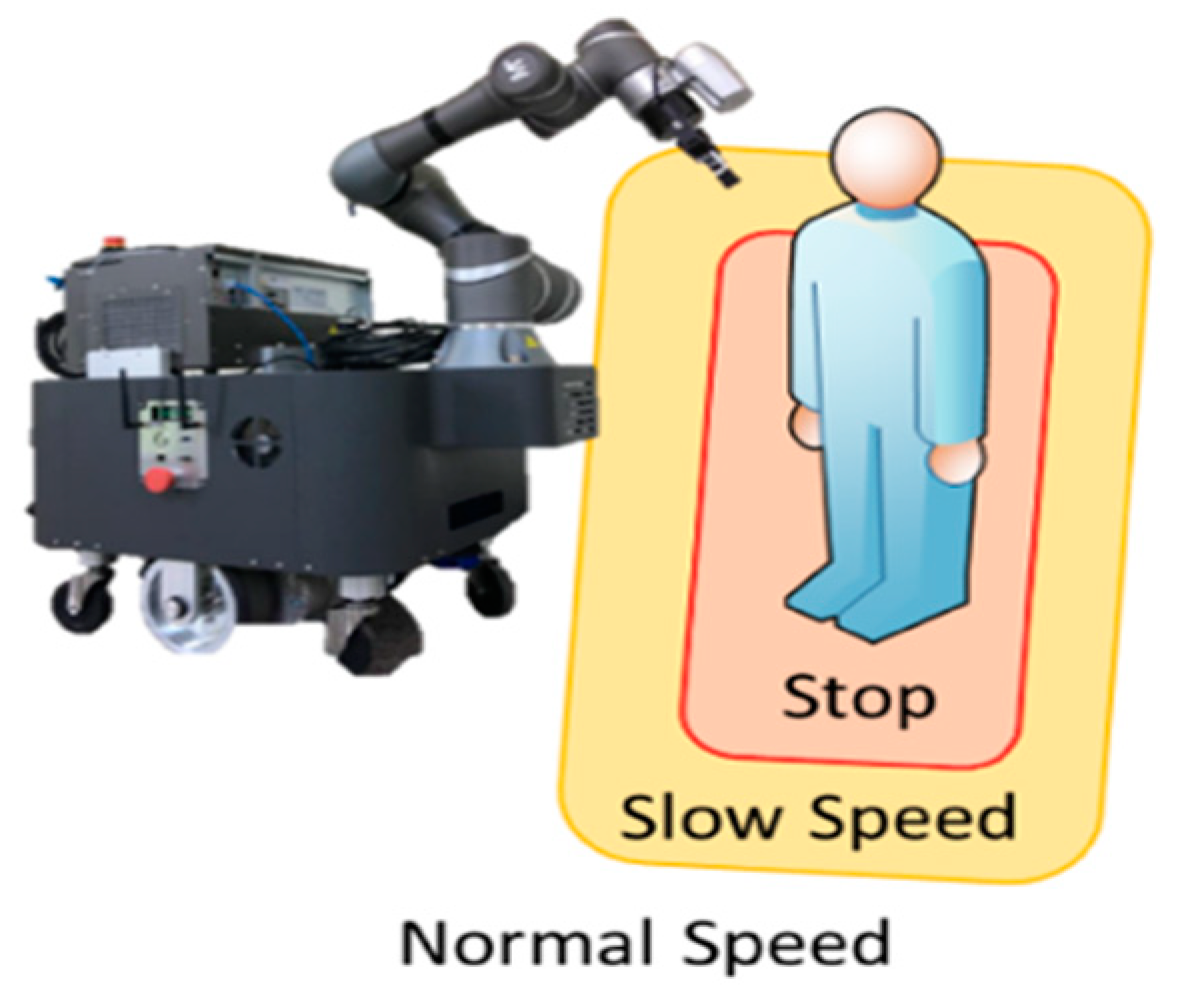

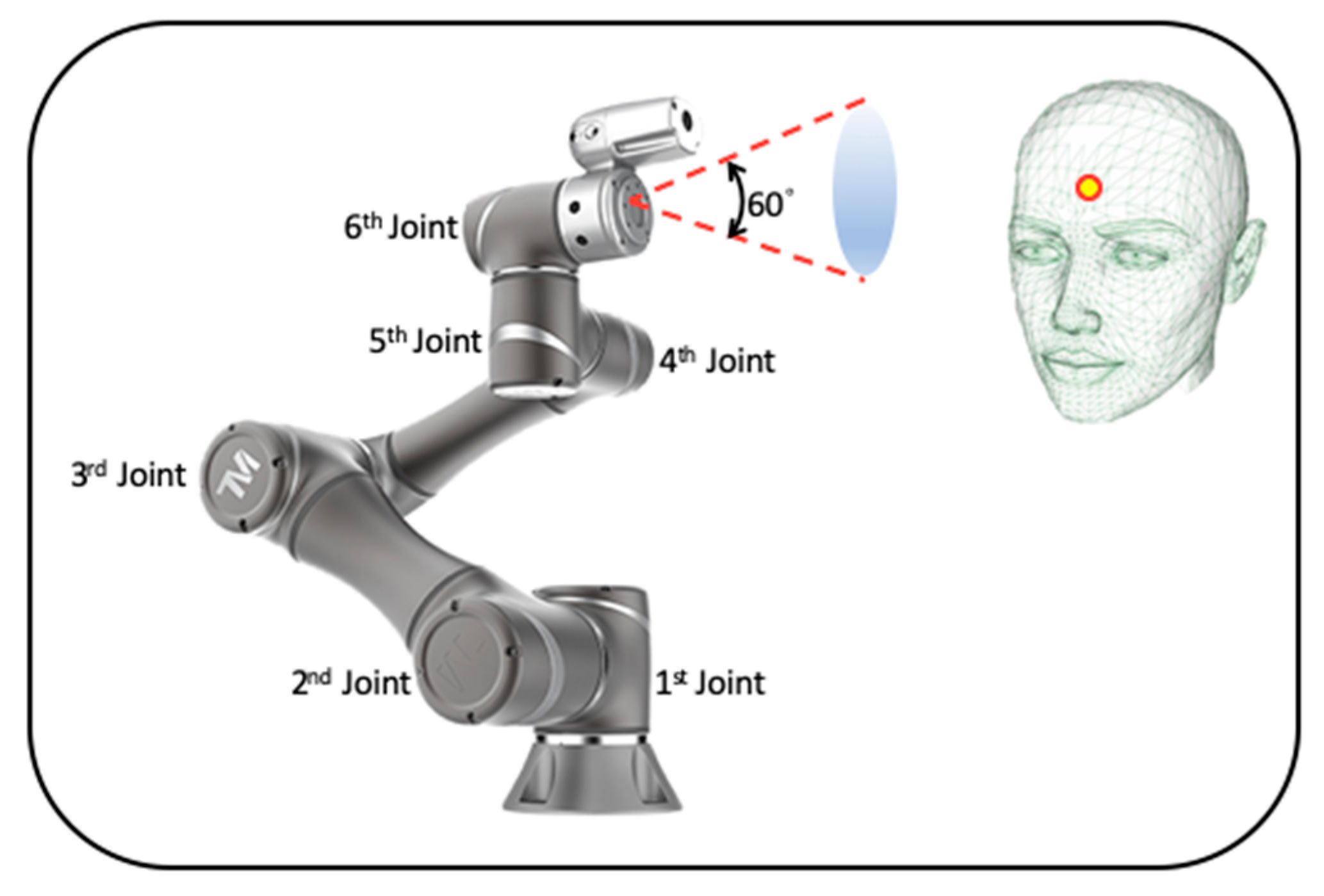

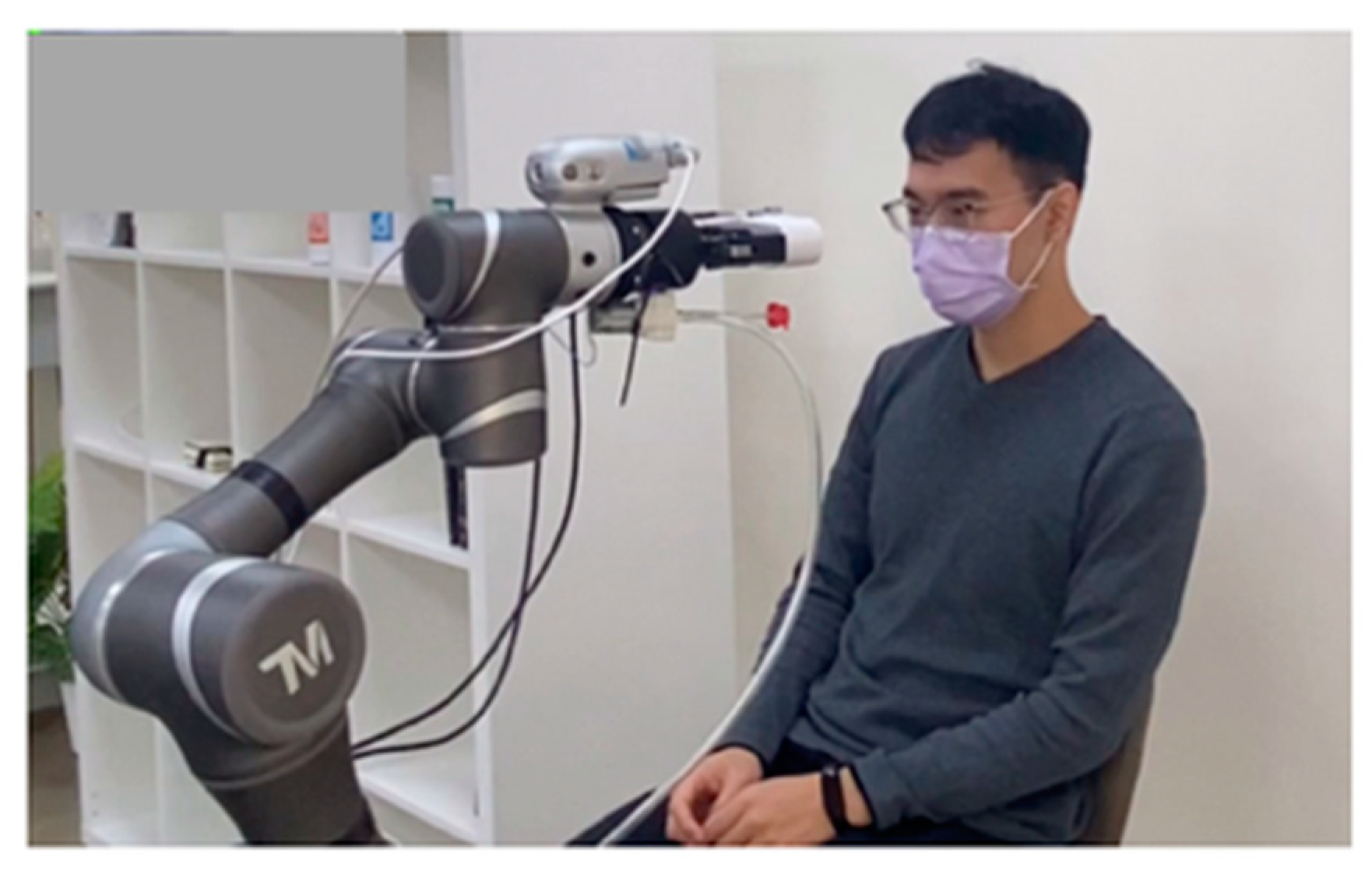

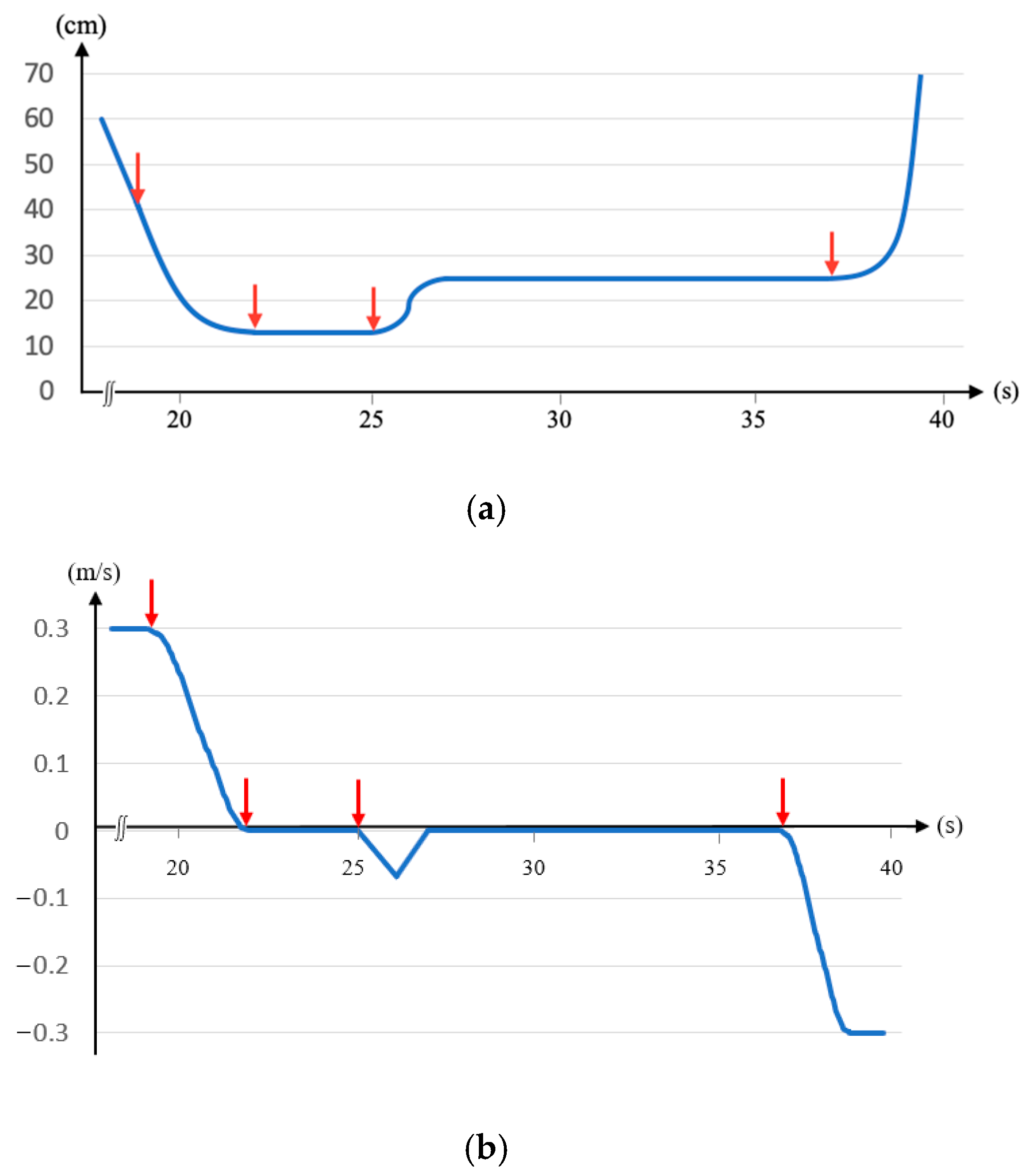

2.2. Safety Control

- Step 1:

- Confirm that the patient is seated and stationary.

- Step 2:

- Use the depth camera on the robotic arm to calculate the coordinates of the patient’s forehead.

- Step 3:

- Compute the path from the robotic arm to the forehead and restrict the orientation of the end-effector.

- Step 4:

- Dynamically adjust the arm’s speed based on the distance from the patient.
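Step 4 can be sketched as a distance-dependent speed limit. The linear ramp, the function name `arm_speed_limit`, and every numeric value below (stand-off distance, full-speed distance, speed bounds) are hypothetical choices made for illustration; the actual control law and parameters are not given in this section.

```python
def arm_speed_limit(
    distance_m: float,
    v_min: float = 0.02,   # hypothetical creep speed near the patient (m/s)
    v_max: float = 0.25,   # hypothetical free-space speed (m/s)
    d_stop: float = 0.05,  # hypothetical stand-off distance where the arm halts (m)
    d_full: float = 0.60,  # hypothetical distance beyond which full speed is allowed (m)
) -> float:
    """Return the allowed end-effector speed for a given distance to the patient."""
    if distance_m <= d_stop:
        return 0.0          # at or inside the stand-off distance: stop
    if distance_m >= d_full:
        return v_max        # far from the patient: no extra restriction
    # In between, ramp linearly from v_min up to v_max.
    ratio = (distance_m - d_stop) / (d_full - d_stop)
    return v_min + ratio * (v_max - v_min)


if __name__ == "__main__":
    for d in (1.0, 0.4, 0.2, 0.05):
        print(f"{d:.2f} m -> {arm_speed_limit(d):.3f} m/s")
```

A ramp of this kind keeps the approach slow where the patient is most likely to feel uneasy, while avoiding unnecessary delay when the arm is still far away.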

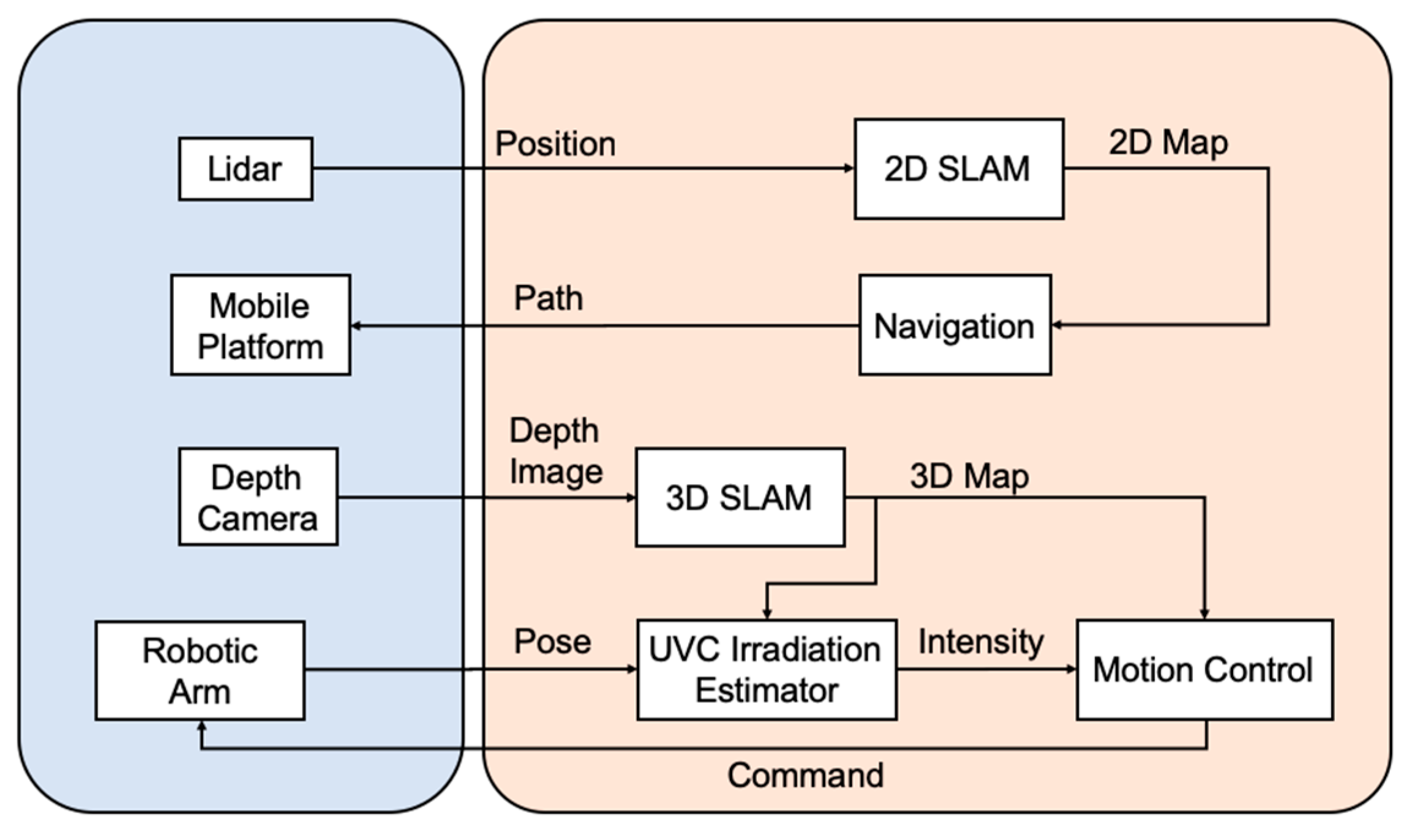

2.3. Environmental Disinfection

- Step 1:

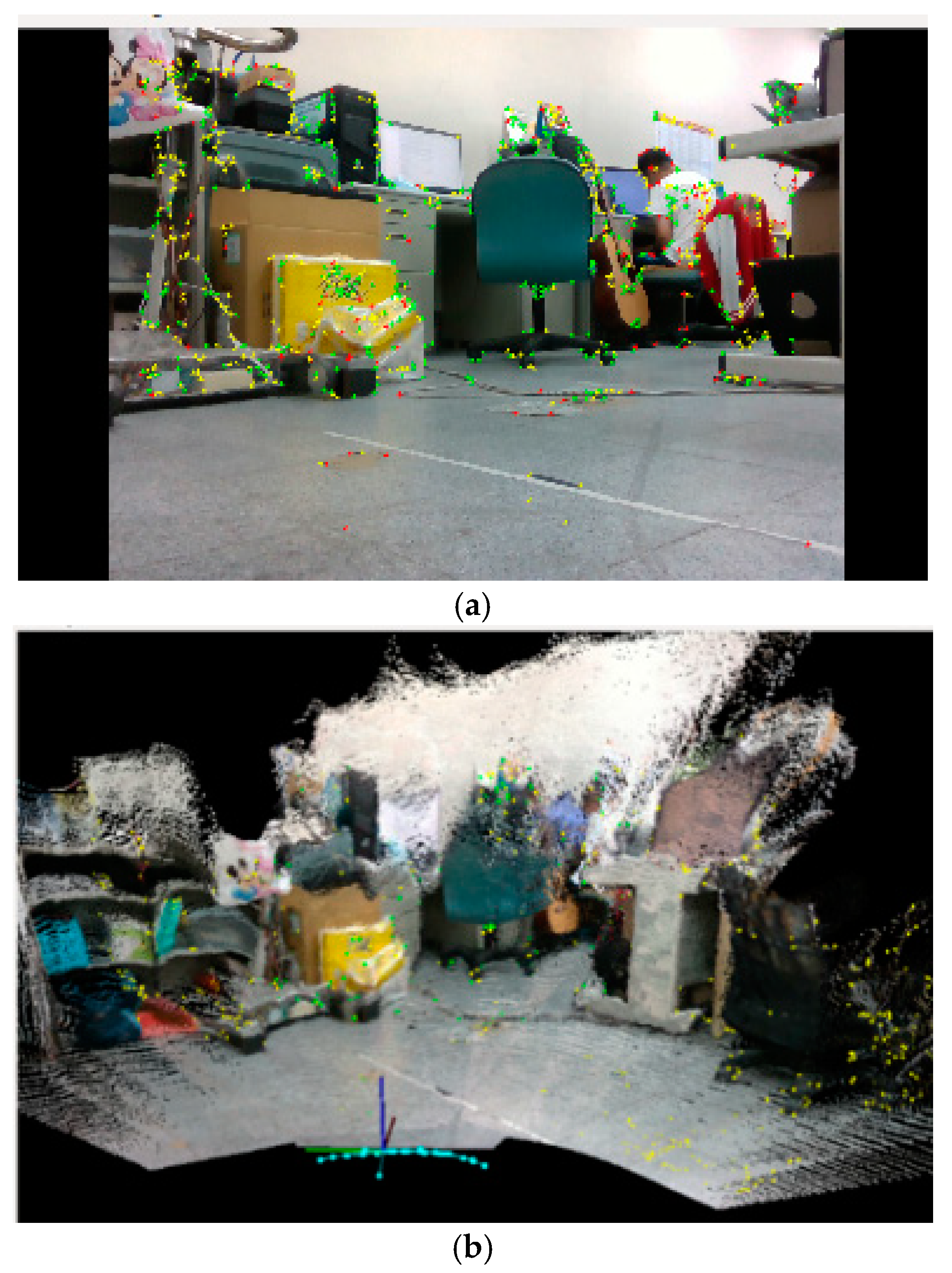

- Activate the mobile robot manipulator to explore the environment and objects.

- Step 2:

- Generate a 2D map of the environment using the lidar and the GMapping algorithm, and a 3D map of the objects in the environment using the depth camera and the RTAB-Map algorithm.

- Step 3:

- Navigate the mobile platform to disinfect the open area. Plan a contour-following path around each object using the PRM and NN algorithms, and carry out the corresponding disinfection along it.

- Step 4:

- Estimate the UVC irradiation intensity at each location on the object using Equation (7). If the intensity exceeds a preset value, move the robotic arm to the next disinfection location; otherwise, keep it at the current location.

- Step 5:

- Repeat the process until the entire environment is fully disinfected.
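The dwell logic of Step 4 can be sketched as follows. Equation (7) is not reproduced in this section, so the sketch substitutes an inverse-square point-source model as a stand-in and compares the accumulated dose against the preset value. The model, the function names `irradiance` and `dwell_times`, and all numeric inputs are assumptions for illustration only.

```python
import math
from typing import List, Sequence


def irradiance(power_w: float, distance_m: float) -> float:
    """Stand-in model (not Equation (7)): point source, E = P / (4 * pi * d^2)."""
    return power_w / (4.0 * math.pi * distance_m ** 2)


def dwell_times(
    distances_m: Sequence[float],
    power_w: float,
    required_dose: float,
    dt_s: float = 1.0,
) -> List[float]:
    """Time spent at each location before the arm may move on.

    The arm stays at a location until the accumulated dose exceeds
    `required_dose`, then proceeds to the next location.
    """
    if power_w <= 0 or dt_s <= 0 or any(d <= 0 for d in distances_m):
        raise ValueError("power, time step, and distances must be positive")
    times = []
    for d in distances_m:
        dose, t = 0.0, 0.0
        while not dose > required_dose:   # remain in place until the dose exceeds the preset value
            dose += irradiance(power_w, d) * dt_s
            t += dt_s
        times.append(t)
    return times


if __name__ == "__main__":
    # Hypothetical lamp power and lamp-to-surface distances.
    print(dwell_times([1.0, 2.0], power_w=4.0 * math.pi, required_dose=2.4))
```

Under this stand-in model, doubling the lamp-to-surface distance roughly quadruples the required dwell time, which illustrates why moving the arm close to object contours matters for covering blind spots.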

2.4. Infection Risk Assessment

2.5. Real-Name System

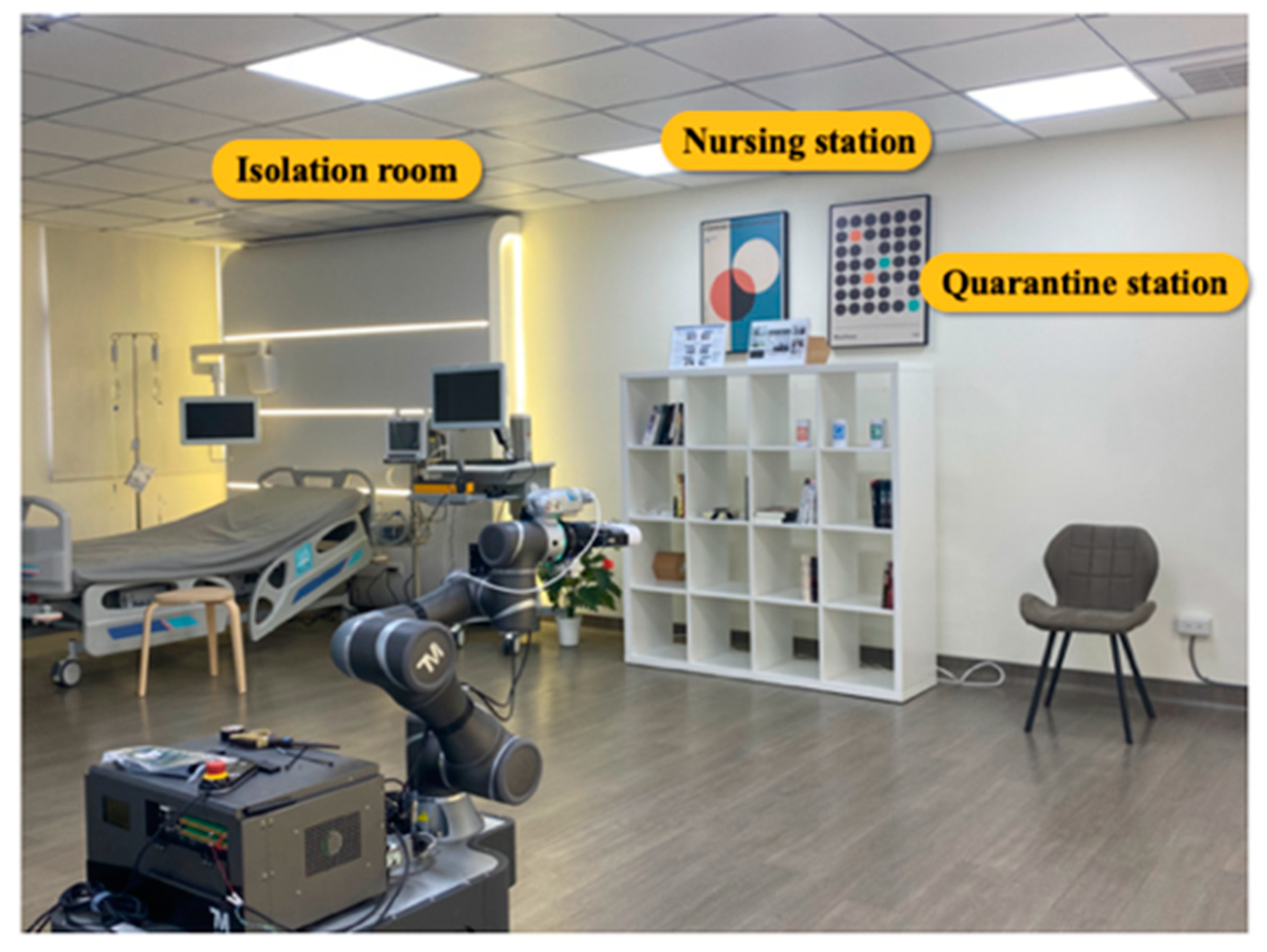

3. Experiments

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dose Card | Proposed System | Conventional System |
|---|---|---|
| 1 | 20 | 0 |
| 2 | 25 | 10 |
| 3 | 20 | 5 |
| 4 | 25 | 15 |
| 5 | 20 | 10 |

| No. | Questions | Strongly Disagree | | | | Strongly Agree |
|---|---|---|---|---|---|---|
| 1. | I think I am willing to use this system in the fever station (isolation ward). | | | | | |
| 2. | I think the use of this system in the fever station (isolation ward) is too complicated. | | | | | |
| 3. | I think this system is easy to use. | | | | | |
| 4. | I think I need help to use this system. | | | | | |
| 5. | I think the functions of this system are well integrated. | | | | | |
| 6. | I think there are too many inconsistencies in this system. | | | | | |
| 7. | I think most people can learn to use this system soon. | | | | | |
| 8. | I think this system is troublesome to use. | | | | | |
| 9. | I am confident that I can use this system. | | | | | |
| 10. | I need to learn a lot of extra information to use this system. | | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Su, C.-Y.; Young, K.-Y. Autonomous Fever Detection, Medicine Delivery, and Environmental Disinfection for Pandemic Prevention. Appl. Sci. 2023, 13, 13316. https://doi.org/10.3390/app132413316
