Facial Affect Recognition in Depression Using Human Avatars

Abstract

1. Introduction

- Hypothesis 1 (H1). Individuals diagnosed with major depressive disorder (MDD) will recognize emotions less accurately and respond more slowly than healthy controls.

- Hypothesis 2 (H2). Both the MDD and control groups will recognize emotions more accurately in more dynamic DVFs (dynamic virtual faces) than in less dynamic ones, resulting in a higher number of successful identifications.

- Hypothesis 3 (H3). Both groups will recognize DVFs presented in a frontal view more accurately than those presented in profile views, resulting in a higher number of successful identifications.

- Hypothesis 4 (H4). Within the depression group, performance will differ by age, with younger participants performing better. No differences are expected by gender or educational level.

2. Materials and Methods

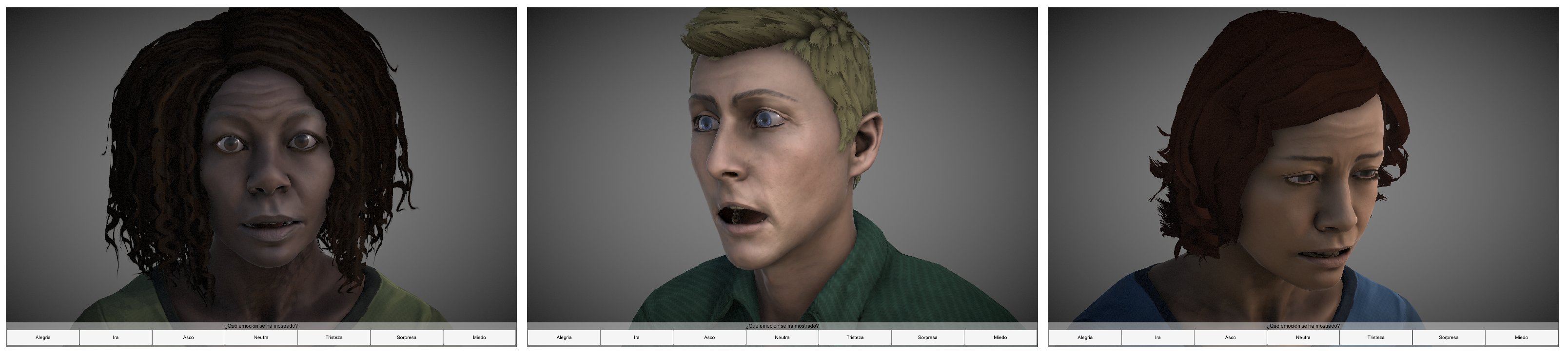

2.1. Design of Dynamic Virtual Humans

2.2. Participants

2.3. Data Collection

2.4. Experimental Procedure

2.5. Statistical Analysis

3. Results

3.1. Comparison of Recognition Scores and Reaction Times between Depression and Healthy Groups in Emotion Recognition (H1)

3.2. Influence of Dynamism of the DVFs on Emotion Recognition (H2)

3.3. Influence of the Presentation Angle of the DVFs on Emotion Recognition (H3)

3.4. Influence of Sociodemographic Data on Emotion Recognition for the Depression Group (H4)

3.4.1. Influence of Age

3.4.2. Influence of Gender

3.4.3. Influence of Educational Level

4. Discussion

4.1. Comparison of Recognition Scores and Reaction Times for the Depression and Healthy Groups in Emotion Recognition (H1)

4.2. Influence of Dynamism of the DVFs on Emotion Recognition (H2)

4.3. Influence of the Presentation Angle of the DVFs on Emotion Recognition (H3)

4.4. Influence of Sociodemographic Data on Emotion Recognition for the Depression Group (Recognition Scores and Reaction Times) (H4)

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| | MDD Group | Healthy Group |
|---|---|---|
| Sample [n] | 54 | 54 |
| Gender [female:male] | 34:20 | 34:20 |
| Age [mean (SD)] | 53.20 (13.63) | 50.54 (13.72) |
| Age [n] | | |
| Young (20–39) | 9 | 10 |
| Middle-age (40–59) | 27 | 27 |
| Elderly (60–79) | 18 | 17 |
| Education level [n] | | |
| Basic | 17 | 17 |
| Medium | 21 | 21 |
| High | 16 | 16 |

| MDD group | Neutral | Surprise | Fear | Anger | Disgust | Joy | Sadness |
|---|---|---|---|---|---|---|---|
| Neutral | 90.3% | 2.8% | 0.9% | 1.4% | 0.5% | 0.5% | 3.7% |
| Surprise | 2.3% | 89.6% | 4.4% | 1.4% | 0.2% | 0.9% | 1.2% |
| Fear | 1.2% | 41.7% | 48.4% | 2.8% | 1.9% | 0.2% | 3.9% |
| Anger | 2.1% | 5.1% | 2.5% | 83.6% | 5.3% | 0.0% | 1.4% |
| Disgust | 1.2% | 7.2% | 4.2% | 19.9% | 66.9% | 0.2% | 0.5% |
| Joy | 6.0% | 4.2% | 0.9% | 1.4% | 2.3% | 84.5% | 0.7% |
| Sadness | 7.9% | 7.6% | 8.1% | 8.1% | 4.4% | 0.9% | 63.0% |

| Healthy group | Neutral | Surprise | Fear | Anger | Disgust | Joy | Sadness |
|---|---|---|---|---|---|---|---|
| Neutral | 94.0% | 0.5% | 0.5% | 0.0% | 0.9% | 0.0% | 4.2% |
| Surprise | 0.9% | 90.3% | 8.3% | 0.0% | 0.0% | 0.0% | 0.5% |
| Fear | 0.9% | 12.7% | 77.3% | 0.2% | 0.7% | 0.0% | 8.1% |
| Anger | 0.7% | 1.2% | 1.9% | 92.4% | 3.0% | 0.0% | 0.9% |
| Disgust | 0.2% | 0.5% | 0.9% | 13.2% | 85.0% | 0.0% | 0.2% |
| Joy | 4.9% | 0.7% | 0.2% | 0.7% | 0.5% | 93.1% | 0.0% |
| Sadness | 3.0% | 3.5% | 5.6% | 0.9% | 1.6% | 0.0% | 85.4% |
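
The diagonal of each confusion table above gives the share of correct identifications per emotion. As a rough illustration of how the two groups compare, the following sketch copies those diagonal values and computes the per-emotion gap and an unweighted mean hit rate. It is not the authors' statistical analysis; the unweighted mean assumes every emotion was shown equally often, which the tables themselves do not state, and all variable and function names are illustrative.

```python
# Diagonal (correct-identification) percentages copied from the two tables above.
EMOTIONS = ["Neutral", "Surprise", "Fear", "Anger", "Disgust", "Joy", "Sadness"]
MDD_HITS = [90.3, 89.6, 48.4, 83.6, 66.9, 84.5, 63.0]
HEALTHY_HITS = [94.0, 90.3, 77.3, 92.4, 85.0, 93.1, 85.4]


def mean(values):
    """Plain arithmetic mean."""
    return sum(values) / len(values)


def hit_rate_gaps(mdd, healthy):
    """Healthy-minus-MDD difference in percentage points, per emotion."""
    return {e: round(h - m, 1) for e, m, h in zip(EMOTIONS, mdd, healthy)}


gaps = hit_rate_gaps(MDD_HITS, HEALTHY_HITS)
largest_gap_emotion = max(gaps, key=gaps.get)

# Assumption: equal number of trials per emotion, so an unweighted mean is meaningful.
mdd_mean = round(mean(MDD_HITS), 1)
healthy_mean = round(mean(HEALTHY_HITS), 1)

print(gaps)
print(largest_gap_emotion, mdd_mean, healthy_mean)
```

In these tables the largest gap falls on fear, which the MDD group labeled as surprise in 41.7% of cases.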

| Group | Neutral | Surprise | Fear | Anger | Disgust | Joy | Sadness |
|---|---|---|---|---|---|---|---|
| MDD group | 6.25 (3.38) | 3.93 (1.61) | 4.55 (1.63) | 4.59 (2.87) | 4.55 (1.56) | 4.47 (2.97) | 5.41 (2.75) |
| Healthy group | 2.85 (1.24) | 2.73 (1.16) | 2.53 (1.02) | 2.58 (1.14) | 2.46 (1.04) | 2.25 (0.84) | 2.16 (0.77) |
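
The table above gives one value with a second value in parentheses per emotion and group; read here as mean (SD), by analogy with the age row of the sample table. Under that reading, and with equal group sizes (n = 54 each), a standardized mean difference can be sketched from the summary statistics alone. This is an illustrative calculation using the textbook pooled-SD form of Cohen's d, not a reproduction of the analysis in the paper; the function and variable names are hypothetical.

```python
import math

EMOTIONS = ["Neutral", "Surprise", "Fear", "Anger", "Disgust", "Joy", "Sadness"]

# (mean, SD) pairs copied from the table above; the unit is not stated there.
MDD = [(6.25, 3.38), (3.93, 1.61), (4.55, 1.63), (4.59, 2.87),
       (4.55, 1.56), (4.47, 2.97), (5.41, 2.75)]
HEALTHY = [(2.85, 1.24), (2.73, 1.16), (2.53, 1.02), (2.58, 1.14),
           (2.46, 1.04), (2.25, 0.84), (2.16, 0.77)]


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Standardized mean difference for two groups of equal size.

    With equal n, the pooled variance is the average of the two variances.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


effect_sizes = {
    emotion: cohens_d(m_mean, m_sd, h_mean, h_sd)
    for emotion, (m_mean, m_sd), (h_mean, h_sd) in zip(EMOTIONS, MDD, HEALTHY)
}

for emotion, d in effect_sizes.items():
    print(f"{emotion}: d = {d:.2f}")
```

A positive d means the MDD group's value is higher than the healthy group's for that emotion.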

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Monferrer, M.; García, A.S.; Ricarte, J.J.; Montes, M.J.; Fernández-Sotos, P.; Fernández-Caballero, A. Facial Affect Recognition in Depression Using Human Avatars. Appl. Sci. 2023, 13, 1609. https://doi.org/10.3390/app13031609