The Extended Information Systems Success Measurement Model: e-Learning Perspective

Abstract

1. Introduction

2. Background

2.1. Taxonomy of Information Systems Success

2.2. The Role of Workforce Agility in IS Success

3. Research Framework and Hypothesis Development

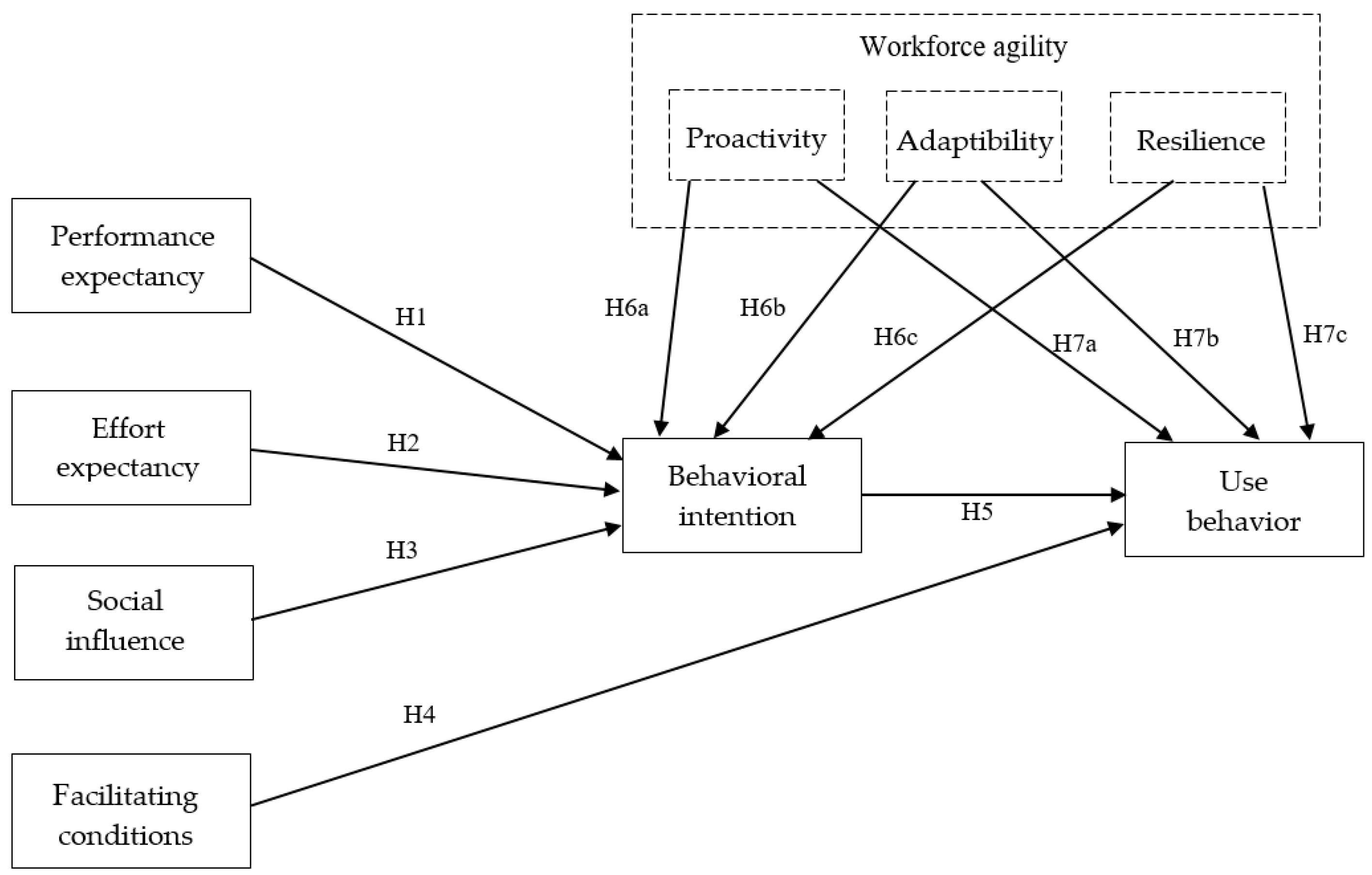

3.1. Research Framework: The Extended Information Systems Success Measurement Model (EISSMM)

3.2. Hypotheses Development

4. Methods and Materials

4.1. Research Instrument

4.2. Sample and Data Collection

4.3. Sample Demographics

5. Results

5.1. UNS Results

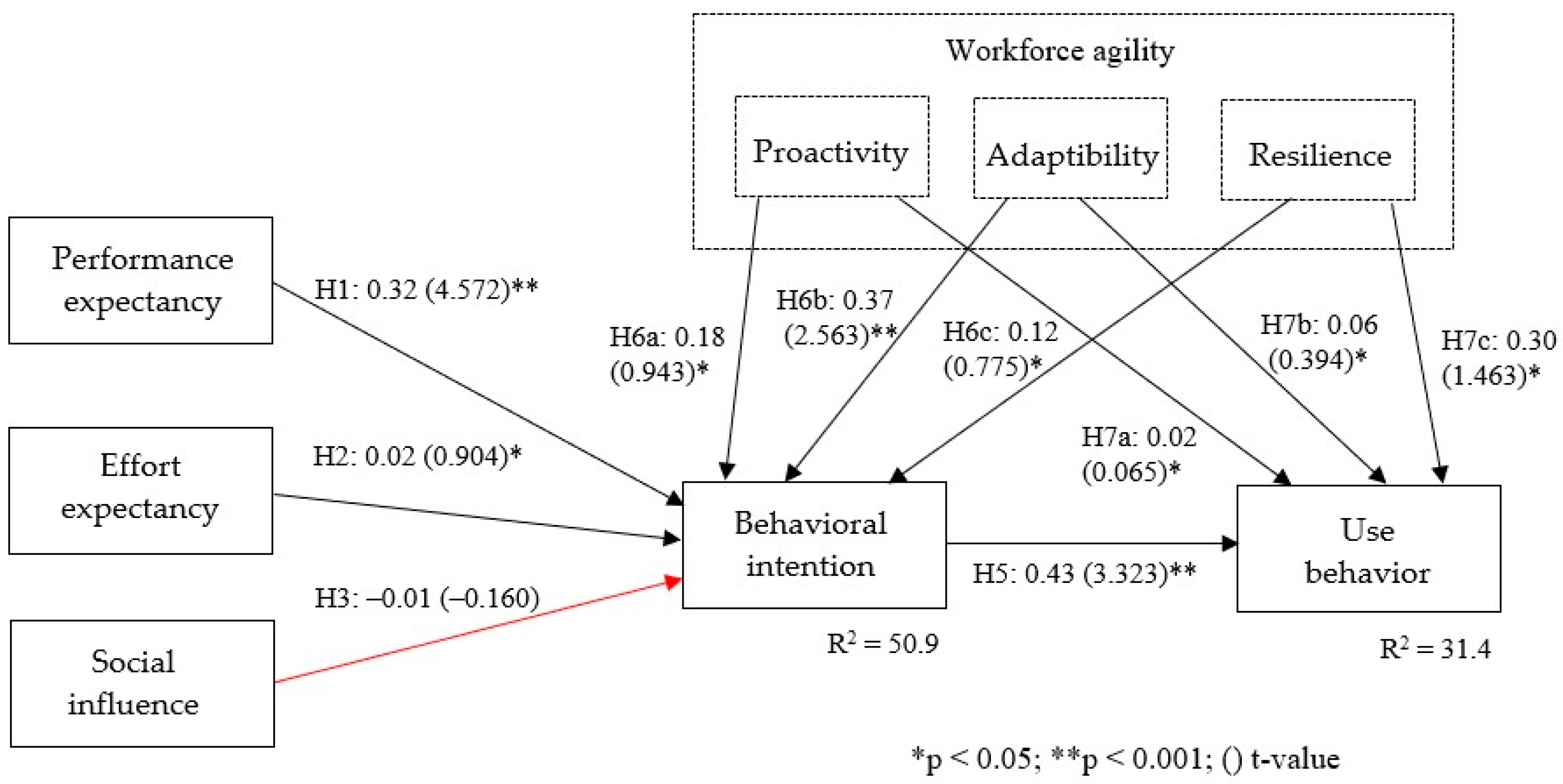

5.2. PICB Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- DeLone, W.; McLean, E. Information Systems Success: The Quest for the Dependent Variable. Inf. Syst. Res. 1992, 3, 60–95. [Google Scholar] [CrossRef]

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478. [Google Scholar] [CrossRef]

- Maldonado, U.P.T.; Khan, G.F.; Moon, J.; Rho, J.J. E-learning motivation and educational portal acceptance in developing countries. Online Inf. Rev. 2011, 35, 66–85. [Google Scholar] [CrossRef]

- Lolić, T.; Ristić, S.; Stefanović, D.; Marjanović, U. Acceptance of E-Learning System at Faculty of Technical Sciences. In Proceedings of the Central European Conference on Information and Intelligent Systems, Varazdin, Croatia, 19–21 September 2018. [Google Scholar]

- Lolic, T.; Dionisio, R.; Ciric, D.; Ristic, S.; Stefanovic, D. Factors Influencing Students Usage of an e-Learning System: Evidence from IT Students. In Proceedings of the International Joint Conference on Industrial Engineering and Operations Management—IJCIEOM, Rio de Janeiro, Brazil, 8–11 July 2020. [Google Scholar] [CrossRef]

- Lolic, T.; Stefanovic, D.; Dionisio, R.; Havzi, S. Assessing Engineering Students’ Acceptance of an E-Learning System: A Longitudinal Study. Int. J. Eng. Educ. 2021, 37, 785–796. [Google Scholar]

- Ghobakhloo, M.; Tang, S.H. Information system success among manufacturing SMEs: Case of developing countries. Inf. Technol. Dev. 2015, 21, 573–600. [Google Scholar] [CrossRef]

- Wang, Y.S.; Liao, Y.W. Assessing eGovernment systems success: A validation of the DeLone and McLean model of information systems success. Gov. Inf. Q. 2008, 25, 717–733. [Google Scholar] [CrossRef]

- Venkatesh, V.; Bala, H. Technology Acceptance Model 3 and a Research Agenda on Interventions. Dec. Sci. 2008, 39, 273–315. [Google Scholar] [CrossRef]

- Venkatesh, V.; Thong, J.Y.L.; Xu, X. Unified Theory of Acceptance and Use of Technology: A Synthesis and the Road Ahead. J. Assoc. Inf. Syst. 2016, 17, 328–376. [Google Scholar] [CrossRef]

- Venkatesh, V.; Thong, J.Y.L.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178. [Google Scholar] [CrossRef]

- Forrester. IT Spending Forecasts; Forrester: New York, NY, USA, 2019. [Google Scholar]

- Gartner. Gartner Worldwide IT Spending Forecast Available; Gartner Inc.: New York, NY, USA, 2019. [Google Scholar]

- Breu, K.; Hemingway, C.J.; Strathern, M.; Bridger, D. Workforce agility: The new employee strategy for the knowledge economy. J. Inf. Technol. 2001, 17, 21–31. [Google Scholar] [CrossRef]

- Stefanovic, D. Prilog Istraživanju Uslova za Integraciju Savremenih Ict u Poslovanju Industrijskih Proizvodno—Poslovnih Sistema. Ph.D. Thesis, Univerzitet u Novom Sadu, Novi Sad, Serbia, 2012. [Google Scholar]

- DeLone, W.H.; McLean, E.R. The DeLone and McLean model of information systems success: A ten-year update. J. Manag. Inf. Syst. 2003, 19, 9–30. [Google Scholar] [CrossRef]

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340. [Google Scholar] [CrossRef]

- Venkatesh, V.; Davis, F.D. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manag. Sci. 2000, 46, 186–204. [Google Scholar] [CrossRef]

- Ajzen, I. The Theory of Planned Behavior. Organ. Behav. Hum. Decis. Process 1991, 50, 179–211. [Google Scholar] [CrossRef]

- Compeau, D.; Higgins, C.A.; Huff, S. Social Cognitive Theory and Individual Reactions to Computing Technology: A Longitudinal Study. MIS Q. 1999, 23, 145–159. [Google Scholar] [CrossRef]

- Klobas, J.E.; McGill, T.J. The role of involvement in learning management system success. J. Comput. High. Educ. 2010, 22, 114–134. [Google Scholar] [CrossRef]

- Jang, C.-L. Measuring Electronic Government Procurement Success and Testing for the Moderating Effect of Computer Self-efficacy. Int. J. Digit. Content Technol. Its Appl. 2010, 4, 224–232. [Google Scholar] [CrossRef]

- Janssen, M.; Lamersdorf, W.; Pries-Heje, J.; Rosemann, M. E-Government. E-Services and Global processes. In Proceedings of the Joint IFIP TC 8 and TC 6 International Conferences, EGES 2010 and GISP 2010, Held as Part of WCC 2010, Brisbane, Australia, 20–23 September 2010. [Google Scholar]

- Chang, H.C.; Liu, C.F.; Hwang, H.G. Exploring nursing e-learning systems success based on information system success model. Comp. Inf. Nurs. 2011, 29, 741–747. [Google Scholar] [CrossRef]

- Floropoulos, J.; Spathis, C.; Halvatzis, D.; Tsipouridou, M. Measuring the success of the Greek Taxation Information System. Int. J. Inf. Manag. 2010, 30, 47–56. [Google Scholar] [CrossRef]

- Hsiao, N.; Chu, P.; Lee, C. Impact of E-Governance on Businesses: Model Development and Case Study. In Electronic Governance and Cross-Boundary Collaboration: Innovations and Advancing Tools; Chen, Y., Chu, P., Eds.; IGI Global: Hershey, PA, USA, 2012; pp. 1407–1425. [Google Scholar] [CrossRef]

- Alkhalaf, S.; Nguyen, A.; Drew, S.; Jones, V. Measuring the Information Quality of e-Learning Systems in KSA: Attitudes and Perceptions of Learners. In Proceedings of the Robot Intelligence Technology and Applications 2012, Advances in Intelligent Systems and Computing, Berlin, Germany, 16–17 December 2013. [Google Scholar]

- Visser, M.; Biljon, J.V.; Herselman, M. Modeling management information systems’ success. In Proceedings of the South African Institute for Computer Scientists and Information Technologists Conference, Pretoria, South Africa, 1–3 October 2012. [Google Scholar] [CrossRef]

- Halonen, R.; Thomander, H.; Laukkanen, E. DeLone & McLean IS Success Model in Evaluating Knowledge Transfer in a Virtual Learning Environment. Int. J. Inf. Syst. Soc. Chang. 2010, 1, 36–48. [Google Scholar] [CrossRef]

- Abdelsalam, H.; Reddick, C.; Kadi, H. Success and failure of local e-government projects: Lessons learned from Egypt. In Managing E-Government Projects: Concepts, Issues and Best Practices; IGI Global: Hershey, PA, USA, 2012; pp. 242–261. [Google Scholar] [CrossRef]

- Khayun, V.; Ractham, P.; Firpo, D. Assessing e-Excise success with DeLone and McLean’s model. J. Comput. Inf. Syst. 2012, 52, 31–40. [Google Scholar]

- Hollmann, V.; Lee, H.; Zo, H.; Ciganek, A.P. Examining success factors of open source software repositories: The case of OSOR.eu portal. Int. J. Bus. Inf. Syst. 2013, 14, 1–20. [Google Scholar] [CrossRef]

- Lwoga, E.T. Critical success factors for adoption of web-based learning management systems in Tanzania. Int. J. Educ. Dev. Using Inf. Commun. Technol. 2014, 10, 4–21. [Google Scholar] [CrossRef]

- Komba, M.M.; Ngulube, P. An Empirical Application of the DeLone and McLean Model to Examine Factors for E-Government Adoption in the Selected Districts of Tanzania. In Emerging Issues and Prospects in African E-Government; IGI Global: Hershey, PA, USA, 2014. [Google Scholar] [CrossRef]

- Ramírez-Correa, P.; Alfaro-Peréz, J.; Cancino-Flores, L. Meta-analysis of the DeLone and McLean information systems success model at individual level: An examination of the heterogeneity of the studies. Espacios 2015, 36, 11–19. [Google Scholar] [CrossRef]

- Huang, Y.M.; Pu, Y.H.; Chen, T.S.; Chiu, P.S. Development and evaluation of the mobile library service system success model: A case study of Taiwan. Electron. Libr. 2015, 33, 1174–1192. [Google Scholar] [CrossRef]

- Arshad, Y.; Azrin, M.; Afiqah, S.N. The influence of information system success factors towards user satisfaction in Universiti Teknikal Malaysia Melaka. J. Eng. Appl. Sci. 2015, 10, 18155–18164. [Google Scholar]

- Yosep, Y. Analysis of Relationship between Three Dimensions of Quality, User Satisfaction, and E-Learning Usage of Binus Online Learning. Comm. Inf. Technol. J. 2015, 9, 67–72. [Google Scholar] [CrossRef]

- Seyal, A.H.; Rahman, M.N.A.; Zurainah, A.; Tazrajiman, S. Evaluating User Satisfaction with Bruneian E-Government Website: A Case of e-Darussalam. In Proceedings of the 26th IBIMA Conference, Madrid, Spain, 11–15 November 2015. [Google Scholar]

- Khudhair, R.S. An Empirical Test of Information System Success Model in a University’s Electronic Services. Adv. Nat. Appl. Sci. 2016, 10, 1–10. [Google Scholar]

- Masrek, M.N.; Gaskin, J.E. Assessing users satisfaction with web digital library: The case of Universiti Teknologi MARA. Int. J. Inf. Learn. Technol. 2016, 33, 36–56. [Google Scholar] [CrossRef]

- Gorla, N.; Chiravuri, A. Developing electronic government success models for G2C and G2B scenarios. In Proceedings of the 2016 International Conference on Information Management ICIM 2016, London, UK, 7–8 May 2016. [Google Scholar] [CrossRef]

- Alzahrani, A.I.; Mahmud, I.; Ramayah, T.; Alfarraj, O.; Alalwan, N. Modelling digital library success using the DeLone and McLean information system success model. J. Librariansh. Inf. Sci. 2019, 51, 291–306. [Google Scholar] [CrossRef]

- Zheng, Q.; Liang, C.Y. The path of new information technology affecting educational equality in the new digital divide-based on information system success model. Eurasia J. Math. Sci. Technol. Educ. 2017, 13, 3587–3597. [Google Scholar] [CrossRef]

- Al-Nassar, B.A.Y. The influence of service quality in information system success model as an antecedent of mobile learning in education organizations: Case study in Jordan. Int. J. Mob. Learn. Organ. 2017, 11, 41–62. [Google Scholar] [CrossRef]

- Santos, A.D.; Santoso, A.J.; Setyohadi, D.B. The analysis of academic information system success: A case study at Instituto Profissional de Canossa (IPDC) Dili Timor-Leste. In Proceedings of the 2017 International Conference on Soft Computing, Intelligent System and Information Technology, Thessaloniki, Greece, 7–8 July 2017. [Google Scholar] [CrossRef]

- Wirtz, B.W.; Mory, L.; Piehler, R.; Daiser, P. E-government: A citizen relationship marketing analysis. Int. Rev. Public Nonprofit Mark. 2017, 14, 149–178. [Google Scholar] [CrossRef]

- Hassan, N.S.; Seyal, A.H. Measuring Success of Higher Education Centralised Administration Information System: An e-Government Initiative. In Proceedings of the 15th European Conference on eGovernment 2015: ECEG 2015, Ljubljana, Slovenia, 16–17 June 2015. [Google Scholar]

- Aldholay, A.; Isaac, O.; Abdullah, Z.; Abdulsalam, R.; Al-Shibami, A.H. An extension of Delone and McLean IS success model with self-efficacy: Online learning usage in Yemen. Int. J. Inf. Learn. Technol. 2018, 35, 285–304. [Google Scholar] [CrossRef]

- Chaw, L.Y.; Tang, C.M. What Makes Learning Management Systems Effective for Learning? J. Educ. Technol. Syst. 2018, 47, 152–169. [Google Scholar] [CrossRef]

- Ke, P.; Su, F. Mediating effects of user experience usability: An empirical study on mobile library application in China. Electron. Libr. 2018, 36, 892–909. [Google Scholar] [CrossRef]

- Alksasbeh, M.; Abuhelaleh, M.; Almaiah, M.A.; Al-Jaafreh, M.; Karaka, A.A. Towards a model of quality features for mobile social networks apps in learning environments: An extended information system success model. Int. J. Interact. Mob. Technol. 2019, 13, 75–93. [Google Scholar] [CrossRef]

- Hariguna, T.; Rahardja, U.; Aini, Q.; Nurfaizah. Effect of social media activities to determinants public participate intention of e-government. Procedia Comput. Sci. 2019, 161, 233–241. [Google Scholar] [CrossRef]

- Chen, Y.C.; Hu, L.T.; Tseng, K.C.; Juang, W.J.; Chang, C.K. Cross-boundary e-government systems: Determinants of performance. Gov. Inf. Q. 2019, 36, 449–459. [Google Scholar] [CrossRef]

- Lee, E.Y.; Jeon, Y.J.J. The difference of user satisfaction and net benefit of a mobile learning management system according to self-directed learning: An investigation of cyber university students in hospitality. Sustainability 2020, 12, 2672. [Google Scholar] [CrossRef]

- Dalle, J.; Mahmud, D.H.; Prasetia, I.; Baharuddin. DeLone and McLean model evaluation of information system success: A case study of master program of civil engineering Universitas Lambung Mangkurat. Int. J. Adv. Sci. Technol. 2020, 29, 1909–1919. [Google Scholar]

- Salam, M.; Farooq, M.S. Does sociability quality of web-based collaborative learning information system influence students’ satisfaction and system usage? Int. J. Educ. Technol. High. Educ. 2020, 17, 26–32. [Google Scholar] [CrossRef]

- Koh, J.H.L.; Kan, R.Y.P. Perceptions of learning management system quality, satisfaction, and usage: Differences among students of the arts. Australas. J. Educ. Technol. 2020, 36, 26–40. [Google Scholar] [CrossRef]

- Lin, F.; Fofanah, S.S.; Liang, D. Assessing citizen adoption of e-Government initiatives in Gambia: A validation of the technology acceptance model in information systems success. Gov. Inf. Q. 2011, 28, 271–279. [Google Scholar] [CrossRef]

- Wang, W.; Wang, C. An empirical study of instructor adoption of web-based learning systems. Comput. Educ. 2009, 53, 761–774. [Google Scholar] [CrossRef]

- Yi, C.; Liao, P.; Huang, C.; Hwang, I. Acceptance of Mobile Learning: A Respecification and Validation of Information System Success. Eng. Technol. 2009, 41, 730–762. [Google Scholar]

- Almarashdeh, I.A.; Sahari, N.; Zin, N.A.M.; Alsmadi, M. The success of learning management system among distance learners in Malaysian universities. J. Theor. Appl. Inf. Technol. 2010, 21, 80–91. [Google Scholar]

- Lin, W.S.; Wang, C.H. Antecedences to continued intentions of adopting e-learning system in blended learning instruction: A contingency framework based on models of information system success and task-technology fit. Comput. Educ. 2012, 58, 88–99. [Google Scholar] [CrossRef]

- Li, Y.; Duan, Y.; Fu, Z.; Alford, P. An empirical study on behavioural intention to reuse e-learning systems in rural China. Br. J. Educ. Technol. 2012, 43, 933–948. [Google Scholar] [CrossRef]

- Tella, A. System-related factors that predict students’ satisfaction with the blackboard learning system at the University of Botswana. Afr. J. Libr. Arch. Inf. Sci. 2012, 22, 41–52. [Google Scholar]

- Akram, M.S.; Malik, A. Evaluating citizens’ readiness to embrace e-government services. In Proceedings of the 2012 ACM Conference on High Integrity Language Technology, Boston, MA, USA, 2–6 December 2012. [Google Scholar] [CrossRef]

- Ayyash, M.M.; Ahmad, K.; Singh, D. A hybrid information system model for trust in e-government initiative adoption in public sector organization. Int. J. Bus. Inf. Syst. 2012, 11, 162–179. [Google Scholar]

- Ayyash, M.M.; Ahmad, K.; Singh, D. Investigating the effect of information systems factors on trust in e-government initiative adoption in Palestinian public sector. Res. J. Appl. Sci. Eng. Technol. 2013, 5, 3865–3875. [Google Scholar] [CrossRef]

- Wu, B.; Zhang, C. Empirical study on continuance intentions towards E-Learning 2.0 systems. Behav. Inf. Technol. 2014, 33, 1027–1038. [Google Scholar] [CrossRef]

- Cauter, L.V.; Snoeck, M.; Crompvoets, J. PA meets IS research: Analyzing failure of intergovernmental information systems via IS adoption and success models. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2014. [Google Scholar] [CrossRef]

- Sebetci, Ö.; Aksu, G. Evaluating e-government systems in Turkey: The case of the ‘e-movable system’. Inf. Polity 2014, 19, 225–243. [Google Scholar] [CrossRef]

- Danila, R.; Abdullah, A. User’s Satisfaction on E-government Services: An Integrated Model. Procedia-Soc. Behav. Sci. 2014, 164, 575–582. [Google Scholar] [CrossRef]

- Chang, C.C.; Liang, C.; Shu, K.M.; Chiu, Y.C. Alteration of influencing factors of e-learning continued intention for different degrees of online participation. Int. Rev. Res. Open Distance Learn. 2015, 16, 33–61. [Google Scholar] [CrossRef]

- Wang, Y.-S.; Li, C.-H.; Yeh, C.-R.; Cheng, S.-T.; Chiou, C.-C.; Tang, Y.-C.; Tang, T.-I. A conceptual model for assessing blog-based learning system success in the context of business education. Int. J. Manag. Educ. 2016, 14, 379–389. [Google Scholar] [CrossRef]

- Hu, J.; Zhang, Y. Chinese students’ behavior intention to use mobile library apps and effects of education level and discipline. Libr. Hi-Tech. 2016, 34, 639–656. [Google Scholar] [CrossRef]

- Yu, T.K.; Yu, T.Y. Modelling the factors that affect individuals’ utilization of online learning systems: An empirical study combining the task technology fit model with the theory of planned behaviour. Br. J. Educ. Technol. 2010, 41, 1003–1017. [Google Scholar] [CrossRef]

- Aldholay, A.H.; Isaac, O.; Abdullah, Z.; Ramayah, T. The role of transformational leadership as a mediating variable in DeLone and McLean information system success model: The context of online learning usage in Yemen. Telemat. Inform. 2018, 35, 1421–1437. [Google Scholar] [CrossRef]

- Ghazal, S.; Aldowah, H.; Umar, I.; Bervell, B. Acceptance and satisfaction of learning management system enabled blended learning based on a modified DeLone-McLean information system success model. Int. J. Inf. Technol. Proj. Manag. 2018, 9, 52–71. [Google Scholar] [CrossRef]

- Wang, Y.Y.; Wang, Y.S.; Lin, H.H.; Tsai, T.H. Developing and validating a model for assessing paid mobile learning app success. Interact. Learn. Environ. 2019, 27, 458–477. [Google Scholar] [CrossRef]

- Xu, F.; Du, J.T. Factors influencing users’ satisfaction and loyalty to digital libraries in Chinese universities. Comput. Hum. Behav. 2018, 83, 64–72. [Google Scholar] [CrossRef]

- Aldholay, A.H.; Abdullah, Z.; Ramayah, T.; Isaac, O.; Mutahar, A.M. Online learning usage and performance among students within public universities in Yemen. Int. J. Serv. Stand. 2018, 12, 163–179. [Google Scholar] [CrossRef]

- Gan, C.L.; Balakrishnan, V. Mobile Technology in the Classroom: What Drives Student-Lecturer Interactions? Int. J. Hum. Comput. Interact. 2018, 34, 666–679. [Google Scholar] [CrossRef]

- Priskila, R.; Setyohadi, D.B.; Santoso, A.J. An investigation of factors affecting the success of regional financial management information system (case study of Palangka Raya Government). In Proceedings of the 2018 International Seminar on Research of Information Technology and Intelligent Systems ISRITI, Yogyakarta, Indonesia, 21–22 November 2018. [Google Scholar] [CrossRef]

- Fahrianta, R.Y.; Chandrarin, G.; Subiyantoro, E. The Conceptual Model of Integration of Acceptance and Use of Technology with the Information Systems Success. In Proceedings of the 2018 IOP Conference Series: Materials Science and Engineering, Bandung, Indonesia, 9 May 2018. [Google Scholar] [CrossRef]

- Mauritsius, S.T. A study on senior high school students’ acceptance of mobile learning management system. J. Theor. Appl. Inf. Technol. 2019, 97, 3638–3649. [Google Scholar]

- Farida, M.H.; Margianti, E.S.; Fanreza, R. The determinants and impact of system usage and satisfaction on e-learning success and faculty-student interaction in Indonesian private universities. Malays. J. Consum. Fam. Econ. 2019, 23, 85–99. [Google Scholar] [CrossRef]

- Albelali, S.A.; Alaulamie, A.A. Gender Differences in Students’ Continuous Adoption of Mobile Learning in Saudi Higher Education. In Proceedings of the 2nd International Conference on Computer Applications & Information Security ICCAIS 2019, Riyadh, Saudi Arabia, 1–3 May 2019. [Google Scholar] [CrossRef]

- Jacob, D.W.; Fudzee, M.F.M.; Salamat, M.A.; Herawan, T. A review of the generic end-user adoption of e-government services. Int. Rev. Adm. Sci. 2019, 85, 799–818. [Google Scholar] [CrossRef]

- Alkraiji, A.I. Citizen Satisfaction with Mandatory E-Government Services: A Conceptual Framework and an Empirical Validation. IEEE Access 2020, 8, 117253–117265. [Google Scholar] [CrossRef]

- Alkraiji, A.I. An examination of citizen satisfaction with mandatory e-government services: Comparison of two information systems success models. Tran. Gov. People Process Policy 2021, 15, 36–58. [Google Scholar] [CrossRef]

- Fitriati, A.; Pratama, B.; Tubastuvi, N.; Anggoro, S. The study of DeLone-McLean information system success model: The relationship between system quality and information quality. J. Theor. Appl. Inf. Tech. 2020, 98, 477–483. [Google Scholar]

- Almaiah, M.A.; Jalil, M.M.A.; Man, M. Empirical investigation to explore factors that achieve high quality of mobile learning system based on students’ perspectives. Eng. Sci. Technol. Int. J. 2016, 19, 1314–1320. [Google Scholar] [CrossRef]

- Adams, D.A.; Nelson, R.R.; Todd, P.A. Perceived usefulness, ease of use, and usage of information technology: A replication. MIS Q. 1992, 16, 227–247. [Google Scholar] [CrossRef]

- Hendrickson, A.R.; Massy, P.D.; Cronan, T.P. On the test-retest reliability of perceived usefulness and perceived ease of use scales. MIS Q. 1993, 17, 227–230. [Google Scholar] [CrossRef]

- Karahanna, E.; Straub, D.W. The Psychological Origins of Perceived Usefulness and Ease of Use. Inf. Manag. 1999, 35, 237–250. [Google Scholar] [CrossRef]

- Stefanovic, D.; Spasojevic, I.; Havzi, S.; Lolic, T.; Ristic, S. Information systems success models in the e-learning context: A systematic literature review. In Proceedings of the 2021 20th International Symposium INFOTEH-JAHORINA (INFOTEH), East Sarajevo, Bosnia and Herzegovina, 17–19 March 2021. [Google Scholar] [CrossRef]

- Ciric, D.; Lolic, T.; Gracanin, D.; Stefanovic, D.; Lalic, B. The Application of ICT Solutions in Manufacturing Companies in Serbia. In Proceedings of the IFIP Advances in Information and Communication Technology, Wroclaw, Poland, 3–6 July 2020. [Google Scholar] [CrossRef]

- Prahalad, C.K.; Hamel, G. The core competence of the corporation. Harv. Bus. Rev. 1990, 68, 79–91. [Google Scholar]

- Yusuf, Y.Y.; Sarhadi, M.; Gunasekaran, A. Agile manufacturing: The drivers, concepts and attributes. Int. J. Prod. Econ. 1999, 62, 33–43. [Google Scholar] [CrossRef]

- Forsythe, S. Human factors in agile manufacturing: A brief overview with emphasis on communication and information infrastructure. Hum. Factors Ergon. Manuf. 1997, 7, 3–10. [Google Scholar] [CrossRef]

- Ciric, D.; Delic, M.; Lalic, B.; Gracanin, D.; Lolic, T. Exploring the link between project management approach. Adv. Prod. Eng. Manag. 2021, 16, 99–111. [Google Scholar] [CrossRef]

- Bourdon, S.; Ollet-Haudebert, I. Towards An Understanding of Knowledge Management Systems—UTAUT Revisited. In Proceedings of the 15th Americas Conference on Information Systems AMCIS 2009, San Francisco, CA, USA, 6–9 August 2009. [Google Scholar]

- Martins, C.; Oliveira, T.; Popovič, A. Understanding the internet banking adoption: A unified theory of acceptance and use of technology and perceived risk application. Int. J. Inf. Manag. 2014, 34, 1–13. [Google Scholar] [CrossRef]

- Herrero, Á.; Martín, H.S.; Salmones, M.G.-D. Explaining the adoption of social networks sites for sharing user-generated content: A revision of the UTAUT2. Comput. Hum. Behav. 2017, 71, 209–217. [Google Scholar] [CrossRef]

- Griffin, B.; Hesketh, B. Adaptable Behaviours for Successful Work and Career Adjustment. Aust. J. Psychol. 2006, 55, 65–73. [Google Scholar] [CrossRef]

- Dawis, R.V. The Minnesota Theory of Work Adjustment; John Wiley & Sons: New York, NY, USA, 2005. [Google Scholar]

- Sherehiy, B.; Karwowski, W.; Layer, J. A Review of Enterprise Agility: Concepts, Frameworks, and Attributes. Int. J. Ind. Ergon. 2007, 37, 445–460. [Google Scholar] [CrossRef]

- Alavi, S.; Wahab, D.A. A Review on Workforce Agility. Res. J. Appl. Sci. Eng. Technol. 2013, 5, 4195–4199. [Google Scholar] [CrossRef]

- Tarhini, A.; Ra’ed, M.; Al-Busaidi, K.A.A.; Ashraf, B.M.; Mahmoud, M. Factors influencing students’ adoption of e-learning: A structural equation modeling approach. J. Int. Educ. Bus. 2017, 11, 299–312. [Google Scholar] [CrossRef]

- Mahande, R.D.; Makassar, U.N.; Malago, J.D. An E-Learning Acceptance Evaluation Through UTAUT Model in a Postgraduate Program. J. Educ. Online 2019, 16, 1–10. [Google Scholar] [CrossRef]

- Ra’ed, M.; Tarhini, A.; Bany, M.; Ashraf, B.M.; Maqableh, M. Modeling Factors Affecting Student’s Usage Behaviour of E-Learning Systems in Lebanon. Int. J. Bus. Manag. 2017, 10, 164–182. [Google Scholar] [CrossRef]

- Tan, P.J.B. Applying the UTAUT to Understand Factors Affecting the Use of English E-Learning Websites in Taiwan. SAGE Open 2013, 3, 1–12. [Google Scholar] [CrossRef]

- Abdekhoda, M.; Dehnad, A.; Ghazi Mirsaeed, S.J.; Zarea Gavgani, V. Factors influencing the adoption of E-learning in Tabriz University of Medical Sciences. Med. J. Islam. Repub. Iran 2016, 30, 457. [Google Scholar]

- Prasad, P.W.C.; Maag, A.; Redestowicz, M.; Hoe, L.S. Unfamiliar Technology: Reaction of International Students to Blended Learning. Comput. Educ. 2018, 122, 92–103. [Google Scholar] [CrossRef]

- Sabraz, R.S.; Rustih, M. University students’ intention to use e-learning systems. Interact. Technol. Smart Educ. 2019, 16, 219–238. [Google Scholar] [CrossRef]

- Yang, S. Understanding Undergraduate Students’ Adoption of Mobile Learning Model: A Perspective of the Extended UTAUT2. J. Converg. Inf. Technol. 2013, 8, 969–979. [Google Scholar] [CrossRef]

- Zhang, Z.; Cao, T.; Shu, J.; Liu, H. Identifying key factors affecting college students’ adoption of the e-learning system in mandatory blended learning environments. Interact. Learn. Environ. 2020, 30, 1388–1401. [Google Scholar] [CrossRef]

- Cai, Z.; Huang, Q.; Liu, H.; Wang, X. Improving the agility of employees through enterprise social media: The mediating role of psychological conditions. Int. J. Inf. Manag. 2018, 38, 52–63. [Google Scholar] [CrossRef]

- Alavi, S. The influence of workforce agility on external manufacturing flexibility of Iranian SMEs. Int. J. Technol. Learn. Innov. Dev. 2017, 8, 111–127. [Google Scholar] [CrossRef]

- Sherehiy, B.; Karwowski, W. The relationship between work organization and workforce agility in small manufacturing enterprises. Int. J. Ind. Ergon. 2014, 44, 466–473. [Google Scholar] [CrossRef]

- Wang, Y.S.; Wu, M.C.; Wang, H.Y. Investigating the determinants and age and gender differences in the acceptance of mobile learning. Br. J. Educ. Technol. 2009, 40, 92–118. [Google Scholar] [CrossRef]

- Persico, D.; Manca, S.; Pozzi, F. Adapting the technology acceptance model to evaluate the innovative potential of e-learning systems. Comput. Hum. Behav. 2014, 30, 614–622. [Google Scholar] [CrossRef]

- Somaieh, A.; Dzuraidah, A.W.; Norhamidi, M.; Behrooz, A.S. Organic structure and organizational learning as the main antecedents of workforce agility. Int. J. Prod. Res. 2014, 52, 6273–6295. [Google Scholar] [CrossRef]

- Dillman, D.A. Mail and Internet Surveys: The Tailored Design Method, 2nd ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2007. [Google Scholar]

- Nunnally, J.C.; Bernstein, I.H. Psychometric Theory; McGraw-Hill: New York, NY, USA, 1994. [Google Scholar]

- Tabachnick, B.G.; Fidell, L.S. Using Multivariate Statistics; Pearson Education, Inc.: Boston, MA, USA, 2007. [Google Scholar]

- Bentler, P.M.; Bonett, D.G. Significance tests and goodness of fit in the analysis of covariance structures. Psychol. Bull. 1980, 88, 588–606. [Google Scholar] [CrossRef]

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis, 7th ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2009. [Google Scholar]

- Cordato, D.J.; Fatima Shad, K.; Soubra, W.; Beran, R.G. Health Research and Education during and after the COVID-19 Pandemic: An Australian Clinician and Researcher Perspective. Diagnostics 2023, 13, 289. [Google Scholar] [CrossRef]

- Sommovigo, V.; Bernuzzi, C.; Finstad, G.L.; Setti, I.; Gabanelli, P.; Giorgi, G.; Fiabane, E. How and When May Technostress Impact Workers’ Psycho-Physical Health and Work-Family Interface? A Study during the COVID-19 Pandemic in Italy. Int. J. Environ. Res. Public Health 2023, 20, 1266. [Google Scholar] [CrossRef]

- Kalkanis, K.; Kiskira, K.; Papageorgas, P.; Kaminaris, S.D.; Piromalis, D.; Banis, G.; Mpelesis, D.; Batagiannis, A. Advanced Manufacturing Design of an Emergency Mechanical Ventilator via 3D Printing—Effective Crisis Response. Sustainability 2023, 15, 2857. [Google Scholar] [CrossRef]

- Zin, K.S.L.T.; Kim, S.; Kim, H.-S.; Feyissa, I.F. A Study on Technology Acceptance of Digital Healthcare among Older Korean Adults Using Extended TAM (Extended Technology Acceptance Model). Adm. Sci. 2023, 13, 42. [Google Scholar] [CrossRef]

- Marsden, A.R.; Zander, K.K.; Lassa, J.A. Smallholder Farming during COVID-19: A Systematic Review Concerning Impacts, Adaptations, Barriers, Policy, and Planning for Future Pandemics. Land 2023, 12, 404. [Google Scholar] [CrossRef]

- Chrysafiadi, K.; Virvou, M.; Tsihrintzis, G.A. A Fuzzy-Based Evaluation of E-Learning Acceptance and Effectiveness by Computer Science Students in Greece in the Period of COVID-19. Electronics 2023, 12, 428. [Google Scholar] [CrossRef]

- Garrido-Gutiérrez, P.; Sánchez-Chaparro, T.; Sánchez-Naranjo, M.J. Student Acceptance of E-Learning during the COVID-19 Outbreak at Engineering Universities in Spain. Educ. Sci. 2023, 13, 77. [Google Scholar] [CrossRef]

- Mutizwa, M.R.; Ozdamli, F.; Karagozlu, D. Smart Learning Environments during Pandemic. Trends High. Educ. 2023, 2, 16–28. [Google Scholar] [CrossRef]

- Jafar, A.; Dollah, R.; Mittal, P.; Idris, A.; Kim, J.E.; Abdullah, M.S.; Joko, E.P.; Tejuddin, D.N.A.; Sakke, N.; Zakaria, N.S.; et al. Readiness and Challenges of E-Learning during the COVID-19 Pandemic Era: A Space Analysis in Peninsular Malaysia. Int. J. Environ. Res. Public Health 2023, 20, 905. [Google Scholar] [CrossRef]

- Oprea, O.M.; Bujor, I.E.; Cristofor, A.E.; Ursache, A.; Sandu, B.; Lozneanu, L.; Mandici, C.E.; Szalontay, A.S.; Gaina, M.A.; Matasariu, D.-R. COVID-19 Pandemic Affects Children’s Education but Opens up a New Learning System in a Romanian Rural Area. Children 2023, 10, 92. [Google Scholar] [CrossRef]

- Alharbi, B.A.; Ibrahem, U.M.; Moussa, M.A.; Abdelwahab, S.M.; Diab, H.M. COVID-19 the Gateway for Future Learning: The Impact of Online Teaching on the Future Learning Environment. Educ. Sci. 2022, 12, 917. [Google Scholar] [CrossRef]

- Anderson, P.J.; England, D.E.; Barber, L.D. Preservice Teacher Perceptions of the Online Teaching and Learning Environment during COVID-19 Lockdown in the UAE. Educ. Sci. 2022, 12, 911. [Google Scholar] [CrossRef]

- Shabeeb, M.A.; Sobaih, A.E.E.; Elshaer, I.A. Examining Learning Experience and Satisfaction of Accounting Students in Higher Education before and amid COVID-19. Int. J. Environ. Res. Public Health 2022, 19, 16164. [Google Scholar] [CrossRef] [PubMed]

- Al-Shamali, S.; Al-Shamali, A.; Alsaber, A.; Al-Kandari, A.; AlMutairi, S.; Alaya, A. Impact of Organizational Culture on Academics’ Readiness and Behavioral Intention to Implement eLearning Changes in Kuwaiti Universities during COVID-19. Sustainability 2022, 14, 15824. [Google Scholar] [CrossRef]

- Silva, S.; Fernandes, J.; Peres, P.; Lima, V.; Silva, C. Teachers’ Perceptions of Remote Learning during the Pandemic: A Case Study. Educ. Sci. 2022, 12, 698. [Google Scholar] [CrossRef]

- Antoniadis, K.; Zafiropoulos, K.; Mitsiou, D. Measuring Distance Learning System Adoption in a Greek University during the Pandemic Using the UTAUT Model, Trust in Government, Perceived University Efficiency and Coronavirus Fear. Educ. Sci. 2022, 12, 625. [Google Scholar] [CrossRef]

| Model | Studies | No. Studies | % |
|---|---|---|---|
| D&M | [21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58] | 37 | 52 |
| TAM | [59] | 1 | 1 |
| Combined model | [60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90] | 31 | 43 |
| New model | [71,91,92] | 3 | 4 |

| Factor | Manifest Variable | Sources |
|---|---|---|
| Performance expectancy (PE) | Usage in the working process | [2,121] |
| | Faster obligation fulfillment | [2,121] |
| | Increase in work productivity | [2,121] |
| | Easier working | [2] |
| | Better learning performance | [2] |
| Effort expectancy (EE) | System usage: clear and understandable | [2,121] |
| | Fast system understanding | [2,121] |
| | Simplicity of using | [2,121] |
| | Learning to handle the system easily | [2,121] |
| | System responsiveness | [2] |
| Social influence (SI) | Colleague influence | [2,121] |
| | Team co-worker influence | [2,121] |
| | Colleagues’ willingness to help influence | [2,121] |
| | Institution influence | [2,121] |
| | The feeling of belonging | [2] |
| Facilitating conditions (FC) | Owning resources | [2] |
| | Owning competence | [2] |
| | Compatibility with other systems | [2] |
| | Fitting into the way of working | [2] |
| | User manual instructions | [2] |
| Behavioral intention (BI) | Intention to use the system in the future | [2,121] |
| | Prediction of future usage | [2,121] |
| | Planning to use the system in the future | [2,121] |
| Use behavior (UB) | System functionalities * | [122] |
| Proactivity (P) | Seek work improvement opportunities | [107,120,123] |
| | Seek effective ways to work | [107,119,120,123] |
| | Leaving it to chance; not reacting | [105,107] |
| | Adherence to work rules and procedures | [105,107] |
| | Finding additional resources at work | [119,120,123] |
| Adaptability (A) | Adaptive to team changes | [119,120,123] |
| | Critical feedback acceptance | [105,107] |
| | Adaptive to the new situation | [107,120,123] |
| | New equipment use | [119,123] |
| | Keeping up to date | [119,123] |
| | Adaptive to tasks switching | [119,123] |
| Resilience (R) | Efficiency in stressful situations | [107,119,120,123] |
| | Working under pressure | [107,119,120,123] |
| | Reaction to problems | [107] |
| | Taking action | [119,123] |

| | UNS No. | UNS % | PICB No. | PICB % |
|---|---|---|---|---|
| Experience in using e-learning IS | | | | |
| Without prior experience | 140 | 36.7 | 10 | 6.7 |
| Less than 1 year | 64 | 16.8 | 38 | 25.5 |
| Between 1 and 3 years | 62 | 16.3 | 14 | 9.4 |
| More than 3 years | 115 | 30.2 | 87 | 58.4 |
| Total | 381 | 100.0 | 149 | 100.0 |
| Average e-learning IS daily usage | | | | |
| Less than 1 h | 223 | 58.5 | 29 | 19.5 |
| Between 1 h to 3 h | 140 | 36.7 | 74 | 49.7 |
| Between 3 h to 5 h | 15 | 3.9 | 34 | 22.8 |
| Between 5 h to 7 h | 3 | 0.8 | 11 | 7.4 |
| More than 7 h | 0 | 0 | 1 | 0.7 |
| Total | 381 | 100.0 | 149 | 100.0 |

| | CR | AVE | MSV | ASV | BI | PE | A | EE | FR | R | UB | SI | P |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BI | 0.979 | 0.938 | 0.276 | 0.129 | 0.969 a | | | | | | | | |
| PE | 0.949 | 0.789 | 0.425 | 0.142 | 0.456 | 0.888 a | | | | | | | |
| A | 0.863 | 0.562 | 0.530 | 0.194 | 0.308 | 0.355 | 0.750 a | | | | | | |
| EE | 0.916 | 0.685 | 0.425 | 0.162 | 0.333 | 0.652 | 0.437 | 0.828 a | | | | | |
| FR | 0.761 | 0.532 | 0.179 | 0.103 | 0.307 | 0.137 | 0.406 | 0.423 | 0.729 a | | | | |
| R | 0.814 | 0.595 | 0.530 | 0.180 | 0.343 | 0.317 | 0.728 | 0.395 | 0.373 | 0.772 a | | | |
| UB | 0.737 | 0.588 | 0.105 | 0.059 | 0.324 | 0.233 | 0.314 | 0.153 | 0.078 | 0.314 | 0.698 a | | |
| SI | 0.803 | 0.607 | 0.276 | 0.121 | 0.525 | 0.381 | 0.295 | 0.361 | 0.368 | 0.323 | 0.262 | 0.779 a | |
| P | 0.840 | 0.646 | 0.257 | 0.093 | 0.171 | 0.244 | 0.507 | 0.283 | 0.296 | 0.439 | 0.129 | 0.154 | 0.804 a |
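
The MSV and ASV columns of the table above can be cross-checked against its correlation entries. The sketch below is illustrative only and is not taken from the authors' analysis: it assumes the usual definitions (MSV as the largest squared correlation between a construct and any other construct, ASV as the mean of those squared correlations) and the common discriminant-validity check AVE > MSV. The variable and function names (`lower`, `full_matrix`, `shared_variance`) are hypothetical; the numbers are copied from the table.

```python
# Constructs in the column order of the table above.
names = ["BI", "PE", "A", "EE", "FR", "R", "UB", "SI", "P"]

# AVE values copied from the table.
ave = {"BI": 0.938, "PE": 0.789, "A": 0.562, "EE": 0.685, "FR": 0.532,
       "R": 0.595, "UB": 0.588, "SI": 0.607, "P": 0.646}

# Off-diagonal correlations (lower triangle), one row per construct.
lower = [
    [],
    [0.456],
    [0.308, 0.355],
    [0.333, 0.652, 0.437],
    [0.307, 0.137, 0.406, 0.423],
    [0.343, 0.317, 0.728, 0.395, 0.373],
    [0.324, 0.233, 0.314, 0.153, 0.078, 0.314],
    [0.525, 0.381, 0.295, 0.361, 0.368, 0.323, 0.262],
    [0.171, 0.244, 0.507, 0.283, 0.296, 0.439, 0.129, 0.154],
]


def full_matrix(lower_rows):
    """Mirror the lower triangle into a symmetric matrix (diagonal left at 0)."""
    n = len(lower_rows)
    r = [[0.0] * n for _ in range(n)]
    for i, row in enumerate(lower_rows):
        for j, value in enumerate(row):
            r[i][j] = r[j][i] = value
    return r


def shared_variance(construct_names, lower_rows):
    """Return (MSV, ASV) dictionaries under the definitions assumed above."""
    r = full_matrix(lower_rows)
    n = len(construct_names)
    msv, asv = {}, {}
    for i, name in enumerate(construct_names):
        squared = [r[i][j] ** 2 for j in range(n) if j != i]
        msv[name] = max(squared)
        asv[name] = sum(squared) / len(squared)
    return msv, asv


msv, asv = shared_variance(names, lower)

# Assumed discriminant-validity check: AVE should exceed MSV.
discriminant_ok = {name: ave[name] > msv[name] for name in names}

for name in names:
    print(f"{name:>2}  MSV={msv[name]:.3f}  ASV={asv[name]:.3f}  "
          f"AVE>MSV: {discriminant_ok[name]}")
```

Under these assumptions the recomputed MSV values round to the ones reported in the table (for example, 0.525² ≈ 0.276 for BI and 0.728² ≈ 0.530 for A), and every construct satisfies AVE > MSV.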

| | CR | AVE | MSV | ASV | P | PE | EE | A | SI | SU | BI | R |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| P | 0.817 | 0.619 | 0.238 | 0.097 | 0.787 a | | | | | | | |
| PE | 0.894 | 0.630 | 0.407 | 0.163 | 0.170 | 0.794 a | | | | | | |
| EE | 0.883 | 0.655 | 0.407 | 0.210 | 0.274 | 0.638 | 0.809 a | | | | | |
| A | 0.756 | 0.620 | 0.367 | 0.183 | 0.488 | 0.254 | 0.428 | 0.787 a | | | | |
| SI | 0.844 | 0.664 | 0.062 | 0.025 | 0.000 | 0.228 | 0.248 | 0.130 | 0.815 a | | | |
| SU | 0.717 | 0.462 | 0.279 | 0.125 | 0.246 | 0.290 | 0.343 | 0.377 | 0.106 | 0.680 a | | |
| BI | 0.958 | 0.884 | 0.348 | 0.226 | 0.344 | 0.590 | 0.546 | 0.517 | 0.154 | 0.528 | 0.940 a | |
| R | 0.852 | 0.661 | 0.367 | 0.207 | 0.397 | 0.393 | 0.570 | 0.606 | 0.114 | 0.425 | 0.498 | 0.813 a |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vuckovic, T.; Stefanovic, D.; Ciric Lalic, D.; Dionisio, R.; Oliveira, Â.; Przulj, D. The Extended Information Systems Success Measurement Model: e-Learning Perspective. Appl. Sci. 2023, 13, 3258. https://doi.org/10.3390/app13053258