Abstract

Although learning analytics benefits learning, its uptake by higher educational institutions remains low. Adopting learning analytics is a complex undertaking, and higher educational institutions lack insight into how to build the organizational capabilities needed to adopt learning analytics successfully at scale. This paper describes the ex-post evaluation of a capability model for learning analytics using a mixed-method approach. The model is intended to help practitioners such as program managers, policymakers, and senior management by providing them with a comprehensive overview of the necessary capabilities and their operationalization. Qualitative data were collected during pluralistic walk-throughs with 26 participants at five educational institutions and a group discussion with seven learning analytics experts. Quantitative data about the model’s perceived usefulness and ease-of-use were collected via a survey (n = 23). The study’s outcomes show that the model helps practitioners to plan learning analytics adoption at their higher educational institutions. The study also shows the applicability of pluralistic walk-throughs as a method for ex-post evaluation of Design Science Research artefacts.

1. Introduction

The digitization of education has led to an increased availability of learner data (Ferguson, 2012). These learner data are collected, analyzed, and used to perform interventions that improve education []. This process is called learning analytics (LA). LA is effective in improving student outcomes []. For this reason, many higher educational institutions (HEIs) seek to adopt LA in their educational processes.

Over the years, researchers have designed models, frameworks, and other instruments to support LA adoption [,,,,,]. However, despite these models, only a few institutions have successfully implemented LA at scale [,,]. The extant implementation models do not offer practical insights into operationalizing the critical dimensions for LA adoption []. They also focus only on specific elements, such as policy development, and do not provide solutions to challenges that institutions identified while using the models []. This calls for a model that incorporates all dimensions important to successful LA uptake and prescribes in detail how to operationalize them.

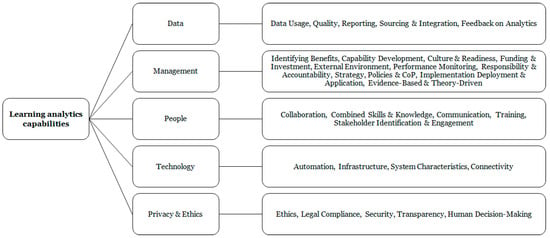

In previous research, we designed a capability model for learning analytics based on the literature [] and refined it through interviews []. The model comprises 30 organizational capabilities, divided into five categories (see Figure 1). Definitions for each capability are available as Supplementary Materials (Table S1). To increase its practical relevance, the model prescribes multiple ways to operationalize each capability. For example, for the capability Data Quality, the model prescribes the use of common definitions, standardized labelling of data, automation, timely input, et cetera. This way, users gain insight not only into which capabilities they need to (further) develop at their institutions, but also into what this means in practice. All operationalizations are available as Supplementary Materials (Table S2). In other words, the model not only describes the ‘what’, but also prescribes the ‘how’. The intended users of the model are practitioners, such as program managers, policymakers, and senior management, who need to plan LA adoption at their institution.

Figure 1.

Capability model for learning analytics.

The model’s development process followed the steps of the Design Science Research framework []. An essential step in design science research is evaluating the designed artefact [,,,]. After its design, formative evaluation, and refinement, the model’s validity must be demonstrated by returning it “into the environment for study and evaluation in the application domain” []. This paper describes the artefact’s evaluation with real users in a real-world context. It answers the main research question, “Does the Learning Analytics Capability Model help practitioners with their task of planning learning analytics adoption at higher educational institutions?” The objectives of this research are to (1) evaluate the effectiveness of the Learning Analytics Capability Model (LACM), (2) measure the users’ perceptions of the model’s usefulness, and (3) evaluate the model’s completeness. To achieve these objectives, we organized five pluralistic walk-throughs with 26 participants responsible for LA implementation at their institutions, conducted a survey, and held a group interview with seven experts.

The relevance of our work is twofold. First, it provides an empirically validated capability model by applying it in a real-world setting. Our research shows which capabilities are often overlooked when planning the implementation of LA. Second, it further establishes pluralistic walk-throughs as an evaluation method in design science research. Pluralistic walk-throughs are most often applied to evaluate the usability of user interfaces []. In contrast, we used pluralistic walk-throughs to evaluate the capability model. Our work substantiates the applicability of pluralistic walk-throughs as an evaluation method in situations where artefacts need to be used in real-world settings while time is constrained.

This paper is structured as follows. In the next section, we provide an overview of our study’s background and relevant literature, including a description of our artefact’s design process. We then present the methodology we used for the model’s evaluation, i.e., a mixed-method approach comprising pluralistic walk-throughs, a group discussion, and a survey. Next, we describe the analyses we performed and their outcomes. Finally, we conclude with a discussion of the results and their implications, our study’s limitations, and directions for future research.

2. Background

In this section, we describe the background of our study based on relevant literature. We start with a description of existing LA models and frameworks. Next, the design process of the capability model is explained. Finally, we describe the evaluation’s role in the design process from a design science research perspective.

2.1. Learning Analytics Adoption

LA is “the measurement, collection, analysis, and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environment in which it occurs” []. Although LA enhances education, few HEIs have adopted LA at scale yet [,]. A systematic literature review by Viberg and others [] shows that there is little evidence that LA is used at scale; LA tools were deployed in only 6% of the analyzed research papers. Tsai and Gasevic [], who interviewed LA experts and surveyed European HEIs, report similar findings: only 2 out of 46 surveyed institutions had adopted LA institution-wide. HEIs face multiple challenges when adopting LA, including technical, human, and organizational factors [].

Several models aim to overcome implementation challenges and support LA uptake. Examples include the Learning Analytics Framework [], the Learning Analytics Readiness Instrument [], the Learning Analytics Sophistication Model [], the Rapid Outcome Mapping Approach [], and the Supporting Higher Education to Integrate Learning Analytics framework []. However, these models have their shortcomings [,,]. They are often not grounded in management theory, they lack clear operational descriptions of how to develop essential dimensions, and they have seen little empirical validation.

To overcome the limitations of existing models, a capability model for LA was designed: the Learning Analytics Capability Model (LACM) []. This model builds on strategic management theory, specifically the resource-based view (RBV). The LACM takes a resource-based perspective because a lack of the right organizational resources is a major reason why HEIs do not adopt LA []. Although resource-based models exist in adjacent research fields like business analytics and big data analytics [,], no such model was available for the LA domain. Moreover, the capability model provides many ways to operationalize capabilities in practice. The capability model is therefore a suitable response to the challenges HEIs face when implementing LA. For example, the model addresses all four main challenges summarized by Tsai and others []. The capability model prescribes the need to engage all stakeholders and foster a data-informed culture (challenge 1). It calls for a theory-driven and evidence-based approach to ensure pedagogical issues are properly addressed (challenge 2). Since the model is based on the RBV, resource demands are fundamental to it (challenge 3). Finally, privacy and ethics have a central place in the model, as they comprise one of the five critical categories (challenge 4).

LA aims to improve the learning environment, learning processes, and learning outcomes []. It can be applied to achieve a wide variety of goals, e.g., supporting self-regulated learning, monitoring learning behavior, improving learning materials, measuring learning gains, and improving retention. As many goals require similar capabilities, the LACM appears to be goal-agnostic, so the model should be beneficial to institutions regardless of their LA goals. This is reflected in the capability Data Usage, which includes many ways to use LA in practice.

2.2. Designing a Capability Model for Learning Analytics

The RBV provides a theoretical basis for improving processes and performance and for creating competitive advantage through an organization’s resources [,]. Capabilities refer to an organization’s capacity to deploy resources to achieve a desired goal and are often developed by combining physical, human, and technological resources []. A key feature of capabilities is that they enhance the productivity of the organization’s other resources []. Another key feature is that capabilities are embedded in organizations and cannot be easily transferred. Thus, HEIs that adopt LA can purchase commodity assets like data warehouses and data science software, but they need to develop their own LA capabilities.

Design science research principles [] are suitable for providing solutions to wicked problems. Such problems are characterized by complex interactions between their subcomponents and solutions, and their multidisciplinary nature calls for well-developed human social abilities. LA implementation involves multiple critical dimensions [] and requires a multidisciplinary approach [,]. This makes LA adoption a complex problem; hence, we used design science research principles to develop an LA capability model. The Design Science Research (DSR) Framework [] is the de facto standard for conducting and evaluating DSR []. DSR projects comprise three cycles: the rigor cycle, the design cycle, and the relevance cycle []. The rigor cycle and design cycle were followed in two previous studies [,]. The next step is the relevance cycle, in which the model’s validity is evaluated via field testing in the application domain.

2.3. Evaluation of a Design Science Research Artefact

Evaluation of the designed artefact is a crucial step in design science research [,,,]. A design science research process is a sequence of expert activities that produces a model, and “[t]he utility, quality, and efficacy of a design artefact must be rigorously demonstrated via well-executed evaluation methods (p. 85)” []. This means that the capability model must be studied and evaluated in the application domain []. Hevner and others [] provide guidelines that can be used as criteria and standards to evaluate design science research. However, there is consensus that not all of these guidelines have to be followed to demonstrate an artefact’s validity []. What matters is that the research addresses and solves a problem, produces a clear design artefact, and performs some form of evaluation. This last step includes, among others, practice-based evaluation of effectiveness, usefulness, and ease of use []. Such evaluation requires real practitioners as participants and an instantiation of the artefact, which is achieved through empirical evaluation methods [].

3. Materials and Methods

This section describes our research goal and the research questions we answer in our study. We then elaborate on the mixed-method approach we used. Next, we present the setup of our data collection, the research instruments used, and the pilot test we held to increase the study’s rigor. Finally, we give an overview of the analyses that we performed on the collected data.

3.1. Research Goal and Methods

The main research question for this research is, “Does the Learning Analytics Capability Model help practitioners with their task of planning learning analytics adoption at higher educational institutions?”

As suggested by Prat and others [], we applied a commonly used evaluation style, namely practice-based evaluation. Three evaluation criteria are applied. First, we need to establish that the capability model works in a naturalistic situation, i.e., to measure its effectiveness []. Second, since the model is used in the application domain, it is also important to include its usefulness in the evaluation, i.e., the “degree to which the artefact positively impacts individuals’ task performance (p. 266)” []. Finally, a third element for the evaluation of models relates to their completeness [,]. Completeness can be defined as the “degree to which the structure of the artefact contains all necessary elements and relationships between elements (p. 266)” [].

To ensure the quality of our study, we combined different research methods. Mixed-method research increases confidence in the research data, as one method’s weaknesses are counterbalanced by another’s strengths and vice versa []. Three methods are applied: (1) pluralistic walk-throughs, (2) expert evaluation via a group discussion, and (3) a survey. Table 1 provides details on data collection and analysis. We justify the use of these methods in the following subsections.

Table 1.

Relation between the evaluation criteria, methods, and data analysis.

3.2. Pluralistic Walk-Throughs

Ideally, the implementation of LA with the capability model’s support would be studied over time in a real-world environment. In practice, however, such a process takes multiple years. As time was constrained, we opted for a method that simulates the model’s use in a real-world setting, but faster. Prat and others [] suggest generating new evaluation methods creatively and pragmatically. In line with this suggestion, we used pluralistic walk-throughs. A pluralistic walk-through, also known as a participatory design review [], allows a group of stakeholders with varying competencies to review a product design []. This aligns with the model’s goal, as stakeholder involvement is an important element in LA implementation []. One benefit of pluralistic walk-throughs is that they are rapid and generate immediate feedback from users, reducing the time necessary for the test–redesign–retest cycle []. Moreover, they are cost-effective and easy to use []. Pluralistic walk-throughs are often used to evaluate user interfaces and validate other sorts of IT artefacts [,]. For instance, Dahlberg [] evaluates an application’s design in its working context during five walk-throughs. Pluralistic walk-throughs have also been used in the LA domain before, for example, to support the design of an LA toolkit for teachers [].

Based on the characteristics provided by Bias [], we designed the following pluralistic walk-through for practitioners:

- We organized multiple sessions with stakeholders from different institutions as participants. In each session, the participants were from one single organization. They were the capability model’s target users—policymakers, senior managers, program directors, learning analysts, et cetera (characteristic 1). Each pluralistic walk-through lasted between two and three hours.

- To complete a planning task, the participants used the capability model. A digital version of the model was available to support the task (characteristic 2). Details on the digital tool are provided in Section 3.6.

- During the pluralistic walk-through, participants were asked to solve a planning task: plan the implementation of an LA program at their institution to reach a predefined goal they have with LA (characteristic 3). This task resulted in a ‘roadmap’ in which the implementation process for the next two years was planned.

- During the pluralistic walk-throughs, one researcher represented the designers of the capability model. Another researcher took notes on interesting situations while the participants performed the task (characteristic 4). We also video-recorded each walk-through so that it could be transcribed and analyzed.

- At the end of each pluralistic walk-through, there was a group discussion moderated by the researchers. During the discussion, participants elaborated on decisions made during the process and discussed whether the capability model provided sufficient support to complete the task (characteristic 5).

Similar to Rödle and others [], we split each walk-through into two phases. We did so to test whether the capability model gave participants new insights into which capabilities are important for the uptake of LA. The first phase was a baseline measurement to determine which capabilities the participants already knew and identified as important without using the capability model. In the second phase, we introduced the model and measured which capabilities were present in the roadmap they had made during phase one. To make the capabilities measurable, we formulated between one and three questions for each capability based on their definitions. The participants were asked to analyze and enhance the roadmap they had made in the previous phase using the capability model. This showed the effectiveness of the model (evaluation criterion 1). Afterward, we discussed the outcomes as well as the model’s usefulness and completeness with the participants (evaluation criteria 2 and 3). To stimulate discussion, we asked the participants to react to the following three statements:

- The capability model positively contributes to the adoption of LA by Dutch HEIs.

- The operational descriptions provided by the model help to make the adoption of LA more concrete.

- The capability model is complete. That is, there are no missing capabilities that are important to the adoption of LA by HEIs.

As usability is only one element we wished to evaluate, we decided to broaden the scope and invite LA experts rather than usability experts. Because the experts already know much about LA capabilities, the capability model is likely to provide them with fewer new insights than it provides novice practitioners; therefore, the novice practitioners’ input was collected separately. All sessions were hosted online via Microsoft Teams or Zoom to comply with the COVID-19 regulations in force at that time.

3.3. Expert Evaluation

Variations on the characteristics of pluralistic walk-throughs exist. Riihiaho [] separates users’ and experts’ participation to give sufficient credit to the users’ feedback and to save participants’ time. We opted for the same approach and organized separate meetings for novice practitioners (with no or limited experience with LA implementation) and experts (with much experience with LA implementation). During an expert evaluation, an artefact is assessed by one or multiple experts []. We invited experts with multiple years of experience in implementing LA at their institution to participate in a group discussion. Group discussions, also known as group interviews, have multiple advantages compared to individual interviews: the interaction between participants enriches the data and leads to more follow-up questions, and group discussions are relatively easy to organize and conduct [].

The experts were asked to use the capability model to identify which capabilities are already present at their own institution and which ones are still missing. This task gave them hands-on experience with the model. We then organized a group discussion to examine whether they deem the capability model useful for practitioners who want to implement LA (evaluation criterion 2) and whether they believe the model is complete (evaluation criterion 3). The session opened with a presentation on the development of the capability model. Next, the outcomes of the experts’ interaction with an online tool were presented, followed by a group discussion. During this discussion, we asked the experts to react to the statements presented earlier.

3.4. Survey

Participants in both the pluralistic walk-throughs and the expert evaluation were asked to fill out an individual survey anonymously. Together with the data collected during the group discussions, the survey data are used to measure evaluation criterion 2. Surveys help to provide generalizable statements about a study’s object and are a good method for verification [].

In the survey, participants could score the capability model’s usefulness and ease-of-use, and describe why it is (not) helping them implement LA at scale at their institution. To this end, we asked questions derived from the Technology Acceptance Model (TAM) []. This model measures people’s intentions to use a specific technology or service. It is one of the most widely used models in Information Systems research [] and has been applied in the LA domain before [,]. In line with the latter studies, we focused on perceived usefulness and ease-of-use, ignoring external variables. External variables include user involvement in the design [], which did not occur when the capability model was developed. Usefulness and ease-of-use have proved to be important to the acceptance of technology in the educational domain [] and capture the personal side of design science research []. For our survey, we used the same questions as Ali et al. [], who studied the adoption of LA tools. Although we tested a model’s usefulness instead of an LA tool’s usefulness, we instantiated our model via a tool and therefore deemed the same questions relevant.

The survey comprised 10 questions: three about the model’s general perception, three about its perceived usefulness, and three about its ease-of-use. Answers were given on a Likert response scale from 1 (totally disagree) to 5 (totally agree). The tenth question was an open one on why the capability model is (not) helping participants implement LA at their institution. As all participants in our sessions are Dutch-speaking, two researchers separately translated the original questions from Ali and others [] from English to Dutch. Any discrepancies were discussed before finalizing the questionnaire. Surveys were distributed via Microsoft Forms. The survey questions are presented in Appendix A.

3.5. Participants

We now describe the participants’ selection and characteristics for both the pluralistic walk-throughs and the expert evaluation.

3.5.1. Participants for Pluralistic Walk-Throughs

A call for participation in our walk-throughs was made via SURF Communities, a Dutch website about innovation in the educational domain. The call was aimed at policymakers, IT staff, information managers, project managers, institutional researchers, and data analysts from HEIs with limited experience with LA but wanting to boost and scale up its use. We described the sessions’ goal and set-up and asked those interested in participating to get in touch. Five institutions signed up for the pluralistic walk-throughs: one Dutch technical university, two Dutch universities of applied sciences, one Dutch institution for senior secondary vocational education, and one Belgian university of applied sciences.

3.5.2. Participants for Expert Evaluation

The experts all participate in a national program to boost the use of LA in the Netherlands. Through already-established contacts, we were able to organize a meeting with these experts. At the time of the session, the program had been running for two years. However, most experts have a longer history of implementing LA and therefore have profound knowledge of, and practical experience with, the adoption of LA. Seven experts from seven different institutions attended the session.

3.6. Tools and Pilot Session

Testing research instruments is an important step to increase a study’s rigor []. Therefore, we performed one pilot session and used the experience gained during that session to improve the research materials, planning, tools, et cetera. Based on the pilot, some small adjustments were made, such as appointing a chairperson to lead the tasks at hand, formalizing discussion statements rather than asking open questions, and posting the assignments in the online chat so they could be read again.

One major change was made to the way the roadmap was enhanced in the second phase of the pluralistic walk-through. Originally, we used a custom-built online tool for this purpose. In this tool, the 814 operational descriptions from the capability model are clustered into 138 subgroups. One question was formulated per subgroup, and each question could be answered with either ‘Yes’ or ‘No’. After the questions were answered, the tool calculated to what degree capabilities were already present and provided advice on building the missing ones. However, during the pilot session, it became clear that answering all 138 questions took a very long time, which made the walk-through tedious. The questions were also too detailed compared to the elements described in the roadmap. Therefore, we decided to formulate questions at the level of first-order capabilities instead, which reduced the number of questions to 37. As we could not change the online tool on short notice, an Excel file with drop-down menus, conditional formatting, and pivot tables was used instead to support the task of refining the roadmap. The Excel file was used during the other four pluralistic walk-throughs.
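The paper does not specify how the online tool computed the degree to which a capability is present. Purely as an illustration, the sketch below assumes the simplest plausible rule: the share of ‘Yes’ answers among a capability’s questions, with capabilities scoring below a hypothetical threshold flagged as missing. The function names, the threshold, and the example answers are hypothetical.

```python
from collections import defaultdict

# Hypothetical scoring rule, NOT the tool's documented algorithm.
# Each answer is keyed by (capability, question_id) and is either "Yes" or "No".
Answers = dict[tuple[str, str], str]


def presence_per_capability(answers: Answers) -> dict[str, float]:
    """Return, per capability, the share of its questions answered 'Yes'."""
    yes_counts: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for (capability, _question_id), answer in answers.items():
        if answer not in ("Yes", "No"):
            raise ValueError(f"Unexpected answer: {answer!r}")
        totals[capability] += 1
        if answer == "Yes":
            yes_counts[capability] += 1
    return {cap: yes_counts[cap] / totals[cap] for cap in totals}


def missing_capabilities(presence: dict[str, float], threshold: float = 0.5) -> list[str]:
    """List capabilities whose presence score falls below an assumed threshold."""
    return sorted(cap for cap, score in presence.items() if score < threshold)


if __name__ == "__main__":
    example = {
        ("Data Quality", "q1"): "Yes",
        ("Data Quality", "q2"): "No",
        ("Ethics", "q1"): "No",
    }
    presence = presence_per_capability(example)
    print(presence)                        # {'Data Quality': 0.5, 'Ethics': 0.0}
    print(missing_capabilities(presence))  # ['Ethics']
```

Replacing the 138 subgroup questions with 37 capability-level questions would not change this logic; only the number of (capability, question) keys shrinks.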

3.7. Analysis

When determining which properties of the capability model to evaluate, we needed to consider how the collected data would be analyzed []. With regard to the qualitative data, we analyzed which capabilities were present in the roadmaps made during the pluralistic walk-throughs. We did so via axial coding with codes derived from the capability model. Two researchers individually coded all statements made in the roadmaps, using the capabilities’ definitions (Table S1) as a reference guide. Afterwards, any discrepancies between coders were discussed until consensus was reached. To further enhance our research’s quality, we transcribed the audio recordings of the pluralistic walk-throughs verbatim. These transcripts were also coded via axial coding and analyzed to see whether the capabilities in the roadmaps reflect what was discussed during the roadmaps’ design process. All coding was performed in Atlas.ti. The coded data were used to determine how frequently the different capabilities appeared.
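The paper reports raw agreement between the two coders but does not name the measure. A minimal sketch, assuming simple percent agreement with exactly one capability code per roadmap statement (actual coding may assign several codes to one statement), followed by a frequency count over the consensus codes. All codes in the example are hypothetical.

```python
from collections import Counter


def percent_agreement(coder_a: list[str], coder_b: list[str]) -> float:
    """Share of statements for which both coders assigned the same capability code.

    Assumes one code per statement and that both lists follow the same statement order.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must code the same, non-empty list of statements")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def code_frequencies(consensus_codes: list[str]) -> Counter:
    """Count how often each capability appears after discrepancies are resolved."""
    return Counter(consensus_codes)


if __name__ == "__main__":
    coder_a = ["Strategy", "Ethics", "Data Quality", "Data Usage"]
    coder_b = ["Strategy", "Data Quality", "Data Quality", "Data Usage"]
    print(percent_agreement(coder_a, coder_b))  # 0.75
    consensus = ["Strategy", "Data Quality", "Data Quality", "Data Usage"]
    print(code_frequencies(consensus).most_common(1))  # [('Data Quality', 2)]
```

A chance-corrected statistic such as Cohen’s kappa would be a stricter alternative to simple percent agreement.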

The quantitative survey data were analyzed using descriptive statistics. There are two schools of thought on whether Likert scales should be treated as ordinal or interval data []. In line with other scholars in the LA domain [,] and since our survey contains more than one Likert item, we treated our data as interval-level data []. This allowed us to calculate mean scores and standard deviations.
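Treating the Likert items as interval data, the descriptive statistics reduce to a mean and a standard deviation per item. The sketch below assumes the sample standard deviation (n − 1 denominator), which the paper does not specify; the item labels and scores are hypothetical, not the study’s data.

```python
from statistics import mean, stdev

# Hypothetical responses on a 1 (totally disagree) to 5 (totally agree) scale.
# Item labels are illustrative placeholders, not the actual survey items.
responses: dict[str, list[int]] = {
    "usefulness_1": [4, 5, 3, 4],
    "ease_of_use_1": [3, 3, 4, 2],
}


def describe(scores: list[int]) -> tuple[float, float]:
    """Return (mean, sample standard deviation), rounded to two decimals."""
    if len(scores) < 2:
        raise ValueError("At least two responses are needed for a standard deviation")
    if any(not 1 <= s <= 5 for s in scores):
        raise ValueError("Likert scores must lie between 1 and 5")
    return round(float(mean(scores)), 2), round(stdev(scores), 2)


summary = {item: describe(scores) for item, scores in responses.items()}
print(summary)  # {'usefulness_1': (4.0, 0.82), 'ease_of_use_1': (3.0, 0.82)}
```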

4. Results

In this section, we present the results of our research. In total, 26 practitioners from five educational institutions participated in the pluralistic walk-throughs. The institutions wanted to use learning analytics for different goals: improving retention, improving assessments, monitoring learning behavior, and supporting students’ choices regarding elective courses. Intended users are teachers and students. One institution did not want to commit to a particular goal; its participants suggested consulting relevant stakeholders first and made this part of their implementation roadmap. The modal number of participants is four per session. This is in line with other studies that use pluralistic walk-throughs as a research method, where the number of participants lies between 3 and 18 [,]. The characteristics of the participating institutions are described in Table 2. The institutions’ names are replaced with fictional ones to ensure anonymity. Besides the pluralistic walk-throughs, we organized a group discussion with expert users to discuss the capability model, in which seven experts took part and commented on the model. For our survey, we received 23 valid responses.

Table 2.

Overview of participating institutions.

Please note that all sessions were held in Dutch and that all quotes are our translations. Participants in the pluralistic walk-throughs, experts, and survey respondents are referred to as Px, Ex, and Rx, respectively. Because several experts are affiliated with multiple institutions and the survey responses are anonymous, we only refer to institutions by name for participants in the pluralistic walk-throughs.

4.1. Effectiveness of the Capability Model

In each session, participants were asked to develop two roadmaps: the first based on prior knowledge and their own experiences, and the second enhanced with the help of the capability model. All five participating institutions made the first roadmap. However, it is important to note that not all institutions made the second, enhanced roadmap. The participants from Alpha decided that they wanted to discuss the new insights they obtained from the capability model before making a new roadmap. As one participant put it, “I think we would benefit more from it if we [make a new roadmap] more thoroughly at a later moment, as we face an important issue regarding our strategy” (Alpha, P4). Bravo’s participants, for their part, decided that the project they had envisioned should first be run on a small scale and that large-scale implementation could not be planned at this moment. The institution lacks a clear vision of the goals and application of LA, and the participants deemed themselves incapable of making important decisions to this end. They believe the organization should first decide on its strategy towards LA: “What is the ethical framework, what is the overall goal? […] then you have a foundation you can further build on” (Bravo, P2). This indicates that institutions need to have at least the capabilities of Strategy, Ethics, identifying benefits, and Policy and Code of Practice developed to a certain degree before implementation at scale becomes possible.

Across all pluralistic walk-throughs, 88 capabilities were used to construct the roadmaps (see Table 3). The roadmaps were coded axially based on the capabilities of the model. The initial agreement between coders was 65%. This relatively low agreement resulted from differences in the interpretation of the capabilities’ definitions. The coders discussed the discrepancies until they reached agreement. In the walk-throughs’ second phase, the participants gained access to the capability model and the supporting Excel file to enhance their roadmap. This led to 28 additional capabilities in the second roadmaps. When we only consider the institutions that made a second roadmap (Charlie, Delta, and Echo), this is an increase of 45% (62 capabilities in their first roadmaps, 90 in their second).
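The reported relative increase for Charlie, Delta, and Echo follows directly from the counts of 62 and 90 capabilities stated above; the difference of 28 equals the number of capabilities added in the second phase, since only these three institutions made a second roadmap:

$$\frac{90 - 62}{62} = \frac{28}{62} \approx 0.452 \approx 45\%$$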

Table 3.

Codes assigned to the first roadmaps (1) and the second, enhanced roadmaps (2). Numbers with an asterisk (*) indicate an increase in capabilities. Numbers with an exclamation mark (!) indicate the uptake of new capabilities.

The capability Implementation, Deployment, and Application is the most frequently present. This is logical, as this capability relates to planning LA implementation at an institution, which is exactly the task of the pluralistic walk-throughs. Further analysis shows that not all capability categories are present in all roadmaps. For example, the category Data is absent from the roadmaps of Alpha and Echo. Moreover, the category People is (almost) entirely missing from the roadmaps of Bravo, Delta, and Echo. Most noticeable, however, is the absence of the category Privacy and Ethics. This category was completely absent from the roadmaps of Bravo, Delta, and Echo, and was mentioned only once in the roadmap of Alpha.

Our study investigates whether the capability model helps stakeholders identify capabilities that were entirely overlooked in their implementation roadmap. We therefore analyze which unique capabilities can be distinguished, i.e., capabilities entirely absent from the first roadmaps but added to the enhanced ones. These are marked with an exclamation mark in Table 3. Notably, all institutions that made a second roadmap added Ethics to it. When asked why no capabilities from the category Privacy and Ethics were initially planned, participants said that these topics were already present or discussed within the organization: “The ethical part is described in our vision on data. […] For learning analytics, there is a privacy statement” (Echo, P5), “the discussion about ethics is an old one” (Delta, P3), or “we have been busy to get [privacy and ethics] organized but I think it is not mentioned in our discussion” (Charlie, P4). This supports Bravo’s observation that institutions must decide on ethical considerations before starting the implementation. Nonetheless, the participants agreed that this topic should have a more prominent place in the implementation process; as a result, capabilities regarding ethics were added to all second, enhanced roadmaps. Moreover, two institutions added capabilities regarding capability development to their roadmap, while Quality and Stakeholder identification and engagement were each added to one roadmap. Although these numbers are small in absolute terms, omitting these capabilities places successful LA adoption in serious jeopardy. For example, involving stakeholders is crucial for effective implementation []. The capability model helps identify capabilities that are missing from the roadmap: “you immediately see which topics got a lot of our attention and which ones got less” (Echo, P1).
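The markers in Table 3 can be derived mechanically by comparing how often each capability was coded in the first and in the second roadmap. The Python sketch below assumes that “!” (uptake of a new capability) applies when a capability is absent from the first roadmap but present in the second, that “*” (increase) applies when an already present capability is coded more often, and that “!” takes precedence over “*”. The counts are hypothetical and not taken from Table 3.

```python
from collections import Counter


def table3_marker(first: Counter, second: Counter, capability: str) -> str:
    """Return '!' for a newly adopted capability, '*' for an increase, else ''."""
    before, after = first[capability], second[capability]  # missing keys count as 0
    if before == 0 and after > 0:
        return "!"  # entirely absent from the first roadmap: a unique addition
    if after > before:
        return "*"  # already present, but coded more often in the second roadmap
    return ""


def unique_additions(first: Counter, second: Counter) -> set[str]:
    """Capabilities absent from the first roadmap but added to the enhanced one."""
    return {cap for cap in second if second[cap] > 0 and first[cap] == 0}


# Hypothetical coding counts for one institution (illustration only).
first = Counter({"Strategy": 2, "Implementation, Deployment, and Application": 3})
second = Counter({"Strategy": 2, "Implementation, Deployment, and Application": 4,
                  "Ethics": 1})

for capability in sorted(set(first) | set(second)):
    print(f"{capability}: {table3_marker(first, second, capability) or '-'}")
print(unique_additions(first, second))  # {'Ethics'}
```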

While analyzing the roadmaps, it became apparent that many capabilities were mentioned during the sessions but were not added to the roadmaps themselves. Axial coding of the transcripts supports this observation: many capabilities were discussed but not made explicit in the roadmap. Remarkably, Alpha and Echo discussed capabilities from the category Data, yet none of these made it into their roadmaps. This shows that the capability model serves two goals: (1) informing practitioners about capabilities they are not yet aware of, and (2) supporting users in making explicit choices about which capabilities to include in their plan towards LA implementation. One of the participants expressed the latter as follows: “For some cases [the need for certain capabilities] might be implicit but it would be good to make them explicit, so they can be thought about. And if you think that they are not applicable, you need to explain why you think so” (Delta, P3).

4.2. Perceived Usefulness of the Capability Model

To study the capability model’s perceived usefulness, we held group discussions with the participants in the pluralistic walk-throughs and with the experts. Participants were asked to respond to statements regarding the model’s positive impact and the operational descriptions it provides.

The experts responded positively to the statement that the capability model supports LA adoption at Dutch HEIs. They believe that the model provides an overview of important aspects. However, the model’s level of detail might feed a self-fulfilling prophecy: institutions that consider LA too complex to implement may see this belief confirmed by the model. This became apparent during the pluralistic walk-throughs, when the participants from Bravo did not make an enhanced roadmap because they believed certain capabilities, e.g., Strategy and Policy and Code of Practice, must be developed first. According to the experts, the capability model’s added value lies in the discussion practitioners have when using the model together to plan LA implementation. As one expert mentions, “the value lies in the dialog” (E2). Furthermore, the experts consider the operational descriptions supportive: a capability may already be partly developed, and the operational descriptions help identify weak spots and enable institutions to develop this capability further. The participants of the pluralistic walk-throughs were also positive about the model. According to them, it provides insight into the aspects important for LA implementation: “there are some things you don’t think about spontaneously” (Alpha, P4) and “[t]he model helped me to see clearer [whether we] thought well enough about all main points” (Charlie, P2). The model “contributes to a better plan and a more complete plan” (Delta, P3). This reflects the earlier observation that only a portion of the discussed capabilities was included in the roadmaps; the model thus helps practitioners make a more explicit plan.

Besides the group discussions, we used a survey based on the Technology Acceptance Model to study the model’s perceived usefulness and ease-of-use (see Appendix A). The survey contains three groups of closed-ended questions: questions 1 to 3 measure overall perception, questions 4 to 6 perceived usefulness, and questions 7 to 9 ease-of-use. The tenth question is an open-ended question regarding the effectiveness of the capability model. For each factor, we report the mean (M) and standard deviation (SD) in the text; Table 4 gives the descriptive statistics per survey item. In line with Rienties et al. [], we take 3.5 or higher as a positive cut-off value and anything lower than 3 as a negative cut-off value; scores between these values are regarded as neutral. In total, we received 23 valid responses (n = 23).
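The scoring scheme described here can be made concrete in a few lines. The Python sketch below groups items 1 to 9 into the three factors named in the text and applies the stated cut-offs. Two choices are assumptions, since the text does not specify them: factor scores are computed by averaging each respondent’s items and then taking the mean and sample SD across respondents, and negatively worded items (such as item 9, “overburdened with information”) can optionally be reverse-coded on the 1-to-5 scale. The responses are hypothetical.

```python
from statistics import mean, stdev

SCALE_MIN, SCALE_MAX = 1, 5  # Likert scale used in the survey (Appendix A)

# Item numbers per factor, as described in the text; item 10 is open-ended.
FACTORS = {
    "overall perception": (1, 2, 3),
    "perceived usefulness": (4, 5, 6),
    "ease-of-use": (7, 8, 9),
}


def classify(score: float) -> str:
    """Cut-offs from the text: >= 3.5 positive, < 3 negative, otherwise neutral."""
    if score >= 3.5:
        return "positive"
    if score < 3.0:
        return "negative"
    return "neutral"


def factor_scores(responses, factors=FACTORS, reverse=()):
    """Mean and sample SD per factor of each respondent's average item score.

    `reverse` lists item numbers to reverse-code (an assumption; the text does
    not say whether any item was reverse-coded).
    """
    def item_score(response, item):
        value = response[item - 1]
        return SCALE_MIN + SCALE_MAX - value if item in reverse else value

    results = {}
    for name, items in factors.items():
        per_respondent = [mean(item_score(r, i) for i in items) for r in responses]
        results[name] = (mean(per_respondent), stdev(per_respondent))
    return results


# Three hypothetical respondents: answers to items 1-9 on the 1-5 scale.
responses = [
    (4, 4, 4, 5, 4, 4, 4, 4, 2),
    (4, 5, 3, 4, 4, 3, 3, 4, 4),
    (3, 4, 2, 4, 5, 4, 4, 3, 2),
]
for name, (m, sd) in factor_scores(responses, reverse=(9,)).items():
    print(f"{name}: M = {m:.1f}, SD = {sd:.1f} ({classify(m)})")
```

Whether item 9 is reverse-coded changes the ease-of-use factor noticeably, so any reanalysis should state this choice explicitly.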

Table 4.

Descriptive statistics per survey item.

The overall perception of the model is positive (M = 3.8, SD = 0.3). The items on whether respondents find the capability model useful and whether they would like to use it in their work are rated highly. The item on whether the model is more useful than comparable models scores neutral; this is probably due to respondents’ limited experience with other models, which may have led them to answer neutrally.

The capability model’s perceived usefulness is positive (M = 4.1, SD = 0.5). The respondents think the model enables them to gain insight into the capabilities necessary for the successful implementation of LA and helps them identify which capabilities need to be (further) developed at their institution. Although still positive, the third item of this factor scores the lowest: respondents rate the statement that the capability model provides relevant information on how to operationalize LA capabilities at 3.7 (SD = 0.8). This might result from the combination of the large number of operational descriptions in the model (814) and the relatively short duration of the sessions (between two and three hours), which probably prevented participants from fully using the model’s fine-grained constructs.

The third and last factor, ease-of-use, scores the lowest, yet still positive (M = 3.5, SD = 0.6). Although respondents think the capability model is easy to understand and intuitive to use, 35% of them believe it is overburdened with information. This is also reflected in answers to the open question: “the model [is] extensive and therefore not clear” (R5) and “[a]t first sight, there are a lot of questions and there is a lot of information” (R8).
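Percentages such as the 35% reported here can be cross-checked against the sample size. The Python sketch below assumes that “believes it is overburdened” means answering 4 (agree) or 5 (totally agree) to item 9, and that all 23 respondents answered that item. Under those assumptions, 35% corresponds to 8 respondents, since 8/23 ≈ 34.8% is the only whole count that rounds to 35%. The list of answers is hypothetical.

```python
def share_agreeing(answers: list[int], threshold: int = 4) -> float:
    """Fraction of answers at or above `threshold` (4 = agree, 5 = totally agree)."""
    if not answers:
        raise ValueError("no answers")
    return sum(answer >= threshold for answer in answers) / len(answers)


def counts_matching(percentage: int, n: int) -> list[int]:
    """Whole respondent counts k (0..n) whose share k/n rounds to `percentage`."""
    return [k for k in range(n + 1) if round(100 * k / n) == percentage]


# Hypothetical answers of 23 respondents to item 9 ("overburdened with information").
answers = [5] * 2 + [4] * 6 + [3] * 9 + [2] * 6
print(f"{share_agreeing(answers):.0%}")  # 35%
print(counts_matching(35, 23))           # [8]
```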

In their answers to the open question, most respondents write that they find the capability model beneficial and useful. The model, for example, “provides a clear framework, insights in what is necessary, as well as what steps to take” (R20) and “offers mind-sets to develop policy further” (R15). In line with the experts’ remarks in the group discussion, some respondents believe that the model can serve as a checklist (R12) and help start conversations with other stakeholders (R23).

4.3. Completeness of the Capability Model

To assess evaluation criterion 3, we examined the capability model’s completeness, i.e., whether all capabilities important to LA adoption are present. To this end, we used data collected during the group discussions and via the open-ended survey question. We consider the model complete when neither the experts nor the participants know, from their own experience, of capabilities that are absent from the model.

Asked to comment on the model’s completeness, the experts think that the pedagogical interventions that follow the analysis and visualization of learner data are still underexposed. The model comprises a capability to monitor the performance of the analytical processes and applications (Performance monitoring), but this does not include measuring the improvements made to education; the experts suggest adding this to the model. The participants in the pluralistic walk-throughs also mention some potential shortcomings. Most prominently, the position of the pedagogical use of LA in the capability model is not sufficiently apparent: the role of remediation is underexposed (Alpha, P1), and it is unclear how to take the contextual setting of education into account (Charlie, P1). Although these aspects are part of the capabilities Implementation, Deployment, and Application, and Evidence-based and Theory-driven, respectively, this might not be clear enough.

Other improvements to the model are suggested in the answers to the open survey question. Two respondents think the capability model alone is not enough to put LA into practice. As one respondent puts it: “additional materials can be developed that focus on the ‘how’. The current model mainly visualizes the ‘what’” (R8). This calls for additional materials, such as templates with concrete advice on how to plan the capabilities’ development. Another respondent suggests adding elements specific to education, as the current model is “quite generic and could also be applied to other analytical domains” (R18). This is in line with comments made in the group discussions with both practitioners and experts. That the model is generic is, at least to a certain degree, to be expected, as the first version of the model was exapted from literature in the big data analytics and business analytics domains []. Nonetheless, it highlights the need to better position the role of educational context and theory in the model.

5. Discussion

The goal of this research was to evaluate the LACM in its application domain by answering the main research question: “Does the Learning Analytics Capability Model help practitioners with their task of planning learning analytics adoption at higher educational institutions?” The evaluation criteria concerned the model’s effectiveness, perceived usefulness, and completeness. The model is designed to support practitioners such as program managers, policymakers, and senior management by providing (1) an overview of necessary capabilities and (2) insight into how to operationalize these capabilities. This study shows that the capability model succeeds in this task. The remainder of this section discusses our outcomes and their relevance for research and practice, the study’s limitations, and recommendations for future research.

5.1. Research Outcomes

The first evaluation criterion concerns the capability model’s effectiveness for practitioners who need to plan the adoption of LA at their institution. The participants in our research represented different stakeholders at educational institutions, which is important for successful LA adoption []. The model proved effective in supporting practitioners in planning LA adoption. Our analysis showed that many capabilities are overlooked when creating implementation roadmaps. Most notably, there was almost no attention to privacy and ethics. This is remarkable, as these are important aspects of learning analytics [,] and among the main issues limiting LA adoption []. The model enables participants to make more complete and more explicit roadmaps: for the three institutions that made a second roadmap, using the model increased the number of capabilities in their roadmaps by 45%.

The second evaluation criterion concerns the model’s perceived usefulness. During the group discussions, both experts and practitioners were positive about the model’s scope and depth. The survey outcomes support this conclusion: both overall perception and perceived usefulness score well. However, the model’s ease-of-use only just reaches the positive cut-off. This might result from the large number of operational descriptions. Particularly in our first pilot pluralistic walk-through, in which we used the online tool with 138 different questions, the participants might have been overwhelmed. This relates to the model’s instantiation validity, i.e., “the extent to which an artefact is a valid instantiation of a theoretical construct or a manifestation of a design principle” ([], p. 430). Due to the complexity of the model’s instantiation, the model itself is perceived as harder to use. The lack of practical guidelines is a common problem of LA models []. We plan to address this in future research.

The third evaluation criterion concerns the model’s completeness. Neither the experts nor the participants identified capabilities missing from the model. Nonetheless, some improvements can be made. First, the scope of Performance monitoring must be broadened. Its current definition, “In what way the performance of analytical processes and applications are measured,” is too narrow: the outcomes of the pluralistic walk-throughs show that not only the performance of processes and systems must be monitored, but also that of the interventions. This is in line with the views of other scholars [,,]. Second, the position of pedagogical theory and context, and the evaluation of the interventions’ impact, should be made more prominent. This is an essential conclusion, as LA’s benefits to teaching and education are often overlooked []. Lastly, some participants suggest developing supporting materials to enhance the practical use of the model.

5.2. Implications for Research and Practice

HEIs face many challenges when implementing LA []. Our model helps to overcome many of these challenges and thereby supports the LA domain in its aim of optimizing learning. The capabilities’ operationalizations are especially beneficial, as existing models do not provide direct solutions for adoption challenges [].

Other domains might also benefit from this research. One participant in the pluralistic walk-throughs has extensive experience with data analytics in the energy and financial industries. He mentions that “it is all relevant when assembling any data analytics team” (Echo, P2). This comment suggests that our model’s relevance goes beyond the LA field and that it might also be applicable in other domains. This is an important knowledge contribution, as it allows a known solution (our artefact) to be extended to new problems, e.g., data analytics in a nascent application domain.

This study’s main contribution to the design science research domain is the evidence that pluralistic walk-throughs can be used for the ex-post evaluation of an artefact. There is debate about which methods are suitable for evaluating which types of artefacts [,], but no hard rules exist. Some scholars argue that evaluation must be designed pragmatically [], encouraging researchers to develop creative, new methods. In our research, we needed a method that simulates the use of the capability model in practice by real users but that could be applied within a limited amount of time. Pluralistic walk-throughs are often applied for ex-ante evaluation, when designers are still willing and able to make changes to the design []. By adapting this method, we were able to use it for ex-post evaluation as well. This enriches current knowledge about which methods to use in which situations.

5.3. Limitations

Our research has several limitations. First, since participants joined our sessions voluntarily, there might be non-response or self-selection bias. This might affect the research outcomes, as it is impossible to determine what differences exist between our sample and the population []. However, we mitigated this effect by having a well-balanced mix of participating institutions and people: 26 practitioners representing 5 different educational institutions that are comparable with other institutions in the Netherlands. This points to another limitation: as we only invited Dutch-speaking participants, and thus only Dutch-speaking institutions, the outcomes might not be generalizable to institutions in other countries. Further research is necessary to address this shortcoming.

The quality of the survey items is another caveat of our research. In survey research, the validity of a questionnaire is analyzed via factor analysis. However, this requires a sufficiently large sample. A common rule of thumb is 10 to 15 respondents per variable [], which in our case (9 closed-ended questions) means between 90 and 135 respondents. With only 23 respondents, validity could not be established statistically. Moreover, the item asking whether the capability model is more useful than comparable models might score lower than expected due to participants’ limited experience with other models. Two respondents explicitly state that they do not know other models and therefore cannot answer the question, yet the questionnaire required an answer. When we remove this item from the analysis, the overall perception of the model rises to 4.1 (SD = 0.3). Although the survey was tested before use, this issue was overlooked until the survey had already been distributed, at which point it could no longer be repaired. This is a known problem when using surveys as a research method []. Nonetheless, it should be considered in future research.
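Both numerical points in this paragraph are easy to reproduce. The Python sketch below applies the 10 to 15 respondents-per-variable rule of thumb to the nine closed-ended items and shows how a factor mean changes when one item is dropped, as was done for item 3. The helper names and the three-respondent data set are hypothetical; the study’s raw data are not public, so the sketch does not reproduce the reported 3.8 and 4.1.

```python
from statistics import mean


def required_sample(n_variables: int, per_variable=(10, 15)) -> tuple[int, int]:
    """Sample-size range implied by a respondents-per-variable rule of thumb."""
    low, high = per_variable
    return n_variables * low, n_variables * high


def factor_mean(responses, items) -> float:
    """Mean across respondents of each respondent's average over `items` (1-based)."""
    return mean(mean(response[i - 1] for i in items) for response in responses)


print(required_sample(9))  # (90, 135)

# Hypothetical answers to items 1-3 (overall perception); item 3 is the
# "more useful than comparable models" item that some respondents could not judge.
responses = [(4, 4, 3), (5, 4, 3), (4, 5, 3)]
print(round(factor_mean(responses, (1, 2, 3)), 2))  # 3.89, with item 3
print(round(factor_mean(responses, (1, 2)), 2))     # 4.33, without item 3
```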

5.4. Future Work

Our model proved effective and useful to practitioners. Nonetheless, the presentation of the model can be improved. Most importantly, the position of pedagogical theory and of measuring the interventions’ effect on learning can be enhanced. Furthermore, the model scores relatively low on ease-of-use in the survey. Some participants request additional materials, such as templates or tools, to support the use of the capability model. Future work could focus on developing such supporting material, as has been done for, e.g., the SHEILA framework []. The current model helps users identify important capabilities, but it lacks clear steps regarding the order in which to develop these capabilities. Knowing which capabilities must be developed next helps institutions allocate resources to achieve this development; for example, funding might be secured well in advance to hire experts to design functional LA systems. In terms of the different types of LA adoption models [,,], the capability model is an input model. Further research can help transform it into a process model, which is beneficial, as process models are more suitable than input models for capturing the complexity of higher education []. A last direction for future work is the capability model’s generalizability to educational contexts outside the Netherlands. We invite other researchers to use the model in other settings and report on the outcomes.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/app13053236/s1, Table S1: Definitions of learning analytics capabilities; Table S2: Operationalizations of learning analytics capabilities.

Author Contributions

Conceptualization, J.K. and E.v.d.S.; methodology, J.K. and J.V.; validation, E.v.d.S., J.V. and R.v.d.W.; formal analysis, J.K. and E.v.d.S.; investigation, J.K.; resources, J.K.; data curation, J.K.; writing—original draft preparation, J.K. and E.v.d.S.; writing—review and editing, J.V. and R.v.d.W.; visualization, J.K.; supervision, J.V.; project administration, J.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Questions relate to the general perception of the Learning Analytics Capability Model, its perceived usefulness, and ease-of-use. The survey uses a Likert response scale of 1 (totally disagree) to 5 (totally agree).

1. All in all, I find the Learning Analytics Capability Model a useful model.

2. I would like to be able to use the Learning Analytics Capability Model in my work.

3. The Learning Analytics Capability Model provides me with more useful insights and feedback than other similar models I tried/used.

4. The Learning Analytics Capability Model enables me to get insights into the capabilities necessary for the successful uptake of learning analytics in my institution.

5. The information provided by the Learning Analytics Capability Model helps me identify what capabilities need to be (further) developed at my institution.

6. The Learning Analytics Capability Model provides relevant information on how to operationalize learning analytics capabilities.

7. The Learning Analytics Capability Model is easy to understand.

8. The use of the Learning Analytics Capability Model is intuitive enough.

9. The Learning Analytics Capability Model is overburdened with information.

10. (Open question) The Learning Analytics Capability Model is (not) helping me implement learning analytics at scale at my institution because.

References

- Clow, D. The Learning Analytics Cycle: Closing the Loop Effectively. In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, BC, Canada, 29 April–2 May 2012; pp. 134–138.

- Foster, C.; Francis, P. A Systematic Review on the Deployment and Effectiveness of Data Analytics in Higher Education to Improve Student Outcomes. Assess. Eval. High. Educ. 2019, 45, 822–841.

- Arnold, K.E.; Lonn, S.; Pistilli, M.D. An Exercise in Institutional Reflection: The Learning Analytics Readiness Instrument (LARI). In Proceedings of the Fourth International Conference on Learning Analytics And Knowledge, Indianapolis, IN, USA, 24–28 March 2014; pp. 163–167.

- Bichsel, J. Analytics in Higher Education: Benefits, Barriers, Progress, and Recommendations; EDUCAUSE Center for Applied Research: Louisville, CO, USA, 2012.

- Ferguson, R.; Clow, D.; Macfadyen, L.; Essa, A.; Dawson, S.; Alexander, S. Setting Learning Analytics in Context: Overcoming the Barriers to Large-Scale Adoption. In Proceedings of the Fourth International Conference on Learning Analytics and Knowledge, Indianapolis, IN, USA, 24–28 March 2014; pp. 251–253.

- Greller, W.; Drachsler, H. Translating Learning into Numbers: A Generic Framework for Learning Analytics. Educ. Technol. Soc. 2012, 15, 42–57.

- Siemens, G.; Dawson, S.; Lynch, G. Improving the Quality and Productivity of the Higher Education Sector; Teacher Education Ministerial Advisory Group: Canberra, Australia, 2013.

- Tsai, Y.-S.; Moreno-Marcos, P.M.; Tammets, K.; Kollom, K.; Gašević, D. SHEILA Policy Framework: Informing Institutional Strategies and Policy Processes of Learning Analytics. In Proceedings of the 8th International Conference on Learning Analytics and Knowledge, Sydney, Australia, 7–9 March 2018; pp. 320–329.

- Dawson, S.; Poquet, O.; Colvin, C.; Rogers, T.; Pardo, A.; Gasevic, D. Rethinking Learning Analytics Adoption through Complexity Leadership Theory. In Proceedings of the 8th International Conference on Learning Analytics and Knowledge, Sydney, Australia, 7–9 March 2018; pp. 236–244.

- Gasevic, D.; Tsai, Y.-S.; Dawson, S.; Pardo, A. How Do We Start? An Approach to Learning Analytics Adoption in Higher Education. Int. J. Inf. Learn. Technol. 2019, 36, 342–353.

- Viberg, O.; Hatakka, M.; Bälter, O.; Mavroudi, A. The Current Landscape of Learning Analytics in Higher Education. Comput. Human Behav. 2018, 89, 98–110.

- Broos, T.; Hilliger, I.; Pérez-Sanagustín, M.; Htun, N.; Millecamp, M.; Pesántez-Cabrera, P.; Solano-Quinde, L.; Siguenza-Guzman, L.; Zuñiga-Prieto, M.; Verbert, K.; et al. Coordinating Learning Analytics Policymaking and Implementation at Scale. Br. J. Educ. Technol. 2020, 51, 938–954.

- Knobbout, J.H.; van der Stappen, E. A Capability Model for Learning Analytics Adoption: Identifying Organizational Capabilities from Literature on Big Data Analytics, Business Analytics, and Learning Analytics. Int. J. Learn. Anal. Artif. Intell. Educ. 2020, 2, 47–66.

- Knobbout, J.H.; van der Stappen, E.; Versendaal, J. Refining the Learning Analytics Capability Model. In Proceedings of the 26th Americas Conference on Information Systems (AMCIS), Salt Lake City, UT, USA, 10–14 August 2020.

- Hevner, A.R.; March, S.T.; Park, J.; Ram, S. Design Science in Information Systems Research. MIS Q. 2004, 28, 75–105.

- Peffers, K.; Rothenberger, M.; Tuunanen, T.; Vaezi, R. Design Science Research Evaluation. In Proceedings of the International Conference on Design Science Research in Information Systems, Las Vegas, NV, USA, 14–15 May 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 398–410.

- Prat, N.; Comyn-Wattiau, I.; Akoka, J. A Taxonomy of Evaluation Methods for Information Systems Artifacts. J. Manag. Inf. Syst. 2015, 32, 229–267.

- Venable, J. Design Science Research Post Hevner et al.: Criteria, Standards, Guidelines, and Expectations. In Proceedings of the International Conference on Design Science Research in Information Systems, St. Gallen, Switzerland, 4–5 June 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 109–123.

- Hevner, A.R. A Three Cycle View of Design Science Research. Scand. J. Inf. Syst. 2007, 19, 87–92.

- Bias, R.G. The Pluralistic Usability Walkthrough: Coordinated Empathies. In Usability Inspection Methods; John Wiley & Sons: New York, NY, USA, 1994; pp. 63–76.

- Long, P.; Siemens, G.; Conole, G.; Gašević, D. Message from the LAK 2011 General & Program Chairs. In Proceedings of the 1st International Conference on Learning Analytics and Knowledge, Banff, AB, Canada, 27 February–1 March 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 3–4.

- Tsai, Y.-S.; Gašević, D. The State of Learning Analytics in Europe. 2017. Available online: http://sheilaproject.eu/wp-content/uploads/2017/04/The-state-of-learning-analytics-in-Europe.pdf (accessed on 22 February 2023).

- Alzahrani, A.S.; Tsai, Y.-S.; Iqbal, S.; Marcos, P.M.M.; Scheffel, M.; Drachsler, H.; Kloos, C.D.; Aljohani, N.; Gasevic, D. Untangling Connections between Challenges in the Adoption of Learning Analytics in Higher Education. Educ. Inf. Technol. 2022, 1–33.

- Colvin, C.; Dawson, S.; Wade, A.; Gašević, D. Addressing the Challenges of Institutional Adoption. In Handbook of Learning Analytics; Lang, C., Siemens, G., Wise, A., Gasevic, D., Eds.; Society for Learning Analytics Research (SoLAR): Beaumont, AB, Canada, 2017; pp. 281–289.

- Tsai, Y.-S.; Gašević, D. Learning Analytics in Higher Education—Challenges and Policies: A Review of Eight Learning Analytics Policies. In Proceedings of the Seventh International Learning Analytics & Knowledge Conference, Vancouver, BC, Canada, 13–17 March 2017; pp. 233–242.

- Cosic, R.; Shanks, S.; Maynard, G. A Business Analytics Capability Framework. Australas. J. Inf. Syst. 2015, 19, S5–S19.

- Gupta, M.; George, J.F. Toward the Development of a Big Data Analytics Capability. Inf. Manag. 2016, 53, 1049–1064.

- Tsai, Y.-S.; Rates, D.; Moreno-Marcos, P.M.; Muñoz-Merino, P.J.; Jivet, I.; Scheffel, M.; Drachsler, H.; Kloos, C.D.; Gašević, D. Learning Analytics in European Higher Education—Trends and Barriers. Comput. Educ. 2020, 155, 103933.

- Knobbout, J.H.; van der Stappen, E. Where Is the Learning in Learning Analytics? A Systematic Literature Review on the Operationalization of Learning-Related Constructs in the Evaluation of Learning Analytics Interventions. IEEE Trans. Learn. Technol. 2020, 13, 631–645.

- Barney, J. Firm Resources and Sustained Competitive Advantage. J. Manag. 1991, 17, 99–120.

- Grant, R.M. The Resource-Based Theory of Competitive Advantage: Implications for Strategy Formulation. Calif. Manag. Rev. 1991, 33, 114–135.

- Amit, R.; Schoemaker, P.J.H. Strategic Assets and Organizational Rent. Strateg. Manag. J. 1993, 14, 33–46.

- Makadok, R. Toward a Synthesis of the Resource-Based and Dynamic-Capability Views of Rent Creation. Strateg. Manag. J. 2001, 22, 387–401.

- Hilliger, I.; Ortiz-Rojas, M.; Pesántez-Cabrera, P.; Scheihing, E.; Tsai, Y.-S.; Muñoz-Merino, P.J.; Broos, T.; Whitelock-Wainwright, A.; Pérez-Sanagustín, M. Identifying Needs for Learning Analytics Adoption in Latin American Universities: A Mixed-Methods Approach. Internet High. Educ. 2020, 45, 100726.

- Nielsen, J. Usability Inspection Methods. In Conference Companion on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 1994; pp. 413–414.

- Venable, J.; Pries-Heje, J.; Baskerville, R. FEDS: A Framework for Evaluation in Design Science Research. Eur. J. Inf. Syst. 2016, 25, 77–89.

- March, S.T.; Smith, G.F. Design and Natural Science Research on Information Technology. Decis. Support Syst. 1995, 15, 251–266.

- Thurmond, V.A. The Point of Triangulation. J. Nurs. Scholarsh. 2001, 33, 253–258.

- Kusters, R.J.G.; Versendaal, J. Horizontal Collaborative E-Purchasing for Hospitals: IT for Addressing Collaborative Purchasing Impediments. J. Int. Technol. Inf. Manag. 2013, 22, 65–83.

- Thorvald, P.; Lindblom, J.; Schmitz, S. Modified Pluralistic Walkthrough for Method Evaluation in Manufacturing. Procedia Manuf. 2015, 3, 5139–5146.

- Emaus, T.; Versendaal, J.; Kloos, V.; Helms, R. Purchasing 2.0: An Explorative Study in the Telecom Sector on the Potential of Web 2.0 in Purchasing. In Proceedings of the 7th International Conference on Enterprise Systems, Accounting and Logistics (ICESAL 2010), Rhodes Island, Greece, 28–29 June 2010.

- Dahlberg, P. Local Mobility. Ph.D. Thesis, Göteborg University, Gothenburg, Sweden, 2003.

- Dyckhoff, A.L.; Zielke, D.; Bültmann, M.; Chatti, M.A.; Schroeder, U. Design and Implementation of a Learning Analytics Toolkit for Teachers. Educ. Technol. Soc. 2012, 15, 58–76.

- Rödle, W.; Wimmer, S.; Zahn, J.; Prokosch, H.-U.; Hinkes, B.; Neubert, A.; Rascher, W.; Kraus, S.; Toddenroth, D.; Sedlmayr, B. User-Centered Development of an Online Platform for Drug Dosing Recommendations in Pediatrics. Appl. Clin. Inform. 2019, 10, 570–579.

- Riihiaho, S. The Pluralistic Usability Walk-through Method. Ergon. Des. 2002, 10, 23–27.

- Conway, G.; Doherty, E.; Carcary, M. Evaluation of a Focus Group Approach to Developing a Survey Instrument. In Proceedings of the European Conference on Research Methods for Business & Management Studies, Rome, Italy, 12–13 July 2018; pp. 92–98.

- Gable, G.G. Integrating Case Study and Survey Research Methods: An Example in Information Systems. Eur. J. Inf. Syst. 1994, 3, 112–126.

- Davis, F.D. A Technology Acceptance Model for Empirically Testing New End-User Information Systems: Theory and Results. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1985.

- King, W.R.; He, J. A Meta-Analysis of the Technology Acceptance Model. Inf. Manag. 2006, 43, 740–755.

- Ali, L.; Asadi, M.; Gašević, D.; Jovanović, J.; Hatala, M. Factors Influencing Beliefs for Adoption of a Learning Analytics Tool: An Empirical Study. Comput. Educ. 2013, 62, 130–148.

- Rienties, B.; Herodotou, C.; Olney, T.; Schencks, M.; Boroowa, A. Making Sense of Learning Analytics Dashboards: A Technology Acceptance Perspective of 95 Teachers. Int. Rev. Res. Open Distrib. Learn. 2018, 19, 186–202.

- Venkatesh, V.; Davis, F.D. A Model of the Antecedents of Perceived Ease of Use: Development and Test. Decis. Sci. 1996, 27, 451–481.

- Herodotou, C.; Rienties, B.; Boroowa, A.; Zdrahal, Z.; Hlosta, M. A Large-Scale Implementation of Predictive Learning Analytics in Higher Education: The Teachers’ Role and Perspective. Educ. Technol. Res. Dev. 2019, 67, 1273–1306.

- Boudreau, M.-C.; Gefen, D.; Straub, D.W. Validation in Information Systems Research: A State-of-the-Art Assessment. MIS Q. 2001, 25, 1–16.

- Carifio, J.; Perla, R. Resolving the 50-Year Debate around Using and Misusing Likert Scales. Med. Educ. 2008, 42, 1150–1152.

- Dahl, S.G.; Allender, L.; Kelley, T.; Adkins, R. Transitioning Software to the Windows Environment-Challenges and Innovations. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 1995, 39, 1224–1227.

- Meixner, B.; Matusik, K.; Grill, C.; Kosch, H. Towards an Easy to Use Authoring Tool for Interactive Non-Linear Video. Multimed. Tools Appl. 2014, 70, 1251–1276.

- Gasevic, D.; Dawson, S.; Jovanovic, J. Ethics and Privacy as Enablers of Learning Analytics. J. Learn. Anal. 2016, 3, 1–4.

- Pardo, A.; Siemens, G. Ethical and Privacy Principles for Learning Analytics. Br. J. Educ. Technol. 2014, 45, 438–450.

- Lukyanenko, R.; Parsons, J. Guidelines for Establishing Instantiation Validity in IT Artifacts: A Survey of IS Research. In New Horizons in Design Science: Broadening the Research Agenda: 10th International Conference, DESRIST 2015, Dublin, Ireland, 20–22 May 2015; Springer: Berlin, Germany, 2015; Volume 9073, pp. 430–438.

- Baker, R.S. Challenges for the Future of Educational Data Mining: The Baker Learning Analytics Prizes. J. Educ. Data Min. 2019, 11, 1–17.

- Knight, S.; Gibson, A.; Shibani, A. Implementing Learning Analytics for Learning Impact: Taking Tools to Task. Internet High. Educ. 2020, 45, 100729.

- Winter, R. Design Science Research in Europe. Eur. J. Inf. Syst. 2008, 17, 470–475.

- Bryman, A.; Bell, E. Business Research Methods, 2nd ed.; Oxford University Press: New York, NY, USA, 2015.

- Field, A. Discovering Statistics Using SPSS, 2nd ed.; Sage Publications, Inc.: Thousand Oaks, CA, USA, 2005.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).