Abstract

The business of the power distribution network is gradually extending from the grid domain to the social service domain, and new services keep emerging. Edge devices use a microservice architecture and container technology so that one physical device can process different services. Although a power distribution IoT with a cloud-edge architecture scales well, edge devices may run short of resources. To support the scheduling and collaborative processing of tasks when edge devices are resource-constrained, this paper proposes a cloud-edge collaborative online scheduling method for distribution station area tasks under the microservice architecture. The paper models the characteristics of power tasks and their constraints in the cloud-edge containerized scenario, designs a priority policy and a task assignment policy based on the cloud-edge scheduling mechanism for containerized power tasks, and schedules tasks in real time with an improved online algorithm. Simulation results show that the proposed algorithm achieves high task execution efficiency, improves the completion rate of important tasks when edge device resources are limited, and improves system security through resource replacement.

1. Introduction

With the construction of the power distribution IoT, the numbers of terminal devices and tasks are increasing rapidly, and the traditional cloud computing model faces bottlenecks in response speed and communication latency [1,2]. Migrating part of the computation from the cloud to edge computing nodes on the data source side reduces the load on the cloud and enables real-time data analysis and low-latency task processing [3]. The cloud and the edge of the power distribution IoT follow a software-defined approach and decouple hardware from software through a microservice architecture, which further refines the granularity of application control [4]. Reference [5] proposes an edge computing-based distribution automation architecture with five layers: master station, medium-voltage lines, distribution transformers, low-voltage lines, and low-voltage users. The intelligent distribution terminal is connected to the cloud master station, providing it with data and accepting its control commands. At the same time, the terminal supports access by massive numbers of devices, receiving data uploaded from the end side and issuing commands to end-side equipment. Reference [6] proposes a real-time monitoring framework for the smart grid based on edge computing, which moves data mining and processing to the edge server. As new services expand and applications multiply, devices may run short of computing, storage, and communication resources. Because microservices are mutually independent, offloading selected applications to the cloud according to the differentiated needs of services, and thereby releasing device resources, is of great significance for exploiting the respective advantages of the cloud and the edge and for improving the efficiency of power task execution.

There are many studies on task scheduling for grid devices. Reference [7] proposes an edge computing-based architecture for the optimal scheduling of active distribution grids, in which a regional control center optimizes the grid and edge computing nodes optimize distributed energy sources. Collaborative optimization between the control center and the edge nodes reduces both the amount of information exchanged and the bandwidth requirements. Reference [8] adds alternate edge nodes, which only compute and do not generate tasks, to the system architecture, so that tasks can be offloaded to the local edge node, the residential cloud platform, or an alternate edge computing node. Its scheduling process considers task latency and energy consumption and uses game theory to offload residential electricity tasks, reaching a multi-party optimum by solving for the Nash equilibrium. For cable monitoring applications, Reference [9] models the task queue in each edge node and the task allocation among edge nodes, and solves the problem with an improved particle swarm optimization algorithm; the resulting strategy reduces task execution time and improves system resource utilization. Reference [10] describes tasks with dependencies through a workflow model and proposes a list-based task scheduling algorithm that considers container running speed and task concurrency, which reduces task execution time and improves service quality.

Other studies address the limited computing capacity and resources of edge servers in task processing. Reference [11] uses virtual machines as task processing carriers and meets the requirements of latency-sensitive tasks by solving three subproblems: load balancing, resource allocation, and task assignment. Reference [12] proposes a task allocation mechanism based on the cooperation of edge computing nodes and solves the dual edge node cooperative task allocation problem with an improved particle swarm optimization algorithm to minimize the average task completion latency. Reference [13] establishes a cloud-edge collaboration mechanism for tasks based on a microservice architecture. Tasks are modeled as directed acyclic graphs, and the scheduling process considers two offloading methods, edge-cloud offloading and edge-side offloading, selecting the one that minimizes the system offloading cost. However, existing scheduling strategies do not consider how the characteristics of power tasks and the states of containers affect scheduling decisions under a microservice architecture, and they focus on offline scheduling, with little research on online multi-task scheduling.

In this paper, we propose a cloud-edge collaborative online scheduling strategy for distribution station area tasks under a microservice architecture. Tasks are modeled as directed acyclic graphs. The scheduling strategy accounts for the differentiated characteristics of power tasks and the states of containers, consists of two parts, a priority strategy and a task assignment strategy, and schedules multiple tasks in real time in different environments with an improved online algorithm. The structure of the paper is as follows: Section 1 introduces the research background; Section 2 analyzes the application scenario and task characteristics; Section 3 establishes the system model based on container technology and the microservice architecture; Section 4 analyzes the constraints and designs the algorithm; Section 5 presents the simulation verification; and Section 6 concludes the paper.

2. Application Scenario of Power Distribution Station Area

With the development of the distribution IoT, the distribution station area involves a variety of application scenarios, including data analysis of the station area, intelligent operation and maintenance and fault repair of distribution equipment, energy use analysis for energy saving and carbon reduction, coordinated source-grid-load-storage control driven by the dual-carbon goals, and power quality governance [14,15]. Each scenario contains multiple types of applications. For example, the source-grid-load-storage coordination and control scenario requires demand-side response, integrated energy services, new energy consumption, and grid peak-valley regulation.

Tasks in the distribution station area are characterized by diversity, dynamism, and differentiation. Diversity refers to the variety of tasks and the number of scenarios involved. Dynamism refers to the random arrival of tasks and the large variation in task information from one arrival to the next. Differentiation refers to the large differences among tasks in data volume, time delay, and importance, as well as in processing methods and resource requirements.

3. Task Scheduling Model for Distribution Stations under Cloud-Edge Collaboration

3.1. Edge Equipment in the Power Distribution Station Area

In response to the new needs arising from diverse, dynamic, and differentiated station area tasks and the extension of the business scope to customers, edge equipment has adopted a new architecture with microservices as the execution carrier [16]. In a microservice architecture, a single application is divided into small, interconnected microservices, each of which performs a single function and remains independent of and decoupled from the others. Because containers are lightweight and provide good isolation, deploying microservice-based applications in containers lets an edge device process multiple types of tasks while guaranteeing task isolation and data security.

A container in an edge device is in one of two operating states: not running, denoted 0, or running, denoted 1. The number of containers running on an edge device at a given moment is therefore the sum of its container states. Because edge devices have limited resources, they can hardly support many containers running concurrently. To simplify the analysis, assume that an edge device can run at most M containers concurrently, and let A (0 ≤ A ≤ M) be the number of containers currently running on it. When A equals M, the edge device is considered resource-constrained; when every edge device has M running containers, the edge computing system is considered resource-constrained.
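The container-state bookkeeping above can be sketched in a few lines of Python. This is a minimal illustration, assuming each edge device is represented as a dictionary from hypothetical container names to the 0/1 states defined in the text; the function names are ours, not the paper's.

```python
from typing import Dict, List

# Container name -> state: 0 = not running, 1 = running (names are hypothetical).
ContainerStates = Dict[str, int]


def running_count(states: ContainerStates) -> int:
    """A: the number of running containers, i.e. the sum of the 0/1 states."""
    return sum(states.values())


def device_constrained(states: ContainerStates, max_concurrent: int) -> bool:
    """An edge device is resource-constrained when A equals M."""
    a = running_count(states)
    if not 0 <= a <= max_concurrent:
        raise ValueError(f"A={a} is outside [0, M={max_concurrent}]")
    return a == max_concurrent


def system_constrained(devices: List[ContainerStates], max_concurrent: int) -> bool:
    """The edge computing system is resource-constrained when every edge device is."""
    return all(device_constrained(s, max_concurrent) for s in devices)


# Usage with M = 4, the value used in Section 5.1.
edge1 = {"sampling": 1, "analysis": 1, "control": 1, "forecast": 1, "alarm": 0}
edge2 = {"sampling": 1, "analysis": 0, "control": 0, "forecast": 1, "alarm": 0}
print(running_count(edge1), device_constrained(edge1, 4))  # 4 True
print(system_constrained([edge1, edge2], 4))               # False
```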

3.2. Task Model

Power tasks can be generated at any time and in any order. Each task in the set of power tasks is characterized by its arrival time, its deadline, its task type value, and its structure.

Power tasks can be divided into general tasks, alarm tasks, and fault handling tasks [16]. General tasks include load forecasting and electric vehicle charging management; alarm tasks include three-phase unbalance alarms and power theft alarms; fault handling tasks perform functions such as fault confirmation and fault location. The value ranges of the task type values of the three types are given in Section 4.1.2. The structure of a task consists of the microservices it invokes and the dependencies among them, as modeled below.

The completion of a power task depends on several microservices, and microservices with dependencies can be modeled by a directed acyclic graph (DAG) [13]. Each node of the graph represents one of the k microservices invoked by the task, and each directed edge represents a dependency between two microservices: a directed edge from one microservice to another indicates that the latter depends on the former. The former is called a predecessor microservice of the latter, and the latter a successor microservice of the former, so each microservice has a set of predecessor microservices and a set of successor microservices. Each directed edge carries a weight equal to the amount of data transferred between the two microservices it connects.
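As a minimal sketch of this DAG model, the Python below stores a hypothetical four-microservice task as weighted edges, derives the predecessor and successor sets, and uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+) to confirm that the graph is acyclic. The task, node names, and weights are illustrative only.

```python
from collections import defaultdict
from graphlib import TopologicalSorter
from typing import Dict, Set, Tuple

# Hypothetical task. An edge (u, v) with weight w means that microservice v
# depends on u and receives w MB of data from it.
edges: Dict[Tuple[str, str], float] = {
    ("v1", "v2"): 0.3, ("v1", "v3"): 0.2, ("v2", "v4"): 0.4, ("v3", "v4"): 0.1,
}

pred: Dict[str, Set[str]] = defaultdict(set)  # predecessor microservices
succ: Dict[str, Set[str]] = defaultdict(set)  # successor microservices
for u, v in edges:
    succ[u].add(v)
    pred[v].add(u)

nodes = sorted({n for edge in edges for n in edge})
# TopologicalSorter takes {node: predecessors} and raises CycleError if the
# graph has a cycle, so this also checks the acyclicity assumption.
order = list(TopologicalSorter({n: pred[n] for n in nodes}).static_order())

print(sorted(pred["v4"]), sorted(succ["v1"]))  # ['v2', 'v3'] ['v2', 'v3']
print(order[0], order[-1])                     # v1 v4
```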

A microservice without any predecessor microservice is called an entry microservice, and a microservice without any successor microservice is called an exit microservice; a task often contains several of each. During task processing, data from the terminals are uploaded to the edge device in the region, and when the task is processed on a cooperating edge device, the result data are sent back to the edge device in the region. To account for the delay that the transmission of input data and result data adds to task scheduling, and because both must reach the edge device in the region, a virtual entry microservice and a virtual exit microservice, which occupy no time or resources, are added. The virtual entry microservice passes the collected data to the actual entry microservices, and the virtual exit microservice receives the results when the task is completed.

The set of microservices invoked by the task thus includes these two virtual microservices. The sum of the weights of the edges from the virtual entry microservice to the actual entry microservices is the input data volume of the power task, and the sum of the weights of the edges from the actual exit microservices to the virtual exit microservice is its output data volume. Since the initial data of a power task are sent from the sensing layer terminals to the edge device and the processing result is also returned to the edge device, the virtual entry and exit microservices must be executed on that edge device and cannot be offloaded.
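The virtual entry and exit microservices can be attached mechanically. The sketch below assumes the hypothetical node names `v_in` and `v_out` and caller-supplied data volumes for the new edges; it adds the two virtual nodes to a task DAG and recovers the task's input and output data volumes as the edge-weight sums described above. Pinning the virtual nodes to the local edge device is left to the scheduler.

```python
from typing import Dict, List, Tuple

Edge = Tuple[str, str]


def add_virtual_nodes(nodes: List[str], edges: Dict[Edge, float],
                      in_mb: Dict[str, float], out_mb: Dict[str, float]):
    """Attach zero-cost virtual entry/exit microservices ("v_in", "v_out").

    in_mb / out_mb give the data volume (MB) of each new edge, keyed by the
    actual entry / exit microservice it connects to.
    """
    has_pred = {v for _, v in edges}
    has_succ = {u for u, _ in edges}
    entries = [n for n in nodes if n not in has_pred]  # actual entry microservices
    exits = [n for n in nodes if n not in has_succ]    # actual exit microservices
    new_edges = dict(edges)
    for n in entries:
        new_edges[("v_in", n)] = in_mb[n]
    for n in exits:
        new_edges[(n, "v_out")] = out_mb[n]
    return ["v_in", *nodes, "v_out"], new_edges, entries, exits


nodes = ["a", "b", "c", "d"]
edges = {("a", "c"): 0.2, ("b", "c"): 0.3, ("c", "d"): 0.1}
all_nodes, all_edges, entries, exits = add_virtual_nodes(
    nodes, edges, in_mb={"a": 0.6, "b": 0.8}, out_mb={"d": 0.2})

# Input / output data volume of the task = sum of the virtual edge weights.
input_mb = sum(w for (u, _), w in all_edges.items() if u == "v_in")
output_mb = sum(w for (_, v), w in all_edges.items() if v == "v_out")
print(entries, exits)                           # ['a', 'b'] ['d']
print(round(input_mb, 2), round(output_mb, 2))  # 1.4 0.2
```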

3.3. Mapping of Microservices to Containers

Each microservice is handled by a corresponding container. The mapping between microservices and containers is a function from the set of all microservices to the set of all containers: for any microservice v and container c, mapping v to c means that v will be processed by container c.

Assuming that both the cloud and the edge hold images of all containers, there are three cases when a microservice v is processed by its corresponding container. (1) The container corresponding to v is running (container state 1). Since each container can process only one of the microservices it is responsible for at a time, when many microservices of the same kind arrive, v must enter the container's waiting queue and wait for execution. (2) The container corresponding to v is not running and the edge device is not resource-constrained (container state 0 and A < M). Ignoring container initialization and configuration time, v can be executed immediately. (3) The container corresponding to v is not running and the edge device is resource-constrained (container state 0 and A = M). Microservice v must wait until another container becomes idle and releases edge device resources before it can be executed. Since cloud resources are assumed to be sufficient, only the first two cases arise in the cloud, whereas all three must be considered at the edge devices.
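The three cases can be expressed as a small classification function. This is a sketch under the paper's stated assumptions (container states 0/1, a per-device limit M, sufficient cloud resources); the `Case` names and the `is_cloud` flag are hypothetical.

```python
from enum import Enum


class Case(Enum):
    QUEUE = 1         # container running: v joins the container's waiting queue
    START_NOW = 2     # container idle and device not constrained (A < M)
    WAIT_RELEASE = 3  # container idle and device constrained (A == M)


def classify(container_state: int, running: int, max_concurrent: int,
             is_cloud: bool = False) -> Case:
    """Which of the three cases applies to microservice v on a device."""
    if container_state == 1:
        return Case.QUEUE
    # Cloud resources are assumed sufficient, so case 3 never occurs there.
    if is_cloud or running < max_concurrent:
        return Case.START_NOW
    return Case.WAIT_RELEASE


print(classify(1, 4, 4).name)                 # QUEUE
print(classify(0, 3, 4).name)                 # START_NOW
print(classify(0, 4, 4).name)                 # WAIT_RELEASE
print(classify(0, 9, 9, is_cloud=True).name)  # START_NOW
```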

3.4. Cloud-Edge Scheduling Mechanism for Power Tasks under Microservice Architecture

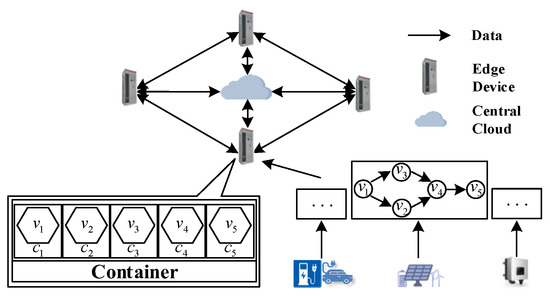

The system consists of a central cloud and several edge devices, N devices in total with the central cloud counted as one of them. Each edge device is responsible for processing the tasks of one or several distribution station areas, and the central cloud manages all devices. After a power task request is generated, it is submitted to the edge device in its region to wait for processing. Task information, such as the microservices invoked, the amount of input data, and the deadline, is unknown until the task arrives.

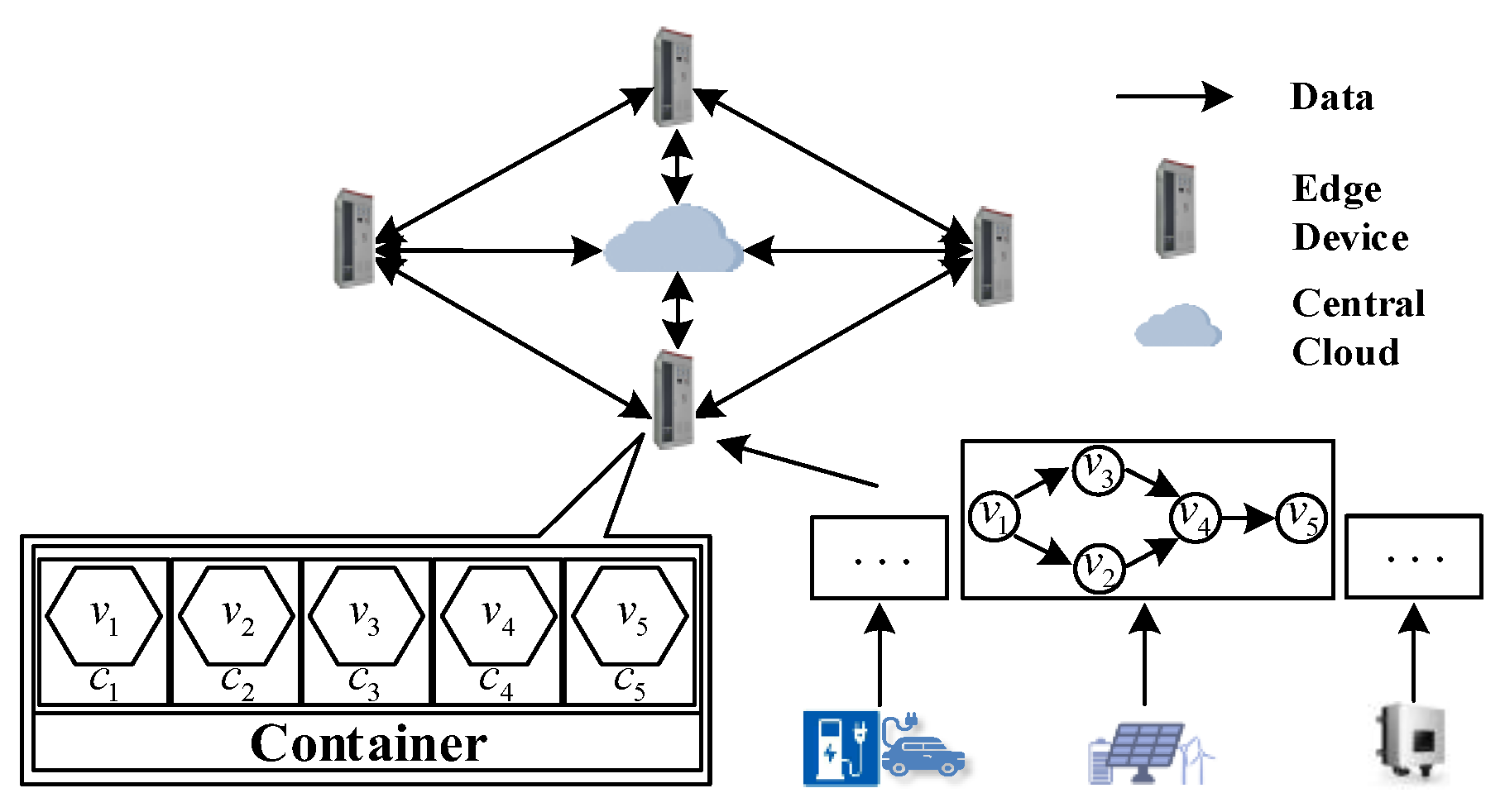

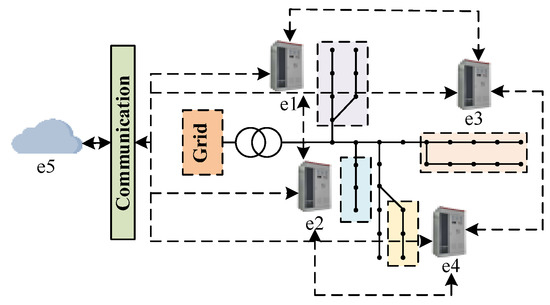

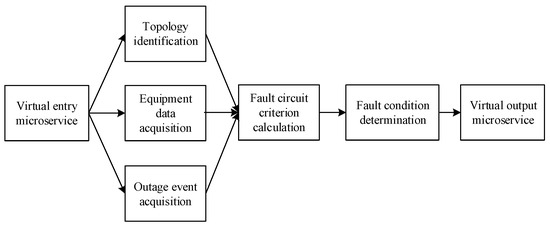

Consider the i-th microservice invoked by a task. Its processing time at each device can be estimated from historical information [17]. Because microservices are independent of each other, an edge device can offload some or all of the microservices to the cloud or to other edge devices according to its resources and the needs of the task, and the devices collaborate to perform tasks by exchanging communication and computing resources. Data are transferred between each pair of devices at a given transfer rate; when two microservices run on the same device, the transmission time of the data between them is ignored. The completion of the virtual exit microservice marks the completion of the whole task. The cloud-edge scheduling mechanism for power tasks under the microservice architecture is shown in Figure 1.
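A transmission-time helper makes the data transfer model concrete. The sketch assumes that the transmission time of an edge is its data volume divided by the transfer rate between the two devices, that rates are symmetric, and that index 0 denotes the central cloud; the rate values are hypothetical, chosen so that cloud-edge transfers take 20 times longer than edge-edge transfers, as in Section 5.1.

```python
from typing import Dict, Tuple

CLOUD = 0  # hypothetical index of the central cloud; other indices are edge devices

# Hypothetical symmetric rates in MB/s: cloud-edge links are 20x slower.
RATE: Dict[Tuple[int, int], float] = {(0, 1): 0.5, (0, 2): 0.5, (1, 2): 10.0}


def transfer_time(data_mb: float, src: int, dst: int,
                  rate: Dict[Tuple[int, int], float]) -> float:
    """Seconds needed to move data_mb from device src to device dst."""
    if src == dst:
        return 0.0  # transmission inside one device is ignored
    r = rate.get((src, dst), rate.get((dst, src)))
    if r is None:
        raise KeyError(f"no transfer rate between devices {src} and {dst}")
    return data_mb / r


print(transfer_time(1.0, 1, 2, RATE))  # 0.1
print(transfer_time(1.0, 0, 1, RATE))  # 2.0
print(transfer_time(1.0, 2, 2, RATE))  # 0.0
```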

Figure 1.

Cloud-edge scheduling mechanism for power tasks under microservice architecture.

4. Cloud-Edge Collaborative Online Scheduling Algorithm

The diverse, dynamic, and differentiated characteristics of tasks and the limited, fluctuating resources of edge devices are the main difficulties in task scheduling. Because scheduling must happen in real time, the scheduling algorithm cannot be too complex. Based on the above analysis of scenario requirements and the task scheduling idea of Reference [17], we propose the Cloud-Edge Collaborative Online Scheduling Algorithm (CECA) for station area tasks. CECA is based on a scheduling list and consists of two main parts: a prioritization strategy and a task assignment strategy.

4.1. Prioritization Strategy

4.1.1. Prioritization of Microservices

A power task consists of a series of microservices, and prioritizing microservices is crucial for task scheduling [18]. When microservices depend on each other, their execution order cannot be swapped; for example, sampling microservices must be executed before the analysis and control microservices that follow them. In addition, the execution order of parallel microservices affects the total delay of task processing [19]. In this paper, the priority of each microservice in a power task is calculated with Equation (1).

Equation (1) uses the average processing time of each microservice, which Equation (2) defines as the sum of its processing times on all devices divided by the number of devices:

$$\bar{w}_i = \frac{1}{N}\sum_{n=1}^{N} w_{i,n} \qquad (2)$$

where $w_{i,n}$ is the processing time of the i-th microservice on device n and N is the total number of devices, including the central cloud. The processing time at the central cloud is set to 0.8 times that at the edge devices. Under Equation (1), every microservice has a lower scheduling priority than each of its predecessor microservices, and among parallel microservices, those with a higher communication cost are scheduled first.
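The text does not reproduce Equation (1), but its stated properties (each microservice ranks below its predecessors, and costly communication paths are scheduled first) match the upward rank used in HEFT-style list scheduling. The sketch below assumes that form, rank(v) = avg_time(v) + max over successors s of (comm(v, s) + rank(s)), together with Equation (2) for four identical edge devices and a cloud running at 0.8 times the edge processing time. The task, processing times, and communication costs are hypothetical.

```python
from functools import lru_cache
from typing import Dict, List, Tuple

# Hypothetical task: processing time (s) of each microservice on an edge device.
edge_time: Dict[str, float] = {"v_in": 0.0, "a": 0.6, "b": 0.7, "c": 0.4, "v_out": 0.0}
succ: Dict[str, List[str]] = {"v_in": ["a", "b"], "a": ["c"], "b": ["c"],
                              "c": ["v_out"], "v_out": []}
# Hypothetical average communication time (s) on each edge.
comm: Dict[Tuple[str, str], float] = {("v_in", "a"): 0.2, ("v_in", "b"): 0.1,
                                      ("a", "c"): 0.3, ("b", "c"): 0.05,
                                      ("c", "v_out"): 0.1}
N_EDGE, N_CLOUD = 4, 1  # four edge devices and one cloud (Section 5.1)
CLOUD_FACTOR = 0.8      # cloud processing time = 0.8 x edge processing time


def avg_time(v: str) -> float:
    """Equation (2): mean processing time of v over all N devices."""
    total = N_EDGE * edge_time[v] + N_CLOUD * CLOUD_FACTOR * edge_time[v]
    return total / (N_EDGE + N_CLOUD)


@lru_cache(maxsize=None)
def rank(v: str) -> float:
    """Assumed form of Equation (1): HEFT-style upward rank."""
    tail = max((comm[(v, s)] + rank(s) for s in succ[v]), default=0.0)
    return avg_time(v) + tail


order = sorted(edge_time, key=rank, reverse=True)
print(order)                # ['v_in', 'a', 'b', 'c', 'v_out']
print(round(rank("a"), 3))  # 1.36
```

Here `a` outranks its parallel sibling `b` even though `b` takes longer to process, because the heavier transfer on the edge from `a` to `c` gives `a` the longer path to the exit.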

4.1.2. Prioritization of Tasks

The previous section defines the priority relationships among the microservices of a single DAG, but the priority relationships among multiple power tasks must also be addressed. When the edge computing system is resource-constrained, important tasks should be given priority.

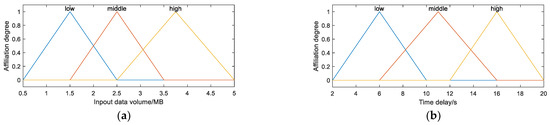

Fuzzy logic has low computational complexity and can work with uncertain information [20], so it is used to prioritize tasks. The steps are as follows.

- (1)

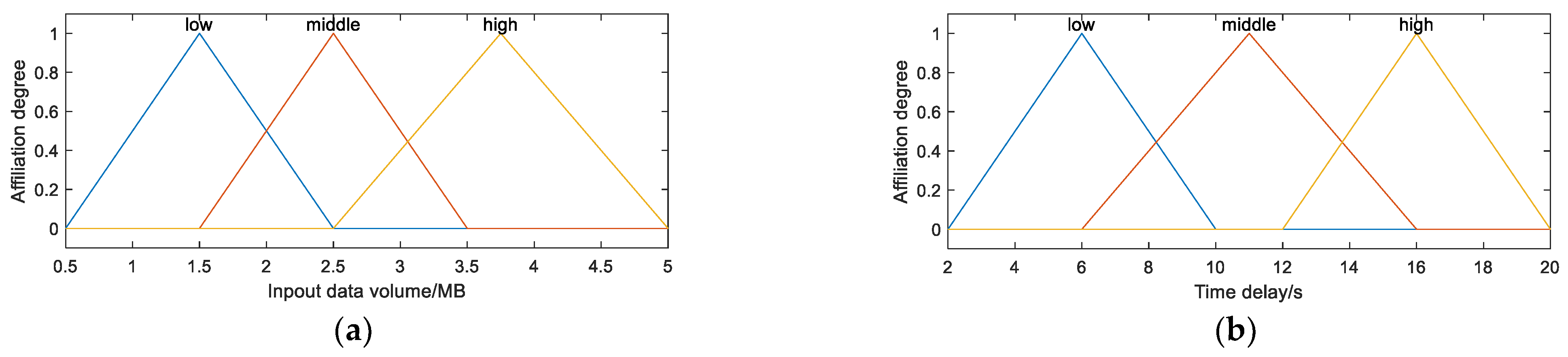

- Fuzzification. We use the triangular affiliation function with high computational efficiency, which is calculated as follows.x is an input or output variable, a, b, c are given real numbers, and . We select three variables that have a significant impact on task execution performance as inputs: time delay, task type value, and input data volume. For time delay and input data volume, low, medium, and high are used as linguistic variables. For task type values, general, alarm, and fault are used as linguistic variables. The output is task priority and very low, low, medium, high, and very high are used as linguistic variables. The affiliation functions of the input and output variables are shown in Figure 2.

Figure 2. Diagram of affiliation degree. (a) Input data volume affiliation function. (b) Time delay affiliation function. (c) Task type value affiliation function. (d) Task priority affiliation function.

Figure 2. Diagram of affiliation degree. (a) Input data volume affiliation function. (b) Time delay affiliation function. (c) Task type value affiliation function. (d) Task priority affiliation function. - (2)

- Fuzzy inference. Inference is performed using fuzzy rules, and the result of the inference is a fuzzy variable for defuzzification. A fuzzy rule is a simple if-then rule with a condition and a conclusion. Since there are 3 affiliation functions and 3 linguistic variables for each affiliation function, there are 27 fuzzy rules. The rules are shown in Table A1.

- (3)

- Deblurring. The center-of-mass method is used to perform the deblurring calculation with the following formula.in the formula is the priority of each task.
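The three fuzzy steps can be sketched end to end as a Mamdani-style system (min for rule firing, max for aggregation, centroid for defuzzification). Everything numeric here is an assumption: the three inputs are normalized to [0, 1], the breakpoints are illustrative (the output terms are placed so that high and very high intersect at 0.8, matching the threshold used in Section 4.3.2), and `rule_output` is a hypothetical stand-in for the 27 rules of Table A1, which are not reproduced here. A small time delay is treated as urgent.

```python
from itertools import product
from typing import List, Tuple

Tri = Tuple[float, float, float]


def tri(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership function, Equation (3), assuming a < b < c."""
    return max(min((x - a) / (b - a), (c - x) / (c - b)), 0.0)


# Hypothetical low / medium / high terms for inputs normalized to [0, 1].
IN_TERMS: List[Tri] = [(-0.5, 0.0, 0.5), (0.0, 0.5, 1.0), (0.5, 1.0, 1.5)]
# Hypothetical very low .. very high output terms; high and very high meet at 0.8.
OUT_TERMS: List[Tri] = [(-0.1, 0.1, 0.3), (0.1, 0.3, 0.5), (0.3, 0.5, 0.7),
                        (0.5, 0.7, 0.9), (0.7, 0.9, 1.1)]
# Hypothetical stand-in for Table A1: a score in 0..6 mapped to an output term.
OUT_MAP = [0, 1, 1, 2, 3, 3, 4]


def rule_output(delay_i: int, type_i: int, data_i: int) -> int:
    """Shorter delay, more severe type, and more input data raise priority."""
    return OUT_MAP[(2 - delay_i) + type_i + data_i]


def task_priority(delay: float, type_value: float, data: float,
                  steps: int = 1000) -> float:
    # Step 1: fuzzification of the three normalized inputs.
    mu_in = [[tri(x, *t) for t in IN_TERMS] for x in (delay, type_value, data)]
    # Step 2: inference over all 3**3 = 27 rules (min for AND, max per output term).
    strength = [0.0] * len(OUT_TERMS)
    for d, t, s in product(range(3), repeat=3):
        k = rule_output(d, t, s)
        strength[k] = max(strength[k], min(mu_in[0][d], mu_in[1][t], mu_in[2][s]))
    # Step 3: centroid defuzzification, Equation (4), sampled on [0, 1].
    num = den = 0.0
    for i in range(steps + 1):
        x = i / steps
        mu = max(min(w, tri(x, *term)) for w, term in zip(strength, OUT_TERMS))
        num += x * mu
        den += mu
    if den == 0.0:
        raise ValueError("no rule fired; check that inputs lie in [0, 1]")
    return num / den


print(task_priority(0.0, 1.0, 1.0) > 0.8)  # True: urgent fault task, large input
print(task_priority(1.0, 0.0, 0.0) < 0.2)  # True: relaxed general task
```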

4.2. Task Allocation Strategy

Based on the three cases of microservice processing in containers analyzed in Section 3.3, the waiting time of a microservice assigned to a device for execution is calculated by Equation (5).

Equation (5) involves the earliest end time of a microservice at the device, the queue-ending microservice (the last microservice in the waiting queue of the corresponding container on that device), and the set of queue-ending microservices of the waiting queues of all containers on the device.
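Because Equation (5) itself is not reproduced, the following is only a plausible reading of it based on the three container cases: in case 1 the microservice waits for the queue-ending microservice of its own container, in case 2 it does not wait, and in case 3 it waits until the first container on the device drains its queue and frees resources. The data layout (a dictionary from running containers to queue-ending end times) is hypothetical.

```python
from typing import Dict, Optional


def waiting_time(container: str, queue_end: Dict[str, float], now: float,
                 max_concurrent: Optional[int]) -> float:
    """Hypothetical reading of Equation (5).

    queue_end maps each running container on the device to the earliest end
    time of the queue-ending microservice in its waiting queue.
    max_concurrent is M for an edge device, or None for the cloud (unlimited).
    """
    if container in queue_end:  # case 1: queue behind the container's last job
        return max(0.0, queue_end[container] - now)
    if max_concurrent is None or len(queue_end) < max_concurrent:
        return 0.0  # case 2: a free slot exists, start immediately
    # Case 3: wait until the first container drains its queue and frees resources.
    return max(0.0, min(queue_end.values()) - now)


edge = {"sampling": 3.0, "analysis": 5.5, "control": 4.0, "forecast": 7.0}
print(waiting_time("analysis", edge, now=2.0, max_concurrent=4))  # 3.5
print(waiting_time("alarm", edge, now=2.0, max_concurrent=4))     # 1.0
print(waiting_time("alarm", edge, now=2.0, max_concurrent=5))     # 0.0
print(waiting_time("alarm", edge, now=2.0, max_concurrent=None))  # 0.0
```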

There are two constraints in task scheduling: precedence and capability. The precedence constraint means that a microservice cannot start executing before the data from all of its predecessor microservices have been transferred to it. The capability constraint means that a microservice must wait on a device (the waiting time of Equation (5)) until the device is able to execute it.

The precedence constraint involves the earliest execution time of the microservice at a given device and the devices to which its predecessor microservices are assigned. The minimum value of this earliest execution time is obtained by traversing each edge device and the cloud.

The earliest execution time of a microservice on a device is the earliest time that satisfies both constraints, and its ending time is obtained by adding its processing time on that device.

Each microservice is assigned to the device on which it can be executed earliest, according to Equation (10).
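The two constraints combine into an earliest-execution-time computation and a greedy device choice. The sketch assumes that the precedence constraint takes the latest data-arrival time over all predecessors (end time plus data volume divided by transfer rate, zero on the same device), that the capability constraint is the time at which the device can run the microservice after its waiting time, and that Equation (10) picks the device with the smallest earliest execution time, as the text states. Device indices, rates, and times are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Pred = Tuple[int, float, float]  # (device it ran on, its end time, data sent in MB)
Rate = Callable[[int, int], float]


def earliest_start(dev: int, preds: List[Pred], ready: float, rate: Rate) -> float:
    """Earliest execution time on dev under both constraints (assumed form)."""
    # Precedence: data from every predecessor must have reached dev.
    data_ready = max((end + (0.0 if src == dev else mb / rate(src, dev))
                      for src, end, mb in preds), default=0.0)
    # Capability: dev must be able to run it (current time plus waiting time).
    return max(data_ready, ready)


def assign(devices: List[int], preds: List[Pred], ready: Dict[int, float],
           proc: Dict[int, float], rate: Rate) -> Tuple[int, float, float]:
    """Choose the device with the smallest earliest execution time."""
    best = min(devices, key=lambda d: earliest_start(d, preds, ready[d], rate))
    start = earliest_start(best, preds, ready[best], rate)
    return best, start, start + proc[best]  # ending time = start + processing time


def rate(a: int, b: int) -> float:
    return 0.5 if 0 in (a, b) else 10.0  # device 0 is the cloud (hypothetical)


preds = [(1, 2.0, 0.4), (2, 2.5, 0.2)]
print(assign([0, 1, 2], preds, ready={0: 0.0, 1: 4.0, 2: 2.5},
             proc={0: 0.8, 1: 1.0, 2: 1.0}, rate=rate))  # (2, 2.5, 3.5)
```

In this example the cloud is idle but the slow cloud-edge link delays its data until 2.9 s, and device 1 holds the data locally but cannot start before 4.0 s, so device 2 wins at 2.5 s.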

4.3. Scheduling Algorithms

The proposed algorithm is based on a scheduling list and greedily assigns each microservice to the device on which it can be executed earliest. Building on the task scheduling strategy of Reference [18], it calculates the priorities of different power tasks with fuzzy logic, distinguishes the cases of microservice processing in containers, and establishes the corresponding mathematical model. When determining the microservice scheduling order, tasks are scheduled differently depending on whether resources are constrained.

4.3.1. Scheduling List

A multi-task scheduling list D is created. When a task arrives, its task priority is calculated with fuzzy logic and the priority of each of its microservices is calculated with Equation (1); the microservices are arranged by priority into a scheduling list for the task, which is immediately added to D.
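The scheduling list D can be represented as a dictionary of per-task queues. In this sketch, which assumes microservice priorities have already been computed with Equation (1), each arriving task's microservices are sorted by descending priority; with HEFT-style ranks, that order also respects dependencies. The `TaskList` type, its fields, and the example values are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict


@dataclass
class TaskList:
    priority: float  # fuzzy task priority (Section 4.1.2)
    deadline: float
    queue: Deque[str] = field(default_factory=deque)  # highest priority first


D: Dict[str, TaskList] = {}  # the multi-task scheduling list


def on_arrival(task_id: str, priority: float, deadline: float,
               ranks: Dict[str, float]) -> None:
    """Build the task's scheduling list and add it to D immediately."""
    ordered = sorted(ranks, key=lambda m: ranks[m], reverse=True)
    D[task_id] = TaskList(priority, deadline, deque(ordered))


on_arrival("t1", 0.62, 12.0,
           {"v_in": 1.56, "a": 1.36, "b": 1.206, "c": 0.484, "v_out": 0.0})
print(list(D["t1"].queue))  # ['v_in', 'a', 'b', 'c', 'v_out']
D["t1"].queue.popleft()     # an assigned microservice leaves D
```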

4.3.2. Microservice Scheduling Order

When the resources of the edge computing system are not constrained, the earliest execution time of the microservice at the head of each scheduling list in D is calculated with Equation (8). The microservice with the earliest arrival time and the smallest execution time is then selected and assigned according to Equation (10).

When the edge computing system is resource-constrained, important tasks such as fault handling may fail to receive priority scheduling because of their large input data volume and long waiting time. Such tasks may then miss their deadlines, which endangers the security of the power system. Therefore, important tasks should be dispatched first when resources are constrained, giving them priority in the use of resources.

This strategy reduces the processing time of important tasks but prolongs the completion time of other tasks. When the edge computing system is resource-constrained and every task in D has a relatively low priority, always serving the highest-priority task first would significantly prolong the processing of all the other tasks. To avoid this, a threshold is taken from the task priority membership function: the curves for high and very high priority intersect at an abscissa of 0.8. Let the scheduling list with the highest priority in D be selected; only when its priority exceeds 0.8 is its first microservice assigned to the target device according to Equation (10). If several tasks share the same priority, one of them is selected at random.
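The resource-constrained selection rule can be sketched as follows, assuming task priorities are stored in a dictionary keyed by task ID. The function returns the task whose first microservice should be dispatched, or `None` when no task exceeds 0.8; the text does not spell out what the scheduler does in that case, so falling back to the unconstrained rule is our assumption. The function name is hypothetical.

```python
import random
from typing import Dict, Optional

THRESHOLD = 0.8  # abscissa where the "high" and "very high" curves intersect


def pick_urgent_task(priorities: Dict[str, float],
                     rng: Optional[random.Random] = None) -> Optional[str]:
    """Task whose first microservice is dispatched first, or None."""
    if not priorities:
        return None
    top = max(priorities.values())
    if top <= THRESHOLD:
        return None  # no task is urgent enough to jump ahead
    tied = sorted(t for t, p in priorities.items() if p == top)
    chooser = rng if rng is not None else random
    return chooser.choice(tied)  # random pick among equal priorities


print(pick_urgent_task({"t1": 0.6, "t2": 0.75}))  # None
print(pick_urgent_task({"t1": 0.85, "t2": 0.9}))  # t2
```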

To improve security during task scheduling, a microservice's information is removed from D once it is assigned for execution, and a task's scheduling list is removed from D once all of its microservices have been assigned and completed. Equation (8) gives the earliest execution time of the task's virtual exit microservice; if this time is earlier than the task deadline, the task is completed; otherwise, it is not.
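The completion test reduces to one comparison per task. The sketch assumes that each task's deadline is its arrival time plus its delay requirement and uses the earliest execution time of the zero-duration virtual exit microservice as the task's finish time; the input tuples are hypothetical.

```python
from typing import Dict, Tuple


def completion(results: Dict[str, Tuple[float, float, float]]) -> Tuple[Dict[str, bool], float]:
    """results: task id -> (arrival time, delay requirement, exit start time).

    Assumes deadline = arrival + delay requirement. The virtual exit microservice
    takes no time, so its earliest execution time is the task's finish time.
    """
    done = {t: exit_t < arrival + delay
            for t, (arrival, delay, exit_t) in results.items()}
    rate = sum(done.values()) / len(done) if done else 0.0
    return done, rate


done, rate = completion({"t1": (0.0, 3.2, 2.9), "t2": (1.0, 3.2, 4.5),
                         "t3": (2.0, 5.0, 6.0), "t4": (0.5, 2.0, 3.0)})
print(done)  # {'t1': True, 't2': False, 't3': True, 't4': False}
print(rate)  # 0.5
```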

5. Simulation Verification

5.1. Test Systems

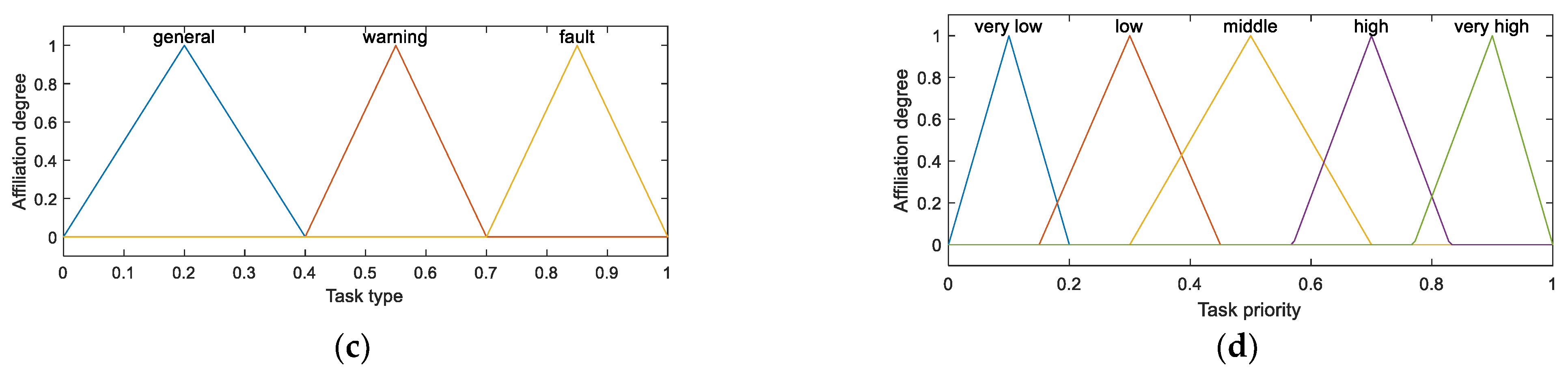

To verify its performance, the proposed algorithm is implemented and tested in Python. The test system is a modified IEEE 33-node system [13], shown in Figure 3.

Figure 3.

An Improved IEEE 33 System.

A total of four edge devices and one central cloud are deployed in the power distribution station area. Each power task invokes 5 to 9 microservices. The amount of data processed by each microservice ranges from 0.1 to 1.2 MB, the amount of data transferred between the virtual entry microservice and each entry microservice ranges from 0.5 to 1 MB, the amount of data transferred between the remaining dependent microservices ranges from 0.1 to 0.5 MB, and the delay requirement of each task ranges from 2 to 20 s [21]. Each edge device supports four concurrent containers. The central cloud can run all service containers concurrently, so its limit is set to nine. Because data transmission between the cloud and the edge incurs a long delay, the communication time between an edge device and the cloud is set to 20 times the communication time between edge devices [17].
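To reproduce a workload with the ranges listed above, a random task generator might look like the sketch below. The parameter ranges come from this section; the DAG shape (each forward pair of microservices linked with probability `edge_prob`), the arrival window, the data range on edges into the virtual exit (assumed to follow the 0.1 to 0.5 MB range), and the seed are hypothetical choices.

```python
import random
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Task:
    arrival: float
    delay: float                         # delay requirement (s)
    work_mb: Dict[str, float]            # data processed per microservice
    edges: Dict[Tuple[str, str], float]  # data transferred per edge (MB)


def random_task(rng: random.Random, arrival: float, edge_prob: float = 0.3) -> Task:
    k = rng.randint(5, 9)                  # microservices per task
    names = [f"m{i}" for i in range(k)]
    work = {n: rng.uniform(0.1, 1.2) for n in names}
    edges: Dict[Tuple[str, str], float] = {}
    for i in range(k):                     # forward edges only, so the graph is a DAG
        for j in range(i + 1, k):
            if rng.random() < edge_prob:
                edges[(names[i], names[j])] = rng.uniform(0.1, 0.5)
    has_pred = {v for _, v in edges}
    has_succ = {u for u, _ in edges}
    for n in names:
        if n not in has_pred:              # actual entry microservice
            edges[("v_in", n)] = rng.uniform(0.5, 1.0)
        if n not in has_succ:              # actual exit microservice (assumed range)
            edges[(n, "v_out")] = rng.uniform(0.1, 0.5)
    return Task(arrival, rng.uniform(2.0, 20.0), work, edges)


rng = random.Random(2024)
tasks = [random_task(rng, arrival=rng.uniform(0.0, 100.0)) for _ in range(200)]
print(len(tasks))  # 200
```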

5.2. Comparison Algorithm

The proposed algorithm is compared with the following three scheduling algorithms.

- Only Edge Device Algorithm (OEDA): all tasks are processed at the initial edge device where they arrive, and no offloading is performed [17].

- Only Cloud Algorithm (OCA): All microservices are offloaded to the cloud for execution, except for virtual entry and exit microservices [22].

- First Come First Served Algorithm (FCFS): tasks are queued and scheduled in order of arrival. Once a task is started, its microservices are processed one after another until the task is completed, and no other task is scheduled in the meantime [18].
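The baselines differ mainly in where microservices may run and in how tasks are ordered. The sketch below encodes the placement rule of CECA, OEDA, and OCA, plus the FCFS task ordering; the cloud index 0 and the function names are hypothetical, and since the text does not specify where FCFS places microservices, that rule is left out.

```python
from typing import Dict, List

CLOUD = 0  # hypothetical index of the central cloud
VIRTUAL = ("v_in", "v_out")


def candidate_devices(algorithm: str, microservice: str, local: int,
                      devices: List[int]) -> List[int]:
    """Devices on which each strategy may place a microservice."""
    if microservice in VIRTUAL:
        return [local]        # virtual entry/exit always stay on the local edge
    if algorithm == "OEDA":
        return [local]        # no offloading at all
    if algorithm == "OCA":
        return [CLOUD]        # everything else is offloaded to the cloud
    if algorithm == "CECA":
        return list(devices)  # any edge device or the cloud
    raise ValueError(f"unsupported algorithm: {algorithm}")


def fcfs_order(arrivals: Dict[str, float]) -> List[str]:
    """FCFS serves whole tasks one at a time in order of arrival."""
    return sorted(arrivals, key=lambda t: arrivals[t])


print(candidate_devices("OCA", "m3", local=2, devices=[0, 1, 2, 3, 4]))  # [0]
print(fcfs_order({"t1": 3.0, "t2": 1.5, "t3": 2.0}))                    # ['t2', 't3', 't1']
```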

5.3. Test Results and Analysis

To test the performance of the algorithm under different parameter settings, 200 tasks are randomly generated and scheduled with the proposed algorithm and with each of the three comparison algorithms.

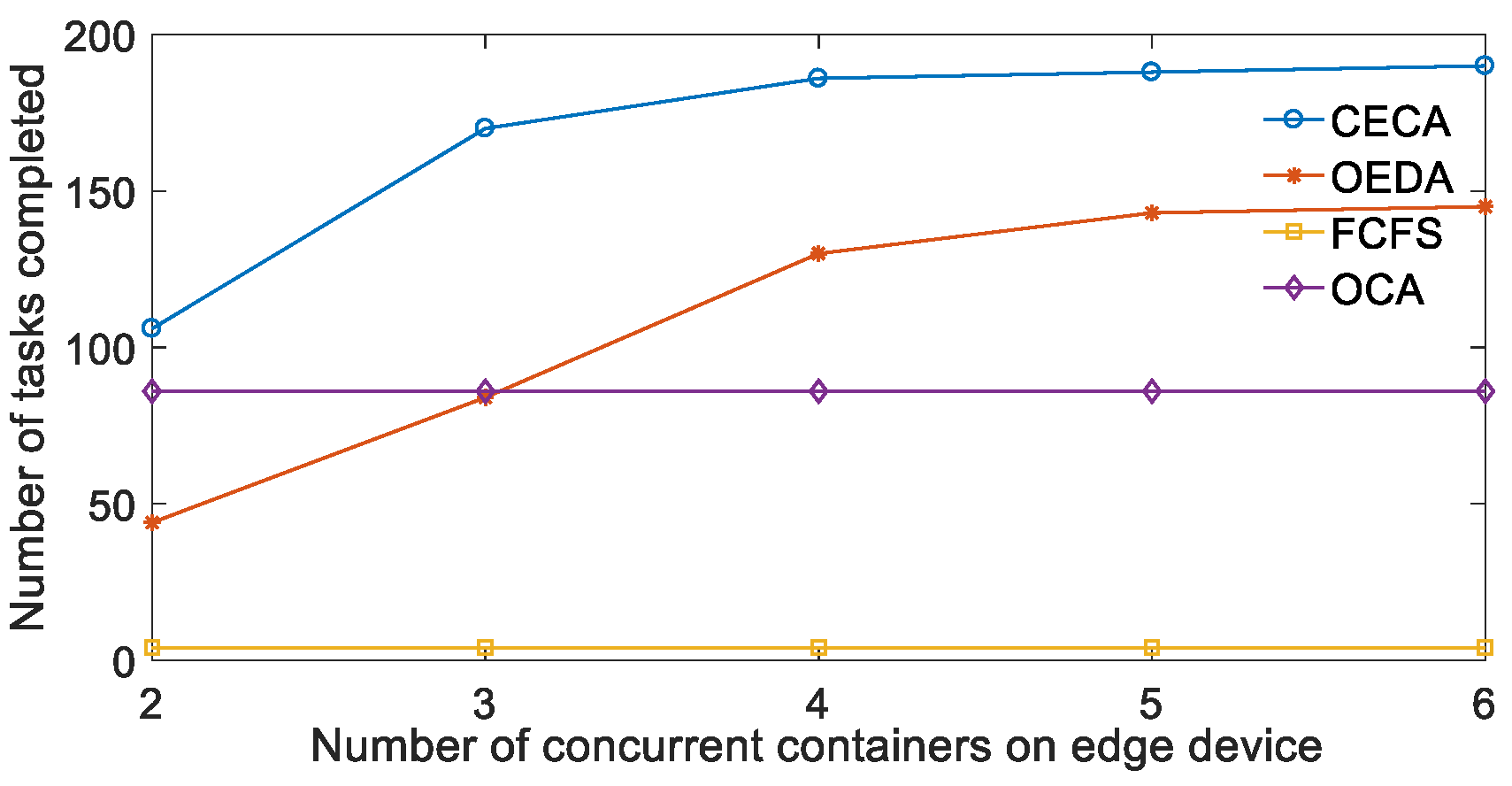

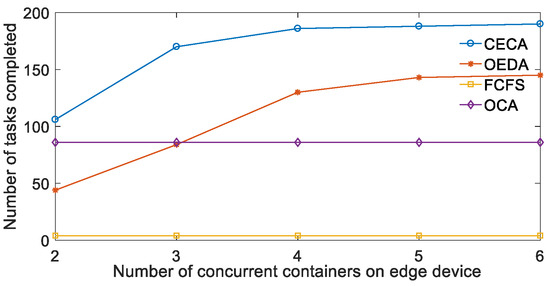

Figure 4 compares the number of tasks completed by the four algorithms for different numbers of concurrent containers supported by the edge devices, set to 2, 3, 4, 5, and 6.

Figure 4.

The impact of the number of concurrent containers of the edge device on task completion.

In Figure 4, the number of tasks completed by both CECA and OEDA increases as the number of concurrent containers supported by the edge devices increases. OCA completes the same number of tasks in every case because it is not affected by the edge devices. FCFS schedules the microservices of one task at a time, so it occupies only one container and does not involve concurrent container operation. Under the same settings, CECA can handle more tasks simultaneously.

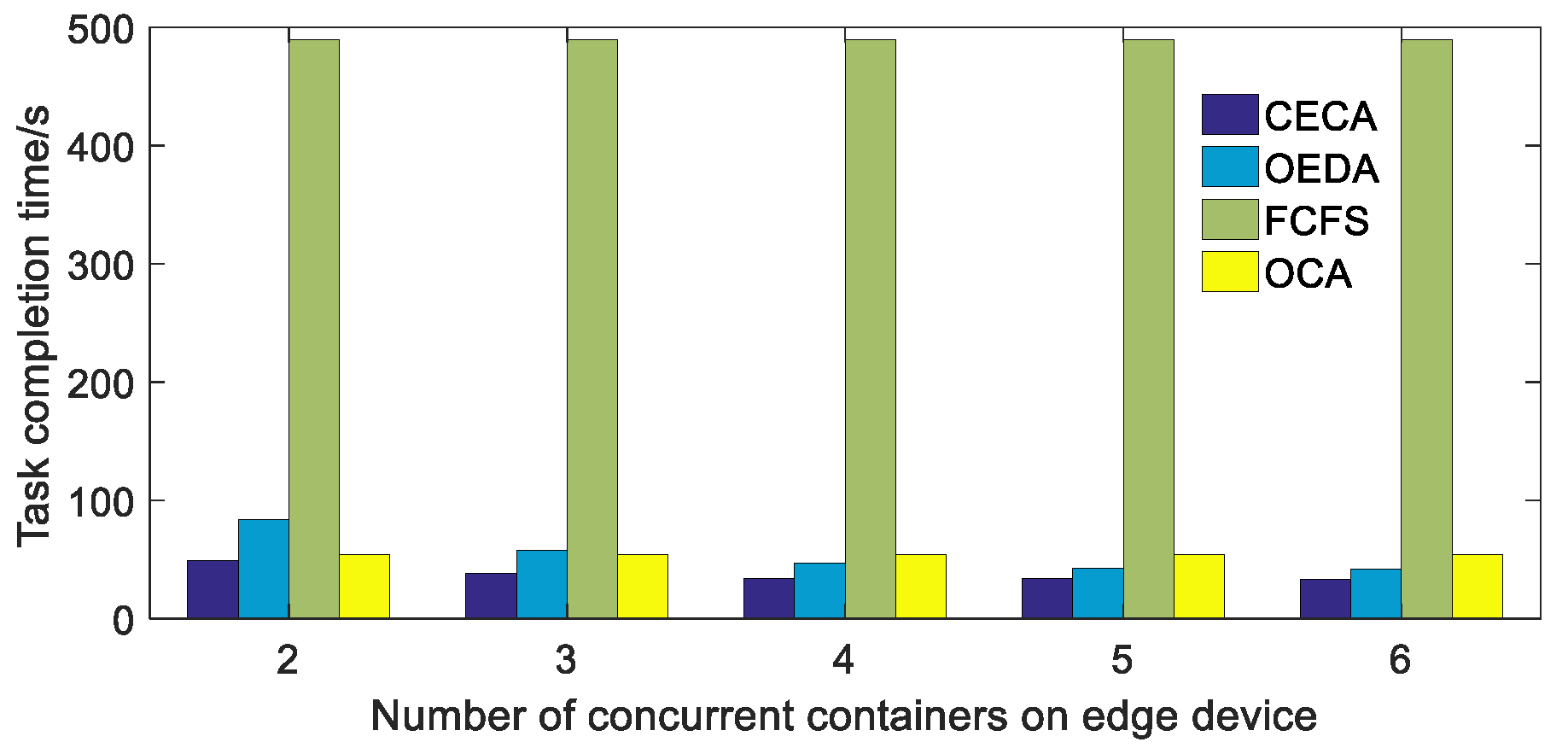

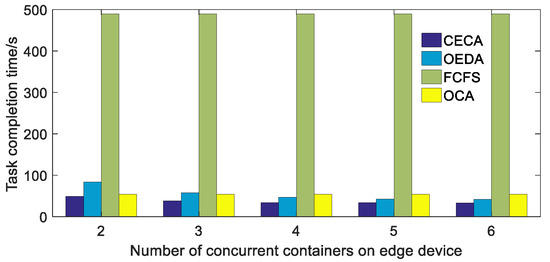

Figure 5 compares the task completion times of the four algorithms with different numbers of concurrent containers supported by the edge devices. The number of concurrent containers supported by the edge devices is set to 2, 3, 4, 5, and 6, respectively.

Figure 5.

The effect of the number of concurrent containers in the edge device on the task completion time.

In Figure 5, the task completion times of both CECA and OEDA decrease as the number of concurrent containers supported by the edge devices increases, while OCA and FCFS are unaffected. Under the same settings, CECA has the shortest task completion time. Together with the results in Figure 4, this shows that CECA completes more tasks in less time and thus has higher task processing efficiency.

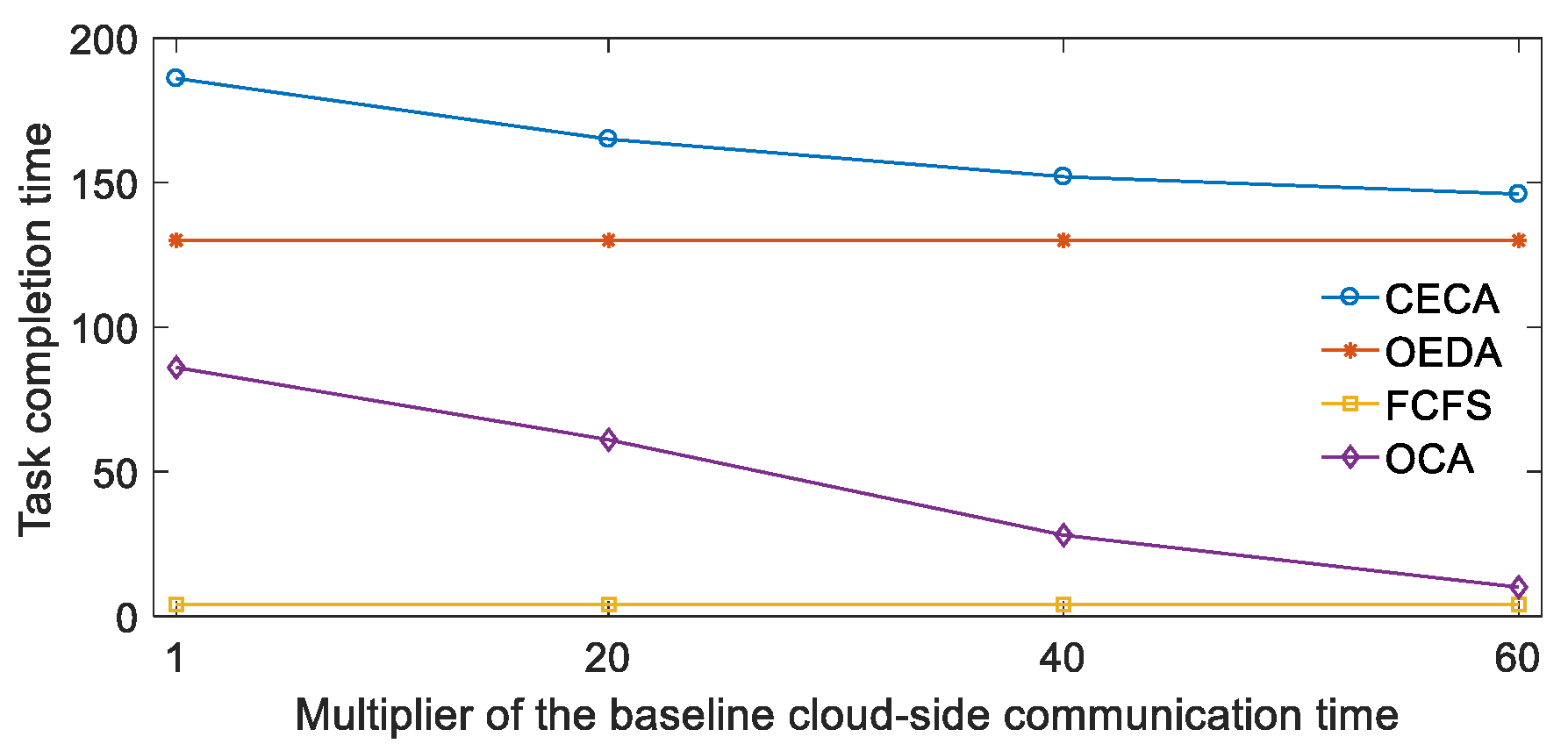

Figure 6 compares the number of tasks completed by the four algorithms under different cloud-edge communication times, set to 1×, 20×, 40×, and 60× the default value.

Figure 6.

Impact of cloud-edge communication time on the number of tasks completed by different algorithms.

In Figure 6, the number of tasks completed by both CECA and OCA decreases as the cloud-edge communication time increases, but the number completed by CECA decreases slowly while that of OCA drops sharply, indicating that CECA is less sensitive to changes in cloud-edge communication resources. Even when cloud-edge communication is poor, CECA processes more tasks in time through inter-device collaboration.

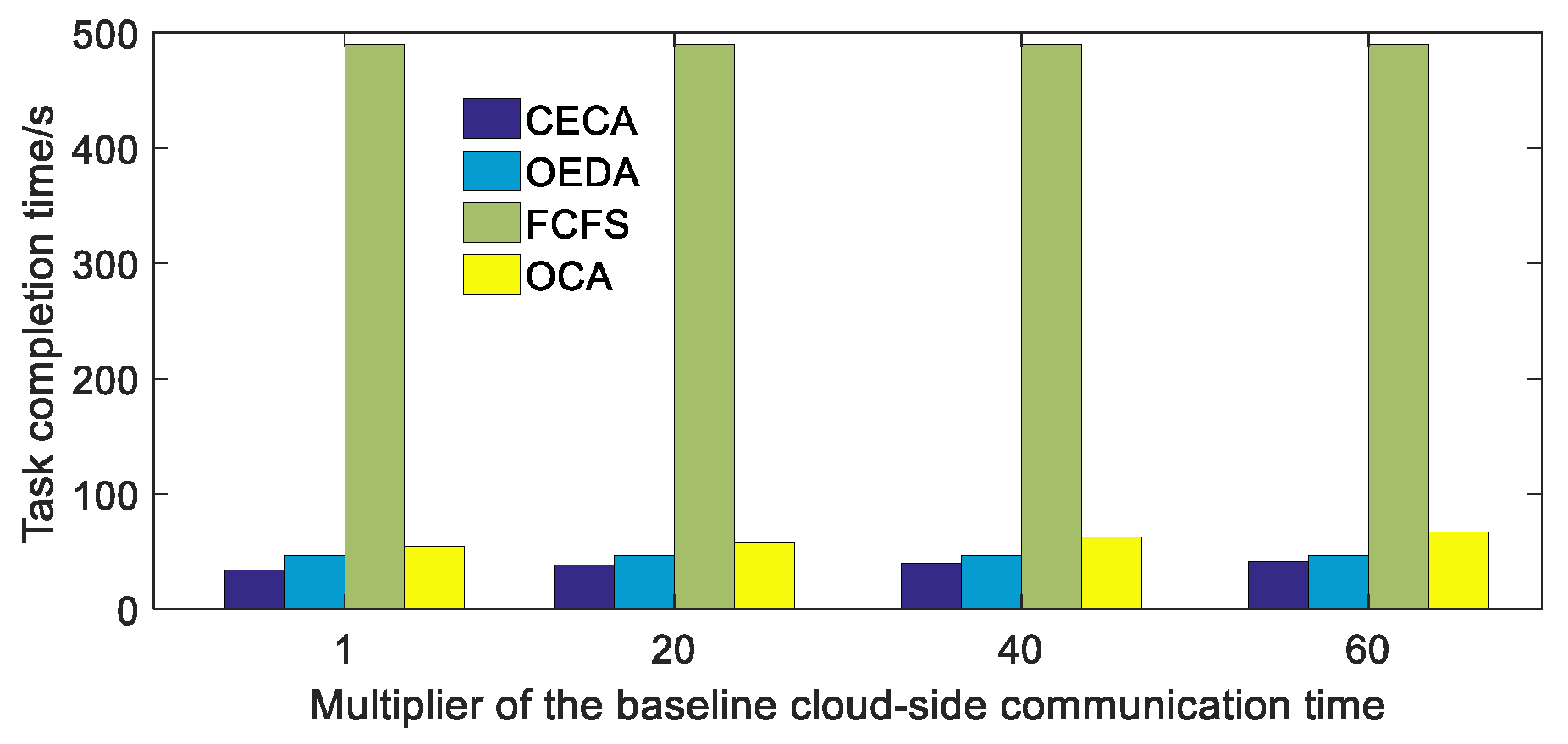

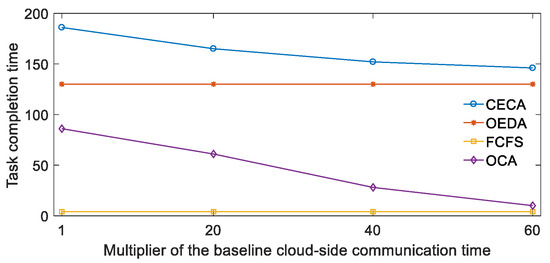

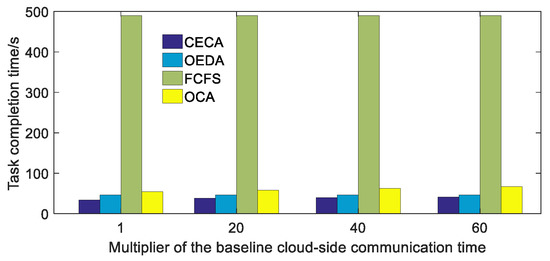

Figure 7 compares the task completion times of the four algorithms for different cloud edge communication times. The cloud edge communication time is set to 1×, 20×, 40×, and 60× of the default value.

Figure 7.

Impact of cloud-edge communication time on the completion time of tasks by different algorithms.

In Figure 7, the task completion times of both CECA and OCA increase with the cloud-edge communication time, and with all other settings equal, CECA has the shortest completion time. Together with the results in Figure 6, this shows that CECA maintains high task execution efficiency when the communication environment is degraded, which in turn improves the security of task scheduling.

5.4. Critical Task Scheduling Simulation

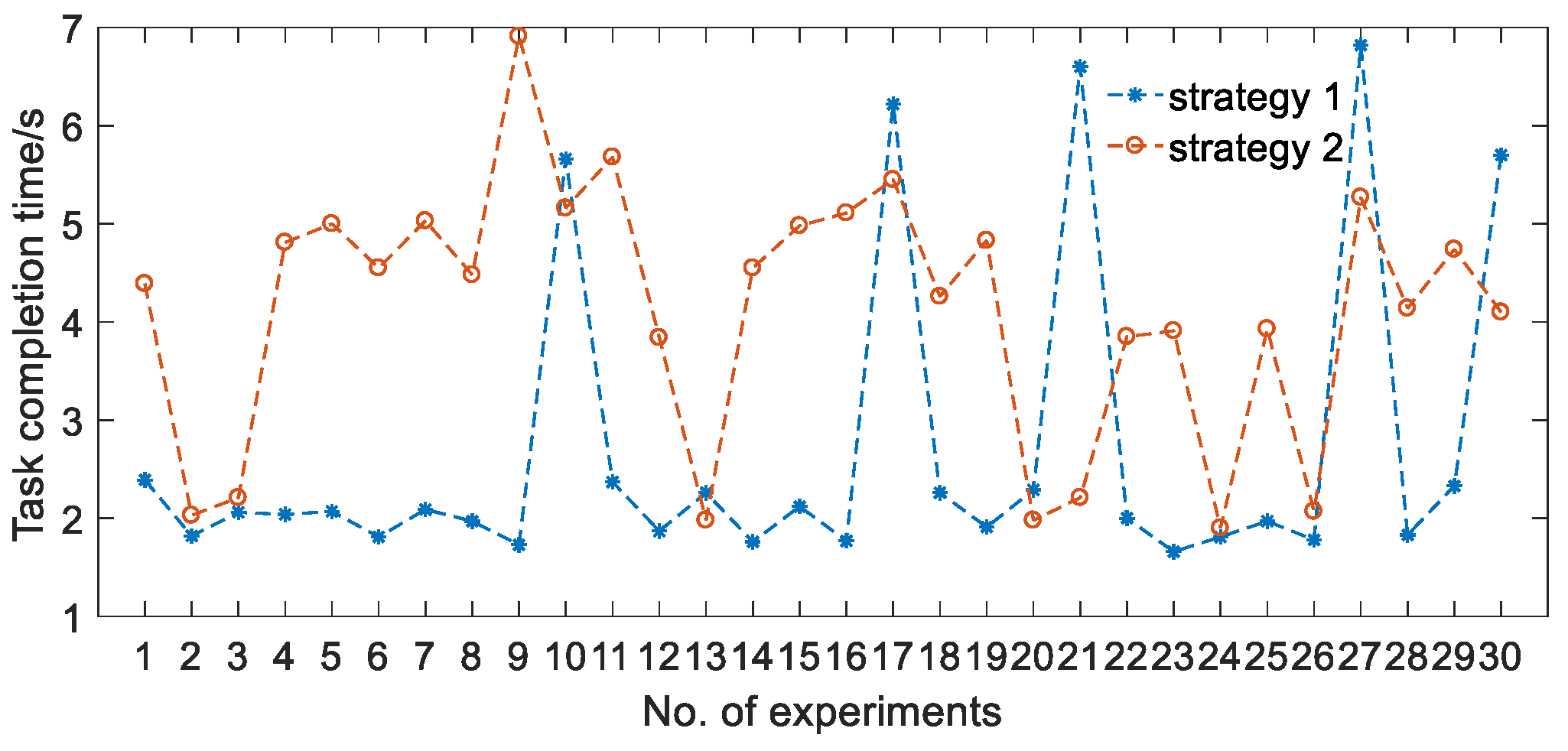

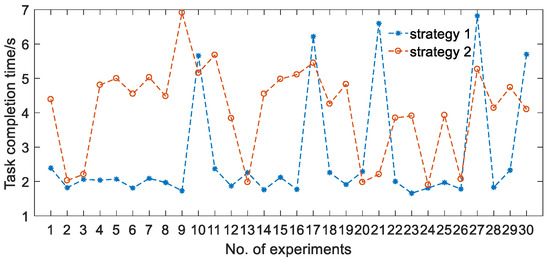

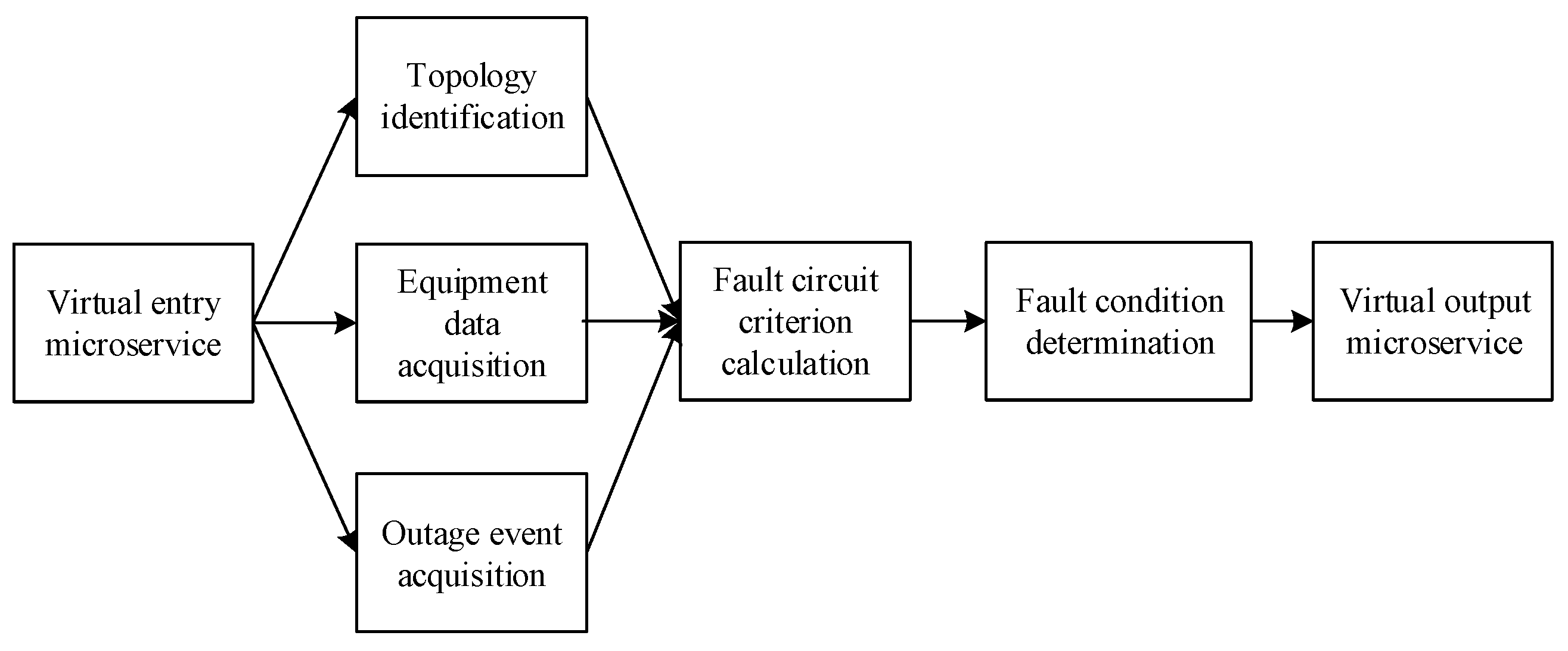

To compare the completion of important tasks when the resources of the edge computing system are limited, the scheduling of fault handling tasks is simulated according to the actual operating scenario of the distribution station area. The parameters and structure of the tasks are shown in Figure A1 and Table A2. Scheduling is performed with the strategy of this paper (strategy 1) and with the strategy of Reference [23] (strategy 2). Thirty random tests are conducted, and the resulting task processing times are shown in Figure 8.

Figure 8.

Task processing time under strategy 1 and strategy 2.

In Figure 8, the task processing time under strategy 1 is significantly shorter than under strategy 2. With a task delay requirement of 3.2 s, the task completion rate is 83.3% under strategy 1 and 23.3% under strategy 2. This is because strategy 2 selects the earliest-executable microservice solely in the order of the scheduling list, which is less flexible and cannot adapt to special task requirements. During grid operation, tasks such as fault handling have a large impact on the grid if they are not handled in time [24]. The proposed strategy reduces the processing time of important tasks when the resources of the edge computing system are limited, and thus improves the stability and security of grid operation.
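Because both completion rates come from thirty random tests, they correspond to whole numbers of on-time completions:

$$\frac{25}{30} \approx 83.3\,\%, \qquad \frac{7}{30} \approx 23.3\,\%$$

so 25 of the 30 runs meet the 3.2 s delay requirement under strategy 1, compared with 7 under strategy 2.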

In summary, the cloud-edge collaborative online scheduling strategy for station tasks can meet the needs of various tasks under different resource conditions of the system with higher task processing efficiency. The method provides a solution to the task scheduling problem when the resources of the edge devices are limited.

6. Conclusions

To schedule and collaboratively process power tasks when edge devices are resource-constrained, a cloud-edge collaborative online scheduling strategy for power distribution station area tasks under the microservice architecture is proposed. From the perspective of the distribution station area, the article analyzes the diversified, dynamic, and differentiated features of station tasks; at the device level, edge devices are characterized by limited and fluctuating resources. Under the microservice architecture, tasks are modeled as directed acyclic graphs, and three processing cases of microservices are distinguished. Building on the cloud-edge scheduling mechanism for power tasks under the microservice architecture, a cloud-edge collaborative online scheduling algorithm for station tasks is proposed to address these characteristics. The priorities of different power tasks are calculated by fuzzy logic, and tasks are scheduled online according to the resources available on the edge devices. The article simulates the impact of the number of concurrent containers supported by the edge devices and of the cloud-edge communication time on different strategies, and compares the results. The results show that, in the same scenario, the proposed strategy handles more tasks in less time and achieves higher task execution efficiency. A simulation of important-task completion in a resource-constrained edge computing system further verifies that, through resource replacement, the proposed strategy improves the completion rate of important tasks and system security when edge devices are resource-constrained.

Author Contributions

Conceptualization, X.Z. and R.C.; methodology, R.C. and Q.C.; software, R.C.; validation, Q.C.; formal analysis, R.C. and Q.C.; investigation, R.C.; resources, X.Z.; writing—original draft preparation, R.C. and Q.C.; writing—review and editing, R.C.; visualization, Q.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available on request due to restrictions, e.g., privacy and ethical reasons.

Acknowledgments

The authors are very grateful to Shandong Kehui Power Automation Co., Ltd. for its support.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Fuzzy rules.


| No. | Input Data Volume | Time Delay | Task Type Value | Task Priority |
|---|---|---|---|---|
| R1 | Low | High | general | Very Low |
| R2 | Low | High | warning | Very Low |
| R3 | Low | Middle | general | Low |
| R4 | Low | Middle | warning | Low |
| R5 | Low | High | fault | Low |
| R6 | Middle | High | general | Low |
| R7 | Middle | High | warning | Low |
| R8 | High | High | general | Low |
| R9 | High | High | warning | Low |
| R10 | Low | Low | general | Middle |
| R11 | Low | Low | warning | Middle |
| R12 | Low | Middle | fault | Middle |
| R13 | Middle | Middle | general | Middle |
| R14 | Middle | Middle | warning | Middle |
| R15 | Middle | High | fault | Middle |
| R16 | High | Middle | general | Middle |
| R17 | High | Middle | warning | Middle |
| R18 | High | High | fault | Middle |
| R19 | Low | Low | fault | High |
| R20 | Middle | Low | general | High |
| R21 | Middle | Low | warning | High |
| R22 | Middle | Middle | fault | High |
| R23 | High | Low | general | High |
| R24 | High | Middle | fault | High |
| R25 | High | Low | warning | Very High |
| R26 | Middle | Low | fault | Very High |
| R27 | High | Low | fault | Very High |
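Table A1 covers all 27 combinations of the three linguistic inputs. As a rough illustration of how such a rule base can be evaluated, the sketch below transcribes the table and fires every rule Mamdani-style, using `min` for the AND of the antecedents and `max` to aggregate rules that share an output term. These operators are common defaults assumed here, not confirmed as the paper's; the membership functions and defuzzification step are not reproduced, so the membership degrees passed in are hypothetical inputs.

```python
from itertools import product

LEVELS = ("Low", "Middle", "High")           # data volume and time delay terms
TYPES = ("general", "warning", "fault")      # task type terms
OUTPUT_TERMS = ("Very Low", "Low", "Middle", "High", "Very High")

# Table A1: (input data volume, time delay, task type) -> task priority
RULES = {
    ("Low", "High", "general"): "Very Low",      # R1
    ("Low", "High", "warning"): "Very Low",      # R2
    ("Low", "Middle", "general"): "Low",         # R3
    ("Low", "Middle", "warning"): "Low",         # R4
    ("Low", "High", "fault"): "Low",             # R5
    ("Middle", "High", "general"): "Low",        # R6
    ("Middle", "High", "warning"): "Low",        # R7
    ("High", "High", "general"): "Low",          # R8
    ("High", "High", "warning"): "Low",          # R9
    ("Low", "Low", "general"): "Middle",         # R10
    ("Low", "Low", "warning"): "Middle",         # R11
    ("Low", "Middle", "fault"): "Middle",        # R12
    ("Middle", "Middle", "general"): "Middle",   # R13
    ("Middle", "Middle", "warning"): "Middle",   # R14
    ("Middle", "High", "fault"): "Middle",       # R15
    ("High", "Middle", "general"): "Middle",     # R16
    ("High", "Middle", "warning"): "Middle",     # R17
    ("High", "High", "fault"): "Middle",         # R18
    ("Low", "Low", "fault"): "High",             # R19
    ("Middle", "Low", "general"): "High",        # R20
    ("Middle", "Low", "warning"): "High",        # R21
    ("Middle", "Middle", "fault"): "High",       # R22
    ("High", "Low", "general"): "High",          # R23
    ("High", "Middle", "fault"): "High",         # R24
    ("High", "Low", "warning"): "Very High",     # R25
    ("Middle", "Low", "fault"): "Very High",     # R26
    ("High", "Low", "fault"): "Very High",       # R27
}

# Sanity check: the table contains exactly one rule per input combination.
assert set(RULES) == set(product(LEVELS, LEVELS, TYPES))


def fire_rules(volume, delay, task_type):
    """Return the aggregated firing strength of each output term.

    Each argument maps linguistic terms to membership degrees in [0, 1]
    (hypothetical values; missing terms count as degree 0).
    """
    strength = {term: 0.0 for term in OUTPUT_TERMS}
    for (v, d, t), out in RULES.items():
        w = min(volume.get(v, 0.0), delay.get(d, 0.0), task_type.get(t, 0.0))
        strength[out] = max(strength[out], w)
    return strength


# A fault task with tight delay and mostly "Middle" data volume:
s = fire_rules({"Middle": 0.6, "High": 0.4}, {"Low": 1.0}, {"fault": 1.0})
print(s["Very High"])  # 0.6 (R26 fires at 0.6, R27 at 0.4)
```

A defuzzification step (for example, a centroid over output membership functions) would then turn these strengths into a crisp priority value; it is omitted because its parameters are not given in this section.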

Figure A1.

Fault diagnosis task directed acyclic graph.


Table A2.

Fault diagnosis task parameters.


| Microservice Name | Number | Processing Data Volume/MB | Transfer Data Volume/MB | Task Type Value | Time Delay/s |
|---|---|---|---|---|---|
| Virtual entry microservice | 0 | 0 | | 0.9 | 3.2 |
| Topology identification | 1 | 0.85 | 0.35 | | |
| Equipment data acquisition | 2 | 0.80 | 0.37 | | |
| Outage event acquisition | 3 | 0.57 | 0.22 | | |
| Fault circuit criterion calculation | 4 | 0.76 | 0.25 | | |
| Fault condition determination | 5 | 0.36 | 0.18 | | |
| Virtual output microservice | 6 | 0 | 0 | | |
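Table A2 lists two virtual microservices with zero data volume around the five real ones. In DAG task models, such zero-cost nodes are commonly added so that the graph has a single entry and a single exit; the sketch below shows that augmentation, assuming this is their role here. The microservice numbers and data volumes come from Table A2, but the dependency list in the usage example is hypothetical, because the edges of Figure A1 are not recoverable from the text. `add_virtual_nodes` is an illustrative name, not the paper's.

```python
# Real microservices of the fault diagnosis task (Table A2):
# number -> (name, processing data volume/MB, transfer data volume/MB)
MICROSERVICES = {
    1: ("Topology identification", 0.85, 0.35),
    2: ("Equipment data acquisition", 0.80, 0.37),
    3: ("Outage event acquisition", 0.57, 0.22),
    4: ("Fault circuit criterion calculation", 0.76, 0.25),
    5: ("Fault condition determination", 0.36, 0.18),
}
ENTRY, EXIT = 0, 6  # virtual entry / output microservices (zero data volume)


def add_virtual_nodes(nodes, edges, entry=ENTRY, exit_=EXIT):
    """Link `entry` to every node with no predecessor and every node with
    no successor to `exit_`, giving the DAG a single source and sink."""
    has_pred = {v for _, v in edges}
    has_succ = {u for u, _ in edges}
    new_edges = list(edges)
    new_edges += [(entry, n) for n in sorted(nodes) if n not in has_pred]
    new_edges += [(n, exit_) for n in sorted(nodes) if n not in has_succ]
    return new_edges


# HYPOTHETICAL dependencies for illustration only; not the edges of Figure A1.
example_edges = [(1, 4), (2, 4), (3, 5), (4, 5)]
print(add_virtual_nodes(MICROSERVICES, example_edges))
# [(1, 4), (2, 4), (3, 5), (4, 5), (0, 1), (0, 2), (0, 3), (5, 6)]
```

Because the virtual nodes carry no data, they add no processing or transfer cost; they only give the scheduler one well-defined start and finish for the whole task.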

References

1. Chen, H.Y.; Wang, X.J.; Li, Z.H.; Chen, W.T.; Cai, Y.Z. Distributed sensing and cooperative estimation/detection of ubiquitous power internet of things. Prot. Control Mod. Power Syst. 2019, 4, 151–158.
2. Bedi, G.; Venayagamoorthy, G.K.; Singh, R.; Brooks, R.; Wang, K. Review of Internet of Things (IoT) in electric power and energy systems. IEEE Internet Things J. 2018, 5, 847–870.
3. Chen, S.L.; Wen, H.; Wu, J.S.; Lei, W.X.; Hou, W.J.; Liu, W.J.; Xu, A.D.; Jiang, Y.X. Internet of things based smart grids supported by intelligent edge computing. IEEE Access 2019, 7, 74089–74102.
4. Lü, J.; Luan, W.P.; Liu, R.; Wang, P.; Lin, J. Architecture of distribution internet of things based on widespread sensing & software defined technology. Power Syst. Technol. 2018, 42, 3108–3115.
5. Chen, J.M.; Wei, J.; Hao, J.; Guo, Y.J. Application prospect of edge computing in smart distribution. In Proceedings of the 2018 China International Conference on Electricity Distribution (CICED), Tianjin, China, 17–19 September 2018; pp. 1370–1375.
6. Huang, Y.; Lu, Y.; Wang, F.; Fan, X.; Liu, J.; Leung, V.C.M. An Edge Computing Framework for Real-Time Monitoring in Smart Grid. In Proceedings of the 2018 IEEE International Conference on Industrial Internet (ICII), Seattle, WA, USA, 21–23 October 2018; pp. 99–108.
7. Wang, J.; Peng, Y. Distributed Optimal Dispatching of Multi-Entity Distribution Network with Demand Response and Edge Computing. IEEE Access 2020, 8, 141923–141931.
8. Chen, Z.; Xiao, X.; Wang, H.W.; Luo, H.H.; Chen, X. Optimization strategy for unloading power tasks in residential areas based on alternate edge nodes. J. Zhejiang Univ. Eng. Sci. 2021, 55, 917–926.
9. Shao, S.; Zhang, Q.; Guo, S.; Qi, F. Task Allocation Mechanism for Cable Real-Time Online Monitoring Business Based on Edge Computing. IEEE Syst. J. 2021, 15, 1344–1355.
10. Ding, Z.; Wang, S.; Pan, M. QoS-Constrained Service Selection for Networked Microservices. IEEE Access 2020, 8, 39285–39299.
11. Niu, X.D.; Shao, S.J.; Chen, X.; Zhou, J.; Guo, S.Y.; Chen, X.Y.; Qi, F. Workload allocation mechanism for minimum service delay in edge computing-based power internet of things. IEEE Access 2019, 7, 83771–83784.
12. Wang, Q.J.; Shao, S.J.; Guo, S.Y.; Qiu, X.S.; Wang, Z.L. Task allocation mechanism of power internet of things based on cooperative edge computing. IEEE Access 2020, 8, 158488–158501.
13. Kang, Y.Q.; Cai, Z.X.; Zeng, X.; Sun, Y.Y.; Hu, K.Q.; Cen, B.W. An offloading cost based cloud-edge collaborative scheduling method for power distribution network energy management applications. South. Power Syst. Technol. 2021, 15, 61–68.
14. Zhou, B.; Sun, Y.; Mahato, N.K.; Xu, X.T.; Yang, H.X.; Gong, G.J. Optimization Control Strategy of Electricity Information Acquisition System Based on Edge-Cloud Computing Collaboration. In Proceedings of the 2020 5th Asia Conference on Power and Electrical Engineering (ACPEE), Chengdu, China, 4–7 June 2020; pp. 54–59.
15. Deng, F.; Zu, Y.R.; Mao, Y.; Zeng, X.J.; Li, Z.W.; Tang, X.; Wang, Y. A method for distribution network line selection and fault location based on a hierarchical fault monitoring and control system. Int. J. Electr. Power Energy Syst. 2020, 123, 106061.
16. Zhang, H.; Yang, N.; Xu, Z.; Tang, B.; Ma, H. Microservice Based Video Cloud Platform with Performance-Aware Service Path Selection. In Proceedings of the 2018 IEEE International Conference on Web Services (ICWS), San Francisco, CA, USA, 2–7 July 2018; pp. 306–309.
17. Liu, L.Y.; Huang, H.Q.; Tan, H.S.; Cao, W.L.; Yang, P.L.; Li, X.Y. Online DAG scheduling with on-demand function configuration in edge computing. In Proceedings of the International Conference on Wireless Algorithms, Systems and Applications (WASA), Honolulu, HI, USA, 24–26 June 2019; pp. 213–224.
18. Shu, C.; Zhao, Z.W.; Han, Y.P.; Min, G.Y.; Duan, H.C. Multi-user offloading for edge computing networks: A dependency-aware and latency-optimal approach. IEEE Internet Things J. 2020, 7, 1678–1689.
19. Gao, Z.H.; Hao, W.M.; Han, Z.; Yang, S.Y. Q-learning-based task offloading and resources optimization for a collaborative computing system. IEEE Access 2020, 8, 149011–149024.
20. Sonmez, C.; Ozgovde, A.; Ersoy, C. Fuzzy workload orchestration for edge computing. IEEE Trans. Netw. Serv. Manag. 2019, 16, 769–782.
21. Ceng, X.; Cai, Z.X.; Sun, Y.Y.; Hu, K.Q.; Zhang, Z.Y.; Liu, Y. Workload modeling method for power distribution monitoring business in integrated energy system. Distrib. Util. 2020, 37, 42–49, 57.
22. Lu, T.; Zeng, F.; Shen, J.; Chen, J.; Shu, W.; Zhang, W. A scheduling scheme in a container-based edge computing environment using deep reinforcement learning. In Proceedings of the International Conference on Mobility, Sensing and Networking, Exeter, UK, 13–15 December 2021; pp. 56–65.
23. Wang, D.W.; Liu, T.H. Scheduling method for burst data processing task in full-service unified data center of electric power system. Autom. Electr. Power Syst. 2018, 42, 177–184.
24. Gunaratne, N.G.T.; Abdollahian, M.; Huda, S.; Ali, M.; Fortino, G. An Edge Tier Task Offloading to Identify Sources of Variance Shifts in Smart Grid Using a Hybrid of Wrapper and Filter Approaches. IEEE Trans. Green Commun. Netw. 2022, 6, 329–340.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).