Learning with Interactive Knowledge Representations

Abstract

1. Introduction

2. Systems Thinking with Qualitative Representations in Secondary Education

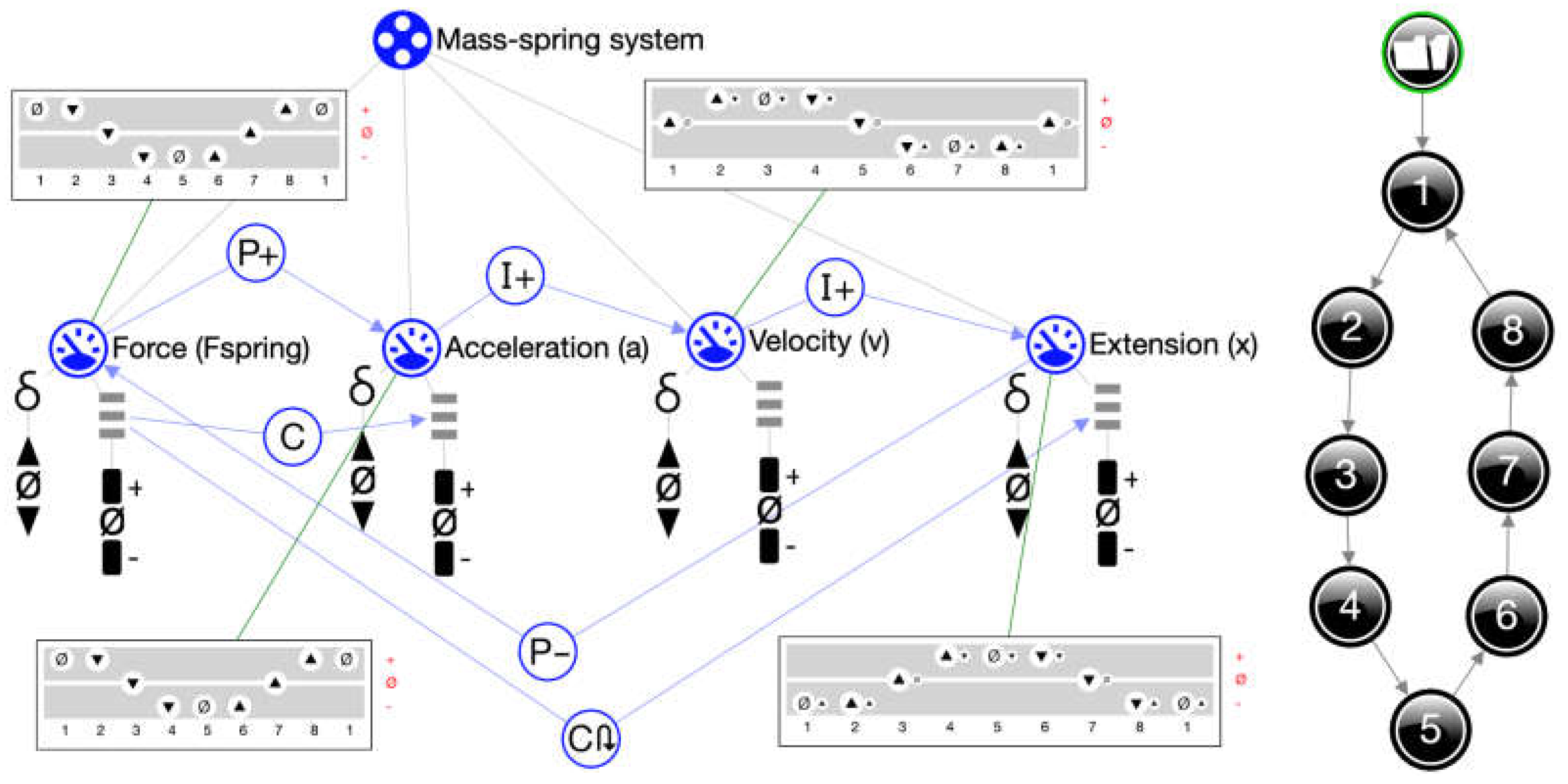

2.1. The Educational Instrument

2.2. Interactive Features

2.3. Results

2.4. Discussion

3. The Hands-on and Minds-on Challenge in Primary Education

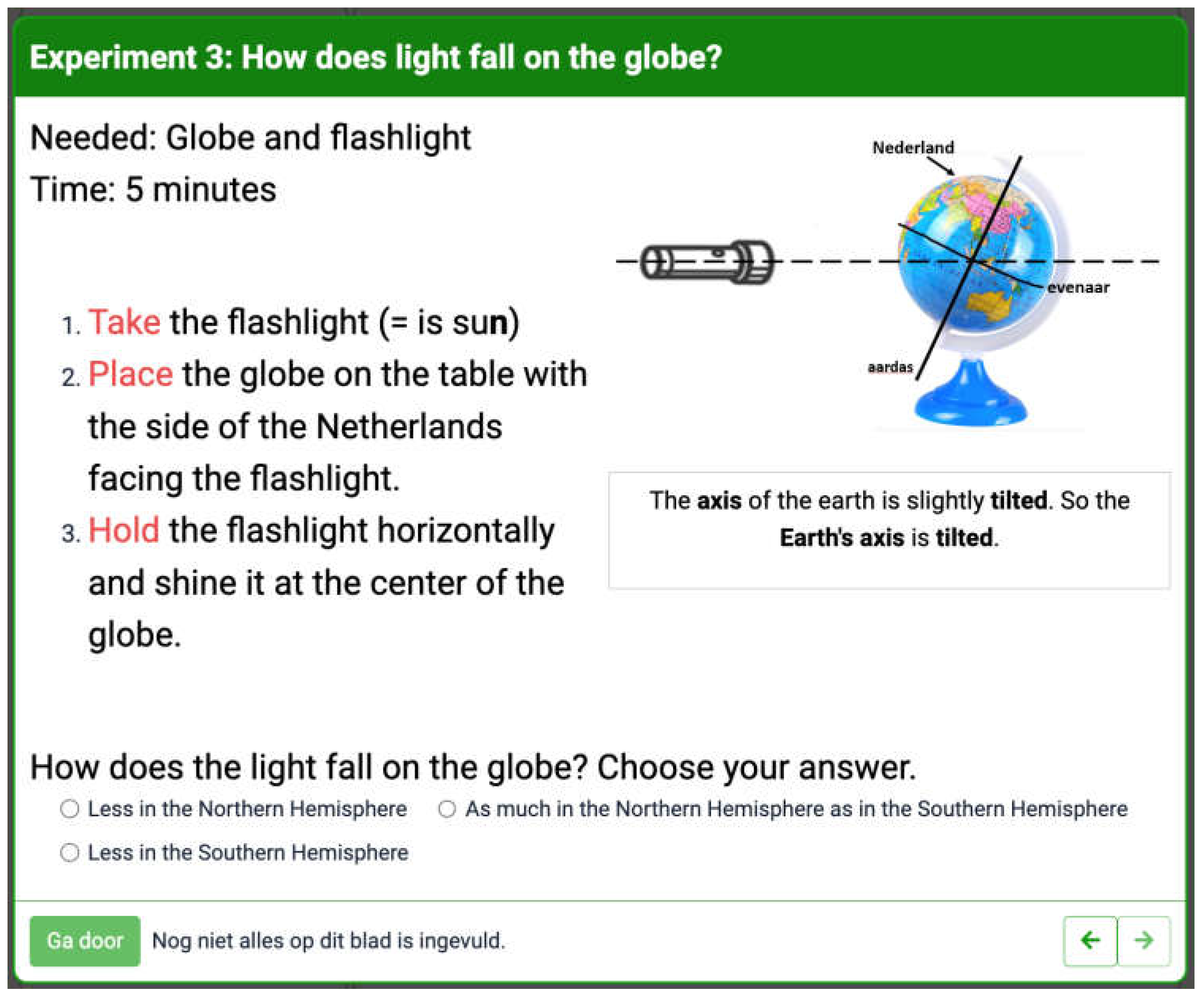

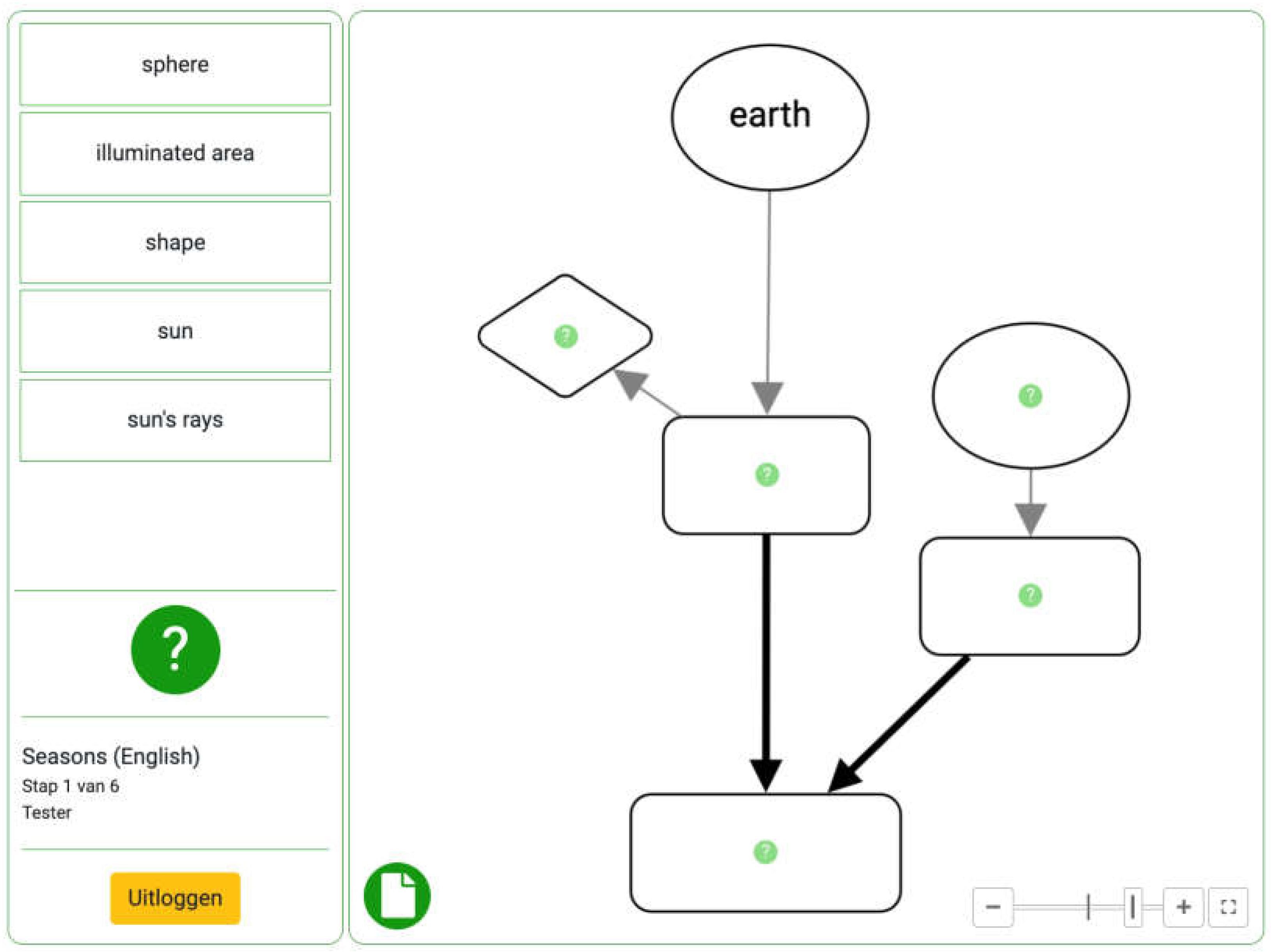

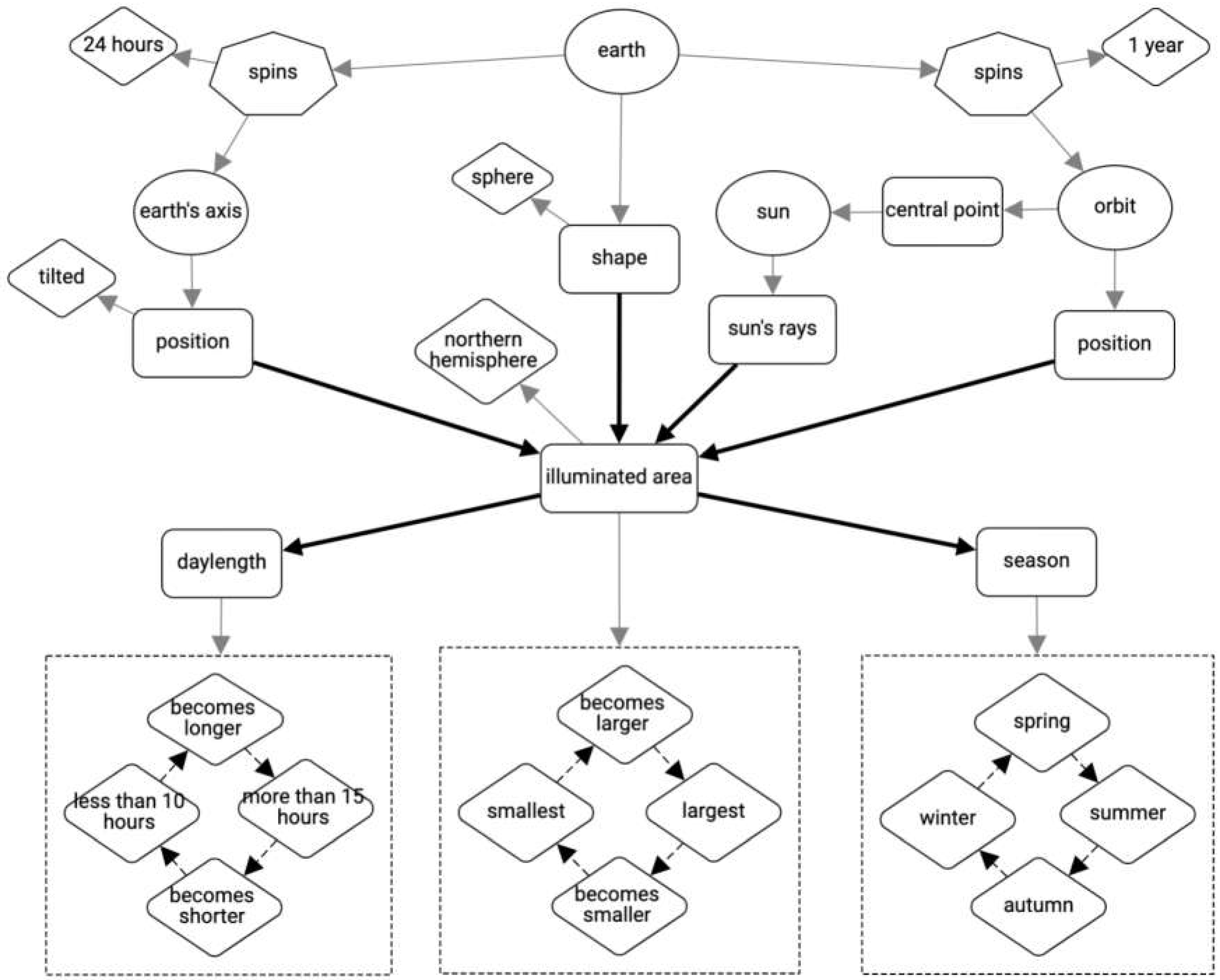

3.1. The Educational Instrument

3.2. Interactive Features

3.3. Results

- Seasons, focusing on cause-and-effect relationships.

- Sound, focusing on cause-and-effect relationships.

- Fixtures (3a) and Animals (3b), focusing on classification.

- The bicycle, focusing on thinking in systems.

3.4. Discussion

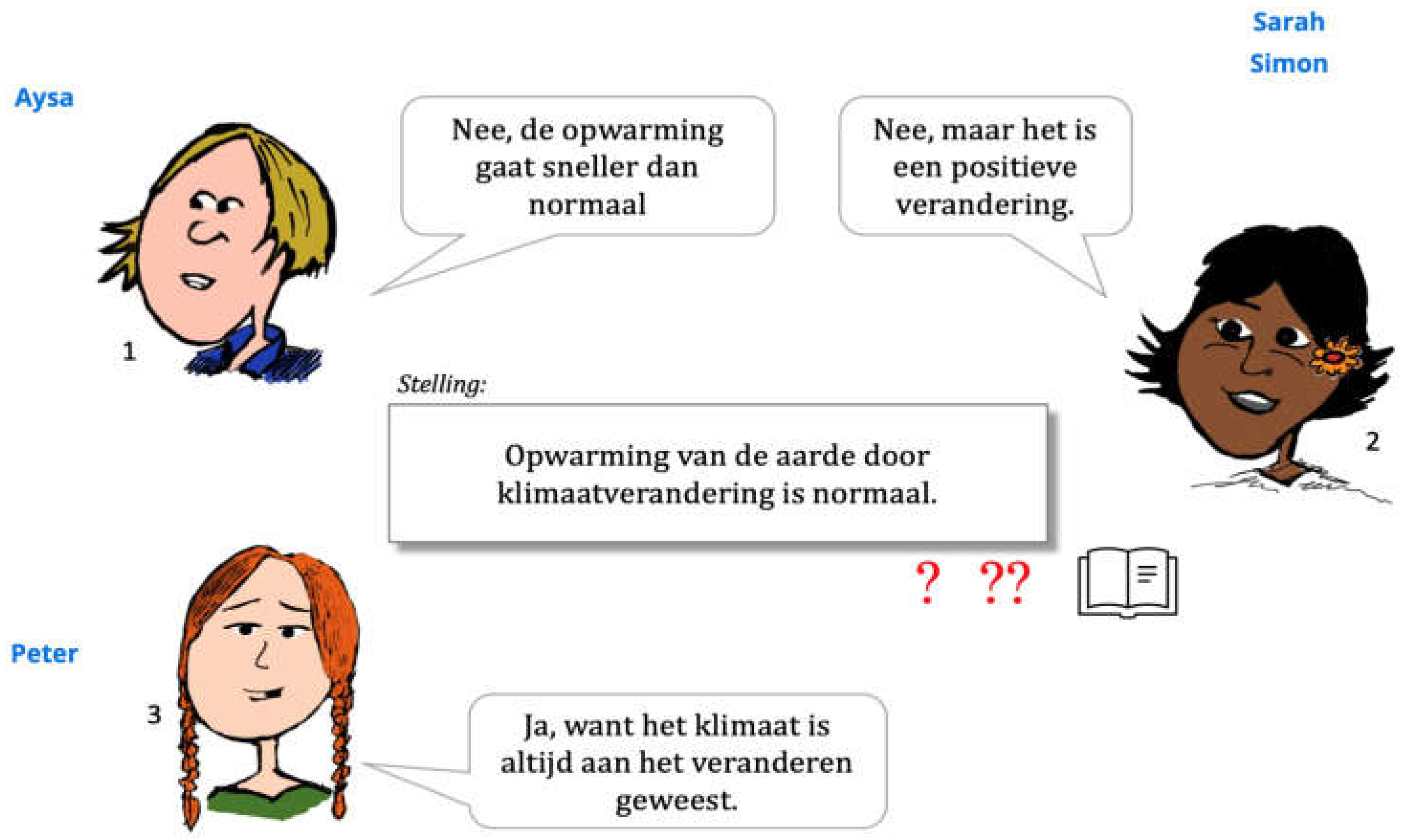

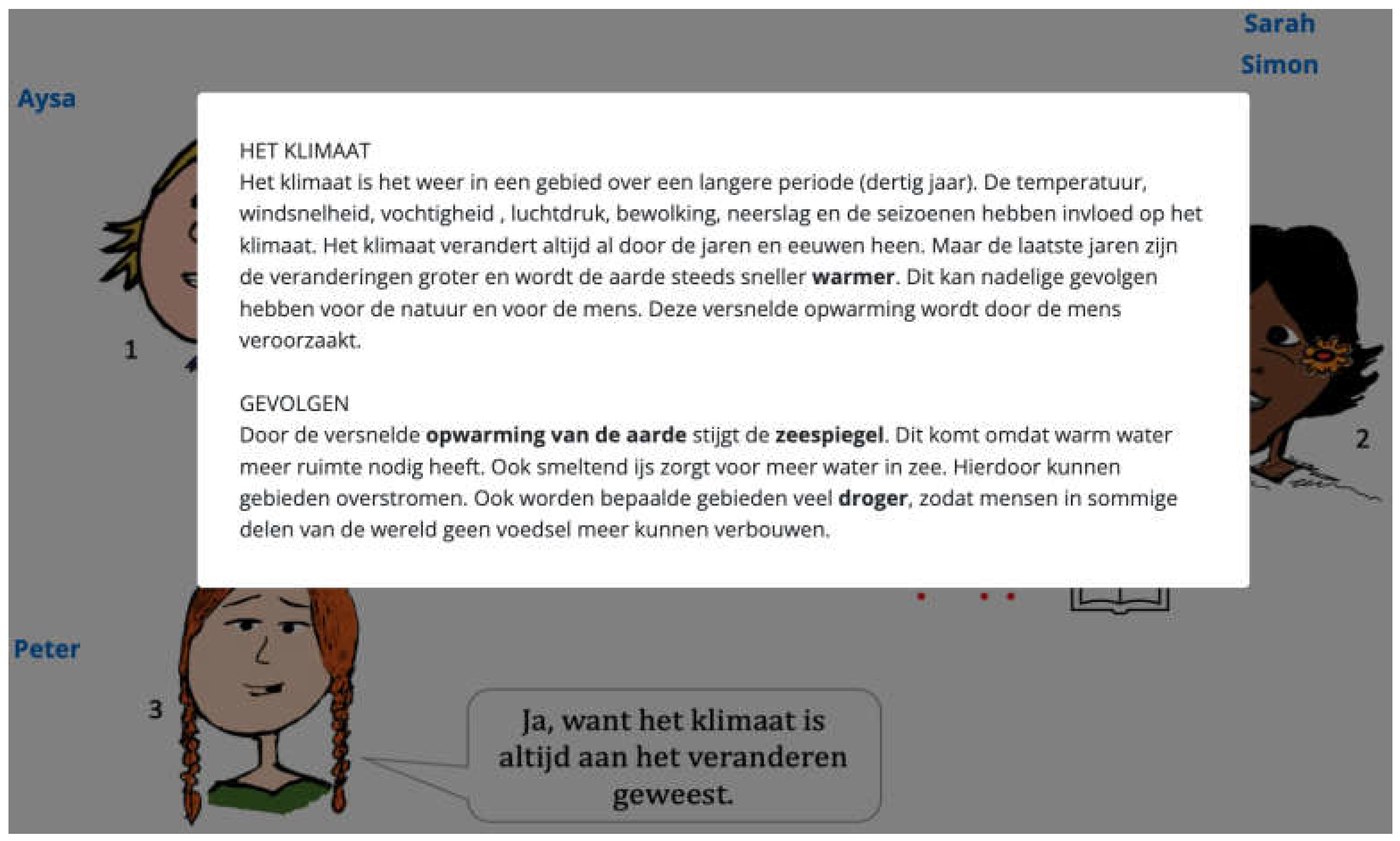

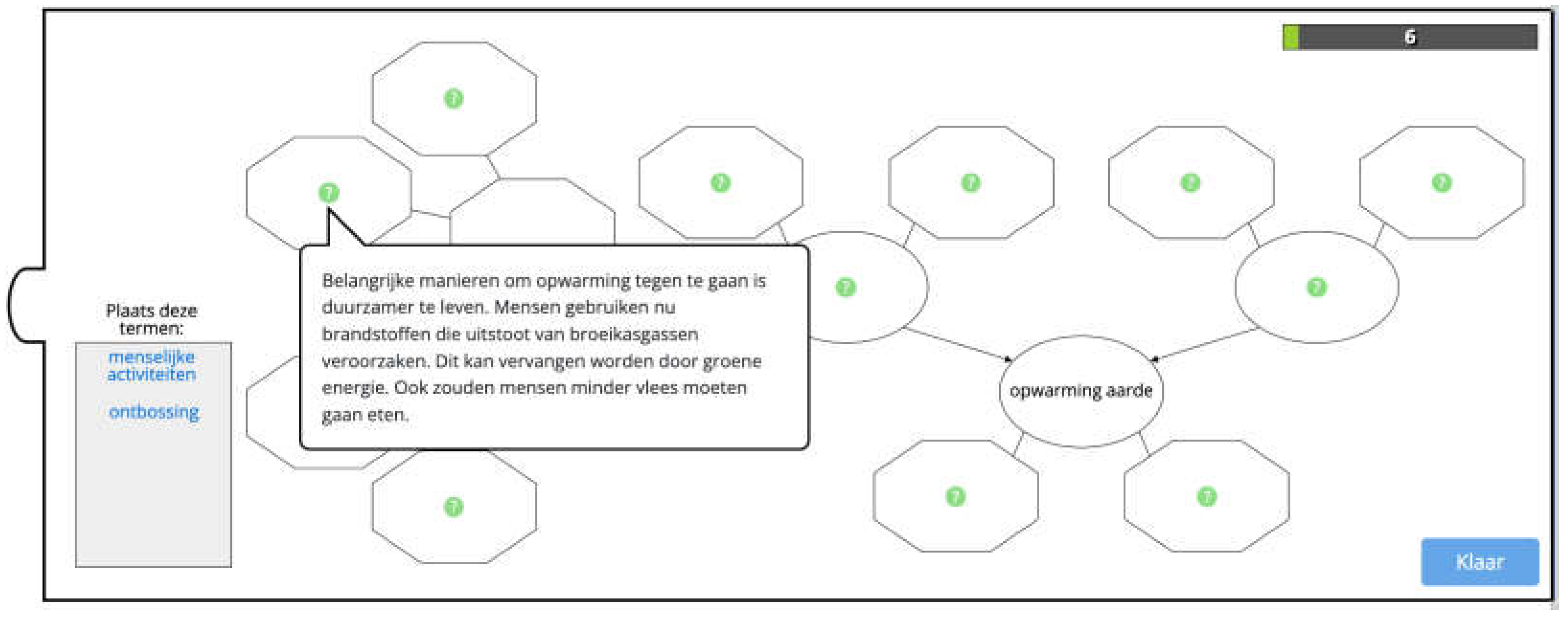

4. Addressing Controversial Topics with Interactive Concept Cartoons

4.1. The Educational Instrument

4.2. Interactive Features

4.3. Results

4.4. Discussion

5. General Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Ingredient Type | Description |
|---|---|
| Entity | Physical objects and/or abstract concepts that together constitute the system. |
| Configuration | Structural relationships between entities. |
| Quantity | Changeable and measurable features of entities. |
| Quantity space | Set of values that a particular quantity can take on. |
| Value | Specific value that a quantity has in a particular state. |
| Direction of change (∂) | In each state, a quantity is either decreasing, steady, or increasing. |
| Causal dependency | Quantity relationships that define how the causing quantity affects the influenced quantity. |
| Correspondence | Co-occurring values and co-occurring directions of change between quantities. |
| (In)equality | Ordering information between quantities, values, and directions of change (<, ≤, =, ≥, >). |
| Calculus | Constraints between quantities, values, and directions of change (A + B = C or A − B = C). |
| Conditional statement | IF A THEN B, where A and B can refer to any of the above-mentioned ingredients. |
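The ingredients in the table above can be made concrete with a small data structure. The following Python sketch is illustrative only and is not the implementation of any modelling tool used in these studies. It assumes directions of change are encoded as −1, 0 and +1, and it simplifies a causal dependency to a signed link along which the direction of change is copied (causal dependencies in qualitative modelling can carry richer semantics). It also shows qualitative addition for the calculus ingredient. The greenhouse-effect quantities and all names (`Quantity`, `CausalDependency`, `propagate`, `delta_of_sum`) are hypothetical.

```python
from dataclasses import dataclass

# Direction of change (∂): decreasing, steady or increasing (assumed encoding).
DEC, STD, INC = -1, 0, 1


@dataclass
class Quantity:
    name: str
    entity: str        # the entity this quantity is a feature of
    space: list        # ordered quantity space, e.g. ["zero", "plus"]
    value: str         # current value; must come from the quantity space
    delta: int = STD   # current direction of change

    def __post_init__(self):
        if self.value not in self.space:
            raise ValueError(f"{self.value!r} is not in the quantity space of {self.name}")


@dataclass
class CausalDependency:
    cause: str    # name of the causing quantity
    effect: str   # name of the influenced quantity
    sign: int     # +1: effect follows the cause; -1: effect opposes it (simplified)


def propagate(quantities, dependencies):
    """Copy directions of change along causal dependencies.

    Assumes an acyclic model in which every quantity has at most one cause,
    so len(dependencies) passes are enough to reach a fixed point."""
    for _ in range(len(dependencies)):
        changed = False
        for dep in dependencies:
            new_delta = dep.sign * quantities[dep.cause].delta
            if quantities[dep.effect].delta != new_delta:
                quantities[dep.effect].delta = new_delta
                changed = True
        if not changed:
            break
    return quantities


def delta_of_sum(da, db):
    """Possible ∂C for C = A + B under qualitative sign addition.

    For C = A - B, call delta_of_sum(da, -db)."""
    if da == db or db == STD:
        return {da}
    if da == STD:
        return {db}
    return {DEC, STD, INC}  # opposing changes: the outcome is ambiguous


# Hypothetical greenhouse-effect fragment (names are illustrative only).
space = ["zero", "plus"]
quantities = {
    q.name: q
    for q in [
        Quantity("greenhouse gas", "atmosphere", space, "plus", INC),
        Quantity("absorbed heat", "atmosphere", space, "plus"),
        Quantity("temperature", "earth", space, "plus"),
    ]
}
dependencies = [
    CausalDependency("absorbed heat", "temperature", +1),
    CausalDependency("greenhouse gas", "absorbed heat", +1),
]
propagate(quantities, dependencies)
for q in quantities.values():
    print(q.name, q.delta)
```

With opposing changes, `delta_of_sum` returns all three directions, which is one reason a qualitative simulation can produce several successor states rather than a single predicted behaviour.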

| Ingredient Type | Description |
|---|---|
| State | Period during which a system does not change the dynamics of its behaviour. |
| Transition | Change in system behaviour resulting in moving from the current state to a successive state. |
| State-graph | Total set of states and transitions that describe the possible behaviours of the system. |
| Path | Set of successive states and the accompanying transitions. |
| Value-history | Overview of value assignments present in selected states. |
| (In)equality-history | Overview of (in)equality statements present in selected states. |
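The simulation ingredients in the table above can likewise be sketched as a small graph. This Python example assumes that each state stores a (value, direction of change) pair per quantity and that transitions form an adjacency list; it enumerates the paths through a state-graph from a start state and extracts a value-history for one quantity along a path. The container scenario, the state numbers and the functions `paths` and `value_history` are hypothetical illustrations, not the output format of any particular simulator.

```python
# Each state maps a quantity name to its (value, direction of change).
# Hypothetical scenario: a container with an inflow and an outflow.
states = {
    1: {"level": ("plus", "inc")},   # filling
    2: {"level": ("max", "std")},    # container full
    3: {"level": ("plus", "std")},   # inflow balances outflow
}

# Transitions as an adjacency list: state -> possible successor states.
transitions = {1: [2, 3], 2: [], 3: []}


def paths(graph, start):
    """Enumerate paths from `start` until a state has no unvisited successor.

    States already on the current path are not revisited, so cycles are cut off."""
    result = []

    def walk(path):
        successors = [s for s in graph.get(path[-1], []) if s not in path]
        if not successors:
            result.append(path)
            return
        for s in successors:
            walk(path + [s])

    walk([start])
    return result


def value_history(states, path, quantity):
    """List (state, value, direction of change) of one quantity along a path."""
    return [(s, *states[s][quantity]) for s in path]


for p in paths(transitions, 1):
    print(p, value_history(states, p, "level"))
```

Because both end states are reachable from state 1, the sketch reports two paths, mirroring how a single state-graph can describe several possible behaviours of the same system.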

| Level | Biology | Economics | Geography | Physics |
|---|---|---|---|---|
| 2 | Circulatory system, greenhouse effect, mutations, food chains | Market mechanism, industrial revolution | Poverty | Calorimetry, force and motion, sound, star properties, electrical circuit |
| 3 | Blood sugar, biodiversity, photosynthesis | Pensions | Centre-periphery model, Neolithic age | Gas law, energy transformation, star states |
| 4 | Enzymes, hormone regulation, population dynamics, homeostasis | Business cycle | Climate change | Force and motion, mass spring system, star formation, circular and elliptical orbits |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bredeweg, B.; Kragten, M.; Holt, J.; Kruit, P.; van Eijck, T.; Pijls, M.; Bouwer, A.; Sprinkhuizen, M.; Jaspar, E.; de Boer, M. Learning with Interactive Knowledge Representations. Appl. Sci. 2023, 13, 5256. https://doi.org/10.3390/app13095256

Bredeweg B, Kragten M, Holt J, Kruit P, van Eijck T, Pijls M, Bouwer A, Sprinkhuizen M, Jaspar E, de Boer M. Learning with Interactive Knowledge Representations. Applied Sciences. 2023; 13(9):5256. https://doi.org/10.3390/app13095256

Chicago/Turabian Style: Bredeweg, Bert, Marco Kragten, Joanna Holt, Patricia Kruit, Tom van Eijck, Monique Pijls, Anders Bouwer, Malou Sprinkhuizen, Emile Jaspar, and Muriel de Boer. 2023. "Learning with Interactive Knowledge Representations." Applied Sciences 13, no. 9: 5256. https://doi.org/10.3390/app13095256

APA Style: Bredeweg, B., Kragten, M., Holt, J., Kruit, P., van Eijck, T., Pijls, M., Bouwer, A., Sprinkhuizen, M., Jaspar, E., & de Boer, M. (2023). Learning with Interactive Knowledge Representations. Applied Sciences, 13(9), 5256. https://doi.org/10.3390/app13095256