Multizone Leak Detection Method for Metal Hose Based on YOLOv5 and OMD-ViBe Algorithm

Abstract

1. Introduction

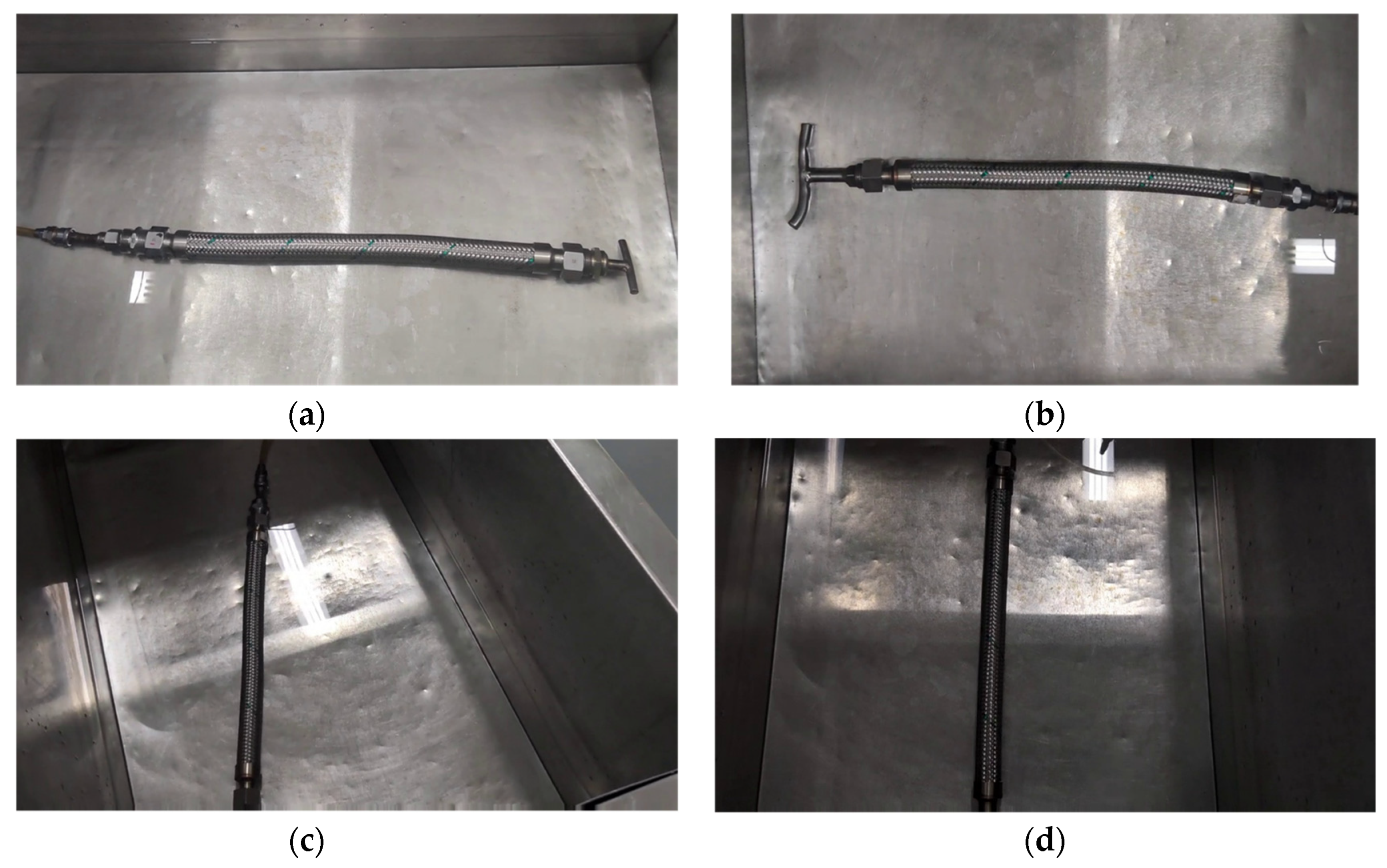

2. Leak Detection Methods

2.1. Detection Process

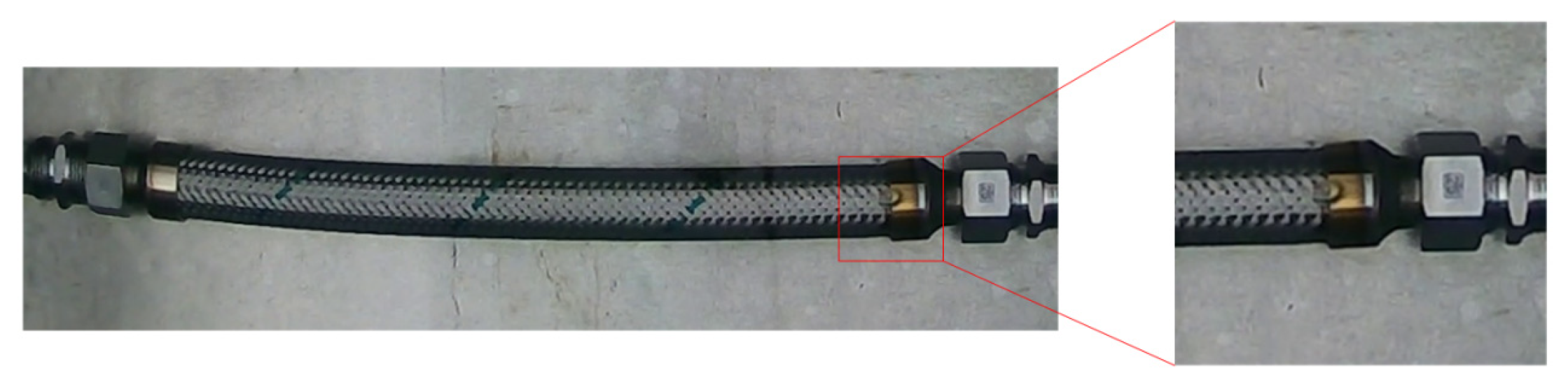

2.2. ROI Rectification

2.3. OMD-ViBe

2.3.1. ViBe Theory

2.3.2. Improving ViBe

2.4. Foreground Frequency Calculation Method

2.5. Leakage Point Location

2.6. Leakage Calculation

3. Experiment and Results

3.1. Evaluation Index

3.2. Experimental Results

3.2.1. YOLOv5 Results

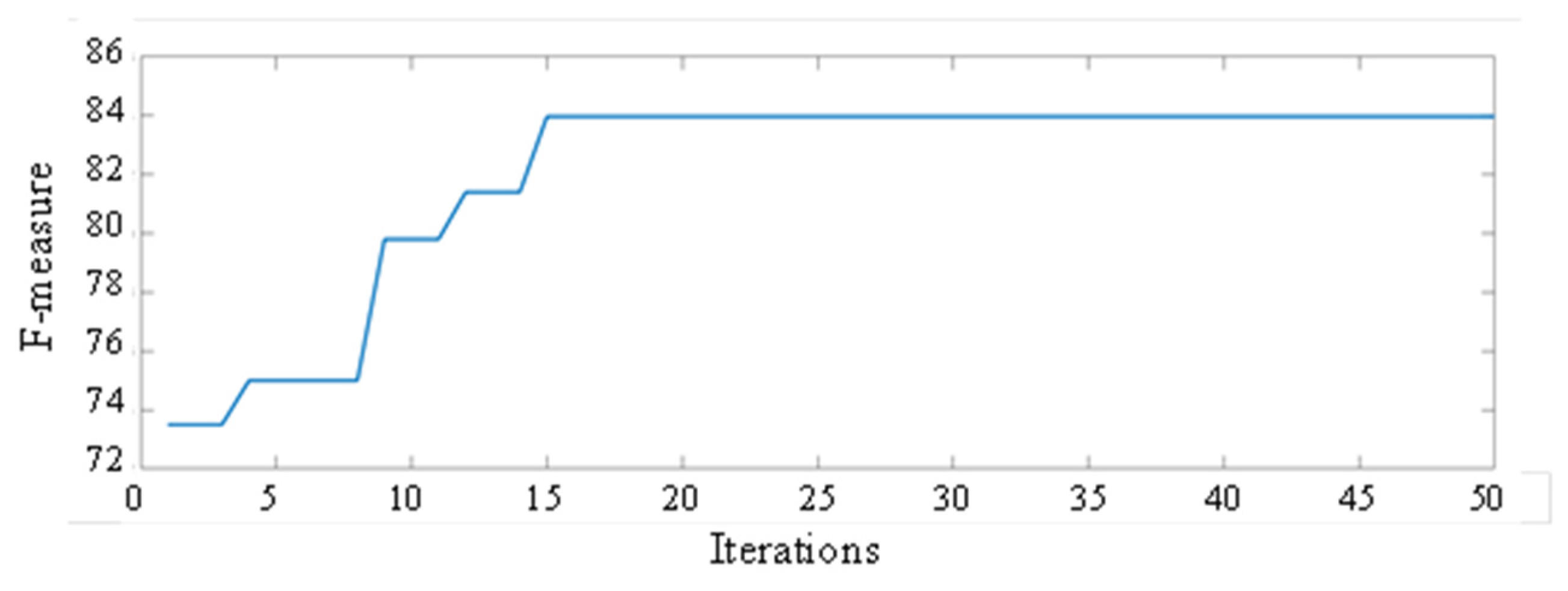

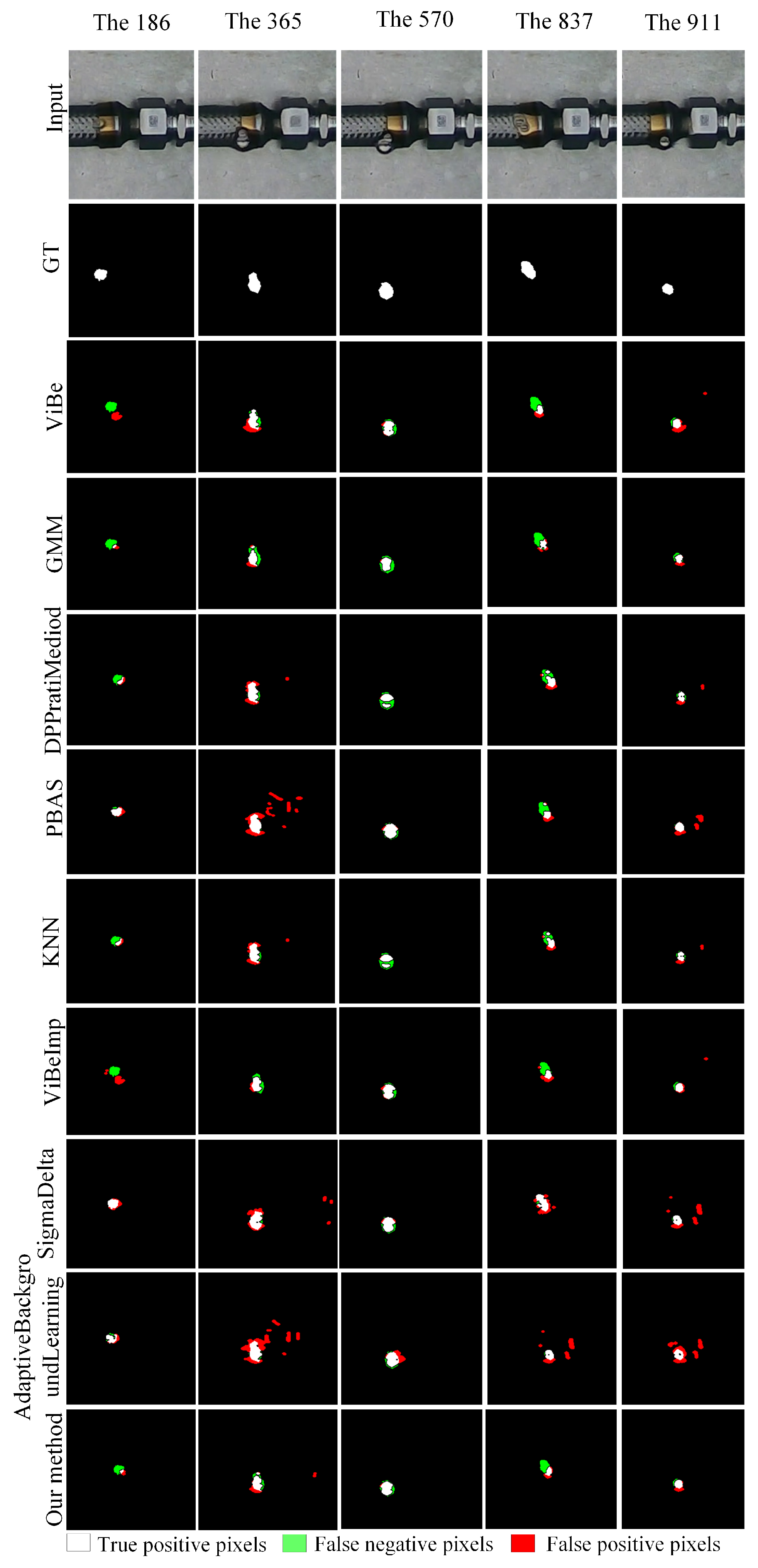

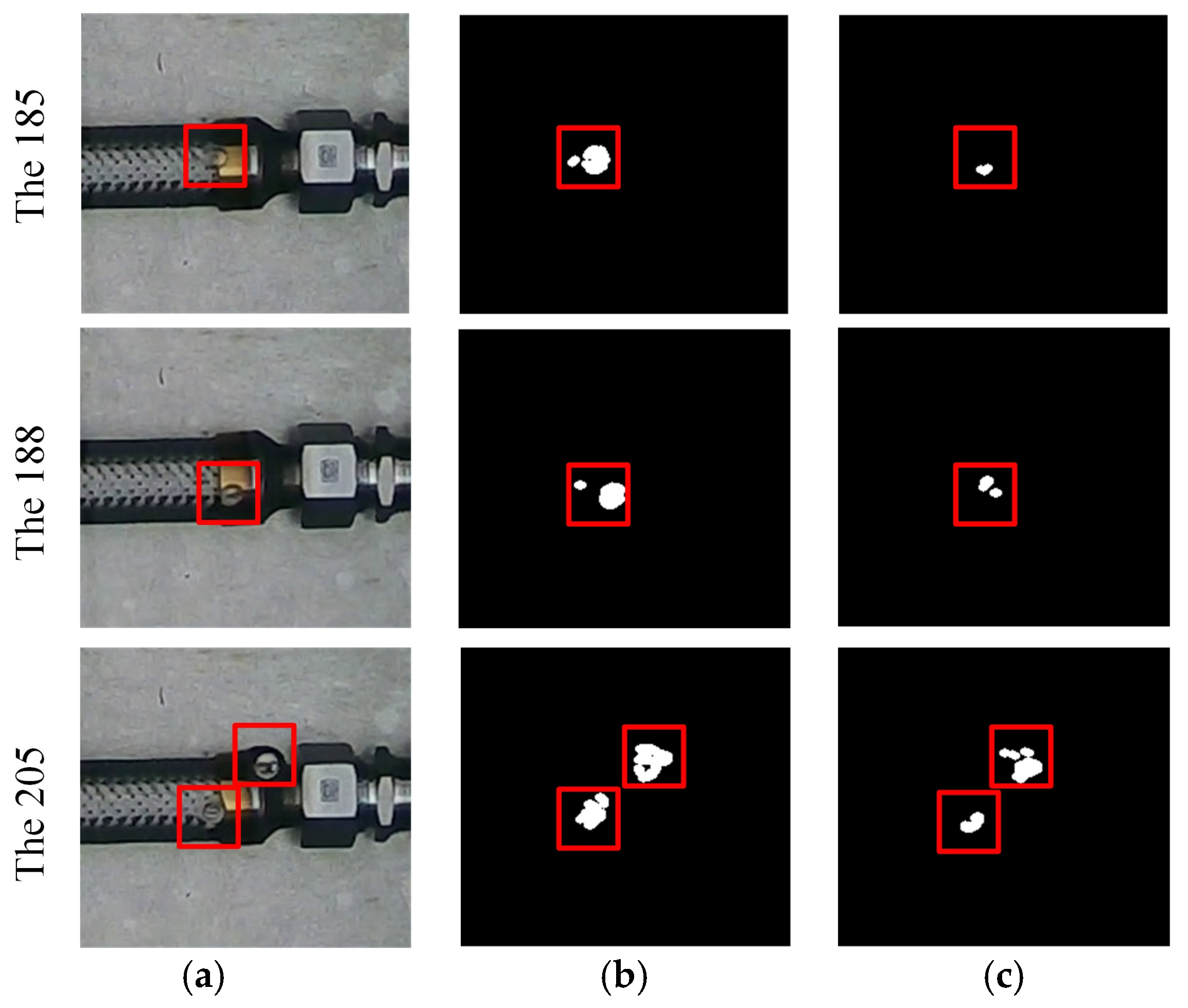

3.2.2. Bubble Recognition Based on OMD-ViBe

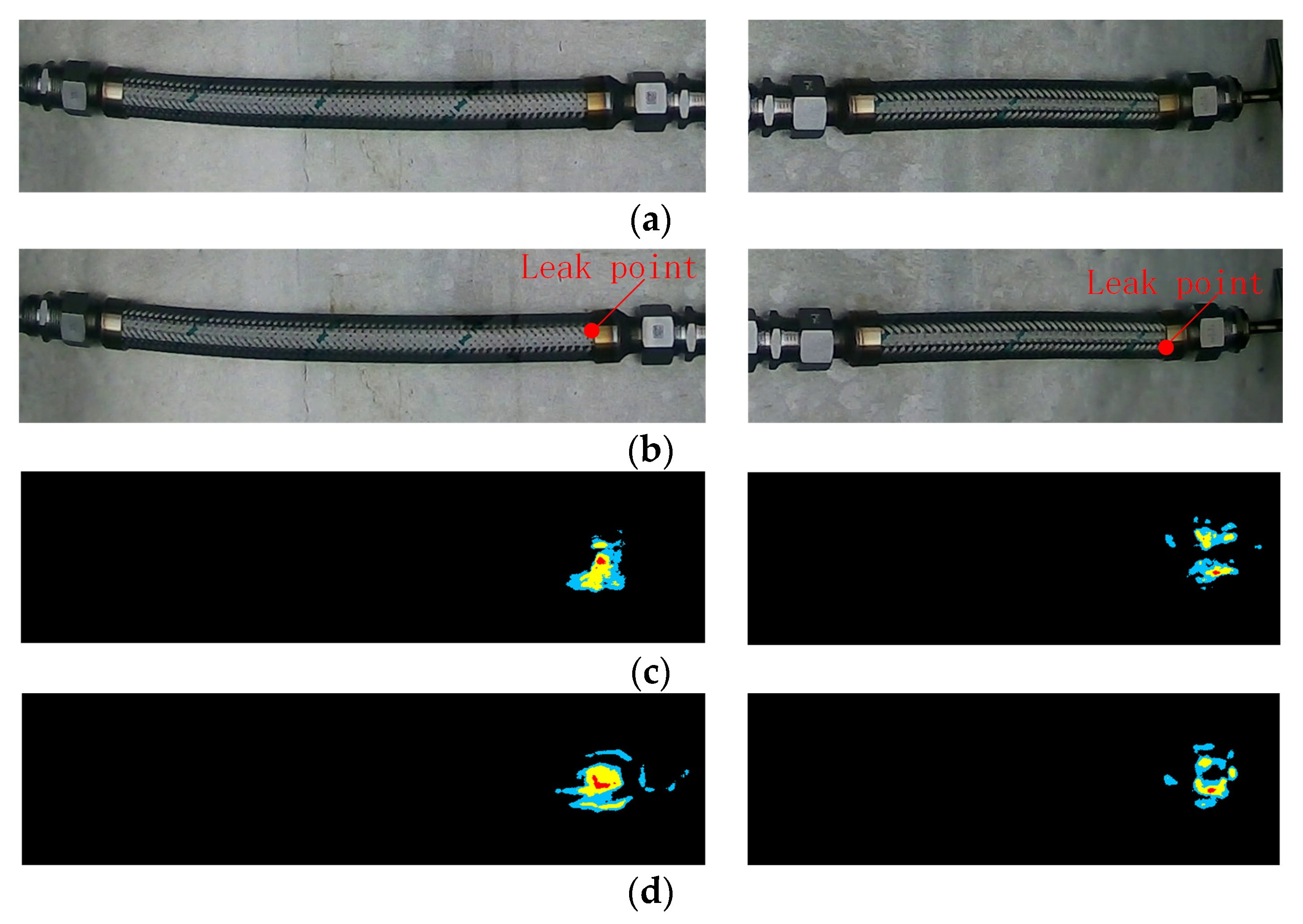

3.2.3. Leakage Calculation

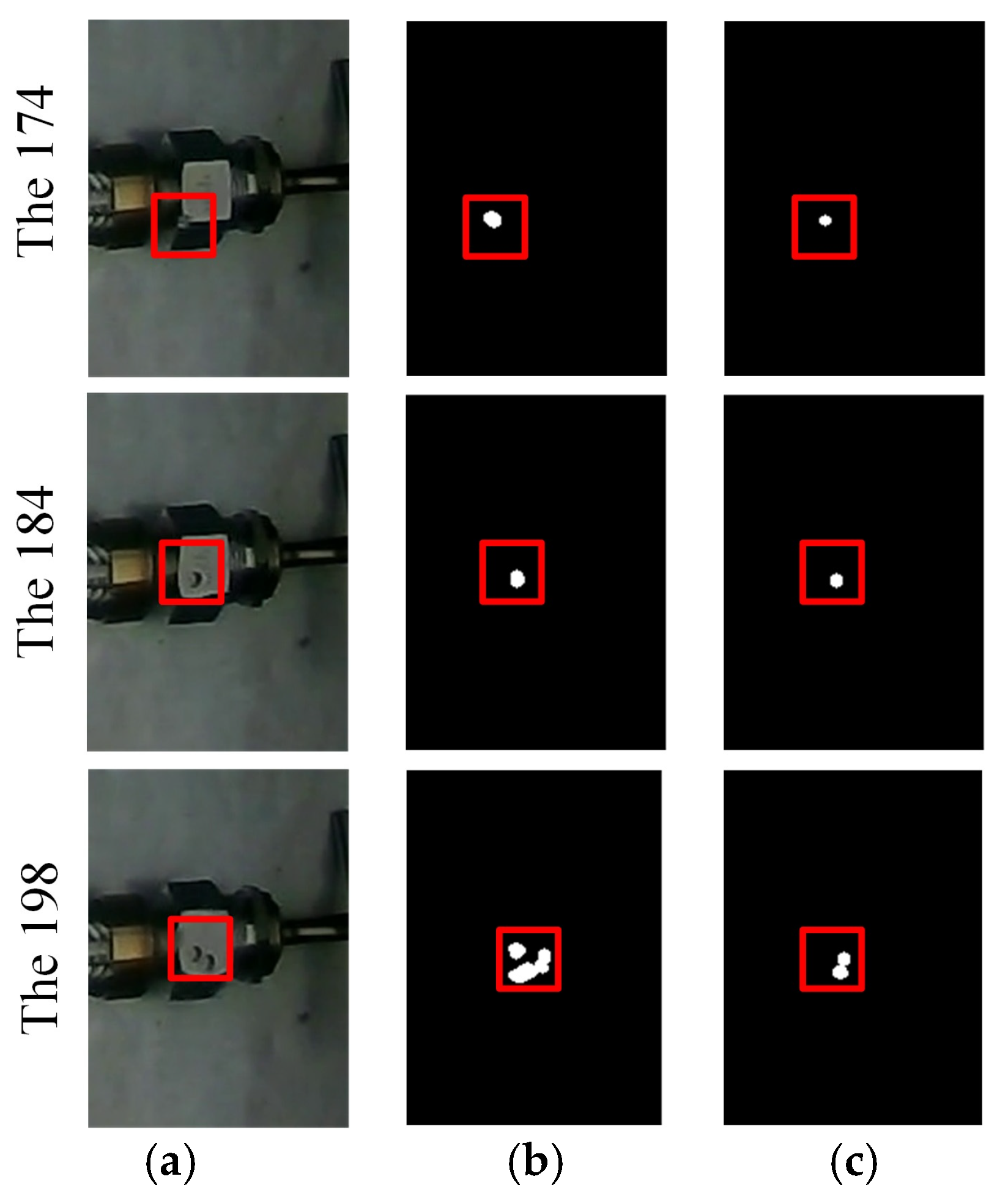

3.2.4. Leak Location

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Li, F.; Zhang, L.; Dong, S. Noise-Pressure interaction model for gas pipeline leakage detection and location. Measurement 2021, 184, 109906.

- Yang, J.; Mostaghimi, H.; Hugo, R. Pipeline leak and volume rate detections through Artificial intelligence and vibration analysis. Measurement 2021, 187, 110368.

- Shi, G.; Qi, W.; Chen, P.; Fan, M.; Li, J. Experimental study of leakage location in a heating pipeline based on the negative pressure wave and wavelet analysis. J. Vib. Shock. 2021, 40, 212–218.

- Juan, L.; Zheng, Q.; Qian, Z.H. A novel Location Algorithm for Pipeline Leakage Based on the Attenuation of Negative Pressure Wave. Process Saf. Environ. Prot. 2019, 123, 309–316.

- Song, Y.; Li, S. Gas leak detection in galvanised steel pipe with internal flow noise using convolutional neural network. Process Saf. Environ. Prot. 2020, 146, 736–744.

- Zhou, M.; Yang, Y.; Xu, Y. Pipeline Leak Detection and Localization Approach Based on Ensemble TL1DCNN. IEEE Access 2021, 9, 47565–47578.

- Lang, X.; Hu, Z.; Li, P. Pipeline Leak Aperture Recognition Based on Wavelet Packet Analysis and a Deep Belief Network with ICR. Wirel. Commun. Mob. Comput. 2018, 2018, 1–8.

- Lyu, Y.; Jamil, M.; Ma, P. An Ultrasonic-Based Detection of Air-Leakage for the Unclosed Components of Aircraft. Aerospace 2021, 8, 55.

- Quy, T.B.; Kim, J.M. Real-Time Leak Detection for a Gas Pipeline Using a k-NN Classifier and Hybrid AE Features. Sensors 2021, 21, 367.

- Li, S.; Wen, Y.; Li, P. Leak Detection and Location for Gas Pipelines Using Acoustic Emission Sensors. In Proceedings of the IEEE Conference on International Ultrasonics Symposium, Dresden, Germany, 7–10 October 2012; pp. 957–960.

- Xue, H.; Wu, D.; Wang, Y. Research on Ultrasonic Leak Detection Methods of Fuel Tank. In Proceedings of the IEEE Conference on International Ultrasonics Symposium (IUS), Taipei, Taiwan, 21–24 October 2015.

- Wang, J.; Tchapmi, L.P.; Ravikumar, A.P. Machine vision for natural gas methane emissions detection using an infrared camera. Appl. Energy 2020, 257, 28.

- Wang, J.; Ji, J.; Ravikumar, A.P. VideoGasNet: Deep Learning for Natural Gas Methane Leak Classification Using an Infrared Camera. Energy 2021, 238, 121516.

- Guan, H.; Xiao, T.; Luo, W. Automatic fault diagnosis algorithm for hot water pipes based on infrared thermal images. Build. Environ. 2022, 218, 109111.

- Yu, X.; Tian, X. A fault detection algorithm for pipeline insulation layer based on immune neural network. Int. J. Press. Vessel. Pip. 2022, 196, 104611.

- Penteado, C.; Olivatti, Y.; Lopes, G.; Rodrigues, P.; Filev, R. Water leaks detection based on thermal images. In Proceedings of the IEEE Conference on International Smart Cities Conference, Kansas City, MO, USA, 16–19 September 2018; p. 8.

- Wang, M.; Hong, H.Y.; Huang, L.K. Infrared Video Based Gas Leak Detection Method Using Modified FAST Features. In Proceedings of the MIPPR 2017 of the Conference, Xiangyang, China, 28–29 October 2017; p. 10611.

- Jadin, M.S.; Ghazali, K.H. Gas Leakage Detection Using Thermal Imaging Technique. In Proceedings of the Computer Modelling and Simulation of the Conference, Cambridge, UK, 26–28 March 2014; pp. 302–306.

- Ren, S.; He, K.; Girshick, R. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- He, K.; Gkioxari, G.; Dollár, P. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. Comput. Vis. Pattern Recognit. 2018, 1804, 02767.

- Liu, W.; Anguelov, D.; Erhan, D. SSD: Single shot multibox detector. In Proceedings of the IEEE International Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Nepal, U.; Eslamiat, H. Comparing YOLOv3, YOLOv4 and YOLOv5 for autonomous landing spot detection in faulty UAVs. Sensors 2022, 22, 464.

- Pan, H.; An, Y.; Lei, M. The Research of Air Tightness Detection Method Based on Semi-Blind Restoration for Sealed Containers. Chem. Pharm. Res. 2015, 7, 1485–1491. Available online: https://www.researchgate.net/publication/306135850-_The_research_of_air_tightness_detection_method_based_on_semi-blind_restoration_for_sealed_containers (accessed on 13 August 2021).

- Fahimipirehgalin, M.; Trunzer, E.; Odenweller, M. Automatic Visual Leakage Detection and Localization from Pipelines in Chemical Process Plants Using Machine Vision Techniques. Engineering 2021, 7, 758–776.

- Saworski, B.; Zielinski, O. Comparison of machine vision based methods for online in situ oil seep detection and quantification. In Proceedings of the OCEANS 2009-EUROPE of the Conference, Bremen, Germany, 11–14 May 2009; pp. 1–4.

- Gao, F.; Lin, J.; Ge, Y. A Mechanism and Method of Leak Detection for Pressure Vessel: Whether, When, and How. IEEE Trans. Instrum. Meas. 2020, 69, 6004–6015.

- Schiller, I.; Koch, R. Improved video segmentation by adaptive combination of depth keying and mixture-of-gaussians. In Proceedings of the 17th Scandinavian Conference on Image Analysis, Ystad, Sweden, 23–27 May 2011; pp. 59–68.

- Van, D.M.; Paquot, O. Background subtraction: Experiments and improvements for ViBe. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 32–37.

- Cucchiara, R.; Grana, C.; Piccardi, M. Detecting moving objects, ghosts, and shadows in video streams. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1337–1342.

- Hofmann, M.; Tiefenbacher, P.; Rigoll, G. Background segmentation with feedback: The pixel-based adaptive segmenter. In Proceedings of the Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; p. 6.

- Zhang, C.; Chen, S.C.; Shyu, M.L. Adaptive background learning for vehicle detection and spatiotemporal tracking. In Proceedings of the Joint Conference of the Fourth International Conference, Singapore, 15–18 December 2003; pp. 797–801.

- Zhou, X.; Liu, X.; Jiang, A. Improving video segmentation by fusing depth cues and the visual background extractor (ViBe) algorithm. Sensors 2017, 17, 1177.

- Qin, G.; Yang, S.; Li, S. A Vehicle Path Tracking System With Cooperative Recognition of License Plates and Traffic Network Big Data. IEEE Trans. Netw. Sci. Eng. 2022, 9, 1033–1043.

- Lyu, C.; Liu, Y.; Wang, X.; Chen, Y.; Jin, J.; Yang, J. Visual Early Leakage Detection for Industrial Surveillance Environments. IEEE Trans. Ind. Inform. 2022, 18, 3670–3680.

- Dai, Y.; Yang, L. Detecting moving object from dynamic background video sequences via simulating heat conduction. J. Vis. Commun. Image Represent. 2022, 83, 103439.

| Algorithm | Precision | Recall | F-Measure | PWC |
|---|---|---|---|---|
| ViBe | 72.84% | 87.87% | 79.64% | 0.113% |
| GMM | 68.33% | 87.88% | 76.88% | 0.096% |
| DPPratiMediod [30] | 74.53% | 79.17% | 76.78% | 0.111% |
| PBAS [31] | 61.89% | 97.65% | 75.77% | 0.165% |
| KNN | 74.53% | 79.17% | 76.78% | 0.111% |
| ViBeImp [32] | 86.17% | 78.54% | 82.18% | 0.084% |
| SigmaDelta | 56.69% | 98.59% | 71.28% | 0.201% |
| Adaptive Background Learning | 51.74% | 97.30% | 67.56% | 0.246% |
| Our method | 80.88% | 87.31% | 83.97% | 0.081% |
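The four columns in the table above match the pixel-level indices commonly used to score background-subtraction output. The following is a minimal sketch, assuming the standard definitions of precision, recall, F-measure, and percentage of wrong classifications (PWC); the formulas actually used in Section 3.1 are not reproduced in this text, and the function names and the example pixel counts are hypothetical.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of pixels labelled foreground that are truly foreground."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Fraction of true foreground pixels that were detected."""
    return tp / (tp + fn)


def f_measure(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


def pwc(tp: int, fp: int, fn: int, tn: int) -> float:
    """Percentage of wrong classifications over all pixels."""
    return 100.0 * (fp + fn) / (tp + fp + fn + tn)


# Hypothetical pixel counts, for illustration only (not from the paper).
tp, fp, fn, tn = 80, 20, 10, 9890
print(f"precision = {precision(tp, fp):.3f}")
print(f"recall    = {recall(tp, fn):.3f}")
print(f"PWC       = {pwc(tp, fp, fn, tn):.3f}%")

# Cross-check with the tabulated precision and recall of the "Our method" row.
f_ours = f_measure(0.8088, 0.8731)
print(f"F-measure = {100 * f_ours:.2f}%")  # prints "F-measure = 83.97%"
```

Because the F-measure is a harmonic mean, a method with very high recall but low precision (SigmaDelta in the table above, for instance) scores lower than one whose precision and recall are balanced.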

| Algorithm | Precision | Recall | F-Measure | PWC |
|---|---|---|---|---|
| ViBe | 81.64% | 66.01% | 71.12% | 0.08% |
| GMM | 68.49% | 66.48% | 60.58% | 0.12% |
| DPPratiMediod | 73.57% | 85.10% | 77.99% | 0.08% |
| PBAS | 62.64% | 93.78% | 73.21% | 0.12% |
| KNN | 66.48% | 94.64% | 76.85% | 0.010% |
| ViBeImp | 61.14% | 66.75% | 61.46% | 0.11% |
| SigmaDelta | 56.99% | 97.08% | 70.33% | 0.15% |
| Adaptive Background Learning | 59.22% | 94.94% | 71.43% | 0.14% |
| Our method | 85.47% | 77.18% | 81.11% | 0.05% |

| Pressure (MPa) | Leak 1 Pressure Drop (Pa) | Leak 2 Pressure Drop (Pa) | Leak 1 Leakage Rate (mL/min) | Leak 2 Leakage Rate (mL/min) |
|---|---|---|---|---|
| 0.2 | 4652 | 1233 | 0.235 | 0.092 |
| 0.3 | 8259 | 2259 | 0.416 | 0.168 |
| 0.4 | 13,654 | 5322 | 0.688 | 0.397 |

| Pressure (MPa) | No Leak Pressure Drop (Pa) | Combined Pressure Drop (Pa) | No Leak Leakage Rate (mL/min) | Combined Leakage Rate (mL/min) |
|---|---|---|---|---|
| 0.2 | 2 | 2705 | 0.0007 | 0.338 |
| 0.3 | 3 | 4653 | 0.0011 | 0.581 |
| 0.4 | 6 | 8934 | 0.0021 | 1.116 |

| Algorithm | Leak 1 Leakage Rate (mL/min) | Leak 2 Leakage Rate (mL/min) | Leak 1 Error | Leak 2 Error |
|---|---|---|---|---|
| ViBe | 0.755 | 0.361 | 9.71% | 9.01% |
| GMM | 0.711 | 0.405 | 3.35% | 2.00% |
| DPPratiMediod | 0.635 | 0.481 | 7.76% | 21.25% |
| PBAS | 0.709 | 0.407 | 3.01% | 2.58% |
| KNN | 0.701 | 0.415 | 1.89% | 4.53% |
| ViBeImp | 0.757 | 0.359 | 9.98% | 9.48% |
| SigmaDelta | 0.699 | 0.417 | 1.58% | 5.07% |
| Adaptive Background Learning | 0.722 | 0.394 | 5.00% | 0.85% |
| Proposed Method | 0.685 | 0.431 | 0.37% | 3.45% |

| Algorithm | Leak 1 Location | Leak 2 Location | Leak 1 Error | Leak 2 Error |
|---|---|---|---|---|
| ViBe | 91.97% | 86.22% | 2.44% | 0.74% |
| GMM | 80.12% | 86.48% | 9.41% | 1% |
| DPPratiMediod | 81.33% | 86.88% | 8.2% | 1.53% |
| PBAS | 91.16% | 86.35% | 1.63% | 0.87% |
| KNN | 85.54% | 86.48% | 3.99% | 1% |
| ViBeImp | 91.16% | 87.14% | 1.63% | 1.66% |
| SigmaDelta | 86.55% | 86.35% | 2.98% | 0.87% |
| Adaptive Background Learning | 85.54% | 86.35% | 3.99% | 0.87% |
| Proposed Method | 90.36% | 85.95% | 0.83% | 0.47% |

| Pressure (MPa) | Number | YOLOv5 Detection Time (ms/image) | OMD-ViBe Detection Time (ms/image) | YOLOv5 Recognition Accuracy |
|---|---|---|---|---|
| 0.2 | 1 | 32.5 | 6.2 | 98.7% |
| 0.2 | 2 | 32.8 | 5.8 | 98.4% |
| 0.2 | 3 | 34.1 | 5.9 | 99.2% |
| 0.3 | 1 | 33.2 | 6.0 | 98.5% |
| 0.3 | 2 | 33.4 | 6.0 | 98.4% |
| 0.3 | 3 | 31.7 | 5.9 | 98.2% |
| 0.4 | 1 | 32.4 | 6.0 | 98.7% |
| 0.4 | 2 | 30.6 | 6.1 | 99.1% |
| 0.4 | 3 | 33.8 | 6.1 | 98.6% |
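The per-image times in the table above can be summarised as a mean latency and an equivalent frame rate for each stage. The sketch below only restates the tabulated values; the variable and function names are hypothetical, and it treats the two stages separately because the text here does not state whether YOLOv5 runs on every frame or only once per test to locate the regions of interest.

```python
# Per-image detection times (ms) copied from the table above,
# in row order: 0.2 MPa (runs 1-3), 0.3 MPa (runs 1-3), 0.4 MPa (runs 1-3).
yolo_ms = [32.5, 32.8, 34.1, 33.2, 33.4, 31.7, 32.4, 30.6, 33.8]
vibe_ms = [6.2, 5.8, 5.9, 6.0, 6.0, 5.9, 6.0, 6.1, 6.1]


def mean(values: list[float]) -> float:
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


def frames_per_second(ms_per_image: float) -> float:
    """Convert a per-image latency in milliseconds to images per second."""
    return 1000.0 / ms_per_image


for name, times in (("YOLOv5", yolo_ms), ("OMD-ViBe", vibe_ms)):
    avg = mean(times)
    print(f"{name}: mean {avg:.1f} ms/image, about {frames_per_second(avg):.0f} images/s")
```

Under these numbers the YOLOv5 stage averages roughly 33 ms per image and the OMD-ViBe stage roughly 6 ms per image.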

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, R.; Wu, Z.; Zhang, D.; Chen, J. Multizone Leak Detection Method for Metal Hose Based on YOLOv5 and OMD-ViBe Algorithm. Appl. Sci. 2023, 13, 5269. https://doi.org/10.3390/app13095269
