GAN Data Augmentation Methods in Rock Classification

Abstract

1. Introduction

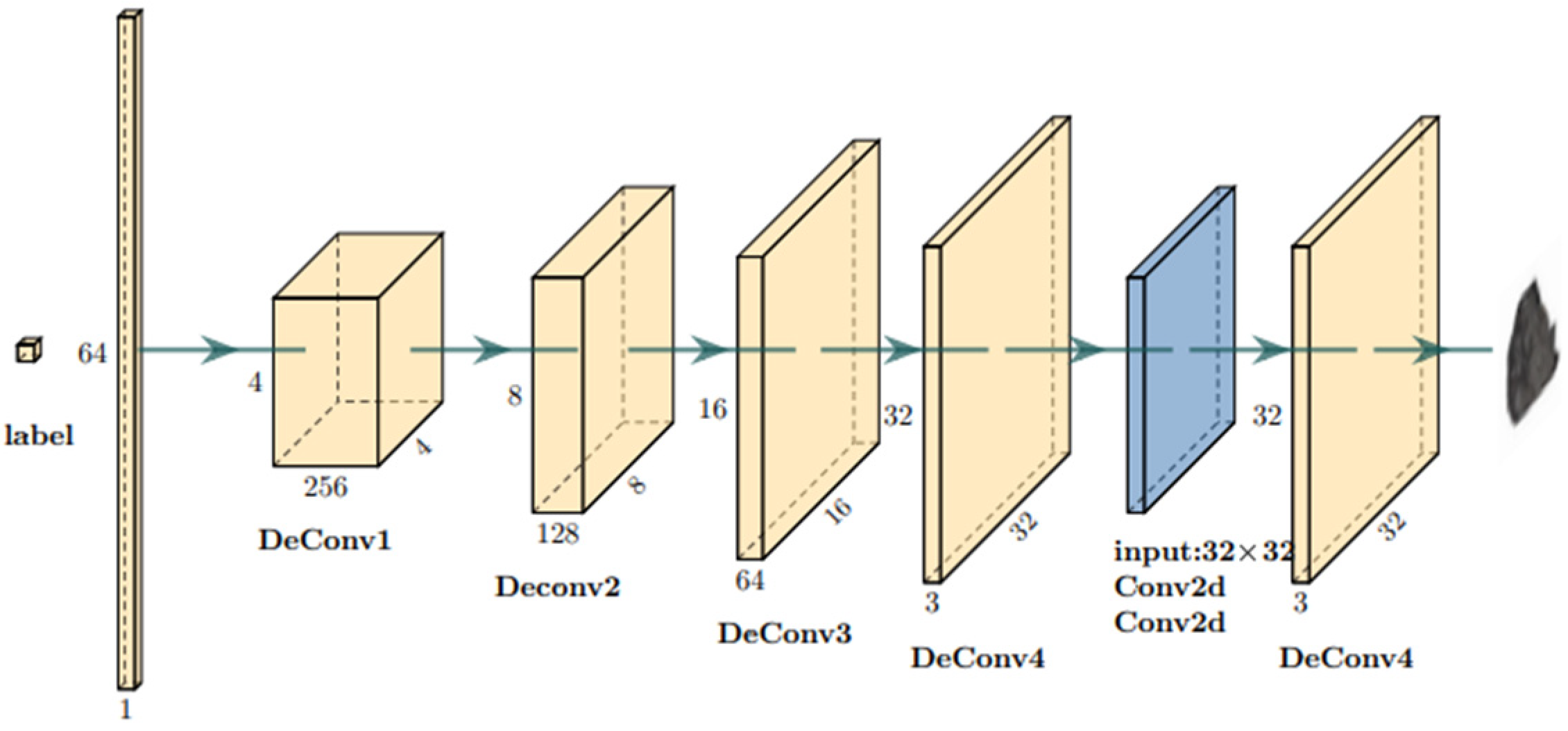

2. Generative Adversarial Networks

3. CRDCGAN Algorithm

3.1. Loss Function Improvements

3.2. Adding Conditional Information

3.3. Adding a Residual Module

4. Experimental Procedure and Analysis

4.1. Evaluation Metrics
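The per-class precision, recall and F1 score reported in the result tables can all be derived from a confusion matrix. For class k, the true positives are the diagonal entry, the false positives are the rest of column k, and the false negatives are the rest of row k. Below is a minimal pure-Python sketch. It assumes rows are true classes and columns are predicted classes. The 3-class matrix is hypothetical and is not data from the experiments.

```python
from typing import List, Tuple


def per_class_metrics(cm: List[List[int]]) -> List[Tuple[float, float, float]]:
    """Return (precision, recall, F1) for each class.

    Assumed convention: cm[i][j] counts samples whose true class is i
    and whose predicted class is j.
    """
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        # Predicted as k but actually another class.
        fp = sum(cm[i][k] for i in range(n)) - tp
        # Actually k but predicted as another class.
        fn = sum(cm[k]) - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        # F1 is the harmonic mean of precision and recall.
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append((precision, recall, f1))
    return results


# Hypothetical confusion matrix (rows: true class, columns: predicted class).
cm = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 3, 7],
]
for p, r, f in per_class_metrics(cm):
    print(f"precision={p:.4f} recall={r:.4f} f1={f:.4f}")
```

Computing the metrics from the counts, rather than storing them, keeps each table row traceable to the underlying predictions.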

4.2. Experimental Environment Configuration

4.3. Experimental Procedure

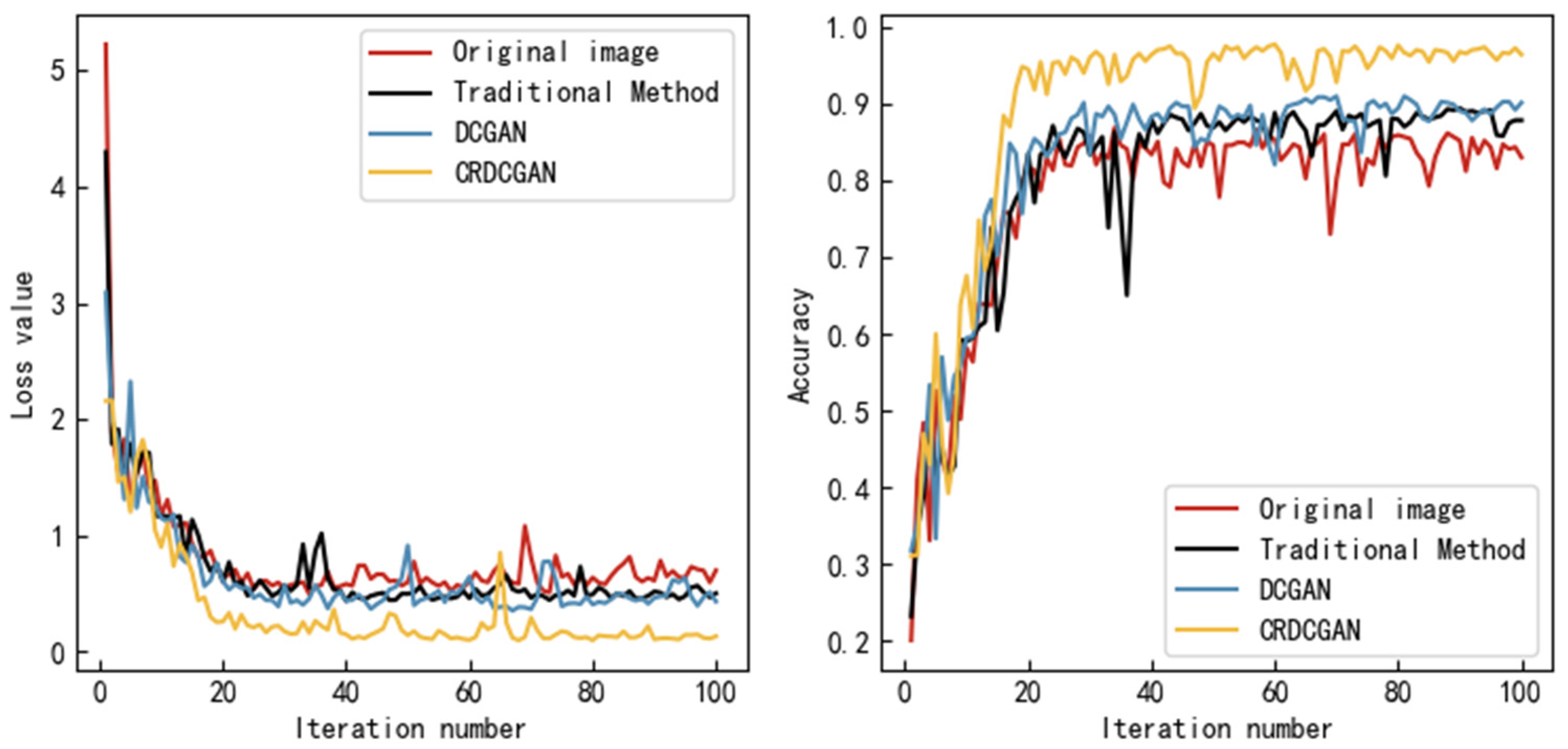

4.4. Experimental Procedure and Analysis of Results

4.5. Experimental Comparison of Different Data Augmentation Methods on Public Datasets

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Type | Precision | Recall | F1 Score | Number |
|---|---|---|---|---|
| Basalt | 0.8438 | 0.7714 | 0.8060 | 27 |
| Coal | 0.9061 | 0.9820 | 0.9425 | 164 |
| Granite | 0.9000 | 0.7941 | 0.8437 | 27 |
| Limestone | 0.9630 | 0.7123 | 0.8189 | 104 |
| Marble | 0.6632 | 0.8514 | 0.7456 | 126 |
| Quartzite | 0.7753 | 0.7797 | 0.7775 | 138 |
| Sandstone | 0.9273 | 0.8361 | 0.8793 | 102 |

| Type | Precision | Recall | F1 Score | Number |
|---|---|---|---|---|
| Basalt | 0.8710 | 0.7714 | 0.8182 | 27 |
| Coal | 0.9647 | 0.9820 | 0.9733 | 164 |
| Granite | 1.0000 | 0.8235 | 0.9032 | 28 |
| Limestone | 0.9348 | 0.8836 | 0.9085 | 129 |
| Marble | 0.7922 | 0.8243 | 0.8079 | 122 |
| Quartzite | 0.8146 | 0.8192 | 0.8169 | 145 |
| Sandstone | 0.8692 | 0.9262 | 0.8968 | 113 |

| Type | Precision | Recall | F1 Score | Number |
|---|---|---|---|---|
| Basalt | 0.8857 | 0.8857 | 0.8857 | 31 |
| Coal | 0.9375 | 0.9880 | 0.9621 | 165 |
| Granite | 0.8333 | 0.8824 | 0.8571 | 30 |
| Limestone | 0.9007 | 0.9315 | 0.9158 | 136 |
| Marble | 0.8333 | 0.8446 | 0.8389 | 125 |
| Quartzite | 0.9432 | 0.8305 | 0.8833 | 147 |
| Sandstone | 0.9040 | 0.9262 | 0.9150 | 113 |

| Type | Precision | Recall | F1 Score | Number |
|---|---|---|---|---|
| Basalt | 1.0000 | 0.9429 | 0.9706 | 33 |
| Coal | 0.9880 | 0.9820 | 0.9850 | 164 |
| Granite | 1.0000 | 0.9411 | 0.9697 | 32 |
| Limestone | 0.9589 | 0.9589 | 0.9589 | 140 |
| Marble | 0.9063 | 0.9797 | 0.9416 | 145 |
| Quartzite | 0.9881 | 0.9379 | 0.9623 | 166 |
| Sandstone | 0.9597 | 0.9754 | 0.9675 | 119 |
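The F1 score is the harmonic mean of precision and recall, F1 = 2PR/(P + R). This means each table row can be checked for internal consistency. The sketch below recomputes F1 for a few rows copied from the first and last tables. The tolerance of 1e-4 is an assumption that reflects the four-decimal rounding of the reported values.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from rows of the result tables.
rows = {
    "Basalt (first table)": (0.8438, 0.7714, 0.8060),
    "Limestone (first table)": (0.9630, 0.7123, 0.8189),
    "Coal (last table)": (0.9880, 0.9820, 0.9850),
    "Marble (last table)": (0.9063, 0.9797, 0.9416),
}

for name, (p, r, reported) in rows.items():
    computed = f1_score(p, r)
    # Reported values are rounded to four decimals, so allow a small tolerance.
    status = "ok" if abs(computed - reported) < 1e-4 else "mismatch"
    print(f"{name}: computed={computed:.4f} reported={reported:.4f} {status}")
```

The harmonic mean is used rather than the arithmetic mean because it stays low whenever either precision or recall is low. For example, the first table's Marble row has a precision of 0.6632 and an F1 of only 0.7456, even though its recall is 0.8514.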

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, G.; Cai, Z.; Wang, X.; Dang, X. GAN Data Augmentation Methods in Rock Classification. Appl. Sci. 2023, 13, 5316. https://doi.org/10.3390/app13095316
