Intelligent Space Object Detection Driven by Data from Space Objects

Abstract

:1. Introduction

- A space target component detection dataset of 17,942 images was created;

- An image fusion method was adopted to simulate the real scenarios in space to the maximum extent;

- Semi-automatic labeling was performed for dataset annotation, which reduced the cost of manual annotation significantly;

- The YOLOv7-R model algorithm was proposed, whose better performance was verified.

2. Dataset Construction and Labeling

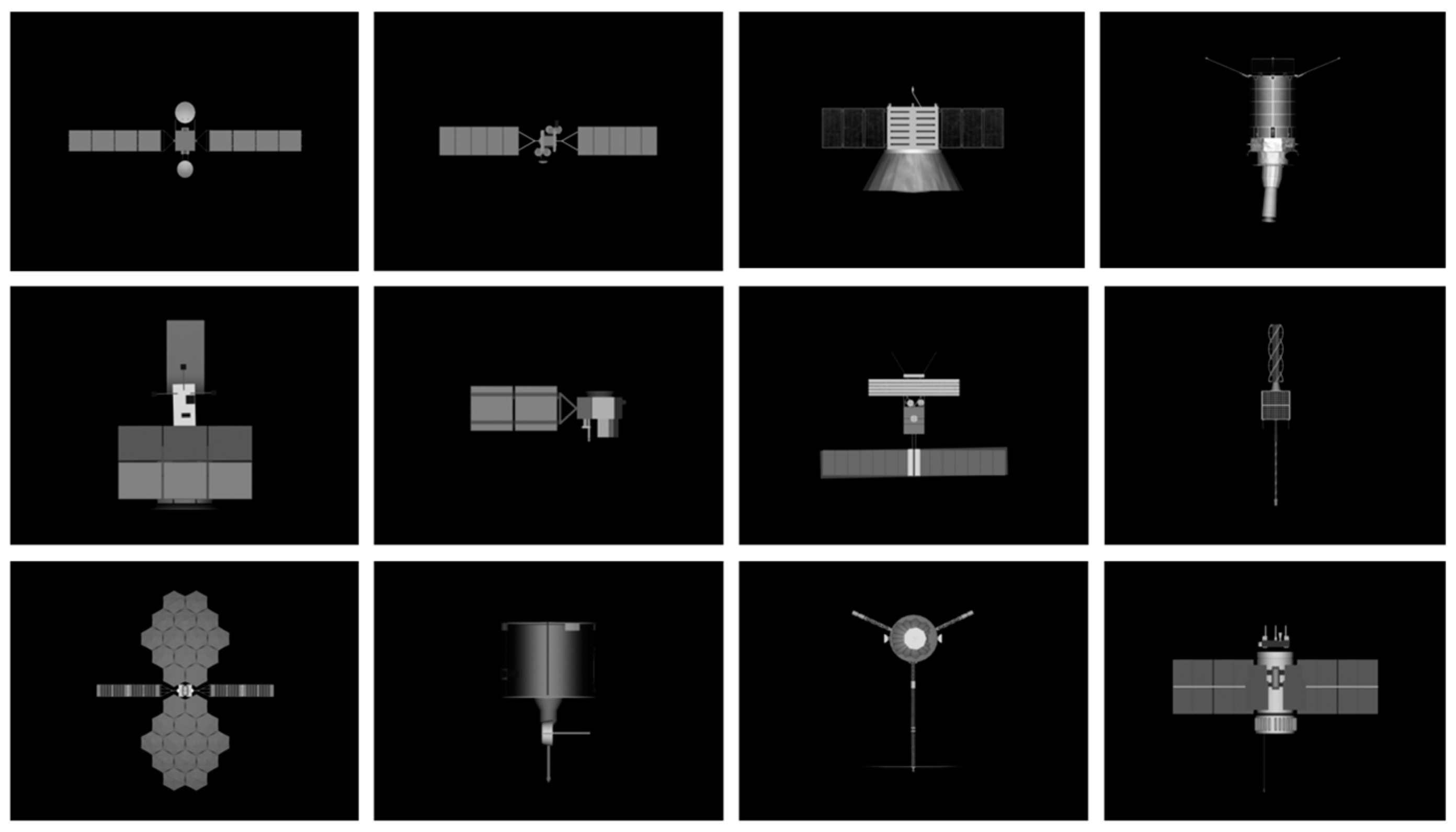

2.1. Dataset Construction

- Camera Setup: In space-based visible light imaging detection of space targets, there is a long distance between the space target and the camera, and the distance of observation far exceeds the size of the space observation target. Therefore, orthographic projection is performed in the process of simulation. Also, a camera is used for alignment with the satellite, with a field of view set to 45°. Thus, both are aligned with the z-axis. This allows the model to be positioned at the center of the camera in the initial state, which makes all parts visible.

- Light Source Setup: In the space environment, sunlight is almost comparable to parallel light. Hence, a parallel white light source is introduced at the initial position of the camera. Without attenuation, it has an intensity multiplier coefficient of 0.906. By adjusting this multiplier coefficient, it is achievable to obtain the simulated images with varied brightness.

- Adjusting Positions: There are different viewpoints and lighting conditions because of the changes in the relative positions between the camera, light source, and satellite model. To capture the simulated images from all viewpoints, the position of light source relative to the camera is kept unchanged and the model remains stationary, while the camera is moved along the Earth’s spherical surface centered on the satellite model. By means of latitude and longitude representation as a geographic method, sampling is conducted along the 0° to ±90° latitude line, which leads to 210 sampling viewpoints.

- Model Rendering Output: The output image resolution is set to 640 × 480 pixels, and the image attribute is set to 256-bit pixels, with the contour information of the target retained effectively.

2.2. Data Enhancement

2.3. Dataset Labeling

3. Space Object Detection Algorithm Model

3.1. Introduction to YOLOv7

3.2. ELAN

3.3. RepPoints and ELAN-R

4. Experimental Results and Analysis

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sharma, S.; D’Amico, S. Neural Network-Based Pose Estimation for Noncooperative Spacecraft Rendezvous. IEEE Trans. Aerosp. Electron. Syst. 2020, 56, 4638–4658. [Google Scholar] [CrossRef]

- Phisannupawong, T.; Kamsing, P.; Torteeka, P.; Channumsin, S.; Sawangwit, U.; Hematulin, W.; Jarawan, T.; Somjit, T.; Yooyen, S.; Delahaye, D.; et al. Vision-Based Spacecraft Pose Estimation via a Deep Convolutional Neural Network for Noncooperative Docking Operations. Aerospace 2020, 7, 126. [Google Scholar] [CrossRef]

- Hoang, D.A.; Chen, B.; Chin, T.-J. A Spacecraft Dataset for Detection, Segmentation and Parts Recognition. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, TN, USA, 19–26 June 2021. [Google Scholar]

- Sato, T.; Wakayama, T.; Tanaka, T.; Ikeda, K.-I.; Kimura, I. Shape of Space Debris as Estimated from Radar Cross Section Variations. J. Spacecr. Rocket. 1994, 31, 665–670. [Google Scholar] [CrossRef]

- Rossi, A. The Earth Orbiting Space Debris. Serbian Astron. J. 2005, 170, 1–12. [Google Scholar] [CrossRef]

- Linares, R.; Furfaro, R. Space Object classification using deep Convolutional Neural Networks. In Proceedings of the 2016 19th International Conference on Information Fusion (FUSION), Heidelberg, Germany, 5–8 July 2016. [Google Scholar]

- Zhang, X.; Xiang, J.; Zhang, Y. Space object detection in video satellite images using motion information. Int. J. Aerosp. Eng. 2017, 2017, 1024529. [Google Scholar]

- Yan, Z.; Song, X. Spacecraft Detection Based on Deep Convolutional Neural Network. In Proceedings of the 2018 IEEE 3rd International Conference on Signal and Image Processing (ICSIP), Shenzhen, China, 13–15 July 2018. [Google Scholar]

- Yang, X.; Wu, T.; Zhang, L.; Yang, D.; Wang, N.; Song, B.; Gao, X. CNN with spatio-temporal information for fast suspicious object detection and recognition in THz security images. Signal Process. 2019, 160, 202–214. [Google Scholar] [CrossRef]

- Wu, T.; Yang, X.; Song, B.; Wang, N.; Gao, X.; Kuang, L.; Nan, X.; Chen, Y.; Yang, D. T-SCNN: A Two-Stage Convolutional Neural Network for Space Target Recognition. In Proceedings of the 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, July 28–August 2 2019. [Google Scholar]

- Yang, X.; Wu, T.; Wang, N.; Huang, Y.; Song, B.; Gao, X. HCNN-PSI: A hybrid CNN with partial semantic information for space target recognition. Pattern Recognit. 2020, 108, 107531. [Google Scholar] [CrossRef]

- Musallam, M.A.; Al Ismaeil, K.; Oyedotun, O.; Perez, M.D.; Poucet, M.; Aouada, D. SPARK: Spacecraft recognition leveraging knowledge of space environment. arXiv 2021, arXiv:2104.05978. [Google Scholar]

- Song, J.; Rondao, D.; Aouf, N. Deep learning-based spacecraft relative navigation methods: A survey. Acta Astronaut. 2022, 191, 22–40. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 60, 84–90. [Google Scholar] [CrossRef]

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934, 10934. [Google Scholar]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430, 08430. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023. [Google Scholar]

- Xie, R.; Zlatanova, S.; Lee, J.; Aleksandrov, M. A Motion-Based Conceptual Space Model to Support 3D Evacuation Simulation in Indoor Environments. ISPRS Int. J. Geo-Inf. 2023, 12, 494. [Google Scholar] [CrossRef]

- Ali, H.A.H.; Seytnazarov, S. Human Walking Direction Detection Using Wireless Signals, Machine and Deep Learning Algorithms. Sensors 2023, 23, 9726. [Google Scholar] [CrossRef] [PubMed]

- Zheng, X.; Feng, R.; Fan, J.; Han, W.; Yu, S.; Chen, J. MSISR-STF: Spatiotemporal Fusion via Multilevel Single-Image Super-Resolution. Remote Sens. 2023, 15, 5675. [Google Scholar] [CrossRef]

- Eker, A.G.; Pehlivanoğlu, M.K.; İnce, İ.; Duru, N. Deep Learning and Transfer Learning Based Brain Tumor Segmentation. In Proceedings of the 2023 8th International Conference on Computer Science and Engineering (UBMK), Burdur, Turkiye, 13–15 September 2023. [Google Scholar]

- Zhang, H.; Liu, Z.; Jiang, Z. BUAA-SID1. 0 space object image dataset. Spacecr. Recovery Remote Sens. 2010, 31, 65–71. [Google Scholar]

- Shen, X.; Xu, B.; Shen, H. Indoor Localization System Based on RSSI-APIT Algorithm. Sensors 2023, 23, 9620. [Google Scholar] [CrossRef]

- Wu, X.; Wang, C.; Tian, Z.; Huang, X.; Wang, Q. Research on Belt Deviation Fault Detection Technology of Belt Conveyors Based on Machine Vision. Machines 2023, 11, 1039. [Google Scholar] [CrossRef]

- Mai, H.T.; Ngo, D.Q.; Nguyen, H.P.T.; La, D.D. Fabrication of a Reflective Optical Imaging Device for Early Detection of Breast Cancer. Bioengineering 2023, 10, 1272. [Google Scholar] [CrossRef] [PubMed]

- Vazquez Alejos, A.; Dawood, M. Multipath Detection and Mitigation of Random Noise Signals Propagated through Naturally Lossy Dispersive Media for Radar Applications. Sensors 2023, 23, 9447. [Google Scholar] [CrossRef] [PubMed]

- Zhang, M.; Zhu, T.; Nie, M.; Liu, Z. More Reliable Neighborhood Contrastive Learning for Novel Class Discovery in Sensor-Based Human Activity Recognition. Sensors 2023, 23, 9529. [Google Scholar] [CrossRef] [PubMed]

- Shaheed, K.; Qureshi, I.; Abbas, F.; Jabbar, S.; Abbas, Q.; Ahmad, H.; Sajid, M.Z. EfficientRMT-Net—An Efficient ResNet-50 and Vision Transformers Approach for Classifying Potato Plant Leaf Diseases. Sensors 2023, 23, 9516. [Google Scholar] [CrossRef]

- Altwijri, O.; Alanazi, R.; Aleid, A.; Alhussaini, K.; Aloqalaa, Z.; Almijalli, M.; Saad, A. Novel Deep-Learning Approach for Automatic Diagnosis of Alzheimer’s Disease from MRI. Appl. Sci. 2023, 13, 13051. [Google Scholar] [CrossRef]

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable ConvNets V2: More Deformable, Better Results. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–19 June 2019. [Google Scholar]

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable Convolutional Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar]

- Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. RepPoints: Point Set Representation for Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar]

- Doi, J.; Yamanaka, M. Discrete finger and palmar feature extraction for personal authentication. IEEE Trans. Instrum. Meas. 2005, 54, 2213–2219. [Google Scholar] [CrossRef]

- Pan, X.; Zhu, S.; He, Y.; Chen, X.; Li, J.; Zhang, A. Improved Self-Adaption Matched Filter for Moving Target Detection. In Proceedings of the 2019 IEEE International Conference on Computational Electromagnetics (ICCEM), Shanghai, China, 20–22 March 2019. [Google Scholar]

- Zhang, Y.; Han, J.H.; Kwon, Y.W.; Moon, Y.S. A New Architecture of Feature Pyramid Network for Object Detection. In Proceedings of the 2020 IEEE 6th International Conference on Computer and Communications (ICCC), Chengdu, China, 11–14 December 2020. [Google Scholar]

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI conference on artificial intelligence, New York, NY, USA, 7–12 February 2020. [Google Scholar]

| Solar Panels on Satellites | Body of the Satellite | Circular Antenna on Satellites | Optical Payload on Satellites | Antenna Array on Satellites |

|---|---|---|---|---|

| panel | body | antenna | optical-load | antenna-rod |

| Panel | Body | Antenna | Optical-Load | Antenna-Rod | Accuracy | |

|---|---|---|---|---|---|---|

| YOLOv3 | 0.915 | 0.931 | 0.934 | 0.892 | 0.905 | 0.927 |

| YOLOv4 | 0.979 | 0.98 | 0.965 | 0.936 | 0.952 | 0.963 |

| YOLOv5l | 0.941 | 0.939 | 0.933 | 0.892 | 0.963 | 0.934 |

| YOLOv6l | 0.975 | 0.975 | 0.957 | 0.945 | 0.957 | 0.962 |

| YOLOv8l | 0.98 | 0.982 | 0.968 | 0.955 | 0.95 | 0.969 |

| YOLOR | 0.979 | 0.981 | 0.96 | 0.948 | 0.96 | 0.966 |

| YOLOv7 | 0.978 | 0.983 | 0.962 | 0.938 | 0.959 | 0.964 |

| YOLOv7-R | 0.986 | 0.99 | 0.972 | 0.974 | 0.993 | 0.983 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tang, Q.; Li, X.; Xie, M.; Zhen, J. Intelligent Space Object Detection Driven by Data from Space Objects. Appl. Sci. 2024, 14, 333. https://doi.org/10.3390/app14010333

Tang Q, Li X, Xie M, Zhen J. Intelligent Space Object Detection Driven by Data from Space Objects. Applied Sciences. 2024; 14(1):333. https://doi.org/10.3390/app14010333

Chicago/Turabian StyleTang, Qiang, Xiangwei Li, Meilin Xie, and Jialiang Zhen. 2024. "Intelligent Space Object Detection Driven by Data from Space Objects" Applied Sciences 14, no. 1: 333. https://doi.org/10.3390/app14010333