Abstract

The artificial intelligence (AI) industry is increasingly integrating with diverse sectors such as smart logistics, FinTech, entertainment, and cloud computing. This expansion has led to the coexistence of heterogeneous applications within multi-tenant systems, presenting significant scheduling challenges. This paper addresses these challenges by exploring the scheduling of various machine learning workloads in large-scale, multi-tenant cloud systems that utilize heterogeneous GPUs. Traditional scheduling strategies often struggle to achieve satisfactory results due to low GPU utilization in these complex environments. To address this issue, we propose a novel scheduling approach that employs a genetic optimization technique, implemented within a process-oriented discrete-event simulation framework, to effectively orchestrate various machine learning tasks. We evaluate our approach using workload traces from Alibaba’s MLaaS cluster with over 6000 heterogeneous GPUs. The results show that our scheduling improves GPU utilization by 12.8% compared to Round-Robin scheduling, demonstrating the effectiveness of the solution in optimizing cloud-based GPU scheduling.

1. Introduction

With the rapid advancement in artificial intelligence (AI) industries and the explosion of large datasets, heterogeneous GPUs within MLaaS (Machine Learning as a Service) frameworks are being swiftly deployed to execute various AI applications [1]. These extensive GPU clusters are crucial for handling a huge number of machine learning (ML) tasks [2,3], such as training and inference, significantly speeding up processes across numerous AI applications [4,5].

However, the diversity of ML tasks, coupled with varied GPU configurations, poses significant challenges in resource management and scheduling. Task scheduling has been widely studied in a variety of systems, ranging from low-end mobile devices to high-end industrial systems [6,7,8]. Yet ML workloads have significantly different resource usage patterns from traditional applications, making it difficult to manage system resources efficiently [9,10,11]. In particular, GPUs in large-scale production clusters often exhibit utilization below 50% when executing ML workloads [12,13,14]. This underutilization of advanced GPU features is primarily due to the increasing number of small tasks, such as inference, and this trend is expected to continue in the near future [15].

In response to these challenges, the Multi-Instance GPU (MIG) feature within NVIDIA’s Ampere architecture has been designed to enable GPU sharing across tasks [16]. Nevertheless, this solution does not ensure high GPU utilization, as fragmentation issues often arise when GPUs are only partially allocated. This fragmentation is a recurring problem in shared-GPU environments.

Traditional solutions such as packing strategies have been utilized to mitigate fragmentation issues [17]. Conceptually, task scheduling in GPU environments can be likened to a multi-dimensional bin-packing problem, where tasks and nodes correspond to spheres and boxes and the goal is to minimize the number of boxes used. However, traditional bin-packing methods often fall short in environments with multiple GPUs, due to intrinsic differences in the scheduling needs of GPU sharing.

To better tackle these issues, this paper explores two bin-packing models tailored for GPU scheduling. The first model treats the multiple GPUs of a machine as a single resource by aggregating their capacities. Although theoretically appealing, this model falls short in practical scenarios because it fails to consider fragmentation within individual GPUs. The second model treats each GPU as a separate resource dimension. However, GPUs, unlike CPUs and memory, are interdependent, with tasks on one GPU often relying on others, which renders traditional bin-packing strategies insufficient for real-world GPU scheduling challenges.

To address these complexities, we propose a novel scheduling method that incorporates a genetic optimization technique within the SimPy 4.1.1 discrete-event simulation framework [18]. This approach specifically targets GPU underutilization caused by fragmentation and aims to enhance overall GPU efficiency. Through large-scale simulations driven by traces from Alibaba’s PAI (Platform for AI) cluster, which includes over 1800 nodes and more than 6000 GPUs [19,20], we demonstrate that our scheduling policy improves GPU utilization by 12.8% compared to Round-Robin scheduling and also performs better than the established bin-packing-based scheduling method, Tetris [21]. This substantial improvement highlights the effectiveness of our approach in optimizing resource allocation within cloud-based ML environments.

The remainder of this paper is organized as follows. Section 2 briefly discusses the problem definition and the basic model for our scheduling. Section 3 describes the genetic optimization of our task scheduling in detail. Section 4 validates the effectiveness of the proposed scheduling based on simulation experiments. Finally, Section 5 concludes this paper.

2. Problem Definition and the Basic Model

2.1. Overview of GPU Utilization in Multi-Tenant Environments

GPUs are costly resources when compared to CPUs and other computing resources. In multi-tenant environments executing ML workloads, it is common to encounter significant underutilization of GPUs. Reports from various industries indicate that the average GPU utilization often remains below 50%, which poses substantial challenges in resource planning within large-scale AI server clusters [12,13]. Notably, in Alibaba’s cloud machine learning platform, PAI, approximately 76% of all GPUs show an average utilization of less than 10% [22], highlighting a systemic issue where many training tasks do not fully leverage the capabilities of modern GPUs [23,24].

2.2. Challenges of GPU-Sharing Technology

To address underutilization, GPU-sharing technology has been implemented, which allows the partial allocation of a single GPU to perform multiple tasks. This technology aims to reduce the number of GPUs required per workload, thereby enabling more ML tasks to be executed within the same resource framework. However, as the volume of ML workloads increases, the cluster can experience extended waiting times, task timeouts, and even scheduling failures. Particularly, when GPU allocation ratios exceed 80%, the system begins to suffer from severe GPU fragmentation, making it impossible to accommodate new tasks despite available GPUs, thus leading to resource wastage [25].

2.3. GPU Fragmentation and Allocation Challenges

Unlike traditional resource fragmentation, GPU fragmentation is exacerbated by the need for the contiguous allocation of GPU segments. This requirement is fundamentally different from other resource allocations, such as disk space, where non-contiguous allocation can resolve fragmentation [26]. Also, traditional task-scheduling approaches, akin to multi-dimensional bin-packing problems, fail to address the unique challenges posed by GPU-sharing technology [27,28]. These approaches typically model tasks and nodes as spheres and boxes, aiming to minimize the number of boxes. However, in environments where GPUs are shared, traditional bin-packing strategies become ineffective, because they do not account for the unique allocation boundaries and interchangeable nature of partial GPU allocations.
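To make the fragmentation issue concrete, the following minimal sketch contrasts a feasibility check on aggregated GPU capacity with a per-GPU check. The function names and the example node (two GPUs, each half allocated) are hypothetical and chosen only for illustration.

```python
def fits_aggregated(free_per_gpu, demand):
    """Pool the free capacity of all GPUs on a node into one number."""
    return sum(free_per_gpu) >= demand


def fits_per_gpu(free_per_gpu, demand):
    """A partial-GPU task (demand below 1.0) must fit inside a single GPU."""
    return any(free >= demand for free in free_per_gpu)


# Hypothetical node: two GPUs, each with half of its capacity still free.
free = [0.5, 0.5]
task_demand = 0.7  # fraction of one GPU requested by the task

print(fits_aggregated(free, task_demand))  # True: 1.0 GPU is free in total
print(fits_per_gpu(free, task_demand))     # False: no single GPU has 0.7 free
```

The aggregated view reports enough free capacity, yet the task cannot be placed, which is the kind of wasted capacity that fragmentation produces.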

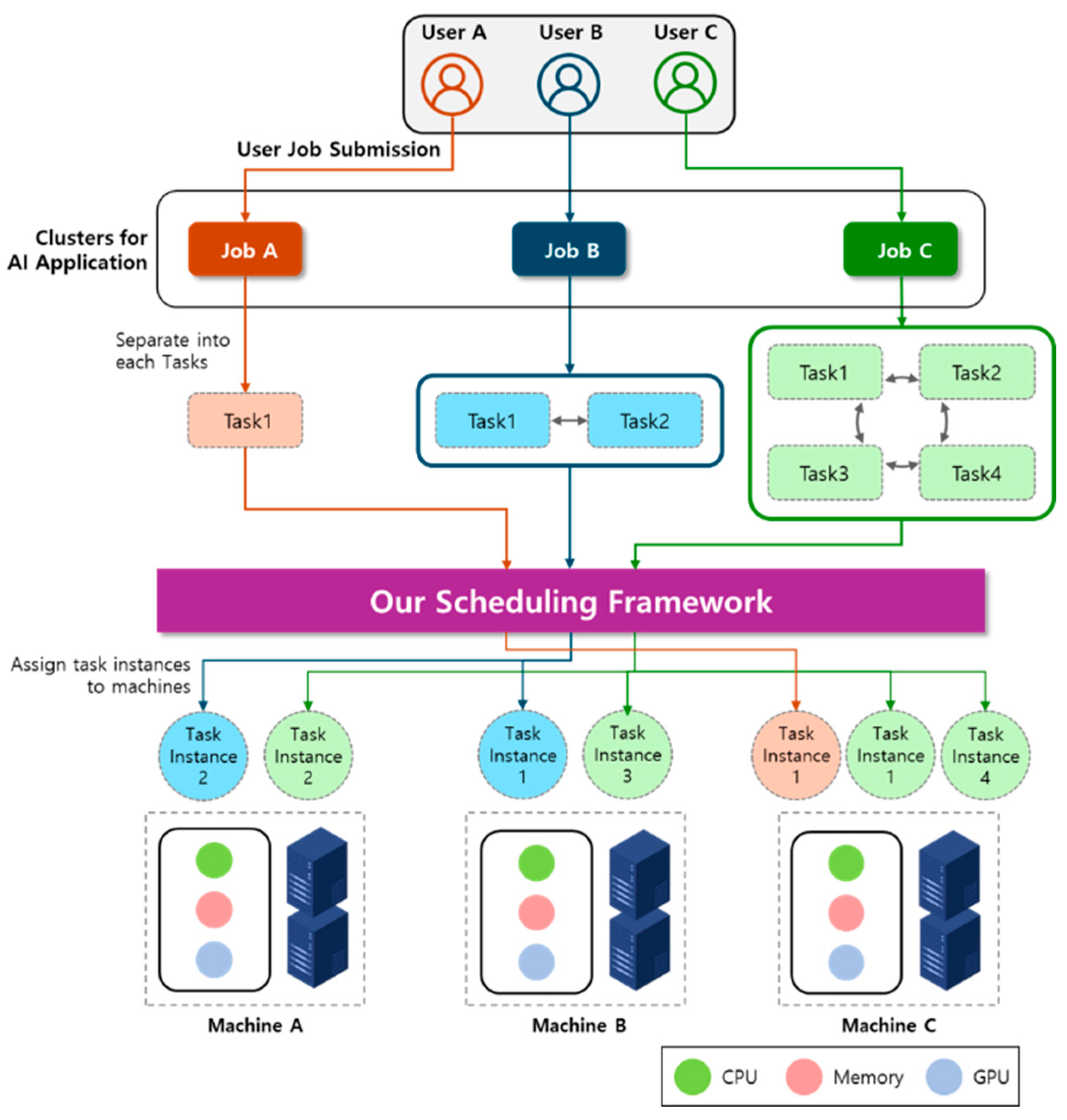

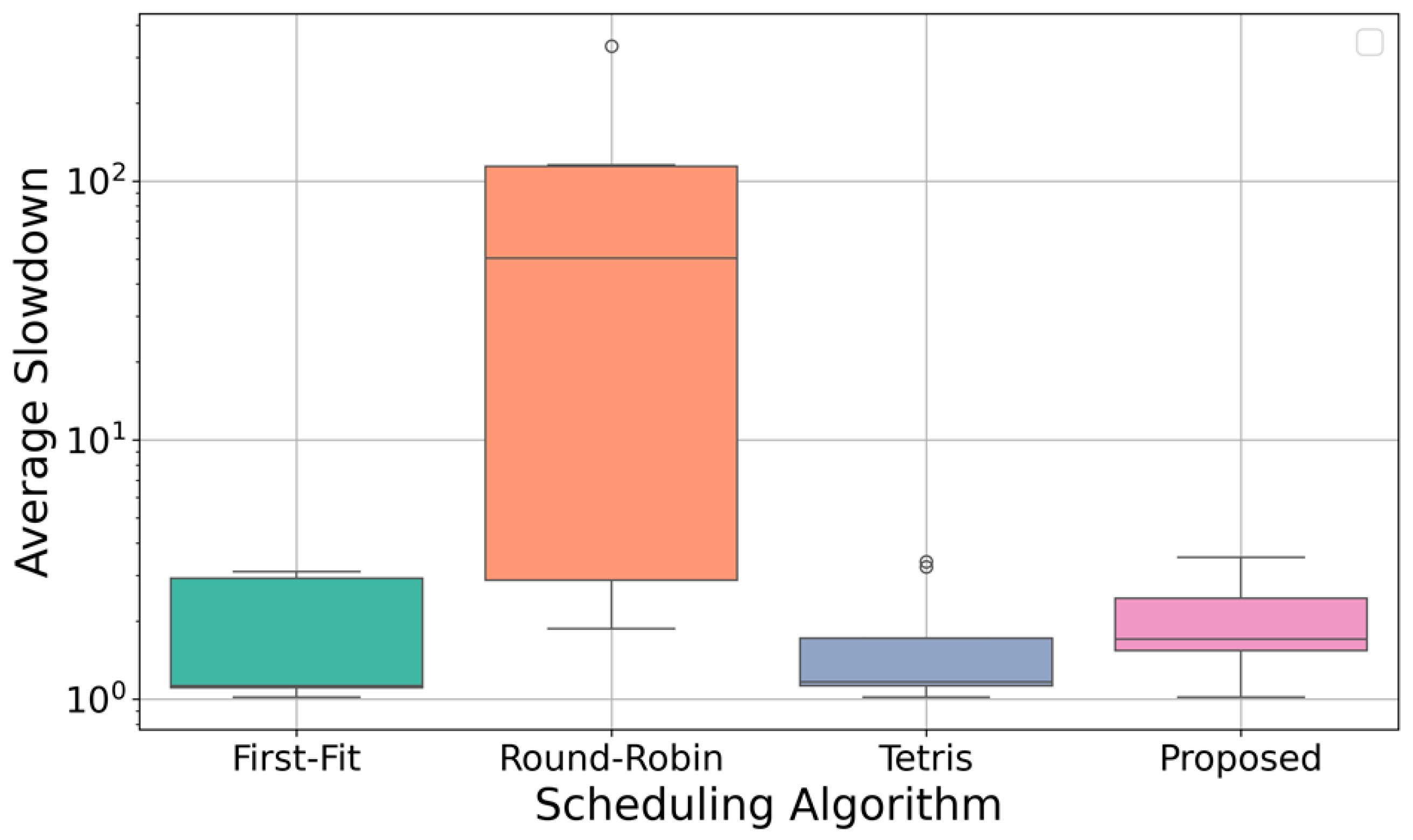

2.4. Scheduling System Architecture

As depicted in Figure 1, our proposed scheduling system architecture accommodates various types of ML jobs developed in frameworks like TensorFlow 2.1.0, PyTorch 1.11.0, and Graph-Learn 0.0.3. These jobs are parsed into tasks based on their roles, with each task comprising multiple task instances executed across several cluster machines. We monitor the CPU, memory, and GPU resource requirements from the user-submitted jobs and search for optimized pairs of machines and task instances that can satisfy these demands. Our scheduling method prioritizes pairs that significantly enhance GPU utilization within the cluster.

Figure 1. The basic architecture of the proposed scheduling system.

2.5. Optimization of GPU Cluster Utilization

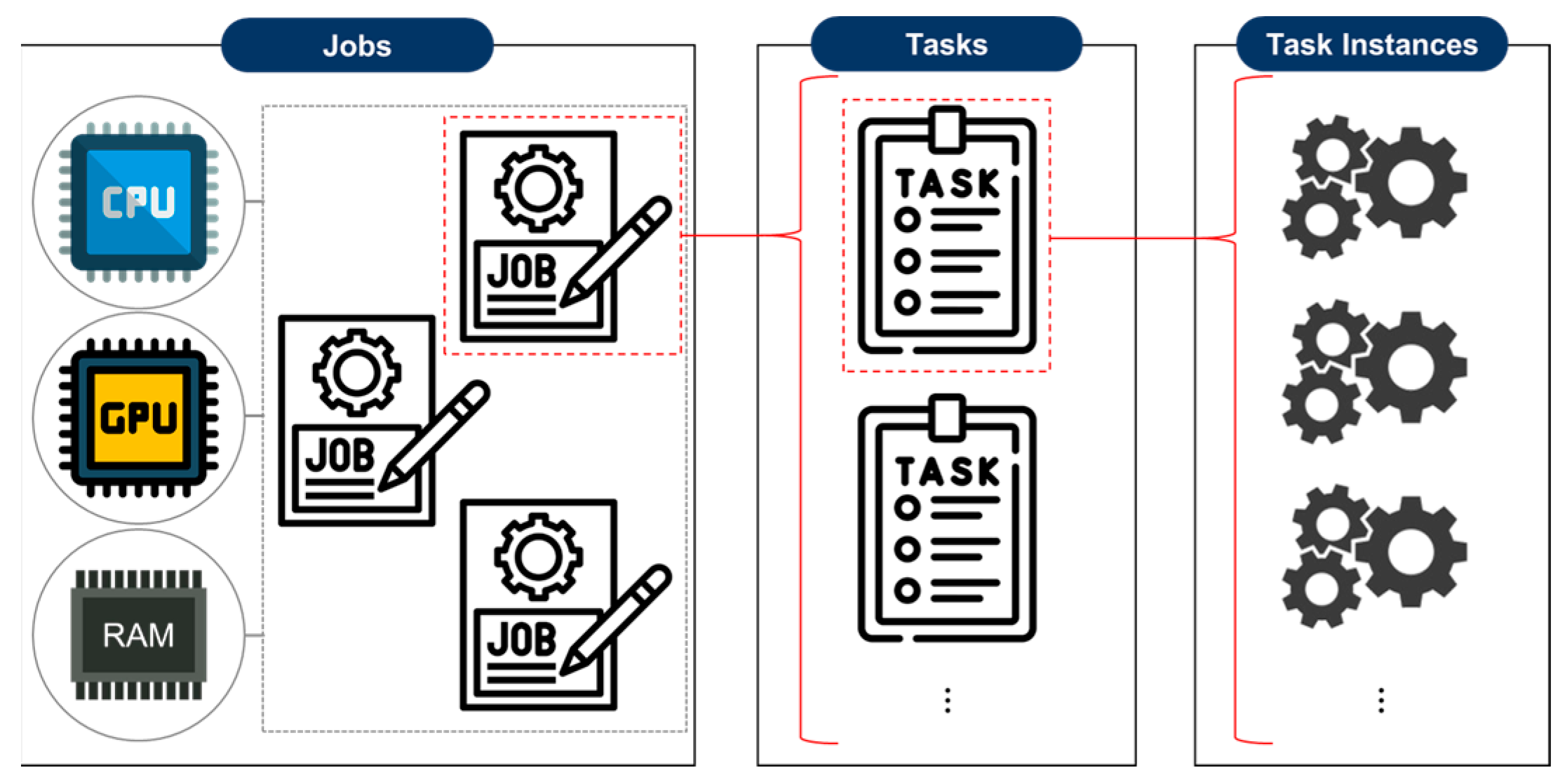

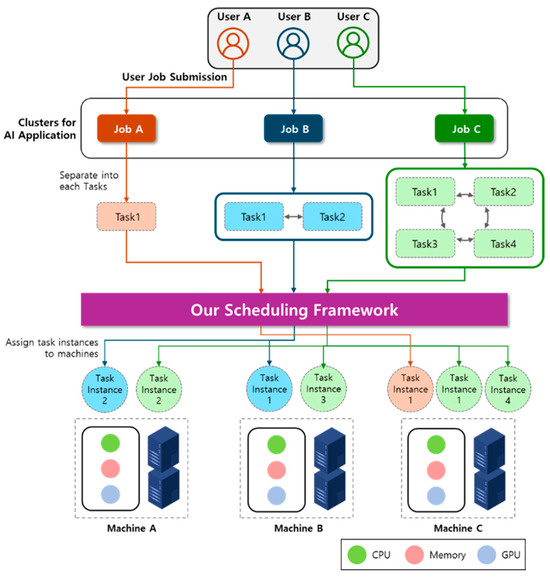

Our primary scheduling goal is to optimize GPU utilization by efficiently assigning each task instance ti to an appropriate machine mj, considering the resource requirements of each task instance and the available resources of each machine. The hierarchical structure of jobs, tasks, and task instances is depicted in Figure 2. Our scheduling algorithm first combines the list of task instances with the available machines in the cluster to create a candidate-scheduling list containing all feasible machine and task instance pairs (mj, ti). Only pairs where the machine can accommodate the task instance based on resource availability are included in the candidate list. For machine mj to host task instance ti, the available CPU, memory, and GPU resources of mj must meet the CPU, memory, and GPU demands of ti. The key objective is to find the pair of task instance and machine that yields the highest GPU utilization. By assigning task instance ti to machine mj, the scheduling of a single task instance is completed. This process is repeated for all task instances submitted to the cluster. The details are outlined in Algorithm 1, which provides a step-by-step description of the process.
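The greedy procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's Algorithm 1: the class and function names are hypothetical, and GPU utilization is assumed to mean the fraction of a machine's GPU capacity in use after the placement.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    name: str
    cpu_free: float
    mem_free: float
    gpu_free: float
    gpu_total: float


@dataclass
class TaskInstance:
    name: str
    cpu: float
    mem: float
    gpu: float


def can_host(m, t):
    """Feasibility: available CPU, memory, and GPU must cover the demands."""
    return m.cpu_free >= t.cpu and m.mem_free >= t.mem and m.gpu_free >= t.gpu


def gpu_utilization_after(m, t):
    # Assumed metric: share of the machine's GPU capacity used after placing t.
    return (m.gpu_total - m.gpu_free + t.gpu) / m.gpu_total


def schedule(machines, tasks):
    """Repeatedly pick the feasible (machine, task) pair with the highest score."""
    placement = {}
    pending = list(tasks)
    while pending:
        candidates = [(m, t) for m in machines for t in pending if can_host(m, t)]
        if not candidates:
            break  # nothing else fits; remaining task instances stay queued
        m, t = max(candidates, key=lambda pair: gpu_utilization_after(*pair))
        m.cpu_free -= t.cpu
        m.mem_free -= t.mem
        m.gpu_free -= t.gpu
        placement[t.name] = m.name
        pending.remove(t)
    return placement


machines = [Machine("m1", 8, 32, 1.0, 2.0), Machine("m2", 8, 32, 2.0, 2.0)]
tasks = [TaskInstance("t1", 2, 4, 0.5), TaskInstance("t2", 2, 4, 1.5)]
placement = schedule(machines, tasks)
print(placement)
```

In this toy input, t1 goes to the partially used machine m1 and t2 to the empty machine m2, since t2 does not fit on m1.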

Algorithm 1 Overall Scheduling Algorithm

Figure 2. Hierarchical structure of jobs, tasks, and task instances in our scheduling.

3. Scheduling Optimization by Genetic Algorithms

The genetic algorithm (GA) is a search heuristic inspired by the natural evolutionary processes of genetics [29]. In this paper, the search domain encompasses the available resource capacities of GPU within each machine in the cluster. The primary objective of the genetic algorithm discussed in this paper is to discover a scheduling solution that optimizes the utilization of GPUs across the entire cluster. To accomplish this goal, our genetic algorithm manages a specific number of solutions, referred to as a population, and evolves this population to find the best solution.

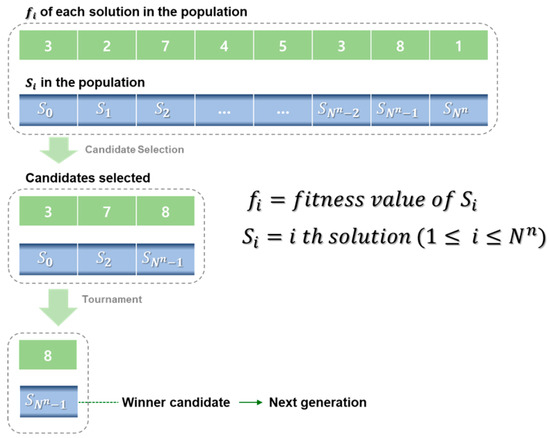

Our genetic algorithm begins by randomly generating an initial population. It then chooses a subset of solutions from this population to produce new offspring solutions. As the algorithm progresses through generations, it maintains the population size by replacing some solutions with newly created ones. Ultimately, the solution with the highest fitness value (defined in Section 3.1) within the final population is chosen as the solution to the scheduling problem. Algorithm 2 provides a concise overview of our GA-based scheduling approach.

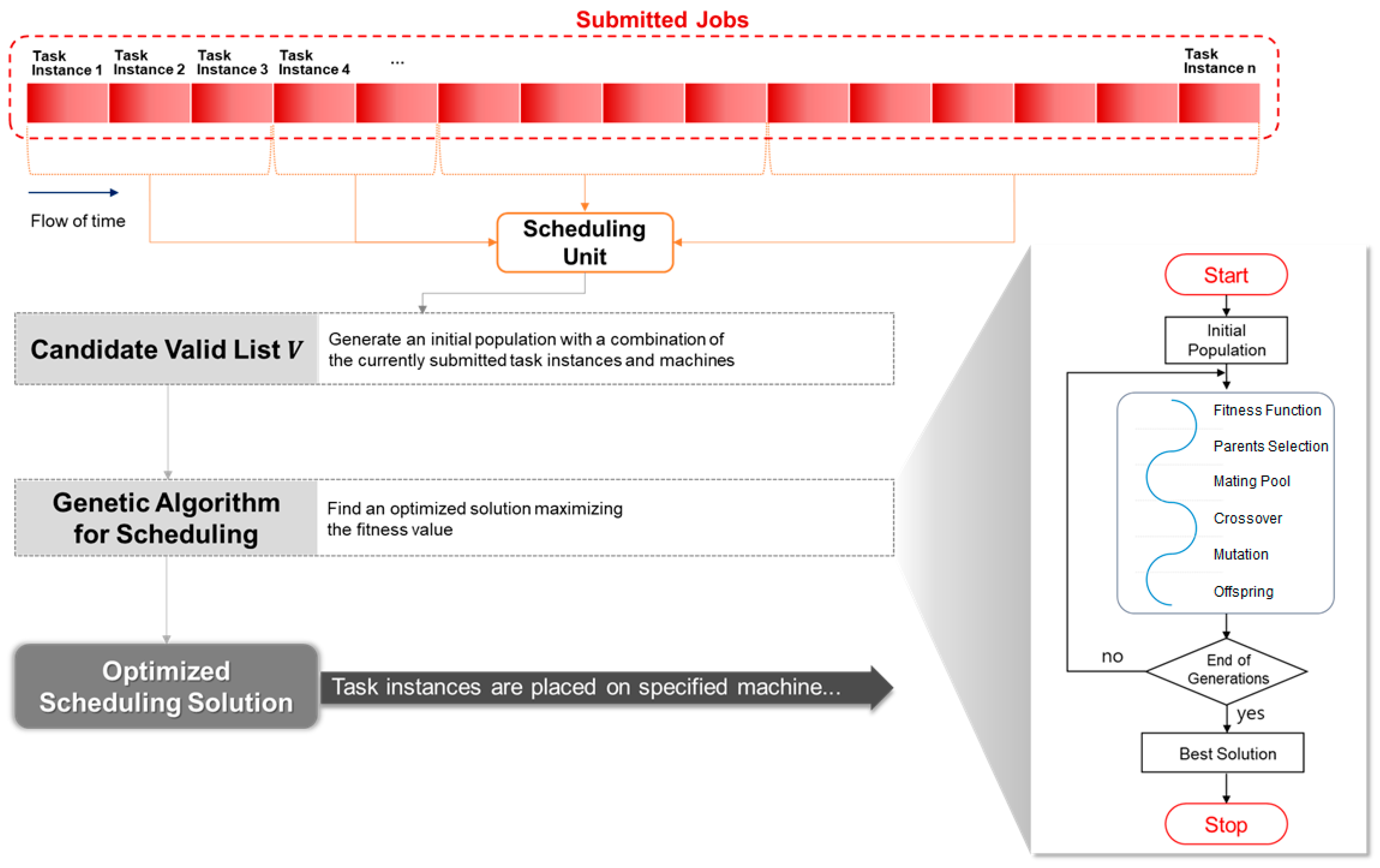

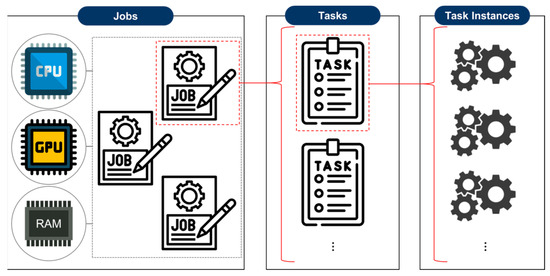

Our genetic algorithm takes a list V containing pairs of <machine, task instance> as its input. Initially, V is designated as the starting set of solutions, and its fitness value is computed. The algorithm then proceeds through iterative generations to find the best solution. Parents are chosen to produce offspring in the mating pool, followed by the execution of a crossover operation. Subsequently, an adaptive mutation is applied to generate the succeeding set of solutions, and the fitness values of the newly generated solutions are evaluated. This process is repeated across multiple generations until the population converges. Finally, the best solution from the generated population is selected to determine which machine within the cluster each task instance will be assigned to. Figure 3 illustrates the overview of our genetic algorithm.

Algorithm 2 Genetic Algorithm for Scheduling

Input: V, a list of <machine, task instance> pairs

Output: the best solution in the final population

Figure 3. Overview of proposed genetic algorithm.
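The generational loop described above can be sketched in plain Python as follows. This is a minimal illustration rather than the implementation used in the paper, which relies on PyGAD: the function name `run_ga`, the fixed generation count in place of a convergence check, the fixed per-gene mutation rate, and the toy fitness function are all assumptions made for this example.

```python
import random


def run_ga(fitness, num_genes, num_values, pop_size=100, generations=50, seed=0):
    """Evolve integer arrays of length num_genes (requires num_genes >= 2)."""
    rng = random.Random(seed)
    population = [[rng.randrange(num_values) for _ in range(num_genes)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        scores = [fitness(s) for s in population]

        def select_parent():
            # Tournament: sample 20% of the population, keep the fittest.
            k = max(1, pop_size // 5)
            contenders = rng.sample(range(pop_size), k)
            return population[max(contenders, key=lambda i: scores[i])]

        offspring = []
        for _ in range(int(pop_size * 0.4)):
            a, b = select_parent(), select_parent()
            cut = rng.randrange(1, num_genes)  # single-point crossover
            child = a[:cut] + b[cut:]
            # Simplified mutation with a fixed per-gene rate (assumption).
            child = [rng.randrange(num_values) if rng.random() < 0.1 else g
                     for g in child]
            offspring.append(child)

        # Replace the least-fit solutions with the new offspring.
        worst_first = sorted(range(pop_size), key=lambda i: scores[i])
        for slot, child in zip(worst_first, offspring):
            population[slot] = child
    return max(population, key=fitness)


def toy_fitness(solution):
    # Toy objective (assumption): reward genes equal to 0.
    return solution.count(0)


best = run_ga(toy_fitness, num_genes=8, num_values=4, pop_size=20, generations=30)
print(best, toy_fitness(best))
```

Because only the least-fit 40% of the population is overwritten in each generation, the best fitness in the population never decreases in this sketch.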

3.1. Fitness Function

The genetic algorithm employs a fitness function to assess the effectiveness of solutions. The fitness function outlined in Algorithm 3 evaluates the quality of a solution, denoted as s, consisting of a machine id m and a task instance id t. It verifies whether the machine associated with m can accommodate the task instance linked to t. For task instance t to be accommodated by machine m, the available resources of machine m (denoted as P, Q, and R) must meet the required resources of task instance t (denoted as p, q, and r). If this condition is met, the function returns the increase in GPU utilization obtained when task instance t is scheduled on machine m as the fitness value. Otherwise, it returns −1.

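Algorithm 3 Fitness Function

Input: s, a solution consisting of a machine id m and a task instance id t

Output: the fitness value of s (the increase in GPU utilization, or −1 if m cannot accommodate t)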
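The feasibility check and return values described in this subsection can be sketched as below. Two points are assumptions for illustration: P, Q, R and p, q, r are taken to be CPU, memory, and GPU quantities in that order, and the increase in GPU utilization is taken to be the share of the machine's GPU capacity newly occupied by the task instance. The data layout (`available`, `required`) is hypothetical.

```python
def fitness(s, available, required):
    """Return the GPU-utilization gain of solution s = (m, t), or -1 if infeasible.

    available[m] = (P, Q, R, gpu_capacity): free CPU, memory, GPU and total GPU of m.
    required[t]  = (p, q, r): CPU, memory, and GPU demand of task instance t.
    """
    m, t = s
    P, Q, R, gpu_capacity = available[m]
    p, q, r = required[t]
    if P >= p and Q >= q and R >= r:
        # Assumed definition of the gain: GPU share newly occupied by t.
        return r / gpu_capacity
    return -1


# Hypothetical machines (id 0 has only half a GPU free) and one task instance.
available = {0: (16, 64, 0.5, 2.0), 1: (16, 64, 2.0, 2.0)}
required = {0: (4, 8, 1.0)}

print(fitness((0, 0), available, required))  # -1: machine 0 lacks free GPU
print(fitness((1, 0), available, required))  # 0.5: one of two GPUs becomes busy
```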

3.2. Problem Encoding and Population Size

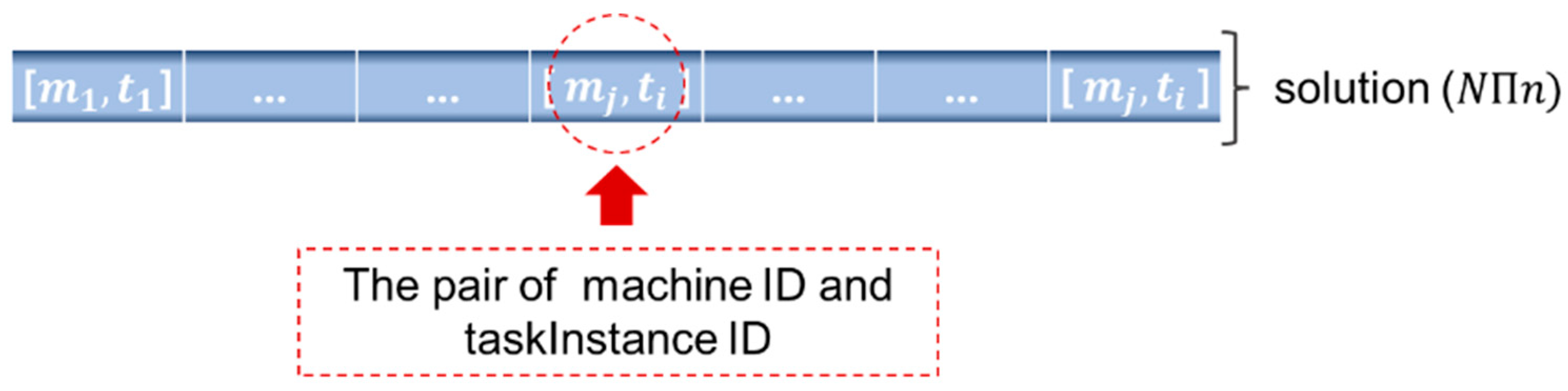

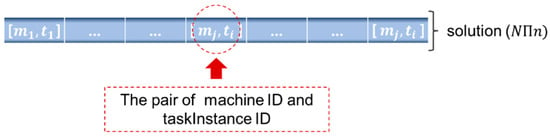

In our scheduling, a single solution is characterized by the pair (m, t), where m denotes the identifier of one of the machines in the cluster and t represents the identifier of one of the task instances awaiting scheduling. This solution entails the allocation of task instance t to machine m. By combining the task instances awaiting scheduling and the machines within the cluster, we generate a solution. Consequently, the entire population comprises all possible permutations of solutions that can be derived from these task instances and machines.

Our solution encodes the machine id to which each task instance should be assigned, using an array of integers as shown in Figure 4. Each element of the solution represents the machine id where the corresponding indexed task instance will be placed. Therefore, the length of the solution is equal to the number of task instances to be scheduled.

Figure 4. Encoding of our genetic algorithm.
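As a small illustration of this encoding, the sketch below builds integer-array solutions in which position i holds the machine id assigned to task instance i, and generates a random initial population. The function names and the seed parameter are hypothetical.

```python
import random


def random_solution(num_tasks, num_machines, rng):
    """One solution: element i is the machine id chosen for task instance i."""
    return [rng.randrange(num_machines) for _ in range(num_tasks)]


def initial_population(num_tasks, num_machines, size=100, seed=0):
    """Randomly generate `size` solutions as the starting population."""
    rng = random.Random(seed)
    return [random_solution(num_tasks, num_machines, rng) for _ in range(size)]


population = initial_population(num_tasks=5, num_machines=3)
first = population[0]
# Decode the first solution into (task instance index, machine id) pairs.
print(list(enumerate(first)))
```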

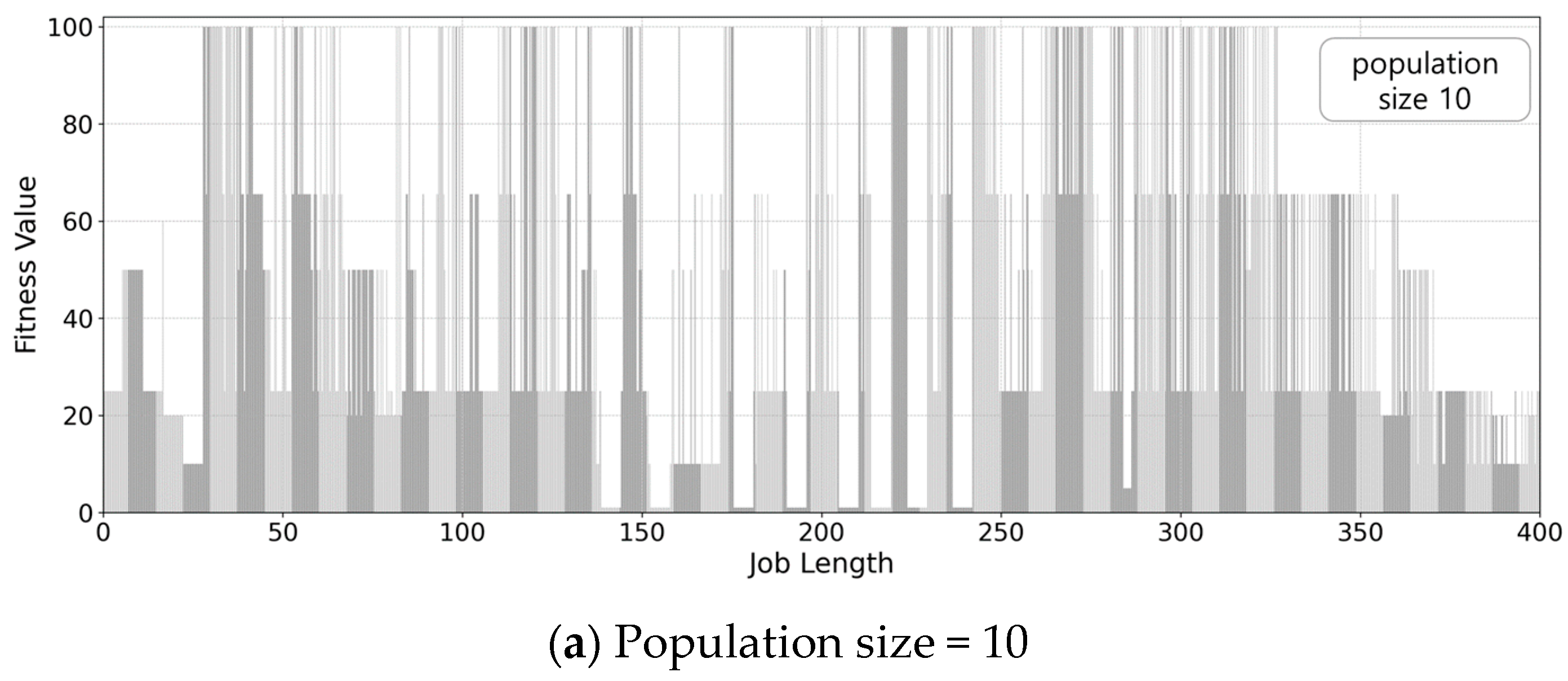

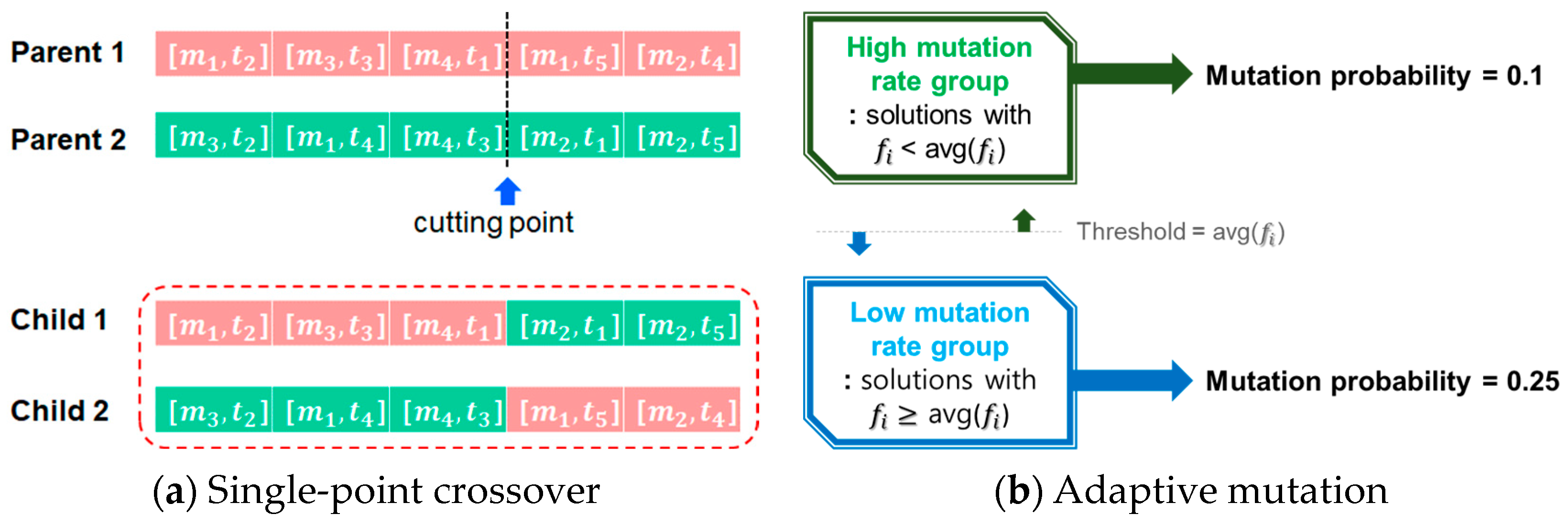

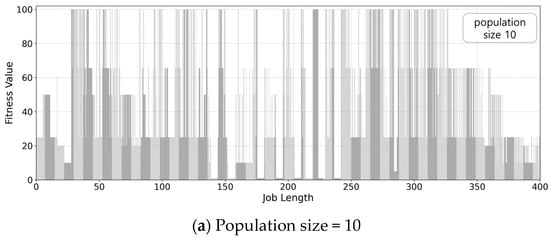

To determine the population size, we investigate the relationship between the population size and fitness function ranges. Figure 5 shows the fitness value of the final selected solutions when the population size is set to 10, 100, and 1000, respectively. As shown in the figure, a population size of 10 does not produce competitive results in terms of fitness value, but the fitness values are very similar when the population size is 100 and 1000. We choose a population size of 100, as it incurs a low overhead but exhibits sufficiently good results. Based on this, we randomly generate 100 solutions as the initial population.

Figure 5. Fitness value as population size is varied.

3.3. Selection

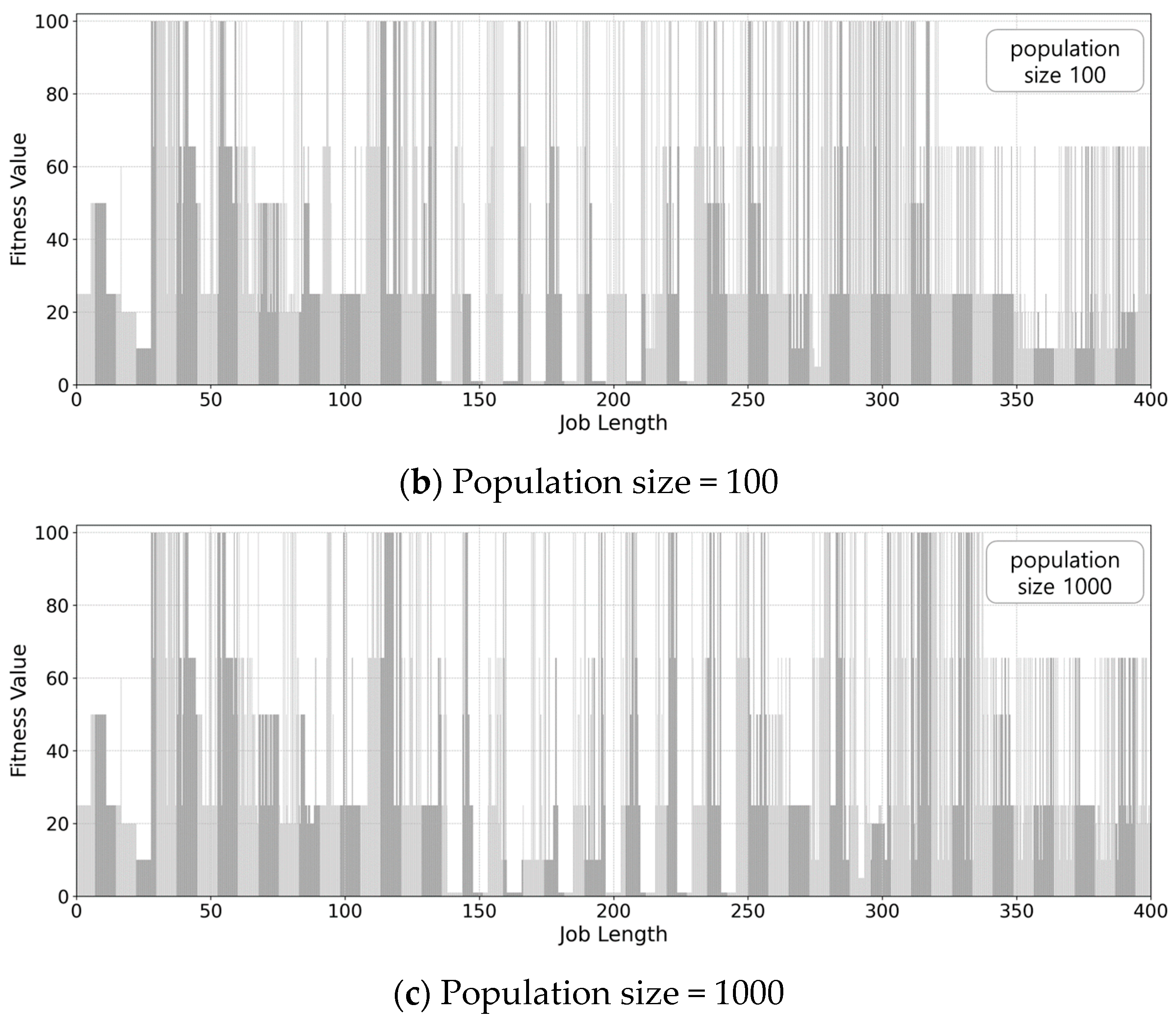

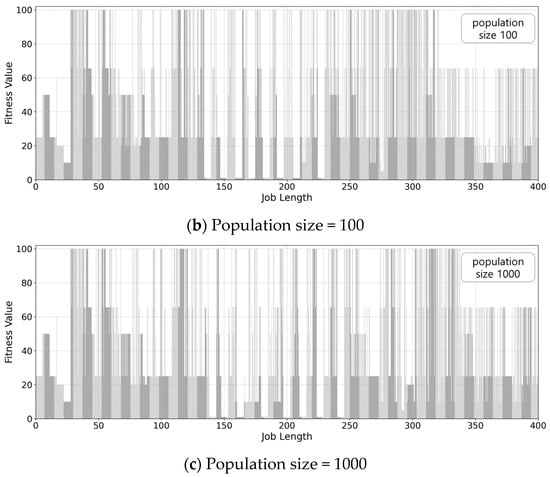

The process of selecting parents for generating offspring in the next generation, known as the selection operation, is typically guided by the fitness function. This function prioritizes solutions with superior fitness levels, increasing their likelihood of being chosen as parents. However, an overemphasis on high-quality solutions during selection may result in premature convergence to a local optimum. To mitigate this issue, a tournament selection approach is implemented, wherein 20% of the population’s solutions are randomly sampled, and the top solution with the highest fitness value among these sampled solutions is designated as the parent solution. This method facilitates the selection of parents for producing the subsequent generation’s offspring solutions within the population, as illustrated in Figure 6.

Figure 6. Tournament selection used in our genetic algorithm.
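The tournament selection described above can be sketched as follows: a random 20% sample of the population is drawn, and the sampled solution with the highest fitness becomes a parent. The function signature is hypothetical, and the fitness values are assumed to be precomputed.

```python
import random


def tournament_select(population, fitness_values, rng, sample_ratio=0.2):
    """Sample a fraction of the population and return its fittest member."""
    k = max(1, int(len(population) * sample_ratio))
    contenders = rng.sample(range(len(population)), k)
    winner = max(contenders, key=lambda i: fitness_values[i])
    return population[winner]


rng = random.Random(0)
population = [[i, i] for i in range(10)]        # ten placeholder solutions
fitness_values = [float(i) for i in range(10)]  # solution i has fitness i
parent = tournament_select(population, fitness_values, rng)
print(parent)
```

Because only a sample competes, weaker solutions still have a chance of being selected, which is what limits premature convergence.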

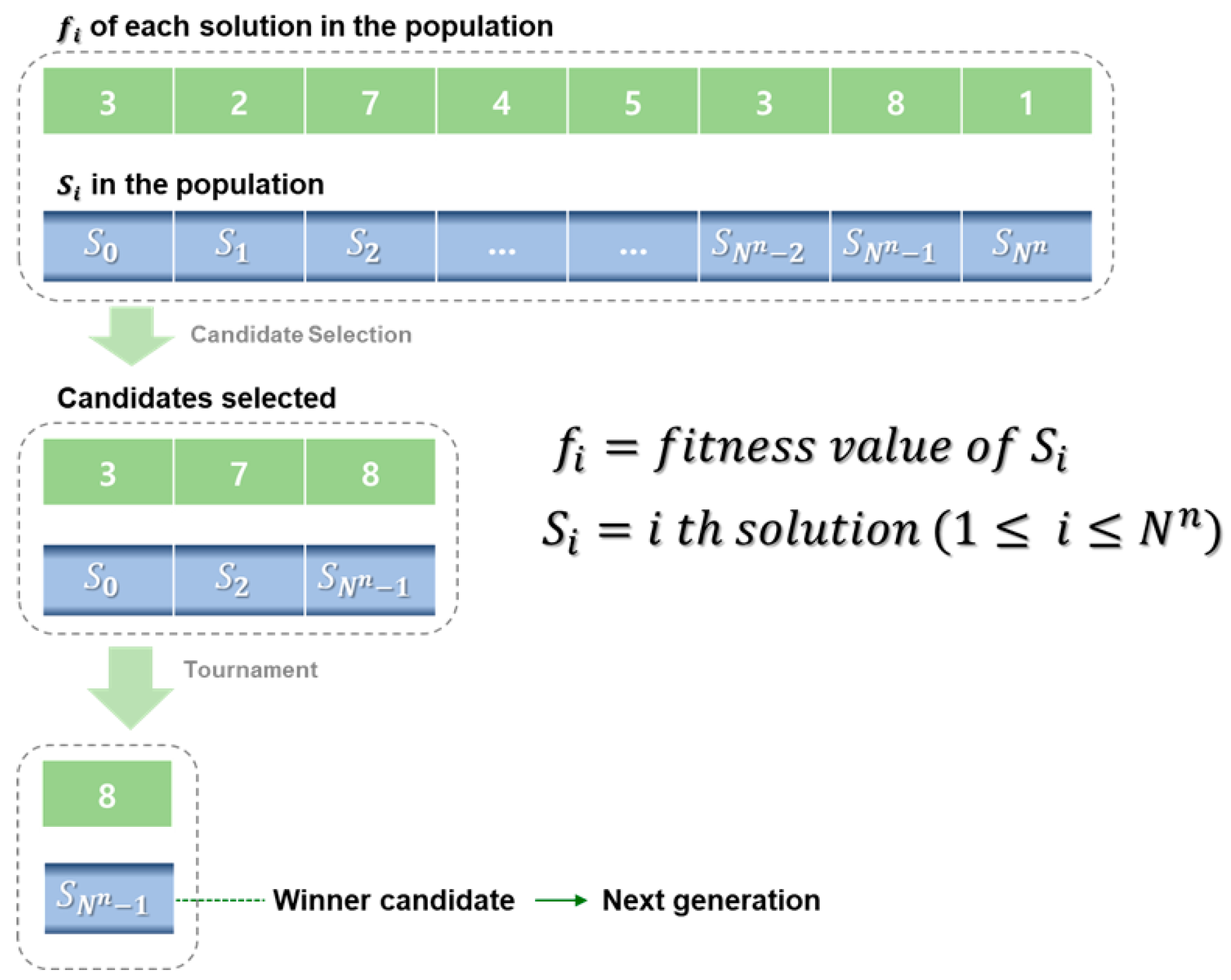

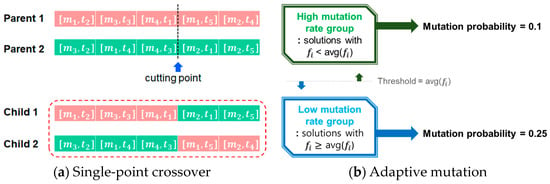

3.4. Crossover and Mutation

After selection, crossover procedures are applied to the chosen parent solutions to produce offspring solutions for subsequent generations. This process involves combining parent solutions to yield new solutions, known as children, thereby facilitating the enhancement of the population over time. In our genetic algorithm, a widely recognized single-point crossover technique is employed to generate fresh offspring solutions. In a single-point crossover, each parent possesses a single cutting point, and the offspring are derived by replicating distinct segments of each parent’s solution based on this point. In the context of our scheduling, offspring solutions are created by interchanging combinations of machines and task instances through the single-point crossover operation, as illustrated in Figure 7a.

Figure 7. Crossover and mutation operations used in our genetic algorithms.

In genetic algorithms, a crossover operation can result in invalid solutions. In our case, as solutions are composed of items [mj, ti], where mj is a machine and ti is a task instance, some task instances might be duplicated, while others might disappear after crossover. This issue can be resolved by imposing a constraint on the encoding process to ensure that task numbers in a solution are in increasing order. Specifically, instead of task instances being placed in any order within the solution, we assume that they appear sequentially from t1 to tn. This assumption ensures that after a crossover, every task instance appears exactly once in the solution.
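A minimal sketch of single-point crossover under this ordering constraint is given below. Because position i always refers to task instance ti, swapping tails between two parents can never duplicate or drop a task instance. The function name is hypothetical.

```python
import random


def single_point_crossover(parent_a, parent_b, rng):
    """Swap the tails of two equal-length solutions at one random cut point."""
    if len(parent_a) != len(parent_b) or len(parent_a) < 2:
        raise ValueError("parents must have the same length of at least 2")
    cut = rng.randrange(1, len(parent_a))
    child_a = parent_a[:cut] + parent_b[cut:]
    child_b = parent_b[:cut] + parent_a[cut:]
    return child_a, child_b


rng = random.Random(0)
parent_a = [0, 0, 0, 0, 0]  # every task instance on machine 0
parent_b = [1, 1, 1, 1, 1]  # every task instance on machine 1
child_a, child_b = single_point_crossover(parent_a, parent_b, rng)
print(child_a, child_b)
```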

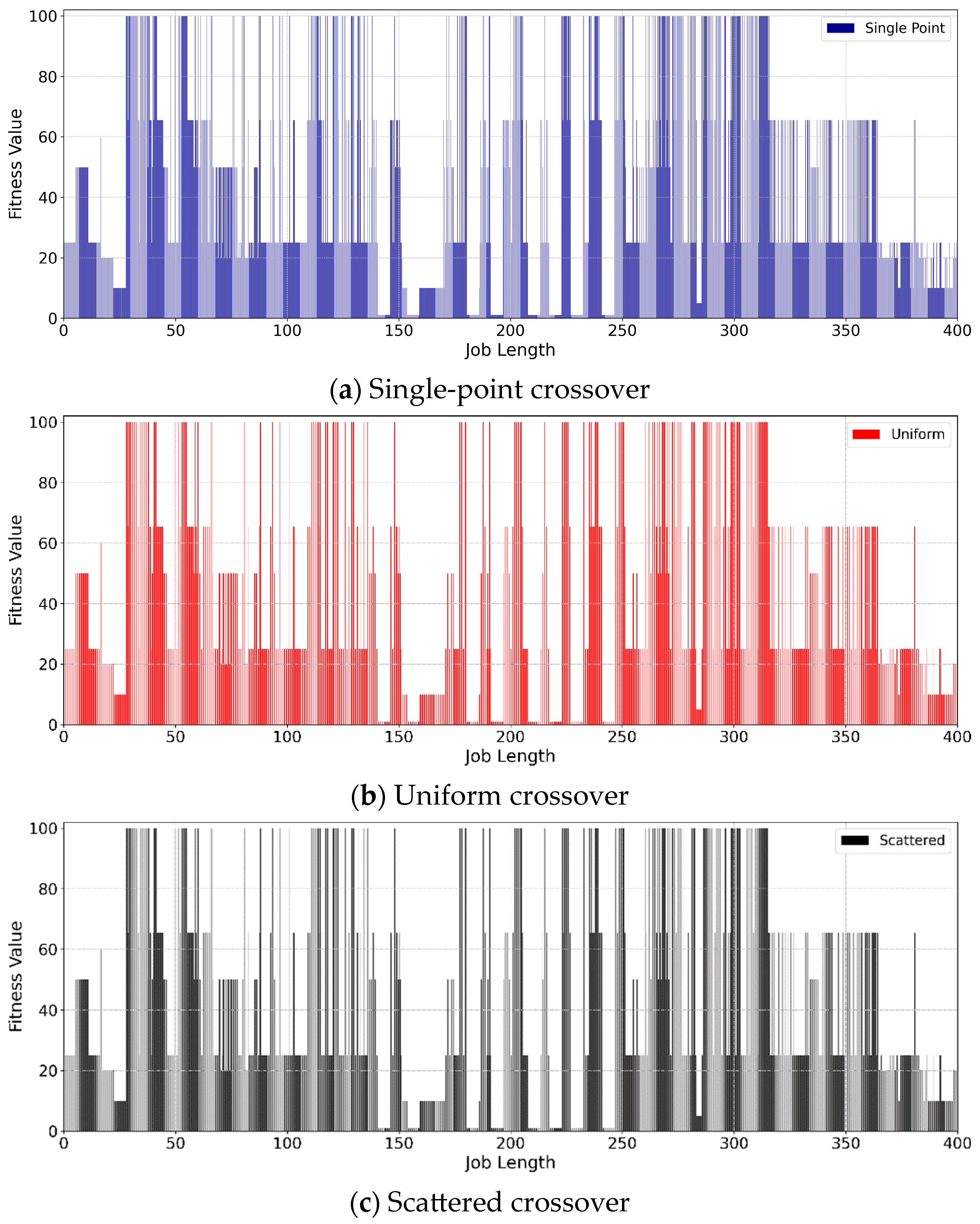

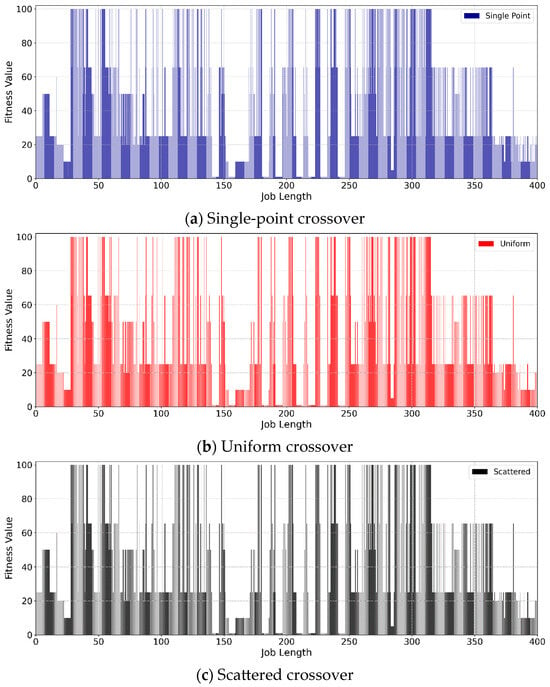

To assess the effectiveness of our single-point crossover operation, we investigate its fitness value in comparison with two other popular crossover operations: uniform crossover and scattered crossover. As shown in Figure 8, the three crossover methods exhibit very similar results. We chose the single-point crossover as it is the simplest among the three considered, yet it still exhibits competitive results.

Figure 8. Fitness value as crossover operation is varied.

The mutation operation is employed to alter particular components of solutions produced through crossover operations, in order to explore the problem space more extensively. Mutation operations are recognized for their role in preserving diversity among the population and preventing premature convergence towards a local optimum. Within our genetic algorithm framework, an adaptive mutation operation is implemented. This strategy first computes the average fitness value across the population and uses this value as a threshold. Solutions surpassing this threshold are subjected to a reduced mutation probability of 0.1, while those with lower fitness values are assigned a higher mutation probability of 0.25, so that weaker solutions are mutated more often. This methodology is illustrated in Figure 7b.
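The adaptive mutation can be sketched as follows. The two probabilities are passed as parameters; the defaults used here (0.1 for above-average solutions and 0.25 for the rest) assume that the lower rate applies to fitter solutions. Applying the probability per gene, and replacing a mutated gene with a random machine id, are further assumptions of this sketch.

```python
import random


def adaptive_mutation(population, fitness_values, num_machines, rng,
                      p_above=0.1, p_below=0.25):
    """Mutate genes with a rate that depends on each solution's fitness."""
    threshold = sum(fitness_values) / len(fitness_values)  # average fitness
    mutated = []
    for solution, fit in zip(population, fitness_values):
        p = p_above if fit > threshold else p_below
        mutated.append([rng.randrange(num_machines) if rng.random() < p else gene
                        for gene in solution])
    return mutated


rng = random.Random(0)
population = [[5, 5, 5], [7, 7, 7]]
fitness_values = [1.0, 3.0]  # average is 2.0, so only the second is above it
result = adaptive_mutation(population, fitness_values, num_machines=8, rng=rng)
print(result)
```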

3.5. Replacement

After the completion of crossover and mutation procedures, the resultant offspring solutions are utilized to supplant the existing solutions within the population, thereby constituting a fresh population. In the context of our study, 40% of the solutions present in the population are substituted with newly derived offspring solutions. Generally, solutions possessing inferior fitness values are replaced by the newly generated solutions during each iteration. This mechanism functions by replacing incumbent generations, as opposed to merely appending newly mutated entities to the succeeding generation. Consequently, upon the creation of new entities after the selection, crossover, and mutation stages, an existing entity is systematically replaced by a novel one [30].
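The replacement step can be sketched as below: the solutions with the lowest fitness values, up to 40% of the population, are overwritten by offspring, so the population size stays constant. The function name and the placeholder string solutions are hypothetical.

```python
def replace_worst(population, fitness_values, offspring, ratio=0.4):
    """Overwrite the least-fit solutions with offspring, keeping the size fixed."""
    n_replace = min(len(offspring), int(len(population) * ratio))
    worst_first = sorted(range(len(population)), key=lambda i: fitness_values[i])
    new_population = list(population)
    for slot, child in zip(worst_first[:n_replace], offspring):
        new_population[slot] = child
    return new_population


population = ["a", "b", "c", "d", "e"]
fitness_values = [5, 1, 4, 2, 3]
new_population = replace_worst(population, fitness_values, offspring=["x", "y"])
print(new_population)  # ['a', 'x', 'c', 'y', 'e']
```

Here the two weakest solutions ("b" and "d") are replaced, while the fittest solution "a" survives into the next generation.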

4. Performance Evaluation

In this section, we perform simulation experiments to validate the effectiveness of the proposed scheduling in terms of GPU utilization within clusters. For comparison, we simulate other scheduling algorithms utilized for machine learning tasks, along with the proposed scheduling. The results suggest that the proposed algorithm can function as a proficient scheduling strategy in cluster settings where GPUs are shared, leading to enhanced GPU utilization without performance penalties.

We implement a scheduling simulation framework to evaluate the performance of machine learning scheduling. The framework is based on SimPy, a process-based discrete-event simulation framework, and utilizes PyGAD 3.3.1, an open-source Python library for genetic algorithms [31]. We construct the input data for scheduling from ML workload traces collected over two months from the Alibaba PAI cluster. As shown in Table 1, this cluster includes over 1800 nodes and more than 6000 GPUs, and the machines are heterogeneous in terms of hardware and resource configuration. The workloads submitted to the cluster are a mix of training and inference tasks and include a variety of machine learning algorithms such as CNN, RNN, transformer-based language models, and reinforcement learning. A total of 1200 tasks, comprising 7500 task instances, were submitted, and the trace data include submission time, cluster usage time, and requests for CPU, memory, and GPU quantities per instance. We use a sample of the entire workload trace to generate input data for scheduling.

Table 1. The experimental configuration of the proposed scheduling.

As jobs are submitted at different times in real scenarios, our scheduling should be updated periodically, implying that the genetic algorithms are run using jobs submitted during the current period, as well as those remaining in the queue from the previous period that have not yet been dispatched. It is important to note that this periodic scheduling does not affect jobs that have already been dispatched and are currently being executed. In our performance evaluations, which are conducted through simulations that incorporate complete job histories, we recognize the submission times of all jobs from the beginning of our scheduling process. Nevertheless, we perform our scheduling periodically, assuming knowledge of only those job submission times that occur up to the current simulation point.
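The periodic scheme can be sketched in plain Python as follows. At each scheduling point, only jobs submitted up to the current simulation time are visible, and jobs left undispatched carry over to the next period. The function `run_periodic`, the `dispatch` callback standing in for one run of the genetic algorithm, and the example job list are hypothetical.

```python
def run_periodic(jobs, period, horizon, dispatch):
    """jobs: list of (name, submit_time). Returns a log of (time, dispatched names)."""
    queue = []                                    # submitted, not yet dispatched
    remaining = sorted(jobs, key=lambda j: j[1])  # future submissions
    log = []
    now = period
    while now <= horizon:
        # Reveal only the jobs submitted up to the current simulation time.
        while remaining and remaining[0][1] <= now:
            queue.append(remaining.pop(0))
        dispatched = dispatch(queue)              # stand-in for one scheduler run
        queue = [j for j in queue if j not in dispatched]
        log.append((now, [name for name, _ in dispatched]))
        now += period
    return log


jobs = [("j1", 1), ("j2", 2), ("j3", 3), ("j4", 12)]
# Hypothetical scheduler that can dispatch at most two jobs per period.
log = run_periodic(jobs, period=10, horizon=30, dispatch=lambda q: q[:2])
print(log)
```

In this toy run, j3 is not dispatched in the first period and is reconsidered together with the newly submitted j4 in the second.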

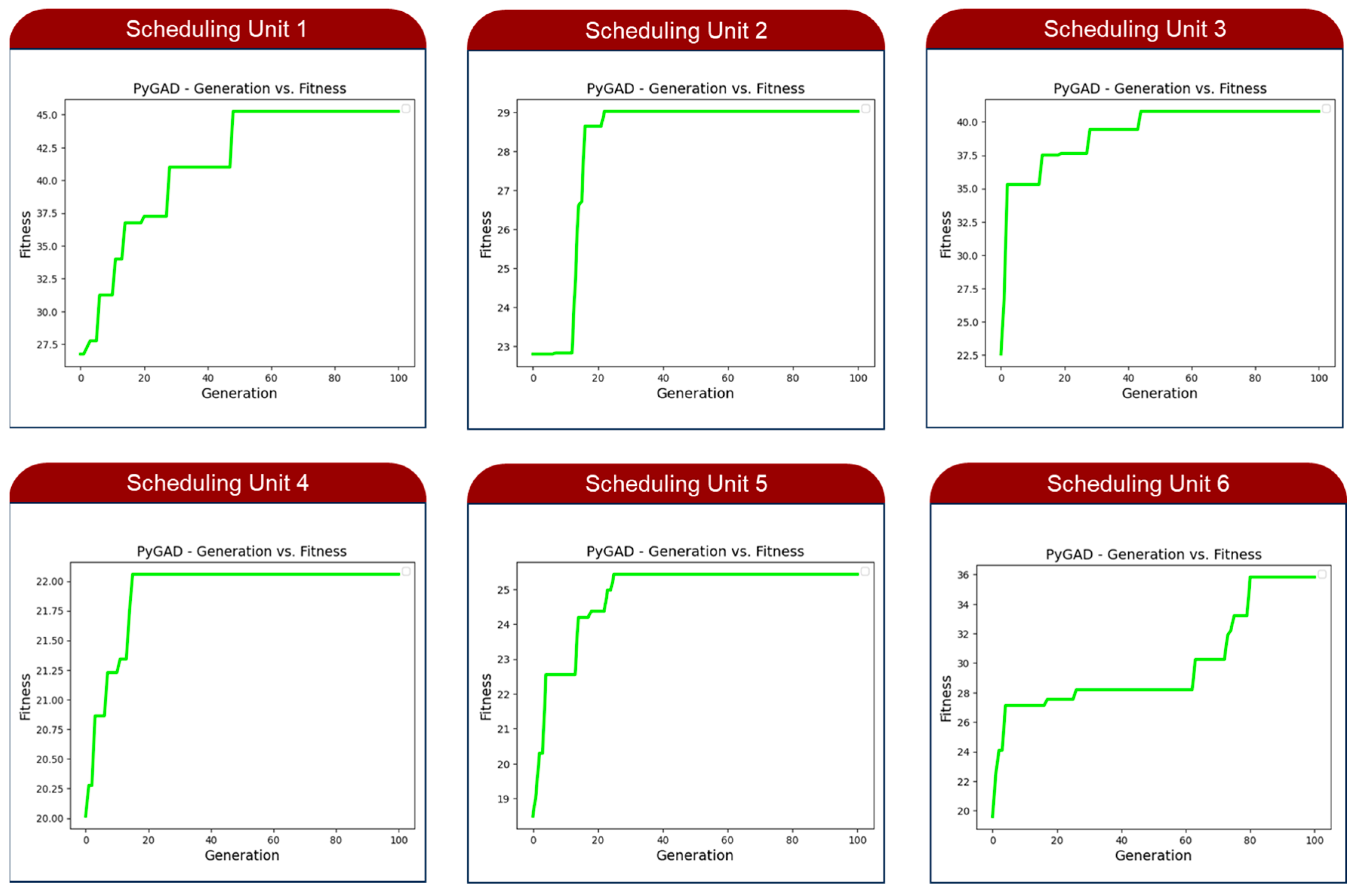

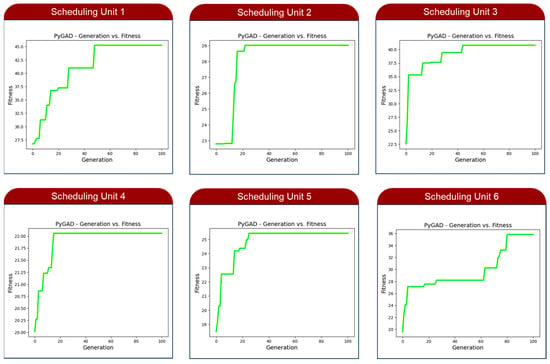

4.1. Convergence Test

To verify the convergence of our genetic algorithm, we track the fitness values of the solutions in the population over time. Figure 9 illustrates the trend of fitness values observed throughout the genetic algorithm’s evolution, where the fitness values steadily increase towards the maximum possible value across successive generations within a specific scheduling unit. The figure shows the evolutionary trajectory of the population as the genetic algorithm searches for an optimized solution in each scheduling unit, and it also makes the gradual convergence of fitness values across generations easy to monitor.

Figure 9. Fitness values of proposed genetic algorithm as generation evolves.

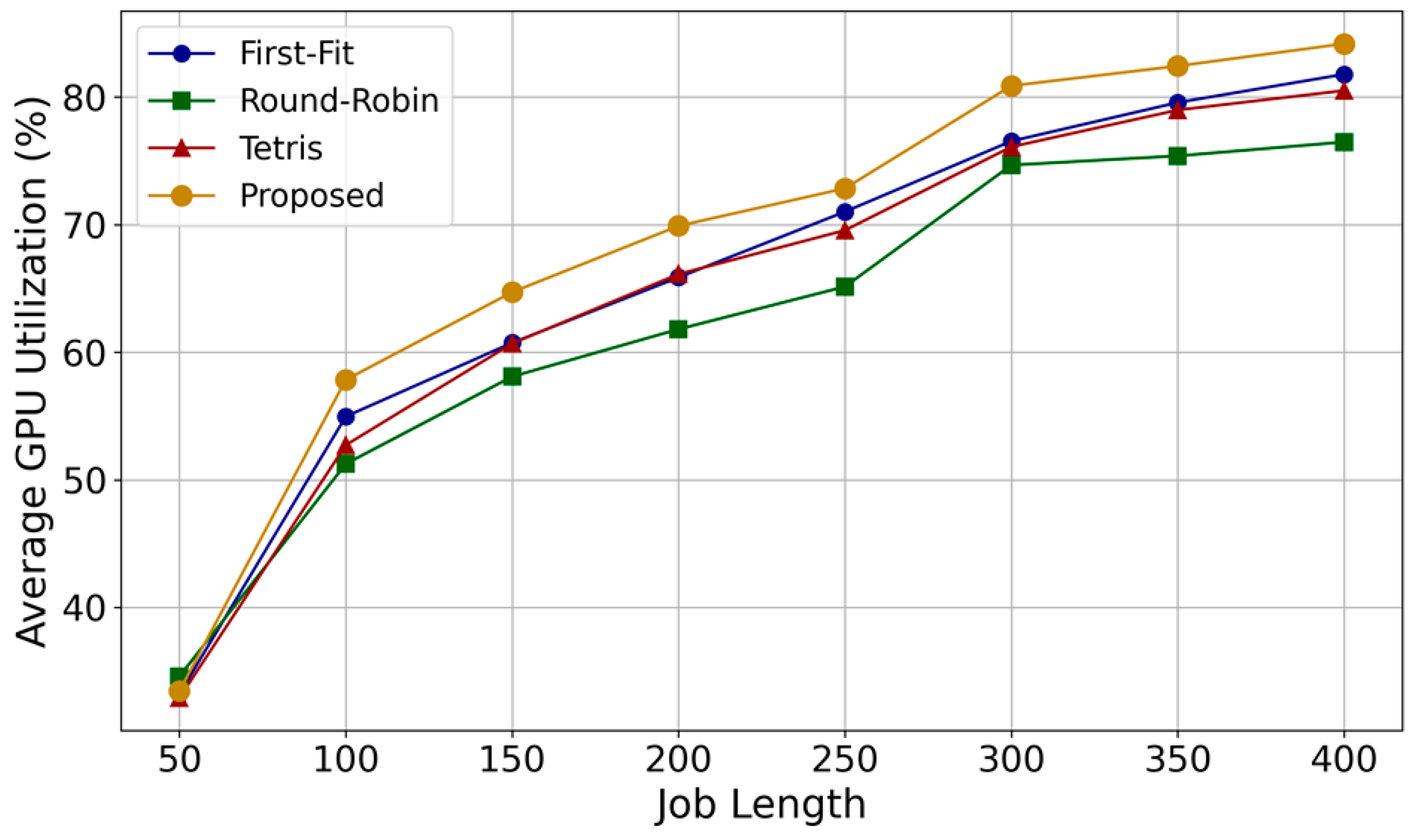

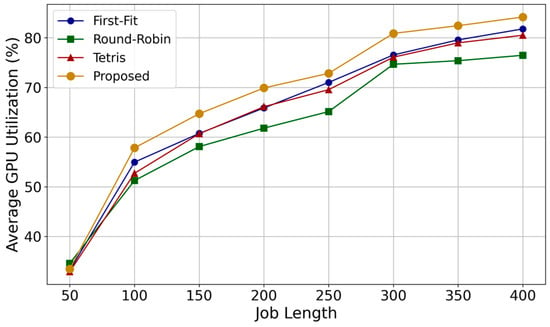

4.2. GPU Utilization

In this subsection, we present experimental results for a comparison between the proposed scheduling and other existing scheduling policies in terms of cluster GPU utilization. The scheduling policies compared to our algorithm include First-Fit, Round-Robin, and the Tetris algorithm, which schedules tasks based on a bin-packing approach. Figure 10 displays a graph showing the GPU utilization of the cluster over the course of a day, with jobs scheduled based on time of day. The x-axis represents the number of jobs processed up to that point, and the y-axis shows the GPU utilization of the cluster after scheduling the corresponding number of jobs.

Figure 10. Comparison of GPU utilization.

From the graph, it is evident that the Round-Robin scheduling algorithm, which is widely used in task scheduling, is not suitable for maximizing GPU utilization when considering the diversity of ML workloads and the heterogeneity of GPU resources in the cluster. Note that Round-Robin scheduling assigns tasks in the queue to each GPU machine in turn without considering the load of the tasks and the capacity of GPUs, resulting in some GPUs being overloaded, while others are underutilized.

Tetris and First-Fit exhibit similar GPU utilization patterns, with each algorithm alternately gaining and losing advantage over time. Note that the Tetris algorithm schedules tasks by projecting each task’s resource demands into Euclidean space and selecting the task–machine pair with the highest dot product, which proves suboptimal for maximizing GPU utilization. As shown in the figure, however, the proposed algorithm consistently achieves the best results in terms of GPU utilization across the cluster. This is due to the optimized selection of task–machine pairs in terms of GPU utilization, which minimizes the fragmentation of GPU resources across the cluster. The improvement of the proposed algorithm against Round-Robin, First-Fit, and Tetris in terms of GPU utilization is 12.8%, 6.5%, and 6.6%, respectively.
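To illustrate the dot-product rule, the sketch below scores each feasible task–machine pair by the dot product of the task's demand vector and the machine's available-resource vector. It is a simplified illustration, not the Tetris implementation: the resource vectors are assumed to be normalized (CPU, memory, GPU) triples, and all names and values are hypothetical.

```python
def dot_product_score(demand, available):
    """Alignment between a task's demands and a machine's free resources."""
    return sum(d * a for d, a in zip(demand, available))


def dot_product_pick(tasks, machines):
    """Return the feasible (task, machine) pair with the highest dot product."""
    feasible = [(t, m)
                for t, demand in tasks.items()
                for m, free in machines.items()
                if all(d <= a for d, a in zip(demand, free))]
    if not feasible:
        return None
    return max(feasible, key=lambda p: dot_product_score(tasks[p[0]], machines[p[1]]))


# Normalized (CPU, memory, GPU) vectors; values are made up for illustration.
tasks = {"t1": (0.2, 0.1, 0.5)}
machines = {"m1": (0.5, 0.5, 0.5),   # partially used machine, 0.5 GPU free
            "m2": (1.0, 1.0, 1.0)}   # empty machine

choice = dot_product_pick(tasks, machines)
print(choice)  # ('t1', 'm2')
```

In this toy case the rule prefers the empty machine m2, although placing t1 on m1 would use up exactly the GPU capacity left there. This is one way a dot-product score can diverge from a GPU-utilization objective.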

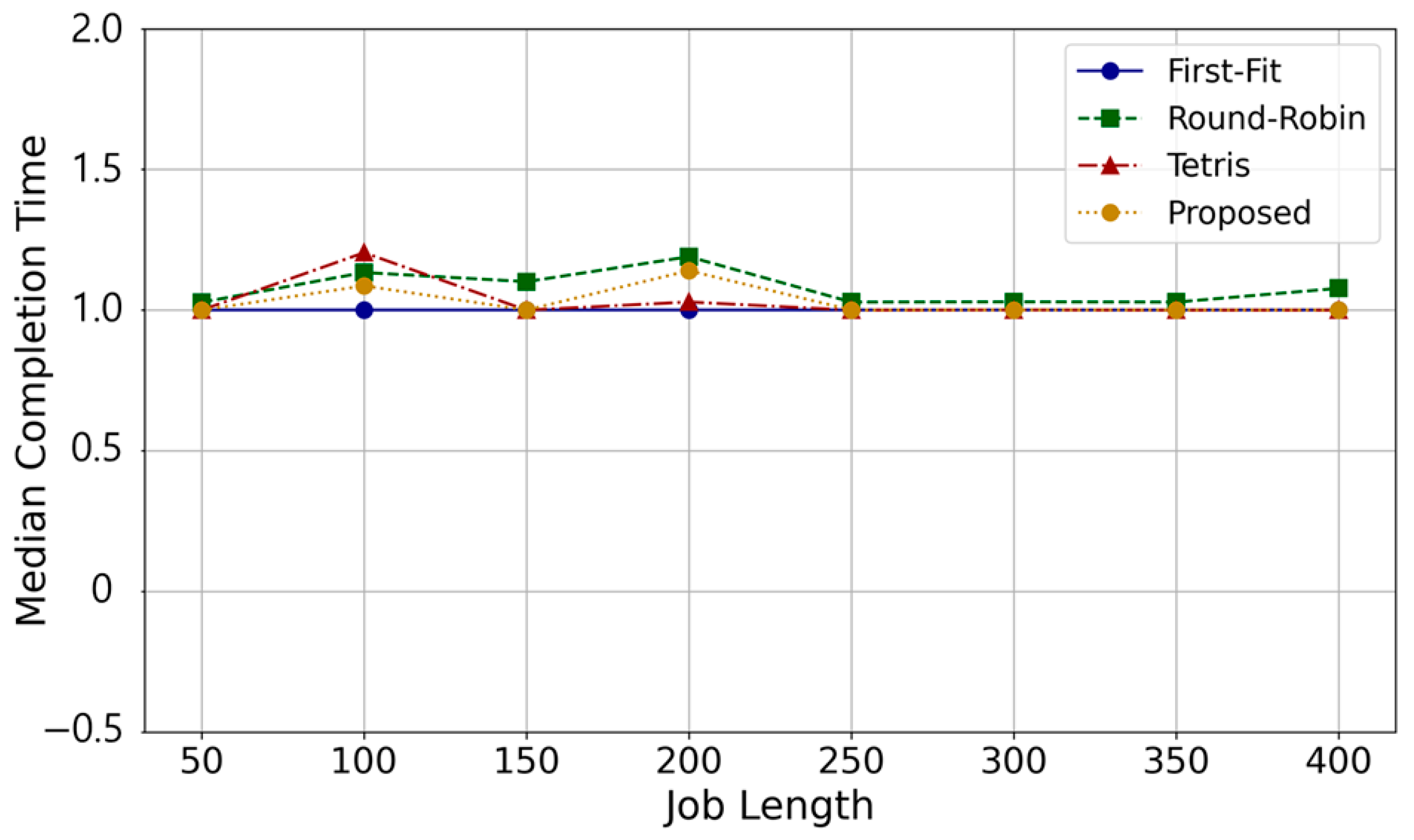

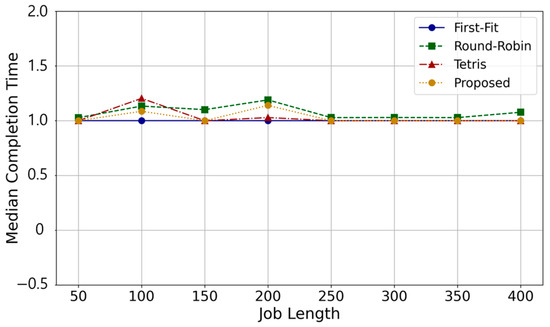

4.3. Completion Time

Even if GPU utilization increases, a performance penalty may occur from the user’s perspective. To check this, we measure the completion time of jobs for each algorithm and compare the results. Figure 11 depicts the completion time of jobs when scheduled using our proposed algorithm and the comparison algorithms, First-Fit, Round-Robin, and Tetris. The completion time of each job is calculated by subtracting the start time from the finish time of the job. The graph illustrates that our algorithm achieves completion times similar to those of the other three algorithms, implying that it can perform scheduling without a performance overhead. This demonstrates the efficiency of our algorithm in handling job scheduling, while maintaining a competitive performance from a user perspective.

Figure 11. Comparison of completion time.

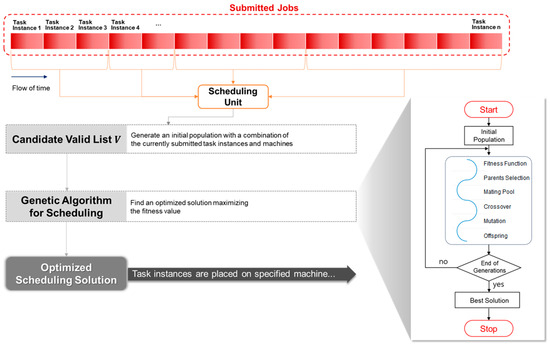

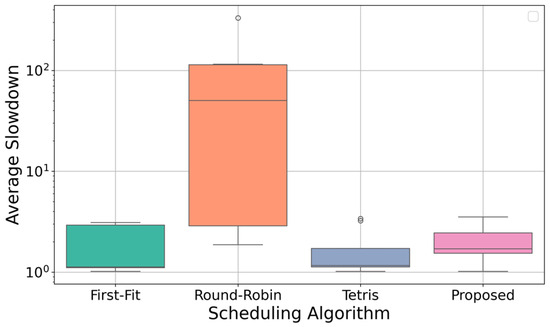

4.4. Average Slowdown

In our study, the aim of the genetic optimization is to improve GPU utilization, and thus the fitness function was defined accordingly. So, our optimization does not explicitly consider the waiting time of each task when evaluating solutions. However, as GPU utilization improves, the overall waiting time for tasks is also reduced, by maximizing the activation of idle GPUs. To demonstrate this, we show the average slowdown of tasks for each algorithm in Figure 12. As shown in the figure, our scheduling performs significantly better than Round-Robin scheduling with respect to the average slowdown of tasks. Of course, our scheduling does not address fairness issues related to the waiting times of individual tasks, which may lead to some tasks experiencing longer waits. However, the fairness issue is not explicitly considered by other algorithms either, and it is not significantly problematic in our algorithm, as demonstrated by our experimental results. Nevertheless, if addressing this issue is indeed necessary, it is possible to define a fitness function that imposes penalties when there is a significant variance in the waiting times of tasks.
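As a rough illustration of these two ideas, the sketch below computes an average slowdown and a fitness value penalized by the variance of waiting times. The paper does not spell out either formula, so both are assumptions: slowdown is taken in its common form, (waiting time + running time) / running time, and the penalty weight is an arbitrary illustrative value.

```python
from statistics import mean, pvariance


def slowdown(wait_time, run_time):
    # Assumed definition: time in the system relative to pure running time.
    return (wait_time + run_time) / run_time


def average_slowdown(tasks):
    """tasks: list of (wait_time, run_time) pairs."""
    return mean(slowdown(w, r) for w, r in tasks)


def penalized_fitness(gpu_utilization_gain, wait_times, weight=0.01):
    """Hypothetical fitness that subtracts a penalty for uneven waiting times."""
    return gpu_utilization_gain - weight * pvariance(wait_times)


tasks = [(0, 10), (10, 10), (30, 10)]        # slowdowns: 1.0, 2.0, 4.0
print(average_slowdown(tasks))
print(penalized_fitness(0.5, [10, 10, 10]))  # equal waits: no penalty
print(penalized_fitness(0.5, [0, 10, 30]))   # uneven waits: lower fitness
```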

Figure 12. Average slowdown as scheduling algorithm is varied.

5. Conclusions

Modern MLaaS clusters utilize GPU-sharing technology to manage ever-growing machine learning workloads across heterogeneous GPU resources. However, this approach can cause resource fragmentation, which in turn decreases overall GPU utilization. In this paper, we addressed the challenge of suboptimal GPU utilization within large-scale clusters equipped with heterogeneous GPUs. We introduced a novel scheduling method that employs genetic optimization techniques and compared it with existing scheduling algorithms. Our genetic optimization process consists of machine and task instance pair encoding, single-point crossover, adaptive mutation, and replacement. Our scheduling is performed periodically based on newly submitted job information during the current period, as well as the remaining jobs in the queue from the previous period that have not yet been dispatched. We ensured that our scheduling can be applied to a variety of ML workloads including CNNs, RNNs, transformer-based language models, and reinforcement learning tasks. This encompasses over 1200 tasks, comprising 7500 task instances, on large-scale heterogeneous GPU clusters with over 1800 nodes and 6000 GPUs. Our findings demonstrated that the proposed method enhances GPU utilization in the cluster by 12.8% compared to the widely used Round-Robin algorithm, without incurring performance penalties relative to conventional scheduling strategies.

Author Contributions

S.K. implemented the architecture and algorithm and performed the experiments. H.B. designed the work and provided expertise. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partly supported by the Institute of Information and Communications Technology Planning and Evaluation (IITP) grant funded by the Korean government (MSIT) (RS-2021-II212068, Artificial Intelligence Innovation Hub) and (RS-2022-00155966, Artificial Intelligence Convergence Innovation Human Resources Development (Ewha University)).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available in Alibaba Cluster Trace at https://github.com/alibaba/clusterdata/tree/master/cluster-trace-gpu-v2020 (accessed on 6 May 2024), reference number [20].

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Weng, Q.; Xiao, W.; Yu, Y.; Wang, W.; Wang, C.; He, J.; Li, Y.; Zhang, L.; Lin, W.; Ding, Y. MLaaS in the Wild: Workload Analysis and Scheduling in Large-Scale Heterogeneous GPU Clusters. In Proceedings of the 19th USENIX Symposium on Networked Systems Design and Implementation (NSDI 22), USENIX Association, Renton, WA, USA, 4–6 April 2022; pp. 945–960. [Google Scholar]

- Jeon, M.; Venkataraman, S.; Phanishayee, A.; Qian, J.; Xiao, W.; Yang, F. Analysis of Large-Scale Multi-Tenant GPU Clusters for DNN Training Workloads. In Proceedings of the 2019 USENIX Annual Technical Conference (USENIX ATC 19), Renton, WA, USA, 10–12 July 2019; pp. 947–960.

- Hazelwood, K.; Bird, S.; Brooks, D.; Chintala, S.; Diril, U.; Dzhulgakov, D.; Fawzy, M.; Jia, B.; Jia, Y.; Kalro, A.; et al. Applied Machine Learning at Facebook: A Datacenter Infrastructure Perspective. In Proceedings of the 2018 IEEE International Symposium on High Performance Computer Architecture (HPCA), IEEE, Vienna, Austria, 24–28 February 2018; pp. 620–629.

- Mohan, J.; Phanishayee, A.; Kulkarni, J.; Chidambaram, V. Looking Beyond GPUs for DNN Scheduling on Multi-Tenant Clusters. In Proceedings of the 16th USENIX Symposium on Operating Systems Design and Implementation (OSDI 22), USENIX Association, Carlsbad, CA, USA, 11–13 July 2022; pp. 579–596.

- Zhao, H.; Han, Z.; Yang, Z.; Zhang, Q.; Yang, F.; Zhou, L.; Yang, M.; Lau, F.C.; Wang, Y.; Xiong, Y.; et al. HiveD: Sharing a GPU Cluster for Deep Learning with Guarantees. In Proceedings of the 14th USENIX Symposium on Operating Systems Design and Implementation (OSDI 20), Online, 4–6 November 2020; pp. 515–532.

- Yoo, S.; Jo, Y.; Bahn, H. Integrated Scheduling of Real-time and Interactive Tasks for Configurable Industrial Systems. IEEE Trans. Ind. Inform. 2022, 18, 631–641.

- Ki, S.; Byun, G.; Cho, K.; Bahn, H. Co-Optimizing CPU Voltage, Memory Placement, and Task Offloading for Energy-Efficient Mobile Systems. IEEE Internet Things J. 2023, 10, 9177–9192.

- Gong, R.; Li, D.; Hong, L.; Xie, N. Task Scheduling in Cloud Computing Environment Based on Enhanced Marine Predator Algorithm. Clust. Comput. 2024, 27, 1109–1123.

- Park, S.; Bahn, H. Trace-based Performance Analysis for Deep Learning in Edge Container Environments. In Proceedings of the 8th IEEE International Conference on Fog and Mobile Edge Computing (FMEC), Tartu, Estonia, 18–20 September 2023; pp. 87–92.

- Lee, J.; Bahn, H. File Access Characteristics of Deep Learning Workloads and Cache-Friendly Data Management. In Proceedings of the 10th IEEE International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Palembang, Indonesia, 20–21 September 2023; pp. 328–331.

- Liang, Q.; Hanafy, W.; Ali-Eldin, A.; Shenoy, P. Model-driven Cluster Resource Management for AI Workloads in Edge Clouds. ACM Trans. Auton. Adapt. Syst. 2023, 18, 1–26.

- Hu, Q.; Sun, P.; Yan, S.; Wen, Y.; Zhang, T. Characterization and Prediction of Deep Learning Workloads in Large-scale GPU Datacenters. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, St. Louis, MO, USA, 14–19 November 2021; pp. 1–15.

- Narayanan, D.; Santhanam, K.; Kazhamiaka, F.; Phanishayee, A.; Zaharia, M. Heterogeneity-Aware Cluster Scheduling Policies for Deep Learning Workloads. In Proceedings of the 14th USENIX Symposium on Operating Systems Design and Implementation (OSDI 20), Online, 4–6 November 2020; pp. 481–498.

- Li, J.; Xu, H.; Zhu, Y.; Liu, Z.; Guo, C.; Wang, C. Lyra: Elastic Scheduling for Deep Learning Clusters. In Proceedings of the 18th European Conference on Computer Systems (EuroSys 23), Rome, Italy, 8–12 May 2023; pp. 835–850.

- Yu, F.; Wang, D.; Shangguan, L.; Zhang, M.; Tang, X.; Liu, C.; Chen, X. A Survey of Large-scale Deep Learning Serving System Optimization: Challenges and Opportunities. arXiv 2021, arXiv:2111.14247.

- NVIDIA Multi-Instance GPU. Available online: https://www.nvidia.com/en-us/technologies/multi-instance-gpu/ (accessed on 6 May 2024).

- Xiao, W.; Bhardwaj, R.; Ramjee, R.; Sivathanu, M.; Kwatra, N.; Han, Z.; Patel, P.; Peng, X.; Zhao, H.; Zhang, Q.; et al. Gandiva: Introspective Cluster Scheduling for Deep Learning. In Proceedings of the 13th USENIX Symposium on Operating Systems Design and Implementation (OSDI 18), USENIX Association, Carlsbad, CA, USA, 8–10 October 2018; pp. 595–610.

- SimPy: Discrete Event Simulation for Python. Available online: https://simpy.readthedocs.io/en/latest/index.html (accessed on 6 May 2024).

- Alibaba. Machine Learning Platform for AI. Available online: https://www.alibabacloud.com/product/machine-learning (accessed on 6 May 2024).

- Alibaba Cluster Trace. Available online: https://github.com/alibaba/clusterdata/tree/master/cluster-trace-gpu-v2020 (accessed on 6 May 2024).

- Grandl, R.; Ananthanarayanan, G.; Kandula, S.; Rao, S.; Akella, A. Multi-resource Packing for Cluster Schedulers. ACM SIGCOMM Comput. Commun. Rev. 2014, 44, 455–466.

- Wang, S.; Chen, S.; Shi, Y. GPARS: Graph Predictive Algorithm for Efficient Resource Scheduling in Heterogeneous GPU Clusters. Future Gener. Comput. Syst. 2024, 152, 127–137.

- Bai, Z.; Zhang, Z.; Zhu, Y.; Jin, X. PipeSwitch: Fast Pipelined Context Switching for Deep Learning Applications. In Proceedings of the 14th USENIX Symposium on Operating Systems Design and Implementation (OSDI 20), Online, 4–6 November 2020; pp. 499–514.

- Shukla, D.; Sivathanu, M.; Viswanatha, S.; Gulavani, B.; Nehme, R.; Agrawal, A.; Chen, C.; Kwatra, N.; Ramjee, R.; Sharma, P.; et al. Singularity: Planet-scale, Preemptive and Elastic Scheduling of AI Workloads. arXiv 2022, arXiv:2202.07848.

- Weng, Q.; Yang, L.; Yu, Y.; Wang, W.; Tang, X.; Yang, G.; Zhang, L. Beware of Fragmentation: Scheduling GPU-Sharing Workloads with Fragmentation Gradient Descent. In Proceedings of the 2023 USENIX Annual Technical Conference (USENIX ATC 23), USENIX Association, Boston, MA, USA, 10–12 July 2023; pp. 995–1008.

- Silberschatz, A.; Gagne, G.; Galvin, P. Operating System Concepts, 10th ed.; Wiley: Hoboken, NJ, USA, 2018.

- Panigrahy, R.; Talwar, K.; Uyeda, L.K.; Wieder, U. Heuristics for Vector Bin Packing. In Microsoft Research Technical Report; Microsoft: Redmond, WA, USA, 2011; Available online: https://www.microsoft.com/en-us/research/wp-content/uploads/2011/01/VBPackingESA11.pdf (accessed on 6 May 2024).

- Nagel, L.; Popov, N.; Süß, T.; Wang, Z. Analysis of Heuristics for Vector Scheduling and Vector Bin Packing. In Learning and Intelligent Optimization; Sellmann, M., Tierney, K., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 583–598.

- Goldberg, D.E. Genetic Algorithms in Search, Optimization and Machine Learning, 1st ed.; Addison-Wesley Longman Publishing Co., Inc.: Albany, NY, USA, 1989.

- Whitley, D. GENITOR: A Different Genetic Algorithm. In Proceedings of the 4th Rocky Mountain Conference on Artificial Intelligence, Denver, CO, USA, 8–9 June 1988; pp. 118–130.

- Gad, A. PyGAD: Genetic Algorithm in Python. Available online: https://github.com/ahmedfgad/GeneticAlgorithmPython (accessed on 6 May 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).