TYCOS: A Specialized Dataset for Typical Components of Satellites

Abstract

1. Introduction

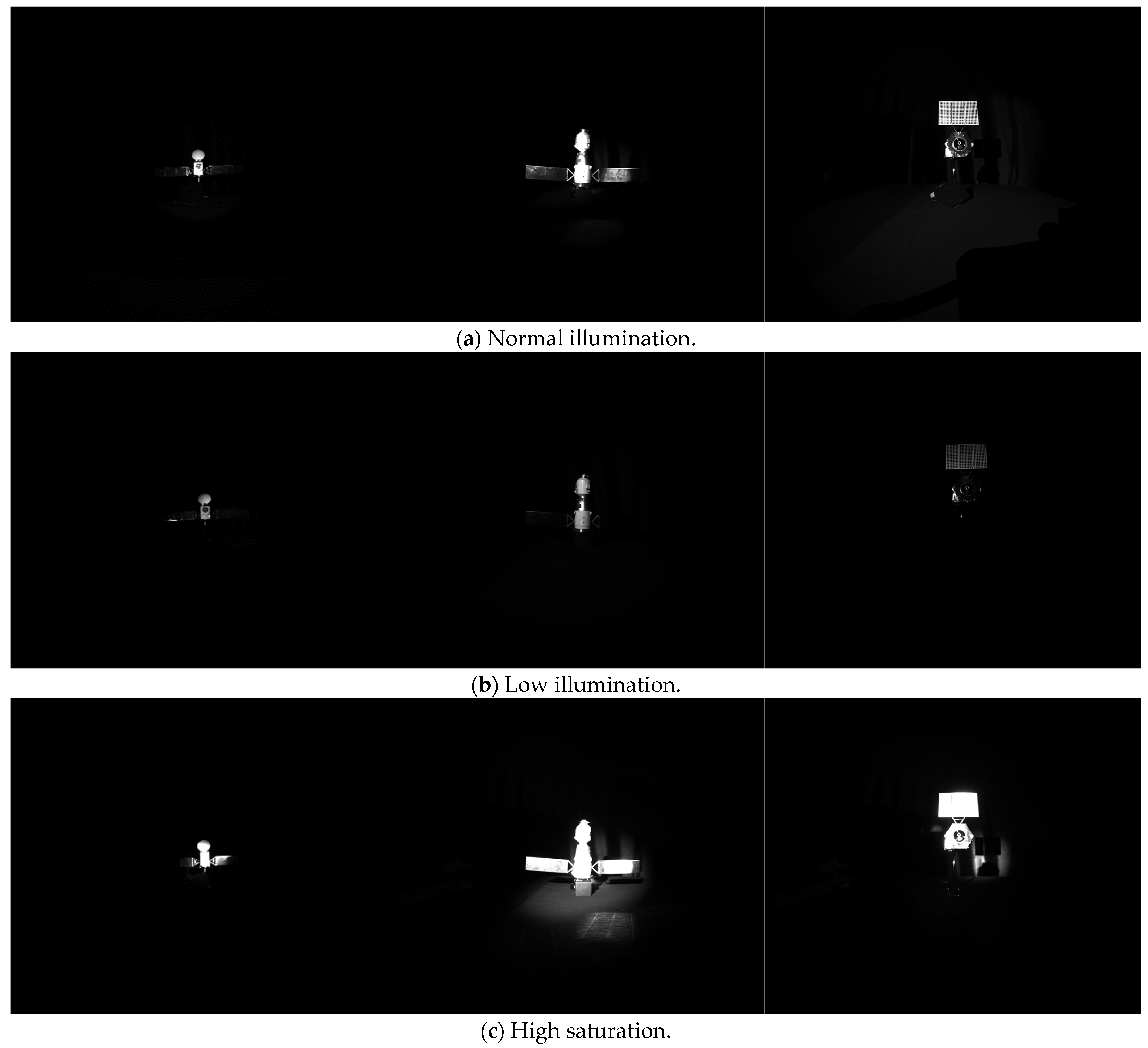

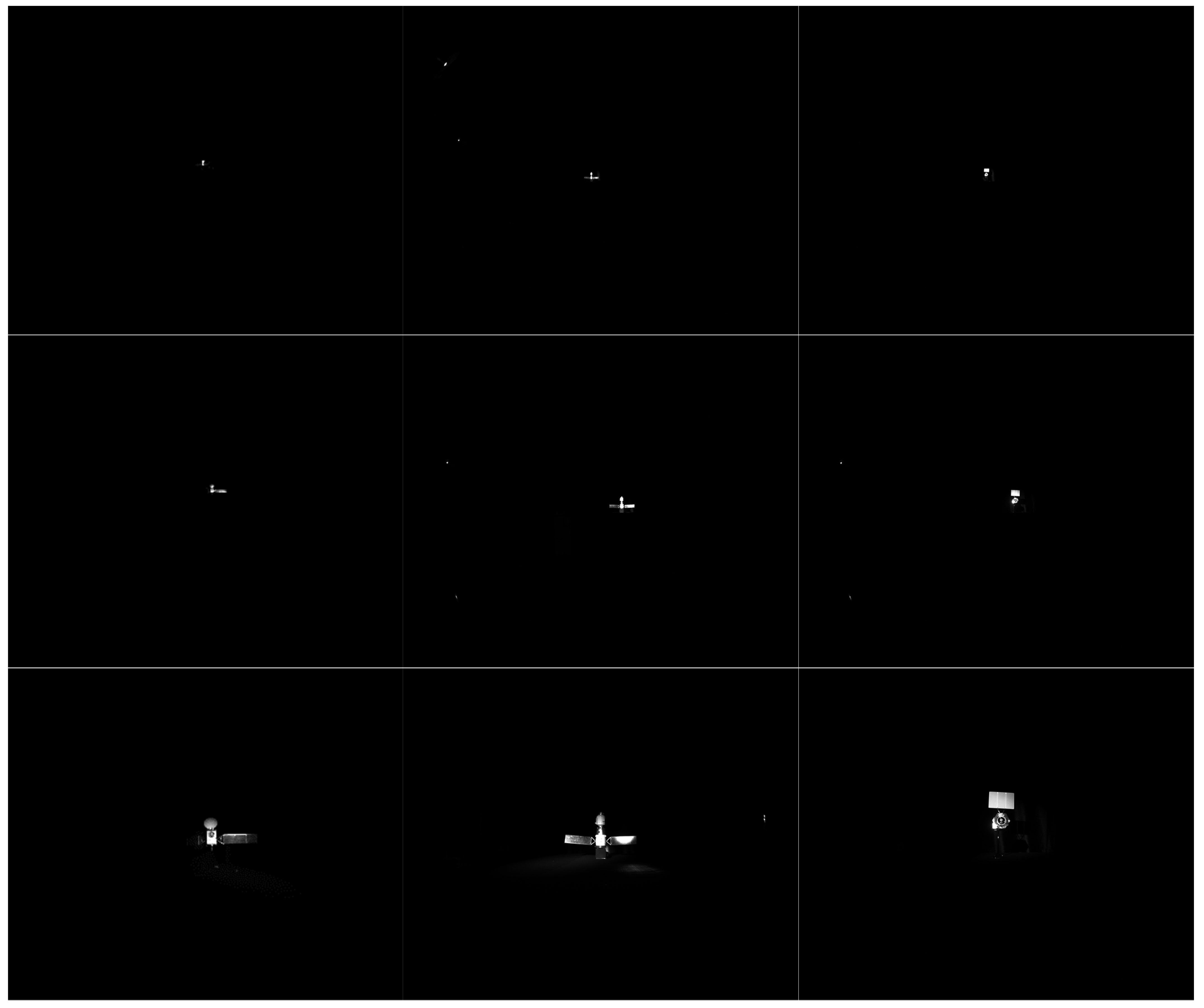

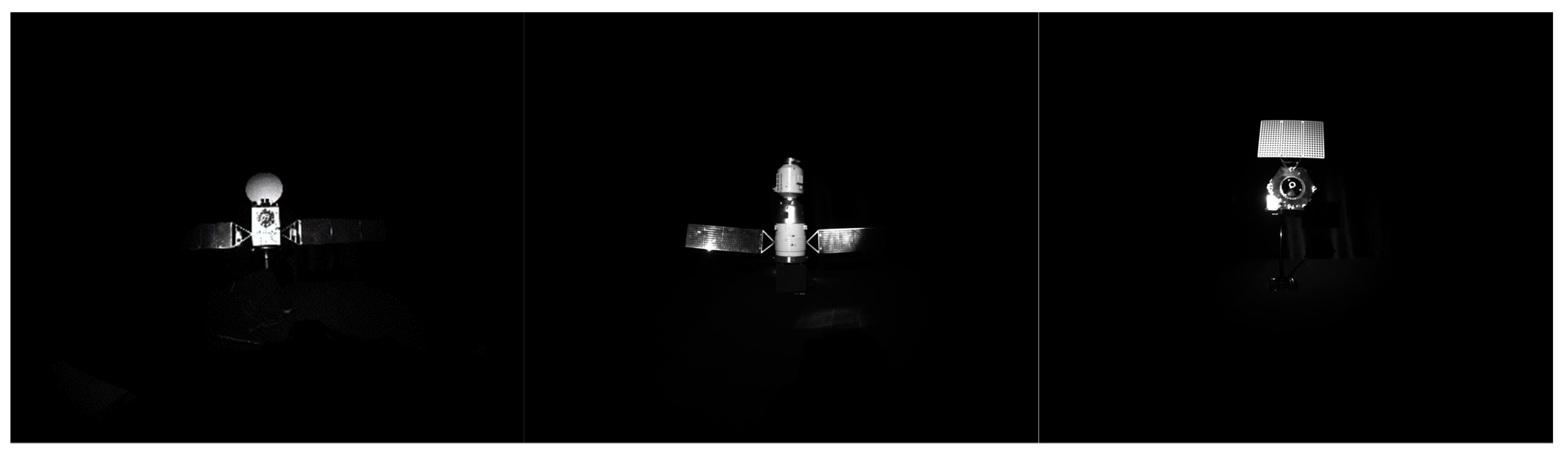

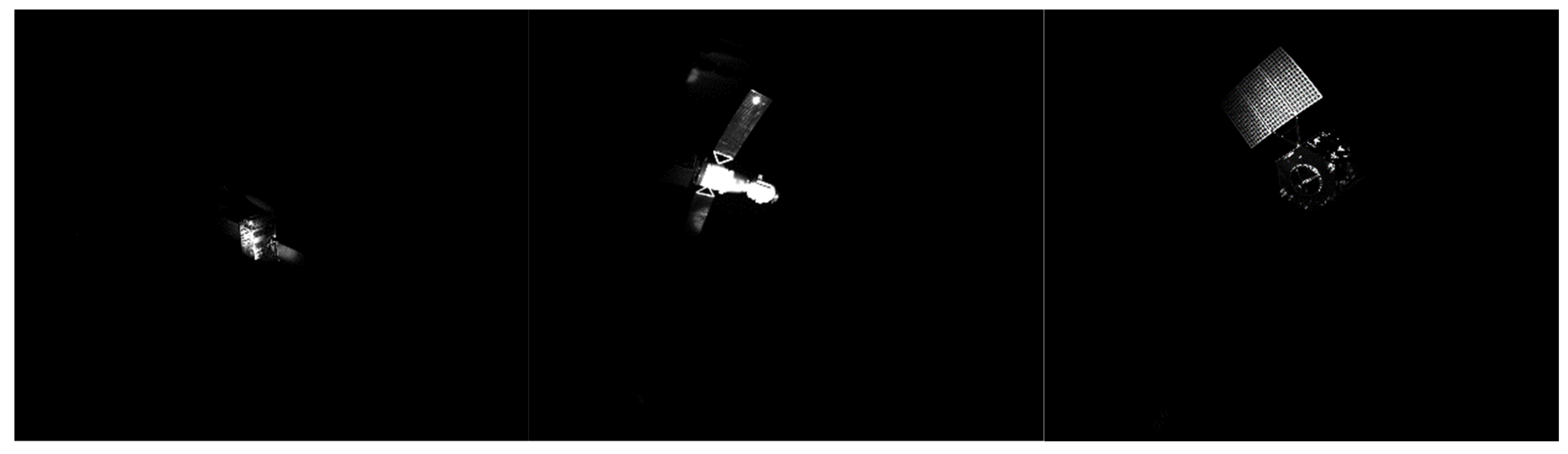

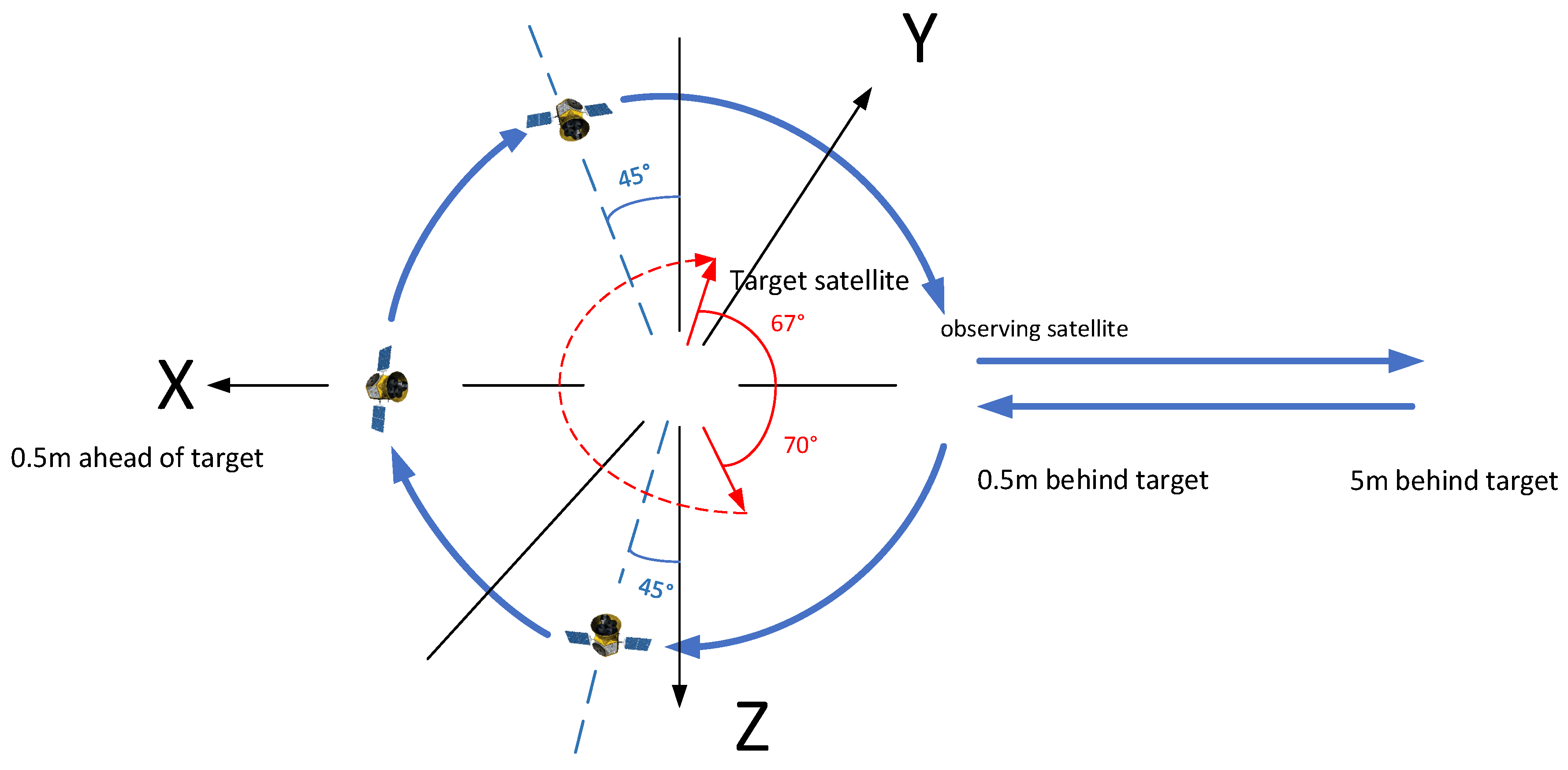

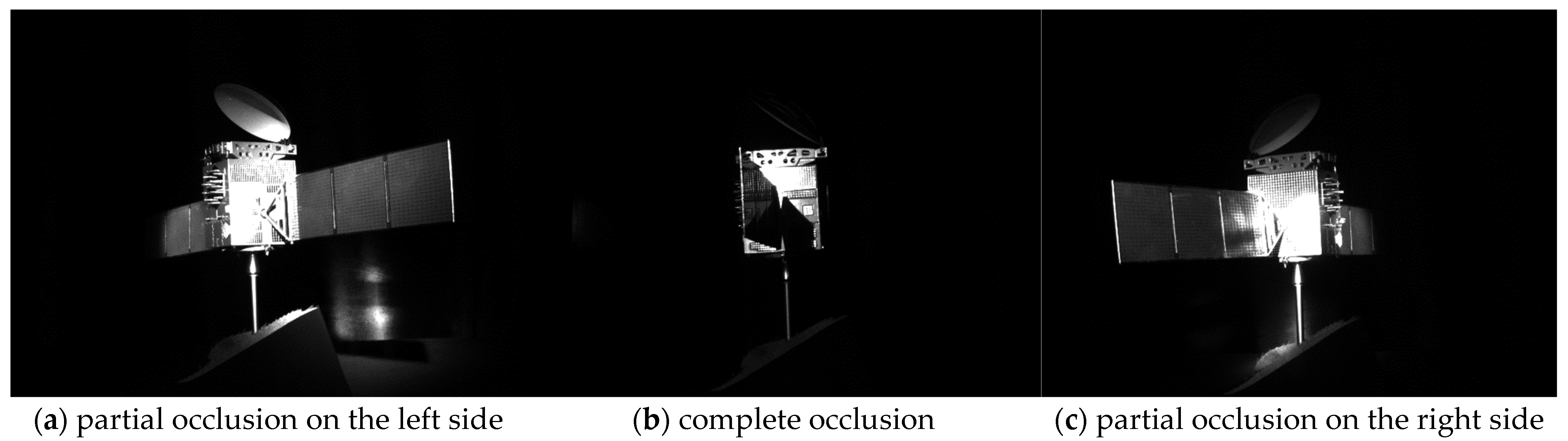

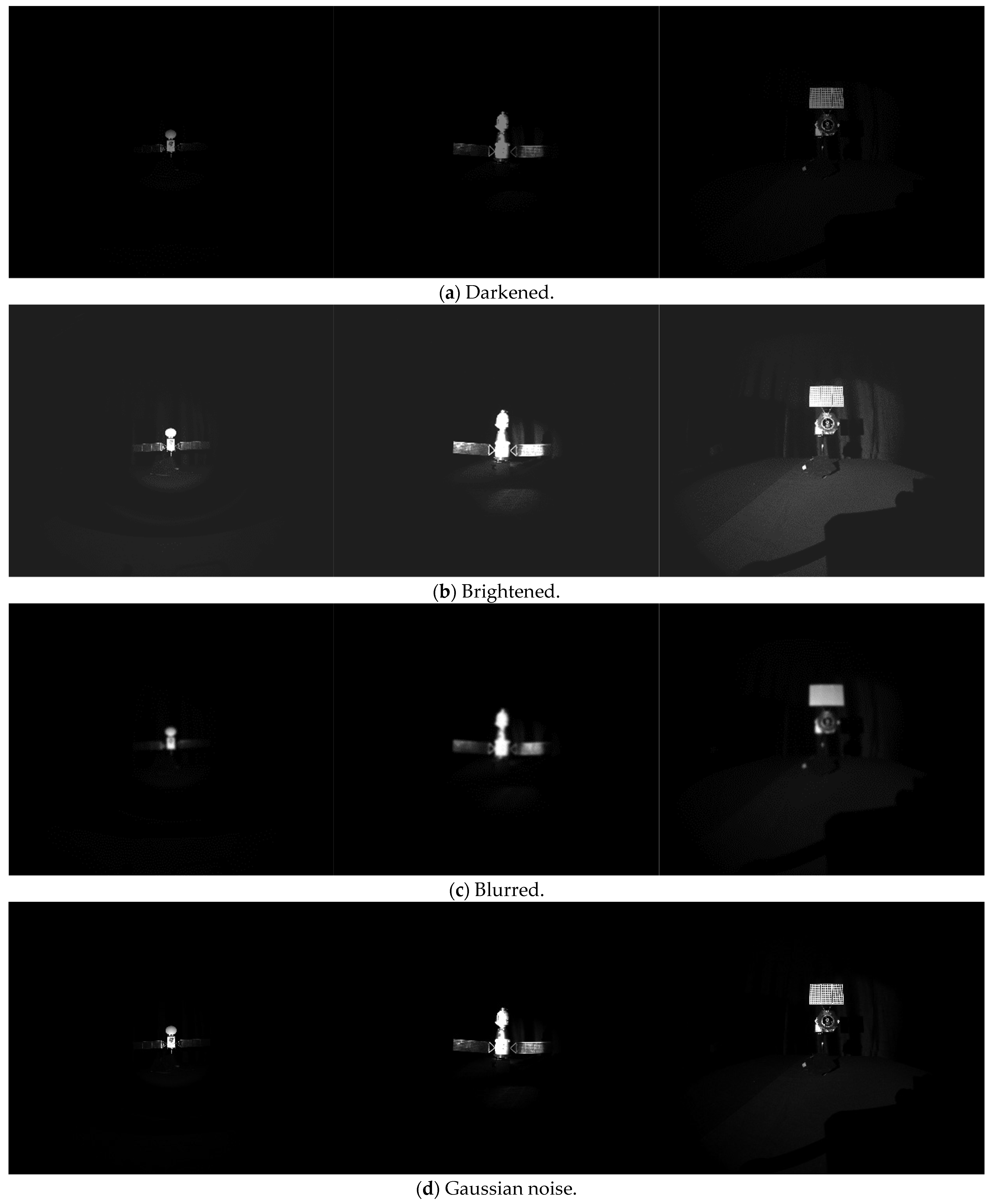

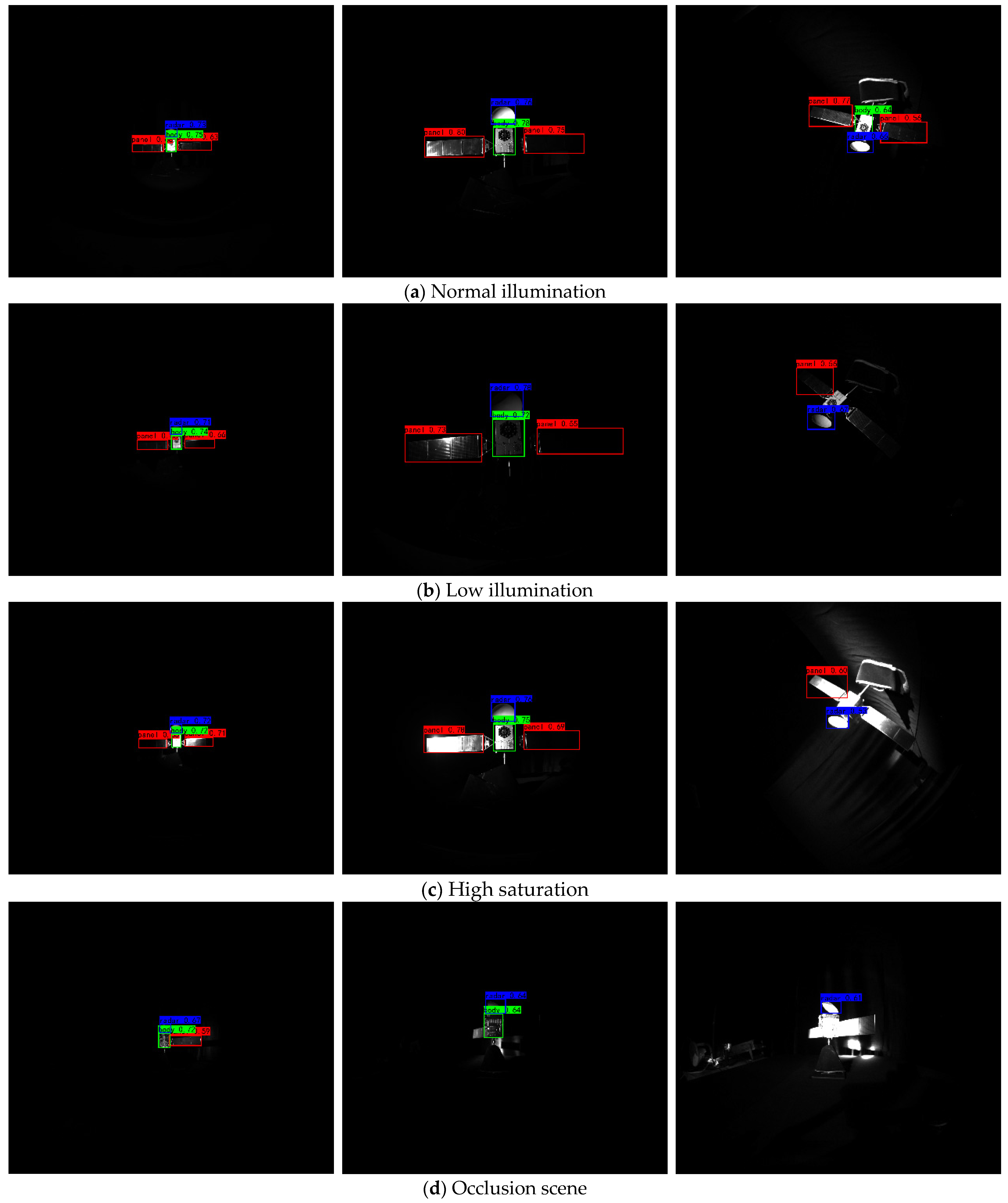

- A unified dataset for detecting key satellite components is established. Built with a semi-physical collection system, it replicates the scenarios encountered in space capture missions. For illumination, it covers three lighting conditions: normal illumination, low illumination, and high saturation. For motion, it simulates the hovering and approaching states of the observing satellite during capture missions, as well as the rolling state of the target satellite, linking imaging effects to the motion states of the targets. For occlusion, it includes scenarios in which the body of the target satellite occludes its own solar panels. Compared with existing datasets, ours better reflects real-space imaging effects and conditions.

- A comprehensive validation of current mainstream object detection algorithms on the dataset is conducted to establish initial detection benchmarks. Eight classic and advanced detection models are evaluated. To meet the higher accuracy demands of space target detection, appropriate backbones are selected to improve these initial benchmarks.

- The proposed dataset includes images captured from all spatial directions at distances of up to 5 m. It faithfully replicates direct sunlight in satellite observation images and provides a large number of high-quality images of the key components of satellite models, supporting a comprehensive evaluation of the robustness of key satellite component detection across a wide range of high-fidelity environments.

2. Related Work

2.1. Existing Space Scene Datasets

2.2. Satellite Target Detection and Recognition Algorithms

3. The TYCOS Dataset

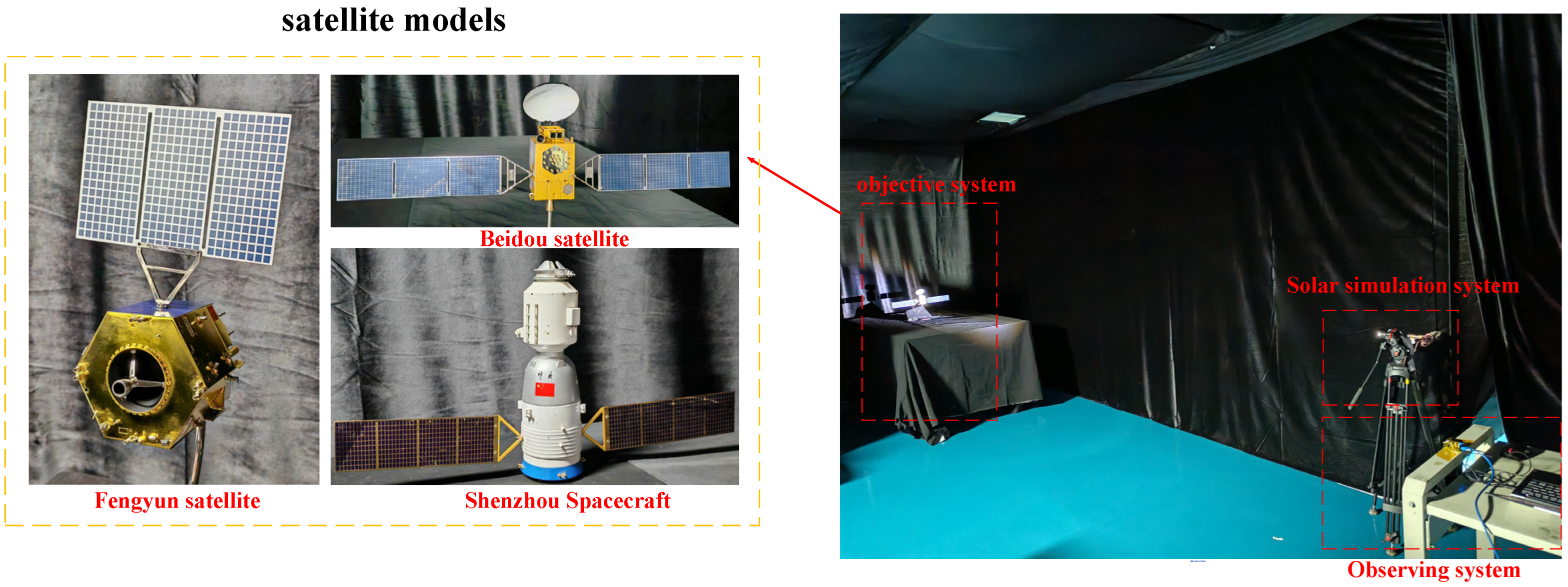

3.1. The Image Acquisition System

3.2. Simulation of Multiple Operating Conditions

3.3. Data Augmentation

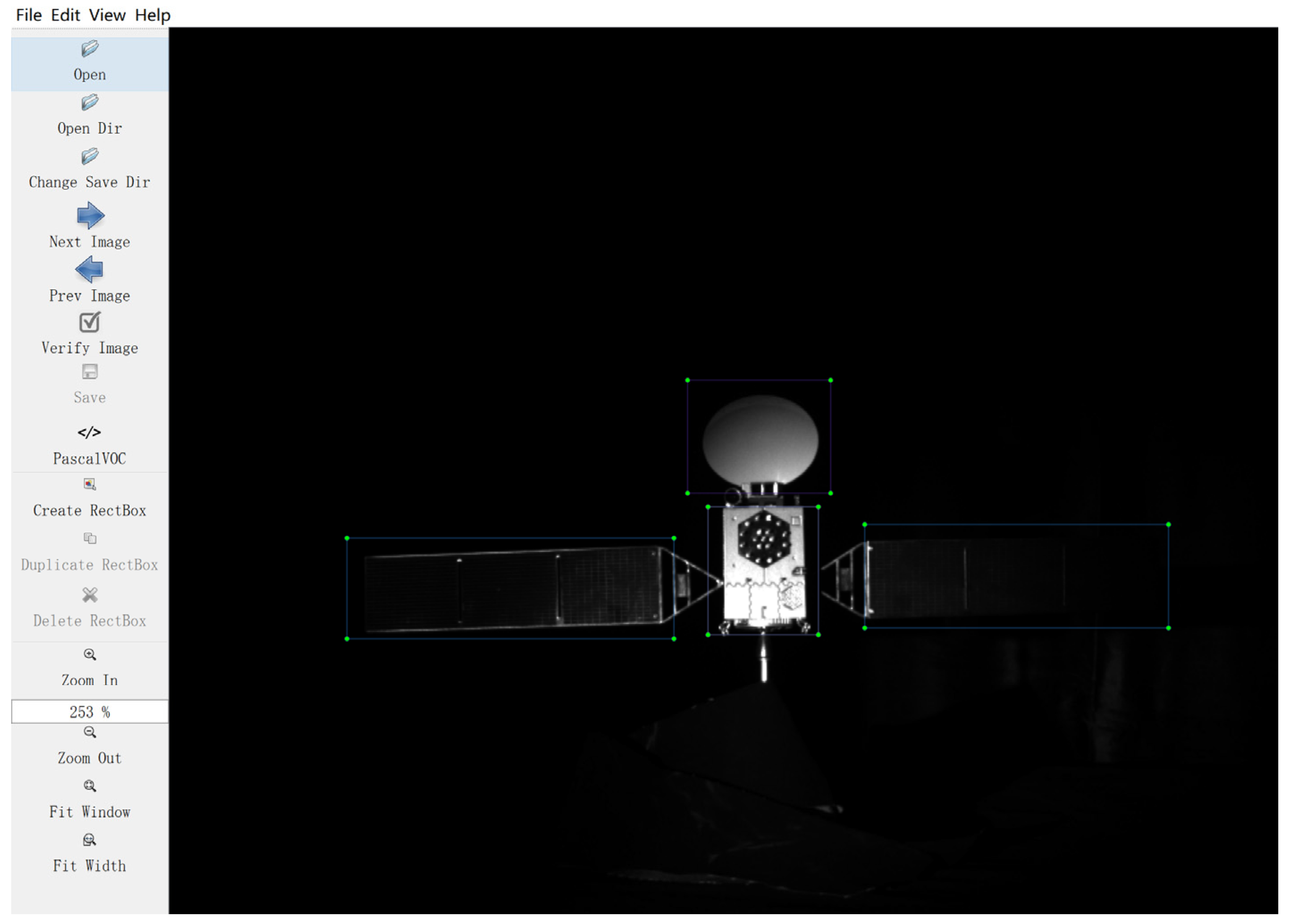

3.4. Image Annotation

4. Evaluation

4.1. Training Parameters

4.2. Evaluation Criteria

4.3. Key Component Detection Benchmark

4.4. Visualization

4.5. A Quantitative Comparison with Other Datasets

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | SPEED | SPEED+ | Dung | SPARK | Satellite-DS |
|---|---|---|---|---|---|
| Scene | Synthetic | Synthetic and real | Synthetic | Synthetic | Real |
| Illumination | Yes | Yes | No | No | Yes |
| Motion States | No | No | No | No | Yes |
| Occlusion | No | No | No | No | No |
| Resolution | 1920 × 1200 | 1920 × 1200 | 1280 × 720 | 256 × 256 | 1936 × 1456 |
| Categories | 4 | 4 | 2 | 11 | 9 |
| Application | Pose estimation | Pose estimation | Pose estimation | Pose estimation | Detection, segmentation |
| Open Source | Yes | Yes | Yes | Yes | No |

| Satellite | Proportion | Dimensions (l × w × h) (mm) | Dimensions of the Solar Panels (mm) | Mass (kg) |
|---|---|---|---|---|
| BeiDou-3 | 1:35 | 510 × 70 × 140 | 200 × 50 | 0.79 |
| Fengyun-4 | 1:30 | 240 × 150 × 280 | 153 × 102 | 0.79 |
| Shenzhou-14 | 1:40 | 180 × 370 × 300 | 164 × 50 | 1.46 |

| Parameters | Value |
|---|---|
| Detector Model | IMX250 |
| Pixel Size | 3.45 μm × 3.45 μm |
| Target Surface Size | 2/3″ |
| Resolution | 2432 × 2048 |
| Dynamic Range | 75.4 dB |
| Gain | 0 dB~20 dB |
| Exposure Time | 15 μs~10 s |
| Frame Rate | 140 fps |
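As a quick plausibility check on the camera parameters above, the physical size of the active sensor area can be estimated from the pixel size and the resolution. The following is a minimal sketch, not part of the acquisition software; the function and constant names are hypothetical, and it assumes square pixels with no inactive border.

```python
import math

# Values transcribed from the camera parameter table.
PIXEL_PITCH_UM = 3.45          # pixel size in micrometres (square pixels assumed)
RESOLUTION_PX = (2432, 2048)   # (width, height) in pixels


def sensor_size_mm(resolution_px, pixel_pitch_um):
    """Return (width, height, diagonal) of the active area in millimetres."""
    width_px, height_px = resolution_px
    width_mm = width_px * pixel_pitch_um / 1000.0
    height_mm = height_px * pixel_pitch_um / 1000.0
    return width_mm, height_mm, math.hypot(width_mm, height_mm)


width_mm, height_mm, diagonal_mm = sensor_size_mm(RESOLUTION_PX, PIXEL_PITCH_UM)
print(f"{width_mm:.2f} mm x {height_mm:.2f} mm, diagonal {diagonal_mm:.2f} mm")
```

Under these assumptions the active area is about 8.39 mm × 7.07 mm with a diagonal of about 10.97 mm, which is consistent with the nominal diagonal of roughly 11 mm for a 2/3″ optical format.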

| Parameters | Value |
|---|---|
| LED Bulb | T6 |
| Weight | 145 g |
| Source | one |
| Dimensions | 9.5 × 6.2 × 8.8 cm |

| States | Classification | Statistic | Number of Instances in Dataset |
|---|---|---|---|
| Illuminations | Low illumination | 3415 | 9564 |
| | High saturation | 2968 | |
| | Normal illumination | 3181 | |
| Motion States | Approaching states | 5236 | 15,940 |
| | Hovering states | 5191 | |
| | Random roll state | 5513 | |
| Occlusion States | Partial occlusion on the left side | 2657 | 6376 |
| | Partial occlusion on the right side | 2385 | |
| | Complete occlusion | 1334 | |
| Total | | | 31,880 |
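The subtotals and the overall total in the table above follow directly from the per-class counts. The short sketch below recomputes them and also derives each class's share within its group; the dictionary layout and function names are illustrative assumptions, not part of the released dataset tooling.

```python
# Per-class counts transcribed from the dataset statistics table.
TYCOS_COUNTS = {
    "Illuminations": {
        "Low illumination": 3415,
        "High saturation": 2968,
        "Normal illumination": 3181,
    },
    "Motion States": {
        "Approaching states": 5236,
        "Hovering states": 5191,
        "Random roll state": 5513,
    },
    "Occlusion States": {
        "Partial occlusion on the left side": 2657,
        "Partial occlusion on the right side": 2385,
        "Complete occlusion": 1334,
    },
}


def group_subtotals(counts):
    """Sum the per-class counts inside each state group."""
    return {group: sum(classes.values()) for group, classes in counts.items()}


def class_shares(counts):
    """Fraction of each class within its own state group."""
    totals = group_subtotals(counts)
    return {
        group: {name: n / totals[group] for name, n in classes.items()}
        for group, classes in counts.items()
    }


subtotals = group_subtotals(TYCOS_COUNTS)
grand_total = sum(subtotals.values())
shares = class_shares(TYCOS_COUNTS)

for group, total in subtotals.items():
    print(f"{group}: {total}")
print(f"Total: {grand_total}")
```

The recomputed subtotals (9564, 15,940, and 6376) and the total (31,880) match the table. The shares show that the three illumination and three motion classes are roughly balanced, while complete occlusion accounts for about one fifth of the occlusion group.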

| Number | Model | Main Network | 1: Solar Panel | 2: Body | 3: Radar | mAP |
|---|---|---|---|---|---|---|
| 1 | YOLOv3 | DarkNet | 64.31 | 88.16 | 73.54 | 75.34 |
| 2 | YOLOv4 | DarkNet | 66.63 | 90.79 | 73.61 | 77.01 |
| 3 | YOLOv5 | DarkNet | 71.24 | 93.60 | 71.54 | 78.79 |
| 4 | YOLOv6 | DarkNet | 73.51 | 93.68 | 72.98 | 80.06 |
| 5 | YOLOv7 | DarkNet | 85.61 | 95.32 | 80.21 | 87.05 |
| 6 | YOLOv8 | DarkNet | 91.07 ¹ | 99.67 ¹ | 91.09 ¹ | 93.94 ¹ |
| 7 | YOLOF | DarkNet | 73.54 | 92.18 | 74.05 | 79.92 |
| 8 | YOLOX | DarkNet | 75.60 | 97.22 | 78.96 | 83.93 |
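The mAP column in the table above can be related to the per-class columns by a simple calculation. The sketch below assumes that mAP is the unweighted mean of the three per-class average precision values (solar panel, body, radar); this is the conventional definition, but the table itself does not state it, so it is treated here as an assumption. All names are illustrative.

```python
from statistics import mean

# (solar panel AP, body AP, radar AP, reported mAP), transcribed from the table.
BENCHMARK = {
    "YOLOv3": (64.31, 88.16, 73.54, 75.34),
    "YOLOv4": (66.63, 90.79, 73.61, 77.01),
    "YOLOv5": (71.24, 93.60, 71.54, 78.79),
    "YOLOv6": (73.51, 93.68, 72.98, 80.06),
    "YOLOv7": (85.61, 95.32, 80.21, 87.05),
    "YOLOv8": (91.07, 99.67, 91.09, 93.94),
    "YOLOF": (73.54, 92.18, 74.05, 79.92),
    "YOLOX": (75.60, 97.22, 78.96, 83.93),
}


def mean_average_precision(per_class_ap):
    """Unweighted mean of per-class AP values (assumed mAP definition)."""
    return mean(per_class_ap)


recomputed = {
    model: round(mean_average_precision(row[:3]), 2)
    for model, row in BENCHMARK.items()
}

for model, row in BENCHMARK.items():
    print(f"{model}: recomputed {recomputed[model]:.2f}, reported {row[3]:.2f}")

best_model = max(recomputed, key=recomputed.get)
```

Under this assumption the recomputed values agree with the reported mAP column to two decimal places for all eight models, and YOLOv8 has the highest score.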

| Dataset | Type | Faster R-CNN | YOLOv3 | YOLOv5 | YOLOv8 |
|---|---|---|---|---|---|
| SPEED | Synthetic | 93.09 ¹ | 71.28 ¹ | 78.57 ¹ | 93.56 ¹ |
| SPEED+ | Synthetic and real | 92.20 | 68.37 | 75.05 | 93.12 |
| TYCOS | Real | 90.61 | 64.31 | 71.24 | 91.07 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bian, H.; Cao, J.; Zhang, G.; Zhang, Z.; Li, C.; Dong, J. TYCOS: A Specialized Dataset for Typical Components of Satellites. Appl. Sci. 2024, 14, 4757. https://doi.org/10.3390/app14114757
