The Use of Virtual Reflectance Transformation Imaging (V-RTI) in the Field of Cultural Heritage: Approaching the Materiality of an Ancient Egyptian Rock-Cut Chapel

Abstract

1. Introduction

2. Materials

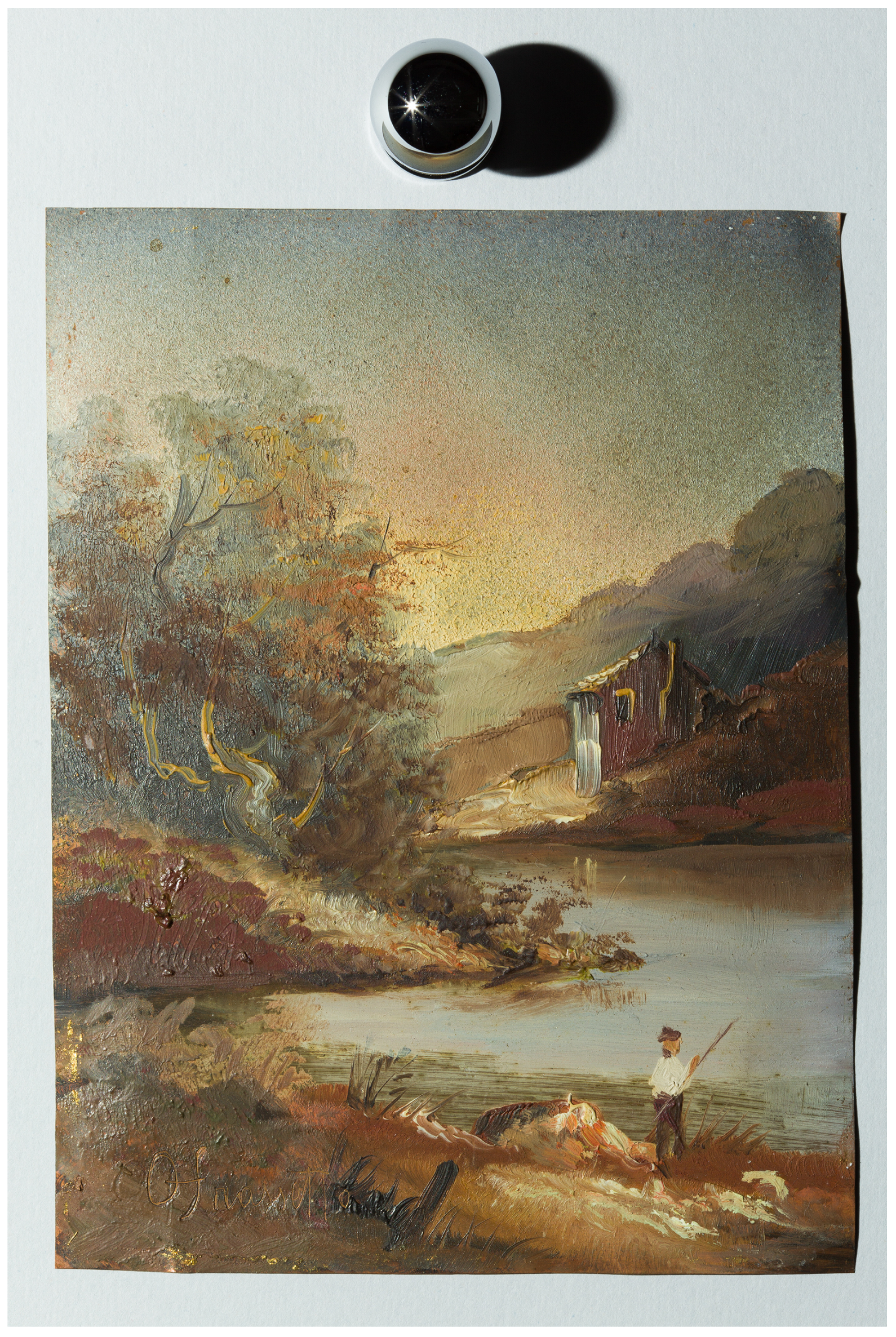

2.1. Small Test Object for RTI vs. V-RTI Comparison

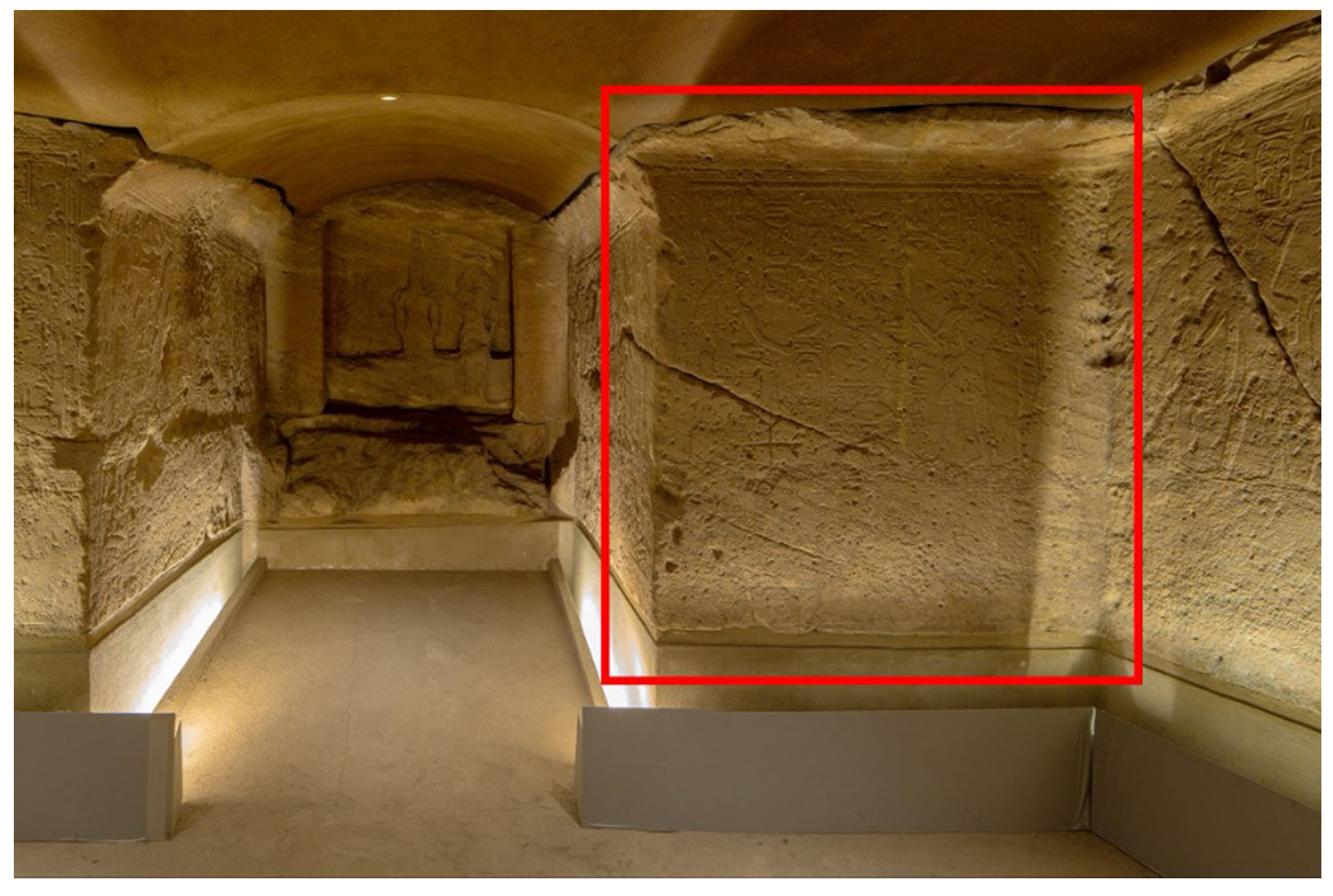

2.2. An Ancient Egyptian Bas-Relief

3. Methods

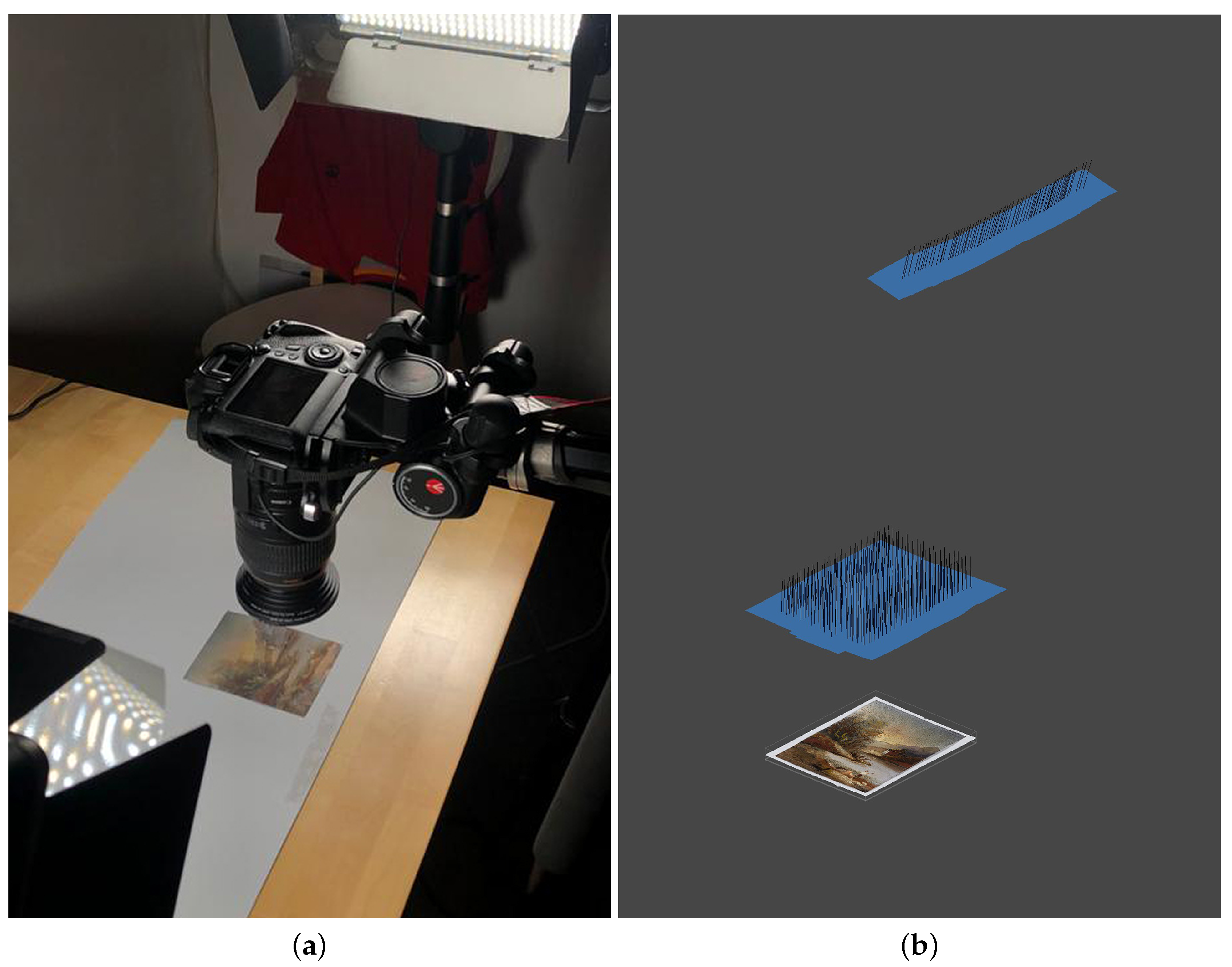

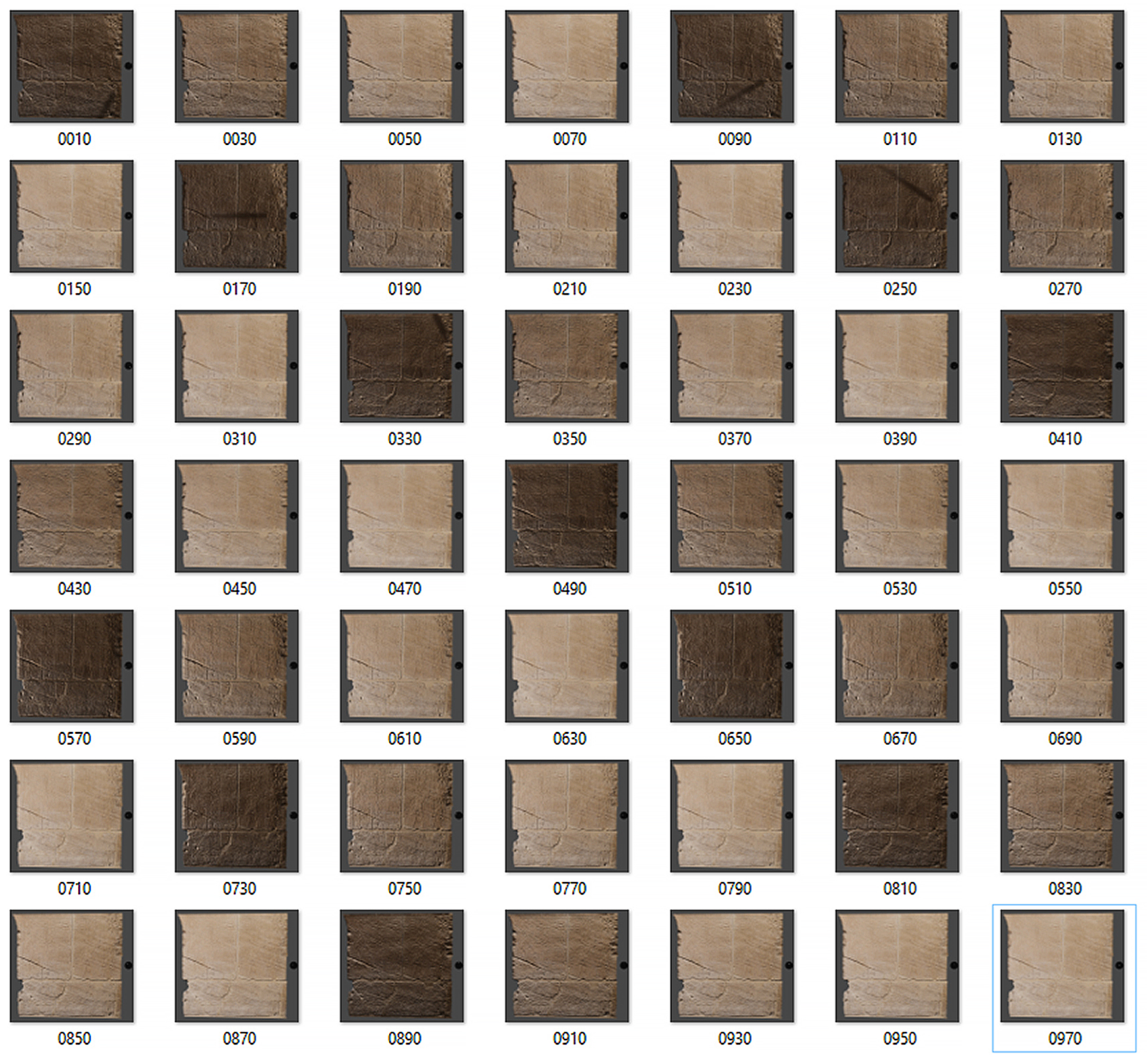

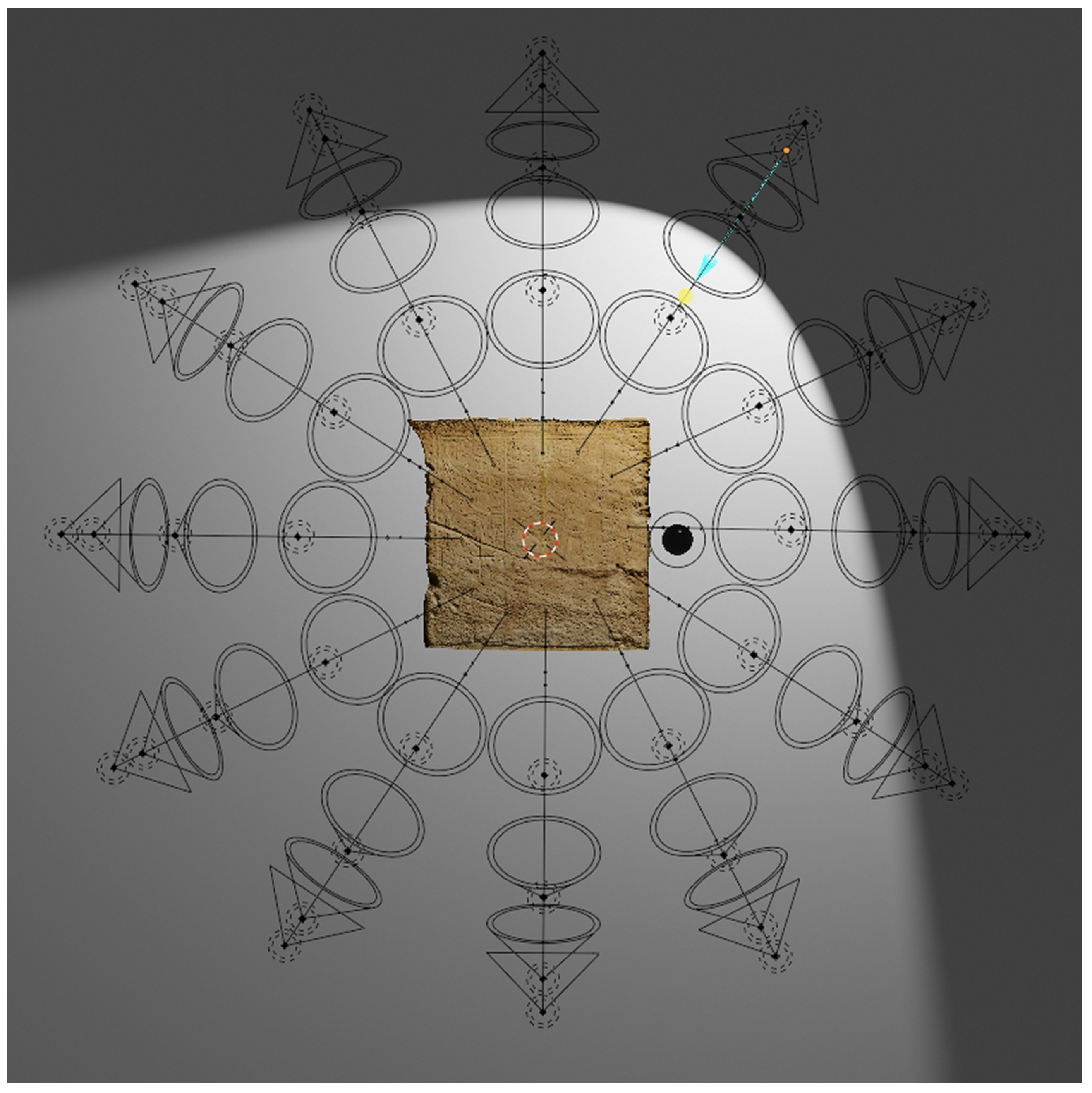

3.1. V-RTI: Data Acquisition

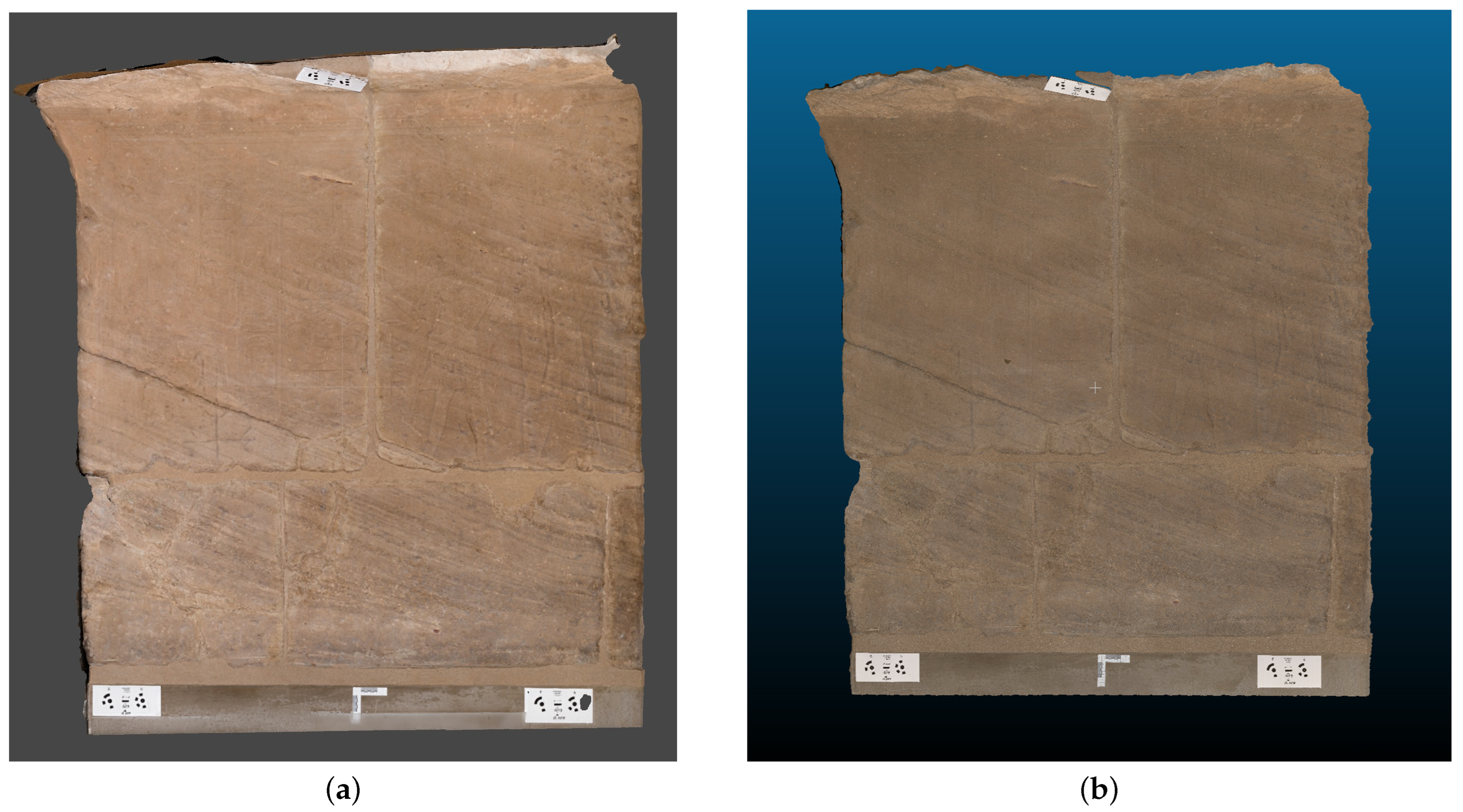

3.1.1. Photogrammetric Image Acquisition of Ellesiya Bas-Relief

3.1.2. Photogrammetric Image Acquisition of Small Test Object

3.2. Data Processing: A Novel Approach to Generate V-RTI

3.2.1. Pre-Processing Image Data

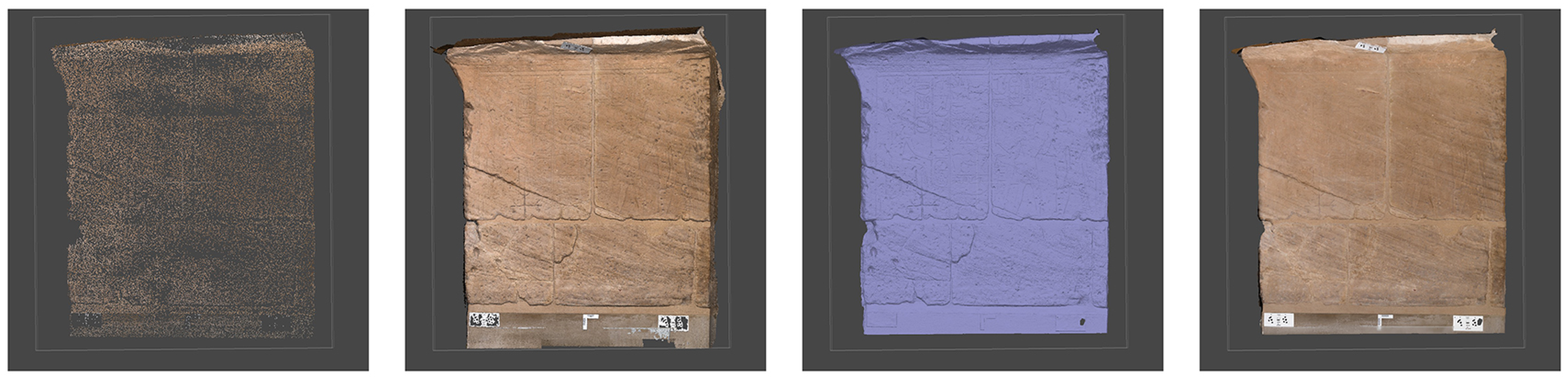

3.2.2. Structure from Motion

3.2.3. VR Environment

3.2.4. Computing the V-RTI

3.3. V-RTI Validation: Ground-Truth Comparisons

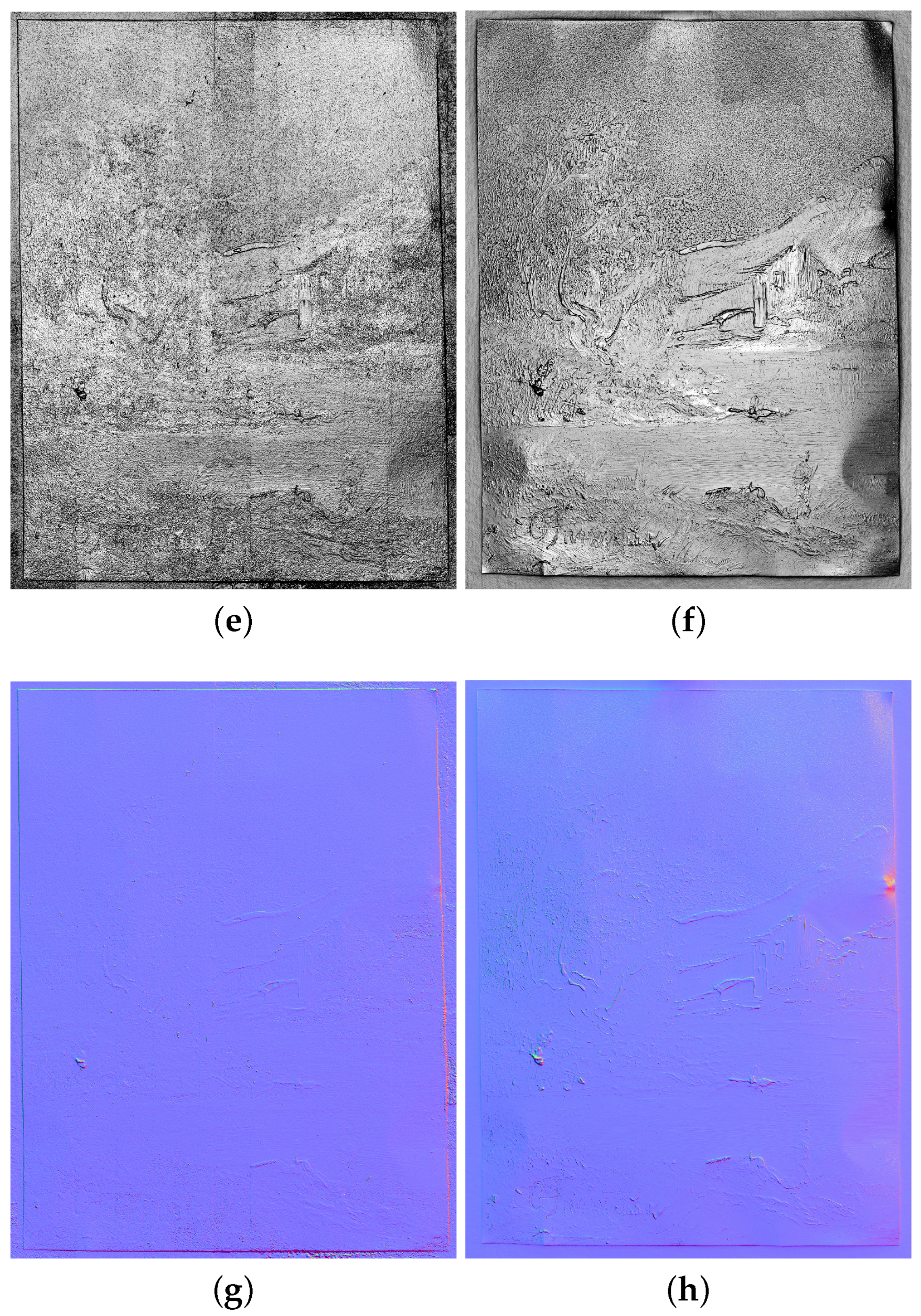

3.3.1. SfM vs. SLS

3.3.2. RTI vs. V-RTI

4. Results

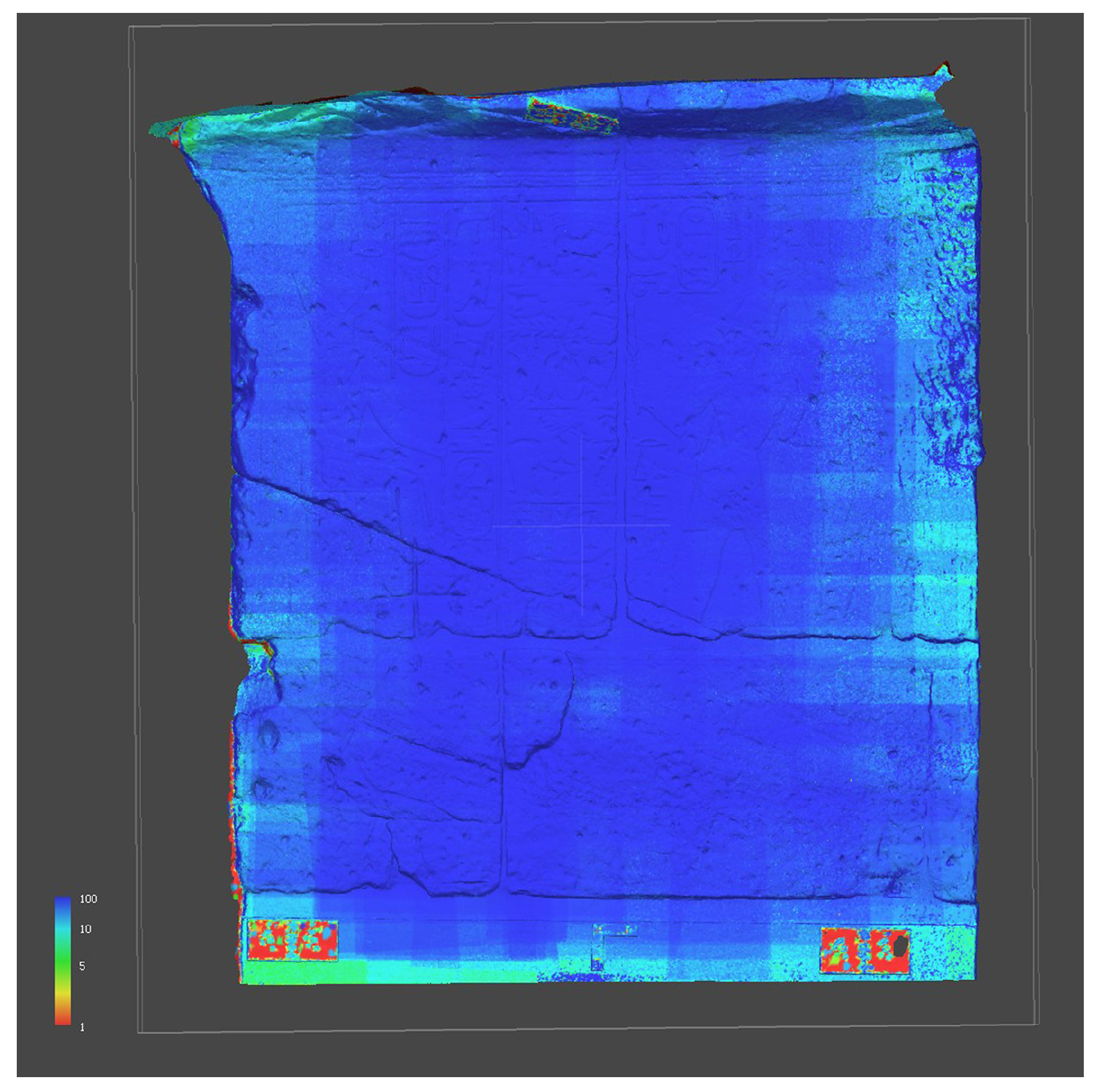

4.1. Photogrammetry and SfM vs. SLS Comparison

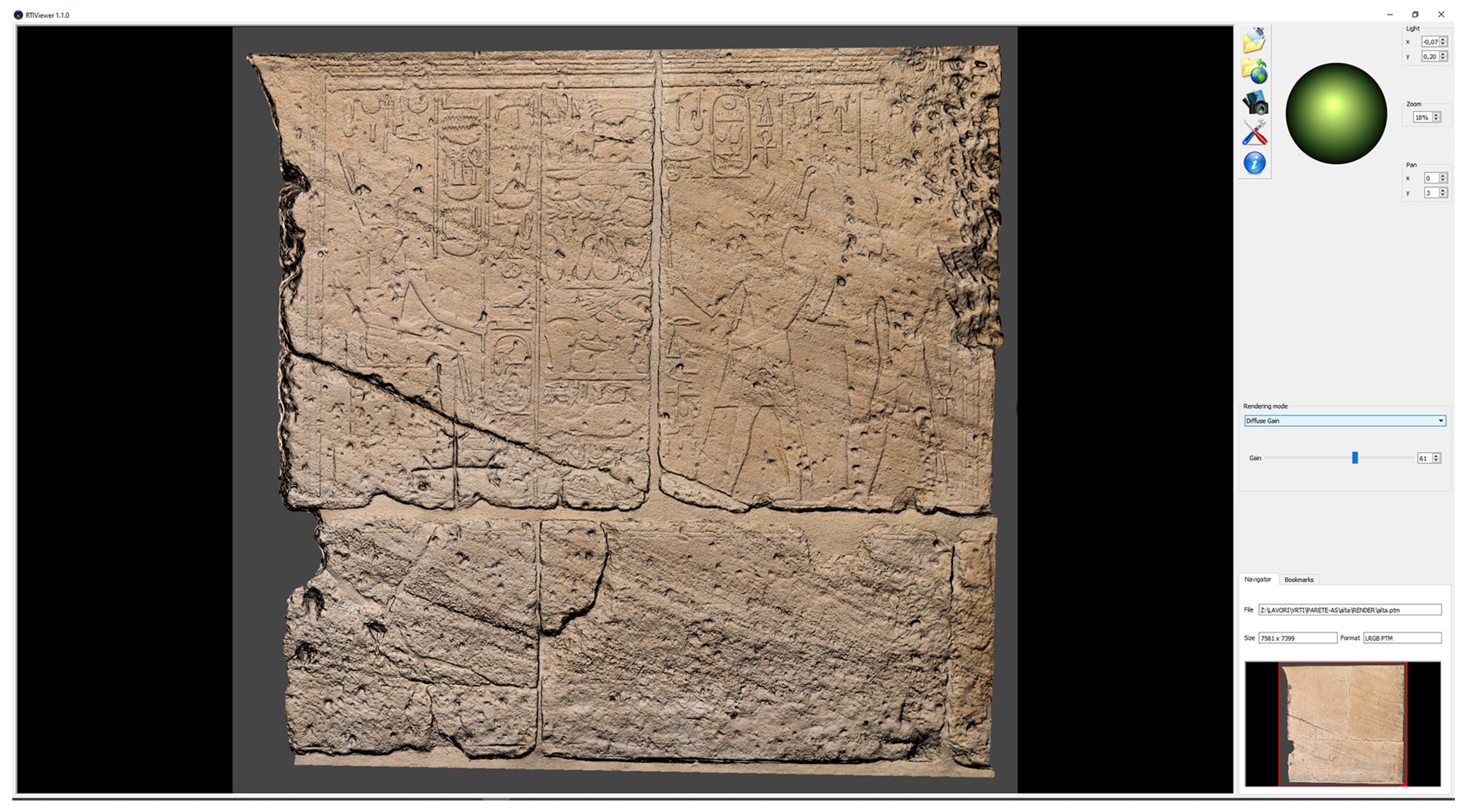

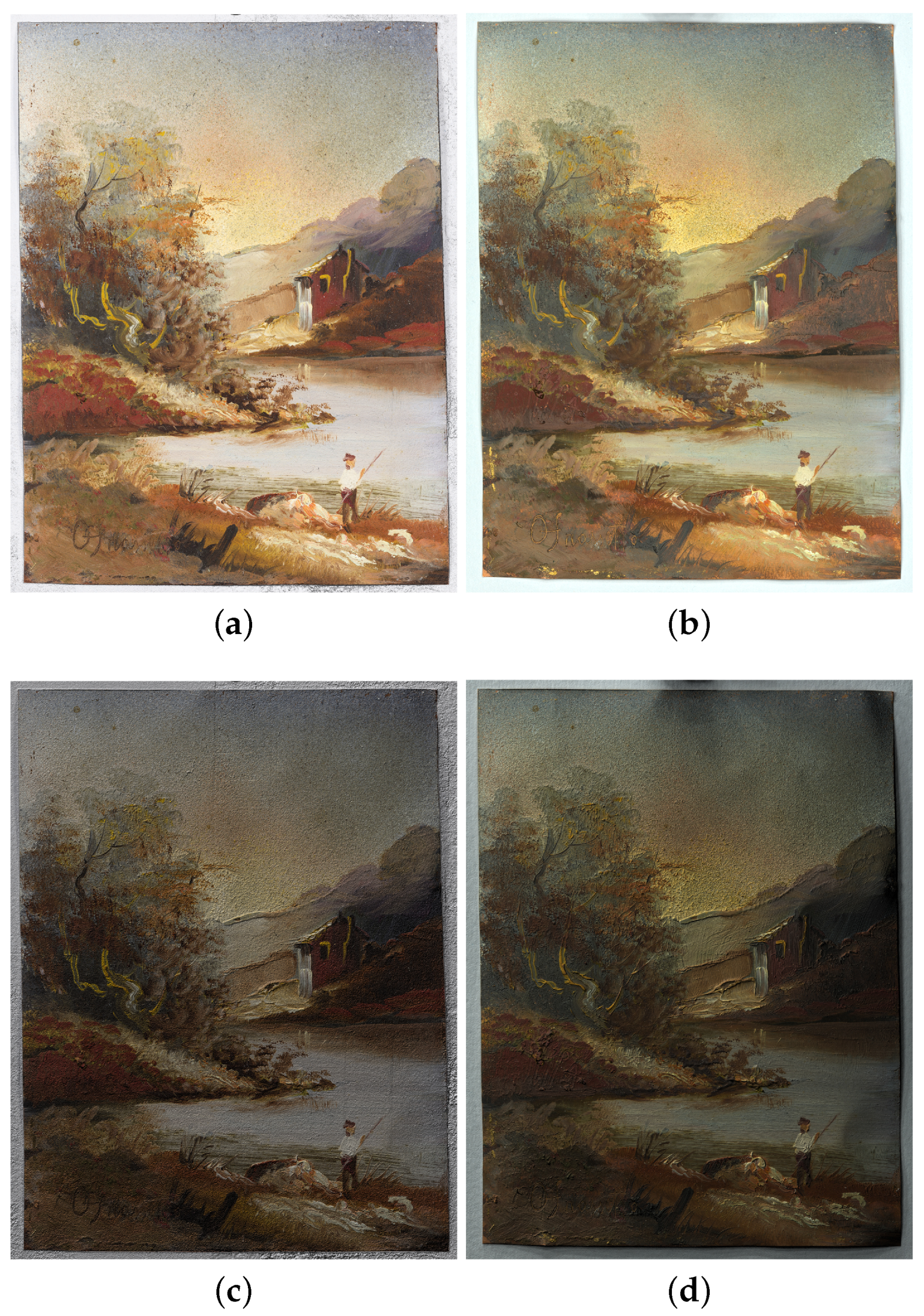

4.2. RTI vs. V-RTI

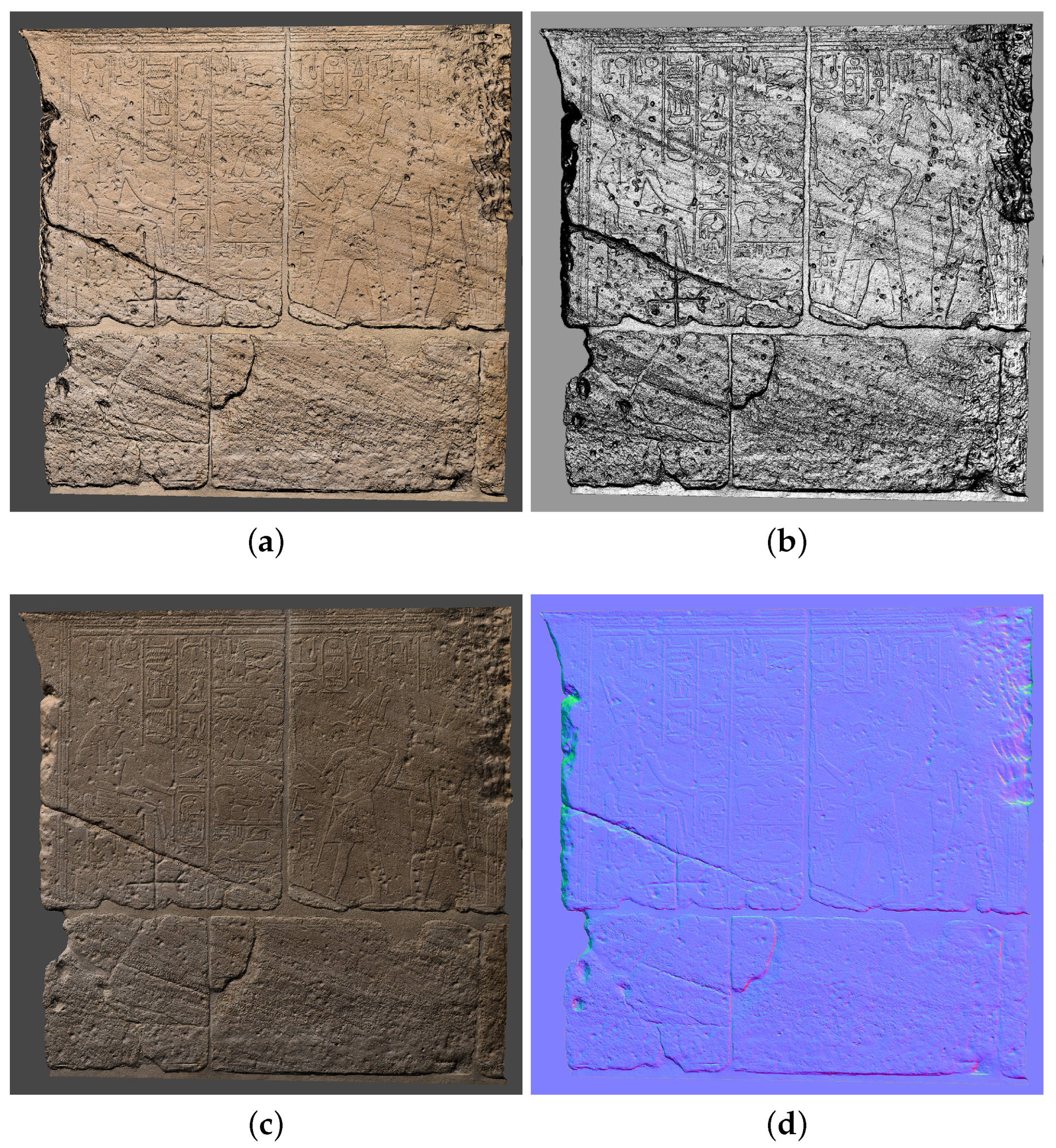

4.3. V-RTI

5. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RTI | Reflectance Transformation Imaging |
| 3D | Three-dimensional |
| SfM | Structure from Motion |
| V-RTI | Virtual Reflectance Transformation Imaging |
| 2D | Two-dimensional |
| SLAM | Simultaneous Localization and Mapping system |
| H-RTI | Highlights Reflectance Transformation Imaging |
| D-RTI | Dome Reflectance Transformation Imaging |
| CCR | Centro per la Conservazione ed il Restauro dei Beni Culturali “La Venaria Reale” |
| VR | Virtual Reality |
| GSD | Ground Sampling Distance |
| LED | Light Emitting Diodes |
| VCSEL | Vertical Cavity Surface Emitting Laser |
| ICP | Iterative Closest Point |
| PTM | Polynomial Texture Mapping |
| SLS | Structured Light Scanner |


| Photogrammetry Setup Specification | |
|---|---|
| Camera model | Canon EOS 6D |
| Sensor type | CMOS 20.2 Mpx |
| Sensor size | Full frame (35.8 × 23.9 mm) |
| Image size | 5472 × 3648 pixels |
| Focal length | Canon EF 50 mm f/1.8 STM |
| Strobe model | Yongnuo YN685II, guide number 60 |
| Polarizer, on strobe | Linear polarizing filter sheet (380–700 nm) |
| Polarizer, on camera | Hoya HD circular polarizing filter (380–700 nm) |

| Artec Leo SLS | |
|---|---|
| 3D model resolution | up to 0.2 mm |
| Texture resolution | 2.3 Mpx, 24 bpp |
| Data acquisition | 35 mln points/s |

| 3D Model of the Ellesiya Bas-Relief | |
|---|---|
| Number of images | 179 |
| Ground resolution | 0.179 mm/pix |
| RMS reprojection error | 0.22 pix |
| Error on scale bars | 4.81 × |
| Tie points | 48 k |
| Dense cloud | 46 mln |
| Mesh | 41 mln |
| Texture | 8192 × 8192 pix |
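
The ground resolution reported for the bas-relief model can be related to the camera specification through the usual pinhole relation GSD = distance × pixel pitch / focal length. The sketch below is illustrative only: it takes the sensor width (35.8 mm), image width (5472 pixels), and focal length (50 mm) from the setup table, and the 0.179 mm/pix ground resolution from the model table. The function names are hypothetical, and the working distance it returns is an inference under the assumption of a fronto-parallel, average-distance acquisition; it is not a value stated in the source.

```python
# Illustrative pinhole-camera relation between ground sampling distance (GSD),
# working distance, focal length, and pixel pitch. All function names are
# hypothetical; the input values come from the tables in this article.

def pixel_pitch_mm(sensor_width_mm: float, image_width_px: int) -> float:
    """Physical size of one pixel on the sensor, in millimetres."""
    return sensor_width_mm / image_width_px


def gsd_mm_per_px(distance_mm: float, focal_length_mm: float, pitch_mm: float) -> float:
    """GSD on a surface parallel to the sensor at the given distance."""
    return distance_mm * pitch_mm / focal_length_mm


def distance_for_gsd_mm(gsd: float, focal_length_mm: float, pitch_mm: float) -> float:
    """Working distance that would produce the given GSD (inverse of the above)."""
    return gsd * focal_length_mm / pitch_mm


# Values from the photogrammetry setup and 3D model tables.
SENSOR_WIDTH_MM = 35.8
IMAGE_WIDTH_PX = 5472
FOCAL_LENGTH_MM = 50.0
REPORTED_GSD_MM = 0.179

pitch = pixel_pitch_mm(SENSOR_WIDTH_MM, IMAGE_WIDTH_PX)
# Assumption: the reported ground resolution is treated as a single
# representative GSD; the resulting distance is only an estimate.
implied_distance = distance_for_gsd_mm(REPORTED_GSD_MM, FOCAL_LENGTH_MM, pitch)

print(f"pixel pitch: {pitch * 1000:.2f} um")
print(f"implied mean working distance: {implied_distance / 1000:.2f} m")
```

Under these assumptions the pixel pitch is about 6.5 µm and the implied mean camera-to-surface distance is on the order of 1.4 m. Because photogrammetry software typically reports ground resolution averaged over all aligned images, the figure should be read as a rough consistency check rather than a measured acquisition distance.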

| Product | Resolution | Unit of Measure |
|---|---|---|
| Sparse Cloud | 69 k | points |
| Dense Cloud | 60 mln | points |
| Mesh | 45 mln | faces |
| Texture | 8192 × 8192 | pixels |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Di Iorio, F.; Es Sebar, L.; Croci, S.; Taverni, F.; Auenmüller, J.; Pozzi, F.; Grassini, S. The Use of Virtual Reflectance Transformation Imaging (V-RTI) in the Field of Cultural Heritage: Approaching the Materiality of an Ancient Egyptian Rock-Cut Chapel. Appl. Sci. 2024, 14, 4768. https://doi.org/10.3390/app14114768
