An Ergonomic Risk Assessment System Based on 3D Human Pose Estimation and Collaborative Robot

Abstract

1. Introduction and Literature Review

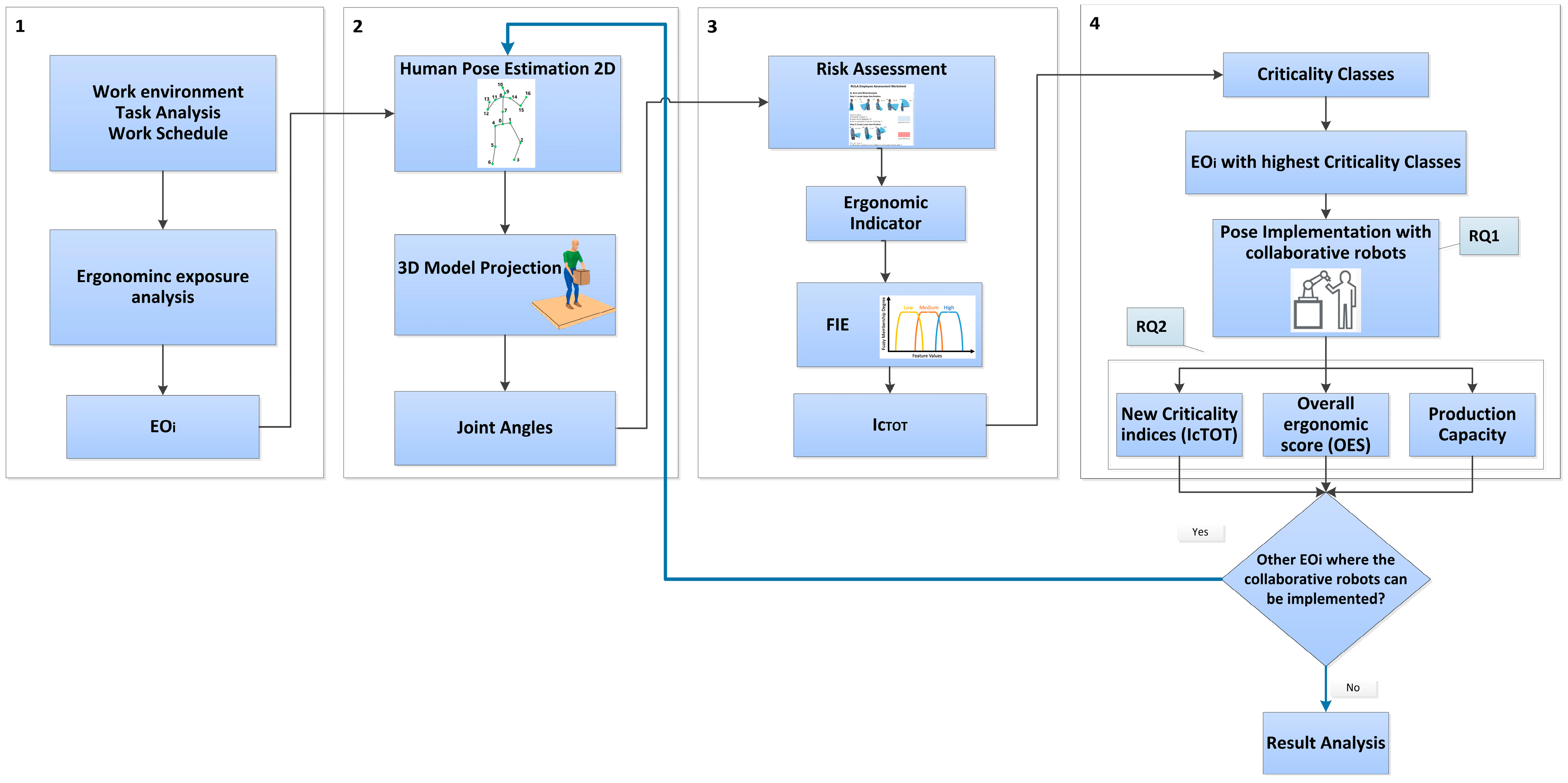

2. Methods

- Worker activities are divided into elementary operations (EOis) [36]. These EOis define the input of the ergonomic analysis.

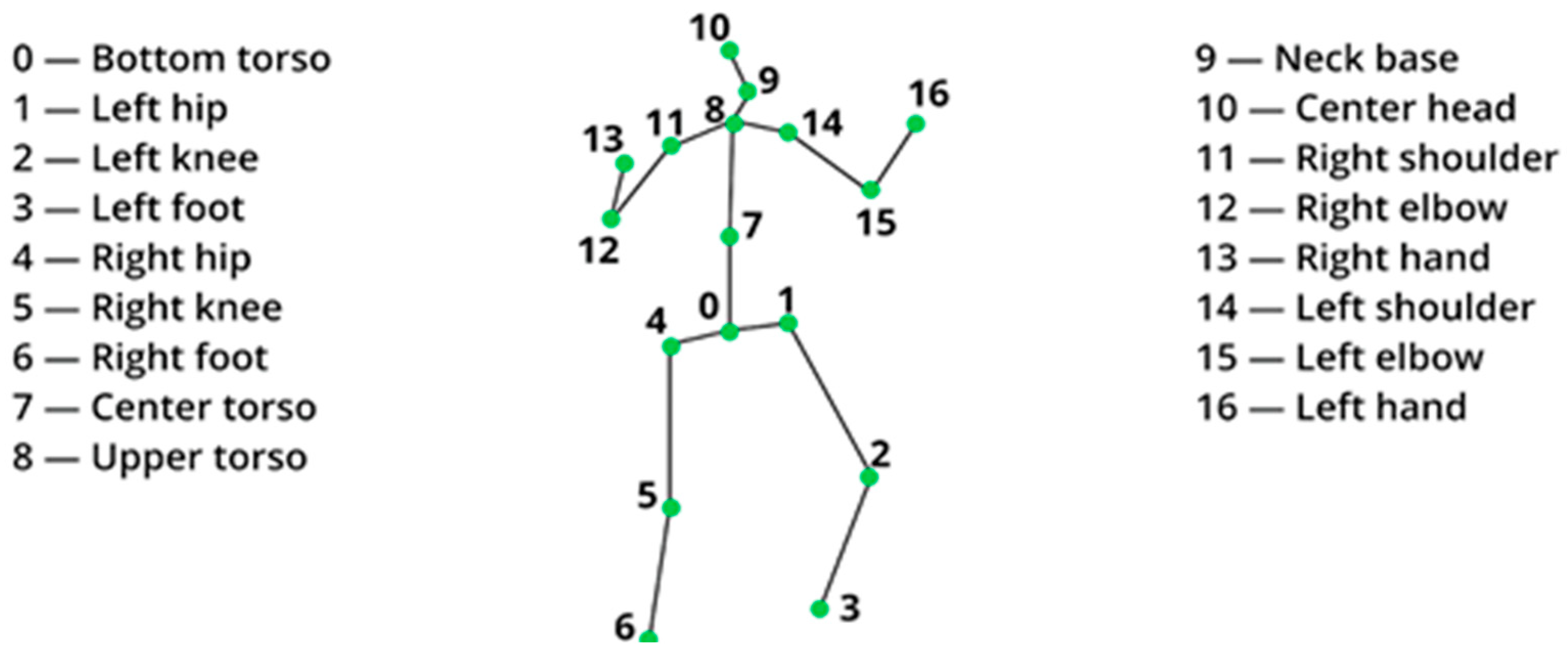

- A system based on artificial vision is introduced to estimate human poses in 3D. It outperforms some methods from the literature that estimate workplace posture scores from posture images by detecting the 2D coordinates of the body joints, including the wrist joints [1,37].

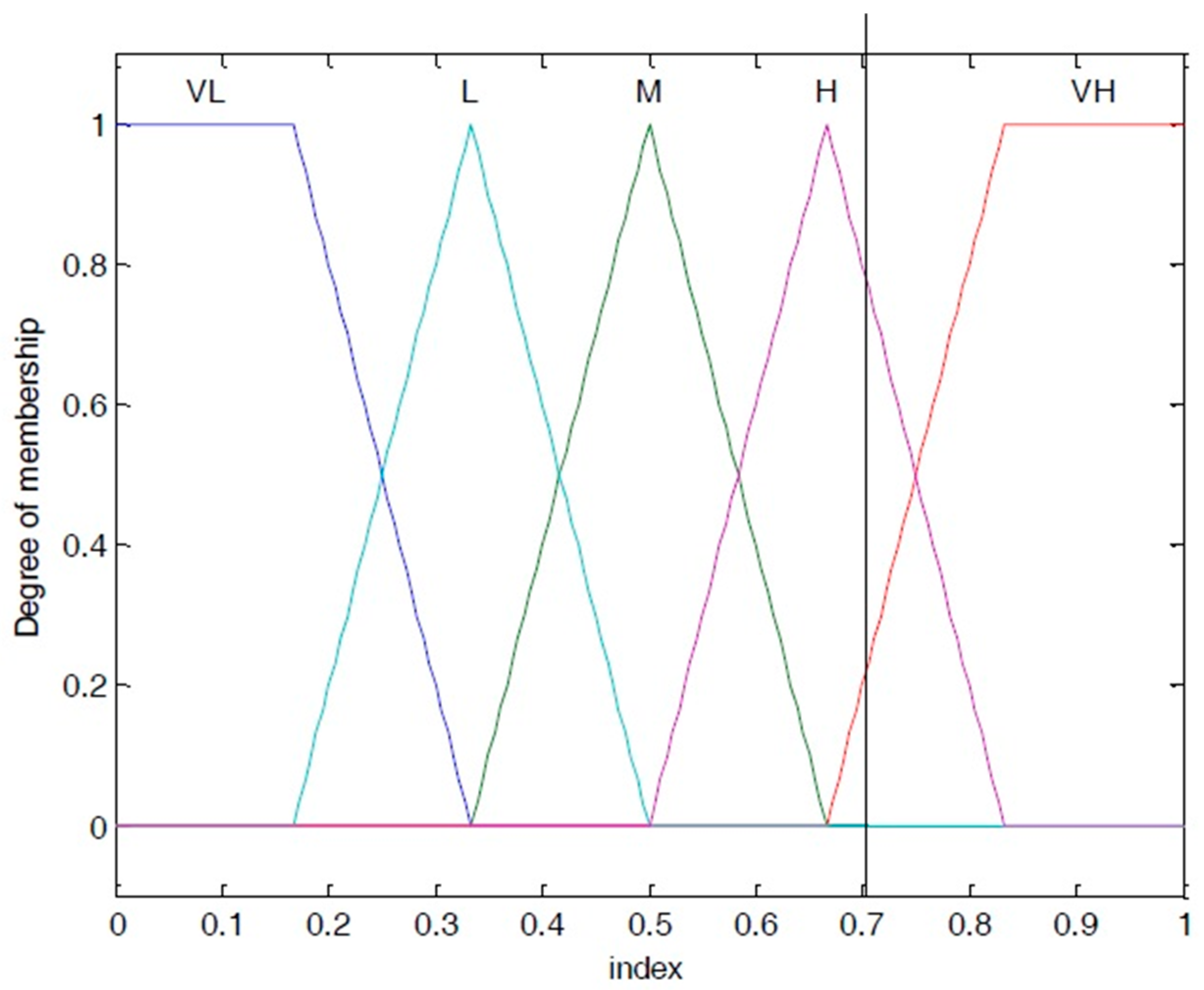

- The total criticality indices for the EOis are balanced through a fuzzy inference system. These indices summarize the workers’ ergonomic stress during manufacturing operations.

- A cobot is implemented for elementary operations with higher criticality classes.

- A knowledge base contains all information about a system and allows other entities to process input data and obtain outputs. This information can be divided into (i) a database and (ii) a rule base. The former contains the descriptions of all variables, including membership functions, while the latter contains the inference rules.

- Since the input data are almost always crisp and the fuzzy system works on fuzzy sets, a conversion is required to translate crisp numeric data into fuzzy data. This operation is called fuzzification and is performed using the membership functions of the variables being processed. To fuzzify an input value, a membership degree is computed for each linguistic term of the variable.

- The phase in which the returned fuzzy values are converted into usable numbers is called defuzzification. This phase starts from the fuzzy set obtained through inference. This set is often irregularly shaped because it combines the results of several rules, and a single crisp value must be found that best represents it [52]. The resulting values are the final output of the system and are used as control actions or decision parameters.

- Rule #1: if (neck is VL) and (right_elbow is VL) and (right_Shoulder is VH) and (Spine is VL) and (Repetition of the same movements is VL), then (IcTOT is L)

- Rule #2: if (neck is VH) and (right_elbow is VH) and (right_Shoulder is H) and (Spine is VH) and (Repetition of the same movements is VH), then (IcTOT is VH)

- Ic neck = 1;

- Ic right_elbow = 1;

- Ic spine = 1;

- Ic Repetition of the same movements = 1;

- Ic right_Shoulder = 0.702.

- Loading the .fis fuzzy inference file (which contains all the system settings saved through the Fuzzy Logic Toolbox) into the Matlab R2023b workspace;

- Reading and normalizing the input array from the workspace (collecting the ergonomic parameters measured for each elementary operation, e.g., neck bending angle and shoulder angle);

- Computing IcTOT through the fuzzy inference engine for each analyzed variable.
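
The fuzzification, rule evaluation, and defuzzification phases described above can be sketched end to end in Python. This is a minimal Mamdani-style sketch, not the authors' Matlab model: the five evenly spaced triangular membership functions, the use of min for "and", max aggregation, centroid defuzzification, and all function names are assumptions made for illustration. Only the two rules and the five input indices come from the text; `repetition` abbreviates "Repetition of the same movements".

```python
# Linguistic terms used in the rules; peaks and width are ASSUMED
# (evenly spaced triangles on [0, 1]), not taken from the source.
TERMS = ("VL", "L", "M", "H", "VH")
PEAKS = dict(zip(TERMS, (0.0, 0.25, 0.5, 0.75, 1.0)))
WIDTH = 0.25  # assumed half-base of every triangle


def membership(term, x):
    """Fuzzification: triangular membership degree of x in a term."""
    return max(0.0, 1.0 - abs(x - PEAKS[term]) / WIDTH)


# Rule #1 and Rule #2 from the text: (antecedent, IcTOT consequent).
RULES = [
    ({"neck": "VL", "right_elbow": "VL", "right_shoulder": "VH",
      "spine": "VL", "repetition": "VL"}, "L"),
    ({"neck": "VH", "right_elbow": "VH", "right_shoulder": "H",
      "spine": "VH", "repetition": "VH"}, "VH"),
]


def firing_strength(antecedent, inputs):
    """Combine the antecedent clauses with min (assumed 'and' operator)."""
    return min(membership(term, inputs[var])
               for var, term in antecedent.items())


def infer_ic_tot(inputs, steps=1000):
    """Clip each consequent, aggregate with max, defuzzify by centroid."""
    xs = [i / steps for i in range(steps + 1)]
    fired = [(firing_strength(ante, inputs), out) for ante, out in RULES]
    aggregated = [max(min(s, membership(out, x)) for s, out in fired)
                  for x in xs]
    area = sum(aggregated)
    if area == 0.0:
        return None  # no rule fired for these inputs
    return sum(x * m for x, m in zip(xs, aggregated)) / area


# Input indices listed in the text.
inputs = {"neck": 1.0, "right_elbow": 1.0, "spine": 1.0,
          "repetition": 1.0, "right_shoulder": 0.702}
ic_tot = infer_ic_tot(inputs)
```

With only two of the system's rules encoded, the returned value illustrates the mechanics and is not comparable to the IcTOT values reported for the case study.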

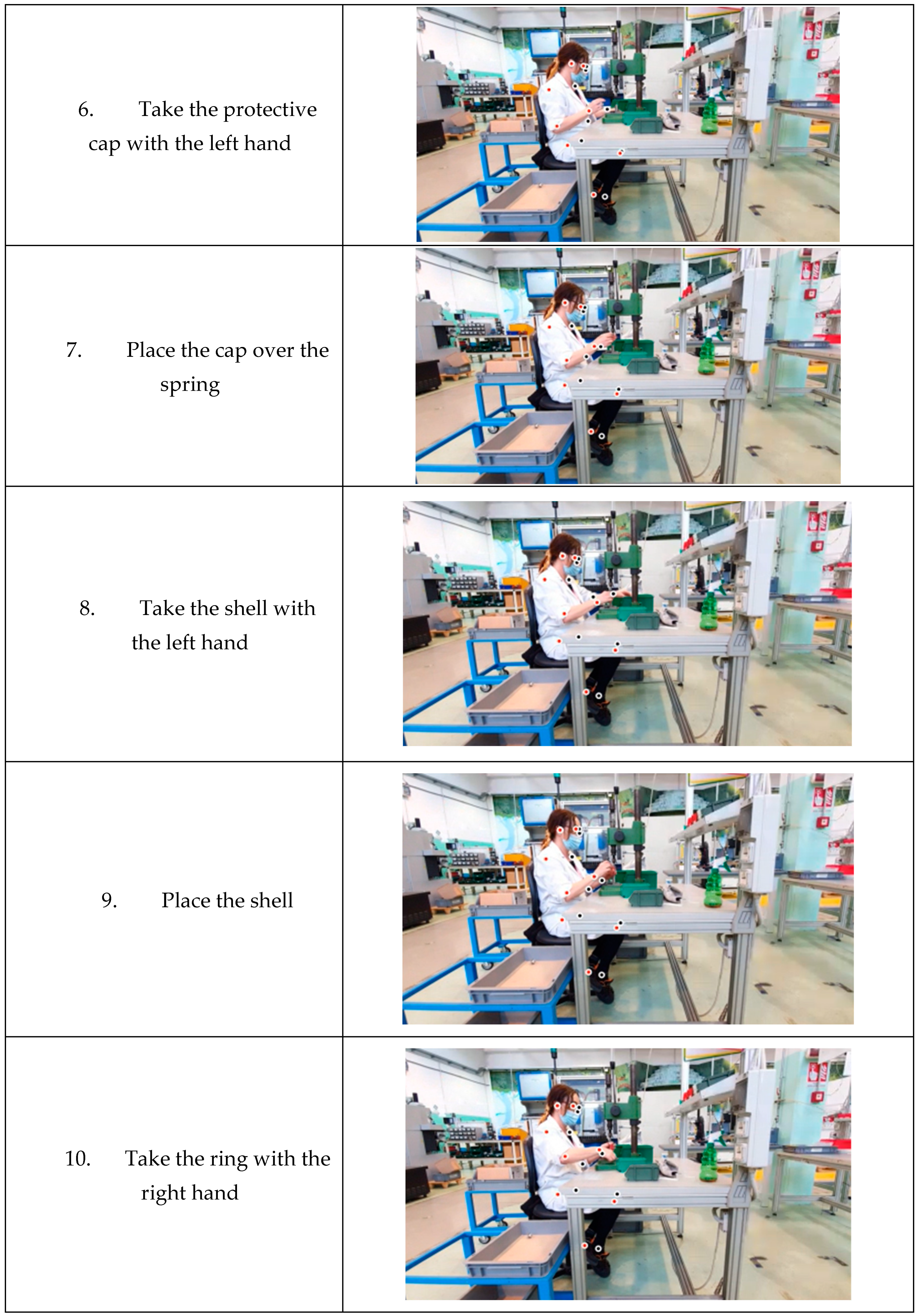

3. Industrial Application

- Trunk bending angle;

- Neck bending/rotation angle;

- Left or right elbow angle;

- Spine;

- Number of repetitions of the same movements.

- Right elbow = 0.571

- Right shoulder = 0.761

- Neck bending = 0.655

- Spine = 0.137

- Repetition of the same movements = 0.630

4. Results Analysis

- PES is the sum of each score obtained for a single elementary operation;

- NUM_DOM is the number of selected ergonomic domains;

- NUM_ELEM_OPS is the number of the EOis.

5. Discussion

- The need to modify the layout of the workstation and the entire line;

- The need to adopt specific semi-finished containers for handling by the cobot;

- The costs of purchasing and programming the cobot and training the operator to interact with it.

6. Conclusions

- Testing our methodology on different personal protective equipment worn by operators, or considering different environmental conditions, to evaluate whether they can influence the obtained results.

- The processing of video sequences in real-time systems with an automatic alerting system for identifying the most critical operations.

- The evolution of 3D pose estimations for multiple people in the same area.

- Comparing ergonomic assessments between workers and investigating the impact of cobot implementation on different workstations while changing the operators.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Massiris Fernández, M.; Fernández, J.Á.; Bajo, J.M.; Delrieux, C.A. Ergonomic risk assessment based on computer vision and machine learning. Comput. Ind. Eng. 2020, 149, 106816.

- Middlesworth, M. How to Prevent Sprains and Strains in the Workplace. 2015. Available online: https://ergo-plus.com/prevent-sprains-strains-workplace (accessed on 31 January 2024).

- Van Der Beek, A.J.; Dennerlein, J.T.; Huysmans, M.A.; Mathiassen, S.E.; Burdorf, A.; Van Mechelen, W.; Coenen, P. A research framework for the development and implementation of interventions preventing work-related musculoskeletal disorders. Scand. J. Work Environ. Health 2017, 526–539.

- Ng, A.; Hayes, M.J.; Polster, A. Musculoskeletal disorders and working posture among dental and oral health students. Healthcare 2016, 4, 13.

- Luttmann, A.; Jager, M.; Griefahn, B.; Caffier, G.; Liebers, F. Preventing Musculoskeletal Disorders in the Workplace; World Health Organization: Geneva, Switzerland, 2003.

- Roman-Liu, D. Comparison of concepts in easy-to-use methods for MSD risk assessment. Appl. Ergon. 2014, 45, 420–427.

- Ha, C.; Roquelaure, Y.; Leclerc, A.; Touranchet, A.; Goldberg, M.; Imbernon, E. The French musculoskeletal disorders surveillance program: Pays de la Loire network. Occup. Environ. Med. 2009, 66, 471–479.

- Jeong, S.O.; Kook, J. CREBAS: Computer-Based REBA Evaluation System for Wood Manufacturers Using MediaPipe. Appl. Sci. 2023, 13, 938.

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2d pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

- Kee, D. An empirical comparison of OWAS, RULA and REBA based on self-reported discomfort. Int. J. Occup. Saf. Ergon. 2020, 26, 285–295.

- Kong, Y.K.; Lee, S.Y.; Lee, K.S.; Kim, D.M. Comparisons of ergonomic evaluation tools (ALLA, RULA, REBA and OWAS) for farm work. Int. J. Occup. Saf. Ergon. 2018, 24, 218–223.

- Li, X.; Han, S.; Gül, M.; Al-Hussein, M.; El-Rich, M. 3D visualization-based ergonomic risk assessment and work modification framework and its validation for a lifting task. J. Constr. Eng. Manag. 2018, 144, 04017093.

- Andrews, D.M.; Fiedler, K.M.; Weir, P.L.; Callaghan, J.P. The effect of posture category salience on decision times and errors when using observation-based posture assessment methods. Ergonomics 2012, 55, 1548–1558.

- Sasikumar, V. A model for predicting the risk of musculoskeletal disorders among computer professionals. Int. J. Occup. Saf. Ergon. 2018, 26, 384–396.

- Plantard, P.; Shum, H.P.; Le Pierres, A.S.; Multon, F. Validation of an ergonomic assessment method using Kinect data in real workplace conditions. Appl. Ergon. 2017, 65, 562–569.

- Nath, N.D.; Akhavian, R.; Behzadan, A.H. Ergonomic analysis of construction worker’s body postures using wearable mobile sensors. Appl. Ergon. 2017, 62, 107–117.

- Jayaram, U.; Jayaram, S.; Shaikh, I.; Kim, Y.; Palmer, C. Introducing quantitative analysis methods into virtual environments for real-time and continuous ergonomic evaluations. Comput. Ind. 2006, 57, 283–296.

- Zhang, H.; Yan, X.; Li, H. Ergonomic posture recognition using 3D view-invariant features from single ordinary camera. Autom. Constr. 2018, 94, 1–10.

- Papoutsakis, K.; Papadopoulos, G.; Maniadakis, M.; Papadopoulos, T.; Lourakis, M.; Pateraki, M.; Varlamis, I. Detection of physical strain and fatigue in industrial environments using visual and non-visual low-cost sensors. Technologies 2022, 10, 42.

- Yu, Y.; Yang, X.; Li, H.; Luo, X.; Guo, H.; Fang, Q. Joint-level vision-based ergonomic assessment tool for construction workers. J. Constr. Eng. Manag. 2019, 145, 04019025.

- Vignais, N.; Bernard, F.; Touvenot, G.; Sagot, J.C. Physical risk factors identification based on body sensor network combined to videotaping. Appl. Ergon. 2017, 65, 410–417.

- Yan, X.; Li, H.; Wang, C.; Seo, J.; Zhang, H.; Wang, H. Development of ergonomic posture recognition technique based on 2D ordinary camera for construction hazard prevention through view-invariant features in 2D skeleton motion. Adv. Eng. Inform. 2017, 34, 152–163.

- Battini, D.; Persona, A.; Sgarbossa, F. Innovative real-time system to integrate ergonomic evaluations into warehouse design and management. Comput. Ind. Eng. 2014, 77, 1–10.

- Xu, X.; Robertson, M.; Chen, K.B.; Lin, J.H.; McGorry, R.W. Using the Microsoft Kinect™ to assess 3-D shoulder kinematics during computer use. Appl. Ergon. 2017, 65, 418–423.

- Fang, W.; Love, P.E.; Luo, H.; Ding, L. Computer vision for behaviour-based safety in construction: A review and future directions. Adv. Eng. Inform. 2020, 43, 100980.

- Liu, M.; Han, S.; Lee, S. Tracking-based 3D human skeleton extraction from stereo video camera toward an on-site safety and ergonomic analysis. Constr. Innov. 2016, 16, 348–367.

- Seo, J.; Alwasel, A.; Lee, S.; Abdel-Rahman, E.M.; Haas, C. A comparative study of in-field motion capture approaches for body kinematics measurement in construction. Robotica 2019, 37, 928–946.

- Li, L.; Martin, T.; Xu, X. A novel vision-based real-time method for evaluating postural risk factors associated with musculoskeletal disorders. Appl. Ergon. 2020, 87, 103138.

- Peppoloni, L.; Filippeschi, A.; Ruffaldi, E.; Avizzano, C.A. A novel wearable system for the online assessment of risk for biomechanical load in repetitive efforts. Int. J. Ind. Ergon. 2016, 52, 1–11.

- Clark, R.A.; Pua, Y.H.; Fortin, K.; Ritchie, C.; Webster, K.E.; Denehy, L.; Bryant, A.L. Validity of the Microsoft Kinect for assessment of postural control. Gait Posture 2012, 36, 372–377.

- Trumble, M.; Gilbert, A.; Hilton, A.; Collomosse, J. Deep autoencoder for combined human pose estimation and body model upscaling. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 784–800.

- Von Marcard, T.; Henschel, R.; Black, M.J.; Rosenhahn, B.; Pons-Moll, G. Recovering accurate 3d human pose in the wild using imus and a moving camera. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 601–617.

- Pavllo, D.; Feichtenhofer, C.; Grangier, D.; Auli, M. 3d human pose estimation in video with temporal convolutions and semi-supervised training. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7753–7762.

- El Makrini, I.; Merckaert, K.; De Winter, J.; Lefeber, D.; Vanderborght, B. Task allocation for improved ergonomics in Human-Robot Collaborative Assembly. Interact. Stud. 2019, 20, 102–133.

- Parra, P.S.; Calleros, O.L.; Ramirez-Serrano, A. Human-robot collaboration systems: Components and applications. In Proceedings of the International Conference of Control, Dynamic Systems, and Robotics, Virtual, 9–11 November 2020; Volume 150, pp. 1–9.

- Battini, D.; Faccio, M.; Persona, A.; Sgarbossa, F. New methodological framework to improve productivity and ergonomics in assembly system design. Int. J. Ind. Ergon. 2011, 41, 30–42.

- Nayak, G.K.; Kim, E. Development of a fully automated RULA assessment system based on computer vision. Int. J. Ind. Ergon. 2021, 86, 103218.

- Shuval, K.; Donchin, M. Prevalence of upper extremity musculoskeletal symptoms and ergonomic risk factors at a Hi-Tech company in Israel. Int. J. Ind. Ergon. 2005, 35, 569–581.

- Das, B.; Shikdar, A.A.; Winters, T. Workstation redesign for a repetitive drill press operation: A combined work design and ergonomics approach. Hum. Factors Ergon. Manuf. Serv. Ind. 2007, 17, 395–410.

- Savino, M.M.; Battini, D.; Riccio, C. Visual management and artificial intelligence integrated in a new fuzzy-based full body postural assessment. Comput. Ind. Eng. 2017, 111, 596–608.

- Klir, J.; Yuan, B. Fuzzy Sets and Fuzzy Logic: Theory and Applications; Prentice Hall: Upper Saddle River, NJ, USA, 1995.

- Li, H.X.; Al-Hussein, M.; Lei, Z.; Ajweh, Z. Risk identification and assessment of modular construction utilizing fuzzy analytic hierarchy process (AHP) and simulation. Can. J. Civ. Eng. 2013, 40, 1184–1195.

- Markowski, A.S.; Mannan, M.S.; Bigoszewska, A. Fuzzy logic for process safety analysis. J. Loss Prev. Process Ind. 2009, 22, 695–702.

- Nasirzadeh, F.; Afshar, A.; Khanzadi, M.; Howick, S. Integrating system dynamics and fuzzy logic modelling for construction risk management. Constr. Manag. Econ. 2008, 26, 1197–1212.

- Zio, E.; Baraldi, P.; Librizzi, M. A fuzzy set-based approach for modeling dependence among human errors. Fuzzy Sets Syst. 2008, 160, 1947–1964.

- Marseguerra, M.; Zio, E.; Librizzi, M. Human reliability analysis by fuzzy “CREAM”. Risk Anal. 2007, 27, 137–154.

- Kim, B.J.; Bishu, R.R. Uncertainty of human error and fuzzy approach to human reliability analysis. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 2006, 14, 111–129.

- Konstandinidou, M.; Nivolianitou, Z.; Kiranoudis, C.; Markatos, N. A fuzzy modeling application of CREAM methodology for human reliability analysis. Reliab. Eng. Syst. Saf. 2006, 91, 706–716.

- Li, P.C.; Chen, G.H.; Dai, L.C.; Li, Z. Fuzzy logic-based approach for identifying the risk importance of human error. Saf. Sci. 2010, 48, 902–913.

- Golabchi, A.; Han, S.; Fayek, A.R. A fuzzy logic approach to posture-based ergonomic analysis for field observation and assessment of construction manual operations. Can. J. Civ. Eng. 2016, 43, 294–303.

- Contreras-Valenzuela, M.R.; Seuret-Jiménez, D.; Hdz-Jasso, A.M.; León Hernández, V.A.; Abundes-Recilla, A.N.; Trutié-Carrero, E. Design of a Fuzzy Logic Evaluation to Determine the Ergonomic Risk Level of Manual Material Handling Tasks. Int. J. Environ. Res. Public Health 2022, 19, 6511.

- Campanella, P. Neuro-Fuzzy Learning in Context Educative. In Proceedings of the 2021 19th International Conference on Emerging eLearning Technologies and Applications (ICETA), Košice, Slovakia, 11–12 November 2021; pp. 58–69.

- Bukowski, L.; Feliks, J. Application of fuzzy sets in evaluation of failure likelihood. In Proceedings of the 18th International Conference on Systems Engineering (ICSEng’05), Las Vegas, NV, USA, 16–18 August 2005; pp. 170–175.

- Villani, V.; Sabattini, L.; Czerniak, J.N.; Mertens, A.; Vogel-Heuser, B.; Fantuzzi, C. Towards modern inclusive factories: A methodology for the development of smart adaptive human-machine interfaces. In Proceedings of the 2017 22nd IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Limassol, Cyprus, 12–15 September 2017; pp. 1–7.

- Cherubini, A.; Passama, R.; Crosnier, A.; Lasnier, A.; Fraisse, P. Collaborative manufacturing with physical human–robot interaction. Robot. Comput.-Integr. Manuf. 2016, 40, 1–13.

- Tsarouchi, P.; Makris, S.; Chryssolouris, G. Human–robot interaction review and challenges on task planning and programming. Int. J. Comput. Integr. Manuf. 2016, 29, 916–931.

- Li, L.; Xu, X. A deep learning-based RULA method for working posture assessment. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2019, 63, 1090–1094.

| Angle Name | Acronym | Points of the Skeleton Joints Involved |
|---|---|---|
| Left elbow | EL | <13, 12, 11 |
| Right elbow | ER | <14, 15, 16 |
| Left shoulder | SL | <12, 11, 08 |
| Right shoulder | SR | <15, 16, 08 |
| Left clavicle | CL | <11, 08, 07 |
| Right clavicle | RC | <14, 08, 07 |
| Left knee | KL | <04, 05, 06 |
| Right knee | KR | <01, 02, 03 |
| Neck twisting | NT | <10, 09, 08 |
| Neck bending left | NB | <09, 08, 11 |
| Neck bending right | NBR | <09, 08, 14 |
| Neck flexion | NF | <09, 08, 00 |
| Trunk twisting right | TT | <11, 00, 04 |
| Trunk twisting left | TTL | <14, 00, 04 |
| Trunk Bending | TB | <04, 00, 07 |
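
Each angle in the table above is defined by three skeleton joints. A minimal Python sketch of how such an angle could be computed from 3D joint coordinates follows. It assumes the angle is measured at the middle joint of each triplet, which the table does not state explicitly. The function names and the example coordinates are hypothetical; only the joint indices for EL and ER are taken from the table.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at vertex b, formed by the 3D points a-b-c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(p * q for p, q in zip(ba, bc))
    norm = math.sqrt(sum(p * p for p in ba)) * math.sqrt(sum(q * q for q in bc))
    if norm == 0.0:
        raise ValueError("two joints coincide; the angle is undefined")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


# Joint-index triplets copied from the table (left and right elbow only).
ANGLE_JOINTS = {"EL": (13, 12, 11), "ER": (14, 15, 16)}


def angle_from_skeleton(skeleton, acronym):
    """Look up the three joints for an acronym and return their angle."""
    first, vertex, last = ANGLE_JOINTS[acronym]
    return joint_angle(skeleton[first], skeleton[vertex], skeleton[last])


# Hypothetical coordinates in metres, chosen so the right elbow is bent at 90 degrees.
skeleton = {14: (0.0, 0.0, 0.0), 15: (0.0, -0.3, 0.0), 16: (0.25, -0.3, 0.0)}
right_elbow_deg = angle_from_skeleton(skeleton, "ER")
```

Using the dot product keeps the computation independent of the camera orientation, since only the relative positions of the three joints matter.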

| Domain Group | Ergonomic Indicator | Score Level According to RULA: Low | Score Level According to RULA: Medium | Score Level According to RULA: High |
|---|---|---|---|---|
| Upper Quadrant (UQ) | Trunk bending angle (degree) | (0°–15°) | (15°–30°) | (>30°) |
| | Left or right elbow angle (degree) | (0°–15°) | (15°–40°) | (>45°) |
| | Left or right shoulder (degree) | (20°–45°) | (45°–90°) | (>90°) |
| | Neck bending or rotation angle (degree) | (0°–10°) | (10°–20°) | (>20°) |
| | Forearm rotation angle (degree) | (0°–90°) | (>90°) | (>90° and crossed) |
| | Spine (degree) | (0°–20°) | (20°–60°) | (>60°) |
| | Wrist bending angle (degree). The value is calculated considering the ulnar or radial deviation (inward or outward rotation) according to the RULA and Health Safety Executive guidelines (2014) | (0°) The wrist is not subject to rotation. | (+15°; −15°) | (>15°) |
| Stereotypy, loads, typical actions (TA) | Arm position for material withdrawal | (Without extending an arm) | (Extending an arm) | (Two hands needed) |
| | Trunk rotation (degree) | (0°–45°) | (45°–90°) | (>90°) |
| Repetition of the same movements (RM) | This parameter refers to high repetition of the same movements | (From 25% to 50% of the cycle time) | (From 51% to 80% of the cycle time) | (>80%) |
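
The score bands in the table above can be applied to a measured angle with a simple lookup. The sketch below is illustrative only: it covers the four indicators whose bands are contiguous in the table, and it assumes that each upper bound is inclusive, which the table does not specify. The dictionary keys and the function name are hypothetical.

```python
# (upper bound of Low, upper bound of Medium) in degrees, copied from the table.
THRESHOLDS = {
    "trunk_bending": (15.0, 30.0),
    "neck_bending_or_rotation": (10.0, 20.0),
    "spine": (20.0, 60.0),
    "trunk_rotation": (45.0, 90.0),
}


def score_level(indicator, angle_deg):
    """Return 'Low', 'Medium' or 'High' for an angle in degrees."""
    if angle_deg < 0:
        raise ValueError("angle must be non-negative")
    low_max, medium_max = THRESHOLDS[indicator]
    if angle_deg <= low_max:  # assumed inclusive upper bound
        return "Low"
    if angle_deg <= medium_max:  # assumed inclusive upper bound
        return "Medium"
    return "High"


# A neck bending angle of 26.2 degrees exceeds the 20 degree bound.
level = score_level("neck_bending_or_rotation", 26.2)
```

The elbow and shoulder rows are left out because their bands leave gaps (for example between 40° and 45° for the elbow), so a lookup for them would need a decision the table does not provide.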

| Elementary Operation | Right Elbow [degrees] | Left Elbow [degrees] | Right Shoulder [degrees] | Left Shoulder [degrees] | Neck Bending [degrees] | Spine [degrees] | Repetition of the Same Movements [time] |
|---|---|---|---|---|---|---|---|
| EO1 | 102.695 | 97.214 | 68.531 | 69.910 | 26.200 | 98.224 | 239.786 |
| EO2 | 107.364 | 90.895 | 68.257 | 69.259 | 29.533 | 97.857 | 235.964 |
| EO3 | 112.442 | 106.222 | 68.574 | 69.125 | 30.267 | 96.867 | 254.074 |
| EO4 | 110.278 | 102.848 | 64.148 | 58.482 | 27.388 | 93.154 | 297.116 |
| EO5 | 111.060 | 97.371 | 63.036 | 60.891 | 24.281 | 92.560 | 198.744 |
| EO6 | 111.554 | 90.522 | 63.151 | 59.894 | 25.081 | 94.731 | 298.116 |
| EO7 | 104.628 | 96.774 | 63.587 | 61.163 | 27.969 | 95.033 | 245.700 |
| EO8 | 104.122 | 99.810 | 63.661 | 61.779 | 27.365 | 96.354 | 267.540 |
| EO9 | 109.308 | 113.187 | 64.225 | 60.825 | 22.259 | 92.860 | 236.054 |
| EO10 | 84.007 | 95.473 | 67.993 | 59.761 | 15.216 | 95.207 | 253.508 |
| EO11 | 90.000 | 100.099 | 69.199 | 14.468 | 14.468 | 94.822 | 305.760 |
| EO12 | 125.132 | 92.035 | 83.918 | 64.064 | 15.285 | 99.599 | 259.820 |
| EO13 | 108.646 | 92.460 | 68.662 | 61.729 | 13.165 | 96.608 | 278.650 |
| EO14 | 38.817 | 77.647 | 63.000 | 69.242 | 21.789 | 96.694 | 229.170 |

| Ergonomic Parameter | Right Elbow | Right Shoulder | Neck Bending | Spine | Repetition of the Same Movements |
|---|---|---|---|---|---|
| Range According to RULA | (0°–45°) | (20°–90°) | (0°–20°) | (0°–60°) | (From 25% to 80%) |
| Elementary Operation | Normalized to the Maximum Value | Normalized to the Maximum Value | Normalized to the Maximum Value | Normalized to the Maximum Value | Normalized to the Maximum Value |
| EO1 | 0.571 | 0.761 | 0.655 | 0.137 | 0.630 |
| EO2 | 0.596 | 0.758 | 0.738 | 0.131 | 0.620 |
| EO3 | 0.625 | 0.762 | 0.757 | 0.114 | 0.670 |
| EO4 | 0.613 | 0.713 | 0.685 | 0.052 | 0.780 |
| EO5 | 0.617 | 0.700 | 0.607 | 0.0427 | 0.520 |
| EO6 | 0.620 | 0.702 | 0.627 | 0.0789 | 0.580 |
| EO7 | 0.581 | 0.707 | 0.699 | 0.0839 | 0.780 |
| EO8 | 0.578 | 0.707 | 0.684 | 0.1059 | 0.660 |
| EO9 | 0.607 | 0.714 | 0.556 | 0.0477 | 0.700 |
| EO10 | 0.467 | 0.755 | 0.380 | 0.0868 | 0.618 |
| EO11 | 0.500 | 0.769 | 0.362 | 0.0804 | 0.664 |
| EO12 | 0.695 | 0.932 | 0.382 | 0.1600 | 0.800 |
| EO13 | 0.604 | 0.763 | 0.329 | 0.1101 | 0.680 |
| EO14 | 0.882 | 0.700 | 0.595 | 0.4449 | 0.750 |
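
As a small worked example of the normalization step, the sketch below divides a raw right-shoulder angle by a maximum value. The maximum of 90° is an assumption: it is the upper bound of the RULA range listed for the right shoulder, and it happens to reproduce the tabulated right-shoulder values, but the text does not state the divisor used for any parameter. The function and variable names are hypothetical.

```python
RIGHT_SHOULDER_MAX_DEG = 90.0  # ASSUMED divisor (upper bound of the listed range)


def normalize(value, maximum):
    """Scale a raw measurement by dividing it by the chosen maximum."""
    if maximum <= 0:
        raise ValueError("maximum must be positive")
    return value / maximum


# Raw right-shoulder angles in degrees for three elementary operations.
raw_right_shoulder = {"EO1": 68.531, "EO12": 83.918, "EO14": 63.000}

normalized = {
    eo: round(normalize(angle, RIGHT_SHOULDER_MAX_DEG), 3)
    for eo, angle in raw_right_shoulder.items()
}
```

The same function applies to the other parameters once their maxima are known; those divisors are not given here, so they are left out rather than guessed.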

| Elementary Operation | IcTOT | Criticality Class |
|---|---|---|
| EO1 | 0.69 | Medium |
| EO2 | 0.69 | Medium |
| EO3 | 0.7 | Medium |
| EO4 | 0.65 | Medium |
| EO5 | 0.41 | Medium |
| EO6 | 0.44 | Medium |
| EO7 | 0.7 | Medium |
| EO8 | 0.63 | Medium |
| EO9 | 0.60 | Medium |
| EO10 | 0.33 | Low |
| EO11 | 0.39 | Medium |
| EO12 | 0.77 | High |
| EO13 | 0.44 | Medium |
| EO14 | 0.833 | High |

| Elementary Operation | Corrective Actions Proposed | Corrective Action |
|---|---|---|
| EO14 | Implementation of a collaborative robot |  |

| Elementary Operation | IcTOT | Criticality Class |
|---|---|---|
| EO1 | 0.69 | Medium |
| EO2 | 0.69 | Medium |
| EO3 | 0.7 | Medium |
| EO4 | 0.65 | Medium |
| EO5 | 0.41 | Medium |
| EO6 | 0.44 | Medium |
| EO7 | 0.7 | Medium |
| EO8 | 0.63 | Medium |
| EO9 | 0.60 | Medium |
| EO10 | 0.33 | Low |
| EO11 | 0.39 | Medium |
| EO12 | 0.77 | High |
| EO13 | 0.44 | Medium |
| EO14 | 0 | Low |

| Ergonomic Parameters | |||||

|---|---|---|---|---|---|

| Right Elbow | Right Shoulder | Neck Bending | Spine | Repetition of the Same Movements | |

| Range According to RULA | (0°–45°) | (20°–90°) | (0°–20°) | (0°–60°) | (From 25% to 80%) |

| Elementary Operation | Normalized to the Maximum Value | Normalized to the Maximum Value | Normalized to the Maximum Value | Normalized to the Maximum Value | Normalized to the Maximum Value |

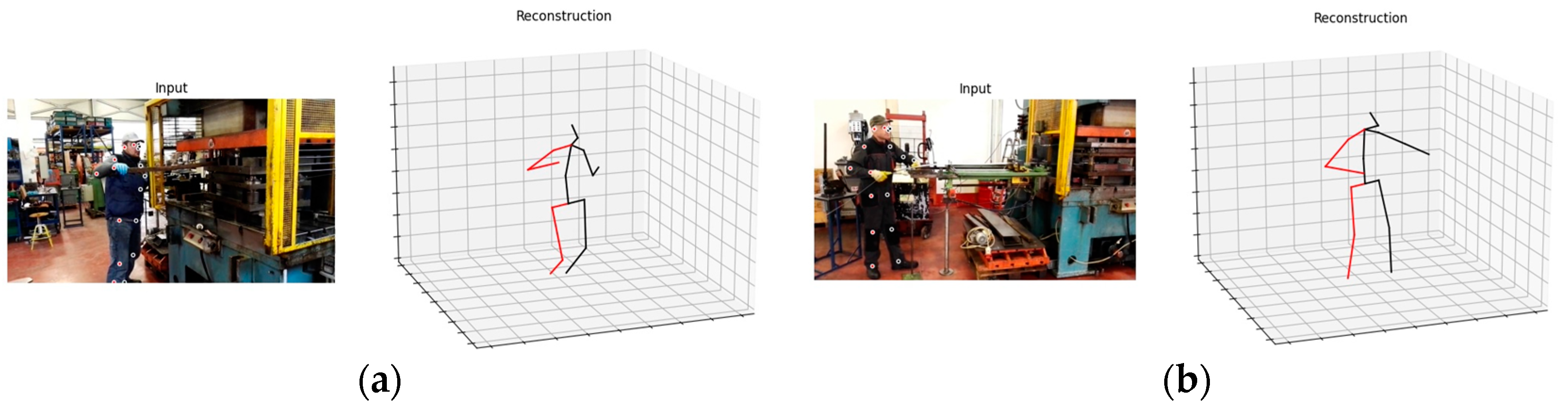

| EOi in Figure 10a | 0.438 | 0.977 | 0.596 | 0.272 | 0.158 |

| EOi in Figure 10b | 0.497 | 0.791 | 0.475 | 0.103 | 0.354 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Menanno, M.; Riccio, C.; Benedetto, V.; Gissi, F.; Savino, M.M.; Troiano, L. An Ergonomic Risk Assessment System Based on 3D Human Pose Estimation and Collaborative Robot. Appl. Sci. 2024, 14, 4823. https://doi.org/10.3390/app14114823
