A Deep Learning Approach for the Fast Generation of Synthetic Computed Tomography from Low-Dose Cone Beam Computed Tomography Images on a Linear Accelerator Equipped with Artificial Intelligence

Abstract

Featured Application

1. Introduction

2. Materials and Methods

2.1. Patients

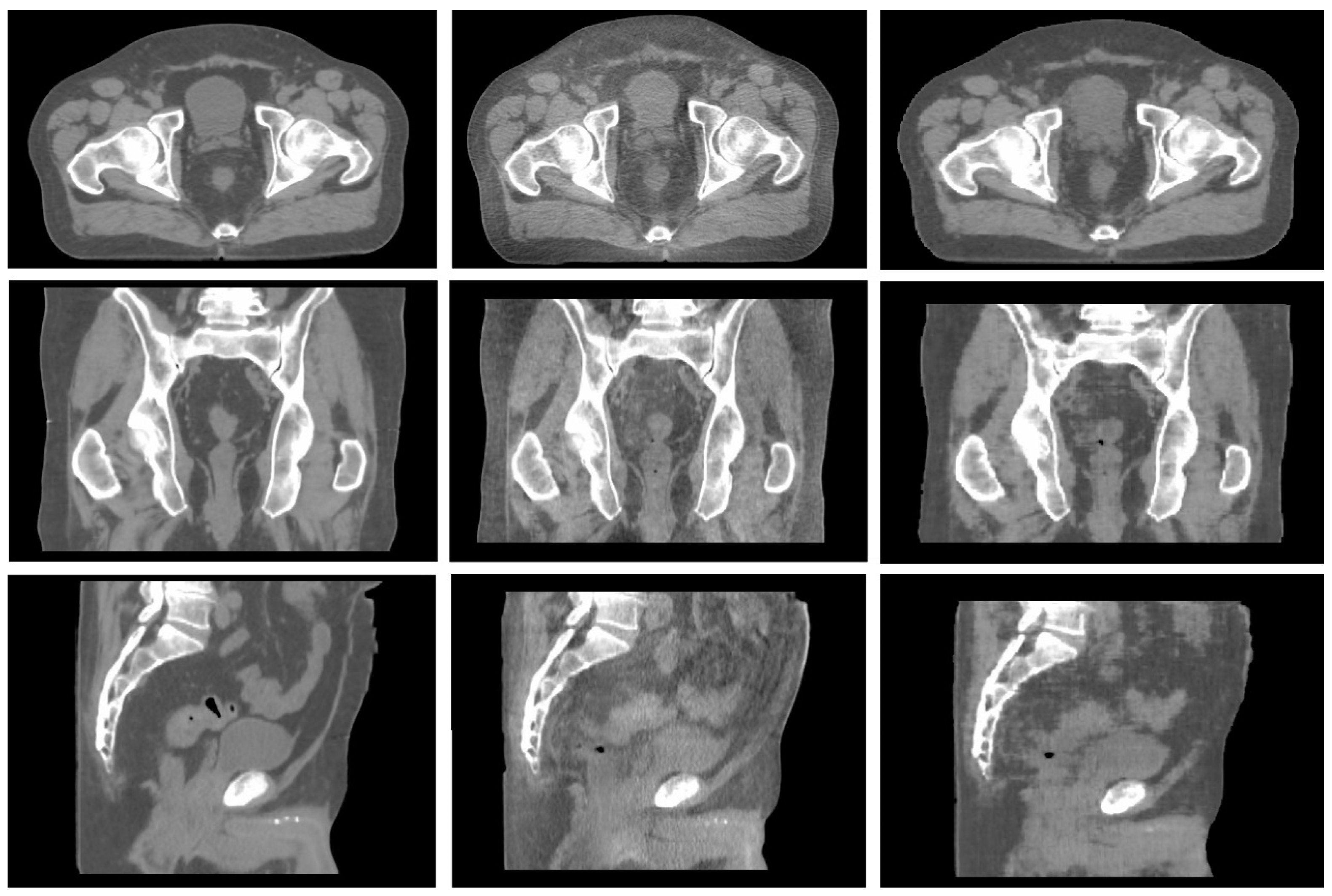

2.2. Synthetic CT Generation

- High anatomical correspondence in terms of bony anatomy.

- Correspondence of the patient’s body contour between the two images.

- High agreement in terms of location and volume of air pockets.

- No CBCT image artifacts caused by large air bubbles.

- No truncated images caused by a reduced field of view (FOV), a condition typical of apical CBCT slices.

2.3. Synthetic CT Evaluation

- -

- Percentage volume covered by 95% of the prescription dose (V95% [%]).

- -

- Percentage volume covered by 105% of the prescription dose (V105% [%]).

- -

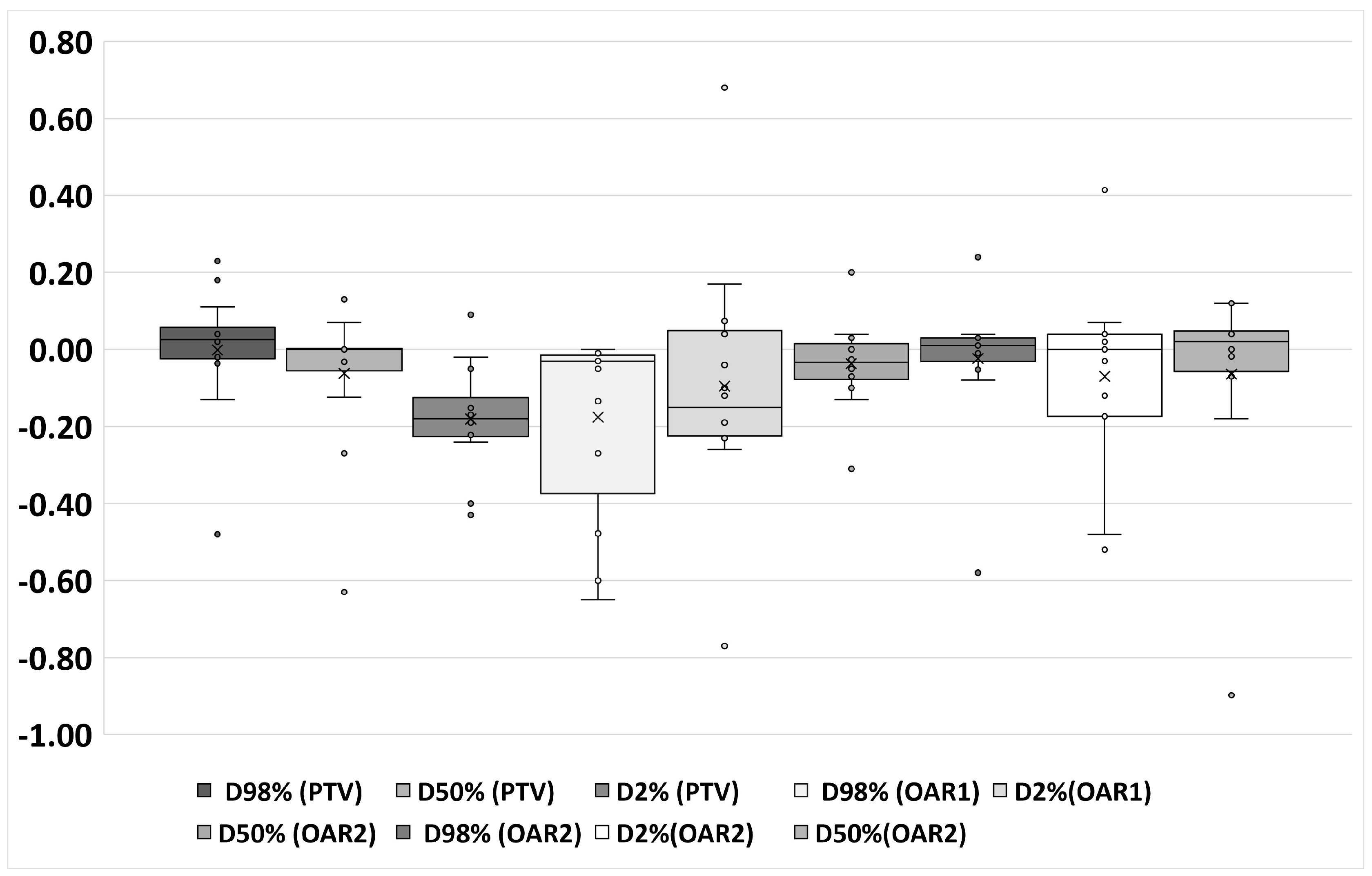

- Near-maximum dose in Gy, which is the dose covering 2% of the PTV volume (D2%[Gy]).

- -

- Near-minimum dose in Gy, which is the dose covering 98% of the PTV volume (D98%[Gy]).

- -

- Median dose in Gy, which is the dose covering 50% of the PTV (D50%[Gy]).
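The dose-volume indicators listed above can be read directly from the voxel doses inside the PTV. The following is a minimal sketch and not the authors' implementation: it assumes equal-volume voxels, a flat array of PTV doses in Gy, and NumPy's default linear percentile interpolation. The function name `dvh_metrics` and the dictionary keys are hypothetical.

```python
import numpy as np


def dvh_metrics(ptv_dose_gy, prescription_gy):
    """Compute V95%, V105% (percent of PTV) and D2%, D50%, D98% (Gy).

    Assumes every voxel in `ptv_dose_gy` represents the same volume.
    """
    dose = np.asarray(ptv_dose_gy, dtype=float)

    # Vx%: share of the PTV receiving at least x% of the prescription dose.
    v95 = 100.0 * np.mean(dose >= 0.95 * prescription_gy)
    v105 = 100.0 * np.mean(dose >= 1.05 * prescription_gy)

    # Dx%: dose covering x% of the PTV, i.e. the (100 - x)th percentile
    # of the voxel doses (D2% is near-maximum, D98% is near-minimum).
    d2, d50, d98 = np.percentile(dose, [98.0, 50.0, 2.0])

    return {
        "V95_pct": float(v95),
        "V105_pct": float(v105),
        "D2_Gy": float(d2),
        "D50_Gy": float(d50),
        "D98_Gy": float(d98),
    }


if __name__ == "__main__":
    # Illustrative synthetic doses around a 30 Gy prescription (not study data).
    rng = np.random.default_rng(0)
    synthetic_dose = rng.normal(loc=30.0, scale=0.6, size=10_000)
    for name, value in dvh_metrics(synthetic_dose, 30.0).items():
        print(f"{name}: {value:.2f}")
```

Applying the same function to the dose computed on the planning CT and on the synthetic CT gives paired values whose differences can then be compared indicator by indicator.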

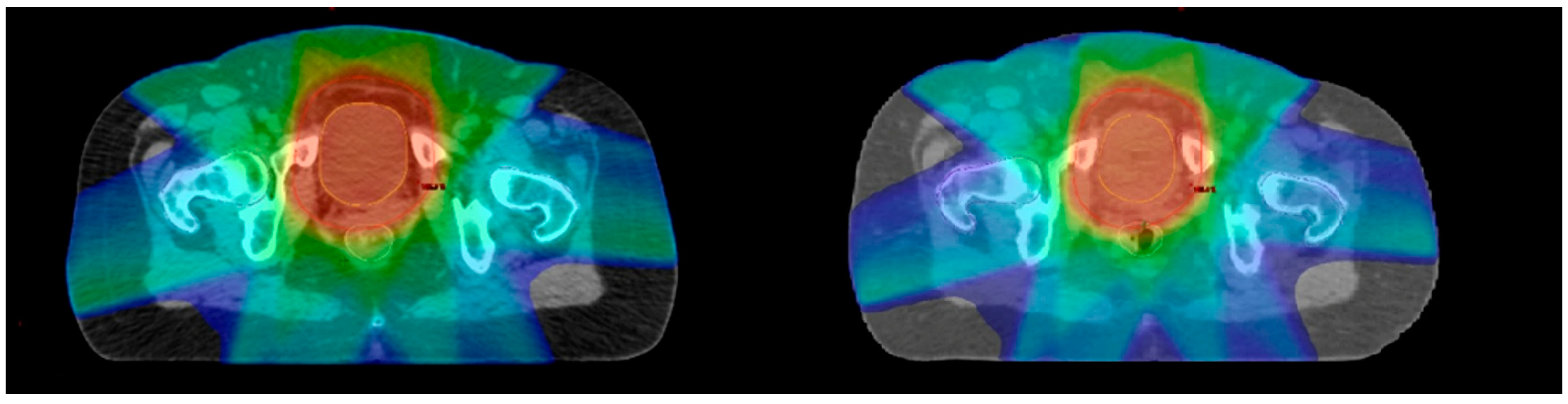

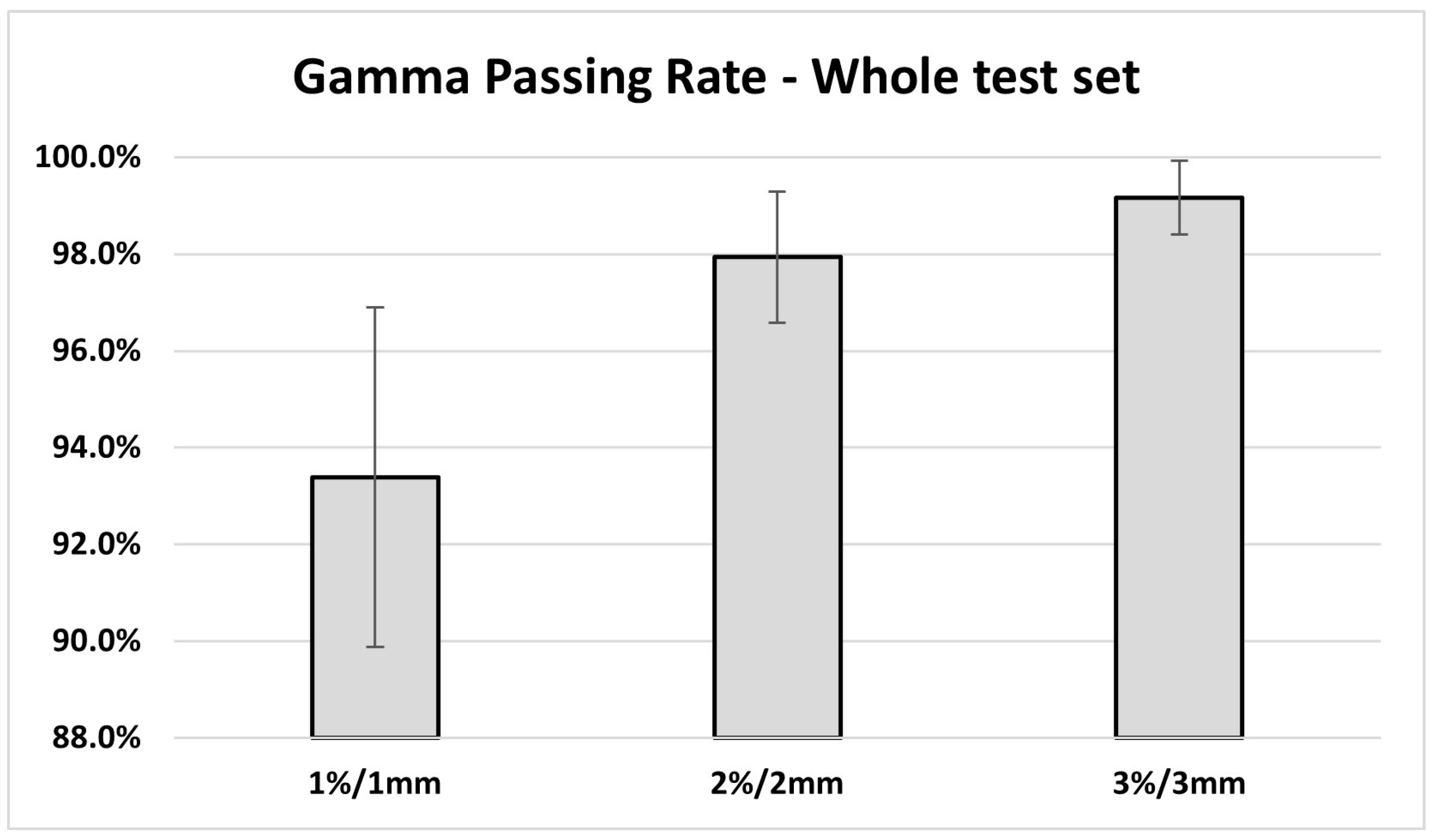

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameters | Training (n = 39) | Test (n = 14) |
|---|---|---|
| Sex | | |
| Male | 20 | 7 |
| Female | 19 | 7 |
| Mean age (range) | 64.9 (48–82) | 66.7 (49–83) |
| Tumour | | |
| Primary | 20 | 7 |
| Metastases | 19 | 7 |
| Prescription Dose | | |
| 67.5 Gy in 25 fx | 5 | |
| 55 Gy in 25 fx | 2 | |
| 30 Gy in 10 fx | 6 | |
| 20 Gy in 4 fx | 1 | |

| Parameter | Curative | Palliative | p-Value |
|---|---|---|---|
| 1%/1 mm | 94.7 ± 1.2% | 92.1 ± 4.6% | 0.19 |
| 2%/2 mm | 98.3 ± 0.4% | 97.6 ± 1.9% | 0.42 |
| 3%/3 mm | 99.3 ± 0.4% | 99.0 ± 1.0% | 0.54 |
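The criteria in the table above (1%/1 mm, 2%/2 mm, 3%/3 mm) pair a dose-difference tolerance with a distance-to-agreement tolerance, as used in gamma analysis. The sketch below shows how a passing rate of this kind could be computed on a 1D dose profile. It rests on assumptions this section does not state: global normalisation to the maximum reference dose, a 10% low-dose cutoff, and a brute-force search over all evaluated points. The function name `gamma_pass_rate_1d` and its parameters are hypothetical; clinical analyses are normally 3D and run with validated software.

```python
import numpy as np


def gamma_pass_rate_1d(x_mm, ref_dose, eval_dose, dose_pct, dta_mm,
                       low_dose_cutoff=0.1):
    """Percentage of reference points with gamma <= 1 on a 1D profile."""
    x = np.asarray(x_mm, dtype=float)
    ref = np.asarray(ref_dose, dtype=float)
    ev = np.asarray(eval_dose, dtype=float)

    # Global dose tolerance: a percentage of the maximum reference dose
    # (assumption; a local normalisation would use ref at each point).
    dose_tol = dose_pct / 100.0 * ref.max()

    # Pairwise terms: rows are reference points, columns are evaluated points.
    dist_term = ((x[:, None] - x[None, :]) / dta_mm) ** 2
    dose_term = ((ev[None, :] - ref[:, None]) / dose_tol) ** 2

    # Gamma at each reference point is the minimum combined distance.
    gamma = np.sqrt((dist_term + dose_term).min(axis=1))

    # Ignore reference points below the low-dose cutoff (assumption).
    mask = ref >= low_dose_cutoff * ref.max()
    return 100.0 * float(np.mean(gamma[mask] <= 1.0))


if __name__ == "__main__":
    # Illustrative Gaussian profile with a 0.5 mm shift (not study data).
    positions = np.arange(0.0, 100.0, 1.0)
    reference = 2.0 * np.exp(-((positions - 50.0) / 20.0) ** 2)
    evaluated = 2.0 * np.exp(-((positions - 50.5) / 20.0) ** 2)
    for pct, dta in [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]:
        rate = gamma_pass_rate_1d(positions, reference, evaluated, pct, dta)
        print(f"{pct:.0f}%/{dta:.0f} mm: {rate:.1f}%")
```

Tightening the criterion shrinks both tolerances at once, which is why passing rates typically fall from 3%/3 mm to 1%/1 mm.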

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vellini, L.; Zucca, S.; Lenkowicz, J.; Menna, S.; Catucci, F.; Quaranta, F.; Pilloni, E.; D'Aviero, A.; Aquilano, M.; Di Dio, C.; et al. A Deep Learning Approach for the Fast Generation of Synthetic Computed Tomography from Low-Dose Cone Beam Computed Tomography Images on a Linear Accelerator Equipped with Artificial Intelligence. Appl. Sci. 2024, 14, 4844. https://doi.org/10.3390/app14114844

APA Style: Vellini, L., Zucca, S., Lenkowicz, J., Menna, S., Catucci, F., Quaranta, F., Pilloni, E., D'Aviero, A., Aquilano, M., Di Dio, C., Iezzi, M., Re, A., Preziosi, F., Piras, A., Boschetti, A., Piccari, D., Mattiucci, G. C., & Cusumano, D. (2024). A Deep Learning Approach for the Fast Generation of Synthetic Computed Tomography from Low-Dose Cone Beam Computed Tomography Images on a Linear Accelerator Equipped with Artificial Intelligence. Applied Sciences, 14(11), 4844. https://doi.org/10.3390/app14114844