Aerial Image Segmentation of Nematode-Affected Pine Trees with U-Net Convolutional Neural Network

Abstract

1. Introduction

- (1)

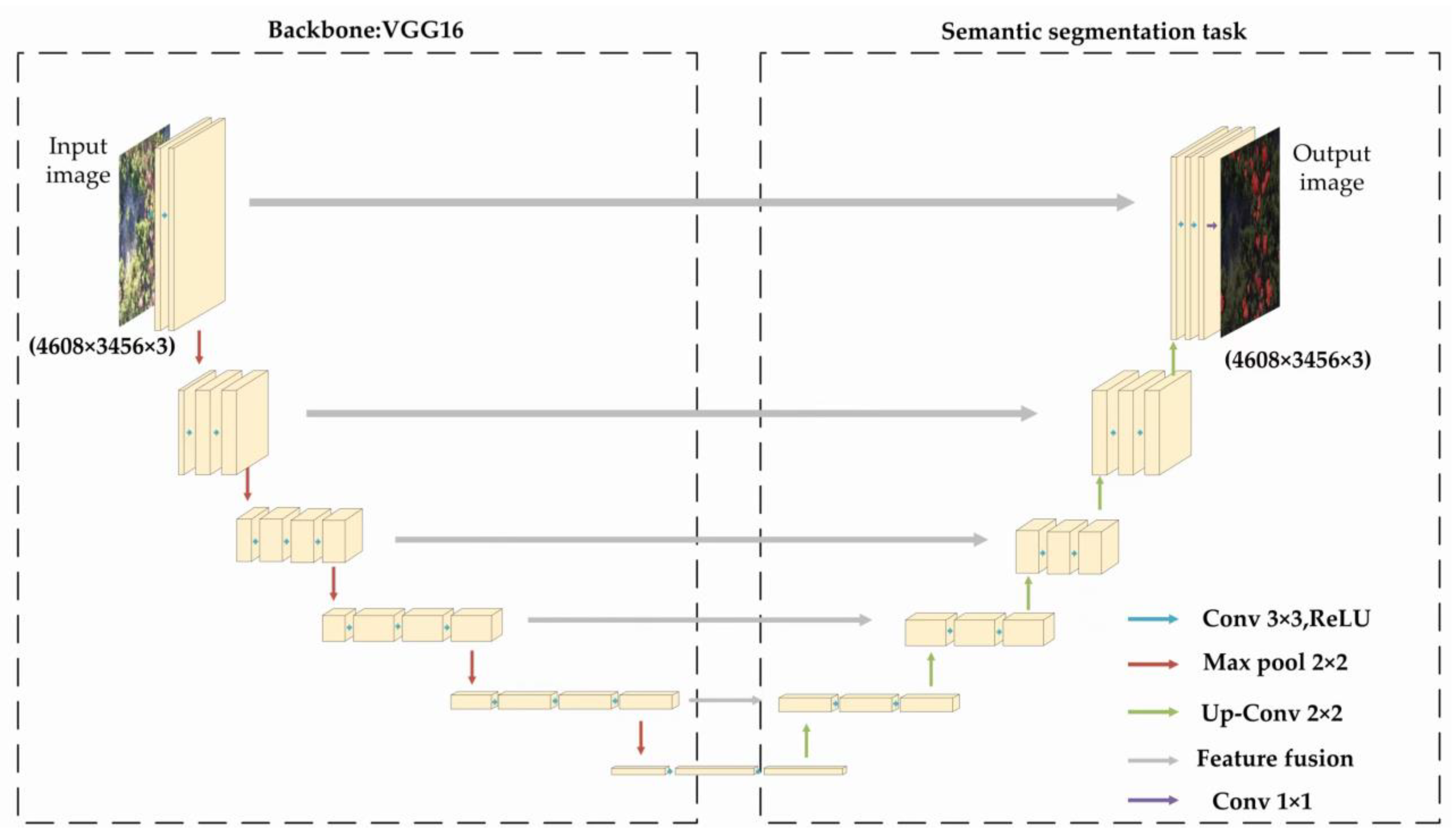

- Increase the depth of the U-Net network to facilitate high-dimensional feature extraction.

- (2)

- Using the characteristics of a small convolutional kernel of the VGG backbone network to significantly reduce the number of parameters, thereby speeding up training and improving segmentation accuracy.
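The parameter saving attributed to small kernels can be illustrated with a short calculation. The sketch below is not taken from the paper: it assumes the usual VGG argument that two stacked 3×3 convolutions cover the same 5×5 receptive field as a single 5×5 convolution, and the channel width of 64 and the helper name `conv_params` are hypothetical choices made only for illustration.

```python
def conv_params(kernel_size: int, in_channels: int, out_channels: int,
                bias: bool = True) -> int:
    """Count the learnable parameters of one 2-D convolution layer."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)


# Hypothetical channel width, chosen for illustration only.
channels = 64

# Two stacked 3x3 layers see a 5x5 neighbourhood of the input.
stacked_3x3 = 2 * conv_params(3, channels, channels)

# One 5x5 layer with the same receptive field.
single_5x5 = conv_params(5, channels, channels)

print(f"two 3x3 layers: {stacked_3x3} parameters")
print(f"one 5x5 layer:  {single_5x5} parameters")
print(f"ratio: {stacked_3x3 / single_5x5:.2f}")
```

Under these assumptions the stacked 3×3 layers need roughly 72% of the parameters of the single 5×5 layer, and they add an extra non-linearity between the two convolutions.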

2. Materials and Methods

2.1. Materials

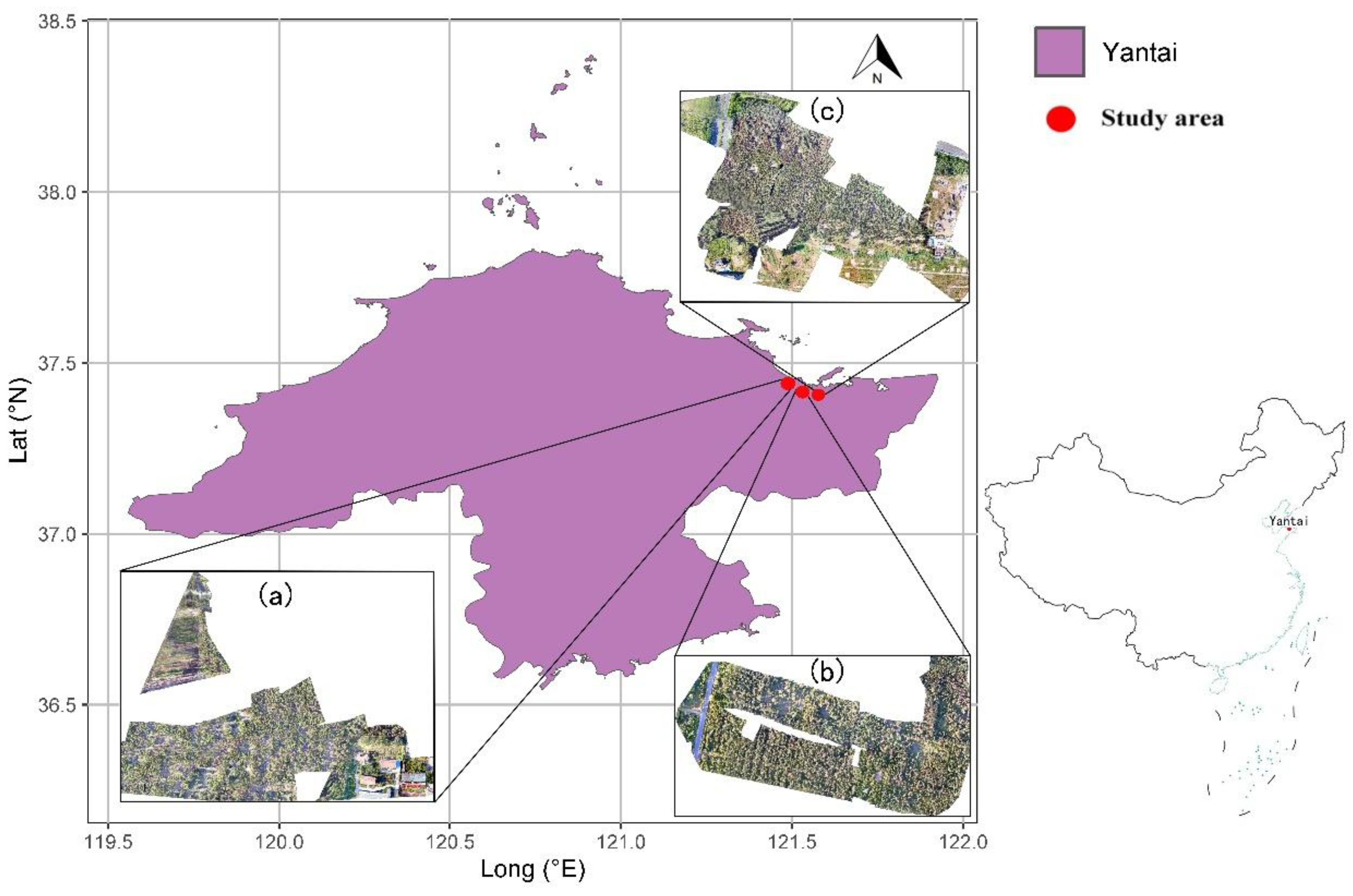

2.1.1. Study Area

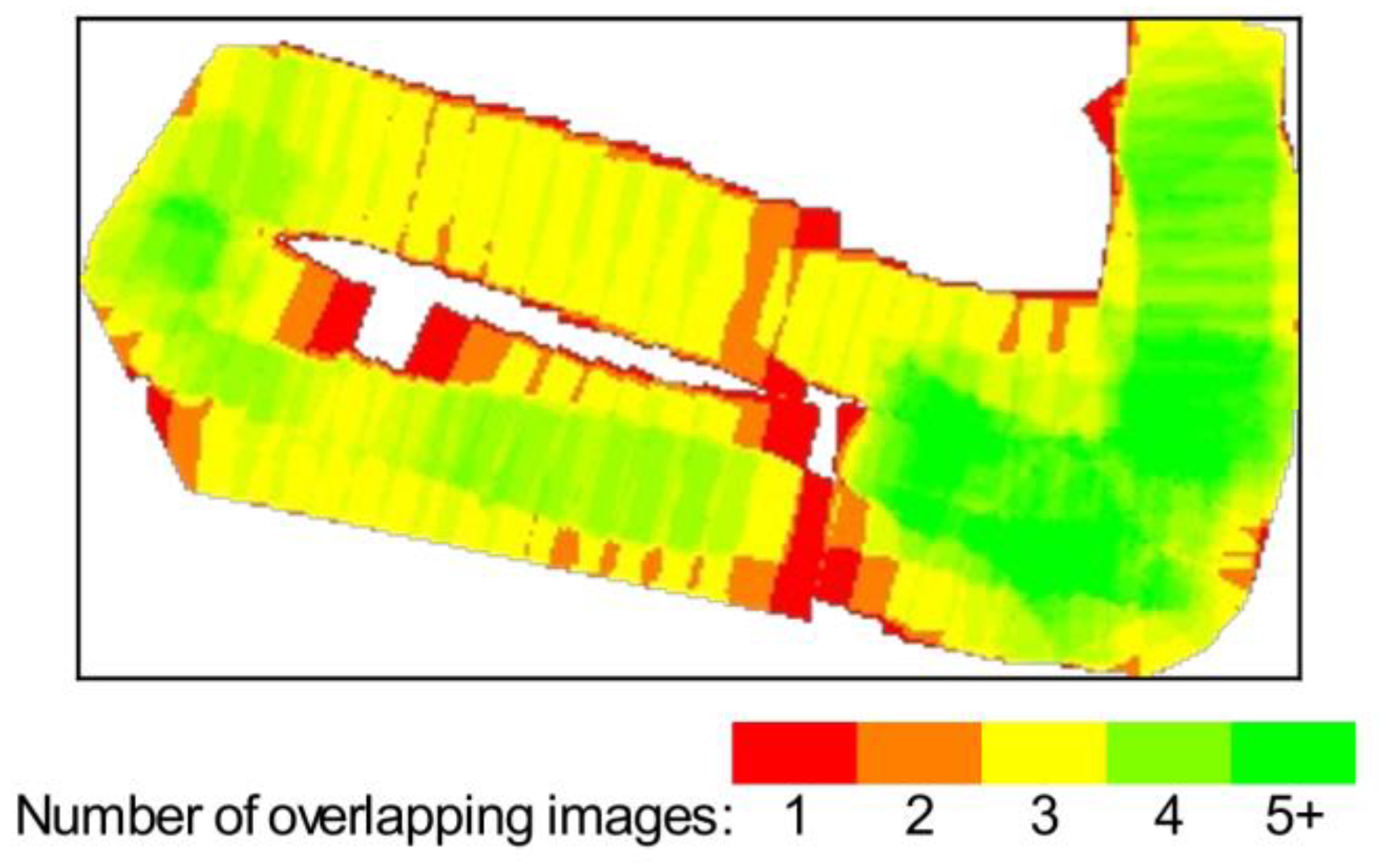

2.1.2. Input Data

2.2. Methods

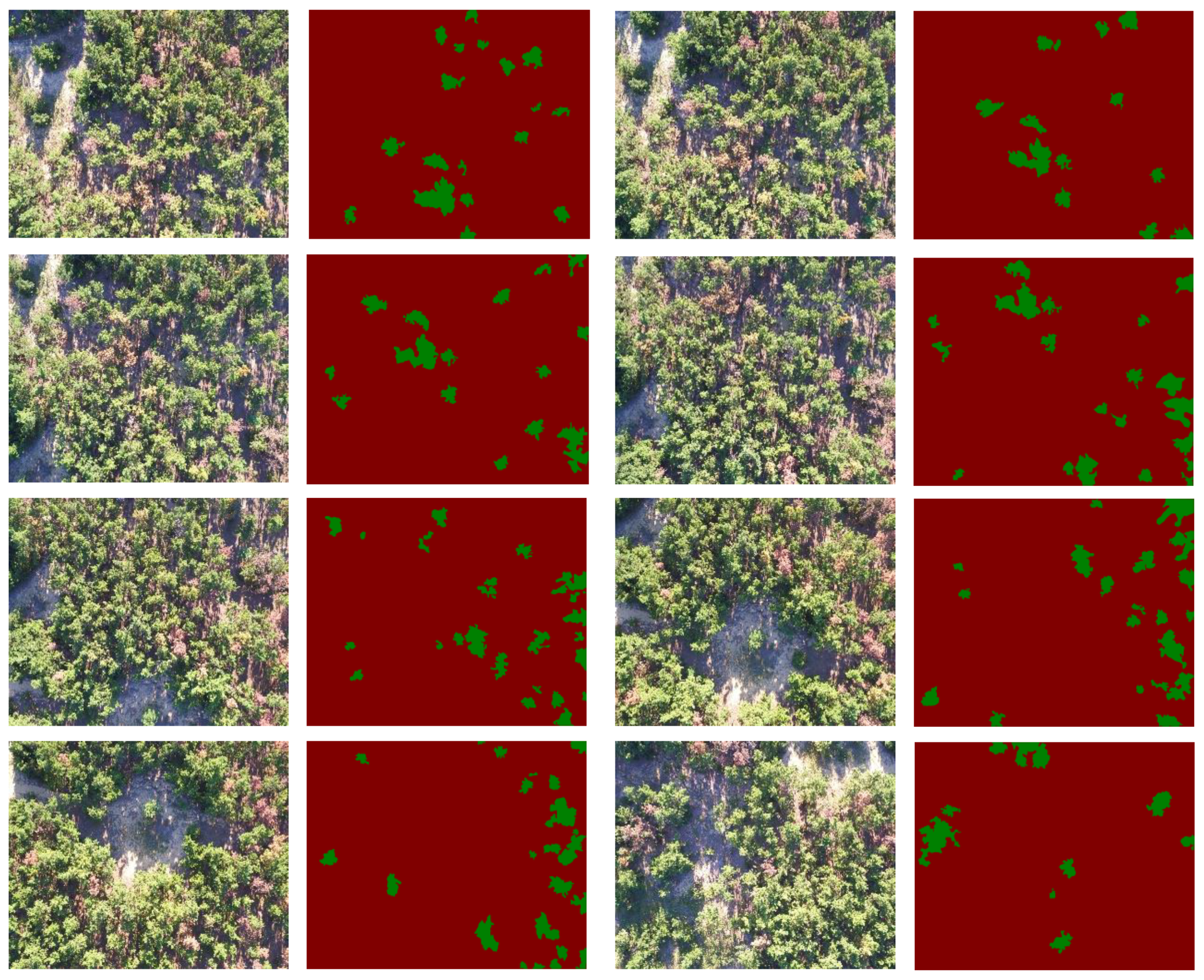

2.2.1. Image Segmentation Dataset

2.2.2. U-Net Construction

2.2.3. Loss Function

2.2.4. Evaluation Systems

2.2.5. Model Training

3. Results

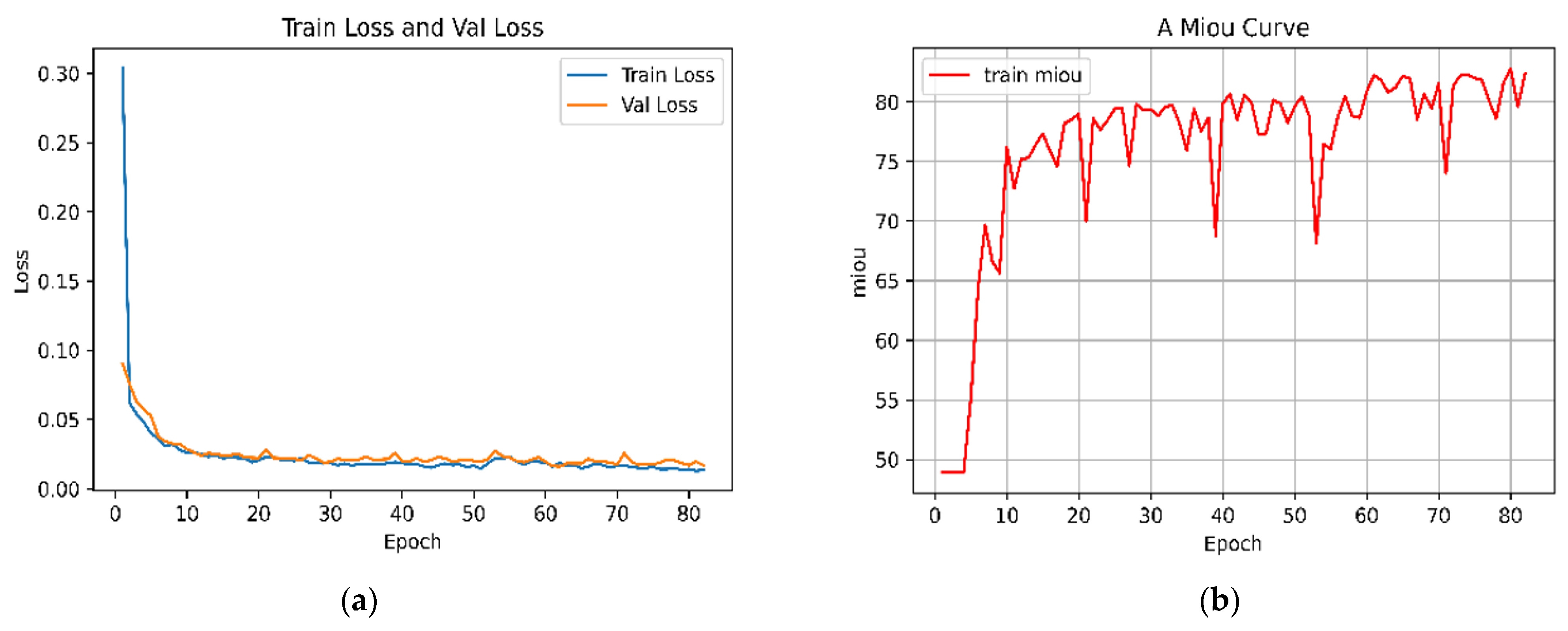

3.1. Model Training Results

3.2. Results of Monitoring Pine Nematode Disease Trees

3.3. Comparison of Model Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| UAV & Camera | Parameter | Value |
|---|---|---|
| UAV | Name | DJI_M600 (DJI, Shenzhen, China) |
| | Maximum take-off weight | 11 kg |
| | Weight | 4.4 kg |
| | Endurance | 15 min |
| | Effective working time | 12 min |
| Camera | Name | GaiaSky-mini |
| | Aperture | f/3.5 |
| | Exposure time | 1/240 s |
| | ISO speed | 100~200 |
| | Exposure compensation | 0-stop aperture |
| | Monitoring range | 15 mm |
| | Maximum aperture | 1.7 mm |
| | Spectral coverage | 450~1000 nm |
| | Spectral resolution | 3.5 nm |

| Target Epoch | MIoU (%) | MPA (%) | Accuracy (%) | F1 Score |
|---|---|---|---|---|
| 82 | 82.79 | 88.0 | 99.15 | 0.881 |
| 77 | 81.83 | 90.83 | 99.01 | 0.877 |
| 81 | 81.62 | 85.13 | 99.13 | 0.885 |
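The four indices in the table above can all be derived from a pixel-level confusion matrix. The following is a minimal sketch using the standard definitions of mean intersection over union (MIoU), mean pixel accuracy (MPA), overall accuracy and F1 score. It is an assumption that the paper uses exactly these definitions; the function names and the tiny 2×4 masks are hypothetical. The function returns fractions, whereas the table reports MIoU, MPA and accuracy as percentages.

```python
def confusion_matrix(y_true, y_pred, num_classes=2):
    """cm[i][j] = number of pixels of true class i predicted as class j."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def segmentation_metrics(cm, positive=1):
    """Standard segmentation indices from a confusion matrix.

    Assumes every class occurs at least once in the ground truth and the
    prediction; otherwise a division by zero is raised.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(cm[k]) for k in range(n)]                       # true pixels per class
    col_sums = [sum(cm[i][k] for i in range(n)) for k in range(n)]  # predicted pixels per class

    iou = [cm[k][k] / (row_sums[k] + col_sums[k] - cm[k][k]) for k in range(n)]
    pixel_acc = [cm[k][k] / row_sums[k] for k in range(n)]

    precision = cm[positive][positive] / col_sums[positive]
    recall = cm[positive][positive] / row_sums[positive]

    return {
        "MIoU": sum(iou) / n,
        "MPA": sum(pixel_acc) / n,
        "Accuracy": sum(cm[k][k] for k in range(n)) / total,
        "F1": 2 * precision * recall / (precision + recall),
    }


# Hypothetical flattened 2x4 masks: 1 = diseased tree, 0 = background.
truth = [0, 0, 1, 1, 0, 0, 1, 1]
pred = [0, 0, 1, 0, 0, 1, 1, 1]

metrics = segmentation_metrics(confusion_matrix(truth, pred))
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

When background pixels dominate an image, overall accuracy can sit well above MIoU, because accuracy is weighted by pixel count while MIoU averages over classes.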

| Model | Training Time | Convergence Rate (Time) | MIoU (%) | MPA (%) | Accuracy (%) | F1 Score |
|---|---|---|---|---|---|---|
| VGG-U-Net | 16 min, 32 s | 9 epochs | 81.62 | 85.13 | 99.13 | 0.885 |
| ResNet50 | 32 min, 53 s | 25 epochs | 71.5 | 74.6 | 98.78 | 0.765 |
| DeepLab v3+ | 15 min, 46 s | 18 epochs | 48.9 | 50 | 73.5 | 0.497 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shen, J.; Xu, Q.; Gao, M.; Ning, J.; Jiang, X.; Gao, M. Aerial Image Segmentation of Nematode-Affected Pine Trees with U-Net Convolutional Neural Network. Appl. Sci. 2024, 14, 5087. https://doi.org/10.3390/app14125087
