AI Chatbots for Mental Health: A Scoping Review of Effectiveness, Feasibility, and Applications

Abstract

1. Introduction

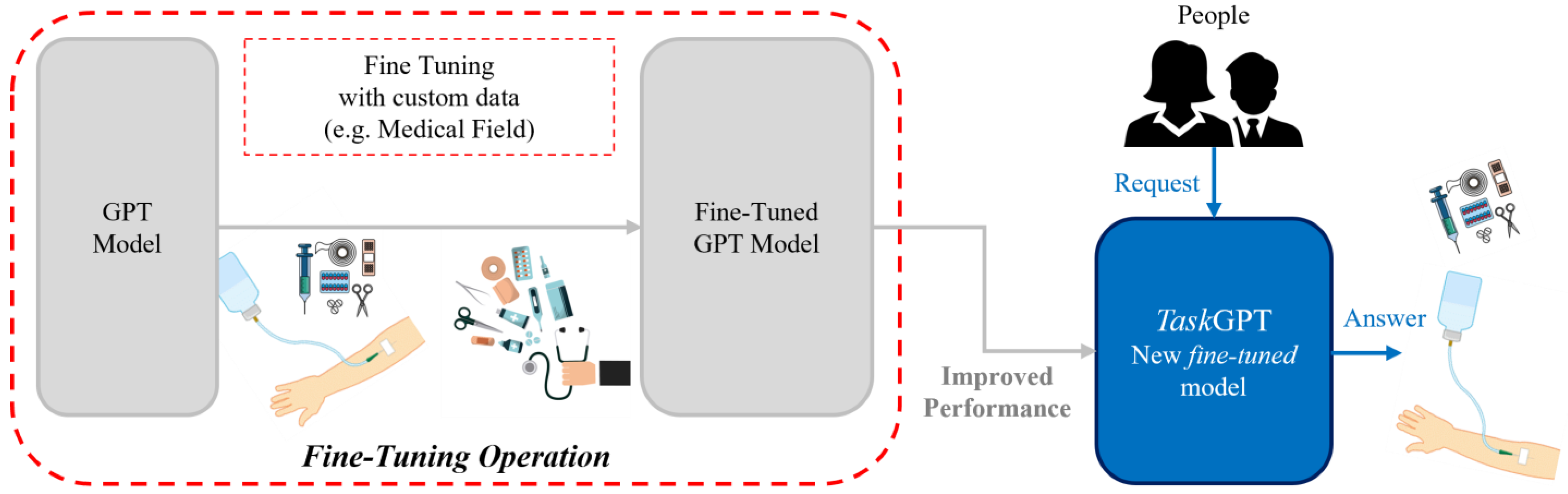

1.1. Technical Background

Natural Language Processing and Detailed Aspects of AI Chatbots

- Menu/button-based chatbots: common and simple systems that present buttons and menus and follow decision-tree logic; users make selections to receive answers.

- Keyword recognition-based chatbots: use AI to identify and respond to specific keywords from user input.

- Contextual chatbots: use AI and machine learning to understand user intentions and sentiments through technologies like voice recognition.

- Understanding: chatbots use NLP to comprehend user requests and human language complexities.

- Contextual responses: NLP allows chatbots to provide relevant, context-aware answers.

- Continuous learning: chatbots learn new language patterns from interactions, staying current with trends.

- Training data: chatbots learn from extensive conversational data to understand user questions and provide suitable answers.

- Pattern recognition: chatbots identify patterns in user behavior and language to predict and generate accurate responses.

- Feedback loop: machine learning continuously refines chatbot algorithms, improving accuracy with each interaction.
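
The first two chatbot types listed above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the tree `MENU_TREE`, the table `KEYWORD_REPLIES`, the function names, and the reply texts are hypothetical and are not taken from any chatbot in the reviewed studies. The keyword matcher uses a plain dictionary lookup rather than a trained model, so it only approximates the keyword recognition described in the list.

```python
import re

# Hypothetical decision tree for a menu/button-based chatbot.
# Each node holds a prompt and the options a user can select.
MENU_TREE = {
    "start": {
        "prompt": "What would you like help with?",
        "options": {"Sleep": "sleep", "Stress": "stress"},
    },
    "sleep": {"prompt": "Try keeping a regular bedtime.", "options": {}},
    "stress": {"prompt": "Try a short breathing exercise.", "options": {}},
}


def menu_step(node_id: str, choice: str) -> str:
    """Follow one branch of the tree; stay on the same node if the choice is invalid."""
    return MENU_TREE[node_id]["options"].get(choice, node_id)


# Hypothetical keyword table for a keyword recognition-based chatbot.
KEYWORD_REPLIES = {
    "sleep": "It sounds like sleep is on your mind.",
    "anxious": "It sounds like you are feeling anxious.",
}
FALLBACK = "Could you tell me more?"


def keyword_reply(message: str) -> str:
    """Return the reply for the first known keyword found in the message."""
    for word in re.findall(r"[a-z']+", message.lower()):
        if word in KEYWORD_REPLIES:
            return KEYWORD_REPLIES[word]
    return FALLBACK
```

In this sketch the menu-based bot can only move along predefined branches, whereas the keyword-based bot accepts free text but falls back to a generic prompt when no keyword matches. Contextual chatbots would replace the lookup with a learned model of intent and sentiment, which is beyond the scope of this example.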

1.2. Aim of the Study

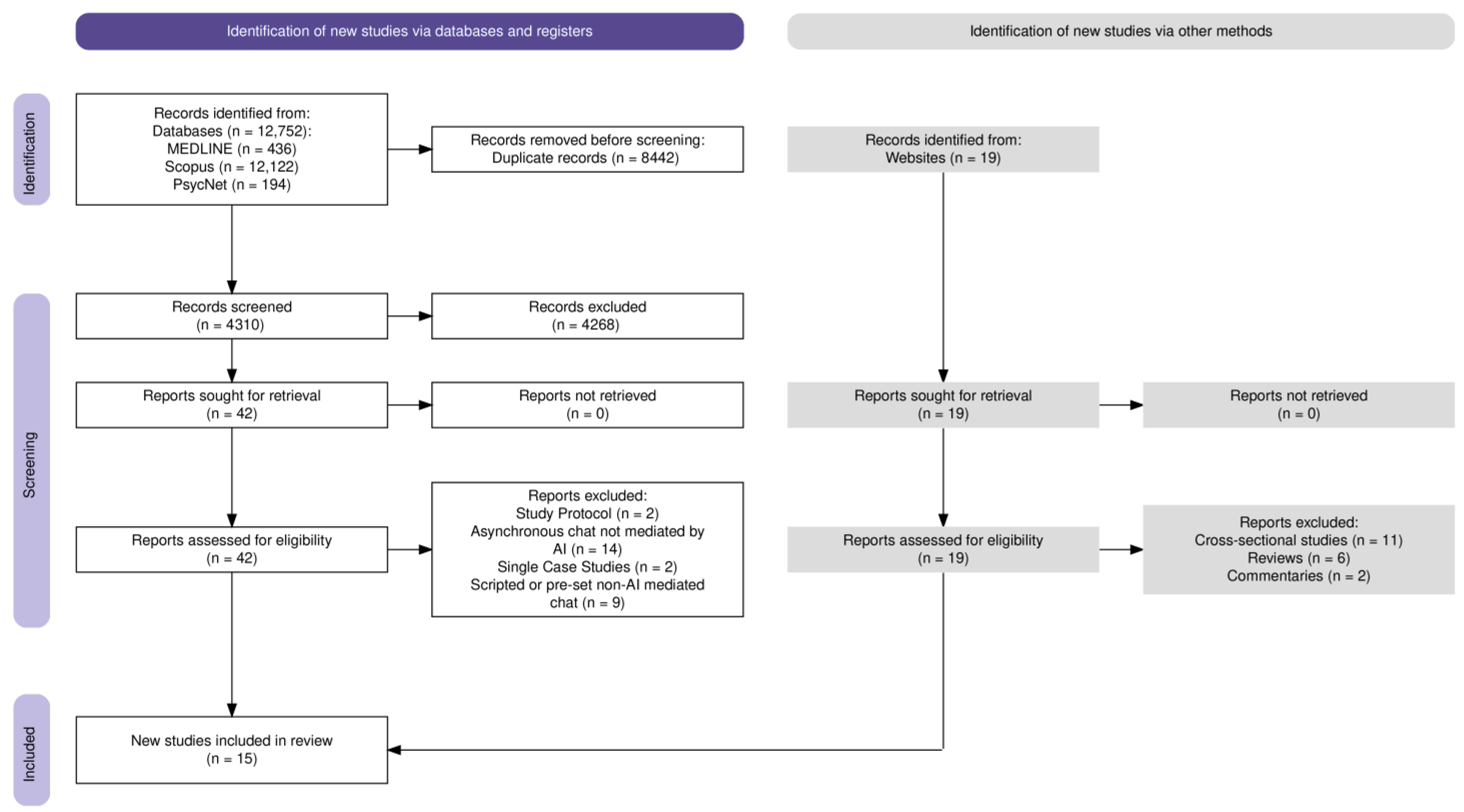

2. Materials and Methods

2.1. Search Strategy

2.2. Database Searches

2.3. Inclusion and Exclusion Criteria

Inclusion criteria:

- Clinical trials;

- Randomized controlled trials (RCTs);

- Articles written in any language;

- Chatbot interventions mediated by modern AI architectures/frameworks;

- Chatbots utilizing rule-based systems, natural language processing (NLP), or machine learning;

- Pilot studies examining chatbot interventions for mental health conditions.

Exclusion criteria:

- Cross-sectional studies;

- Reviews;

- Commentaries;

- Editorials;

- Protocols;

- Case studies;

- Older chatbot systems not based on modern AI architectures/frameworks;

- Human-to-human asynchronous communication platforms without AI mediation;

- Scripted or pre-set chat systems without AI-driven conversation simulation;

- Studies not focused on chatbot interventions or mental health conditions.
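
The eligibility criteria listed above could be encoded as a simple screening predicate, sketched below. This is an illustration only and not the screening procedure used in the review: the `Record` class, its field names, and the design labels are hypothetical, and the two boolean fields stand in for reviewer judgements that cannot be automated this simply.

```python
from dataclasses import dataclass

# Study designs taken from the inclusion and exclusion lists above.
INCLUDED_DESIGNS = {"clinical trial", "randomized controlled trial", "pilot study"}
EXCLUDED_DESIGNS = {
    "cross-sectional study", "review", "commentary",
    "editorial", "protocol", "case study",
}


@dataclass
class Record:
    """Hypothetical screening record; field names are illustrative."""
    design: str
    uses_modern_ai_chatbot: bool   # reviewer judgement: rule-based, NLP, or ML chatbot
    targets_mental_health: bool    # reviewer judgement: mental health focus


def is_eligible(record: Record) -> bool:
    """Apply the design filter first, then the intervention and topic criteria."""
    design = record.design.lower()
    if design in EXCLUDED_DESIGNS:
        return False
    return (
        design in INCLUDED_DESIGNS
        and record.uses_modern_ai_chatbot
        and record.targets_mental_health
    )
```

For example, `is_eligible(Record("Randomized controlled trial", True, True))` is true, while a review article is rejected regardless of its other fields.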

2.4. Study Selection

2.5. Data Extraction

2.6. Quality Assessment

2.7. Analysis

3. Results

3.1. Characteristics of Included Studies

3.2. Mental Health Allies during COVID-19

3.3. Supporting Emotional Well-Being in Specific Health Conditions

3.4. Tackling Substance Use and Addiction

3.5. Preventive Care: Targeting Eating Disorders and HIV Prevention

3.6. Enhancing Well-Being in Young Cancer Survivors

3.7. Panic Disorder Management

3.8. Problem-Solving in Older Adults

3.9. Usability and Engagement

3.10. Risk of Bias

3.10.1. Risk of Bias in Randomized Trials (RoB 2)

- D1: Bias arising from the randomization process.

- D2: Bias due to deviations from intended interventions.

- D3: Bias due to missing outcome data.

- D4: Bias in measurement of the outcome.

- D5: Bias in selection of the reported result.
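
As a rough illustration of how the five domain judgements combine into an overall rating, the sketch below applies a worst-domain rule: the overall rating equals the most severe rating across D1 to D5. This is a simplification. The RoB 2 guidance also allows a study with some concerns in several domains to be rated as high risk overall, and that reviewer judgement is not modelled here. The names `Risk`, `DOMAINS`, and `overall_risk` are hypothetical.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Ordered so that a larger value means a more severe judgement."""
    LOW = 0
    SOME_CONCERNS = 1
    HIGH = 2


DOMAINS = ("D1", "D2", "D3", "D4", "D5")


def overall_risk(judgements: dict[str, Risk]) -> Risk:
    """Worst-domain rule: return the most severe rating across the five domains."""
    missing = [d for d in DOMAINS if d not in judgements]
    if missing:
        raise ValueError(f"missing domain judgements: {missing}")
    return max(judgements[d] for d in DOMAINS)
```

Under this rule a single high-risk domain is enough to rate the whole result as high risk, and a result is rated low risk only when every domain is low.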

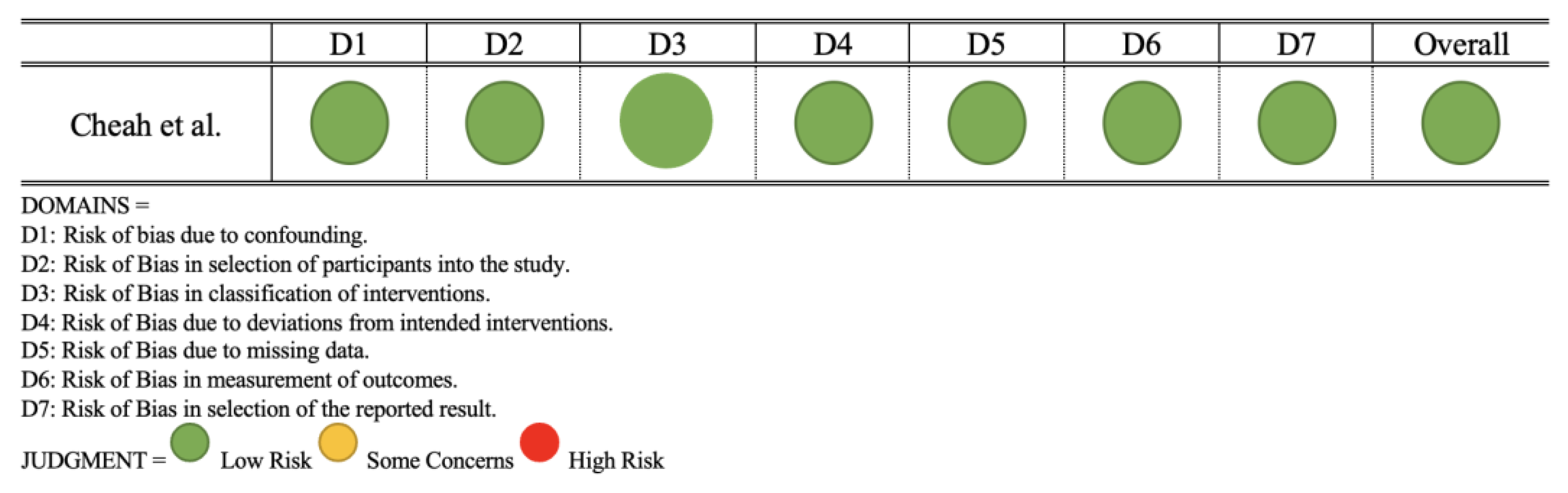

3.10.2. Risk of Bias in Non-Randomized Studies (ROBINS-E)

- D1: Risk of bias due to confounding.

- D2: Risk of bias in selection of participants into the study.

- D3: Risk of bias in classification of interventions.

- D4: Risk of bias due to deviations from intended interventions.

- D5: Risk of bias due to missing data.

- D6: Risk of bias in measurement of outcomes.

- D7: Risk of bias in selection of the reported result.

4. Discussion

Limitations and Future Research Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- World Health Organization. Mental Health. Available online: https://www.who.int/health-topics/mental-health (accessed on 13 May 2024).

- Brunier, A.; WHO Media Team. WHO Report Highlights Global Shortfall in Investment in Mental Health. Available online: https://www.who.int/news/item/08-10-2021-who-report-highlights-global-shortfall-in-investment-in-mental-health (accessed on 13 May 2024).

- Rising Demand for Mental Health Workers Globally. IHNA Blog. 2022. Available online: https://www.ihna.edu.au/blog/2022/09/rising-demand-for-mental-health-workers-globally/ (accessed on 13 May 2024).

- Eurostat. Number of Psychiatrists: How Do Countries Compare? Available online: https://ec.europa.eu/eurostat/web/products-eurostat-news/-/DDN-20200506-1 (accessed on 13 May 2024).

- McKenzie, K.; Patel, V.; Araya, R. Learning from Low Income Countries: Mental Health. BMJ 2004, 329, 1138–1140. [Google Scholar] [CrossRef] [PubMed]

- Mental Health in Developed vs. Developing Countries. Jacinto Convit World Organization. 2021. Available online: https://www.jacintoconvit.org/social-science-series-5-mental-health-in-developed-vs-developing-countries/ (accessed on 13 May 2024).

- WHO Media Team. WHO Highlights Urgent Need to Transform Mental Health and Mental Health Care. Available online: https://www.who.int/news/item/17-06-2022-who-highlights-urgent-need-to-transform-mental-health-and-mental-health-care (accessed on 13 May 2024).

- Hester, R.D. Lack of Access to Mental Health Services Contributing to the High Suicide Rates among Veterans. Int. J. Ment. Health Syst. 2017, 11, 47. [Google Scholar] [CrossRef] [PubMed]

- Kapur, N.; Gorman, L.S.; Quinlivan, L.; Webb, R.T. Mental Health Services: Quality, Safety and Suicide. BMJ Qual. Saf. 2022, 31, 419–422. [Google Scholar] [CrossRef] [PubMed]

- Caponnetto, P.; Milazzo, M. Cyber Health Psychology: The Use of New Technologies at the Service of Psychological Well Being and Health Empowerment. Health Psych. Res. 2019, 7, 8559. [Google Scholar] [CrossRef] [PubMed]

- Caponnetto, P.; Casu, M. Update on Cyber Health Psychology: Virtual Reality and Mobile Health Tools in Psychotherapy, Clinical Rehabilitation, and Addiction Treatment. Int. J. Environ. Res. Public Health 2022, 19, 3516. [Google Scholar] [CrossRef]

- Ancis, J.R. The Age of Cyberpsychology: An Overview. Technol. Mind Behav. 2020, 1. [Google Scholar] [CrossRef]

- Taylor, C.B.; Graham, A.K.; Flatt, R.E.; Waldherr, K.; Fitzsimmons-Craft, E.E. Current State of Scientific Evidence on Internet-Based Interventions for the Treatment of Depression, Anxiety, Eating Disorders and Substance Abuse: An Overview of Systematic Reviews and Meta-Analyses. Eur. J. Public Health 2021, 31, i3–i10. [Google Scholar] [CrossRef] [PubMed]

- Spiegel, B.M.R.; Liran, O.; Clark, A.; Samaan, J.S.; Khalil, C.; Chernoff, R.; Reddy, K.; Mehra, M. Feasibility of Combining Spatial Computing and AI for Mental Health Support in Anxiety and Depression. NPJ Digit. Med. 2024, 7, 22. [Google Scholar] [CrossRef] [PubMed]

- Li, J. Digital Technologies for Mental Health Improvements in the COVID-19 Pandemic: A Scoping Review. BMC Public Health 2023, 23, 413. [Google Scholar] [CrossRef]

- Viduani, A.; Cosenza, V.; Araújo, R.M.; Kieling, C. Chatbots in the Field of Mental Health: Challenges and Opportunities. In Digital Mental Health: A Practitioner’s Guide; Passos, I.C., Rabelo-da-Ponte, F.D., Kapczinski, F., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 133–148. ISBN 978-3-031-10698-9. [Google Scholar]

- Song, I.; Pendse, S.R.; Kumar, N.; De Choudhury, M. The Typing Cure: Experiences with Large Language Model Chatbots for Mental Health Support. arXiv 2024, arXiv:2401.14362. [Google Scholar]

- Abd-alrazaq, A.A.; Alajlani, M.; Alalwan, A.A.; Bewick, B.M.; Gardner, P.; Househ, M. An Overview of the Features of Chatbots in Mental Health: A Scoping Review. Int. J. Med. Inform. 2019, 132, 103978. [Google Scholar] [CrossRef] [PubMed]

- Moore, J.R.; Caudill, R. The Bot Will See You Now: A History and Review of Interactive Computerized Mental Health Programs. Psychiatr. Clin. N. Am. 2019, 42, 627–634. [Google Scholar] [CrossRef] [PubMed]

- Grand View Research. Mental Health Apps Market Size and Share Report. 2030. Available online: https://web.archive.org/web/20240705093326/https://www.grandviewresearch.com/industry-analysis/mental-health-apps-market-report (accessed on 13 May 2024).

- Ceci, L. Topic: Meditation and Mental Wellness Apps. Available online: https://www.statista.com/topics/11045/meditation-and-mental-wellness-apps/ (accessed on 13 May 2024).

- Adam, M.; Wessel, M.; Benlian, A. AI-Based Chatbots in Customer Service and Their Effects on User Compliance. Electron Mark. 2021, 31, 427–445. [Google Scholar] [CrossRef]

- Andre, D. What Is a Chatbot? All About AI: Golden Grove, Australia, 2023. [Google Scholar]

- Adamopoulou, E.; Moussiades, L. An Overview of Chatbot Technology. In Proceedings of the Artificial Intelligence Applications and Innovations; Maglogiannis, I., Iliadis, L., Pimenidis, E., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 373–383. [Google Scholar]

- Moilanen, J.; van Berkel, N.; Visuri, A.; Gadiraju, U.; van der Maden, W.; Hosio, S. Supporting Mental Health Self-Care Discovery through a Chatbot. Front. Digit. Health 2023, 5, 1034724. [Google Scholar] [CrossRef] [PubMed]

- Chakraborty, C.; Pal, S.; Bhattacharya, M.; Dash, S.; Lee, S.-S. Overview of Chatbots with Special Emphasis on Artificial Intelligence-Enabled ChatGPT in Medical Science. Front. Artif. Intell. 2023, 6, 1237704. [Google Scholar] [CrossRef]

- Zhong, W.; Luo, J.; Zhang, H. The Therapeutic Effectiveness of Artificial Intelligence-Based Chatbots in Alleviation of Depressive and Anxiety Symptoms in Short-Course Treatments: A Systematic Review and Meta-Analysis. J. Affect. Disord. 2024, 356, 459–469. [Google Scholar] [CrossRef]

- Zafar, F.; Fakhare Alam, L.; Vivas, R.R.; Wang, J.; Whei, S.J.; Mehmood, S.; Sadeghzadegan, A.; Lakkimsetti, M.; Nazir, Z. The Role of Artificial Intelligence in Identifying Depression and Anxiety: A Comprehensive Literature Review. Cureus 2024, 16, e56472. [Google Scholar] [CrossRef] [PubMed]

- Boucher, E.M.; Harake, N.R.; Ward, H.E.; Stoeckl, S.E.; Vargas, J.; Minkel, J.; Parks, A.C.; Zilca, R. Artificially Intelligent Chatbots in Digital Mental Health Interventions: A Review. Expert Rev. Med. Devices 2021, 18, 37–49. [Google Scholar] [CrossRef] [PubMed]

- Balcombe, L. AI Chatbots in Digital Mental Health. Informatics 2023, 10, 82. [Google Scholar] [CrossRef]

- Ali, B.; Ravi, V.; Bhushan, C.; Santhosh, M.G.; Shiva Shankar, O. Chatbot via Machine Learning and Deep Learning Hybrid. In Modern Approaches in Machine Learning and Cognitive Science: A Walkthrough; Latest Trends in AI; Gunjan, V.K., Zurada, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2021; Volume 2, pp. 255–265. ISBN 978-3-030-68291-0. [Google Scholar]

- Azwary, F.; Indriani, F.; Nugrahadi, D.T. Question Answering System Berbasis Artificial Intelligence Markup Language Sebagai Media Informasi. KLIK—Kumpul. J. Ilmu Komput. 2016, 3, 48–60. [Google Scholar] [CrossRef]

- Abdul-Kader, S.A.; Woods, J. Survey on Chatbot Design Techniques in Speech Conversation Systems. Int. J. Adv. Comput. Sci. Appl. 2015, 6, 72–80. [Google Scholar] [CrossRef]

- Shevat, A. Designing Bots: Creating Conversational Experiences; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2017; ISBN 978-1-4919-7484-1. [Google Scholar]

- Gupta, A.; Hathwar, D.; Vijayakumar, A. Introduction to AI Chatbots. Int. J. Eng. Res. Technol. 2020, 9, 255–258. [Google Scholar]

- Trofymenko, O.; Prokop, Y.; Zadereyko, O.; Loginova, N. Classification of Chatbots. Syst. Technol. 2022, 2, 147–159. [Google Scholar] [CrossRef]

- Hussain, S.; Ameri Sianaki, O.; Ababneh, N. A Survey on Conversational Agents/Chatbots Classification and Design Techniques. In Proceedings of the Web, Artificial Intelligence and Network Applications; Barolli, L., Takizawa, M., Xhafa, F., Enokido, T., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 946–956. [Google Scholar]

- Kuhail, M.A.; Alturki, N.; Alramlawi, S.; Alhejori, K. Interacting with Educational Chatbots: A Systematic Review. Educ. Inf. Technol. 2023, 28, 973–1018. [Google Scholar] [CrossRef]

- Labadze, L.; Grigolia, M.; Machaidze, L. Role of AI Chatbots in Education: Systematic Literature Review. Int. J. Educ. Technol. High. Educ. 2023, 20, 56. [Google Scholar] [CrossRef]

- Chen, Z.; Liu, B. Continuous Knowledge Learning in Chatbots. In Lifelong Machine Learning; Chen, Z., Liu, B., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 131–138. ISBN 978-3-031-01581-6. [Google Scholar]

- Biesialska, M.; Biesialska, K.; Costa-jussà, M.R. Continual Lifelong Learning in Natural Language Processing: A Survey. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 6523–6541. [Google Scholar]

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A Survey of Large Language Models. arXiv 2023, arXiv:2303.18223. [Google Scholar]

- Hoffmann, J.; Borgeaud, S.; Mensch, A.; Buchatskaya, E.; Cai, T.; Rutherford, E.; Casas, D.d.L.; Hendricks, L.A.; Welbl, J.; Clark, A.; et al. Training Compute-Optimal Large Language Models. arXiv 2022, arXiv:2203.15556. [Google Scholar]

- Auma, D. Using Transfer Learning for Chatbots in Python. Medium. 2023. Available online: https://medium.com/@dianaauma2/using-transfer-learning-for-chatbots-in-python-93e937936ad8 (accessed on 13 May 2024).

- Kulkarni, A.; Shivananda, A.; Kulkarni, A. Building a Chatbot Using Transfer Learning. In Natural Language Processing Projects: Build Next-Generation NLP Applications Using AI Techniques; Kulkarni, A., Shivananda, A., Kulkarni, A., Eds.; Apress: Berkeley, CA, USA, 2022; pp. 239–255. ISBN 978-1-4842-7386-9. [Google Scholar]

- Pal, A.; Minervini, P.; Motzfeldt, A.G.; Gema, A.P.; Alex, B. Openlifescienceai/Open_medical_llm_leaderboard. 2024. Available online: https://huggingface.co/blog/leaderboard-medicalllm (accessed on 13 May 2024).

- Jahan, I.; Laskar, M.T.R.; Peng, C.; Huang, J.X. A Comprehensive Evaluation of Large Language Models on Benchmark Biomedical Text Processing Tasks. Comput. Biol. Med. 2024, 171, 108189. [Google Scholar] [CrossRef]

- Wang, B.; Chen, W.; Pei, H.; Xie, C.; Kang, M.; Zhang, C.; Xu, C.; Xiong, Z.; Dutta, R.; Schaeffer, R.; et al. DecodingTrust: A Comprehensive Assessment of Trustworthiness in GPT Models. Adv. Neural Inf. Process. Syst. 2023, 36, 31232–31339. [Google Scholar]

- Xu, X.; Yao, B.; Dong, Y.; Gabriel, S.; Yu, H.; Hendler, J.; Ghassemi, M.; Dey, A.K.; Wang, D. Mental-LLM: Leveraging Large Language Models for Mental Health Prediction via Online Text Data. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2024, 8, 1–32. [Google Scholar] [CrossRef]

- Jin, H.; Chen, S.; Wu, M.; Zhu, K.Q. PsyEval: A Comprehensive Large Language Model Evaluation Benchmark for Mental Health. arXiv 2023, arXiv:2311.09189. [Google Scholar]

- Qi, H.; Zhao, Q.; Song, C.; Zhai, W.; Luo, D.; Liu, S.; Yu, Y.J.; Wang, F.; Zou, H.; Yang, B.X.; et al. Supervised Learning and Large Language Model Benchmarks on Mental Health Datasets: Cognitive Distortions and Suicidal Risks in Chinese Social Media. Res. Sq. 2023. [Google Scholar] [CrossRef]

- Obradovich, N.; Khalsa, S.S.; Khan, W.U.; Suh, J.; Perlis, R.H.; Ajilore, O.; Paulus, M.P. Opportunities and Risks of Large Language Models in Psychiatry. NPP—Digit. Psychiatry Neurosci. 2024, 2, 8. [Google Scholar] [CrossRef]

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473. [Google Scholar] [CrossRef]

- Sterne, J.A.C.; Savović, J.; Page, M.J.; Elbers, R.G.; Blencowe, N.S.; Boutron, I.; Cates, C.J.; Cheng, H.-Y.; Corbett, M.S.; Eldridge, S.M.; et al. RoB 2: A Revised Tool for Assessing Risk of Bias in Randomised Trials. BMJ 2019, 366, l4898. [Google Scholar] [CrossRef]

- Higgins, J.P.T.; Morgan, R.L.; Rooney, A.A.; Taylor, K.W.; Thayer, K.A.; Silva, R.A.; Lemeris, C.; Akl, E.A.; Bateson, T.F.; Berkman, N.D.; et al. A Tool to Assess Risk of Bias in Non-Randomized Follow-up Studies of Exposure Effects (ROBINS-E). Environ. Int. 2024, 186, 108602. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef]

- Haddaway, N.R.; Page, M.J.; Pritchard, C.C.; McGuinness, L.A. PRISMA2020: An R Package and Shiny App for Producing PRISMA 2020-compliant Flow Diagrams, with Interactivity for Optimised Digital Transparency and Open Synthesis. Campbell Syst. Rev. 2022, 18, e1230. [Google Scholar] [CrossRef]

- He, Y.; Yang, L.; Zhu, X.; Wu, B.; Zhang, S.; Qian, C.; Tian, T. Mental Health Chatbot for Young Adults with Depressive Symptoms during the COVID-19 Pandemic: Single-Blind, Three-Arm Randomized Controlled Trial. J. Med. Internet Res. 2022, 24, e40719. [Google Scholar] [CrossRef]

- Peuters, C.; Maenhout, L.; Cardon, G.; De Paepe, A.; DeSmet, A.; Lauwerier, E.; Leta, K.; Crombez, G. A Mobile Healthy Lifestyle Intervention to Promote Mental Health in Adolescence: A Mixed-Methods Evaluation. BMC Public Health 2024, 24, 44. [Google Scholar] [CrossRef]

- Yasukawa, S.; Tanaka, T.; Yamane, K.; Kano, R.; Sakata, M.; Noma, H.; Furukawa, T.A.; Kishimoto, T. A Chatbot to Improve Adherence to Internet-Based Cognitive–Behavioural Therapy among Workers with Subthreshold Depression: A Randomised Controlled Trial. BMJ Ment. Health 2024, 27, e300881. [Google Scholar] [CrossRef]

- Ogawa, M.; Oyama, G.; Morito, K.; Kobayashi, M.; Yamada, Y.; Shinkawa, K.; Kamo, H.; Hatano, T.; Hattori, N. Can AI Make People Happy? The Effect of AI-Based Chatbot on Smile and Speech in Parkinson’s Disease. Park. Relat. Disord. 2022, 99, 43–46. [Google Scholar] [CrossRef]

- Ulrich, S.; Gantenbein, A.R.; Zuber, V.; Von Wyl, A.; Kowatsch, T.; Künzli, H. Development and Evaluation of a Smartphone-Based Chatbot Coach to Facilitate a Balanced Lifestyle in Individuals with Headaches (BalanceUP App): Randomized Controlled Trial. J. Med. Internet Res. 2024, 26, e50132. [Google Scholar] [CrossRef]

- Vereschagin, M.; Wang, A.Y.; Richardson, C.G.; Xie, H.; Munthali, R.J.; Hudec, K.L.; Leung, C.; Wojcik, K.D.; Munro, L.; Halli, P.; et al. Effectiveness of the Minder Mobile Mental Health and Substance Use Intervention for University Students: Randomized Controlled Trial. J. Med. Internet Res. 2024, 26, e54287. [Google Scholar] [CrossRef]

- Prochaska, J.J.; Vogel, E.A.; Chieng, A.; Kendra, M.; Baiocchi, M.; Pajarito, S.; Robinson, A. A Therapeutic Relational Agent for Reducing Problematic Substance Use (Woebot): Development and Usability Study. J. Med. Internet Res. 2021, 23, e24850. [Google Scholar] [CrossRef]

- So, R.; Emura, N.; Okazaki, K.; Takeda, S.; Sunami, T.; Kitagawa, K.; Takebayashi, Y.; Furukawa, T.A. Guided versus Unguided Chatbot-Delivered Cognitive Behavioral Intervention for Individuals with Moderate-Risk and Problem Gambling: A Randomized Controlled Trial (GAMBOT2 Study). Addict. Behav. 2024, 149, 107889. [Google Scholar] [CrossRef]

- Olano-Espinosa, E.; Avila-Tomas, J.F.; Minue-Lorenzo, C.; Matilla-Pardo, B.; Serrano Serrano, M.E.; Martinez-Suberviola, F.J.; Gil-Conesa, M.; Del Cura-González, I.; Dejal@ Group. Effectiveness of a Conversational Chatbot (Dejal@bot) for the Adult Population to Quit Smoking: Pragmatic, Multicenter, Controlled, Randomized Clinical Trial in Primary Care. JMIR Mhealth Uhealth 2022, 10, e34273. [Google Scholar] [CrossRef]

- Fitzsimmons-Craft, E.E.; Chan, W.W.; Smith, A.C.; Firebaugh, M.; Fowler, L.A.; Topooco, N.; DePietro, B.; Wilfley, D.E.; Taylor, C.B.; Jacobson, N.C. Effectiveness of a Chatbot for Eating Disorders Prevention: A Randomized Clinical Trial. Int. J. Eat. Disord. 2022, 55, 343–353. [Google Scholar] [CrossRef]

- Cheah, M.H.; Gan, Y.N.; Altice, F.L.; Wickersham, J.A.; Shrestha, R.; Salleh, N.A.M.; Ng, K.S.; Azwa, I.; Balakrishnan, V.; Kamarulzaman, A.; et al. Testing the Feasibility and Acceptability of Using an Artificial Intelligence Chatbot to Promote HIV Testing and Pre-Exposure Prophylaxis in Malaysia: Mixed Methods Study. JMIR Hum. Factors 2024, 11, e52055. [Google Scholar] [CrossRef]

- Greer, S.; Ramo, D.; Chang, Y.-J.; Fu, M.; Moskowitz, J.; Haritatos, J. Use of the Chatbot “Vivibot” to Deliver Positive Psychology Skills and Promote Well-Being among Young People after Cancer Treatment: Randomized Controlled Feasibility Trial. JMIR Mhealth Uhealth 2019, 7, e15018. [Google Scholar] [CrossRef]

- Oh, J.; Jang, S.; Kim, H.; Kim, J.-J. Efficacy of Mobile App-Based Interactive Cognitive Behavioral Therapy Using a Chatbot for Panic Disorder. Int. J. Med. Inform. 2020, 140, 104171. [Google Scholar] [CrossRef] [PubMed]

- Bennion, M.R.; Hardy, G.E.; Moore, R.K.; Kellett, S.; Millings, A. Usability, Acceptability, and Effectiveness of Web-Based Conversational Agents to Facilitate Problem Solving in Older Adults: Controlled Study. J. Med. Internet Res. 2020, 22, e16794. [Google Scholar] [CrossRef] [PubMed]

- Thunström, A.O.; Carlsen, H.K.; Ali, L.; Larson, T.; Hellström, A.; Steingrimsson, S. Usability Comparison among Healthy Participants of an Anthropomorphic Digital Human and a Text-Based Chatbot as a Responder to Questions on Mental Health: Randomized Controlled Trial. JMIR Hum. Factors 2024, 11, e54581. [Google Scholar] [CrossRef]

- Lisetti, C. 10 Advantages of Using Avatars in Patient-Centered Computer-Based Interventions for Behavior Change. SIGHIT Rec. 2012, 2, 28. [Google Scholar] [CrossRef]

- Han, H.J.; Mendu, S.; Jaworski, B.K.; Owen, J.E.; Abdullah, S. Preliminary Evaluation of a Conversational Agent to Support Self-Management of Individuals Living with Posttraumatic Stress Disorder: Interview Study with Clinical Experts. JMIR Form. Res. 2023, 7, e45894. [Google Scholar] [CrossRef]

- Hudlicka, E. Chapter 4—Virtual Affective Agents and Therapeutic Games. In Artificial Intelligence in Behavioral and Mental Health Care; Luxton, D.D., Ed.; Academic Press: San Diego, CA, USA, 2016; pp. 81–115. ISBN 978-0-12-420248-1. [Google Scholar]

- Richards, D.; Vythilingam, R.; Formosa, P. A Principlist-Based Study of the Ethical Design and Acceptability of Artificial Social Agents. Int. J. Hum.-Comput. Stud. 2023, 172, 102980. [Google Scholar] [CrossRef]

- Casu, M.; Guarnera, L.; Caponnetto, P.; Battiato, S. GenAI Mirage: The Impostor Bias and the Deepfake Detection Challenge in the Era of Artificial Illusions. Forensic Sci. Int. Digit. Investig. 2024, 50, 301795. [Google Scholar] [CrossRef]

| Name of the Paper | Year | Authors | Study Design | Sample | Main Outcome |
|---|---|---|---|---|---|
| Mental Health Chatbot for Young Adults With Depressive Symptoms During the COVID-19 Pandemic: Single-Blind, Three-Arm Randomized Controlled Trial | 2022 | He et al. [58] | Randomized controlled trial | 148 | The AI chatbot XiaoE significantly reduced depressive symptoms in college students compared to control groups, with a moderate effect size post-intervention and a small effect size at 1-month follow-up. |
| A mobile healthy lifestyle intervention to promote mental health in adolescence: a mixed-methods evaluation | 2024 | Peuters et al. [59] | Cluster-controlled trial with process evaluation interviews | 279 | Positive effects on physical activity, sleep quality, and positive moods. Engagement was a challenge, but users highlighted the importance of gamification, self-regulation techniques, and personalized information from the chatbot. |
| A chatbot to improve adherence to internet-based cognitive-behavioural therapy among workers with subthreshold depression: a randomized controlled trial | 2024 | Yasukawa et al. [60] | Randomized controlled trial | 142 | The addition of a chatbot sending personalized messages resulted in significantly higher iCBT completion rates compared to the control group, but both groups showed similar improvements in depression and anxiety symptoms. |
| Can AI make people happy? The effect of AI-based chatbot on smile and speech in Parkinson’s disease | 2022 | Ogawa et al. [61] | Open-label randomized study | 20 | The chatbot group exhibited increased smile parameters and reduced filler words in speech, suggesting improved facial expressivity and fluency, although clinical rating scales did not show significant differences. |
| Development and Evaluation of a Smartphone-Based Chatbot Coach to Facilitate a Balanced Lifestyle in Individuals With Headaches (BalanceUP App): Randomized Controlled Trial | 2024 | Ulrich et al. [62] | Randomized controlled trial | 198 | The BalanceUP app, utilizing a chat-based interface with predefined and free-text input options, significantly improved mental well-being for individuals with migraines. |
| Effectiveness of the Minder Mobile Mental Health and Substance Use Intervention for University Students: Randomized Controlled Trial | 2024 | Vereschagin et al. [63] | Randomized controlled trial | 1210 | The Minder app, integrating an AI chatbot delivering cognitive–behavioral therapy, reduced anxiety and depressive symptoms, improved mental well-being, and decreased the frequency of cannabis use and alcohol consumption among university students. |
| A Therapeutic Relational Agent for Reducing Problematic Substance Use (Woebot): Development and Usability Study | 2021 | Prochaska et al. [64] | 8-week usability study | 51 | The automated therapeutic intervention W-SUDs led to significant improvements in self-reported substance use, cravings, mental health outcomes, and confidence to resist urges. |
| Guided versus unguided chatbot-delivered cognitive behavioral intervention for individuals with moderate-risk and problem gambling: A randomized controlled trial (GAMBOT2 study) | 2024 | So et al. [65] | Randomized controlled trial | 97 | Both groups (guided and unguided GAMBOT2 intervention) showed significant within-group improvements in gambling outcomes, with no significant between-group differences. |
| Effectiveness of a Conversational Chatbot (Dejal@bot) for the Adult Population to Quit Smoking: Pragmatic, Multicenter, Controlled, Randomized Clinical Trial in Primary Care | 2022 | Olano-Espinosa et al. [66] | Pragmatic, multicenter randomized controlled trial | 460 | The Dejal@bot chatbot intervention had a significantly higher 6-month continuous smoking abstinence rate (26.0%) compared to usual care (18.8%). |
| Effectiveness of a chatbot for eating disorders prevention: A randomized clinical trial | 2022 | Fitzsimmons-Craft et al. [67] | Randomized controlled trial | 700 | The Tessa chatbot intervention resulted in significantly greater reductions in weight and shape concerns compared to the waitlist control group, with reduced odds of developing an eating disorder. |
| Testing the Feasibility and Acceptability of Using an Artificial Intelligence Chatbot to Promote HIV Testing and Pre-Exposure Prophylaxis in Malaysia: Mixed Methods Study | 2024 | Cheah et al. [68] | Beta testing mixed methods study | 14 | The chatbot was found to be feasible and acceptable, with participants rating it highly on quality, satisfaction, intention to continue using, and willingness to refer it to others. |
| Use of the Chatbot “Vivibot” to Deliver Positive Psychology Skills and Promote Well-Being Among Young People After Cancer Treatment: Randomized Controlled Feasibility Trial | 2019 | Greer et al. [69] | Pilot randomized controlled trial | 45 | The Vivibot chatbot group exhibited a trend toward greater reduction in anxiety symptoms compared to the control group, along with an increase in daily positive emotions among young cancer survivors. |
| Efficacy of mobile app-based interactive cognitive behavioral therapy using a chatbot for panic disorder | 2020 | Oh et al. [70] | Randomized controlled trial | 41 | The mobile app chatbot group showed significantly greater reductions in panic disorder severity measured by the Panic Disorder Severity Scale compared to the book group. |
| Usability, Acceptability, and Effectiveness of Web-Based Conversational Agents to Facilitate Problem Solving in Older Adults: Controlled Study | 2020 | Bennion et al. [71] | Study comparing two AI chatbots (MYLO and ELIZA) | 112 | Both chatbots enabled significant reductions in problem distress and depression/anxiety/stress, with MYLO showing greater reductions in problem distress at follow-up compared to ELIZA. |
| Usability Comparison Among Healthy Participants of an Anthropomorphic Digital Human and a Text-Based Chatbot as a Responder to Questions on Mental Health: Randomized Controlled Trial | 2024 | Thunström et al. [72] | Randomized controlled trial | 45 | The text-only chatbot interface had higher usability scores compared to the digital human interface. Emotional responses varied, with the digital human group reporting higher nervousness. |
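
To put the two abstinence rates reported for Dejal@bot (26.0% versus 18.8%) into more familiar effect measures, the following sketch computes the absolute difference, the rate ratio, and the number needed to treat. The function names are hypothetical, and the calculation uses only the two percentages from the table; per-arm sample sizes and confidence intervals are not given there, so the results say nothing about precision.

```python
import math


def risk_difference(p_intervention: float, p_control: float) -> float:
    """Absolute difference between the two event proportions."""
    return p_intervention - p_control


def rate_ratio(p_intervention: float, p_control: float) -> float:
    """Ratio of the intervention proportion to the control proportion."""
    return p_intervention / p_control


def number_needed_to_treat(p_intervention: float, p_control: float) -> int:
    """Reciprocal of the absolute difference, rounded up to a whole person."""
    difference = risk_difference(p_intervention, p_control)
    if difference <= 0:
        raise ValueError("intervention proportion must exceed control proportion")
    return math.ceil(1 / difference)


# Proportions taken from the Dejal@bot row of the table above.
abstinence_chatbot = 0.260
abstinence_usual_care = 0.188
```

With these inputs the absolute difference is about 7.2 percentage points, the rate ratio is about 1.38, and roughly 14 people would need to use the chatbot for one additional person to remain abstinent at 6 months.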

| Application Area | Studies | Strengths | Flaws |
|---|---|---|---|
| Mental Health Support During COVID-19 | He et al. [58]; Peuters et al. [59] | Accessible, scalable interventions during the pandemic | Challenges with engagement, technical glitches |
| Interventions for Specific Health Conditions | Yasukawa et al. [60]; Ogawa et al. [61]; Ulrich et al. [62] | Personalized support, remote monitoring potential | Limited impact on clinical outcomes, usability issues |
| Addressing Substance Use and Addiction | Vereschagin et al. [63]; Prochaska et al. [64]; So et al. [65]; Olano-Espinosa et al. [66] | Accessible, scalable interventions, positive outcomes | Small effect sizes, need for more intensive therapist involvement |
| Preventive Care and Well-being | Fitzsimmons-Craft et al. [67]; Cheah et al. [68]; Greer et al. [69] | Promising for prevention, well-being promotion | Engagement challenges, need for larger trials |
| Panic Disorder Management | Oh et al. [70] | Potential for promoting healthy behaviors, privacy protection | Usability issues, need for cultural tailoring |
| Health Promotion | Bennion et al. [71] | Accessible intervention, improvements in panic symptoms | Technical challenges, lower usability ratings |
| Usability and Engagement | Thunström et al. [72] | Evaluation of different interface designs | Limited to healthy participants, varied emotional responses |

| Chatbot Name | Authors | AI Chatbot Technology | Chatbot Protocol | Usability | Engagement |
|---|---|---|---|---|---|
| XiaoE | He et al. [58] | NLP; DL; ML; Rule-Based System. | Standalone | No Statistically Significant Usability Results | High |
| #LIFEGOALS | Peuters et al. [59] | NLP; ML. | Multi-Component mHealth Intervention | N/A | Mid |
| EPO | Yasukawa et al. [60] | NLP; ML; Rule-Based System; Personalization Techniques. | Chatbot and iCBT | Mid Usability | High |
| N/A | Ogawa et al. [61] | NLP; ML (Implied from Functionality description). | Chatbot and neurologist consultations | N/A | N/A |
| BalanceUP | Ulrich et al. [62] | NLP; Rule-Based System; ML; Free-Text Input. | Standalone | N/A | High |
| Minder | Vereschagin et al. [63] | Rule-Based System. | Multi-Component mHealth Intervention | N/A | Low |
| WoeBot | Prochaska et al. [64] | NLP; ML (Recurrent Neural Networks or Transformer Models); Sentiment Analysis; Emotion Detection; User Feedback Loops. | Standalone | High Usability | High |
| GAMBOT2 | So et al. [65] | NLP; ML; Reinforcement Learning. | Chatbot and Therapist Guidance | N/A | N/A |
| Dejal@bot | Olano-Espinosa et al. [66] | Intelligent Dictionaries; Expert System; Bayesian System (Probabilistic Approach). | Standalone | N/A | N/A |
| Tessa | Fitzsimmons-Craft et al. [67] | Rule-Based System (Algorithm-Based). | Standalone | N/A | Mid |
| N/A | Cheah et al. [68] | Rule-Based System (ELIZA); NLP (MYLO); ML (MYLO). | Chatbot and HIV Prevention and Testing Services | High Usability | N/A |
| Vivibot | Greer et al. [69] | Rule-Based System (Decision Tree Structure). | Standalone | High Usability | High |
| N/A | Oh et al. [70] | NLP; ML (Supervised or Reinforcement Learning). | Standalone | Low Usability | N/A |
| MYLO vs. ELIZA | Bennion et al. [71] | NLP; ML; Rule-Based System. | Standalone | MYLO showed better usability rates than ELIZA. | MYLO showed better engagement rates than ELIZA. |
| BETSY | Thunström et al. [72] | NLP (Dialogflow); ML (Supervised Learning Inferred); Avatar and Voice Interaction; EEG Data Analysis. | Standalone | Higher usability scores for the text-only chatbot compared to the digital human interface. | Human features elicit more social engagement. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Casu, M.; Triscari, S.; Battiato, S.; Guarnera, L.; Caponnetto, P. AI Chatbots for Mental Health: A Scoping Review of Effectiveness, Feasibility, and Applications. Appl. Sci. 2024, 14, 5889. https://doi.org/10.3390/app14135889
