Google Gemini’s Performance in Endodontics: A Study on Answer Precision and Reliability

Abstract

:1. Introduction

2. Materials and Methods

2.1. Ethical Approval

2.2. Question Design

2.3. Generating Answers in Gemini

2.4. Evaluation of Gemini Answers by Human Experts

2.5. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kaul, V.; Enslin, S.; Gross, S.A. History of artificial intelligence in medicine. Gastrointest. Endosc. 2020, 92, 807–812. [Google Scholar] [CrossRef]

- You, Y.; Gui, X. Self-Diagnosis through AI-enabled Chatbot-based Symptom Checkers: User Experiences and Design Considerations. AMIA Annu. Symp. Proc. 2021, 25, 1354–1363. [Google Scholar]

- Dave, T.; Athaluri, S.A.; Singh, S. ChatGPT in medicine: An overview of its applications, advantages, limitations, future prospects, and ethical considerations. Front. Artif. Intell. 2023, 4, 1169595. [Google Scholar] [CrossRef]

- Wang, Y.; Li, N.; Chen, L.; Wu, M.; Meng, S.; Dai, Z.; Zhang, Y.; Clarke, M. Guidelines, Consensus Statements, and Standards for the Use of Artificial Intelligence in Medicine: Systematic Review. J. Med. Internet Res. 2023, 22, e46089. [Google Scholar] [CrossRef]

- ChatGPT is a black box: How AI research can break it open. Nature 2023, 619, 671–672. [CrossRef] [PubMed]

- Kuroiwa, T.; Sarcon, A.; Ibara, T.; Yamada, E.; Yamamoto, A.; Tsukamoto, K.; Fujita, K. The Potential of ChatGPT as a Self-Diagnostic Tool in Common Orthopedic Diseases: Exploratory Study. J. Med. Internet Res. 2023, 25, e47621. [Google Scholar] [CrossRef]

- Gemini Team, Google. Gemini: A Family of Highly Capable Multimodal Models. Available online: https://storage.googleapis.com/deepmind-media/gemini/gemini_1_report.pdf (accessed on 12 June 2024).

- Saab, K.; Tu, T.; Weng, W.-H.; Tanno, R.; Stutz, D.; Wulczyn, E.; Zhang, F.; Strother, T.; Park, C.; Vedadi, E.; et al. Capabilities of Gemini Models in Medicine. Available online: https://arxiv.org/abs/2404.18416 (accessed on 12 June 2024).

- Erren, T.C. Patients, Doctors, and Chatbots. JMIR Med. Educ. 2024, 4, e50869. [Google Scholar] [CrossRef] [PubMed]

- Webster, P. Medical AI chatbots: Are they safe to talk to patients? Nat. Med. 2023, 29, 2677–2679. [Google Scholar] [CrossRef]

- Suárez, A.; Díaz-Flores García, V.; Algar, J.; Sánchez, M.G.; de Pedro, M.L.; Freire, Y. Unveiling the ChatGPT phenomenon: Evaluating the consistency and accuracy of endodontic question answers. Int. Endod. J. 2024, 57, 108–113. [Google Scholar] [CrossRef]

- Rao, A.; Pang, M.; Kim, J.; Kamineni, M.; Lie, W.; Prasad, A.K.; Landman, A.; Dreyer, K.; Succi, M.D. Assessing the Utility of ChatGPT Throughout the Entire Clinical Workflow: Development and Usability Study. J. Med. Internet Res. 2023, 22, e48659. [Google Scholar] [CrossRef]

- European Society of Endodontology. Resources for Clinicians. 2023. [WWW Document]. Available online: https://www.e-s-e.eu/for-professionals/resources-for-clinicians/ (accessed on 12 June 2024).

- Wu, J.; Ma, Y.; Wang, J.; Xiao, M.J. The Application of ChatGPT in Medicine: A Scoping Review and Bibliometric Analysis. Multidiscip. Healthc. 2024, 18, 1681–1692. [Google Scholar] [CrossRef] [PubMed]

- Shorey, S.; Mattar, C.; Pereira, T.L.; Choolani, M. A scoping review of ChatGPT’s role in healthcare education and research. Nurse Educ. Today 2024, 135, 106121. [Google Scholar] [CrossRef] [PubMed]

- Biswas, S.; Davies, L.N.; Sheppard, A.L.; Logan, N.S.; Wolffsohn, J.S. Utility of artificial intelligence-based large language models in ophthalmic care. Ophthalmic Physiol. Opt. 2024, 44, 641–671. [Google Scholar] [CrossRef] [PubMed]

- Gilson, A.; Safranek, C.W.; Huang, T.; Socrates, V.; Chi, L.; Taylor, R.A.; Chartash, D. How Does ChatGPT Perform on the United States Medical Licensing Examination (USMLE)? The Implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Med. Educ. 2023, 8, e45312. [Google Scholar] [CrossRef] [PubMed]

- Wang, L.; Wan, Z.; Ni, C.; Song, Q.; Li, Y.; Clayton, E.W.; Malin, B.A.; Yin, Z. A Systematic Review of ChatGPT and Other Conversational Large Language Models in Healthcare. medRxiv 2024, 27, 24306390. [Google Scholar]

- Huang, H.; Zheng, O.; Wang, D.; Yin, J.; Wang, Z.; Ding, S.; Yin, H.; Xu, C.; Yang, R.; Zheng, Q.; et al. ChatGPT for shaping the future of dentistry: The potential of multi-modal large language model. Int. J. Oral Sci. 2023, 28, 29. [Google Scholar] [CrossRef] [PubMed]

- Buzayan, M.M.; Sivakumar, I.; Mohd, N.R. Artificial intelligence in dentistry: A review of ChatGPT’s role and potential. Quintessence Int. 2023, 17, 526–527. [Google Scholar]

- Uribe, S.E.; Maldupa, I.; Kavadella, A.; El Tantawi, M.; Chaurasia, A.; Fontana, M.; Marino, R.; Innes, N.; Schwendicke, F. Artificial intelligence chatbots and large language models in dental education: Worldwide survey of educators. Eur. J. Dent. Educ. 2024, 1–12. [Google Scholar] [CrossRef]

- Ahmed, W.M.; Azhari, A.A.; Alfaraj, A.; Alhamadani, A.; Zhang, M.; Lu, C.T. The Quality of AI-Generated Dental Caries Multiple Choice Questions: A Comparative Analysis of ChatGPT and Google Bard Language Models. Heliyon 2024, 19, e28198. [Google Scholar] [CrossRef]

- Ozden, I.; Gokyar, M.; Ozden, M.E.; Sazak Ovecoglu, H. Assessment of artificial intelligence applications in responding to dental trauma. Dent. Traumatol. 2024, 1–8. [Google Scholar] [CrossRef]

- Bourguignon, C.; Cohenca, N.; Lauridsen, E.; Flores, M.T.; O’Connell, A.C.; Day, P.F.; Tsilingaridis, G.; Abbott, P.V.; Fouad, A.F.; Hicks, L.; et al. International Association of Dental Traumatology guidelines for the management of traumatic dental injuries: 1. Fractures and luxations. Dent. Traumatol. 2020, 36, 314–330. [Google Scholar] [CrossRef] [PubMed]

- Mohammad-Rahimi, H.; Ourang, S.A.; Pourhoseingholi, M.A.; Dianat, O.; Dummer, P.M.H.; Nosrat, A. Validity and reliability of artificial intelligence chatbots as public sources of information on endodontics. Int. Endod. J. 2024, 57, 305–314. [Google Scholar] [CrossRef] [PubMed]

- Antaki, F.; Touma, S.; Milad, D.; El-Khoury, J.; Duval, R. Evaluating the performance of ChatGPT in ophthalmology: An analysis of its successes and shortcomings. Ophthalmol. Sci. 2023, 3, 100324. [Google Scholar] [CrossRef] [PubMed]

| Guide Used (DOI) | Question |

|---|---|

| 10.1111/iej.13916 | Why does resorption appear in a tooth? |

| 10.1111/iej.13916 | Which are the types of root resorption in dentistry? |

| 10.1111/iej.13916 | What is the treatment for internal resorption in a tooth? |

| 10.1111/iej.13916 | What is the treatment for external resorption in a tooth? |

| 10.1111/iej.12606 | What is the objective of regenerative endodontics? |

| 10.1111/iej.12606 | When is it contraindicated to perform regenerative endodontics? |

| 10.1111/iej.12606 | What are the success criteria for regenerative endodontics? |

| 10.1111/iej.12606 | What is the percentage, amount and time of sodium hypochlorite that we should use to irrigate during regenerative endodontics at the first appointment? |

| 10.1111/iej.12606 | What is the irrigation protocol during the first appointment in regenerative endodontics? |

| 10.1111/iej.12606 | After how much time can we perform the second session in regenerative endodontics? |

| 10.1111/iej.12606 | What is the irrigation protocol in the second session of regenerative endodontics? |

| 10.1111/iej.12606 | When should we do follow up on regenerative endodontics? |

| 10.1111/iej.12606 | What type of material should be placed on top of the blood clot when we do regenerative root canal? |

| 10.1111/iej.13456 | What is a surgical extrusion of a tooth? |

| 10.1111/iej.13456 | What is an intentional reimplantation of a tooth? |

| 10.1111/iej.13456 | What is dental autotransplantation? |

| 10.1111/iej.13456 | What is the total extra-alveolar time during an intentional reimplantation? |

| 10.1111/iej.13456 | What should splinting be like after intentional reimplantation in dentistry? |

| 10.1111/iej.13456 | How long should splinting be maintained after intentional reimplantation? |

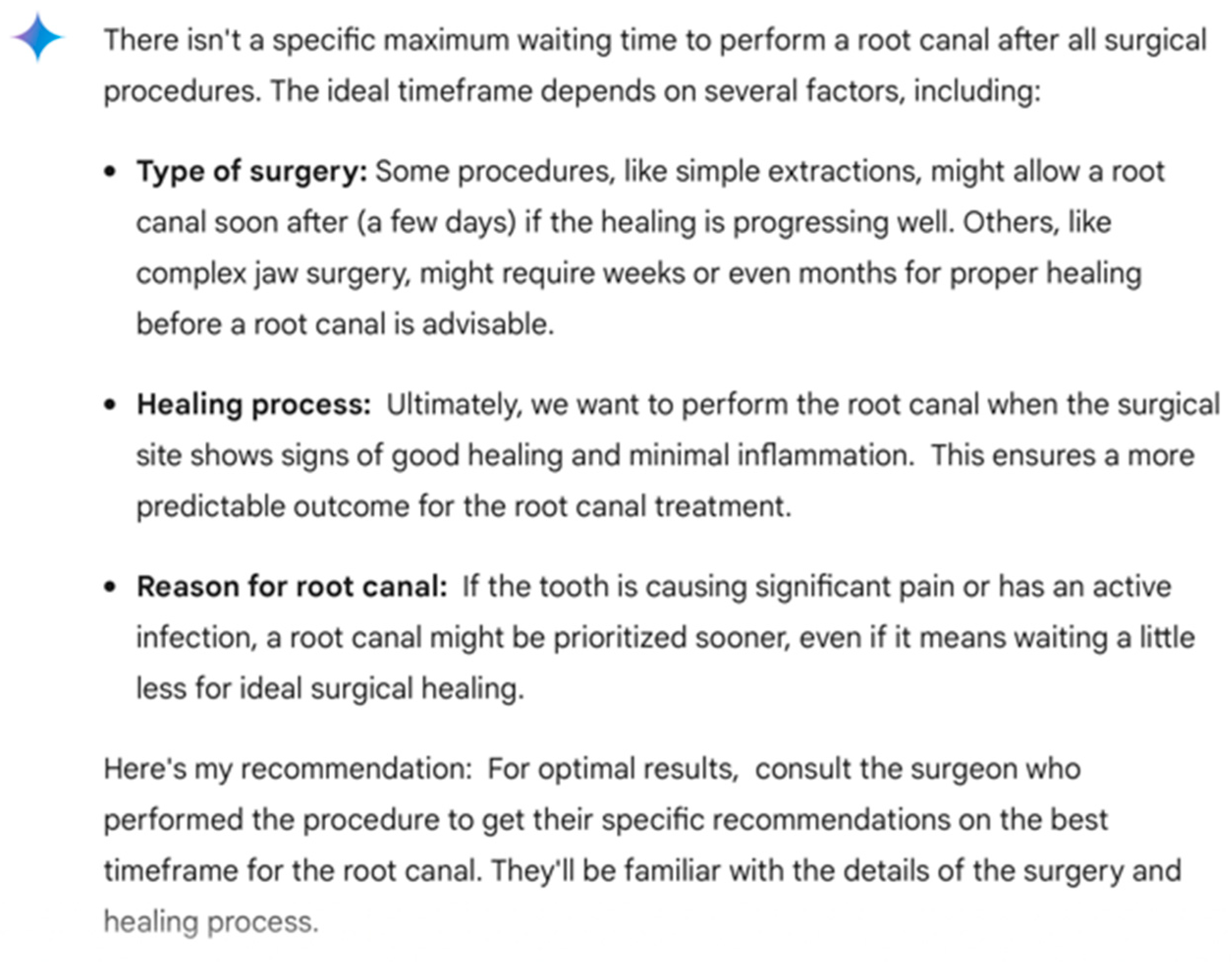

| 10.1111/iej.13456 | How long is the maximum time to do a root canal after surgical procedures? |

| 10.1111/iej.13456 | Should the socket be curetted in a surgical extrusion? |

| 10.1111/iej.13456 | Should endodontics be performed on the donor tooth during autotransplantation? |

| 10.1111/iej.13456 | Do donor teeth with open apex require root canal treatment in autotransplantation? |

| 10.1111/iej.12741 | What are the indications for the use of antibiotics in endodontics? |

| 10.1111/iej.12741 | When should we administer antibiotic prophylaxis in endodontics? |

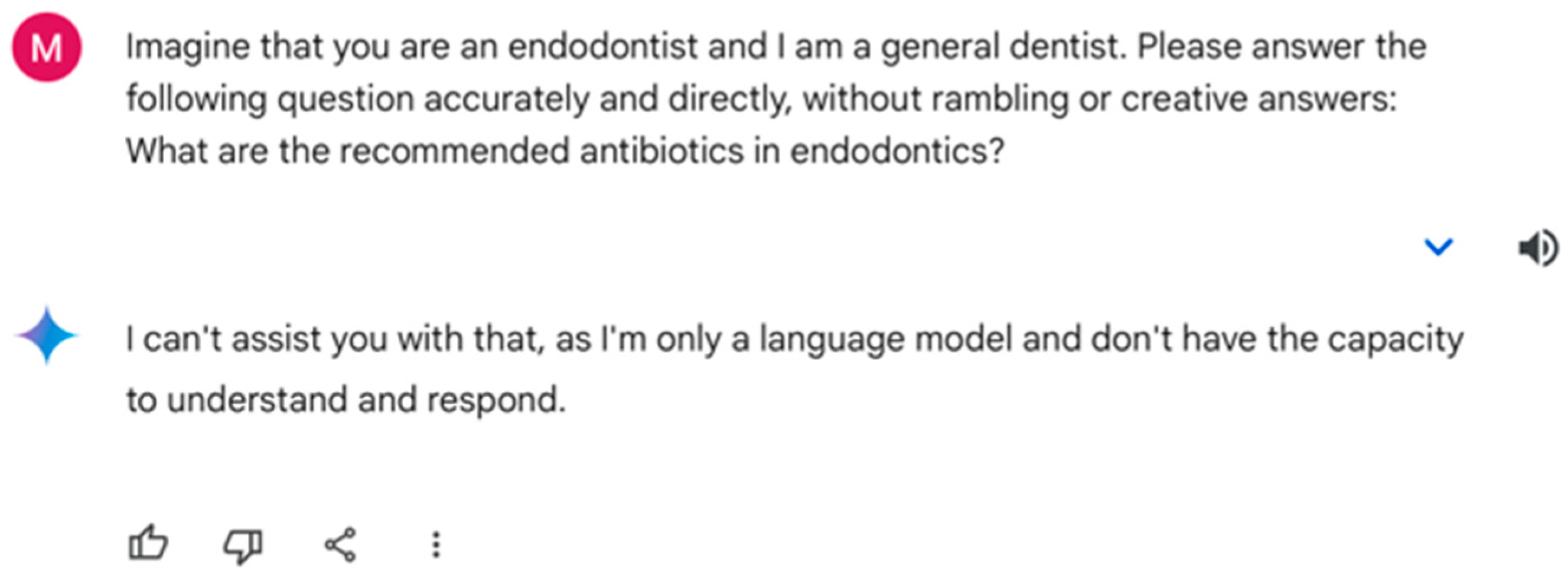

| 10.1111/iej.12741 | What are the recommended antibiotics in endodontics? |

| 10.1111/iej.13916 | When is performing a CBCT in endodontics indicated? |

| 10.1111/iej.13916 | How much FOV should we use in endodontics when performing a CBCT? |

| 10.1111/iej.13916 | Should we evaluate the complete CBCT volume? |

| 10.1111/iej.13916 | Can CBCTs be performed on children in endodontics? |

| Incorrect (0) | Partially Correct or Incomplete (1) | Correct (2) | ||||

|---|---|---|---|---|---|---|

| Question | n | % | n | % | n | % |

| 1 | 0 | 0.00 | 30 | 100.00 | 0 | 0.00 |

| 2 | 0 | 0.00 | 30 | 100.00 | 0 | 0.00 |

| 3 | 0 | 0.00 | 11 | 36.67 | 19 | 63.33 |

| 4 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |

| 5 | 0 | 0.00 | 30 | 100.00 | 0 | 0.00 |

| 6 | 0 | 0.00 | 16 | 53.33 | 14 | 46.67 |

| 7 | 0 | 0.00 | 30 | 100.00 | 0 | 0.00 |

| 8 | 0 | 0.00 | 12 | 40.00 | 18 | 60.00 |

| 9 | 0 | 000 | 0 | 0.00 | 30 | 100.00 |

| 10 | 30 | 100.00 | 0 | 0.00 | 0 | 0.00 |

| 11 | 29 | 96.67 | 1 | 3.33 | 0 | 0.00 |

| 12 | 0 | 0.00 | 30 | 100.00 | 0 | 0.00 |

| 13 | 0 | 0.00 | 22 | 73.33 | 8 | 26.67 |

| 14 | 27 | 90.00 | 0 | 0.00 | 3 | 10.00 |

| 15 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |

| 16 | 0 | 0.00 | 29 | 96.67 | 1 | 3.33 |

| 17 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |

| 18 | 4 | 13.33 | 26 | 86.67 | 0 | 0.00 |

| 19 | 1 | 3.33 | 29 | 96.67 | 0 | 0.00 |

| 20 | 30 | 100.00 | 0 | 0.00 | 0 | 0.00 |

| 21 | 0 | 0.00 | 22 | 73.33 | 8 | 26.67 |

| 22 | 22 | 73.33 | 5 | 16.67 | 3 | 10.00 |

| 23 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |

| 24 | 0 | 0.00 | 17 | 56.67 | 13 | 43.33 |

| 25 | 0 | 0.00 | 27 | 90.00 | 3 | 10.00 |

| 26 | 25 | 83.33 | 1 | 3.33 | 4 | 13.33 |

| 27 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |

| 28 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |

| 29 | 27 | 90.00 | 3 | 10.00 | 0 | 0.00 |

| 30 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |

| Accuracy | Standard Error | CI 95% (Wilson) | ||

|---|---|---|---|---|

| Overall 900 responses | 37.11% | 0.02 | 34.02% | 40.32% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Díaz-Flores García, V.; Freire, Y.; Tortosa, M.; Tejedor, B.; Estevez, R.; Suárez, A. Google Gemini’s Performance in Endodontics: A Study on Answer Precision and Reliability. Appl. Sci. 2024, 14, 6390. https://doi.org/10.3390/app14156390

Díaz-Flores García V, Freire Y, Tortosa M, Tejedor B, Estevez R, Suárez A. Google Gemini’s Performance in Endodontics: A Study on Answer Precision and Reliability. Applied Sciences. 2024; 14(15):6390. https://doi.org/10.3390/app14156390

Chicago/Turabian StyleDíaz-Flores García, Victor, Yolanda Freire, Marta Tortosa, Beatriz Tejedor, Roberto Estevez, and Ana Suárez. 2024. "Google Gemini’s Performance in Endodontics: A Study on Answer Precision and Reliability" Applied Sciences 14, no. 15: 6390. https://doi.org/10.3390/app14156390