Age and Sex Estimation in Children and Young Adults Using Panoramic Radiographs with Convolutional Neural Networks

Abstract

1. Introduction

2. Materials and Methods

Statistics

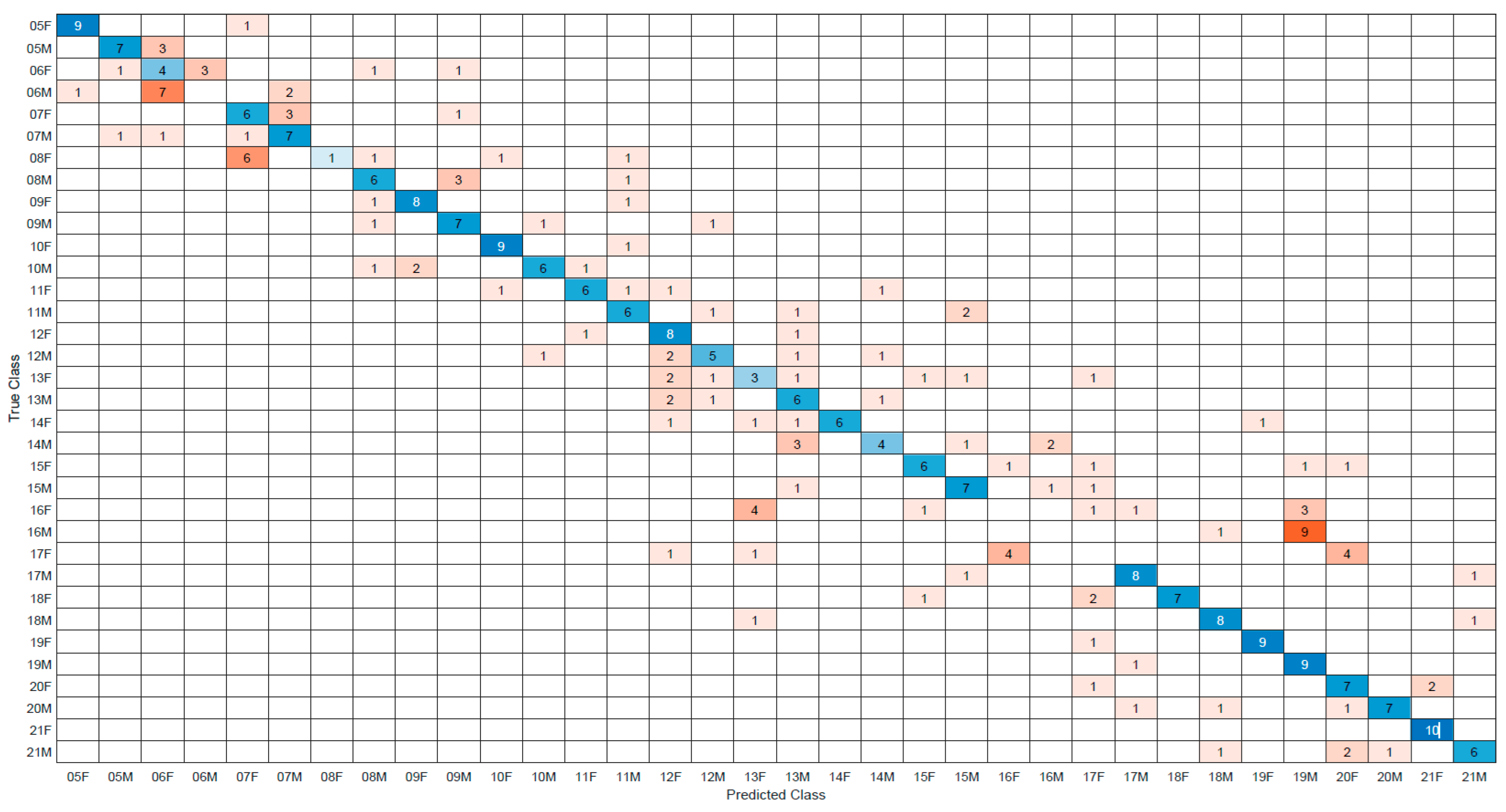

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Age (years) | Sex | Training | Validation | Test | Total |
|---|---|---|---|---|---|
| 5 | Male | 178 | 75 | 10 | 263 |
| | Female | 164 | 69 | 10 | 243 |
| 6 | Male | 165 | 70 | 10 | 245 |
| | Female | 172 | 73 | 10 | 255 |
| 7 | Male | 285 | 122 | 10 | 417 |
| | Female | 277 | 118 | 10 | 405 |
| 8 | Male | 176 | 75 | 10 | 261 |
| | Female | 260 | 111 | 10 | 381 |
| 9 | Male | 237 | 101 | 10 | 348 |
| | Female | 190 | 81 | 10 | 281 |
| 10 | Male | 157 | 67 | 10 | 234 |
| | Female | 166 | 71 | 10 | 247 |
| 11 | Male | 147 | 62 | 10 | 219 |
| | Female | 125 | 53 | 10 | 188 |
| 12 | Male | 110 | 47 | 10 | 167 |
| | Female | 119 | 50 | 10 | 179 |
| 13 | Male | 96 | 41 | 10 | 147 |
| | Female | 103 | 44 | 10 | 157 |
| 14 | Male | 90 | 38 | 10 | 138 |
| | Female | 106 | 45 | 10 | 161 |
| 15 | Male | 75 | 32 | 10 | 117 |
| | Female | 115 | 49 | 10 | 174 |
| 16 | Male | 77 | 33 | 10 | 120 |
| | Female | 129 | 55 | 10 | 194 |
| 17 | Male | 108 | 46 | 10 | 164 |
| | Female | 178 | 76 | 10 | 264 |
| 18 | Male | 108 | 46 | 10 | 164 |
| | Female | 180 | 76 | 10 | 266 |
| 19 | Male | 101 | 43 | 10 | 154 |
| | Female | 159 | 68 | 10 | 237 |
| 20 | Male | 113 | 48 | 10 | 171 |
| | Female | 180 | 77 | 10 | 267 |
| 21 | Male | 116 | 49 | 10 | 175 |
| | Female | 185 | 78 | 10 | 273 |
| Total | | 5147 | 2189 | 340 | 7676 |
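The per-group counts in the table above are consistent with holding out 10 test images for every age-sex group and then assigning about 70% of the remaining images (rounded up) to training and the rest to validation; for example, the 263 images of 5-year-old males give 10 test, ⌈0.7 × 253⌉ = 178 training, and 75 validation images. The sketch below assumes that rule, which this section does not state explicitly, and the names `split_counts` and `stratified_split` are hypothetical, not taken from the authors' code.

```python
import random
from collections import defaultdict

# Assumed split rule (inferred from the published counts, not stated in the source):
# hold out TEST_PER_GROUP images per age-sex group for testing, then send
# ceil(70%) of the remaining images to training and the rest to validation.
TEST_PER_GROUP = 10


def split_counts(total: int) -> tuple[int, int, int]:
    """Return (training, validation, test) counts for one age-sex group."""
    remaining = total - TEST_PER_GROUP
    train = (remaining * 7 + 9) // 10  # integer ceil(0.7 * remaining), avoids float rounding
    return train, remaining - train, TEST_PER_GROUP


def stratified_split(samples, seed: int = 0):
    """Split (image_id, age, sex) tuples group by group using split_counts.

    Returns a dict mapping "train", "val" and "test" to lists of samples.
    """
    groups = defaultdict(list)
    for sample in samples:
        _, age, sex = sample
        groups[(age, sex)].append(sample)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    splits = {"train": [], "val": [], "test": []}
    for key in sorted(groups):
        items = groups[key][:]
        rng.shuffle(items)
        n_train, _, n_test = split_counts(len(items))
        splits["test"].extend(items[:n_test])
        splits["train"].extend(items[n_test:n_test + n_train])
        splits["val"].extend(items[n_test + n_train:])
    return splits
```

For instance, `split_counts(263)` returns `(178, 75, 10)`, matching the 5-year-old male row; applying it to all 34 group totals reproduces the 5147 / 2189 / 340 column sums.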

| Model | Input Resolution (pixels) | Age Prediction Accuracy | Sex Prediction Accuracy | Age and Sex Prediction Accuracy | n | Mean Absolute Age Error (years) | Std. Dev. | Std. Err. | 95% CI Lower | 95% CI Upper | Min. | Max. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DarkNet-19 | 256 × 256 | 80.59% | 86.18% | 70.59% | 340 | 0.4265 | 1.18641 | 0.06434 | 0.2999 | 0.5530 | 0 | 10 |
| DarkNet-53 | 256 × 256 | 77.35% | 87.94% | 70.88% | 340 | 0.5824 | 1.55783 | 0.08449 | 0.4162 | 0.7485 | 0 | 14 |
| Inception-Resnet-v2 | 299 × 299 | 68.53% | 79.12% | 59.41% | 340 | 0.6824 | 1.49107 | 0.08086 | 0.5233 | 0.8414 | 0 | 12 |
| VGG-19 | 224 × 224 | 67.94% | 80.88% | 59.71% | 340 | 0.6500 | 1.18415 | 0.06422 | 0.5237 | 0.7763 | 0 | 8 |
| DenseNet-201 | 224 × 224 | 67.06% | 81.76% | 59.71% | 340 | 0.7559 | 1.30434 | 0.07074 | 0.6167 | 0.8950 | 0 | 7 |
| ResNet-50 | 224 × 224 | 66.76% | 78.24% | 58.82% | 340 | 0.7471 | 1.39769 | 0.07580 | 0.5980 | 0.8962 | 0 | 7 |
| GoogLeNet | 224 × 224 | 64.12% | 84.71% | 58.24% | 340 | 0.7029 | 1.15086 | 0.06241 | 0.5802 | 0.8257 | 0 | 5 |
| VGG-16 | 224 × 224 | 63.82% | 77.35% | 55.00% | 340 | 1.0471 | 1.92273 | 0.10427 | 0.8420 | 1.2522 | 0 | 8 |
| SqueezeNet | 227 × 227 | 62.65% | 79.41% | 54.41% | 340 | 0.9882 | 1.76324 | 0.09562 | 0.8001 | 1.1763 | 0 | 10 |
| ResNet-101 | 224 × 224 | 60.29% | 75.88% | 51.18% | 340 | 0.9765 | 1.71735 | 0.09314 | 0.7933 | 1.1597 | 0 | 9 |
| ResNet-18 | 224 × 224 | 58.53% | 81.76% | 50.59% | 340 | 1.1147 | 1.86611 | 0.10120 | 0.9156 | 1.3138 | 0 | 15 |
| ShuffleNet | 224 × 224 | 57.65% | 73.24% | 47.94% | 340 | 0.8941 | 1.38916 | 0.07534 | 0.7459 | 1.0423 | 0 | 7 |
| MobileNet-v2 | 224 × 224 | 51.47% | 62.94% | 42.06% | 340 | 1.3265 | 2.05296 | 0.11134 | 1.1075 | 1.5455 | 0 | 12 |
| NasNet-Mobile | 224 × 224 | 49.12% | 71.18% | 40.29% | 340 | 1.0941 | 1.66960 | 0.09055 | 0.9160 | 1.2722 | 0 | 12 |
| AlexNet | 227 × 227 | 47.35% | 72.35% | 37.06% | 340 | 1.2059 | 1.66660 | 0.09038 | 1.0281 | 1.3837 | 0 | 9 |
| Xception | 299 × 299 | 44.12% | 76.47% | 35.29% | 340 | 1.1088 | 1.54096 | 0.08357 | 0.9444 | 1.2732 | 0 | 9 |
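The accuracy columns and the error statistics in the table above could be computed from per-image predictions along the following lines. This is a sketch, not the authors' code: it assumes each test image carries a combined age-sex label such as "12_Female" (a hypothetical naming scheme), that Std. Dev. through Max. describe the absolute age error in years, that Std. Dev. is the sample standard deviation, and that the interval is a t-based 95% confidence interval for the mean. The DarkNet-19 row is internally consistent with this reading: 0.4265 ± 1.967 × 1.18641/√340 gives 0.2999 to 0.5530.

```python
import math
import statistics

# Two-sided 95% critical value of Student's t with n - 1 = 339 degrees of
# freedom (n = 340 test images), about 1.967 from standard t tables.
# Only valid for n = 340; pass another value for other sample sizes.
T_CRIT_339 = 1.967


def parse_label(label: str) -> tuple[int, str]:
    """Split a hypothetical combined class name such as "12_Female" into (age, sex)."""
    age, sex = label.split("_")
    return int(age), sex


def accuracies(y_true, y_pred):
    """Return (age accuracy, sex accuracy, joint age-and-sex accuracy)."""
    pairs = [(parse_label(t), parse_label(p)) for t, p in zip(y_true, y_pred)]
    n = len(pairs)
    age_acc = sum(t[0] == p[0] for t, p in pairs) / n
    sex_acc = sum(t[1] == p[1] for t, p in pairs) / n
    joint_acc = sum(t == p for t, p in pairs) / n  # both age and sex correct
    return age_acc, sex_acc, joint_acc


def ci_from_summary(mean, sd, n, t_crit=T_CRIT_339):
    """Standard error and t-based 95% CI of a mean from its summary statistics."""
    se = sd / math.sqrt(n)
    return se, mean - t_crit * se, mean + t_crit * se


def abs_error_summary(y_true, y_pred, t_crit=T_CRIT_339):
    """Mean absolute age error with SD, SE, 95% CI, min and max (in years)."""
    errors = [abs(parse_label(t)[0] - parse_label(p)[0]) for t, p in zip(y_true, y_pred)]
    mean = statistics.mean(errors)
    sd = statistics.stdev(errors)  # sample SD (n - 1 denominator), assumed
    se, lower, upper = ci_from_summary(mean, sd, len(errors), t_crit)
    return {"n": len(errors), "mae": mean, "sd": sd, "se": se,
            "ci_lower": lower, "ci_upper": upper,
            "min": min(errors), "max": max(errors)}
```

Under this reading, a prediction of "12_Male" for a true "12_Female" image counts toward age accuracy but not toward sex or joint accuracy, so the joint column can never exceed either of the other two.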

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Şahin, T.N.; Kölüş, T. Age and Sex Estimation in Children and Young Adults Using Panoramic Radiographs with Convolutional Neural Networks. Appl. Sci. 2024, 14, 7014. https://doi.org/10.3390/app14167014
