Diagnosis of Pressure Ulcer Stage Using On-Device AI

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

2.2. YOLOv8 Model Description

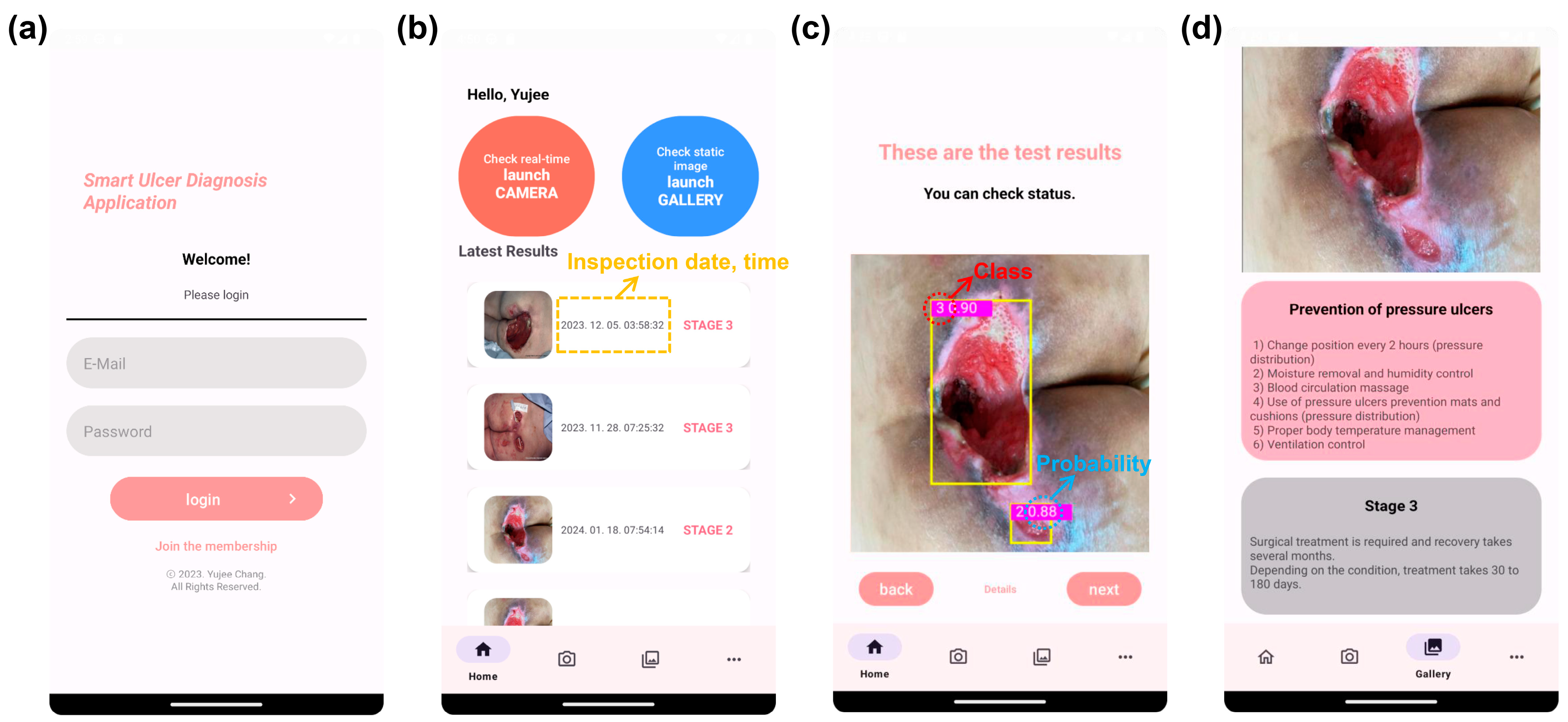

2.3. Mobile App Design

3. Results

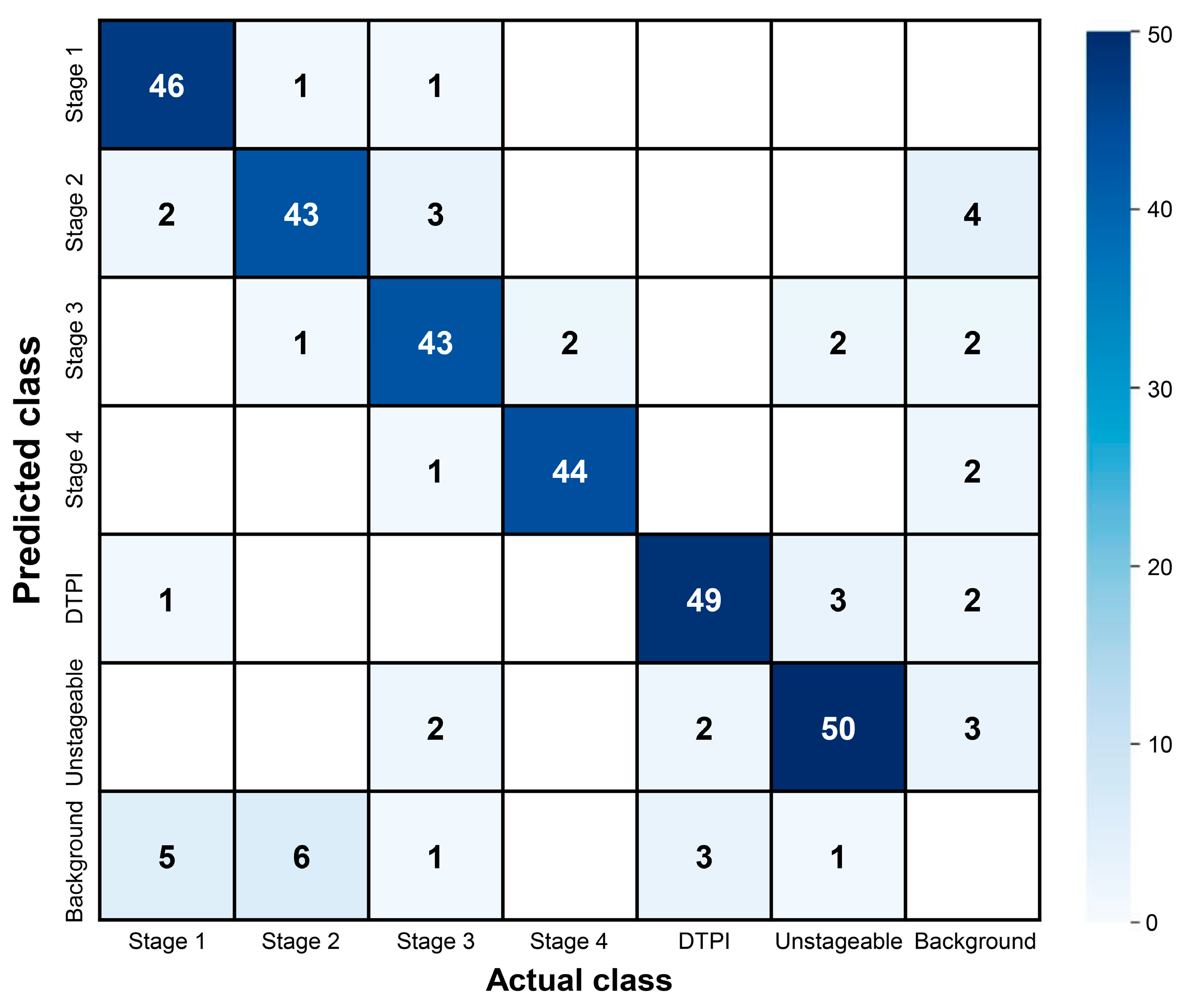

3.1. Pressure Ulcer Detection Performance

3.2. Pressure Ulcer Checker Mobile App

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Edsberg, L.E.; Black, J.M.; Goldberg, M.; McNichol, L.; Moore, L.; Sieggreen, M. Revised National Pressure Ulcer Advisory Panel pressure injury staging system: Revised pressure injury staging system. J. Wound Ostomy Cont. Nurs. 2016, 43, 585.

2. Mervis, J.S.; Phillips, T.J. Pressure ulcers: Pathophysiology, epidemiology, risk factors, and presentation. J. Am. Acad. Dermatol. 2019, 81, 881–890.

3. Rasero, L.; Simonetti, M.; Falciani, F.; Fabbri, C.; Collini, F.; Dal Molin, A. Pressure ulcers in older adults: A prevalence study. Adv. Ski. Wound Care 2015, 28, 461–464.

4. Jaul, E.; Barron, J.; Rosenzweig, J.P.; Menczel, J. An overview of co-morbidities and the development of pressure ulcers among older adults. BMC Geriatr. 2018, 18, 305.

5. Mallick, A.N.; Bhandari, M.; Basumatary, B.; Gupta, S.; Arora, K.; Sahani, A.K. Risk factors for developing pressure ulcers in neonates and novel ideas for developing neonatal antipressure ulcers solutions. J. Clin. Neonatol. 2023, 12, 27–33.

6. Thomas, D.R. Prevention and treatment of pressure ulcers. J. Am. Med. Dir. Assoc. 2006, 7, 46–59.

7. Gorecki, C.; Brown, J.M.; Nelson, E.A.; Briggs, M.; Schoonhoven, L.; Dealey, C.; Defloor, T.; Nixon, J.; European Quality of Life Pressure Ulcer Project Group. Impact of pressure ulcers on quality of life in older patients: A systematic review. J. Am. Geriatr. Soc. 2009, 57, 1175–1183.

8. Thomas, D.R.; Goode, P.S.; Tarquine, P.H.; Allman, R.M. Hospital-acquired pressure ulcers and risk of death. J. Am. Geriatr. Soc. 1996, 44, 1435–1440.

9. Hajhosseini, B.; Longaker, M.T.; Gurtner, G.C. Pressure injury. Ann. Surg. 2020, 271, 671–679.

10. Moore, Z.; Avsar, P.; Conaty, L.; Moore, D.H.; Patton, D.; O’Connor, T. The prevalence of pressure ulcers in Europe, what does the European data tell us: A systematic review. J. Wound Care 2019, 28, 710–719.

11. Zhang, X.; Zhu, N.; Li, Z.; Xie, X.; Liu, T.; Ouyang, G. The global burden of decubitus ulcers from 1990 to 2019. Sci. Rep. 2021, 11, 21750.

12. Haavisto, E.; Stolt, M.; Puukka, P.; Korhonen, T.; Kielo-Viljamaa, E. Consistent practices in pressure ulcer prevention based on international care guidelines: A cross-sectional study. Int. Wound J. 2022, 19, 1141–1157.

13. Ankrom, M.A.; Bennett, R.G.; Sprigle, S.; Langemo, D.; Black, J.M.; Berlowitz, D.R.; Lyder, C.H. Pressure-related deep tissue injury under intact skin and the current pressure ulcer staging systems. Adv. Ski. Wound Care 2005, 18, 35–42.

14. Nixon, J.; Thorpe, H.; Barrow, H.; Phillips, A.; Andrea Nelson, E.; Mason, S.A.; Cullum, N. Reliability of pressure ulcer classification and diagnosis. J. Adv. Nurs. 2005, 50, 613–623.

15. Nancy, G.A.; Kalpana, R.; Nandhini, S. A study on pressure ulcer: Influencing factors and diagnostic techniques. Int. J. Low. Extrem. Wounds 2022, 21, 254–263.

16. Stausberg, J.; Lehmann, N.; Kröger, K.; Maier, I.; Niebel, W. Reliability and validity of pressure ulcer diagnosis and grading: An image-based survey. Int. J. Nurs. Stud. 2007, 44, 1316–1323.

17. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

18. Wainberg, M.; Merico, D.; Delong, A.; Frey, B.J. Deep learning in biomedicine. Nat. Biotechnol. 2018, 36, 829–838.

19. Esteva, A.; Chou, K.; Yeung, S.; Naik, N.; Madani, A.; Mottaghi, A.; Liu, Y.; Topol, E.; Dean, J.; Socher, R. Deep learning-enabled medical computer vision. NPJ Digit. Med. 2021, 4, 5.

20. Bote-Curiel, L.; Muñoz-Romero, S.; Guerrero-Curieses, A.; Rojo-Álvarez, J.L. Deep learning and big data in healthcare: A double review for critical beginners. Appl. Sci. 2019, 9, 2331.

21. Tang, D.; Chen, J.; Ren, L.; Wang, X.; Li, D.; Zhang, H. Reviewing CAM-Based Deep Explainable Methods in Healthcare. Appl. Sci. 2024, 14, 4124.

22. Rippon, M.G.; Fleming, L.; Chen, T.; Rogers, A.A.; Ousey, K. Artificial intelligence in wound care: Diagnosis, assessment and treatment of hard-to-heal wounds: A narrative review. J. Wound Care 2024, 33, 229–242.

23. Dweekat, O.Y.; Lam, S.S.; McGrath, L. Machine learning techniques, applications, and potential future opportunities in pressure injuries (bedsores) management: A systematic review. Int. J. Environ. Res. Public Health 2023, 20, 796.

24. Cicceri, G.; De Vita, F.; Bruneo, D.; Merlino, G.; Puliafito, A. A deep learning approach for pressure ulcer prevention using wearable computing. Hum.-Centric Comput. Inf. Sci. 2020, 10, 5.

25. Zahia, S.; Sierra-Sosa, D.; Garcia-Zapirain, B.; Elmaghraby, A. Tissue classification and segmentation of pressure injuries using convolutional neural networks. Comput. Meth. Programs Biomed. 2018, 159, 51–58.

26. Elmogy, M.; García-Zapirain, B.; Burns, C.; Elmaghraby, A.; El-Baz, A. Tissues classification for pressure ulcer images based on 3D convolutional neural network. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018.

27. Chang, C.W.; Christian, M.; Chang, D.H.; Lai, F.; Liu, T.J.; Chen, Y.S.; Chen, W.J. Deep learning approach based on superpixel segmentation assisted labeling for automatic pressure ulcer diagnosis. PLoS ONE 2022, 17, e0264139.

28. Chino, D.Y.; Scabora, L.C.; Cazzolato, M.T.; Jorge, A.E.; Traina, C., Jr.; Traina, A.J. Segmenting skin ulcers and measuring the wound area using deep convolutional networks. Comput. Meth. Programs Biomed. 2020, 191, 105376.

29. Pandey, B.; Joshi, D.; Arora, A.S.; Upadhyay, N.; Chhabra, H. A deep learning approach for automated detection and segmentation of pressure ulcers using infrared-based thermal imaging. IEEE Sens. J. 2022, 22, 14762–14768.

30. Liu, T.J.; Christian, M.; Chu, Y.-C.; Chen, Y.-C.; Chang, C.-W.; Lai, F.; Tai, H.-C. A pressure ulcers assessment system for diagnosis and decision making using convolutional neural networks. J. Formos. Med. Assoc. 2022, 121, 2227–2236.

31. Liu, T.J.; Wang, H.; Christian, M.; Chang, C.-W.; Lai, F.; Tai, H.-C. Automatic segmentation and measurement of pressure injuries using deep learning models and a LiDAR camera. Sci. Rep. 2023, 13, 680.

32. Kim, J.; Lee, C.; Choi, S.; Sung, D.-I.; Seo, J.; Lee, Y.N.; Lee, J.H.; Han, E.J.; Kim, A.Y.; Park, H.S. Augmented decision-making in wound care: Evaluating the clinical utility of a deep-learning model for pressure injury staging. Int. J. Med. Inform. 2023, 180, 105266.

33. Lau, C.H.; Yu, K.H.-O.; Yip, T.F.; Luk, L.Y.F.; Wai, A.K.C.; Sit, T.-Y.; Wong, J.Y.-H.; Ho, J.W.K. An artificial intelligence-enabled smartphone app for real-time pressure injury assessment. Front. Med. Technol. 2022, 4, 905074.

34. Aldughayfiq, B.; Ashfaq, F.; Jhanjhi, N.; Humayun, M. YOLO-based deep learning model for pressure ulcer detection and classification. Healthcare 2023, 11, 1222.

35. Fergus, P.; Chalmers, C.; Henderson, W.; Roberts, D.; Waraich, A. Pressure ulcer categorization and reporting in domiciliary settings using deep learning and mobile devices: A clinical trial to evaluate end-to-end performance. IEEE Access 2023, 11, 65138–65152.

36. Terven, J.; Córdova-Esparza, D.-M.; Romero-González, J.-A. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

37. Medetec Wound Database: Stock Pictures of Wounds. Available online: https://www.medetec.co.uk/files/medetec-image-databases.html (accessed on 7 August 2024).

38. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

39. YOLO: A Brief History. Available online: https://docs.ultralytics.com (accessed on 16 July 2024).

40. GitHub. Available online: https://github.com/ultralytics/ultralytics?tab=readme-ov-file (accessed on 16 July 2024).

41. PyTorch Mobile Optimizer. Available online: https://pytorch.org/docs/stable/mobile_optimizer.html (accessed on 7 August 2024).

42. Yacouby, R.; Axman, D. Probabilistic extension of precision, recall, and F1 score for more thorough evaluation of classification models. In Proceedings of the First Workshop on Evaluation and Comparison of NLP Systems, Online, 20 November 2020.

43. Sharma, N.; Baral, S.; Paing, M.P.; Chawuthai, R. Parking time violation tracking using YOLOv8 and tracking algorithms. Sensors 2023, 23, 5843.

44. Yang, G.; Wang, J.; Nie, Z.; Yang, H.; Yu, S. A lightweight YOLOv8 tomato detection algorithm combining feature enhancement and attention. Agronomy 2023, 13, 1824.

45. Xiao, B.; Nguyen, M.; Yan, W.Q. Fruit ripeness identification using YOLOv8 model. Multimed. Tools Appl. 2024, 83, 28039–28056.

46. Chabi Adjobo, E.; Sanda Mahama, A.T.; Gouton, P.; Tossa, J. Automatic localization of five relevant Dermoscopic structures based on YOLOv8 for diagnosis improvement. J. Imaging 2023, 9, 148.

| Reference | Model | Task | Description | Limitation |
|---|---|---|---|---|
| [24] | DNN | Classification | Monitored six patient postures using inertial sensors and a DNN | Cannot recognize pressure ulcer region and stage |
| [30] | Inception-ResNet-v2 | Classification | Classified the severity of erythema and necrosis, achieving over 97% classification accuracy | Cannot recognize pressure ulcer region and stage |
| [32] | SE-ResNeXt101 | Classification | Categorized pressure ulcers into seven classes (stage 1–4, DTPI, unstageable, others), achieving a 71.5% F1 score | Cannot estimate pressure ulcer region |
| [25], [26] | CNN [25], 3D CNN [26] | Segmentation | Segmented pressure ulcer RGB images into slough, granulation, and necrotic eschar tissue regions, achieving 92% overall classification accuracy [25] and 95% AUC [26] | Cannot predict detailed pressure ulcer stage |
| [27] | Five CNN models | Segmentation | Applied U-Net, DeepLabV3, PSPNet, FPN, and Mask R-CNN for tissue segmentation, achieving 99.6% in tissue classification | Cannot predict detailed pressure ulcer stage |
| [28] | U-Net | Segmentation | Segmented pressure ulcer wounds, achieving a 90% F1 score | Cannot predict detailed pressure ulcer stage |
| [31] | Mask R-CNN, U-Net | Segmentation | Segmented the pressure ulcer region and measured its area with a LiDAR camera and the deep learning models, achieving a 26.2% mean relative error | Cannot predict detailed pressure ulcer stage |
| [29] | MobileNetV2 | Boundary detection | Detected pressure ulcer regions by displaying bounding boxes over them, achieving 75.9% mAP@50 | Cannot predict detailed pressure ulcer stage |
| [33], [34], [35] | YOLOv4 [33], YOLOv5 [34], Faster R-CNN [35] | Boundary detection, classification | Simultaneously predicted pressure ulcer boundaries and classes, achieving 63.2% accuracy [33], a 73.2% F1 score [34], and a 69.6% F1 score [35], respectively | Relatively low detection performance and portability |

| Label | Image | Feature |
|---|---|---|
| Stage 1 | | |
| Stage 2 | | |
| Stage 3 | | |
| Stage 4 | | |
| DTPI | | |
| Unstageable | | |

| Model | Depth Multiple (d) | Width Multiple (w) | Ratio (r) | Trainable Parameters |
|---|---|---|---|---|
| YOLOv8n | 0.33 | 0.25 | 2.0 | 3.2 M |
| YOLOv8s | 0.33 | 0.50 | 2.0 | 28.6 M |
| YOLOv8m | 0.67 | 0.75 | 1.5 | 78.9 M |
| YOLOv8l | 1.00 | 1.00 | 1.0 | 165.2 M |
| YOLOv8x | 1.00 | 1.25 | 1.0 | 157.8 M |
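The following sketch shows how the multipliers in the table scale the network. It derives per-stage channel widths and C2f block counts for each YOLOv8 variant. The unscaled base values are assumptions taken from the public Ultralytics YOLOv8 configuration, not from this study: stem and stage channels of 64, 128, 256, and 512, a deepest stage of 512 × r, and C2f repeats of 3, 6, 6, and 3. The same applies to the rounding rules. `scaled_backbone` and `make_divisible` are illustrative helpers.

```python
# Hypothetical sketch: derive backbone channel widths and C2f repeat counts
# for each YOLOv8 scale from the (d, w, r) multipliers in the table.
# Base channels/repeats are ASSUMED from the public Ultralytics yolov8.yaml.
import math

# (depth multiple d, width multiple w, ratio r), copied from the table
SCALES = {
    "YOLOv8n": (0.33, 0.25, 2.0),
    "YOLOv8s": (0.33, 0.50, 2.0),
    "YOLOv8m": (0.67, 0.75, 1.5),
    "YOLOv8l": (1.00, 1.00, 1.0),
    "YOLOv8x": (1.00, 1.25, 1.0),
}

# Assumed unscaled output channels of the stem (P1) and stages P2-P4;
# the deepest stage (P5) is modeled as 512 * r so that r controls its width.
BASE_CHANNELS = (64, 128, 256, 512)
BASE_REPEATS = (3, 6, 6, 3)  # assumed C2f block counts in stages P2-P5


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round a channel count up to the nearest multiple of `divisor`."""
    return int(math.ceil(x / divisor) * divisor)


def scaled_backbone(d: float, w: float, r: float):
    """Return (channels per stage, C2f repeats per stage) for one scale."""
    channels = [make_divisible(c * w) for c in BASE_CHANNELS]
    channels.append(make_divisible(512 * w * r))  # deepest stage (P5)
    repeats = [max(round(n * d), 1) for n in BASE_REPEATS]
    return channels, repeats


for name, (d, w, r) in SCALES.items():
    ch, rep = scaled_backbone(d, w, r)
    print(f"{name}: channels={ch}, C2f repeats={rep}")
```

Under these assumptions, YOLOv8n comes out with channels [16, 32, 64, 128, 256] and one or two C2f blocks per stage. YOLOv8m gets [48, 96, 192, 384, 576] with two or four blocks. This illustrates that d sets the depth, while w and r set the width.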

| Model | Accuracy | Precision | Recall | F1 Score | mAP@50 | mAP@50-95 |
|---|---|---|---|---|---|---|
| YOLOv8n | 0.810 | 0.910 | 0.870 | 0.889 | 0.898 | 0.674 |
| YOLOv8s | 0.814 | 0.922 | 0.845 | 0.882 | 0.891 | 0.670 |
| YOLOv8m | 0.846 | 0.897 | 0.891 | 0.894 | 0.908 | 0.685 |
| YOLOv8l | 0.789 | 0.895 | 0.861 | 0.878 | 0.892 | 0.671 |
| YOLOv8x | 0.796 | 0.905 | 0.859 | 0.881 | 0.900 | 0.680 |
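The mAP@50 and mAP@50-95 columns differ only in how strictly a predicted bounding box must overlap the ground truth. The sketch below computes intersection over union (IoU) for corner-format boxes. It also lists the ten IoU thresholds, 0.50 to 0.95 in steps of 0.05, over which mAP@50-95 is conventionally averaged (the COCO convention). The box format and the helper names `box_iou` and `matches_at` are assumptions for illustration. The matching step also ignores class labels and one-to-one assignment.

```python
# Minimal sketch: IoU between two axis-aligned boxes and the IoU thresholds
# behind the mAP@50 and mAP@50-95 columns (COCO-style convention assumed).
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes in corner format."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# mAP@50 uses the single threshold 0.50; mAP@50-95 averages AP over these ten.
IOU_THRESHOLDS_50_95 = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def matches_at(pred: Box, truth: Box, threshold: float) -> bool:
    """True if the boxes overlap enough; assumes the predicted class already matches."""
    return box_iou(pred, truth) >= threshold


pred, truth = (10, 10, 60, 60), (15, 15, 65, 65)
iou = box_iou(pred, truth)
hits = sum(matches_at(pred, truth, t) for t in IOU_THRESHOLDS_50_95)
print(f"IoU = {iou:.3f}; counted as a hit at {hits} of 10 thresholds")
```

In this example, a 50-unit box shifted by 5 units in each direction reaches an IoU of about 0.68. It counts as a hit for mAP@50 but only at four of the ten thresholds used for mAP@50-95. This is consistent with the mAP@50-95 values in the table being lower than the mAP@50 values.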

| Label | Precision | Recall | F1 Score | mAP@50 | mAP@50-95 |
|---|---|---|---|---|---|
| Overall | 0.897 | 0.891 | 0.894 | 0.908 | 0.685 |
| Stage 1 | 0.959 | 0.857 | 0.905 | 0.900 | 0.630 |
| Stage 2 | 0.794 | 0.843 | 0.818 | 0.839 | 0.564 |
| Stage 3 | 0.874 | 0.843 | 0.858 | 0.874 | 0.645 |
| Stage 4 | 0.938 | 0.957 | 0.947 | 0.975 | 0.922 |
| DTPI | 0.905 | 0.926 | 0.915 | 0.936 | 0.664 |
| Unstageable | 0.911 | 0.918 | 0.914 | 0.924 | 0.682 |
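The Overall row appears to agree, to within rounding, with an unweighted (macro) mean of the six per-class rows. The overall F1 score matches the harmonic mean F1 = 2PR/(P + R) of the averaged precision and recall. The sketch below checks this. The averaging scheme is an inference from the numbers rather than a procedure stated in the table, and `f1_score` is an illustrative helper.

```python
# Sketch: check whether the "Overall" row is consistent with an unweighted
# (macro) mean of the per-class rows, with F1 recomputed as the harmonic mean
# of the averaged precision and recall. This averaging scheme is an ASSUMPTION.
from statistics import mean

# label: (precision, recall, mAP@50, mAP@50-95), copied from the table
PER_CLASS = {
    "Stage 1": (0.959, 0.857, 0.900, 0.630),
    "Stage 2": (0.794, 0.843, 0.839, 0.564),
    "Stage 3": (0.874, 0.843, 0.874, 0.645),
    "Stage 4": (0.938, 0.957, 0.975, 0.922),
    "DTPI": (0.905, 0.926, 0.936, 0.664),
    "Unstageable": (0.911, 0.918, 0.924, 0.682),
}
REPORTED_OVERALL = {"precision": 0.897, "recall": 0.891, "f1": 0.894,
                    "map50": 0.908, "map50_95": 0.685}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 if both are 0)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


# Transpose the rows into one tuple per metric column, then average each column.
columns = list(zip(*PER_CLASS.values()))
macro = {
    "precision": mean(columns[0]),
    "recall": mean(columns[1]),
    "map50": mean(columns[2]),
    "map50_95": mean(columns[3]),
}
macro["f1"] = f1_score(macro["precision"], macro["recall"])

for key, reported in REPORTED_OVERALL.items():
    print(f"{key}: macro={macro[key]:.4f}, reported={reported:.3f}")
```

All five overall values are reproduced within 0.001. Averaging the per-class F1 scores directly gives about 0.893 instead. The reported 0.894 is therefore more consistent with computing F1 from the averaged precision and recall.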

| Item | Minimum requirement |
|---|---|
| Operating system | Android 10 or newer |
| Application processor | 64-bit ARMv8-A or higher performance |
| RAM | 4 GB or more |
| Storage | 1 GB or more |
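The requirements above can be encoded directly as a client-side compatibility check. The sketch below is hypothetical: `DeviceProfile` and `unmet_requirements` are illustrative names. Mapping Android 10 to API level 29 and 64-bit ARMv8-A to the `arm64-v8a` ABI follows standard Android naming. Treating the storage requirement as free space on the device is an assumption.

```python
# Hypothetical sketch: check a device profile against the minimum requirements
# in the table. Android 10 = API level 29; "arm64-v8a" is the Android ABI name
# for 64-bit ARMv8-A. Interpreting "Storage" as free space is an ASSUMPTION.
from dataclasses import dataclass
from typing import List

MIN_API_LEVEL = 29          # Android 10
REQUIRED_ABI = "arm64-v8a"  # 64-bit ARMv8-A
MIN_RAM_GB = 4
MIN_FREE_STORAGE_GB = 1


@dataclass
class DeviceProfile:
    api_level: int
    abis: List[str]         # ABIs the device reports as supported
    ram_gb: float
    free_storage_gb: float


def unmet_requirements(device: DeviceProfile) -> List[str]:
    """Return the requirements the device fails (an empty list means it qualifies)."""
    problems = []
    if device.api_level < MIN_API_LEVEL:
        problems.append("Android 10 (API level 29) or newer required")
    if REQUIRED_ABI not in device.abis:
        problems.append("64-bit ARMv8-A processor required")
    if device.ram_gb < MIN_RAM_GB:
        problems.append("at least 4 GB of RAM required")
    if device.free_storage_gb < MIN_FREE_STORAGE_GB:
        problems.append("at least 1 GB of storage required")
    return problems


phone = DeviceProfile(api_level=33, abis=["arm64-v8a", "armeabi-v7a"],
                      ram_gb=6, free_storage_gb=12)
print(unmet_requirements(phone) or "meets minimum requirements")
```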

| Model | Dataset Size | Classes | Accuracy | Precision | Recall | F1 Score | mAP@50 |
|---|---|---|---|---|---|---|---|
| Faster R-CNN [35] | 5084 | 6 (stage 1–4, DTPI, unstageable) | N/A | 0.776 | 0.641 | 0.696 | N/A |
| YOLOv4 [33] | 1432 | 6 (stage 1–4, unstageable, others) | 0.632 | N/A | N/A | N/A | N/A |
| YOLOv5s [34] | 1000+ | 5 (stage 1–4, non-pressure ulcer) | N/A | 0.781 | 0.685 | 0.732 | 0.769 |
| YOLOv8m (our study) | 2800 | 6 (stage 1–4, DTPI, unstageable) | 0.846 | 0.897 | 0.891 | 0.894 | 0.908 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chang, Y.; Kim, J.H.; Shin, H.W.; Ha, C.; Lee, S.Y.; Go, T. Diagnosis of Pressure Ulcer Stage Using On-Device AI. Appl. Sci. 2024, 14, 7124. https://doi.org/10.3390/app14167124
