Domain-Aware Neural Network with a Novel Attention-Pooling Technology for Binary Sentiment Classification

Abstract

1. Introduction

“The food at this restaurant is delicious and reasonably priced.”

“This restaurant has poor service and an unpleasant atmosphere.”

“The food at this restaurant is delicious and reasonably priced, but the service and atmosphere are poor. Overall, you can give it a try.”

- (1) We propose an attention mechanism for multi-domain sentiment classification that accounts for both the relative importance of features and the influence of domain information, expanding the set of existing attention mechanisms.

- (2) We integrate attention with min-pooling to generate sharp probabilistic attention weights over the words in a sentence and thereby select salient features. To the best of our knowledge, the resulting model is distinct from existing attention neural networks.

- (3) In our experiments, the model achieves higher overall accuracy than the compared models on the Amazon sentiment analysis dataset at different scales.

2. Related Work

2.1. Sentiment Classification

2.2. Attention and Pooling Mechanisms in Neural Networks

2.3. Multi-Domain Scenarios

3. Methods

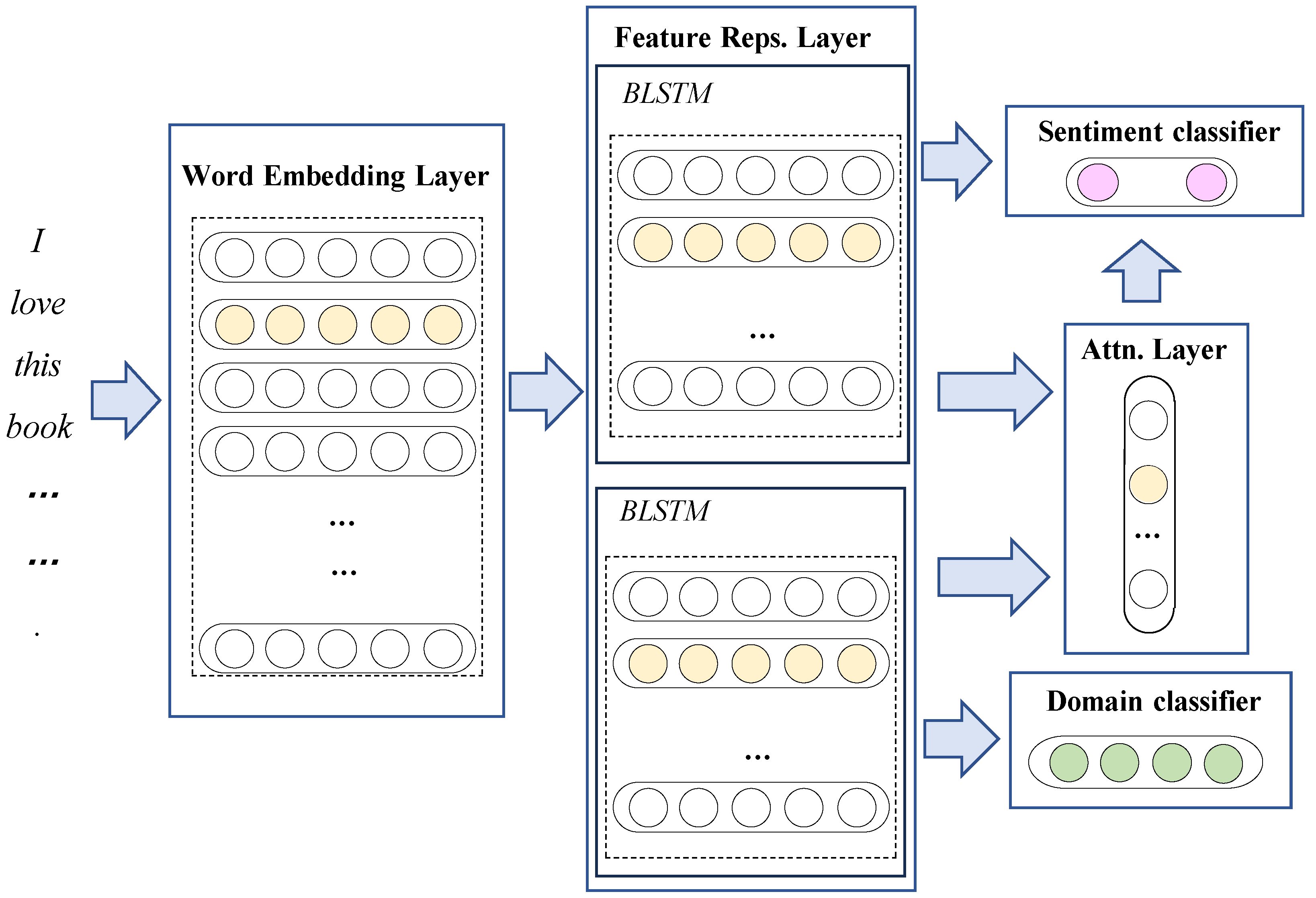

3.1. Overall Framework

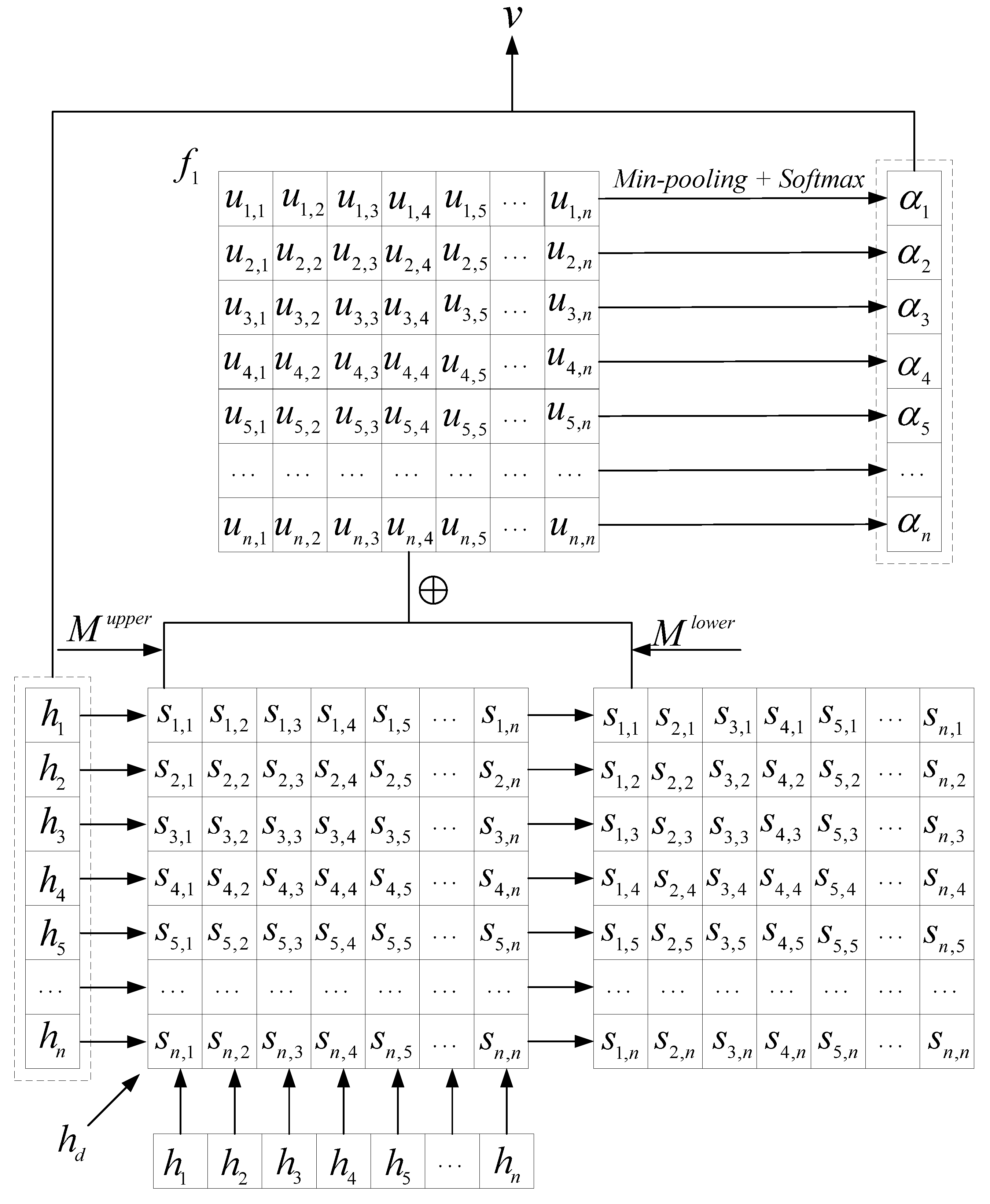

3.2. Proposed Attention Mechanism

3.3. Training

4. Experiments

4.1. Experimental Settings

4.2. Performance Evaluation

4.3. Comparative Experiments in Datasets with Different Domains

4.4. Pooling Techniques

4.5. Inverse Events

4.6. Computational Costs

4.7. Visualization of Attention Weights

“This is an excellent film, which unfortunately has not been given a decent treatment by the distributor. I was very disappointed in the quality of the release-the picture quality is poor inter-titles appear to be missing, and the score which has been added is just a reptition of long synth chords that don’t match the action on-screen. It’s a shame, because a film like this one deserves much better.”

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Domain | Negative (train/val/test) | Positive (train/val/test) |
|---|---|---|
| Book | 700/200/100 | 700/200/100 |
| DVD | 700/200/100 | 700/200/100 |
| Kitchen | 700/200/100 | 700/200/100 |
| Electronics | 700/200/100 | 700/200/100 |
| Amazon | N: 8000 (5600/1600/800); L: 5939; V: 2022 | |
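
The totals in the last row of the table can be cross-checked against the per-domain counts. The following is a minimal sketch, assuming that N denotes the total number of reviews and that the parenthesised triple is the train/val/test breakdown; the table itself does not define N, L, or V, and the names `SPLIT`, `DOMAINS`, `POLARITIES`, and `split_totals` are illustrative only.

```python
# Per-domain, per-polarity split sizes as listed in the table above.
SPLIT = {"train": 700, "val": 200, "test": 100}
DOMAINS = ["Book", "DVD", "Kitchen", "Electronics"]
POLARITIES = ["negative", "positive"]


def split_totals(split=SPLIT, domains=DOMAINS, polarities=POLARITIES):
    """Sum each split over every (domain, polarity) pair."""
    groups = len(domains) * len(polarities)  # 4 domains x 2 polarities
    return {name: size * groups for name, size in split.items()}


totals = split_totals()
total_reviews = sum(totals.values())
print(totals, total_reviews)
# {'train': 5600, 'val': 1600, 'test': 800} 8000
```

Under that reading, the 5600/1600/800 breakdown and the total of 8000 agree with eight balanced groups of 1000 reviews each.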

| Model | Books | DVDs | Kitchen | Electronics | Overall |
|---|---|---|---|---|---|
| LS-single | 77.80 | 77.88 | 84.33 | 81.63 | 80.41 |
| LS-all | 78.40 | 79.76 | 85.73 | 84.67 | 82.14 |
| SVM-single | 78.56 | 78.66 | 84.74 | 83.03 | 81.25 |
| SVM-all | 79.16 | 80.97 | 86.06 | 85.15 | 82.84 |
| LSTM-single | 78.51 | 78.74 | 81.80 | 80.72 | 79.94 |
| LSTM-all | 83.74 | 79.70 | 83.56 | 82.87 | 82.47 |
| MTL-DNN | 79.70 | 80.50 | 82.80 | 82.50 | 81.38 |
| MTL-CNN | 80.20 | 81.00 | 83.00 | 83.40 | 81.90 |
| MTL-SM | 82.80 | 83.00 | 84.30 | 85.50 | 83.90 |
| MDSC-Com | 79.07 | 80.09 | 85.54 | 83.77 | 82.12 |
| RMTL | 81.33 | 82.18 | 87.02 | 85.49 | 84.01 |
| MTL-Graph | 79.66 | 81.84 | 87.06 | 83.69 | 83.06 |
| CMSC | 81.16 | 82.08 | 87.13 | 85.85 | 84.06 |
| ASP-MTL | 84.00 | 85.50 | 86.20 | 86.80 | 85.63 |
| NeuroSent | 79.66 | 80.90 | 86.86 | 86.41 | 83.46 |
| DAM | 87.75 | 86.58 | 88.93 | 87.50 | 87.69 |
| AP-MDSC | 90.04 | 84.01 | 88.07 | 89.90 | 88.01 |
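
The Overall column appears to be the unweighted mean of the four per-domain accuracies. The sketch below checks this for a few rows copied from the table and also computes the per-domain difference between AP-MDSC and DAM. That Overall is a plain mean is an inference from the numbers rather than something stated in this excerpt, and `ROWS`, `overall_gap`, and `deltas` are illustrative names.

```python
from statistics import mean

# (Books, DVDs, Kitchen, Electronics, reported Overall), copied from the table above.
ROWS = {
    "LS-single": (77.80, 77.88, 84.33, 81.63, 80.41),
    "MTL-SM": (82.80, 83.00, 84.30, 85.50, 83.90),
    "DAM": (87.75, 86.58, 88.93, 87.50, 87.69),
    "AP-MDSC": (90.04, 84.01, 88.07, 89.90, 88.01),
}
DOMAINS = ("Books", "DVDs", "Kitchen", "Electronics")


def overall_gap(row):
    """Absolute gap between the mean of the domain scores and the reported Overall."""
    *per_domain, reported = row
    return abs(mean(per_domain) - reported)


# Gaps below 0.01 are consistent with rounding to two decimals.
gaps = {model: overall_gap(row) for model, row in ROWS.items()}

# Per-domain difference AP-MDSC minus DAM, in accuracy points.
deltas = {
    domain: round(ours - theirs, 2)
    for domain, ours, theirs in zip(DOMAINS, ROWS["AP-MDSC"], ROWS["DAM"])
}
print(gaps)
print(deltas)
```

Read this way, the advantage of AP-MDSC over DAM comes from the Books and Electronics domains, while DAM scores higher on DVDs and Kitchen; the overall margin is 0.32 points.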

| Model | Books | DVDs | Kitchen | Electronics | Music | Overall |
|---|---|---|---|---|---|---|
| DAM-2 | 87.57 | 82.57 | - | - | - | 85.07 |
| AP-MDSC-2 | 90.10 | 84.65 | - | - | - | 87.38 |
| DAM-3 | 88.02 | 86.00 | 84.45 | - | - | 86.16 |
| AP-MDSC-3 | 89.21 | 84.65 | 86.14 | - | - | 86.67 |
| DAM-4 | 87.75 | 86.58 | 88.93 | 87.50 | - | 87.69 |
| AP-MDSC-4 | 90.04 | 84.01 | 88.07 | 89.90 | - | 88.01 |
| DAM-5 | 90.79 | 84.95 | 87.72 | 87.72 | 88.76 | 87.99 |
| AP-MDSC-5 | 91.73 | 83.96 | 87.72 | 87.92 | 88.86 | 88.05 |

| Model | Accuracy |
|---|---|
| AP-MDSC (mean-pooling) | 87.13 |
| AP-MDSC (max-pooling) | 87.55 |
| AP-MDSC (min-pooling) | 88.01 |
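
For readers less familiar with the three pooling variants compared above, the following is a minimal sketch of the generic mean-, max-, and min-pooling operators applied over the word axis. It does not reproduce how AP-MDSC combines pooling with attention, which this excerpt does not specify; the function `pool`, the matrix `H`, and its values are made up for illustration.

```python
def pool(features, mode):
    """Pool a list of equal-length word vectors into one vector.

    Illustrative only: `features` holds one vector per word, and pooling is
    applied independently to each feature dimension.
    """
    if not features:
        raise ValueError("need at least one word vector")
    columns = list(zip(*features))  # one tuple per feature dimension
    if mode == "mean":
        return [sum(col) / len(col) for col in columns]
    if mode == "max":
        return [max(col) for col in columns]
    if mode == "min":
        return [min(col) for col in columns]
    raise ValueError(f"unknown pooling mode: {mode}")


# Three words, two feature dimensions (made-up numbers).
H = [[0.5, -1.0], [2.0, 0.0], [-1.5, 4.0]]
pooled = {mode: pool(H, mode) for mode in ("mean", "max", "min")}
print(pooled["max"])  # [2.0, 4.0]
print(pooled["min"])  # [-1.5, -1.0]
```

Mean-pooling blends all words, whereas max- and min-pooling each keep a single extreme value per dimension.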

| Model | Accuracy |
|---|---|
| AP-MDSC (U-unlimited) | 87.72 |
| AP-MDSC (U-limited) | 88.01 |

| Model | DAM-2 | DAM-3 | DAM-4 | DAM-5 |
|---|---|---|---|---|
| Time cost | 67∼72 | 133∼141 | 203∼214 | 267∼281 |

| Model | AP-MDSC-2 | AP-MDSC-3 | AP-MDSC-4 | AP-MDSC-5 |
|---|---|---|---|---|
| Time cost | 70∼76 | 139∼147 | 213∼22 | 284∼300 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yue, C.; Li, A.; Chen, Z.; Luan, G.; Guo, S. Domain-Aware Neural Network with a Novel Attention-Pooling Technology for Binary Sentiment Classification. Appl. Sci. 2024, 14, 7971. https://doi.org/10.3390/app14177971
