Advanced Preprocessing Technique for Tomato Imagery in Gravimetric Analysis Applied to Robotic Harvesting

Abstract

1. Introduction

1.1. Literature Review

1.2. Objectives

- The development of improved preprocessing techniques: This research introduces an improved tomato-image-preprocessing technique for robotic harvesting systems that integrates part affinity fields (PAFs) and confidence maps. It improves the accuracy of localizing the tomato's center of gravity, extending previous approaches that focused mainly on fruit detection and localization.

- Quantitative improvement and practical applicability: We conducted a series of structured experiments to evaluate the improvements provided by these methods and assessed their practical applicability in different real-world agricultural scenarios, giving a detailed understanding of the relationship between preprocessing and CNN performance.

- Addressing the lack of attention to preprocessing in CNN research: Our study fills this gap by examining how image preprocessing affects the accuracy of CNN models, especially in precision agriculture.

- Improved robustness and adaptability: The data augmentation and multispectral image-enhancement techniques used in this study make the proposed model more robust and adaptable to variability in environmental conditions and tomato varieties.

- Innovative approach to key point localization: This study focuses on localizing key points, particularly the center of gravity, offering a new perspective on this task and achieving high accuracy (an overall average score of 97.2% for the center of gravity in Table 1).
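
As an illustration of the kind of data augmentation mentioned above, the sketch below applies a horizontal flip and a brightness change to a grayscale image while keeping the key point annotations consistent with the pixels. The specific transforms, parameter values and helper names (`hflip_with_keypoints`, `scale_brightness`) are assumptions for illustration only; the augmentations actually used in this study are not specified here.

```python
from typing import List, Tuple

Image = List[List[int]]      # rows of 8-bit grayscale intensities (0-255)
Point = Tuple[float, float]  # key point position (x, y) in pixels


def hflip_with_keypoints(image: Image, keypoints: List[Point]) -> Tuple[Image, List[Point]]:
    """Mirror the image left-right and move each (x, y) key point with it."""
    width = len(image[0])
    flipped = [list(reversed(row)) for row in image]
    # Column x moves to column (width - 1 - x); the row index y is unchanged.
    moved = [(width - 1 - x, y) for x, y in keypoints]
    return flipped, moved


def scale_brightness(image: Image, factor: float) -> Image:
    """Multiply intensities by `factor`, then round and clip to [0, 255]."""
    return [[min(255, max(0, round(v * factor))) for v in row] for row in image]


# Usage on a tiny hypothetical image with two hypothetical key points.
img = [[0, 10, 20, 30, 40, 50],
       [60, 70, 80, 90, 100, 110]]
kps = [(1.0, 0.0), (5.0, 1.0)]
flipped, moved = hflip_with_keypoints(img, kps)
print(flipped[0])  # [50, 40, 30, 20, 10, 0]
print(moved)       # [(4.0, 0.0), (0.0, 1.0)]
brighter = scale_brightness(img, 2.5)  # values above 255 are clipped
```

None of the four tomato key points in Table 1 (center of gravity, calyx, abscission zone, branch point) has a left/right counterpart, so, unlike human pose estimation, no label swapping is needed after a flip.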

2. Materials and Methods

2.1. Image Acquisition and Processing

2.2. Enhanced Pose Estimation via CNNs

2.3. Comparative Analysis of Preprocessing Techniques in Image-Feature Delineation

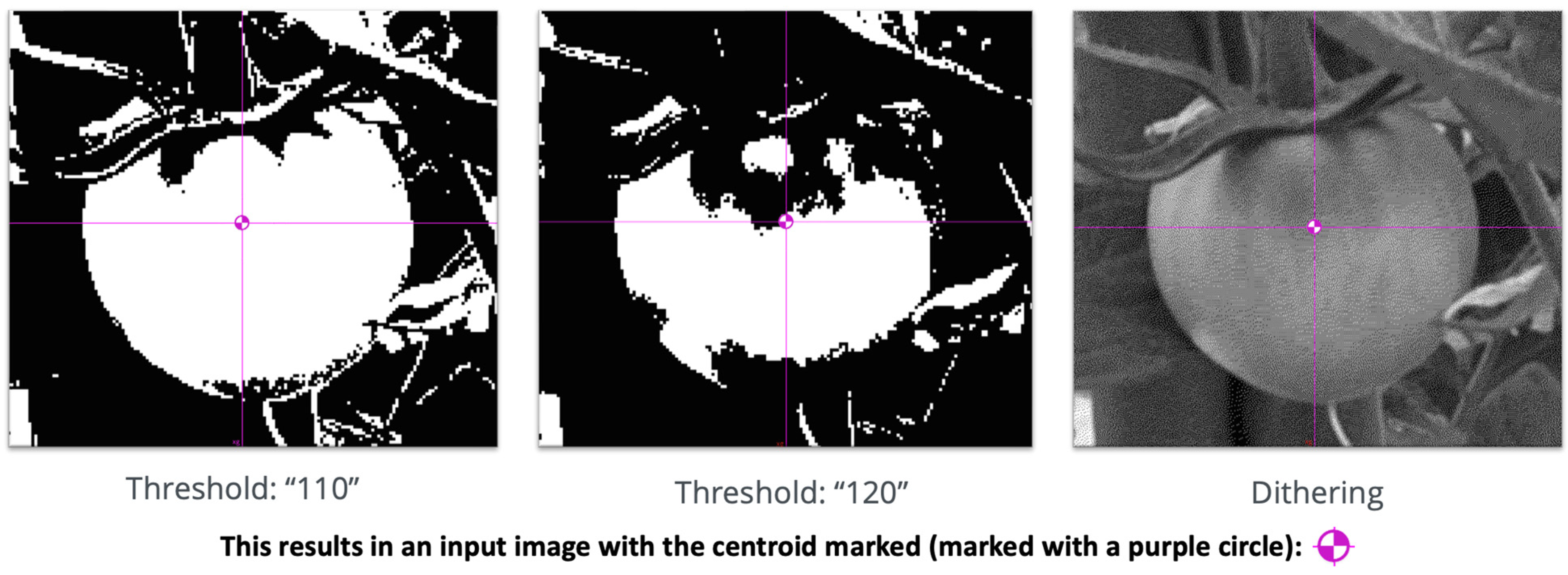

- Thresholding at an intensity of 110: A threshold is set at an intensity value of 110. Pixels whose intensity exceeds this value are labeled as the object (in this case, a tomato), and all other pixels are labeled as background. This approach is particularly useful for extracting the main contours of the object under particular lighting conditions.

- Thresholding at an intensity of 120: This technique works in the same way as the previous one but uses a slightly higher threshold. It was found to be particularly effective for images with pronounced contrast or those captured under intense illumination.

- Dithering: Dithering converts the image to a monochrome (black-and-white) version by distributing quantization errors to neighboring pixels, which retains fine details and textures better than simple thresholding. This helps the analytical model identify complex or low-contrast regions of the tomato that rudimentary binarization techniques might miss.
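
The three binarization options above can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' pipeline: it assumes 8-bit grayscale input, treats pixels strictly above the threshold as the object, and uses the classic Floyd-Steinberg error-diffusion weights (7/16, 3/16, 5/16, 1/16) as one common dithering scheme, because the dithering kernel actually used is not stated here. The ramp image is hypothetical.

```python
from typing import List

Image = List[List[int]]  # rows of 8-bit grayscale intensities (0-255)


def threshold(gray: Image, t: int) -> Image:
    """Label pixels brighter than t as object (1) and all others as background (0)."""
    return [[1 if v > t else 0 for v in row] for row in gray]


def floyd_steinberg(gray: Image) -> Image:
    """Error-diffusion dithering of a grayscale image to black (0) and white (255)."""
    h, w = len(gray), len(gray[0])
    buf = [[float(v) for v in row] for row in gray]  # working copy that accumulates error
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            old = buf[y][x]
            new = 255 if old >= 128 else 0
            out[y][x] = new
            err = old - new
            # Spread the quantization error to neighbours that are not yet visited.
            if x + 1 < w:
                buf[y][x + 1] += err * 7 / 16
            if y + 1 < h:
                if x > 0:
                    buf[y + 1][x - 1] += err * 3 / 16
                buf[y + 1][x] += err * 5 / 16
                if x + 1 < w:
                    buf[y + 1][x + 1] += err * 1 / 16
    return out


# Usage on a hypothetical horizontal intensity ramp (no real tomato data).
ramp = [[x * 255 // 31 for x in range(32)] for _ in range(8)]
mask_110 = threshold(ramp, 110)
mask_120 = threshold(ramp, 120)
dithered = floyd_steinberg(ramp)
# The higher threshold keeps fewer pixels as "object".
print(sum(map(sum, mask_110)), sum(map(sum, mask_120)))  # 144 136
```

In practice a library routine such as OpenCV's `cv2.threshold` with `THRESH_BINARY` (which also keeps only pixels strictly above the threshold) would replace the explicit loops; they are written out here only to make the behavior of each method visible.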

2.4. Architecture for Tomato Pose Detection

- Initial stage: This stage contains a PAF branch and a heat-map branch, denoted L1 and S1, respectively. The PAF branch encodes the connections between parts of the object (their location and orientation), whereas the heat-map branch localizes the key points.

- Refinement stage 1: Like the initial stage, this stage contains a PAF branch and a heat-map branch (L2 and S2), which further refine the results of the previous stage.

- Refinement stage t+1: This is the final stage of the iterative refinement process, where t is the number of intermediate refinement stages. Each successive stage further improves localization accuracy.
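
To make the roles of the two branches concrete, the following sketch builds a ground-truth confidence map and a ground-truth PAF analytically, following the definitions of Cao et al. (OpenPose): a Gaussian exp(-||p - x||²/σ²) centered on each key point, and a unit vector field along the segment joining two connected key points. Candidate connections are then scored by integrating the PAF along the segment, approximated here by uniform sampling. In the architecture described above these maps are predicted by the S and L branches at every stage; here they are computed directly for illustration, and σ, the limb width, the number of samples and all pixel coordinates are assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]          # (x, y) in pixels
Field = List[List[Tuple[float, float]]]


def confidence_map(h: int, w: int, center: Point, sigma: float = 3.0) -> List[List[float]]:
    """Gaussian confidence map exp(-||p - c||^2 / sigma^2) that peaks at the key point."""
    cx, cy = center
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / sigma ** 2) for x in range(w)]
            for y in range(h)]


def peak(heat: List[List[float]]) -> Tuple[int, int]:
    """(x, y) of the most confident pixel."""
    _, x, y = max((v, x, y) for y, row in enumerate(heat) for x, v in enumerate(row))
    return x, y


def ground_truth_paf(h: int, w: int, p1: Point, p2: Point, limb_width: float = 2.0) -> Field:
    """Unit vector p1->p2 on pixels within limb_width of the segment, (0, 0) elsewhere."""
    length = math.dist(p1, p2)
    vx, vy = (p2[0] - p1[0]) / length, (p2[1] - p1[1]) / length
    field = [[(0.0, 0.0)] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = x - p1[0], y - p1[1]
            along = dx * vx + dy * vy          # projection onto the segment direction
            across = abs(-dx * vy + dy * vx)   # perpendicular distance from the segment line
            if 0 <= along <= length and across <= limb_width:
                field[y][x] = (vx, vy)
    return field


def paf_score(field: Field, p1: Point, p2: Point, n_samples: int = 10) -> float:
    """Mean of PAF . (unit vector p1->p2) at points sampled along the candidate segment."""
    length = math.dist(p1, p2)
    vx, vy = (p2[0] - p1[0]) / length, (p2[1] - p1[1]) / length
    total = 0.0
    for i in range(n_samples):
        u = i / (n_samples - 1)
        x = round(p1[0] + u * (p2[0] - p1[0]))
        y = round(p1[1] + u * (p2[1] - p1[1]))
        fx, fy = field[y][x]
        total += fx * vx + fy * vy
    return total / n_samples


# Usage: hypothetical pixel positions of a calyx and a center of gravity.
calyx, cog = (20, 8), (20, 25)
heat = confidence_map(40, 40, cog)
print(peak(heat))                             # (20, 25)
paf = ground_truth_paf(40, 40, calyx, cog)
print(paf_score(paf, calyx, cog))             # 1.0
print(paf_score(paf, calyx, (5, 25)) < 0.5)   # True
```

A correctly paired calyx and center of gravity scores 1.0 because the sampled points lie on the encoded connection, whereas a wrong pairing cuts across the field and scores much lower; this is the mechanism by which PAFs can help assign key points to the right fruit when several are close together.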

3. Results

4. Discussion

- The core achievement of our research is a hybrid model that combines CNNs with PAFs and achieved high recognition accuracy on a diverse dataset. This accuracy, which remained consistent across environmental conditions and tomato varieties, is evidence of the model’s robustness and adaptability. Integrating deep learning (DL) with state-of-the-art image-processing techniques enabled our algorithm to significantly outperform existing algorithms, which marks a substantial advancement in agricultural automation.

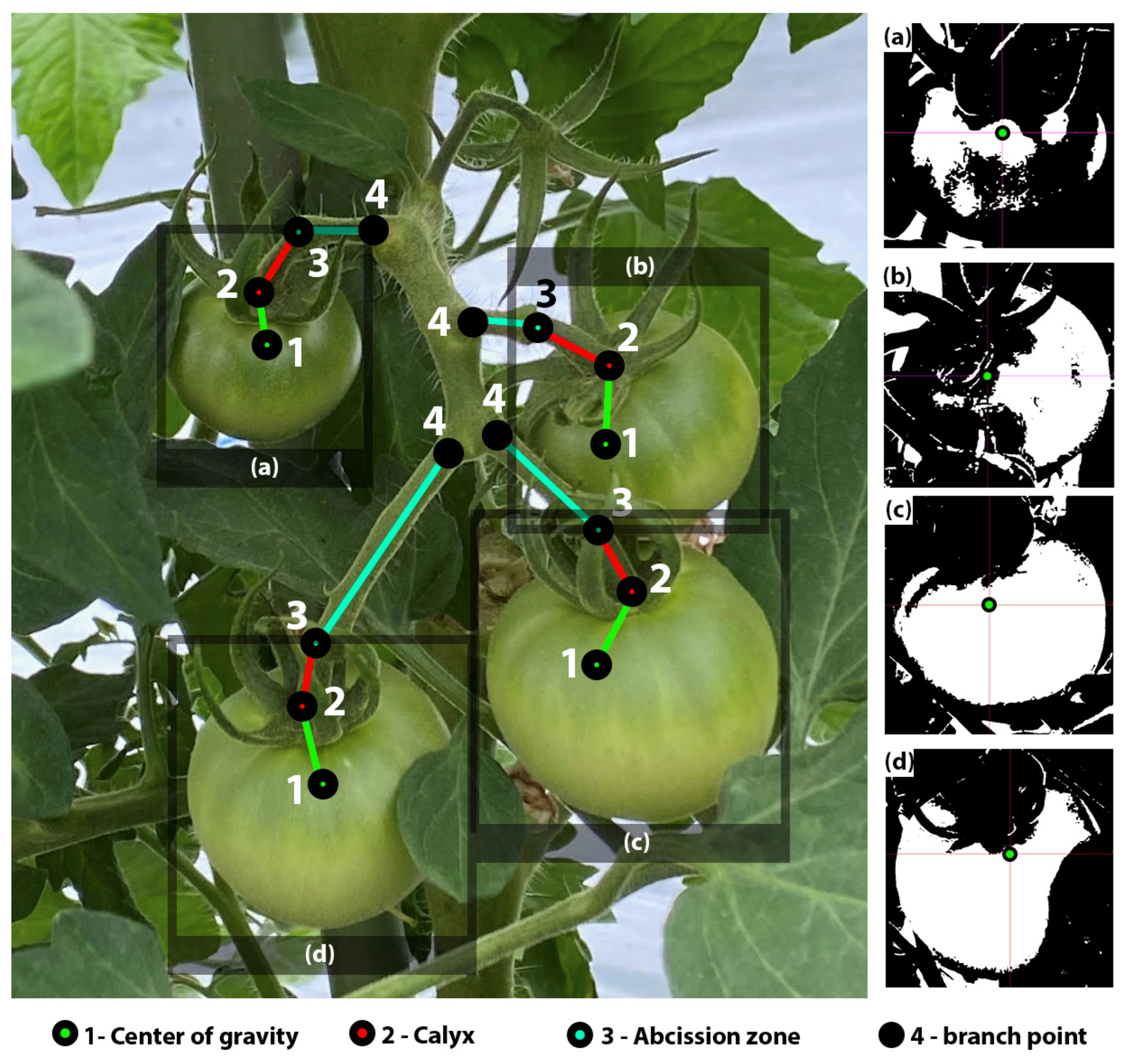

- Despite its overall success, the model had difficulty detecting key points in images containing small or clustered fruits. The errors detailed in Table 1 reveal the limitations of the current model. Branch points had the highest errors (10.6 pixels in X and 13.1 pixels in Y) because they frequently merge with the stem, which makes them a priority for future refinement. By contrast, the calyx (2.6 and 3.0 pixels) and the center of gravity (5.3 and 6.2 pixels), which are geometrically important for identifying the harvest point in robotic systems, had considerably lower errors.

- The findings have significant implications for the field of robotic harvesting. The model’s ability to accurately identify and position tomatoes paves the way for more efficient, precise, and scalable robotic harvesting solutions. This advancement could lead to a reduction in manual labor and an increase in harvesting efficiency, which are crucial for meeting the growing demands of the agricultural industry.

- Another critical aspect of our study is the model’s performance under various real-world agricultural conditions. Multispectral analysis and data augmentation enabled the model to adapt effectively to different environmental conditions and to the characteristics of different tomato varieties. This adaptability is essential for applying the technology in diverse agricultural settings.

- Our study marks a significant step forward in agricultural automation and also provides opportunities for further research. Areas for future improvement include enhancing the model’s ability to process images that contain small or clustered fruits and refining the detection of more challenging key points, such as branching points. Additionally, extending the model’s application to other crops and agricultural practices could broaden its impact on the industry.
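
Table 1 reports separate X and Y pixel errors for each key point. Assuming these are mean absolute differences between predicted and annotated coordinates over the evaluation images (the exact definition is not given in this section), they could be computed as in the sketch below. The function name, the key point order and all coordinates are hypothetical and are not the paper's data.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) in pixels
# Hypothetical ordering of the four key points reported in Table 1.
KEYPOINTS = ["center_of_gravity", "calyx", "abscission_zone", "branch_point"]


def per_axis_mae(pred: List[List[Point]], gt: List[List[Point]]) -> Dict[str, Tuple[float, float]]:
    """Mean absolute X and Y error per key point over all images.

    pred[i][k] and gt[i][k] are the predicted and annotated (x, y) of key point k in image i.
    """
    n = len(gt)
    result = {}
    for k, name in enumerate(KEYPOINTS):
        ex = sum(abs(pred[i][k][0] - gt[i][k][0]) for i in range(n)) / n
        ey = sum(abs(pred[i][k][1] - gt[i][k][1]) for i in range(n)) / n
        result[name] = (ex, ey)
    return result


# Two hypothetical images with made-up coordinates.
gt = [[(50, 40), (52, 20), (53, 12), (60, 5)],
      [(80, 90), (81, 70), (82, 61), (95, 50)]]
pred = [[(51, 42), (52, 20), (56, 13), (70, 17)],
        [(83, 94), (83, 72), (83, 62), (107, 64)]]
errors = per_axis_mae(pred, gt)
print(errors["branch_point"])  # (11.0, 13.0)
```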

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Table 1. Key point localization errors (pixels) and overall average scores (%).

| Metric | Center of Gravity | Calyx | Abscission Zone | Branch Point | Average |
|---|---|---|---|---|---|
| X error (pixel) | 5.3 | 2.6 | 4.1 | 10.6 | 5.65 |
| Y error (pixel) | 6.2 | 3.0 | 4.7 | 13.1 | 6.75 |
| Overall average score (%) | 97.2 | 96.7 | 95.5 | 96.2 | 96.4 |
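
The last column of Table 1 is the unweighted mean of the four key-point columns rather than a sum. The short check below uses only the values transcribed from the table.

```python
from statistics import mean

# Rows of Table 1; columns: center of gravity, calyx, abscission zone, branch point.
rows = {
    "X (pixel)": [5.3, 2.6, 4.1, 10.6],
    "Y (pixel)": [6.2, 3.0, 4.7, 13.1],
    "Overall average score (%)": [97.2, 96.7, 95.5, 96.2],
}
for label, values in rows.items():
    print(f"{label}: {mean(values):.2f}")
# X (pixel): 5.65
# Y (pixel): 6.75
# Overall average score (%): 96.40
```

Because the X and Y entries are per-axis summaries, they cannot be converted into an exact mean Euclidean error without the per-image values.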

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Beisekenov, N.; Hasegawa, H. Advanced Preprocessing Technique for Tomato Imagery in Gravimetric Analysis Applied to Robotic Harvesting. Appl. Sci. 2024, 14, 511. https://doi.org/10.3390/app14020511
