Leather Defect Detection Based on Improved YOLOv8 Model

Abstract

1. Introduction

- (1)

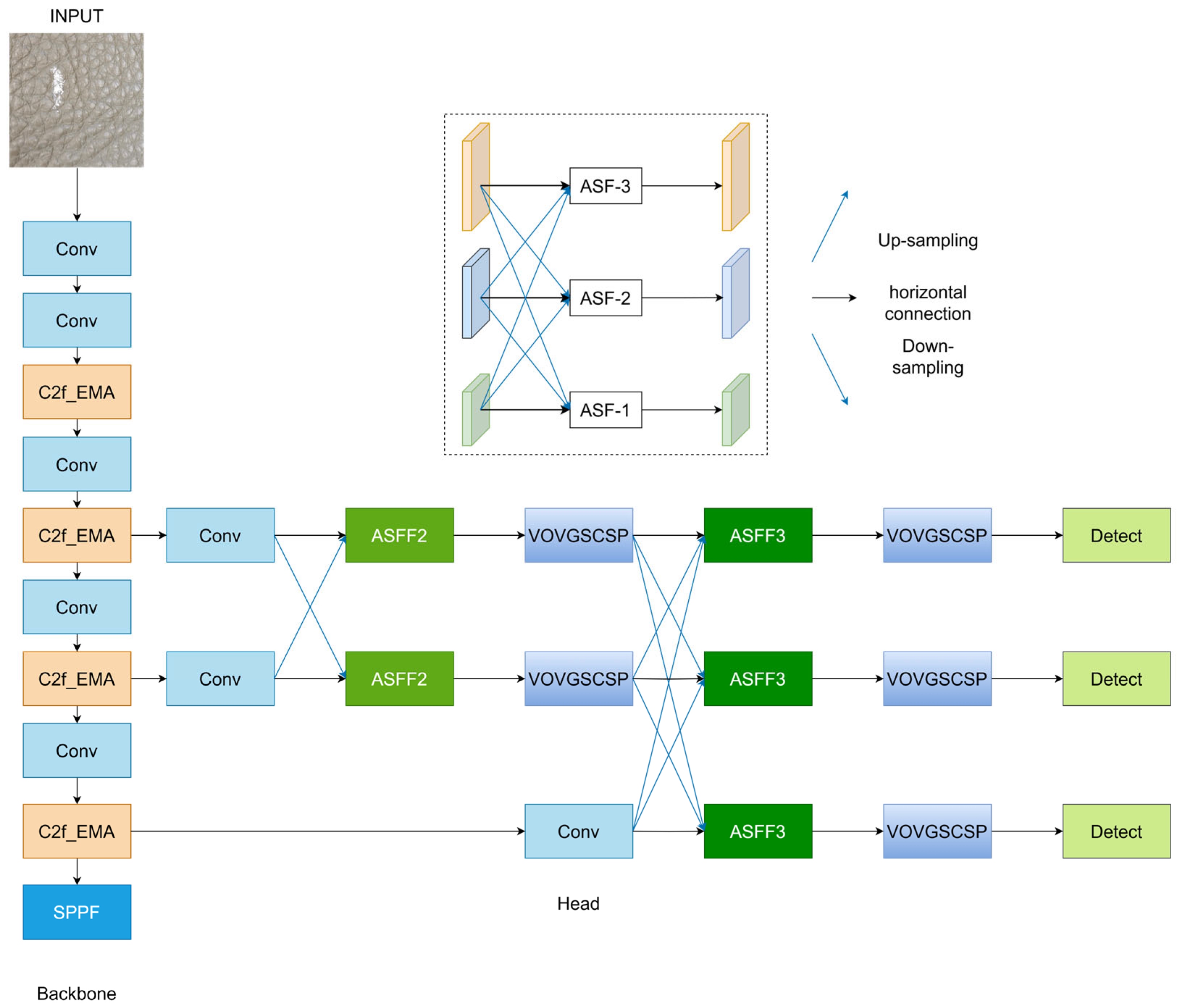

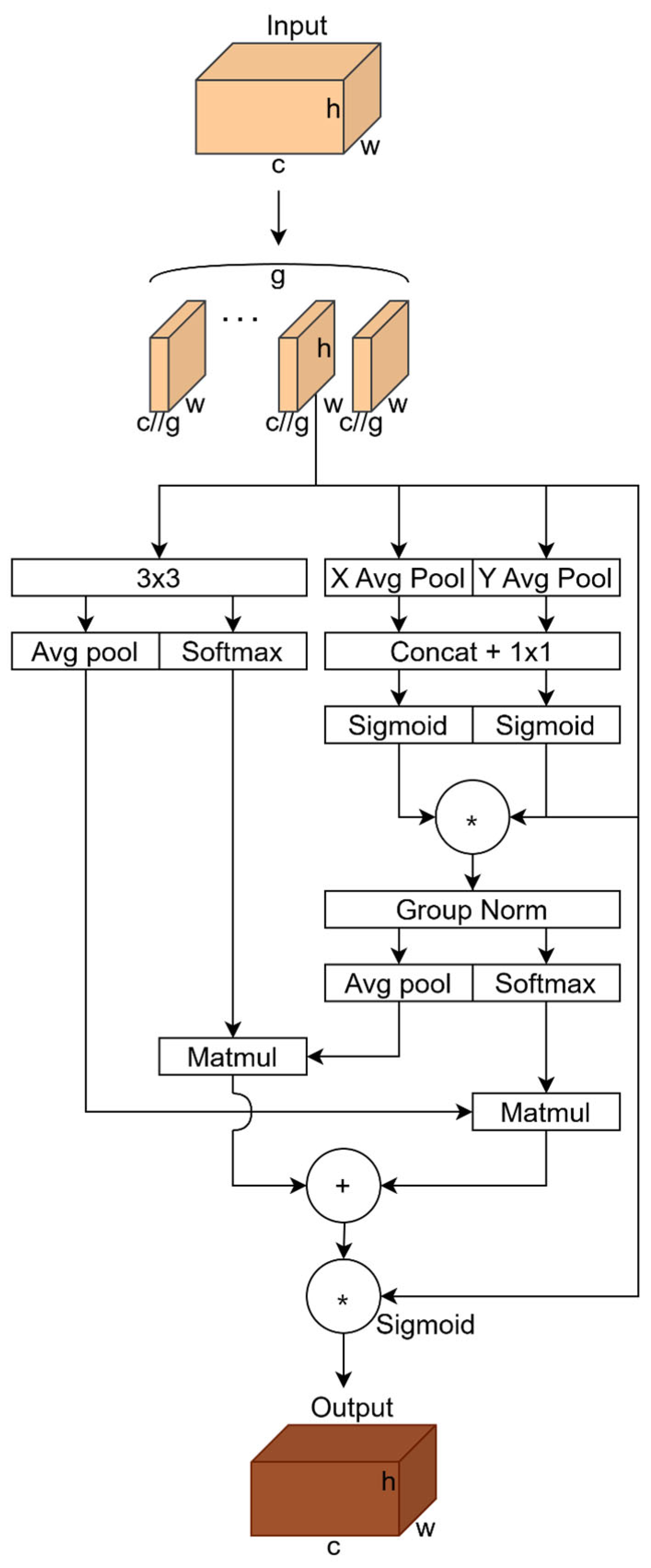

- The EMA [18] (Efficient Multi-scale Attention) mechanism has been incorporated into the backbone of the model, enabling better focus on key defect information in images and resistance to interference from leather texture.

- (2)

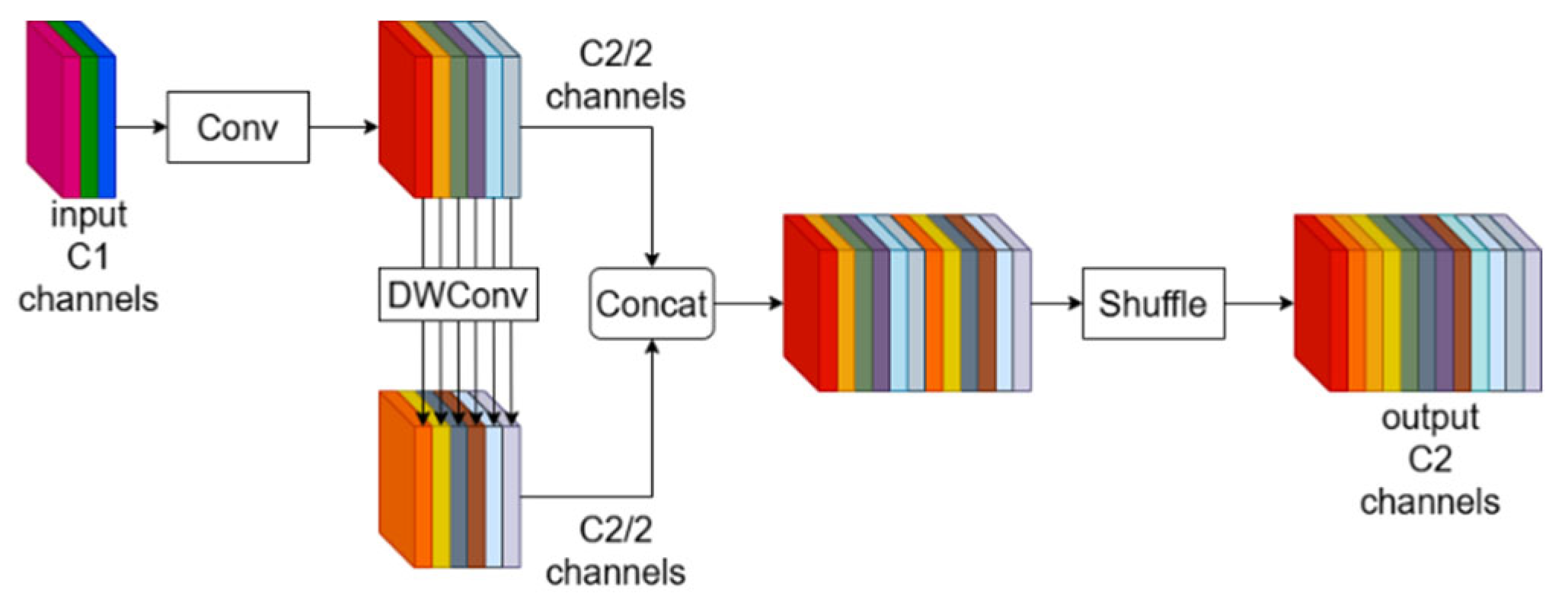

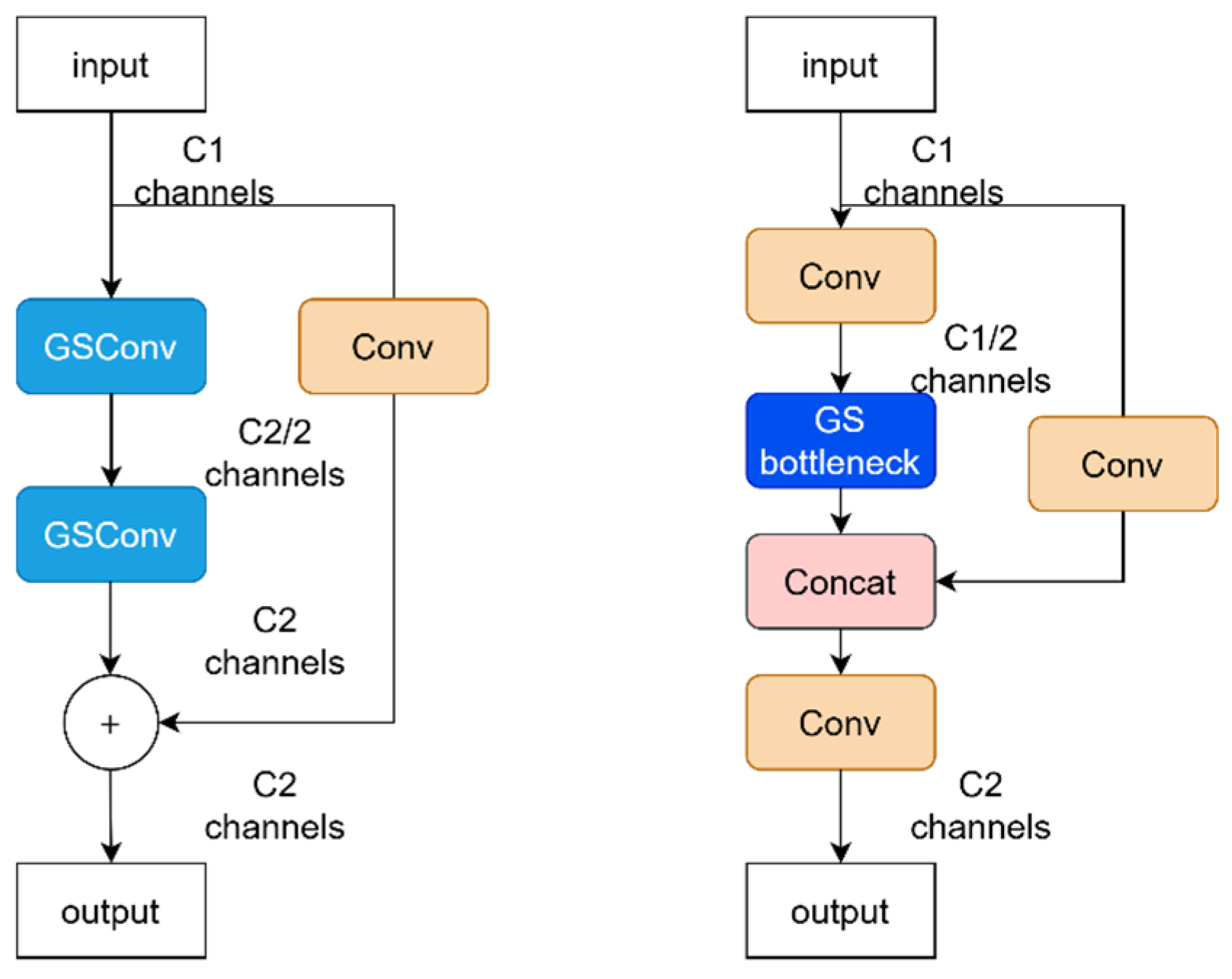

- Based on the multi-scale structure concept of AFPN (Asymptotic Feature Pyramid Network), AFPN [19] is integrated with the VOVGSCSP module containing GSConv [20] (Grouped Shuffle Convolution) into the model’s architecture, aiming to enhance the model’s multi-scale detection capability and reduce model complexity.

- (3)

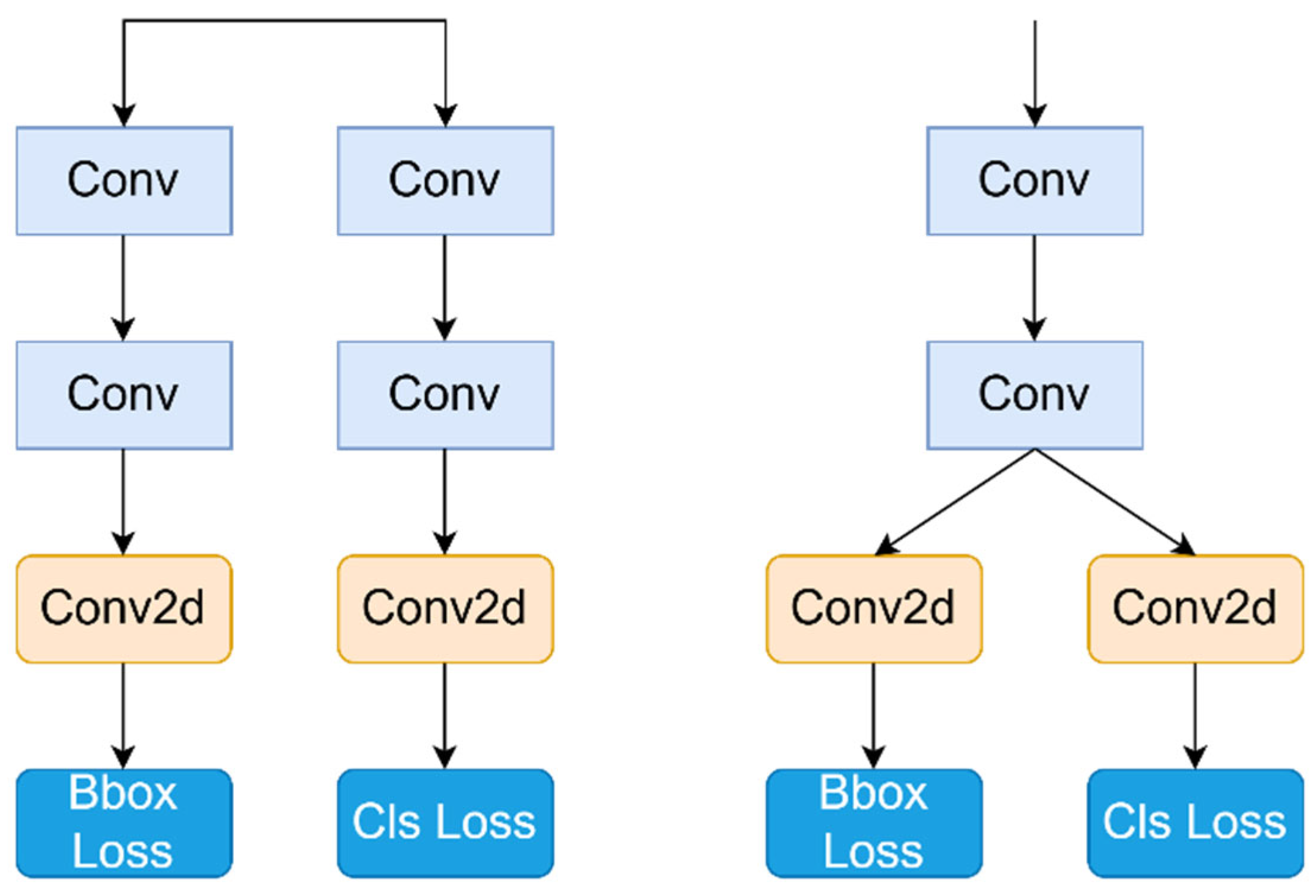

- The concept of shared convolutional layers is introduced to optimize the design of the YOLOv8 detection head, thereby reducing model parameters.

- (4) We created a well-labeled leather defect dataset covering common leather defect classes to support training of the leather defect detection model.
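The parameter savings claimed for GSConv [20] and for a shared-convolution detection head can be illustrated with simple weight counting. The sketch below is not the authors' implementation: the channel widths, the hidden width, the five-output prediction layer, and the layout of the shared head (a per-scale 1x1 projection followed by one reused stack) are all hypothetical choices made for illustration, and normalization layers, biases, and the box-regression branch are ignored. The GSConv count assumes the structure described in the slim-neck paper: a dense convolution to half the output channels followed by a 5x5 depthwise convolution on that half.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weight count of a bias-free k x k 2-D convolution."""
    if c_in % groups or c_out % groups:
        raise ValueError("channels must be divisible by groups")
    return (c_in // groups) * c_out * k * k


def gsconv_params(c_in, c_out, k):
    """GSConv as assumed here: a dense conv to c_out/2 channels, then a 5x5
    depthwise conv on that half; the two halves are concatenated and
    channel-shuffled (the shuffle itself has no weights)."""
    half = c_out // 2
    return conv_params(c_in, half, k) + conv_params(half, half, 5, groups=half)


def separate_head_params(in_channels, hidden, n_out):
    """Each scale owns a full branch: 3x3 -> 3x3 -> 1x1 prediction."""
    return sum(
        conv_params(c, hidden, 3)
        + conv_params(hidden, hidden, 3)
        + conv_params(hidden, n_out, 1)
        for c in in_channels
    )


def shared_head_params(in_channels, hidden, n_out):
    """Hypothetical shared variant: a per-scale 1x1 projection to a common
    width, then ONE 3x3 -> 3x3 -> 1x1 stack reused by every scale."""
    projections = sum(conv_params(c, hidden, 1) for c in in_channels)
    shared_stack = 2 * conv_params(hidden, hidden, 3) + conv_params(hidden, n_out, 1)
    return projections + shared_stack


# Hypothetical widths for three feature-map scales and five defect classes.
IN_CHANNELS = (64, 128, 256)
HIDDEN = 64
N_OUT = 5

if __name__ == "__main__":
    print("standard 3x3 conv, 128 -> 128:", conv_params(128, 128, 3))
    print("GSConv 3x3,        128 -> 128:", gsconv_params(128, 128, 3))
    print("separate heads:", separate_head_params(IN_CHANNELS, HIDDEN, N_OUT))
    print("shared head:   ", shared_head_params(IN_CHANNELS, HIDDEN, N_OUT))
```

Under these assumptions GSConv needs roughly half the weights of a standard convolution of the same shape, and sharing the head's convolutions across scales removes most of the per-scale duplication. The actual savings in the paper depend on its real layer configuration.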

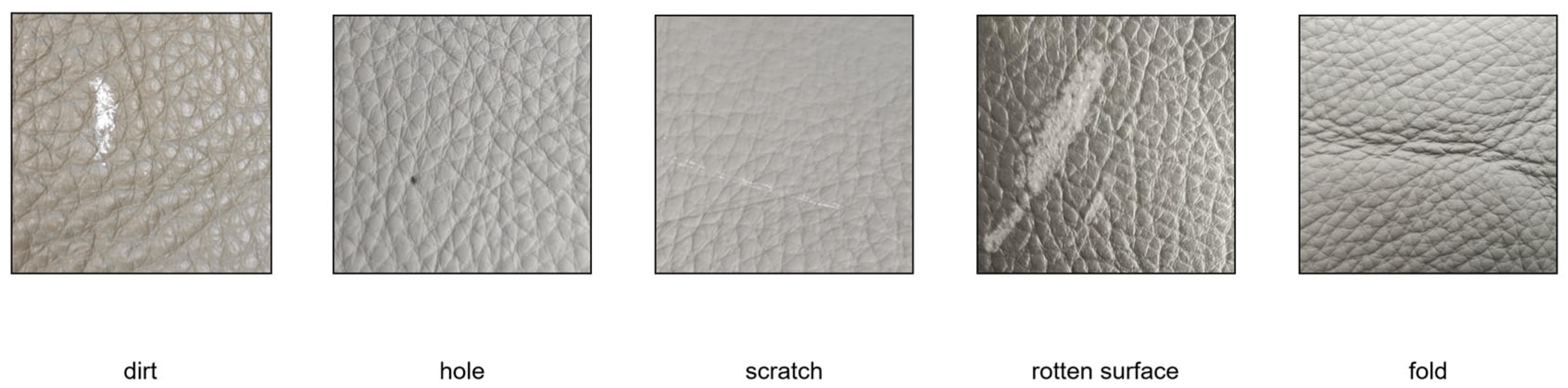

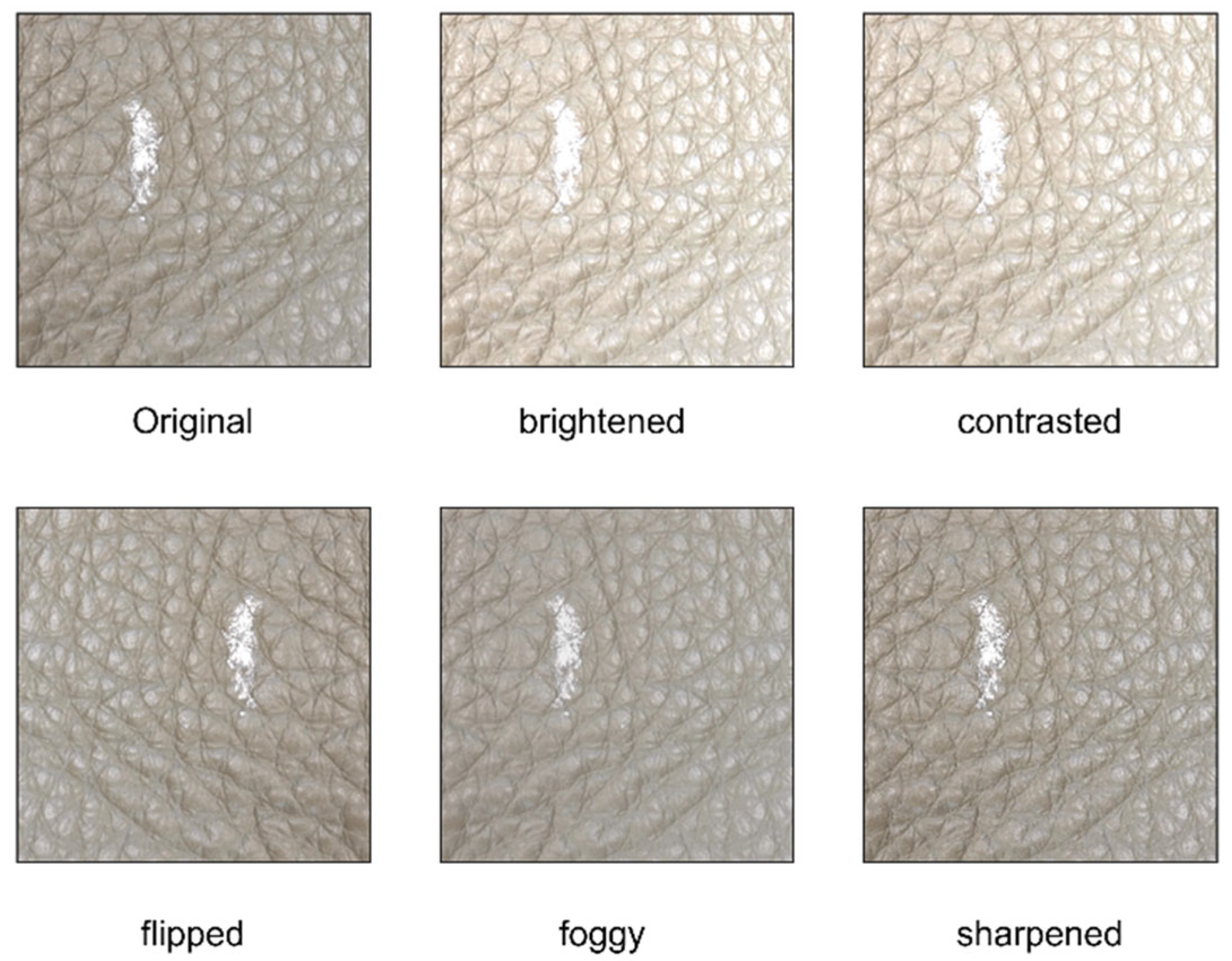

2. Dataset Preprocessing

2.1. Image Acquisition

2.2. Data Tagging and Enhancement

3. YOLOv8

4. Improved YOLOv8-AGE Algorithm

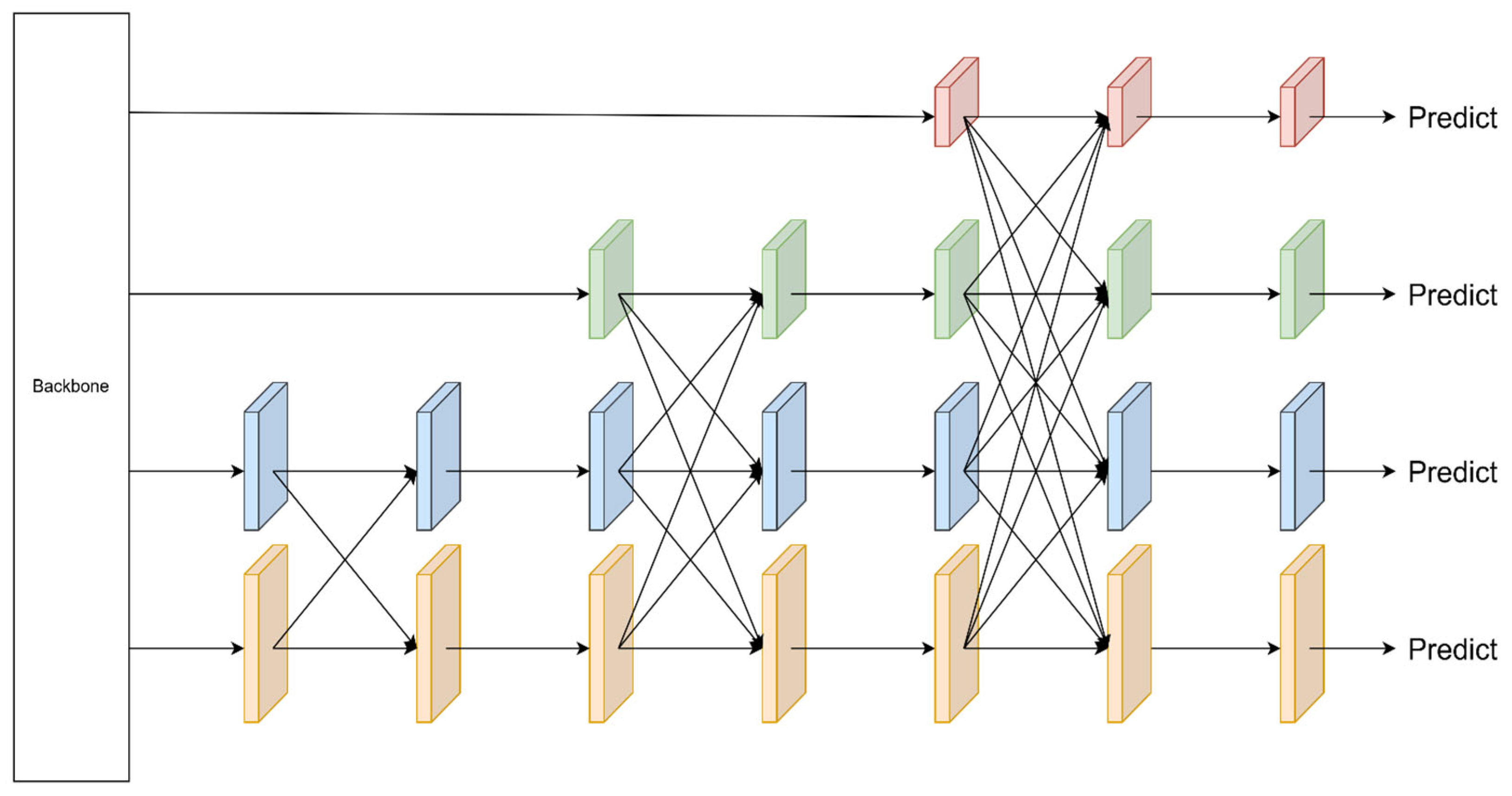

4.1. AFPN

4.2. VoV-GSCSP Module

4.3. EMA

4.4. Head for Shared Parameters

5. Experiments and Results

5.1. Experimental Configuration

5.2. Assessment of Indicators

5.3. Ablation Experiments

5.4. Comparative Experiment

5.5. Other Discussions

- (1) When introducing EMA, we explored two ways of placing it within the C2f module. Model A places EMA before the first bottleneck of the C2f module; Model B places it before the final convolution. The two placements produced different results. With no other modules added, Model A reached an mAP50 of 0.961, higher than Model B's 0.956, while Model A's parameter count and GFLOPs (3.05 M and 8.4) were lower than Model B's (3.09 M and 9.3). The Model A placement is therefore better in both accuracy and computational cost, and this study adopts it.

- (2) To validate the generalization capability of the model, we collected an additional 200 leather defect images that were not part of the original leather defect dataset. On this small dataset, the original YOLOv8n achieved an mAP of 0.915, while YOLOv8-AGE achieved an mAP of 0.937, indicating that the improved algorithm has good detection capability and generalization performance.

- (3) Compared with existing solutions, our enhanced model has several advantages. Relative to the vision transformer (ViT)-based leather defect detection method of Smith et al. [10], it has significantly fewer parameters and lower computational complexity while maintaining comparable accuracy, making it more efficient and less resource-intensive. Compared with the robust leather defect detection method based on trainable guided attention proposed by Aslam et al. [11], it has a lower parameter count and retains the deployment simplicity of the YOLO series, which is crucial for practical industrial implementation.
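The size of the differences discussed in items (1) and (2) is easier to judge as relative changes. The sketch below is a reading aid only: it takes Model A as 3.05 M parameters and 8.4 GFLOPs and Model B as 3.09 M parameters and 9.3 GFLOPs (the reading that agrees with the EMA row of the ablation table), and the helper name `relative_change` is introduced here for illustration.

```python
def relative_change(new, old):
    """Signed change of `new` relative to `old`, as a fraction."""
    return (new - old) / old


# EMA placement inside C2f, figures as reported in item (1).
model_a = {"mAP50": 0.961, "params_m": 3.05, "gflops": 8.4}
model_b = {"mAP50": 0.956, "params_m": 3.09, "gflops": 9.3}

# Generalization test on the 200 additional images, item (2).
map_yolov8n = 0.915
map_yolov8_age = 0.937

if __name__ == "__main__":
    for key in model_a:
        change = relative_change(model_a[key], model_b[key])
        print(f"{key}: A={model_a[key]}  B={model_b[key]}  A vs. B: {change:+.1%}")
    gain = map_yolov8_age - map_yolov8n
    print(f"mAP gain on held-out images: {gain:+.3f} "
          f"({relative_change(map_yolov8_age, map_yolov8n):+.1%})")
```

With these figures the accuracy gap between the two placements is small, and the clearest difference is in GFLOPs.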

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Gan, Y.S.; Liong, S.T.; Zheng, D.; Xia, Y.; Wu, S.; Lin, M.; Huang, Y.C. Detection and localization of defects on natural leather surfaces. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 1785–1799.

- [2] Aslam, M.; Khan, T.M.; Naqvi, S.S.; Holmes, G.; Naffa, R. On the Application of Automated Machine Vision for Leather Defect Inspection and Grading: A Survey. IEEE Access 2019, 7, 176065–176086.

- [3] Limas-Serafim, A.F. Natural images segmentation for patterns recognition using edges pyramids and its application to the leather defects. In Proceedings of the IECON ’93—19th Annual Conference of IEEE Industrial Electronics, Maui, HI, USA, 15–19 November 1993; Volume 1353, pp. 1357–1360.

- [4] Lovergine, F.P.; Branca, A.; Attolico, G.; Distante, A. Leather inspection by oriented texture analysis with a morphological approach. In Proceedings of the International Conference on Image Processing, Dublin, Ireland, 26–29 October 1997; Volume 662, pp. 669–671.

- [5] Branca, A.; Lovergine, F.P.; Attolico, G.; Distante, A. Defect detection on leather by oriented singularities. In Proceedings of the Computer Analysis of Images and Patterns, Berlin, Heidelberg, 10–12 September 1997; pp. 223–230.

- [6] Sobral, J.L. Leather Inspection Based on Wavelets. In Proceedings of the Pattern Recognition and Image Analysis, Berlin/Heidelberg, Germany, 7–9 June 2005; pp. 682–688.

- [7] Jawahar, M.; Babu, N.K.C.; Vani, K. Leather texture classification using wavelet feature extraction technique. In Proceedings of the 2014 IEEE International Conference on Computational Intelligence and Computing Research, Coimbatore, India, 18–20 December 2014; pp. 1–4.

- [8] He, F.Q.; Wang, W.; Chen, Z.C. Automatic visual inspection for leather manufacture. Key Eng. Mater. 2006, 326, 469–472.

- [9] Haralick, R.M. Statistical and structural approaches to texture. Proc. IEEE 1979, 67, 786–804.

- [10] Smith, A.D.; Du, S.; Kurien, A. Vision Transformers for Anomaly Detection and Localisation in Leather Surface Defect Classification Based on Low-Resolution Images and a Small Dataset. Appl. Sci. 2023, 13, 8716.

- [11] Aslam, M.; Naqvi, S.S.; Khan, T.M.; Holmes, G.; Naffa, R. Trainable guided attention based robust leather defect detection. Eng. Appl. Artif. Intell. 2023, 124, 106438.

- [12] Deng, J.; Liu, J.; Wu, C.; Zhong, T.; Gu, G.; Ling, B.W.K. A Novel Framework for Classifying Leather Surface Defects Based on a Parameter Optimized Residual Network. IEEE Access 2020, 8, 192109–192118.

- [13] Chen, Z.; Zhu, Q.; Zhou, X.; Deng, J.; Song, W. Experimental Study on YOLO-Based Leather Surface Defect Detection. IEEE Access 2024, 12, 32830–32848.

- [14] Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- [15] Jocher, G. YOLOv5 by Ultralytics. February 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 15 March 2024).

- [16] Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- [17] Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics. February 2023. Available online: https://github.com/ultralytics/ (accessed on 15 March 2024).

- [18] Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient Multi-Scale Attention Module with Cross-Spatial Learning. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- [19] Yang, G.; Lei, J.; Zhu, Z.; Cheng, S.; Feng, Z.; Liang, R. AFPN: Asymptotic feature pyramid network for object detection. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Maui, HI, USA, 1–4 October 2023; pp. 2184–2189.

- [20] Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicles. arXiv 2022, arXiv:2206.02424.

- [21] Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- [22] Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- [23] Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. pp. 21–37.

| Dirt | Hole | Scratch | Rotten Surface | Fold |
|---|---|---|---|---|
| 1655 | 865 | 905 | 1080 | 820 |
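The class counts above can be summarized as shares of the whole dataset and as an imbalance ratio. The sketch below is a minimal illustration: the extracted table does not say whether the counts are images or labeled instances, so they are treated simply as per-class label counts, and the variable names are introduced here.

```python
# Per-class counts from the dataset table (unit assumed: labels per class).
counts = {
    "Dirt": 1655,
    "Hole": 865,
    "Scratch": 905,
    "Rotten Surface": 1080,
    "Fold": 820,
}

total = sum(counts.values())
shares = {name: n / total for name, n in counts.items()}

# Ratio between the most and least frequent class.
imbalance_ratio = max(counts.values()) / min(counts.values())

if __name__ == "__main__":
    print("total:", total)
    for name, share in shares.items():
        print(f"{name:15s} {counts[name]:5d}  {share:.1%}")
    print(f"largest / smallest class: {imbalance_ratio:.2f}")
```

The dataset is moderately imbalanced: the most frequent class (Dirt) has about twice as many entries as the least frequent one (Fold).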

| Options | Configuration |
|---|---|
| Operating system | Windows 11 |
| CPU | AMD Ryzen 7 6800H (AMD, Santa Clara, USA) |
| GPU | NVIDIA GeForce RTX 3050 Ti |
| Deep Learning Framework | PyTorch 2.2.2 |
| Python | 3.10 |
| CUDA | 12.1 |

| Added modules | mAP50 | mAP95 | Params (M) | GFLOPs | FPS |
|---|---|---|---|---|---|
| None (baseline) | 0.932 | 0.734 | 3.01 | 8.1 | 66 |
| AFPN | 0.937 | 0.737 | 2.59 | 8.2 | 66 |
| VoV-GSCSP | 0.940 | 0.744 | 2.85 | 7.3 | 70 |
| EMA | 0.943 | 0.734 | 3.05 | 8.4 | 55 |
| Target | 0.932 | 0.731 | 2.80 | 6.9 | 69 |
| Two modules | 0.941 | 0.741 | 2.89 | 8.4 | 69 |
| Two modules | 0.942 | 0.747 | 2.93 | 7.7 | 64 |
| Three modules | 0.946 | 0.741 | 2.93 | 8.7 | 65 |
| AFPN + VoV-GSCSP + EMA + Target | 0.945 | 0.735 | 2.73 | 7.5 | 68 |

| Model | Hole | Scratch | Dirt | Rotten Surface | Fold |
|---|---|---|---|---|---|
| YOLOv8n (Ultralytics Corporation, MD, USA) | 0.960 | 0.894 | 0.977 | 0.951 | 0.878 |
| YOLOv8n + AFPN | 0.964 | 0.903 | 0.980 | 0.955 | 0.883 |
| YOLOv8n + GSConv | 0.969 | 0.907 | 0.982 | 0.960 | 0.882 |
| YOLOv8n + EMA | 0.965 | 0.913 | 0.981 | 0.959 | 0.897 |
| Our model | 0.971 | 0.908 | 0.983 | 0.962 | 0.901 |
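The per-class values above can be tied back to the ablation results: mAP is the unweighted mean of the per-class average precision. The sketch below assumes the per-class numbers are AP at an IoU threshold of 0.5 (the extracted table does not state the metric) and checks that their mean reproduces the mAP50 reported for the same configurations in the ablation table. The dictionary names are introduced for illustration.

```python
from statistics import mean

# Per-class values in table order: Hole, Scratch, Dirt, Rotten Surface, Fold.
per_class = {
    "YOLOv8n":          [0.960, 0.894, 0.977, 0.951, 0.878],
    "YOLOv8n + AFPN":   [0.964, 0.903, 0.980, 0.955, 0.883],
    "YOLOv8n + GSConv": [0.969, 0.907, 0.982, 0.960, 0.882],
    "YOLOv8n + EMA":    [0.965, 0.913, 0.981, 0.959, 0.897],
    "Our model":        [0.971, 0.908, 0.983, 0.962, 0.901],
}

# mAP50 values reported for the matching rows of the ablation table.
reported_map50 = {
    "YOLOv8n": 0.932,
    "YOLOv8n + AFPN": 0.937,
    "YOLOv8n + GSConv": 0.940,
    "YOLOv8n + EMA": 0.943,
    "Our model": 0.945,
}

# mAP as the unweighted mean over the five defect classes.
computed_map50 = {name: mean(values) for name, values in per_class.items()}

if __name__ == "__main__":
    for name, value in computed_map50.items():
        print(f"{name:18s} mean AP = {value:.3f}  reported mAP50 = {reported_map50[name]:.3f}")
```

The means agree with the ablation table to three decimals, which supports reading the per-class columns as AP50.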

| Model | P | R | mAP50 | Params (M) | GFLOPs | FPS |
|---|---|---|---|---|---|---|
| YOLOv5n | 0.938 | 0.920 | 0.950 | 2.50 | 7.1 | 76 |
| YOLOv6n | 0.944 | 0.923 | 0.947 | 4.63 | 11.34 | 62 |
| YOLOv7-tiny | 0.879 | 0.919 | 0.937 | 6.01 | 13.0 | 73 |
| YOLOv8n | 0.957 | 0.920 | 0.954 | 3.01 | 8.1 | 66 |
| YOLOv8s | 0.953 | 0.939 | 0.963 | 11.13 | 28.4 | 47 |
| SSD | 0.881 | 0.587 | 0.790 | 3.98 | 5.61 | 81 |
| Our Model | 0.958 | 0.939 | 0.967 | 2.73 | 7.5 | 68 |
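To read the comparison in terms of cost, the proposed model's figures can be expressed relative to the YOLOv8n baseline and to the larger YOLOv8s. The sketch below only re-derives ratios from the table above; the function name `relative_change` and the data layout are illustrative choices, not part of the paper.

```python
def relative_change(new, old):
    """Signed change of `new` relative to `old`, as a fraction."""
    return (new - old) / old


# Rows taken from the comparison table: mAP50, parameters (M), GFLOPs, FPS.
models = {
    "YOLOv8n":   {"mAP50": 0.954, "params_m": 3.01,  "gflops": 8.1,  "fps": 66},
    "YOLOv8s":   {"mAP50": 0.963, "params_m": 11.13, "gflops": 28.4, "fps": 47},
    "Our Model": {"mAP50": 0.967, "params_m": 2.73,  "gflops": 7.5,  "fps": 68},
}

ours = models["Our Model"]
baseline = models["YOLOv8n"]

param_change = relative_change(ours["params_m"], baseline["params_m"])
gflops_change = relative_change(ours["gflops"], baseline["gflops"])
map_gain = ours["mAP50"] - baseline["mAP50"]
size_ratio_vs_s = models["YOLOv8s"]["params_m"] / ours["params_m"]

if __name__ == "__main__":
    print(f"parameters vs. YOLOv8n: {param_change:+.1%}")
    print(f"GFLOPs vs. YOLOv8n:     {gflops_change:+.1%}")
    print(f"mAP50 vs. YOLOv8n:      {map_gain:+.3f}")
    print(f"YOLOv8s has {size_ratio_vs_s:.1f}x the parameters of our model")
```

On these figures the proposed model is both smaller and slightly more accurate than YOLOv8n, and it exceeds YOLOv8s in mAP50 with roughly a quarter of its parameters.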

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Peng, Z.; Zhang, C.; Wei, W. Leather Defect Detection Based on Improved YOLOv8 Model. Appl. Sci. 2024, 14, 11566. https://doi.org/10.3390/app142411566