An Experimental Demonstration of 2D-Multiple-Input-Multiple-Output-Based Deep Learning for Optical Camera Communication

Abstract

1. Introduction

- Wireless communication often relies on RF waves because of their long range. However, RF has two notable drawbacks: detrimental effects on human health and susceptibility to electromagnetic interference (EMI), both of which can limit its deployment. In contrast, no negative association between visible light waves and human health is known [7,8], provided that the illumination is flicker-free.

- The bandwidth of visible light is many times larger than that of RF. Owing to this wide bandwidth, optical wireless communication (OWC) is a compelling option for high-capacity communication systems because it can carry much larger volumes of data.

- Visible light waves are also more secure and more efficient when line-of-sight transmission is used in the channel model.

- The IEEE 802.15.7-2011 standard furnishes comprehensive details regarding VLC modes [9].

- The modulation schemes of the optical camera communication (OCC) system allow data from diverse light sources to be captured with an image sensor or camera.

- LED identification functions as a low-speed communication scheme in which LEDs are used as photodiodes, with data transmission rates less than 1 Mbps.

- High-speed Li-Fi: By using high-rate photodiode modulation schemes, the data rate is significantly increased to more than 1 Mbps at the physical layer.

2. Contributions of this Research

- Support for most types of commercial cameras: effective exposure time control makes the proposed method compatible with both global-shutter and rolling-shutter cameras. Moreover, a region-of-interest (RoI) detection algorithm allows easy integration with widely available CCTV systems, which makes the application more convenient to deploy.

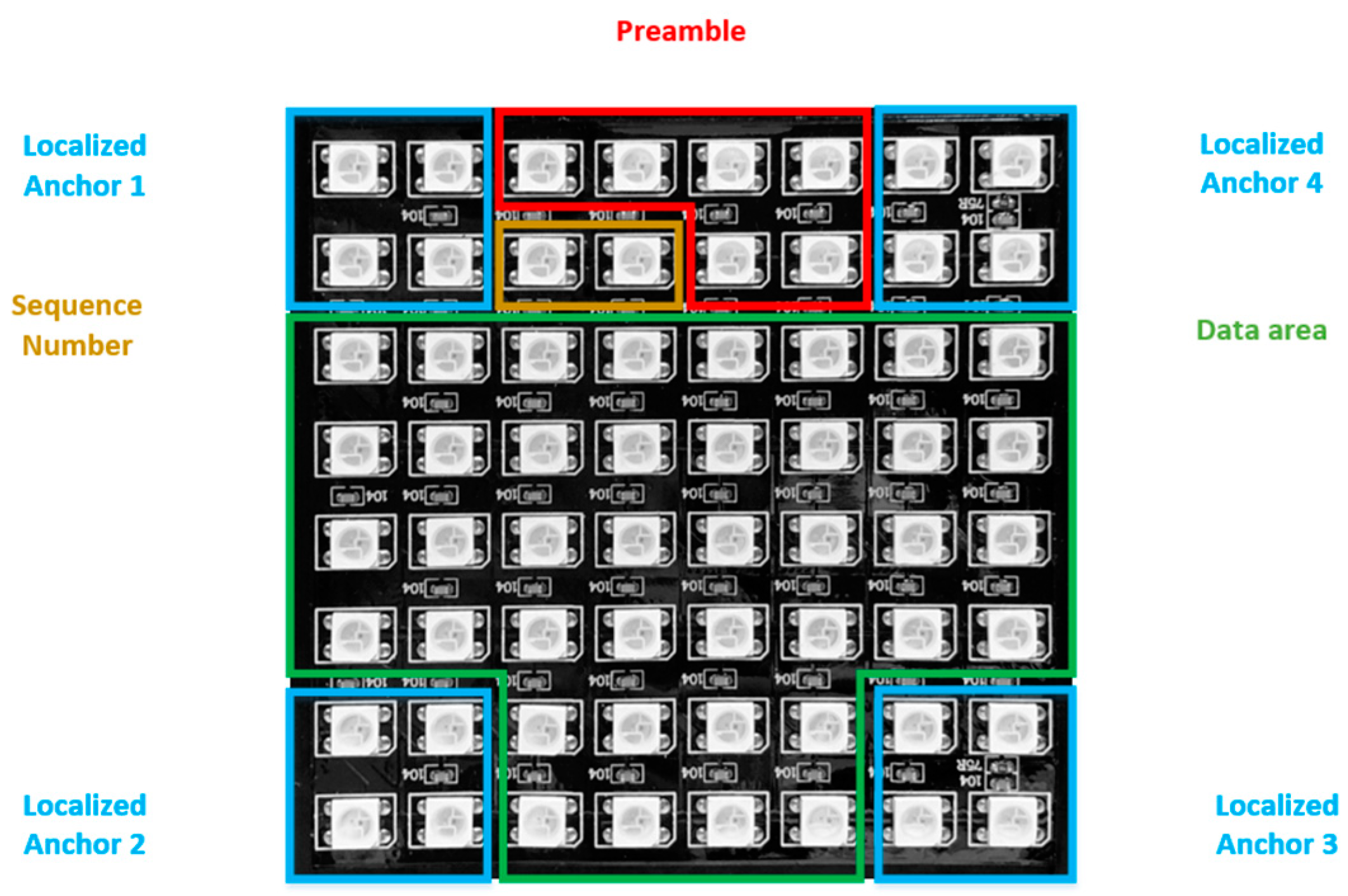

- Rotation support: The proposed scheme uses a matrix transpose for recovery, taking advantage of the fact that rotation operates on the bits. Through a well-designed arrangement of the four corners of the LED array, receivers can readily detect and compensate for rotations across the full 360°. Rotation support is important for IoT systems because it lets cameras receive data from any angle in real-world environments.

- Frame rate variation support: It is commonly assumed that the frame rate listed in the specifications of global-shutter and rolling-shutter cameras is constant, for example, 60 or 500 fps. In practice, however, the frame rate fluctuates during camera operation. This complicates data decoding at the receiver and synchronization between the transmitter and receiver of the OCC system. To determine whether a sub-packet was dropped during transmission or reception, we include a sequence number (SN) in each sub-packet.

- Data merger algorithm: In the proposed method, every sub-packet carries an SN that identifies its position in the sequence. The SN length can be adjusted according to the length of the data packet to optimize system performance.

- Detection of missing packets: To combine data packets from two successive images, we embed an SN in each packet. Comparing the SNs in two consecutive images makes missing packets straightforward to detect, provided that the SN length is adequate for the task.

- Mobility support: The 2D-MIMO method transfers data using an LED array of individual LEDs arranged in a matrix, such as 8 × 8 or 16 × 16. When the LEDs move at a certain speed and distance, conventional approaches such as the RoI method become harder to use for LED recognition because the image of the LED array captured by the camera is often noisy and blurred. YOLO algorithms, by contrast, are well known as effective methods for real-time object detection, especially for fast-moving objects such as cars and people. Therefore, we implemented the YOLOv8 algorithm in our OCC system to improve LED array detection in mobile scenarios.

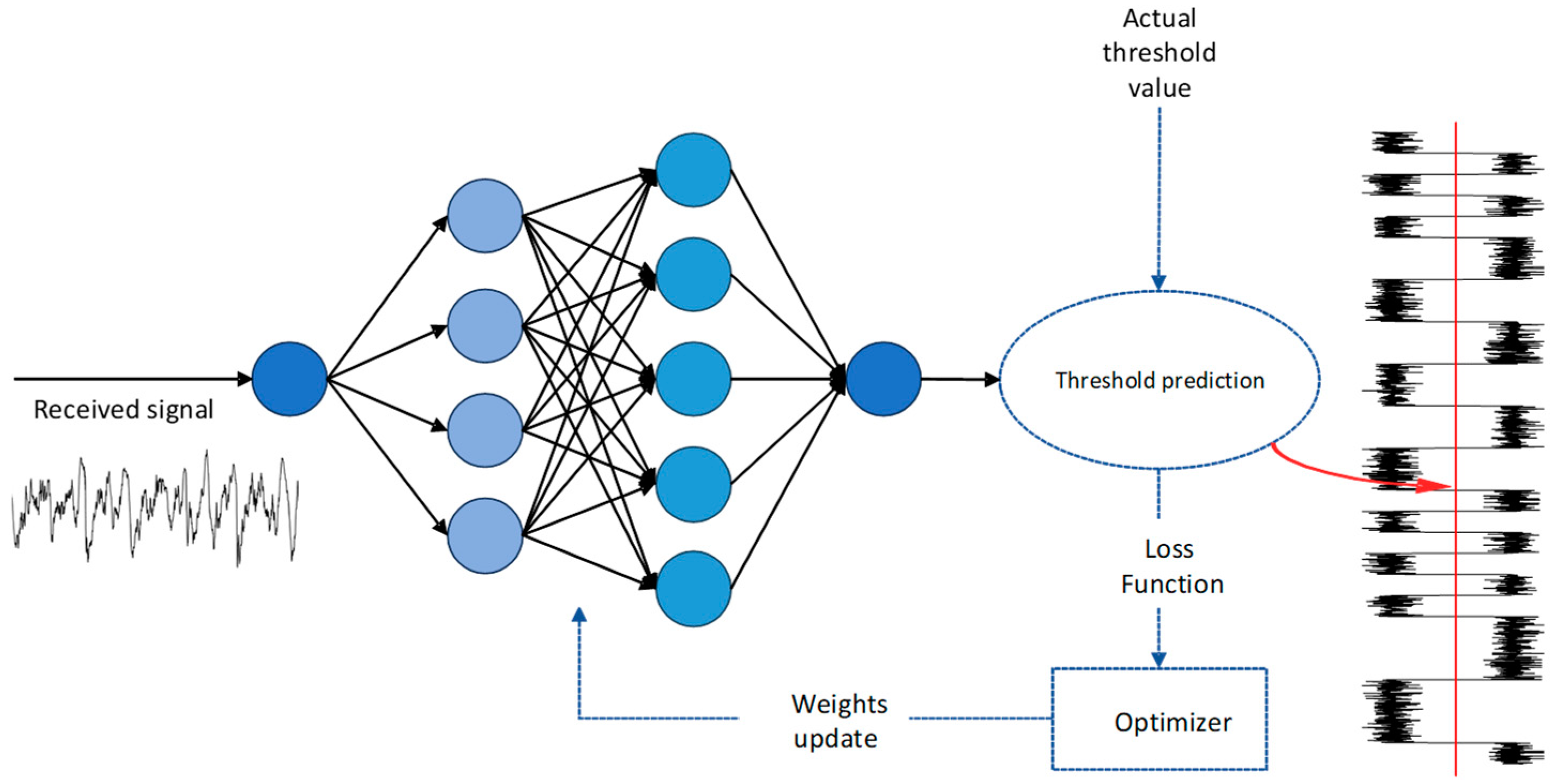
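The rotation recovery described under "Rotation support" can be illustrated with a minimal sketch. It assumes the receiver has already sampled the LED array into a square bit matrix, so only rotations in 90° steps remain to be resolved; an arbitrary in-plane angle would first need the array to be rectified from its detected corners. The anchor layout (`ANCHOR`, one corner OFF and three ON) and all function names are hypothetical and are not taken from the paper. Each 90° step is implemented as a row reversal followed by a transpose.

```python
import random

# Hypothetical anchor layout (an assumption, not the paper's actual pattern):
# top-left corner OFF (0), the other three corners ON (1).
ANCHOR = (0, 1, 1, 1)  # (top-left, top-right, bottom-left, bottom-right)


def rotate_cw(grid):
    """Rotate a square bit matrix 90 degrees clockwise: reverse rows, then transpose."""
    return [list(row) for row in zip(*grid[::-1])]


def corners(grid):
    """Return the four corner bits as (TL, TR, BL, BR)."""
    return (grid[0][0], grid[0][-1], grid[-1][0], grid[-1][-1])


def undo_rotation(grid):
    """Rotate in 90-degree steps until the corner bits match the anchor."""
    candidate = grid
    for _ in range(4):
        if corners(candidate) == ANCHOR:
            return candidate
        candidate = rotate_cw(candidate)
    raise ValueError("anchor pattern not found; the frame may be corrupted")


def make_frame(seed=0, size=8):
    """Build a random test frame whose corners carry the hypothetical anchor."""
    rng = random.Random(seed)
    grid = [[rng.randint(0, 1) for _ in range(size)] for _ in range(size)]
    grid[0][0], grid[0][-1], grid[-1][0], grid[-1][-1] = ANCHOR
    return grid


sent = make_frame()
received = rotate_cw(sent)            # the camera sees the array rotated by 90 degrees
recovered = undo_rotation(received)
print(recovered == sent)
```

Because only one corner differs from the other three in this sketch, exactly one of the four orientations matches the anchor, which is what makes the recovery unambiguous.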

- Reducing BER: In a mobile environment, the LED image captured by the camera is often blurred, which makes it harder for traditional decoding techniques to distinguish between bits 0 and 1 and raises the bit error rate (BER) of the modulation scheme. To reduce the BER, we propose a deep-learning decoder trained on a dataset that we collected manually under various conditions, in particular with the LED array moving at different speeds.
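The sequence-number mechanism used for frame rate variation support, data merging, and missing-packet detection can be sketched as follows. This is an illustrative sketch rather than the authors' algorithm: it assumes each captured image yields one `(sn, payload)` pair, that the SN wraps around modulo `2 ** sn_bits`, and that a repeated SN means the same packet was captured twice. The function names are hypothetical.

```python
def missing_between(prev_sn, cur_sn, sn_bits):
    """Number of packets lost between two consecutively received SNs.

    The SN wraps around modulo 2**sn_bits, so a loss of a full SN cycle
    cannot be distinguished from a repeated packet. This is why the SN
    length has to be adequate for the expected loss burst.
    """
    modulus = 1 << sn_bits
    gap = (cur_sn - prev_sn) % modulus
    return 0 if gap == 0 else gap - 1


def merge_packets(frames, sn_bits):
    """Merge (sn, payload) pairs given in capture order.

    Returns the payloads with repeated captures removed and the number of
    packets inferred to be missing.
    """
    merged = []
    lost = 0
    prev_sn = None
    for sn, payload in frames:
        if prev_sn is not None:
            if sn == prev_sn:
                continue  # same packet seen in two consecutive images
            lost += missing_between(prev_sn, sn, sn_bits)
        merged.append(payload)
        prev_sn = sn
    return merged, lost


# Packet "A" is captured twice and the packet with SN 2 is never seen.
captured = [(0, "A"), (0, "A"), (1, "B"), (3, "D"), (0, "E")]
payloads, lost = merge_packets(captured, sn_bits=2)
print(payloads, lost)
```

A longer SN tolerates longer loss bursts but leaves fewer bits for payload in each sub-packet, which matches the trade-off behind making the SN length adjustable.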

3. System Architecture

3.1. Channel Coding

3.2. Deep Learning for Tracking and Detecting LEDs

3.3. Deep Learning for Decoding Data

4. Implementation

4.1. Noise Modeling and Computation of Pixel per Bit to Spectral Noise Density

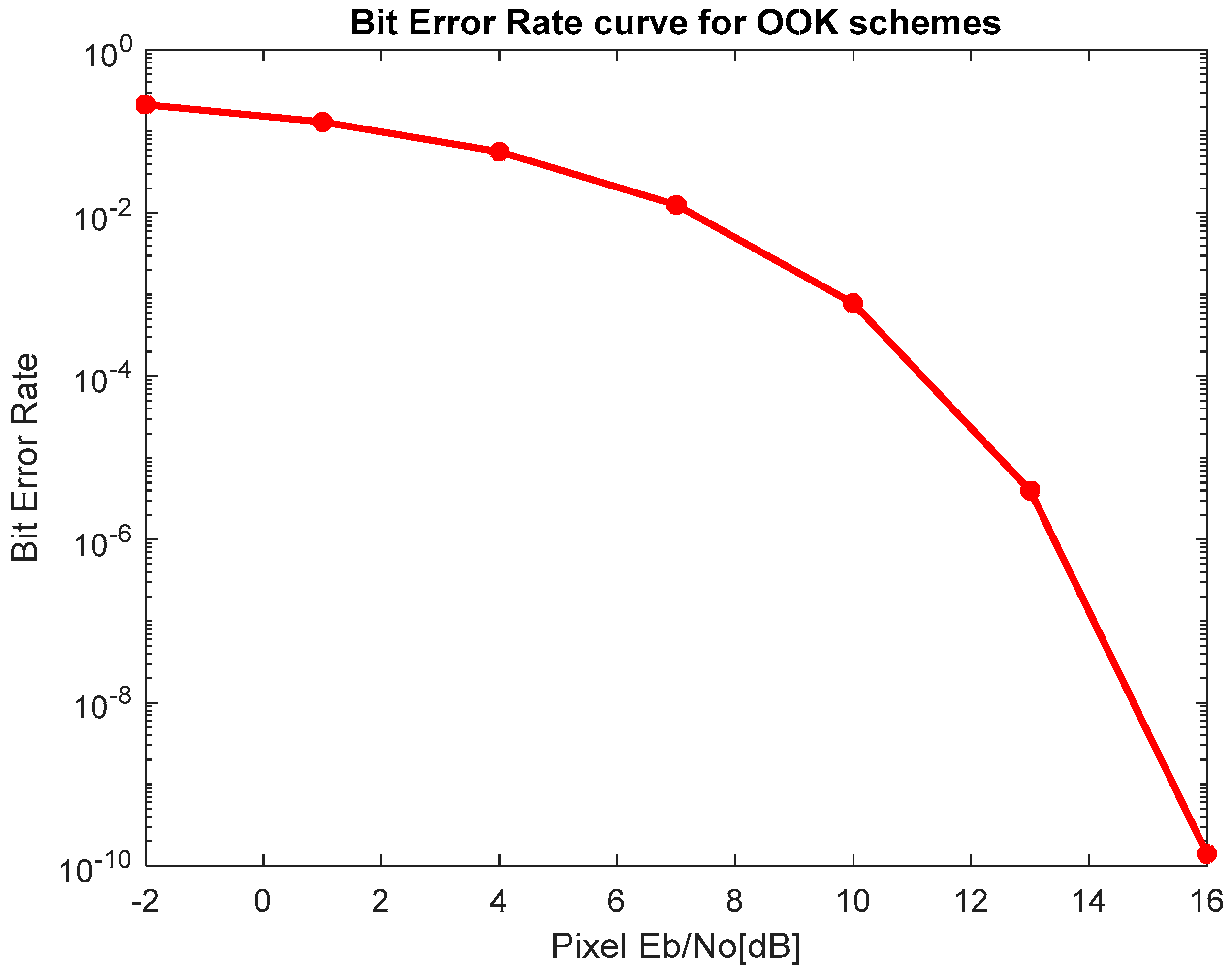

4.2. BER Estimation for Optical OOK Modulation

4.3. Proposed Modulation Scheme

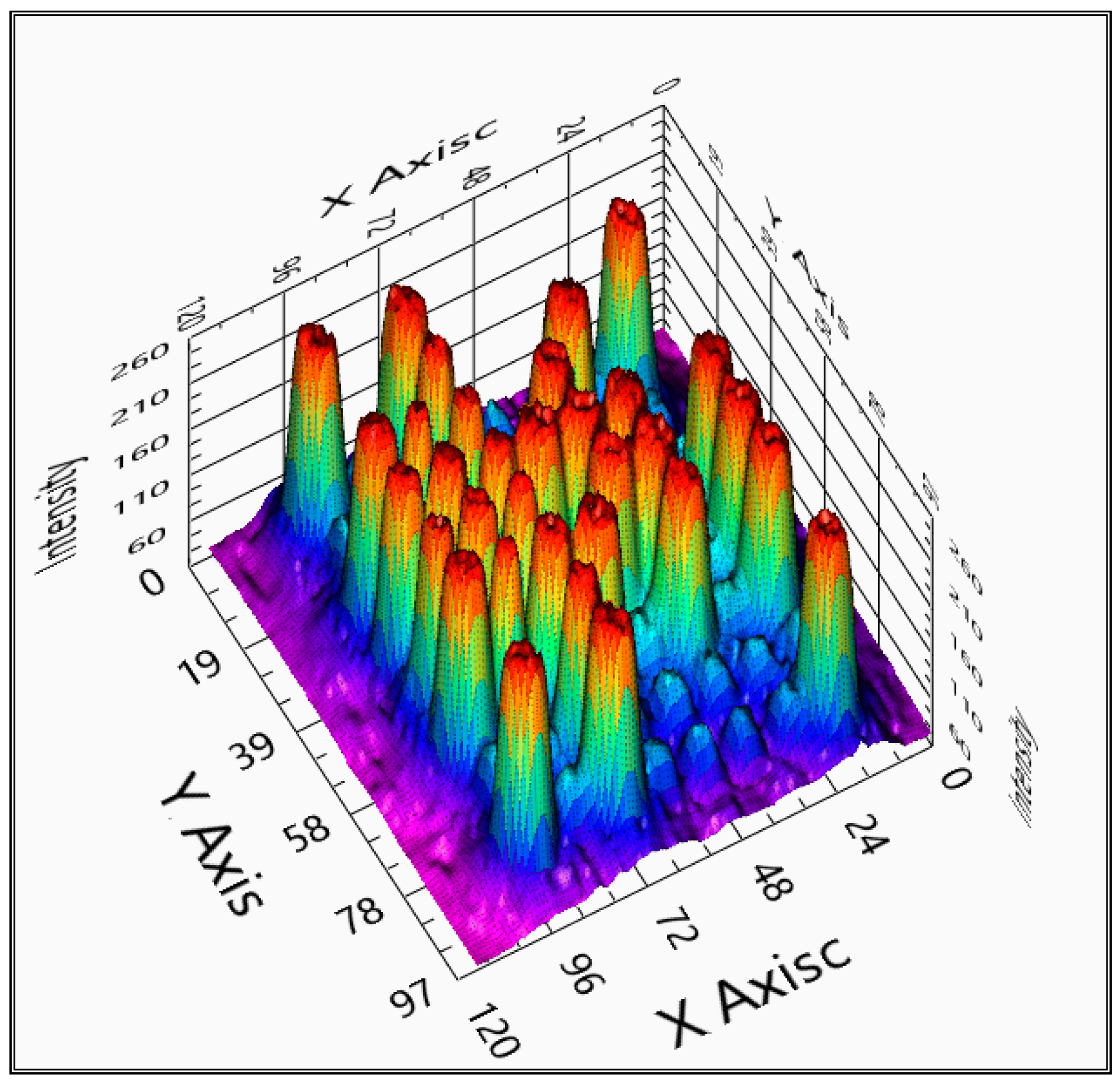

4.3.1. Oversampling

4.3.2. Undersampling

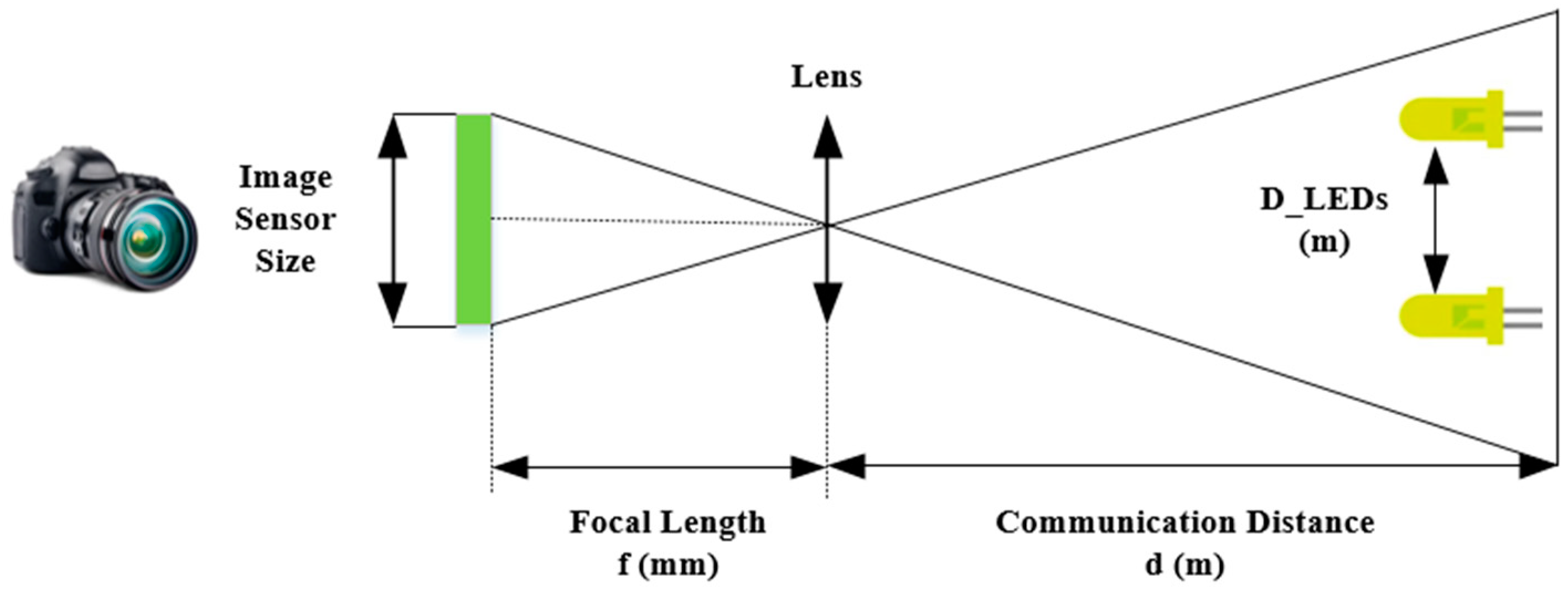

4.3.3. Communication Distance Calculation

4.3.4. Implementation Results

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lee, Y.L.; Qin, D.; Wang, L.-C.; Sim, G.H. 6G Massive Radio Access Networks: Key Applications, Requirements and Challenges. IEEE Open J. Veh. Technol. 2021, 2, 54–66.

- Chettri, L.; Bera, R. A Comprehensive Survey on Internet of Things (IoT) toward 5G Wireless Systems. IEEE Internet Things J. 2020, 7, 16–32.

- Seferagić, A.; Famaey, J.; De Poorter, E.; Hoebeke, J. Survey on Wireless Technology Trade-Offs for the Industrial Internet of Things. Sensors 2020, 20, 488.

- Okechukwu, C.E. Effects of Radiofrequency Electromagnetic Field Exposure on Neurophysiology. Adv. Hum. Biol. 2020, 10, 6–10.

- Nguyen, H.; Nguyen, V.; Nguyen, C.; Bui, V.; Jang, Y. Design and Implementation of 2D MIMO-Based Optical Camera Communication Using a Light-Emitting Diode Array for Long-Range Monitoring System. Sensors 2021, 21, 3023.

- Saeed, N.; Guo, S.; Park, K.-H.; Al-Naffouri, T.Y.; Alouini, M.-S. Optical camera communications: Survey, use cases, challenges, and future trends. Phys. Commun. 2019, 37, 100900.

- Boulogeorgos, A.-A.A.; Trevlakis, S.E.; Chatzidiamantis, N.D. Optical Wireless Communications for In-Body and Transdermal Biomedical Applications. IEEE Commun. Mag. 2021, 59, 119–125.

- Karunatilaka, D.; Zafar, F.; Kalavally, V.; Parthiban, R. LED Based Indoor Visible Light Communications: State of the Art. IEEE Commun. Surv. Tutor. 2015, 17, 1649–1678.

- IEEE Std 802.15.7-2011; IEEE Standard for Local and Metropolitan Area Networks—Part 15.7: Short-Range Wireless Optical Communication Using Visible Light. IEEE-SA: Piscataway, NJ, USA, 2011.

- IEEE Std 802.15.7-2018; IEEE Standard for Local and Metropolitan Area Networks—Part 15.7: Short-Range Optical Wireless Communications. IEEE-SA: Piscataway, NJ, USA, 2018.

- Rahimpour, S.; Kiyani, M.; Hodges, S.E.; Turner, D.A. Deep brain stimulation and electromagnetic interference. Clin. Neurol. Neurosurg. 2021, 203, 106577.

- Ong, Z.; Rachim, V.P.; Chung, W.-Y. Novel Electromagnetic-Interference-Free Indoor Environment Monitoring System by Mobile Camera-Image-Sensor-Based VLC. IEEE Photonics J. 2017, 9, 1–11.

- Haas, H.; Yin, L.; Wang, Y.; Chen, C. What is LiFi? J. Light. Technol. 2016, 34, 1533–1544.

- Sheoran, S.; Garg, P.; Sharma, P.K. Location tracking for indoor VLC systems using intelligent photodiode receiver. IET Commun. 2018, 12, 1589–1594.

- Haas, H.; Yin, L.; Chen, C.; Videv, S.; Parol, D.; Poves, E.; Alshaer, H.; Islim, M.S. Introduction to indoor networking concepts and challenges in LiFi. J. Opt. Commun. Netw. 2020, 12, A190–A203.

- Khalighi, M.A.; Uysal, M. Survey on Free Space Optical Communication: A Communication Theory Perspective. IEEE Commun. Surv. Tutor. 2014, 16, 2231–2258.

- Ali, A.Y.; Zhang, Z.; Zong, B. Pulse position and shape modulation for visible light communication system. In Proceedings of the 2014 International Conference on Electromagnetics in Advanced Applications (ICEAA), Palm Beach, Aruba, 3–8 August 2014; pp. 546–549.

- Videv, S.; Haas, H. Practical space shift keying VLC system. In Proceedings of the 2014 IEEE Wireless Communications and Networking Conference (WCNC), Istanbul, Turkey, 6–9 April 2014; pp. 405–409.

- Deng, P.; Kavehrad, M. Real-time software-defined single-carrier QAM MIMO visible light communication system. In Proceedings of the 2016 Integrated Communications Navigation and Surveillance (ICNS), Herndon, VA, USA, 19–21 April 2016; pp. 5A3-1–5A3-11.

- Cai, H.-B.; Zhang, J.; Zhu, Y.-J.; Zhang, J.-K.; Yang, X. Optimal Constellation Design for Indoor 2 × 2 MIMO Visible Light Communications. IEEE Commun. Lett. 2016, 20, 264–267.

- Nguyen, V.L.; Tran, D.H.; Nguyen, H.; Jang, Y.M. An Experimental Demonstration of MIMO C-OOK Scheme Based on Deep Learning for Optical Camera Communication System. Appl. Sci. 2022, 12, 6935.

- Nguyen, H.; Thieu, M.D.; Pham, T.L.; Nguyen, H.; Jang, Y.M. The impact of camera parameters on optical camera communication. In Proceedings of the International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Jeju Island, Republic of Korea, 11–13 February 2019.

- Ayyash, M.; Elgala, H.; Khreishah, A.; Jungnickel, V.; Little, T.; Shao, S.; Rahaim, M.; Schulz, D.; Hilt, J.; Freund, R. Coexistence of WiFi and LiFi toward 5G: Concepts, opportunities, and challenges. IEEE Commun. Mag. 2016, 54, 64–71.

- Huang, W.; Tian, P.; Xu, Z. Design and implementation of a real-time CIM-MIMO optical camera communication system. Opt. Express 2016, 24, 24567–24579.

- Teli, S.R.; Matus, V.; Zvanovec, S.; Perez-Jimenez, R.; Vitek, S.; Ghassemlooy, Z. Optical Camera Communications for IoT–Rolling-Shutter Based MIMO Scheme with Grouped LED Array Transmitter. Sensors 2020, 20, 3361.

- Isa, I.S.; Rosli, M.S.A.; Yusof, U.K.; Maruzuki, M.I.F.; Sulaiman, S.N. Optimizing the Hyperparameter Tuning of YOLOv5 for Underwater Detection. IEEE Access 2022, 10, 52818–52831.

- Guan, W.; Li, J.; Wen, S.; Zhang, X.; Ye, Y.; Zheng, J.; Jiang, J. The Detection and Recognition of RGB-LED-ID Based on Visible Light Communication using Convolutional Neural Network. Appl. Sci. 2019, 9, 1400.

- Hsieh, C.-H.; Lin, D.-C.; Wang, C.-J.; Chen, Z.-T.; Liaw, J.-J. Real-Time Car Detection and Driving Safety Alarm System With Google Tensorflow Object Detection API. In Proceedings of the 2019 International Conference on Machine Learning and Cybernetics (ICMLC), Kobe, Japan, 7–10 July 2019; pp. 1–4.

- Nguyen, V.H.; Thieu, M.D.; Nguyen, H.; Jang, Y.M. Design and Implementation of the MIMO–COOK Scheme Using an Image Sensor for Long-Range Communication. Sensors 2020, 20, 2258.

- Lin, H.; Si, J.; Abousleman, G.P. Region-of-interest detection and its application to image segmentation and compression. In Proceedings of the 2007 International Conference on Integration of Knowledge Intensive Multi-Agent Systems, Waltham, MA, USA, 30 April–3 May 2007.

- Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object detection using YOLO: Challenges, architectural successors, datasets and applications. Multimed. Tools Appl. 2023, 82, 9243–9275.

| Transmitter side | | |
|---|---|---|
| LED array size | 8 × 8 | 16 × 16 |
| Number of LEDs | 64 | 256 |
| Power supply | 5 V, 2 W | 5 V, 5 W |
| FEC | Hamming (11/15) | |
| Packet rate | 60 packets/s | |
| Receiver side | | |
| Camera | Point Grey rolling-shutter camera | |
| Throughput | | |
| Uncoded bit rate | 3.840 kbps | 15.360 kbps |
| Coded bit rate | 2.816 kbps | 11.264 kbps |
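The throughput figures in the table can be reproduced with simple arithmetic. The sketch below assumes that each LED carries one bit per packet and that the Hamming (11/15) entry means 11 payload bits per 15 transmitted bits. Both readings are inferences from the tabulated numbers rather than statements from the text, and the function name is hypothetical.

```python
def bit_rates(num_leds, packets_per_second, data_bits=11, codeword_bits=15):
    """Return (uncoded_bps, coded_bps), assuming one bit per LED per packet."""
    uncoded_bps = num_leds * packets_per_second
    # Only data_bits out of every codeword_bits transmitted bits carry payload.
    # Integer division is exact for the array sizes used here.
    coded_bps = uncoded_bps * data_bits // codeword_bits
    return uncoded_bps, coded_bps


for side in (8, 16):
    uncoded, coded = bit_rates(side * side, packets_per_second=60)
    print(f"{side} x {side}: uncoded {uncoded / 1000:.3f} kbps, "
          f"coded {coded / 1000:.3f} kbps")
```

Under these assumptions the 8 × 8 array gives 64 × 60 = 3840 bit/s uncoded and 3840 × 11/15 = 2816 bit/s coded, and the 16 × 16 array gives 15,360 bit/s and 11,264 bit/s, which agrees with the table.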


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Le, D.T.A.; Nguyen, H.; Jang, Y.M. An Experimental Demonstration of 2D-Multiple-Input-Multiple-Output-Based Deep Learning for Optical Camera Communication. Appl. Sci. 2024, 14, 1003. https://doi.org/10.3390/app14031003
