Abstract

Pattern recognition based on the myoelectric activity associated with arm motions is an effective control method for myoelectric prostheses. Individuals with transhumeral amputation face significant challenges in controlling their prostheses: muscle activation varies with arm position, which markedly reduces the accuracy of motion pattern recognition and contributes to a high rejection rate of prosthetic devices. To achieve high accuracy and arm position stability in upper-arm motion recognition, we propose Deep Adversarial Inception Domain Adaptation (DAIDA), a framework built on the Inception feature module that enhances the generalization ability of the model. Surface electromyography (sEMG) signals were collected from 10 healthy subjects and two transhumeral amputees while they performed hand, wrist, and elbow motions at three arm positions. The recognition performance of different feature modules was compared, and accurate recognition of upper-arm motions was achieved with the Inception C module, with a recognition accuracy of 90.70% ± 9.27%. Validation was then performed using data from different arm positions as the source and target domains. Compared with direct use of a convolutional neural network (CNN), the recognition accuracy on untrained arm positions increased by 75.71 percentage points (p < 0.05), reaching 91.25% ± 6.59%. Similarly, in testing scenarios involving multiple arm positions, recognition accuracy improved significantly, exceeding 90% for both healthy subjects and transhumeral amputees.

1. Introduction

Human arm amputation causes substantial functional and muscle loss for amputees. The loss of a hand or arm through amputation greatly decreases an individual's quality of life [1]. Amputees sometimes vividly experience the presence of their missing limb and voluntarily execute “real” motions with it [2]. This form of motor execution, termed phantom limb movement (PLM), has its own underlying neurophysiological mechanisms, and the associated muscle activity varies with the type of movement being executed [3]. On this basis, pattern-recognition-based myoelectric prostheses can recognize specific movement patterns from myoelectric signals acquired from the residual limb through electrodes placed around the arm, linking the signals directly to the intended movements and thereby making prosthetic control more intuitive [4,5,6,7,8,9,10,11].

However, transhumeral (TH, above-elbow) amputees suffer greater loss of muscle and function than transradial (TR, below-elbow) amputees. Operating a prosthesis demands considerable physical or mental effort from TH amputees because it requires using arm muscles that are not naturally involved in the intended motions, which results in inaccurate and unreliable recognition. Such prostheses are difficult to learn and frustrating to use [11], leading to a higher rejection rate than TR prostheses [12,13].

Enriching the signal sources, through high-density electrodes, filtering techniques and signal fusion, has become one of the main research directions for improving pattern recognition performance. High-density electrodes cover a larger muscle area and provide rich feature information [14]. Filtering techniques improve the spatial resolution of EMG signals by reducing crosstalk between surface EMG signals [15]. Methods for monitoring brain activity, such as electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS), are non-invasive and have relatively short time constants, and they have proven to be strong candidates for additional inputs to human–machine device control [16]. Fusing brain activity information with the limited EMG signals can effectively classify the motor intentions of amputees. Although these methods have yielded satisfactory research results, the device complexity required to acquire multiple types of signals limits their use in practical applications.

In pattern recognition, traditional machine learning methods rely heavily on hand-crafted features and capture only shallow features, which limits their accuracy. Previous research has shown that deep learning is a viable alternative: it can learn feature representations for classification tasks without domain-specific knowledge and outperforms traditional machine learning in recognition performance [17].

However, when subjects perform hand movements at different arm positions, the performance of pattern recognition control is inevitably affected. Several studies have shown that arm position increases classification errors when training data come from one arm position and test data from another [18,19,20,21,22]. Existing studies typically train on data from multiple arm positions, relying on datasets or feature sets large enough to cover the variation. However, this approach often still fails to achieve the desired performance and places a significant burden on model updates.

To address the above issues, our research objective is to explore an algorithmic framework that can accurately recognize upper arm movements at different arm positions. In order to achieve this objective, a domain adversarial neural network named the Deep Adversarial Inception Domain Adaptation (DAIDA) is proposed. The main contributions of this paper are highlighted as follows:

- Leveraging the Inception module, we incorporated parallel convolutional kernels of different sizes to enhance the feature-capturing capability of the model, helping it discern the nuanced details crucial for upper arm motion recognition. In addition, our framework carries out its two training phases (pretraining and retraining) without any structural modification. These two phases eliminate heterogeneous, position-dependent features so that good motion recognition performance is maintained at a new arm position.

- To validate the effectiveness of the proposed model, we conducted experiments involving both able-bodied subjects and transhumeral amputees. The results demonstrate the model's motion recognition accuracy and stability at different arm elevation positions, which is promising for the advancement of myoelectric interfaces.

2. Methods

This section describes the proposed algorithmic framework for accurate upper arm movement recognition that remains stable under arm position changes in transhumeral amputees. First, an experimental protocol was developed to acquire sEMG signals of upper arm movements at common arm positions, and the acquired signals were preprocessed as model inputs. Next, the CNN architecture was selected by evaluating parallel convolutional structures with several evaluation metrics. Finally, by combining domain adaptation with adversarial training, we propose the DAIDA framework and evaluate it under several training scenarios to address the domain shift caused by arm position changes.

2.1. Data Acquisition

A total of 12 subjects were recruited for the experiment, including 10 able-bodied subjects and 2 transhumeral amputees. The experiment was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. The able-bodied subjects were adults with no musculoskeletal disorders or other serious health problems and with good physical functioning. The eligibility criteria for the amputee subjects were preservation of the major upper arm muscles after amputation; good control of the phantom arm, with repeatable phantom arm movements of an amplitude greater than 20 degrees; and absence of phantom arm pain. As part of a joint project of the National Key Research and Development Program of China, the experiment was approved by the Ethics Committee of Shenzhen Institute of Advanced Technology, Chinese Academy of Sciences (YSB-2022-Y07026). All participants provided written informed consent to participate in this study. Information on the able-bodied and amputee subjects is shown in Table 1 and Table 2, respectively.

Table 1.

Information on the able-bodied subjects.

Table 2.

Information on the amputee subjects.

Eight sEMG electrodes were placed equidistantly and in parallel around the upper arm of each subject (both groups) so that they covered the major muscles, namely the biceps, triceps and brachialis, while capturing as many valid sEMG signals as possible [23,24]. The skin of the upper arm was prepared by hair clipping, removal of dead skin and alcohol cleansing to ensure stable sEMG acquisition. The sEMG signals were acquired with a Noraxon sEMG acquisition device at a sampling frequency of 1500 Hz.

During signal acquisition, subjects were asked to sit in front of the table and place their upper arms at the most comfortable positions on the support platform by adjusting the seat height and the tilt angle of the support platform. To prevent signal recordings from being affected by tabletop compression, the sensors located in a slot in the center of the support plate do not touch the support plate and remain suspended. Changes in arm position can also lead to significant differences in sEMG signals, affecting recognition performance. Three arm elevation positions were selected to complete sEMG acquisition experiments, as shown in Figure 1. Within these three arm elevation positions, subjects are able to carry out most of the activities required for daily life, including the following:

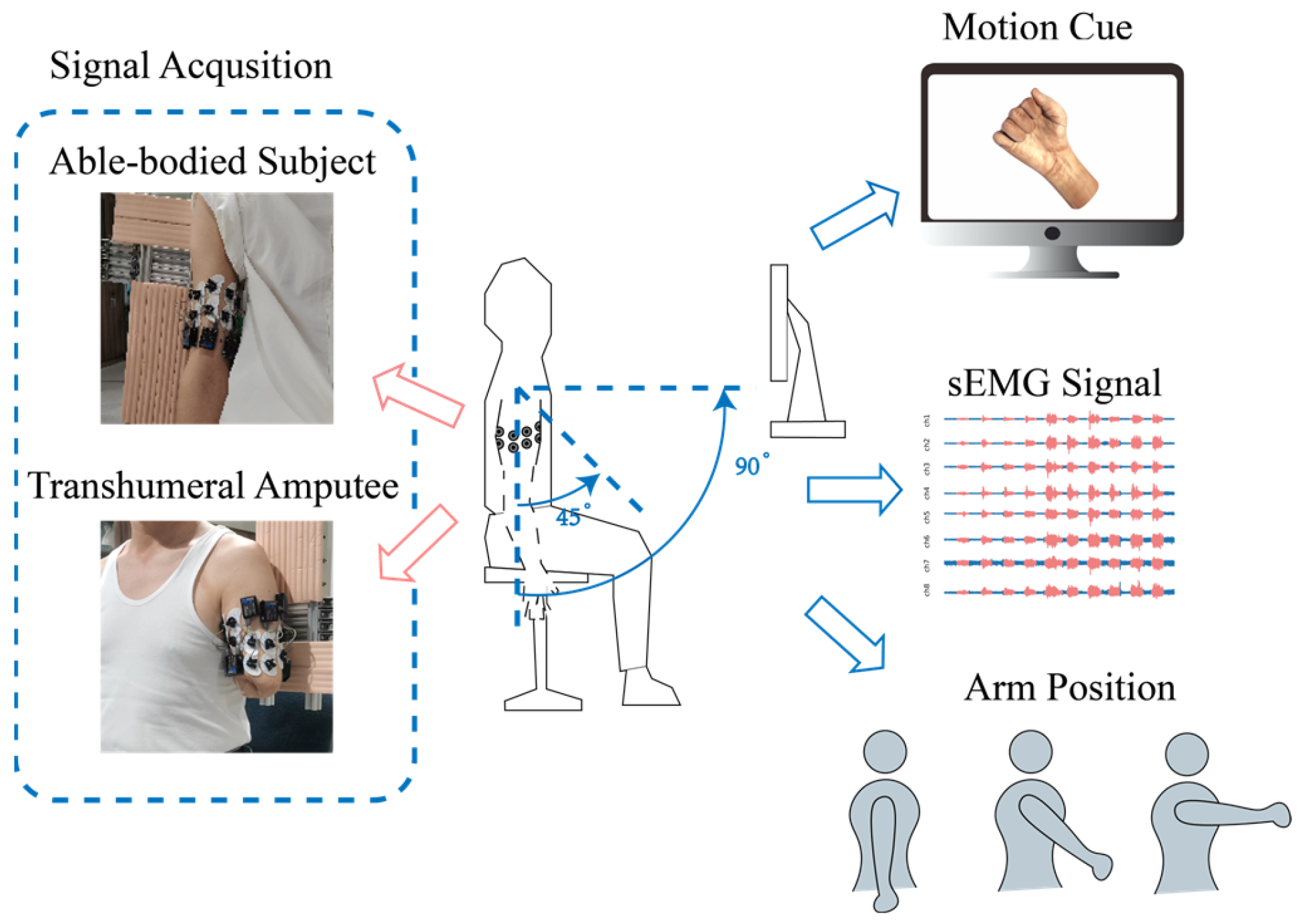

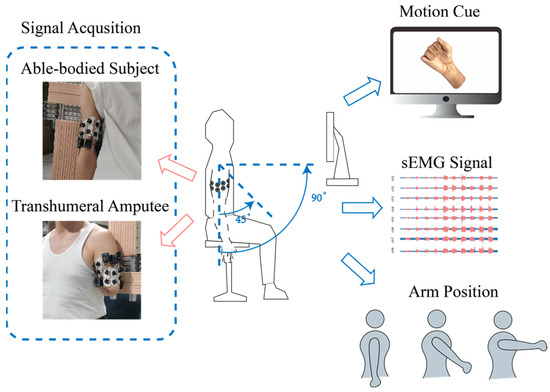

Figure 1.

Global view of sEMG acquisition experiment. On the left is the actual photo of the sensors’ installation; in the middle is the schematic diagram of the multi-arm-position sEMG signal acquisition experiment; and on the right are motion illustrations, the sEMG waveform graph and a schematic diagram of the three arm elevation positions.

- P1: upper arm parallel to the horizontal plane;

- P2: elevated upper arm to 45° from the horizontal plane;

- P3: elevated upper arm to 90° from the horizontal plane.

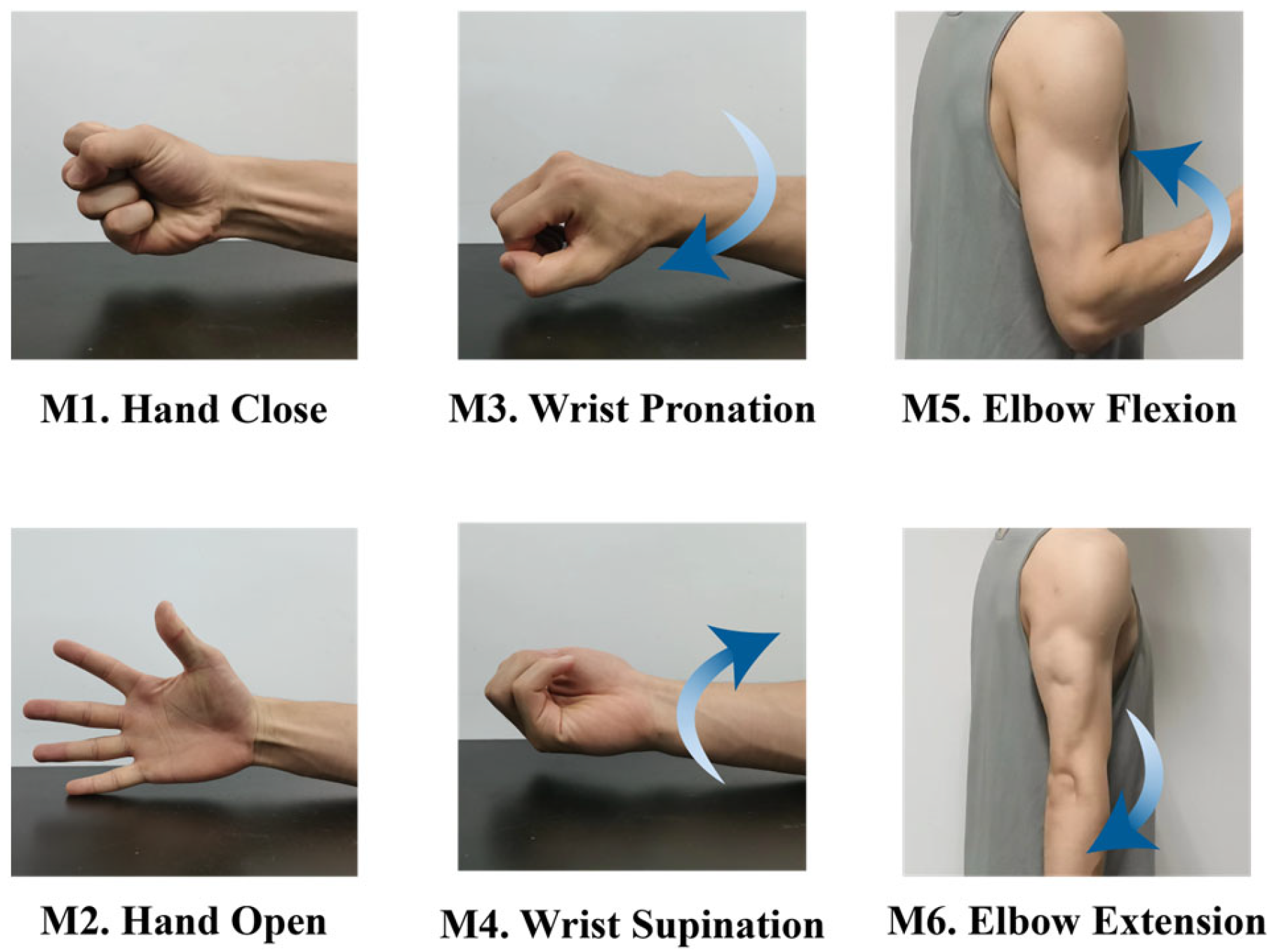

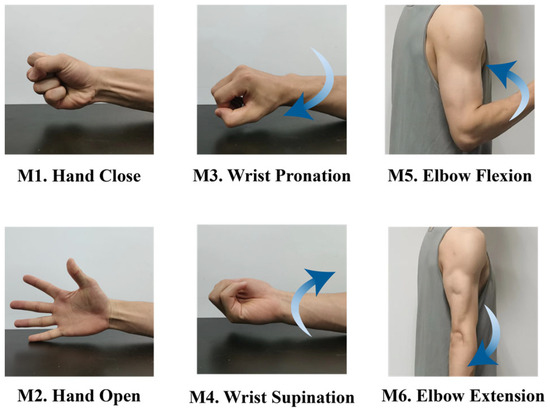

A video of the movements was displayed on a computer screen directly in front of the subject as a guide, and subjects were asked to imitate the movements in the video synchronously. The amputee subjects used their intact arm as a mirror image to help the residual limb replicate the phantom limb movements, as shown in Figure 1. The movement set consisted of six hand, wrist and elbow movements: Hand Close, Hand Open, Wrist Pronation, Wrist Supination, Elbow Flexion and Elbow Extension, as shown in Figure 2.

Figure 2.

Schematic of hand, wrist and elbow motions.

The sEMG data were obtained in repeated contraction–rest cycles. Each movement was performed ten times. In each repetition, the subject held the muscle contraction for 5 s and then rested for 2 s. Subjects were asked to keep the force and the posture with which they held each movement as consistent as possible. After ten repetitions of a gesture, a 1 min rest was taken to avoid muscle fatigue.

sEMG signals were acquired from subjects for each set of movements at each arm position, with a 2 min rest after the end of the signal acquisition at each arm position. The guiding principle behind the adoption of the above procedure was the need to limit the recording time and fatigue, while avoiding possible stress effects of fully continuous movements or prolonged acquisitions. A total of 180 sets (3 arm positions × 6 motions × 10 repetitions) of experimental data were ultimately collected for each subject.
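The count of 180 sets follows directly from the protocol grid. The short Python sketch below enumerates that grid; the position and motion labels come from the text, while the helper name `build_trial_grid` is hypothetical and the timing figures cover only the 5 s holds and the 5 s + 2 s exercise–rest cycles (the 1 min and 2 min rests are excluded).

```python
from itertools import product

# Arm positions and motions as described in the acquisition protocol.
POSITIONS = ["P1", "P2", "P3"]
MOTIONS = [
    "Hand Close", "Hand Open", "Wrist Pronation",
    "Wrist Supination", "Elbow Flexion", "Elbow Extension",
]
REPETITIONS = 10
HOLD_S = 5  # contraction held for 5 s per repetition
REST_S = 2  # rest between repetitions


def build_trial_grid():
    """Hypothetical helper: one (position, motion, repetition) tuple per trial.

    Order follows the protocol: all motions at one position, then the next position.
    """
    return list(product(POSITIONS, MOTIONS, range(1, REPETITIONS + 1)))


trials = build_trial_grid()
contraction_time_s = len(trials) * HOLD_S            # time spent contracting
cycle_time_s = len(trials) * (HOLD_S + REST_S)       # exercise-rest cycles only
print(len(trials), contraction_time_s, cycle_time_s)  # 180 900 1260
```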

2.2. DAIDA Framework

2.2.1. Preprocessing

sEMG signals are stochastic, non-stationary signals and must therefore be preprocessed before classification. In this study, preprocessing consisted of filtering and noise reduction, normalization and active segment extraction.

Based on the spectral energy distribution of the sEMG signal, motion artifacts were removed with a 4th-order 20–500 Hz Butterworth bandpass filter, and power-line interference was removed with a 50 Hz notch filter. Because differences in anatomy, physiological state and other factors cause multichannel sEMG signals to vary widely between individuals [25], the signals of each subject were standardized to reduce the impact of individual differences on pattern recognition. We used Z-score standardization, (x − μ)/σ [26], where μ is the mean and σ is the standard deviation; it converts data of different orders of magnitude into unitless values [27].
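The filtering and standardization steps above can be sketched with SciPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: zero-phase filtering, a notch quality factor of Q = 30 and per-channel Z-scoring are choices of this sketch, and `preprocess` is a hypothetical name. Note that SciPy's `butter(4, ..., btype="bandpass")` produces an overall 8th-order filter (4th order per band edge); the paper does not say which convention "4th-order" refers to.

```python
import numpy as np
from scipy import signal

FS = 1500  # Hz, sampling rate of the Noraxon device reported in the text


def preprocess(emg, fs=FS):
    """Band-pass, notch, then Z-score an (n_channels, n_samples) sEMG array.

    Assumptions: zero-phase filtering, notch Q = 30, Z-score per channel.
    """
    # Butterworth band-pass, 20-500 Hz (500 Hz is below the 750 Hz Nyquist limit).
    sos = signal.butter(4, [20, 500], btype="bandpass", fs=fs, output="sos")
    x = signal.sosfiltfilt(sos, emg, axis=-1)

    # 50 Hz notch filter for power-line interference.
    b, a = signal.iirnotch(50.0, 30.0, fs=fs)
    x = signal.filtfilt(b, a, x, axis=-1)

    # Z-score standardization, (x - mu) / sigma, computed per channel.
    mu = x.mean(axis=-1, keepdims=True)
    sigma = x.std(axis=-1, keepdims=True)
    return (x - mu) / sigma


# Synthetic stand-in for a recording: 8 channels, 3 s of noise.
rng = np.random.default_rng(0)
raw = rng.normal(size=(8, 3 * FS))
clean = preprocess(raw)
```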

To obtain effective motion information, the active segments must be determined [28,29]; in this study, an adaptive dual-threshold method was used to detect the active segments of the sEMG signals [27]. Studies indicate that the optimal window length for sEMG-based pattern recognition (PR) lies between 100 ms and 250 ms [30]. Given the 1500 Hz sampling rate of the Noraxon system, we used windows of 300 samples (200 ms) with a step of 150 samples (100 ms, i.e., 50% overlap).

Finally, the segmented sEMG signals were arranged into the input format of the network, i.e., batch size × 1 × number of channels × window length (here, 8 channels × 300 samples).
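The windowing and tensor layout described above can be illustrated with NumPy. This sketch assumes that an active segment has already been extracted (the dual-threshold detector itself is not reproduced) and that trailing samples which do not fill a full window are dropped; `segment` is a hypothetical helper name.

```python
import numpy as np

WINDOW = 300  # samples (200 ms at 1500 Hz)
STEP = 150    # samples (100 ms at 1500 Hz, i.e. 50% overlap)


def segment(emg, window=WINDOW, step=STEP):
    """Slice an (n_channels, n_samples) active segment into overlapping windows.

    Returns an array shaped (n_windows, 1, n_channels, window), matching the
    batch x 1 x channels x window-length layout. Trailing samples that do not
    fill a whole window are dropped (assumption).
    """
    n_channels, n_samples = emg.shape
    if n_samples < window:
        return np.empty((0, 1, n_channels, window), dtype=emg.dtype)
    starts = range(0, n_samples - window + 1, step)
    windows = np.stack([emg[:, s:s + window] for s in starts])
    return windows[:, np.newaxis, :, :]


# Example: one 5 s contraction on 8 channels at 1500 Hz, assumed fully active.
active = np.zeros((8, 5 * 1500))
batch = segment(active)
print(batch.shape)  # (49, 1, 8, 300)
```

With 7500 samples, window starts run from 0 to 7200 in steps of 150, giving 49 windows per 5 s contraction.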

2.2.2. The CNN Model

This section describes in detail the domain adaptation technique used to address the inconsistency of feature distributions at different arm positions, followed by the architecture and training process of the proposed method.

In practical use of myoelectric prosthetic hands, pattern recognition performance may be greatly reduced because changes in arm position alter the distribution of signal features. Different arm positions involve different muscle activations and thus lead to different data distributions [21], which is one reason why traditional models predict motions unreliably across arm positions. To address this problem, the DAIDA framework proposed in this paper recognizes upper arm sEMG signals at different arm positions, using domain adaptation to bring the distribution of features learned from the source domain close to that of the target domain.

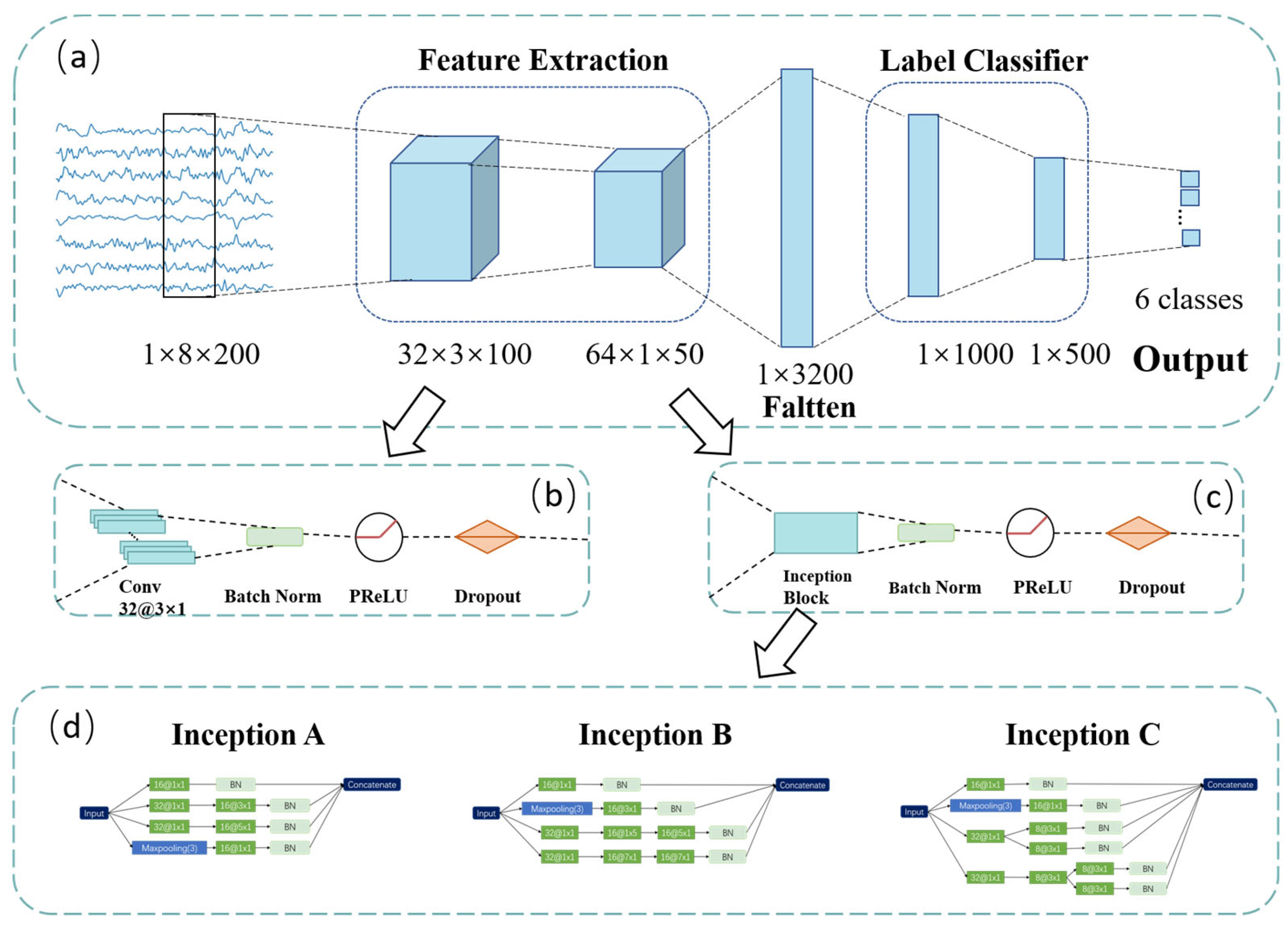

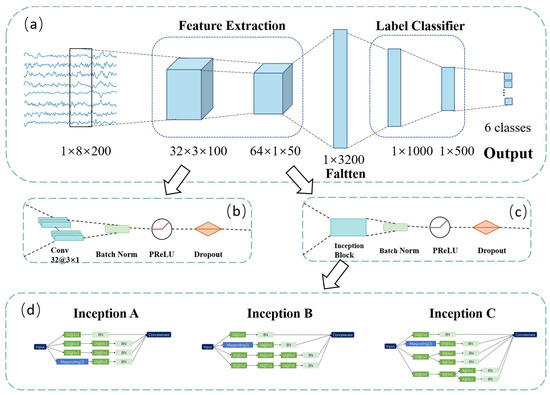

The DAIDA structure is built from two networks with nearly identical CNN structures. This CNN also serves as the baseline model in the study. It comprises two modules, a feature extraction module and a classification module, as shown in Figure 3. The feature extraction module consists of two blocks, each containing a convolutional layer followed by a batch normalization (BN) layer, a Parametric Rectified Linear Unit (PReLU) layer, a dropout layer (p = 0.5) and a max-pooling layer. Inspired by models such as Xception, which perform well in classification tasks and generalize well to new datasets through transfer learning approaches such as feature extraction and fine-tuning, we replace regular convolution with an Inception structure to efficiently capture features at multiple scales of the input data [27,29]. This structure uses multiple parallel branches with different convolution and pooling operations to extract multi-scale information from the input feature map: branch one applies a 1 × 1 convolution for channel transformation of the input; branch two performs spatial dimensionality reduction through 3 × 3 max-pooling followed by a 1 × 1 convolution; and branches three and four apply 5 × 1 and 7 × 1 convolutions, respectively, to extract features at different scales. The branch outputs are concatenated along the channel dimension to form the final feature map. The classification module also comprises two blocks, each consisting of a fully connected layer, a batch normalization layer, a PReLU layer and a dropout layer (p = 0.5); the two fully connected layers have 1000 and 500 neurons, respectively. The CNN is optimized with adaptive moment estimation (Adam) at an initial learning rate of 0.001.
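The four-branch structure can be made concrete with a NumPy sketch of the forward pass. It is illustrative only: the branch width of 8 channels and the random weights are hypothetical, BN, PReLU, dropout and pooling layers are omitted, and "5 × 1" and "7 × 1" are read as (height, width) kernels spanning the electrode axis; if the authors meant the time axis, the kernel shapes would be (1, 5) and (1, 7). Stride-1 "same" padding keeps all branch outputs the same size so they can be concatenated.

```python
import numpy as np


def conv2d_same(x, w):
    """Stride-1 'same' cross-correlation. x: (N, C_in, H, W); w: (C_out, C_in, kh, kw), odd kh, kw."""
    _, _, h, wd = x.shape
    c_out, _, kh, kw = w.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((0, 0), (0, 0), (ph, ph), (pw, pw)))
    out = np.zeros((x.shape[0], c_out, h, wd))
    for i in range(kh):
        for j in range(kw):
            patch = xp[:, :, i:i + h, j:j + wd]
            out += np.einsum("nchw,oc->nohw", patch, w[:, :, i, j])
    return out


def maxpool3x3_same(x):
    """3 x 3 max-pooling with stride 1 and padding 1, so H and W are preserved."""
    _, _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (0, 0), (1, 1), (1, 1)), constant_values=-np.inf)
    shifted = [xp[:, :, i:i + h, j:j + wd] for i in range(3) for j in range(3)]
    return np.max(np.stack(shifted), axis=0)


def inception_block(x, params):
    """Four parallel branches, concatenated along the channel axis."""
    b1 = conv2d_same(x, params["b1_1x1"])                    # 1x1 channel transform
    b2 = conv2d_same(maxpool3x3_same(x), params["b2_1x1"])   # 3x3 max-pool + 1x1
    b3 = conv2d_same(x, params["b3_5x1"])                    # 5x1 convolution
    b4 = conv2d_same(x, params["b4_7x1"])                    # 7x1 convolution
    return np.concatenate([b1, b2, b3, b4], axis=1)


rng = np.random.default_rng(0)
c_in, c_branch = 1, 8  # hypothetical branch width
params = {
    "b1_1x1": rng.normal(size=(c_branch, c_in, 1, 1)),
    "b2_1x1": rng.normal(size=(c_branch, c_in, 1, 1)),
    "b3_5x1": rng.normal(size=(c_branch, c_in, 5, 1)),
    "b4_7x1": rng.normal(size=(c_branch, c_in, 7, 1)),
}
x = rng.normal(size=(2, c_in, 8, 300))  # batch x 1 x channels x window length
y = inception_block(x, params)
print(y.shape)  # (2, 32, 8, 300)
```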

Figure 3.

Overview of the baseline model. (a) Macroscopic diagram of the model. (b) Architecture of the Stem Block. (c) Architecture of the Inception Block. (d) Architectures of the Inception A, Inception B and Inception C modules, each of which is used in turn to replace the Inception Block in (c).

To compare the feature extraction effectiveness of multi-scale parallel convolution, we evaluated the structural variants shown in Figure 3.

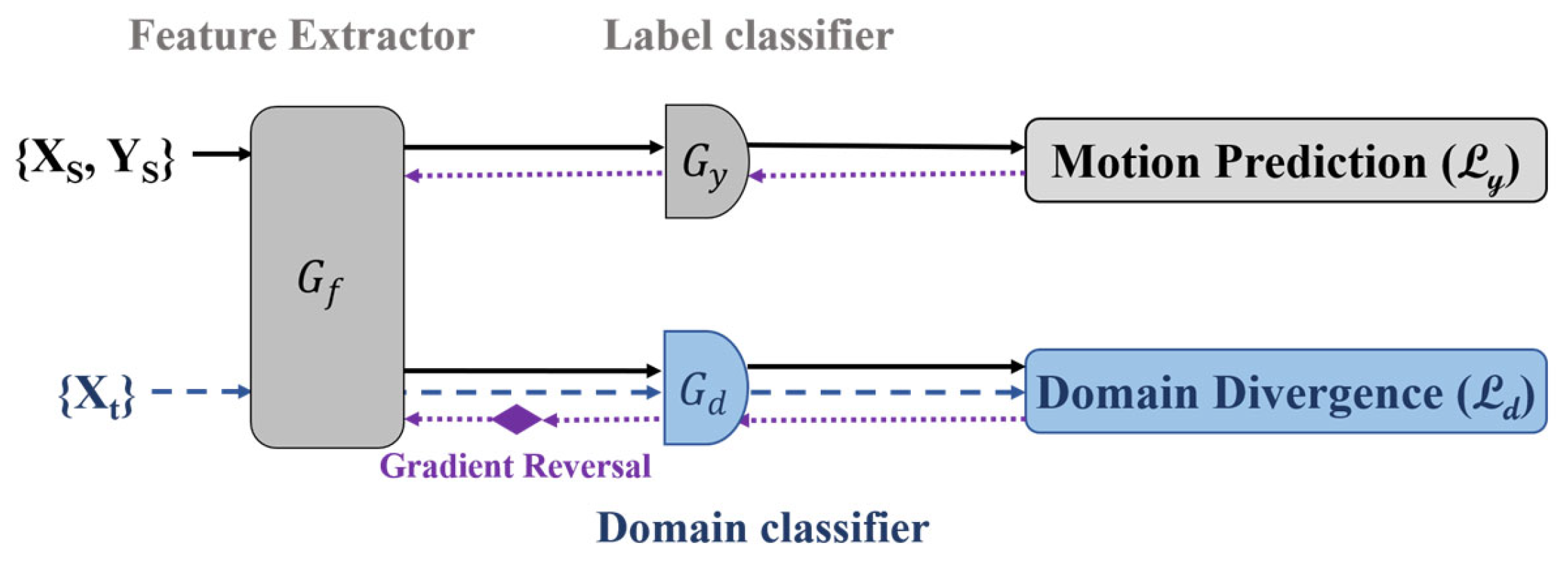

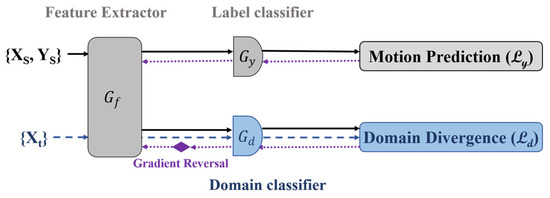

2.2.3. DAIDA Framework

The DAIDA framework, shown in Figure 4, has a structure similar to that of the baseline model and shares the same convolutional module for feature extraction. The model then splits into two branches. The first is a label classifier for motion classification, trained on the source and target domains. The second is a domain classifier that predicts whether the extracted features come from the source or the target domain; a Gradient Reversal Layer (GRL) is placed between the feature extractor and the domain classifier. By reversing the gradient, the GRL makes the feature extractor maximize the domain classifier's loss, which forces it to learn features unrelated to the domain, i.e., domain-invariant features. Ultimately, the model learns features that generalize better. The GRL acts as an identity transform during forward propagation; during backpropagation, it multiplies the gradient by a negative value (−λ). It can easily be inserted into any feed-forward network and introduces no additional parameters.
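The effect of the GRL on the feature extractor can be shown with a toy model whose gradients are written out by hand. In this NumPy sketch (all names hypothetical), the "feature extractor" is a single weight, f = w·x, and the "domain classifier" is a logistic unit trained with summed binary cross-entropy on domain labels. Inserting the GRL leaves the forward pass unchanged and scales the gradient reaching w by exactly −λ. In deep-learning frameworks the same behavior is usually implemented as a custom autograd function; the value of λ and any schedule for it are not specified in the text.

```python
import numpy as np


class GradientReversal:
    """Identity in the forward pass; multiplies incoming gradients by -lam."""

    def __init__(self, lam):
        self.lam = lam

    def forward(self, f):
        return f

    def backward(self, grad_out):
        return -self.lam * grad_out


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def grad_wrt_extractor(x, d, w, v, grl=None):
    """dL_d/dw for f = w * x, p = sigmoid(v * f) and summed BCE against domain labels d."""
    f = w * x                                   # toy feature extractor
    f_in = grl.forward(f) if grl is not None else f
    p = sigmoid(v * f_in)                       # toy domain classifier
    dL_dlogit = p - d                           # gradient of BCE w.r.t. the logit
    dL_df = dL_dlogit * v                       # gradient arriving at the feature
    if grl is not None:
        dL_df = grl.backward(dL_df)             # sign flip and scaling by lambda
    return np.sum(dL_df * x)                    # chain rule: df/dw = x


x = np.array([0.5, -1.0, 2.0])
d = np.array([0.0, 1.0, 1.0])                   # 0 = source, 1 = target
plain = grad_wrt_extractor(x, d, w=0.3, v=1.2)
reversed_ = grad_wrt_extractor(x, d, w=0.3, v=1.2, grl=GradientReversal(lam=0.5))
```

Gradient descent with `reversed_` therefore moves w in the direction that increases the domain loss, which is what pushes the features toward domain invariance.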

Figure 4.

The process of domain adversarial training.

Consider a classification problem with input feature space X and label set Y = {1, 2, 3, 4, 5, 6} in the output space. Let {Xs, Ys} and {Xt, Yt} be unknown joint distributions defined over X × Y, referred to as the source and target distributions, respectively. The unsupervised domain adaptation algorithm takes as input labeled source-domain data sampled from {Xs, Ys} and unlabeled target-domain data sampled from the marginal distribution {Xt}. Data from both domains are fed into the feature extractor, and the resulting features are classified by the domain classifier.

The architecture has two networks, each consisting of a feature extractor and a classifier. The feature extractor $G_f$ with parameters $\theta_f$, shown in Figure 4, is a parallel convolution network; it takes the preprocessed sEMG input vector $x$ and generates a feature vector $f$, i.e.,

$$f = G_f(x;\theta_f)$$

The feature vector $f$ extracted by $G_f$ is mapped to a class label $\hat{y}$ by the label classifier $G_y$ and to a domain label $\hat{d}$ by the domain classifier $G_d$, i.e.,

$$\hat{y} = G_y(f;\theta_y), \qquad \hat{d} = G_d(f;\theta_d)$$

Both the label classifier and the domain classifier are multilayer feed-forward neural networks whose parameters are referred to as $\theta_y$ and $\theta_d$, respectively. During training, the feature extractor aims to minimize the classification error while preventing the domain classifier from accurately distinguishing between the source and target feature representations. In this way, it learns feature representations that generalize well to both the source and target domains.

In the proposed DAIDA scheme, we use parallel convolution to extract effective motion features and employ adversarial training to minimize the motion classification loss while maximizing the domain classification loss, thereby reducing the discrepancy between the source and target feature distributions. This yields a position-invariant model. The complete DAIDA algorithm is described in Algorithm 1.
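For reference, the standard domain-adversarial (DANN) objective of Ganin and Lempitsky, which the GRL-based training described here follows, can be written as below. This is a sketch, not the paper's exact loss. The following are assumptions: the per-sample losses $\mathcal{L}_y$ (e.g., cross-entropy on labeled source samples $(x_i^s, y_i^s)$) and $\mathcal{L}_d$ (e.g., binary cross-entropy on domain labels $d_j \in \{0, 1\}$ for all source and target samples $x_j$), the sample counts $n_s$ and $n_t$, and a fixed weight $\lambda$. $G_f$, $G_y$ and $G_d$ denote the feature extractor, label classifier and domain classifier, with parameters $\theta_f$, $\theta_y$ and $\theta_d$.

$$
E(\theta_f,\theta_y,\theta_d)=\frac{1}{n_s}\sum_{i=1}^{n_s}\mathcal{L}_y\!\left(G_y\big(G_f(x_i^s;\theta_f);\theta_y\big),\,y_i^s\right)-\lambda\,\frac{1}{n_s+n_t}\sum_{j=1}^{n_s+n_t}\mathcal{L}_d\!\left(G_d\big(G_f(x_j;\theta_f);\theta_d\big),\,d_j\right)
$$

$$
(\hat{\theta}_f,\hat{\theta}_y)=\arg\min_{\theta_f,\theta_y}E(\theta_f,\theta_y,\hat{\theta}_d),\qquad
\hat{\theta}_d=\arg\max_{\theta_d}E(\hat{\theta}_f,\hat{\theta}_y,\theta_d)
$$

Maximizing $E$ over $\theta_d$ is equivalent to minimizing the domain loss, so the domain classifier learns to tell arm positions apart. The feature extractor, which receives the reversed gradient $-\lambda\,\partial\mathcal{L}_d/\partial\theta_f$ through the GRL, learns features that make the positions indistinguishable.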

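| Algorithm 1 Proposed DAIDA Algorithm |
|---|
| **Input:** (1) training data: labeled source data $\mathcal{D}_s=\{(x_i^s, y_i^s)\}$ and unlabeled target data $\mathcal{D}_t=\{x_j^t\}$; (2) learning rate $\eta$ and number of iterations; (3) initialized parameters: $\theta_f$ of the feature extractor, $\theta_y$ of the label classifier, $\theta_d$ of the domain classifier, and weighting hyperparameter $\lambda$. |
| **Output:** optimized parameters $\theta_f$, $\theta_y$ and $\theta_d$. |
| **for** $T = 1, 2, \dots$ **do** |
| **for** $N = 1, 2, \dots$ **do** |
| Select mini-batches from $\mathcal{D}_s$ and $\mathcal{D}_t$ |
| Calculate the weights |
| Calculate the losses $\mathcal{L}_y$ (label classification) and $\mathcal{L}_d$ (domain classification) |
| Calculate the final loss $\mathcal{L} = \mathcal{L}_y - \lambda\,\mathcal{L}_d$ |
| Update the parameters: $\theta_f \leftarrow \theta_f - \eta\left(\frac{\partial \mathcal{L}_y}{\partial \theta_f} - \lambda\frac{\partial \mathcal{L}_d}{\partial \theta_f}\right)$, $\theta_y \leftarrow \theta_y - \eta\frac{\partial \mathcal{L}_y}{\partial \theta_y}$, $\theta_d \leftarrow \theta_d - \eta\lambda\frac{\partial \mathcal{L}_d}{\partial \theta_d}$ |
| **end for** |
| **end for** |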

2.2.4. Training Scheme

This section validates DAIDA's adaptation to arm positions from multiple perspectives. Data from different arm positions were used as the source domain, target domain and test datasets to evaluate the generalization performance of DAIDA. For each subject, the data from one arm position (6 motions × 10 repetitions) constituted one acquisition. These experiments test the arm position stability of DAIDA, with a CNN without domain adaptation as the baseline. For the CNN, odd-numbered repetitions were used for training and even-numbered repetitions for testing. For DAIDA, the 1st and 2nd repetitions were used for retraining as the target domain, and the remaining eight repetitions were used for testing. Under each strategy, the DAIDA pretraining (source-domain) data were the same as the CNN training data, whereas the test sets differed.
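The repetition-level splits described above can be written out explicitly. The following Python sketch uses hypothetical helper names and simply encodes the two rules stated in the text: odd/even repetitions for the CNN, and the first two repetitions as the DAIDA target-domain retraining data.

```python
REPS = list(range(1, 11))  # repetitions 1..10 of each motion at one arm position


def cnn_split(reps=REPS):
    """Baseline CNN: odd-numbered repetitions train, even-numbered repetitions test."""
    train = [r for r in reps if r % 2 == 1]
    test = [r for r in reps if r % 2 == 0]
    return train, test


def daida_target_split(reps=REPS, n_calib=2):
    """DAIDA target domain: first n_calib repetitions retrain, the rest test."""
    return reps[:n_calib], reps[n_calib:]


cnn_train, cnn_test = cnn_split()
target_retrain, target_test = daida_target_split()
print(cnn_train, cnn_test)          # [1, 3, 5, 7, 9] [2, 4, 6, 8, 10]
print(target_retrain, target_test)  # [1, 2] [3, 4, 5, 6, 7, 8, 9, 10]
```

Keeping `n_calib` as a parameter makes it easy to check how many calibration repetitions the target domain actually needs.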

The baseline model is used to verify the feasibility of the CNN for upper arm motion recognition and to assess the separability of arm positions. For motion recognition, each set consisted of the sEMG signals recorded from one subject performing one movement at one arm position. There were 10 healthy subjects and 2 transhumeral amputees, each completing six motions at 3 arm positions, so each subject contributed 18 sets of data. For arm position separability, the data for each motion from all subjects were used, giving six sets of data (one per motion) to assess how the same motion varies across arm positions.

The datasets used for training and testing for both types of experiments are from the same set of experimental data, and both types of experiments use only the source network for recognition.

The DAIDA model is proposed to address the performance degradation of models at different arm elevation positions. In this study, this problem is regarded as a transfer learning problem. To achieve the desired transfer learning effect, the model needs to extract similar deep features from sEMG signals belonging to both the source domain and the target domain. The distributions of the source domain and the target domain can be used to assess the similarity of the extracted deep features.

To validate the recognition performance of the model at different arm elevation positions, six test scenarios were designed, as summarized in Table 3 and described below:

Table 3.

Arm position domain adaptation training scheme.

- No. 1, without domain adaptation: training uses only labeled source-domain data, i.e., labeled motion data from all positions, and the test data are labeled data from one single position.

- No. 2, domain adaptation evaluated on the source domain: the test data come from the source domain and do not overlap with the retraining data.

- No. 3, domain adaptation evaluated on the target domain: the test data come from the target domain, the same domain as the retraining data.

- No. 4, generalization after domain adaptation: the test data come from neither the source nor the target domain.

- No. 5 and No. 6, domain adaptation on the total dataset: the source domain comprises data from all arm positions, the target domain comprises data from one single arm position, and the test data come from a single arm position that is the same as (No. 5) or different from (No. 6) the target position.
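One way to make Table 3 unambiguous is to enumerate the (source, target, test) position assignments over every ordering (x, y, z) of the three positions. The Python sketch below is a hypothetical encoding of the scenario descriptions above, not the authors' code. Positions are numbered 1 to 3 for P1 to P3, and scenario 1 has no target domain, so its target is `None`.

```python
from itertools import permutations

POSITIONS = (1, 2, 3)  # P1, P2, P3


def scenario_splits():
    """Map each Table 3 scenario to its set of (source, target, test) assignments.

    Hypothetical encoding: for each ordering (x, y, z), x is the single-position
    source, y the target and z the position seen in neither domain.
    """
    splits = {k: set() for k in range(1, 7)}
    for x, y, z in permutations(POSITIONS):
        splits[1].add((POSITIONS, None, x))  # all positions, no adaptation
        splits[2].add(((x,), y, x))          # test on the source position
        splits[3].add(((x,), y, y))          # test on the target position
        splits[4].add(((x,), y, z))          # test on an unseen position
        splits[5].add((POSITIONS, y, y))     # all-position source, test = target
        splits[6].add((POSITIONS, y, x))     # all-position source, test != target
    return splits


s = scenario_splits()
print({k: len(v) for k, v in s.items()})  # {1: 3, 2: 6, 3: 6, 4: 6, 5: 3, 6: 6}
```

In every scenario the test repetitions remain disjoint from the retraining repetitions (repetitions 3 to 10 versus 1 and 2), even when the test position equals the target position.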

2.3. Statistical Analysis

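Model performance was evaluated with the most common measures: accuracy, precision, recall, specificity and F1 score, defined as follows:

$$\text{Accuracy}=\frac{TP+TN}{TP+TN+FP+FN}$$

$$\text{Precision}=\frac{TP}{TP+FP}$$

$$\text{Recall}=\frac{TP}{TP+FN}$$

$$\text{Specificity}=\frac{TN}{TN+FP}$$

$$F_1=\frac{2\times\text{Precision}\times\text{Recall}}{\text{Precision}+\text{Recall}}$$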

where TP, TN, FP and FN are the numbers of true positives, true negatives, false positives and false negatives, respectively. Accuracy is the ratio of correct predictions to total predictions. Recall is the proportion of actual positive instances that are predicted as positive. Specificity is the proportion of actual negative instances that are predicted as negative. Precision is the proportion of positive predictions that are correct. The F1 score is the harmonic mean of precision and recall. The reported error is the standard deviation of the accuracy across gesture classes. All metrics and errors are expressed as percentages.
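For a multi-class problem such as the six motions, TP, TN, FP and FN are typically counted per class in a one-vs-rest fashion from the confusion matrix. The NumPy sketch below shows that computation. Macro-averaging over classes is an assumption, since the text does not state how class-level values were averaged, and `metrics_from_confusion` is a hypothetical name. A class that is never predicted would make its precision undefined; the sketch does not guard against that.

```python
import numpy as np


def metrics_from_confusion(cm):
    """Per-class one-vs-rest metrics from a confusion matrix (rows = true, cols = predicted)."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as the class but belonging to another
    fn = cm.sum(axis=1) - tp   # belonging to the class but predicted as another
    tn = total - tp - fp - fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,           # per-class, one-vs-rest
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
        "overall_accuracy": tp.sum() / total,    # trace / total
    }


cm = [[8, 1, 1],
      [2, 6, 2],
      [0, 1, 9]]
m = metrics_from_confusion(cm)
macro = {k: float(np.mean(v)) for k, v in m.items() if k != "overall_accuracy"}
```

In this three-class example, class 2 has TP = 6, FP = 2, FN = 4 and TN = 18, so precision = 0.75, recall = 0.6 and specificity = 0.9.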

To quantify the effectiveness of the proposed approach, we performed statistical analysis using the t-test and the Wilcoxon rank-sum test, with the significance level set at p < 0.05. In Section 3.1, we compare the metrics of the different feature extraction modules. In Section 3.2, we test the performance differences among the training schemes. In Section 3.3, owing to the small number of amputees, we compare accuracy only numerically.
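Both tests are available in SciPy. The sketch below runs them on synthetic per-subject accuracies that are placeholders only, not results from this study. Using the paired `ttest_rel` is an assumption, made because the same subjects are evaluated under both conditions. `ranksums` treats the two samples as independent; `scipy.stats.wilcoxon` is the paired signed-rank alternative.

```python
import numpy as np
from scipy import stats

ALPHA = 0.05

rng = np.random.default_rng(42)
# Synthetic per-subject accuracies for two models (placeholder values only).
acc_model_a = rng.normal(0.90, 0.05, size=10)
acc_model_b = rng.normal(0.85, 0.05, size=10)

t_res = stats.ttest_rel(acc_model_a, acc_model_b)  # paired t-test (assumed pairing)
w_res = stats.ranksums(acc_model_a, acc_model_b)   # Wilcoxon rank-sum test

for name, res in [("paired t-test", t_res), ("rank-sum", w_res)]:
    verdict = "significant" if res.pvalue < ALPHA else "not significant"
    print(f"{name}: statistic={res.statistic:.3f}, p={res.pvalue:.4f} ({verdict})")
```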

3. Results

3.1. CNN Model Recognition Performance

Because DAIDA builds on an Inception-based feature extractor, we justified the choice of block by comparing three Inception blocks, namely Inception A, Inception B and Inception C. The block architectures are presented in Figure 3d. The average recognition accuracy, precision, recall, specificity and F1 score of the different Inception blocks at a single arm position are shown in Table 4. As Table 4 shows, the Inception C block outperforms the other blocks on all metrics (p < 0.05). In addition, the Inception structures outperform conventional convolutional layers.

Table 4.

Recognition performance of different feature extraction modules.

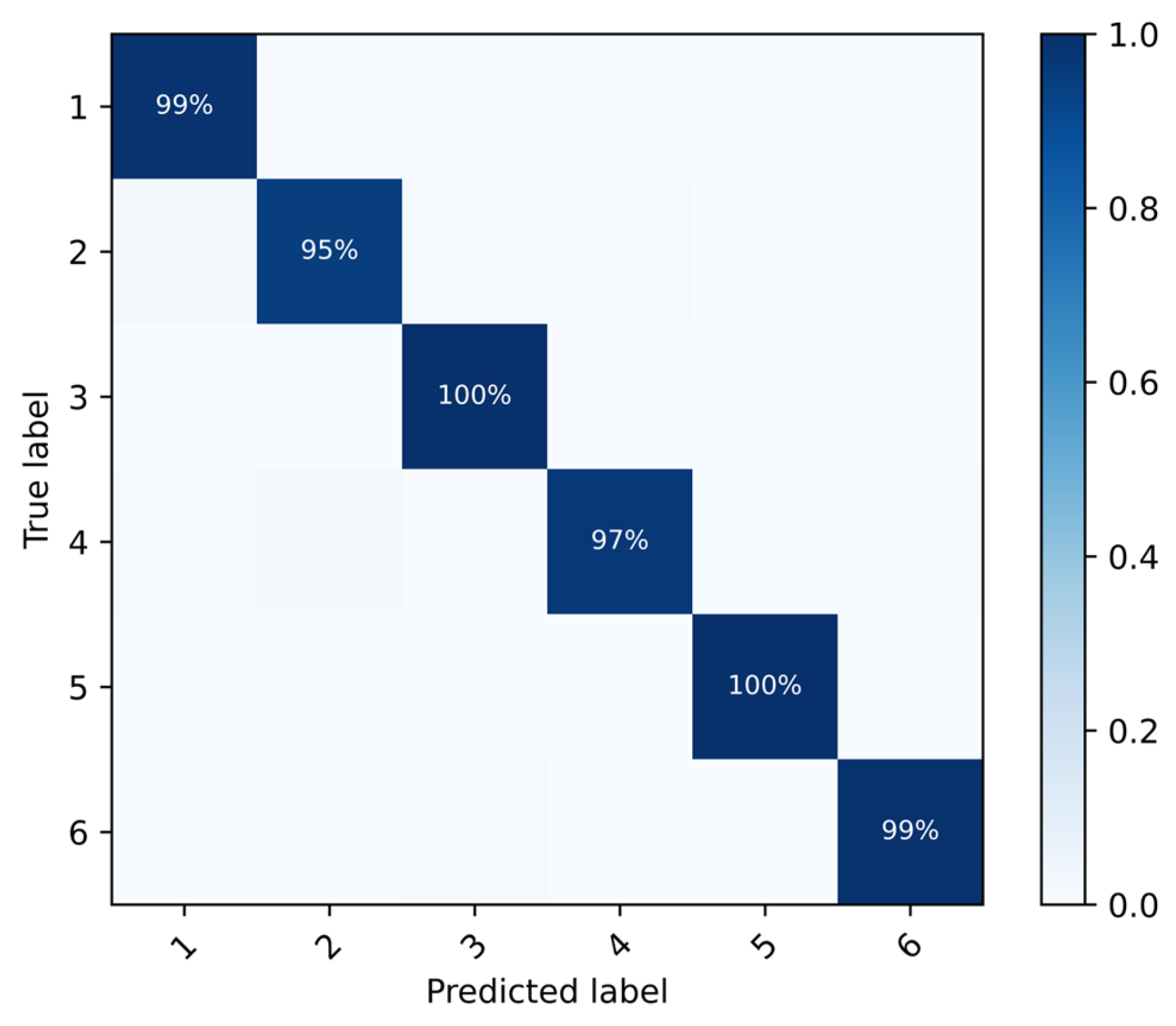

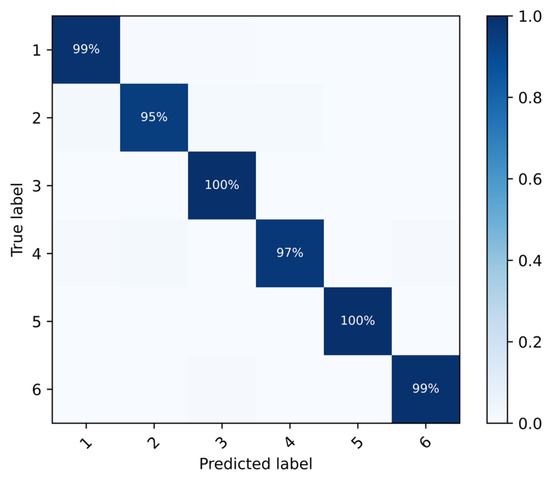

Figure 5 presents the confusion matrix of the CNN model at a single arm position (P1), with an average accuracy of 95.70% ± 1.27%.

Figure 5.

Confusion matrix for a single upper arm position at P1.

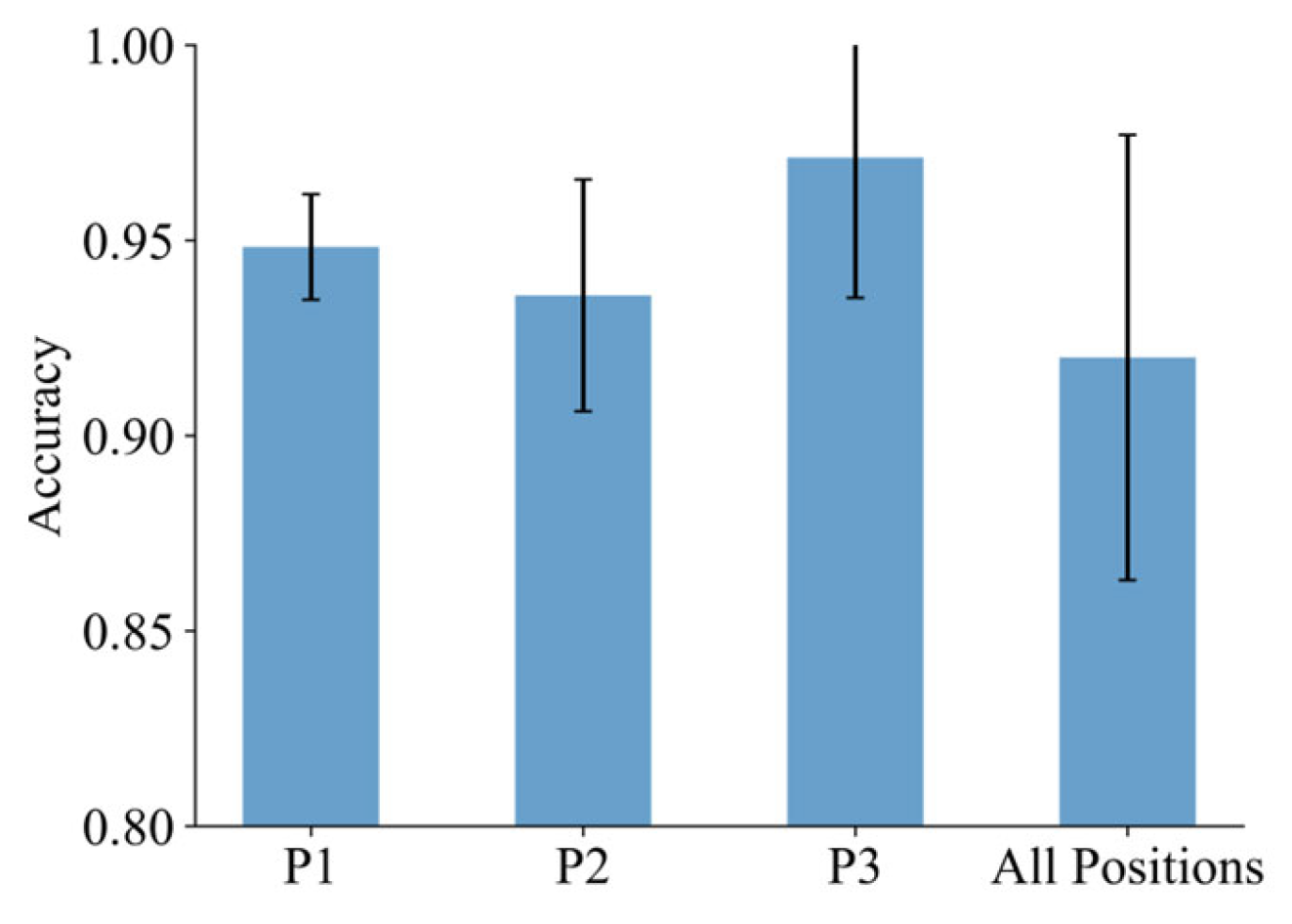

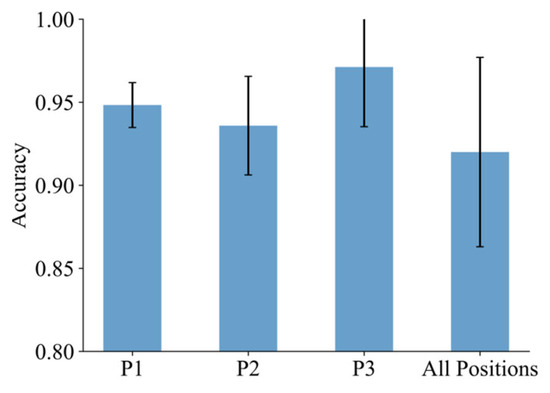

The recognition results of the baseline model at the three arm positions are shown in Figure 6. The average recognition accuracy is 94.83% ± 1.35% at position P1, 93.59% ± 2.97% at position P2 and 97.12% ± 3.59% at position P3; accuracy was highest at P3 and lowest at P2. The recognition accuracy across all positions was 92.00% ± 5.70%.

Figure 6.

Arm position recognition performance.

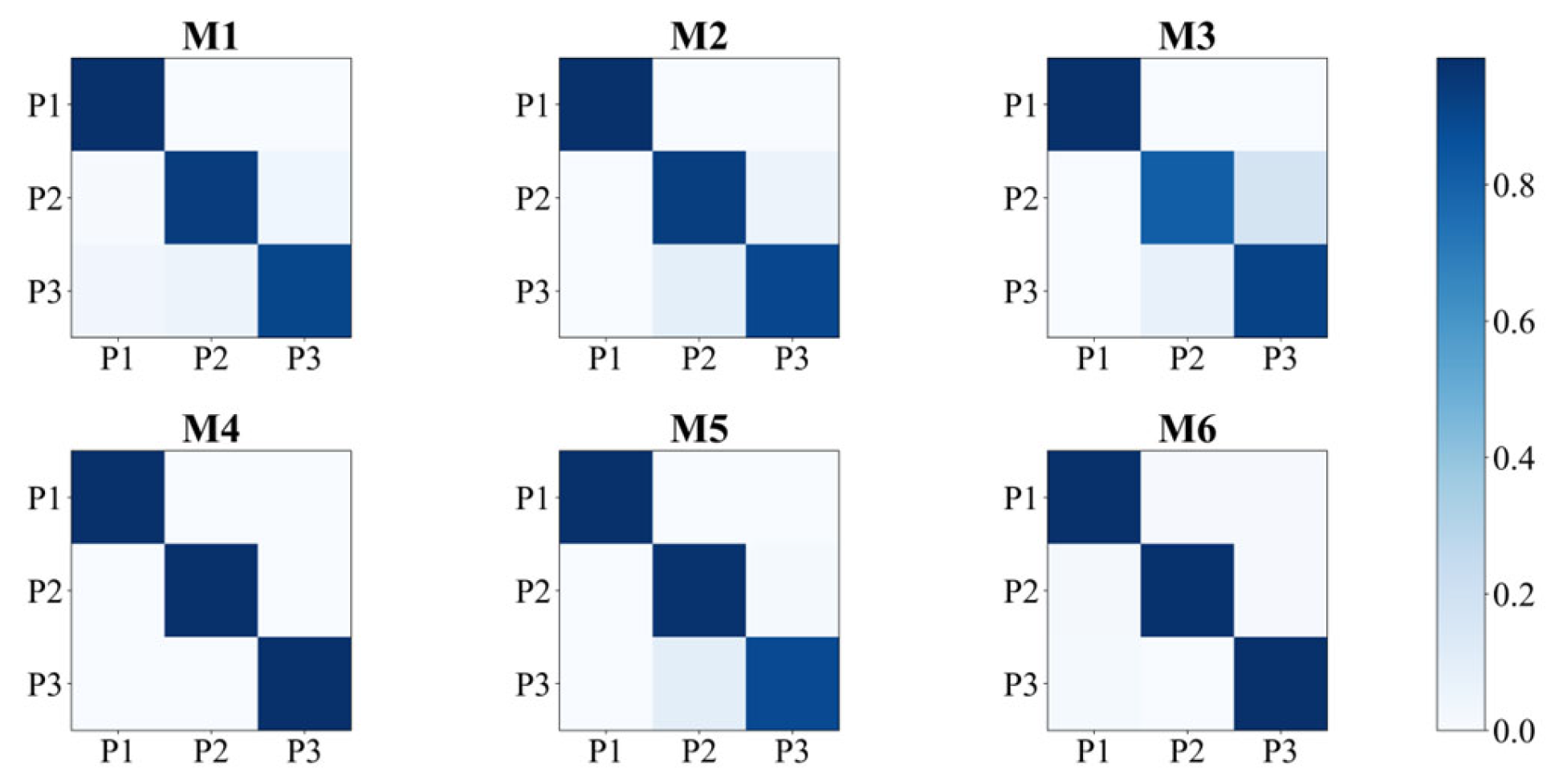

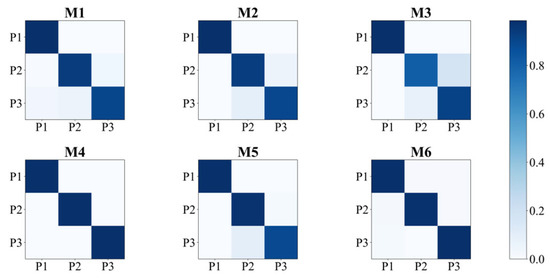

Similarly, the baseline model was used to classify the three arm positions separately for each of the six motions, to quantify how much the data for each motion vary with arm position. The average classification accuracy was 96.32% ± 2.77%, and the confusion matrices are shown in Figure 7.

Figure 7.

Performance of position separability. Each confusion matrix represents the classification performance of three arm positions for one motion.

3.2. Position Recalibration

We trained the source network using labeled data from three different arm positions as inputs and achieved an average accuracy of 95.70% ± 1.27%. Additionally, the average recognition accuracy at different arm elevation positions was 92.00% ± 5.70%, as shown in Figure 6.

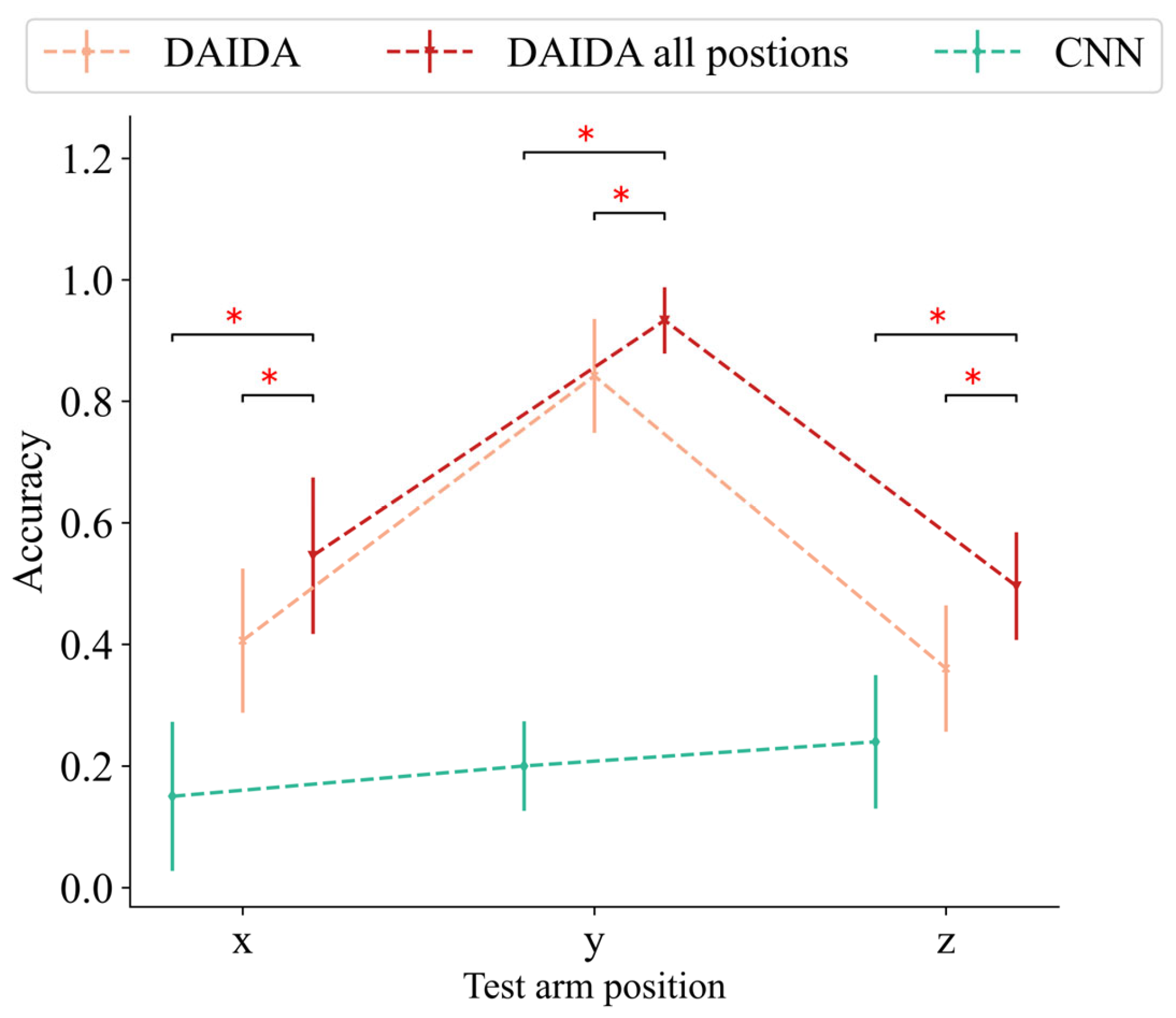

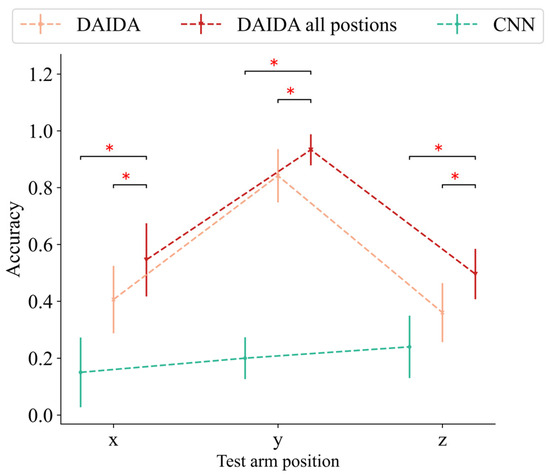

As shown in Table 3, the sEMG signals at position x and position y are used as the pretraining inputs (source domain) and the retraining inputs (target domain), respectively. The model is then tested in three scenarios, with test data from position x, position y and position z, respectively, where x, y, z ∈ {1, 2, 3} are pairwise distinct.

Using the CNN directly, i.e., only the pretrained source network without retraining, yielded recognition accuracies of 15.01% ± 3.08%, 15.54% ± 3.29% and 15.91% ± 2.42% at positions x, y and z, respectively (No. 1).

However, significant improvements were observed with DAIDA. Testing at positions x, y and z yielded accuracies of 44.03% ± 12.26% (No. 2), 92.01% ± 7.37% (No. 3) and 41.99% ± 10.98% (No. 4), respectively. Moreover, when data from all arm positions were used as the source domain for pretraining, the accuracies at positions x, y and z were 54.60% ± 12.86% (No. 6), 92.15% ± 8.47% (No. 5) and 49.60% ± 8.86% (No. 6), respectively.

3.3. The Recognition Performance of Transhumeral Subjects

Table 5 shows the recognition performance of DAIDA for transhumeral amputees under different training schemes. When the model was pretrained using only sEMG signals from all arm positions and tested directly, the recognition accuracy was 42.08% ± 8.21%. When a single arm position was used for pretraining, the recognition accuracies were 43.61% ± 8.77%, 94.93% ± 3.21% and 40.60% ± 9.68% for scenarios 2 to 4, which test domain adaptation on the source domain and on the target domain and the generalization of the model to an unseen position, respectively. When data from all arm positions were used for pretraining to assess domain adaptation on the total dataset, the recognition accuracies were 94.06% ± 4.18% and 58.43% ± 8.38% for schemes 5 and 6, respectively.

Table 5.

Recognition results of different training programs for the transhumeral amputees.

4. Discussion

To achieve accurate recognition of upper arm motions and mitigate the effect of arm position on myoelectric interface performance in real-world use, we propose a network framework that combines an Inception feature extraction structure with adversarial domain adaptation. We designed DAIDA to extract and classify features that are invariant across domains. Specifically, by optimizing the three components of the adversarial network, namely the feature extractor, the label predictor and the domain discriminator, the network learns arm-position-invariant features by aligning the feature distributions of different arm positions, which reduces the impact of the domain shift caused by changes in arm position. The feature extractor learns discriminative features of sEMG signals through the deep network; the label predictor distinguishes between the different motions, while the domain discriminator is used to reduce inter-domain variation in the features. As a result, the framework achieves better accuracy and adaptability in upper arm motion recognition.

This study first demonstrates that a network with a parallel structure outperforms a network with ordinary convolutional layers in upper arm motion recognition (Table 4). The proposed network combines the feature maps output by parallel convolutional kernels of different sizes into feature maps with more comprehensive semantic information, improving the model's ability to recognize upper arm movements. More complex parallel structures appeared to yield better performance while keeping the network size appropriate. Therefore, the Inception C structure was chosen as the feature extraction module of the baseline model.

As can be seen in Figure 6, the model performs well in recognizing upper arm motions at the three commonly used arm positions, with recognition accuracies greater than 88% at each of them. The average recognition accuracy also exceeded 90% when data from all arm positions were used as inputs. The recognition results differ among the three arm positions, which may be due to differences in the characteristics of the corresponding EMG data.

In comparison with previous studies, Gaudet et al. classified eight upper-limb phantom limb movements and one no-movement class in transhumeral amputees with an average accuracy of 81.1% [31]; the inclusion of kinematic features increased the accuracy by an average of 4.8%. Jarrassé et al. classified up to 14 phantom arm movements and achieved an average recognition accuracy of more than 80% when only six hand, wrist and elbow movements were considered [3]. Huang et al. improved the recognition accuracy of 15 motions in transhumeral amputees to more than 85% by using a higher-order spatial filter to obtain more informative high-density sEMG signals [32]. Li et al. found that the classification performance of fused sEMG and electroencephalogram (EEG) signals was significantly better than that of either signal source alone [33], with an accuracy as high as 87%. Nsugbe et al. found that eight discrete upper arm gestures could be recognized with a best classification accuracy of 81% [34]. Although the number of movements recognized in these studies differs from ours, it is worth noting that they required either high-density electrodes or information fusion as classifier inputs, which introduces more complexity in practical use. Our study achieves effective recognition of commonly used motions using routine EMG electrodes as input.

Previous studies show that arm position has a significant impact on gesture recognition [35]. As shown in Figure 7, the average accuracy of classifying arm positions with the baseline model exceeds 95%. This indicates that the motion signals vary greatly across arm positions, which is consistent with existing research [21,36,37,38], and that the EMG signals at each arm position are dissimilar enough for the arm elevation itself to be recognized. We therefore adopt DAIDA to alleviate this effect: after pre-training with data from a single arm position, the model can be transferred to another arm position after a simple calibration, making the EMG interface applicable there.

Figure 8 shows that when a recognition model pretrained on the source arm position is retrained with target domain data (from a different arm position) and then tested on the source arm position, the variation caused by the change in arm position limits the recognition accuracy to at most 44.03% ± 12.26%. Even so, as the first column of Figure 8 shows, this is an improvement of 29.02% ± 9.18% over the direct use of a CNN when tested on the source domain data (p < 0.05).
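The improvements quoted here are reported as mean ± standard deviation with a significance marker. Assuming they are computed from per-subject paired differences and tested with a paired t-test (the specific test is an assumption; this section does not state it), the calculation can be sketched as follows. The accuracies in the example are hypothetical and are not data from this study.

```python
import math
import statistics


def paired_improvement(method_acc, baseline_acc):
    """Mean and SD of per-subject improvements, plus the paired t statistic (df = n - 1)."""
    if len(method_acc) != len(baseline_acc):
        raise ValueError("accuracies must be paired per subject")
    diffs = [m - b for m, b in zip(method_acc, baseline_acc)]
    mean = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    t = mean / (sd / math.sqrt(len(diffs)))
    return mean, sd, t


# Hypothetical per-subject accuracies (%) for three subjects, method vs. baseline.
mean, sd, t = paired_improvement([90, 85, 95], [60, 58, 62])
print(f"{mean:.1f} ± {sd:.1f}, t = {t:.2f}")  # 30.0 ± 3.0, t = 17.32
```

The resulting |t| is then compared with the critical value of the t distribution with n − 1 degrees of freedom to decide whether p < 0.05.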

Figure 8.

Comparison of results under CNN and DAIDA when data from a single arm position were used for pretraining. ‘DAIDA all positions’ means that data from all arm positions were used for pretraining. The horizontal axis indicates the arm position of the test data. The characters x, y and z each denote one arm position (x, y, z ∈ {1, 2, 3}, with x, y and z mutually distinct). * denotes p < 0.05.
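The cross-position protocol described in this caption can be enumerated explicitly. The sketch below, with hypothetical names, lists every ordered assignment of the three arm positions to the roles x (pretraining, source), y (retraining, target) and z (the remaining position), together with the three test conditions that correspond to the columns of Figure 8. The ‘DAIDA all positions’ condition is not included.

```python
from itertools import permutations

ARM_POSITIONS = (1, 2, 3)  # the three arm positions, labelled as in the caption


def cross_position_evaluations(positions=ARM_POSITIONS):
    """Yield one record per (pretrain, retrain, test) combination."""
    for x, y, z in permutations(positions, 3):
        for column, test_pos in (("source", x), ("target", y), ("unseen", z)):
            yield {"pretrain": x, "retrain": y, "test": test_pos, "column": column}


runs = list(cross_position_evaluations())
print(len(runs))  # 18: 6 role assignments times 3 test conditions
```

Averaging the accuracies of all runs that share a `column` value gives one summary per column, which is how per-column results such as those in Figure 8 can be aggregated across role assignments.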

When the recognition model pretrained on the source arm position is tested on the target arm position, the recognition accuracy reaches up to 91.25% ± 6.59%. As the second column of Figure 8 shows, this is an improvement of 75.76% ± 2.30% over the result obtained by testing with the CNN directly (p < 0.05). When the whole dataset (all three arm positions) was used as the source domain, the best results were achieved when testing on the target domain data, with a recognition accuracy of 93.33% ± 6.86%. When the model was not tested on the target domain data, the recognition results declined significantly, with a highest accuracy of 54.60% ± 12.86%, although this was still significantly higher than the direct use of CNNs. These results meet expectations: retraining with only a small amount of data achieves performance close to that of the source domain model and enables recognition at different arm elevation positions [21].

When the recognition model is tested on an arm position that differs from both the source and target domains, the highest recognition accuracy is 41.99% ± 10.98%. As the third column of Figure 8 shows, this is an improvement of 29.08% ± 8.56% over the result obtained by testing with the CNN directly (p < 0.05). However, it also shows that the proposed domain adaptation model generalizes weakly to arm positions that were used for neither pretraining nor retraining.

A similar distribution of results was observed for the transhumeral amputees. Regardless of whether the source domain data comprised a single arm position or all arm positions, recognition accuracies greater than 90% were obtained only when testing on the target domain; otherwise, the recognition accuracy was less than 60%. This fits the intended use scenario of sEMG-based pattern recognition, i.e., motion recognition for transhumeral amputees controlling a myoelectric prosthesis at different arm positions. The modeling framework proposed in this study meets the needs of myoelectric prosthesis motion recognition for transhumeral amputees and mitigates the performance degradation caused by arm position changes.

Among previous studies addressing the limb position effect, Fougner et al. used sEMG and accelerometer sensors on 10 able-bodied subjects to reduce the average classification error from 18% to 5% [21]. Khushaba et al. proposed spectral moment features that reduced classification error rates by up to 10% on average across subjects and five different limb positions [22]. Geng et al. proposed a cascade classification scheme, consisting of a position classifier and multiple motion classifiers for different arm positions, that used multichannel EMG signals and tri-axial accelerometer mechanomyography signals to reduce the interpositional classification error of amputees by up to 10.8% [19]. Mukhopadhyay et al. used time-domain power spectral descriptors as the feature set to train a deep neural network classifier, obtaining 98.88% recognition accuracy across five limb positions [36]. The aim of our study was to address the decrease in recognition performance that transhumeral (TH) amputees experience when the arm position changes, and few studies have focused on this group. However, TH amputees likewise inevitably need to change arm position while using their prostheses to complete daily activities [39]. Our proposed algorithm requires neither additional sensor inputs nor handcrafted feature computation, and it uses only two repetitions of each motion for retraining to achieve stable recognition at different arm elevation positions, which is in line with the actual usage scenario of myoelectric prostheses.
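The calibration step mentioned in this paragraph, which uses two repetitions for retraining at the new arm position, amounts to splitting the target-position trials by repetition index. A minimal sketch follows, assuming each trial is tagged with a motion label and a zero-based repetition index and that the first two repetitions serve as calibration data. Both are assumptions, not details taken from the paper, and the motion names are hypothetical.

```python
from collections import Counter


def split_by_repetition(trials, n_calib=2):
    """Split trial indices into calibration (first n_calib repetitions) and held-out sets.

    trials: list of (motion_label, repetition_index) tuples for one arm position.
    """
    calib = [i for i, (_, rep) in enumerate(trials) if rep < n_calib]
    held_out = [i for i, (_, rep) in enumerate(trials) if rep >= n_calib]
    # Every motion must appear exactly n_calib times in the calibration set.
    counts = Counter(trials[i][0] for i in calib)
    motions = {motion for motion, _ in trials}
    if any(counts[m] != n_calib for m in motions):
        raise ValueError("some motion lacks the required calibration repetitions")
    return calib, held_out


# Hypothetical session: 3 motions x 5 repetitions recorded at the target arm position.
trials = [(motion, rep) for motion in ("hand_open", "wrist_flex", "elbow_ext") for rep in range(5)]
calib, held_out = split_by_repetition(trials)
print(len(calib), len(held_out))  # 6 9
```

Splitting at the trial level, before the signals are cut into analysis windows, keeps windows from one repetition from appearing in both the calibration and the evaluation data.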

The proposed DAIDA achieves accurate upper arm movement recognition while adapting to different arm elevation positions. First, it transfers knowledge from the source domain to the target domain, improving model performance on the target domain, which is useful in practical applications. Second, the deep domain adaptation network captures the features shared between the source and target domains, leading to better model generalization. DAIDA is therefore well suited to motion recognition at different arm elevation positions.

Although the DAIDA presented in this paper enables stable motion recognition at multiple limb positions in transhumeral amputees, we have covered only the most common limb positions in daily life rather than all degrees of freedom of the upper arm. In addition, only a limited number of movement patterns were recognized. Practical prosthesis use may also involve factors such as electrode displacement and muscle fatigue, which will be directions for future refinement.

5. Conclusions

The DAIDA framework proposed in this paper first applies a parallel feature module, which significantly improves motion recognition for transhumeral amputees. At the same time, this study and existing studies have shown that changes in arm position cause significant variability in the sEMG patterns of the upper arm, which adversely affects the performance of models trained without accounting for these domain differences. The proposed DAIDA uses a domain adversarial neural network to learn domain-invariant features directly from the source arm position signals in an unsupervised manner. By using a small amount of unlabeled sEMG data from the target arm position for adversarial training, it shows promising performance on the domain shift that arises when the arm position changes. Owing to its satisfactory classification performance and reduced training burden, the proposed DAIDA framework is a promising practical myoelectric interface technique. Further work will include more transhumeral amputees and assess the model in complex real-life scenarios to realize real-time usability.

Author Contributions

Conceptualization, S.L., W.S. and W.L.; methodology, S.L., W.S. and W.L.; software, S.L., W.S. and W.L.; validation, S.L., W.S., W.L. and H.Y.; formal analysis, S.L. and W.S.; investigation, S.L., W.S. and W.L.; resources, S.L., W.S. and W.L.; data curation, S.L., W.S. and W.L.; writing—original draft preparation, S.L., W.S. and W.L.; writing—review and editing, S.L., W.S. and W.L.; visualization, S.L., W.S. and W.L.; supervision, S.L., W.S. and W.L.; project administration, S.L., W.S. and W.L.; funding acquisition, S.L. and H.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Key Research and Development Program of China (2023YFC3604302 and 2020YFC2007902). The APC was funded by the National Key Research and Development Program of China. All authors are grateful for the help of the collaborators of our research center.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kuiken, T.A.; Li, G.; Lock, B.A.; Lipschutz, R.D.; Miller, L.A.; Stubblefield, K.A.; Englehart, K.B. Targeted Muscle Reinnervation for Real-Time Myoelectric Control of Multifunction Artificial Arms. JAMA 2009, 301, 619–628.

- Garbarini, F.; Bisio, A.; Biggio, M.; Pia, L.; Bove, M. Motor Sequence Learning and Intermanual Transfer with a Phantom Limb. Cortex 2018, 101, 181–191.

- Jarrasse, N.; Nicol, C.; Touillet, A.; Richer, F.; Martinet, N.; Paysant, J.; de Graaf, J.B. Classification of Phantom Finger, Hand, Wrist, and Elbow Voluntary Gestures in Transhumeral Amputees With sEMG. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 68–77.

- Zhao, L.; Liu, G.; Wang, H.; Huang, P.; Yu, W. Long-Term Stability Performance Evaluation of Pattern Recognition with sEMG. In Proceedings of the 2021 IEEE 5th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Xi’an, China, 15–17 October 2021; pp. 775–779.

- Tsinganos, P.; Cornelis, B.; Cornelis, J.; Jansen, B.; Skodras, A. Hilbert sEMG Data Scanning for Hand Gesture Recognition Based on Deep Learning. Neural Comput. Appl. 2021, 33, 2645–2666.

- Chen, Z.; Yang, J.; Xie, H. Surface-Electromyography-Based Gesture Recognition Using a Multistream Fusion Strategy. IEEE Access 2021, 9, 50583–50592.

- Peng, X.; Zhou, X.; Zhu, H.; Ke, Z.; Pan, C. MSFF-Net: Multi-Stream Feature Fusion Network for Surface Electromyography Gesture Recognition. PLoS ONE 2022, 17, e0276436.

- Yang, Z.; Jiang, D.; Sun, Y.; Tao, B.; Tong, X.; Jiang, G.; Xu, M.; Yun, J.; Liu, Y.; Chen, B.; et al. Dynamic Gesture Recognition Using Surface EMG Signals Based on Multi-Stream Residual Network. Front. Bioeng. Biotechnol. 2021, 9, 779353.

- Tigrini, A.; Al-Timemy, A.H.; Verdini, F.; Fioretti, S.; Morettini, M.; Burattini, L.; Mengarelli, A. Decoding Transient sEMG Data for Intent Motion Recognition in Transhumeral Amputees. Biomed. Signal Process. Control 2023, 85, 104936.

- Shi, P.; Zhang, X.; Li, W.; Yu, H. Improving the Robustness and Adaptability of sEMG-Based Pattern Recognition Using Deep Domain Adaptation. IEEE J. Biomed. Health Inform. 2022, 26, 5450–5460.

- Pulliam, C.L.; Lambrecht, J.M.; Kirsch, R.F. EMG-Based Neural Network Control of Transhumeral Prostheses. J. Rehabil. Res. Dev. 2011, 48, 739–754.

- Biddiss, E.; Beaton, D.; Chau, T. Consumer Design Priorities for Upper Limb Prosthetics. Disabil. Rehabil. Assist. Technol. 2007, 2, 346–357.

- Jarrassé, N.; de Montalivet, E.; Richer, F.; Nicol, C.; Touillet, A.; Martinet, N.; Paysant, J.; de Graaf, J.B. Phantom-Mobility-Based Prosthesis Control in Transhumeral Amputees Without Surgical Reinnervation: A Preliminary Study. Front. Bioeng. Biotechnol. 2018, 6, 164.

- Spatial Correlation of High Density EMG Signals Provides Features Robust to Electrode Number and Shift in Pattern Recognition for Myocontrol. IEEE Xplore. Available online: https://ieeexplore.ieee.org/document/6949119 (accessed on 18 March 2024).

- Merletti, R.; Botter, A.; Troiano, A.; Merlo, E.; Minetto, M.A. Technology and Instrumentation for Detection and Conditioning of the Surface Electromyographic Signal: State of the Art. Clin. Biomech. 2009, 24, 122–134.

- A Hybrid Non-Invasive Method for the Classification of Amputee’s Hand and Wrist Movements. SpringerLink. Available online: https://link.springer.com/chapter/10.1007/978-981-10-4505-9_34 (accessed on 21 October 2023).

- Barron, O.; Raison, M.; Gaudet, G.; Achiche, S. Recurrent Neural Network for Electromyographic Gesture Recognition in Transhumeral Amputees. Appl. Soft Comput. 2020, 96, 106616.

- Khushaba, R.N.; Takruri, M.; Miro, J.V.; Kodagoda, S. Towards Limb Position Invariant Myoelectric Pattern Recognition Using Time-Dependent Spectral Features. Neural Netw. 2014, 55, 42–58.

- Geng, Y.; Zhou, P.; Li, G. Toward Attenuating the Impact of Arm Positions on Electromyography Pattern-Recognition Based Motion Classification in Transradial Amputees. J. Neuroeng. Rehabil. 2012, 9, 74.

- Scheme, E.; Fougner, A.; Stavdahl, Ø.; Chan, A.D.C.; Englehart, K. Examining the Adverse Effects of Limb Position on Pattern Recognition Based Myoelectric Control. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 6337–6340.

- Fougner, A.; Scheme, E.; Chan, A.D.C.; Englehart, K.; Stavdahl, Ø. Resolving the Limb Position Effect in Myoelectric Pattern Recognition. IEEE Trans. Neural Syst. Rehabil. Eng. 2011, 19, 644–651.

- Khushaba, R.N.; Shi, L.; Kodagoda, S. Time-Dependent Spectral Features for Limb Position Invariant Myoelectric Pattern Recognition. In Proceedings of the 2012 International Symposium on Communications and Information Technologies (ISCIT), Gold Coast, Australia, 2–5 October 2012; pp. 1015–1020.

- Jarrah, Y.A.; Asogbon, M.G.; Samuel, O.W.; Nsugbe, E.; Chen, S.; Li, G. Performance Evaluation of HD-sEMG Electrode Configurations on Myoelectric Based Pattern Recognition System: High-Level Amputees. In Proceedings of the 2022 IEEE International Workshop on Metrology for Industry 4.0 & IoT (MetroInd4.0&IoT), Trento, Italy, 7–9 June 2022; pp. 75–80.

- Ogiri, Y.; Yamanoi, Y.; Nishino, W.; Kato, R.; Takagi, T.; Yokoi, H. Development of an Upper-Limb Neuroprosthesis to Voluntarily Control Elbow and Hand. Adv. Robot. 2018, 32, 879–886.

- A Subject-Transfer Framework Based on Single-Trial EMG Analysis Using Convolutional Neural Networks. Semantic Scholar. Available online: https://www.semanticscholar.org/paper/A-Subject-Transfer-Framework-Based-on-Single-Trial-Kim-Guan/f9f18166304171f862138362c7ed7fd714472a36 (accessed on 16 March 2024).

- Li, W.; Shi, P.; Yu, H. Gesture Recognition Using Surface Electromyography and Deep Learning for Prostheses Hand: State-of-the-Art, Challenges, and Future. Front. Neurosci. 2021, 15, 621885.

- Li, S.; Zhang, Y.; Tang, Y.; Li, W.; Sun, W.; Yu, H. Real-Time sEMG Pattern Recognition of Multiple-Mode Movements for Artificial Limbs Based on CNN-RNN Algorithm. Electronics 2023, 12, 2444.

- Asghari Oskoei, M.; Hu, H. Myoelectric Control Systems—A Survey. Biomed. Signal Process. Control 2007, 2, 275–294.

- Qi, S.; Wu, X.; Chen, W.-H.; Liu, J.; Zhang, J.; Wang, J. sEMG-Based Recognition of Composite Motion with Convolutional Neural Network. Sens. Actuators A Phys. 2020, 311, 112046.

- Smith, L.H.; Hargrove, L.J.; Lock, B.A.; Kuiken, T.A. Determining the Optimal Window Length for Pattern Recognition-Based Myoelectric Control: Balancing the Competing Effects of Classification Error and Controller Delay. IEEE Trans. Neural Syst. Rehabil. Eng. 2011, 19, 186–192.

- Phantom Movements from Physiologically Inappropriate Muscles: A Case Study with a High Transhumeral Amputee. IEEE Xplore. Available online: https://ieeexplore.ieee.org/document/7319144 (accessed on 18 October 2023).

- Gaudet, G.; Raison, M.; Achiche, S. Classification of Upper Limb Phantom Movements in Transhumeral Amputees Using Electromyographic and Kinematic Features. Eng. Appl. Artif. Intell. 2018, 68, 153–164.

- Asogbon, M.G.; Samuel, O.W.; Ejay, E.; Jarrah, Y.A.; Chen, S.; Li, G. HD-sEMG Signal Denoising Method for Improved Classification Performance in Transhumeral Amputees Prosthesis Control. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Online, 1–5 November 2021; pp. 857–861.

- Xu, Y.; Zhang, D.; Wang, Y.; Feng, J.; Xu, W. Two Ways to Improve Myoelectric Control for a Transhumeral Amputee after Targeted Muscle Reinnervation: A Case Study. J. Neuroeng. Rehabil. 2018, 15, 37.

- Position-Independent Decoding of Movement Intention for Proportional Myoelectric Interfaces. IEEE Xplore. Available online: https://ieeexplore.ieee.org/document/7275160 (accessed on 8 October 2023).

- Mukhopadhyay, A.K.; Samui, S. An Experimental Study on Upper Limb Position Invariant EMG Signal Classification Based on Deep Neural Network. Biomed. Signal Process. Control 2020, 55, 101669.

- Boschmann, A.; Platzner, M. Reducing the Limb Position Effect in Pattern Recognition Based Myoelectric Control Using a High Density Electrode Array. In Proceedings of the 2013 ISSNIP Biosignals and Biorobotics Conference: Biosignals and Robotics for Better and Safer Living (BRC), Rio de Janeiro, Brazil, 18–20 February 2013; pp. 1–5.

- Betthauser, J.L.; Hunt, C.L.; Osborn, L.E.; Kaliki, R.R.; Thakor, N.V. Limb-Position Robust Classification of Myoelectric Signals for Prosthesis Control Using Sparse Representations. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 6373–6376.

- Muscle Synergies and Isometric Torque Production: Influence of Supination and Pronation Level on Elbow Flexion. PubMed. Available online: https://pubmed.ncbi.nlm.nih.gov/8229181/ (accessed on 8 October 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).