Self-Attention-Enhanced Deep Learning Framework with Multi-Scale Feature Fusion for Potato Disease Detection in Complex Multi-Leaf Field Conditions

Abstract

1. Introduction

- (1)

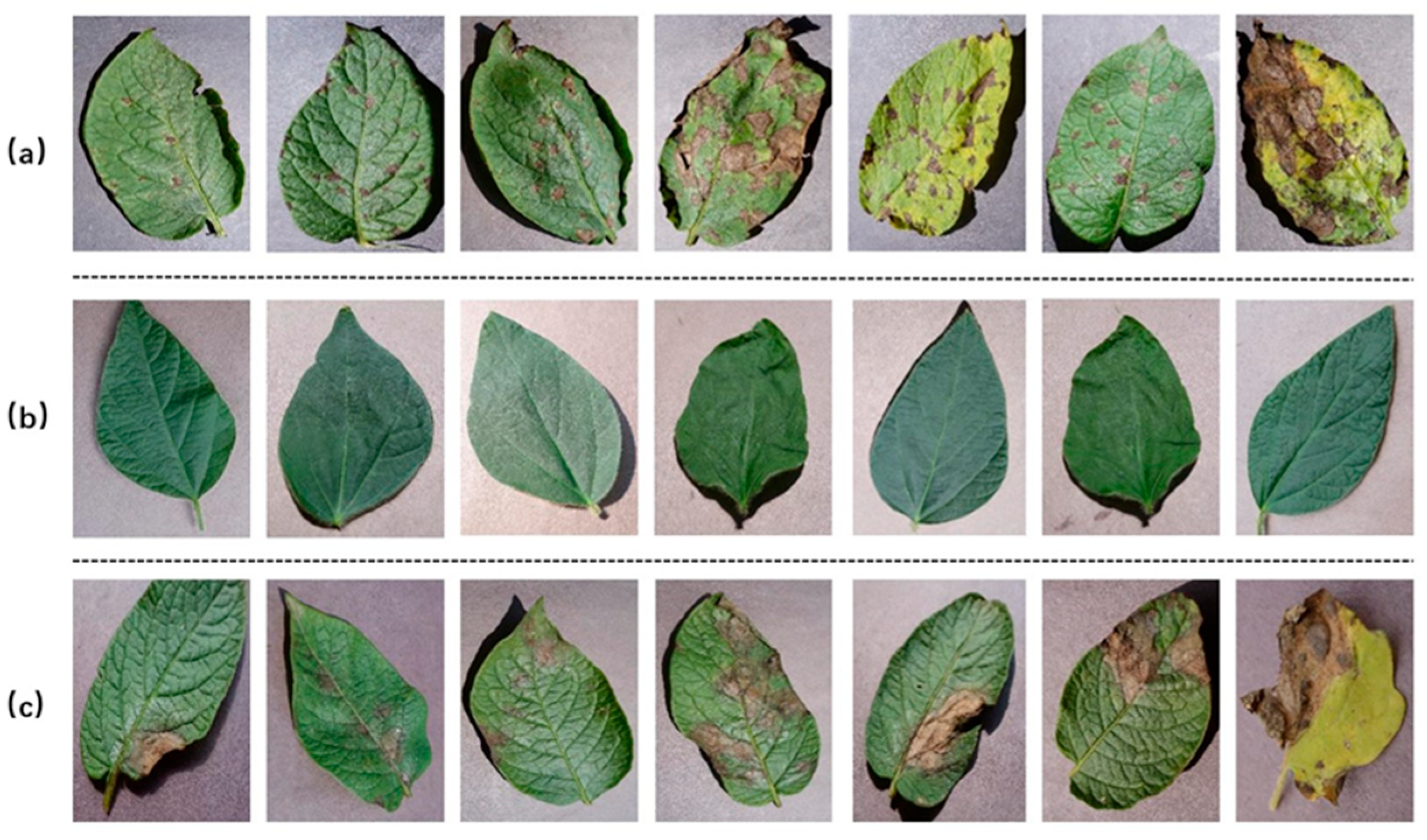

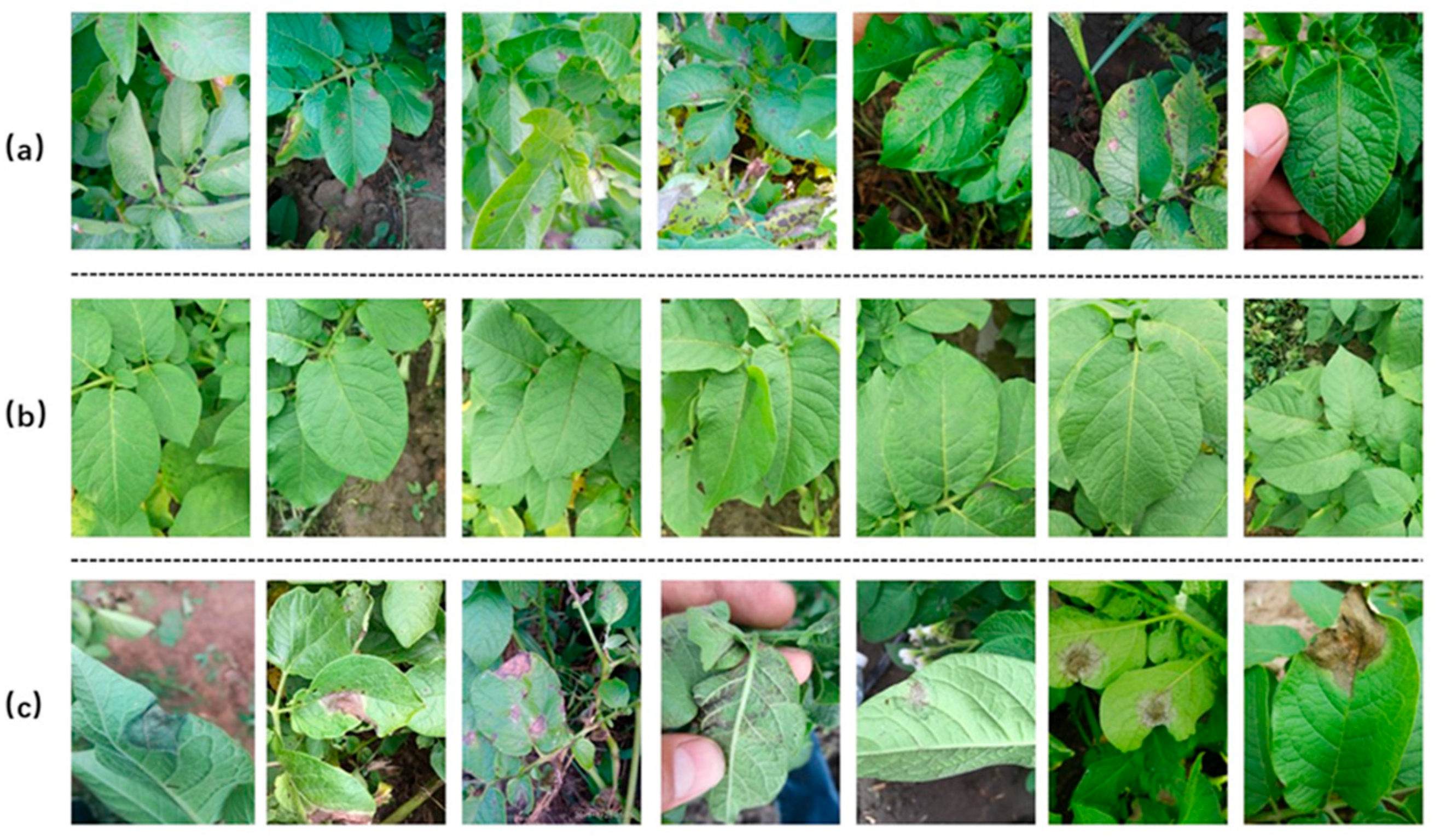

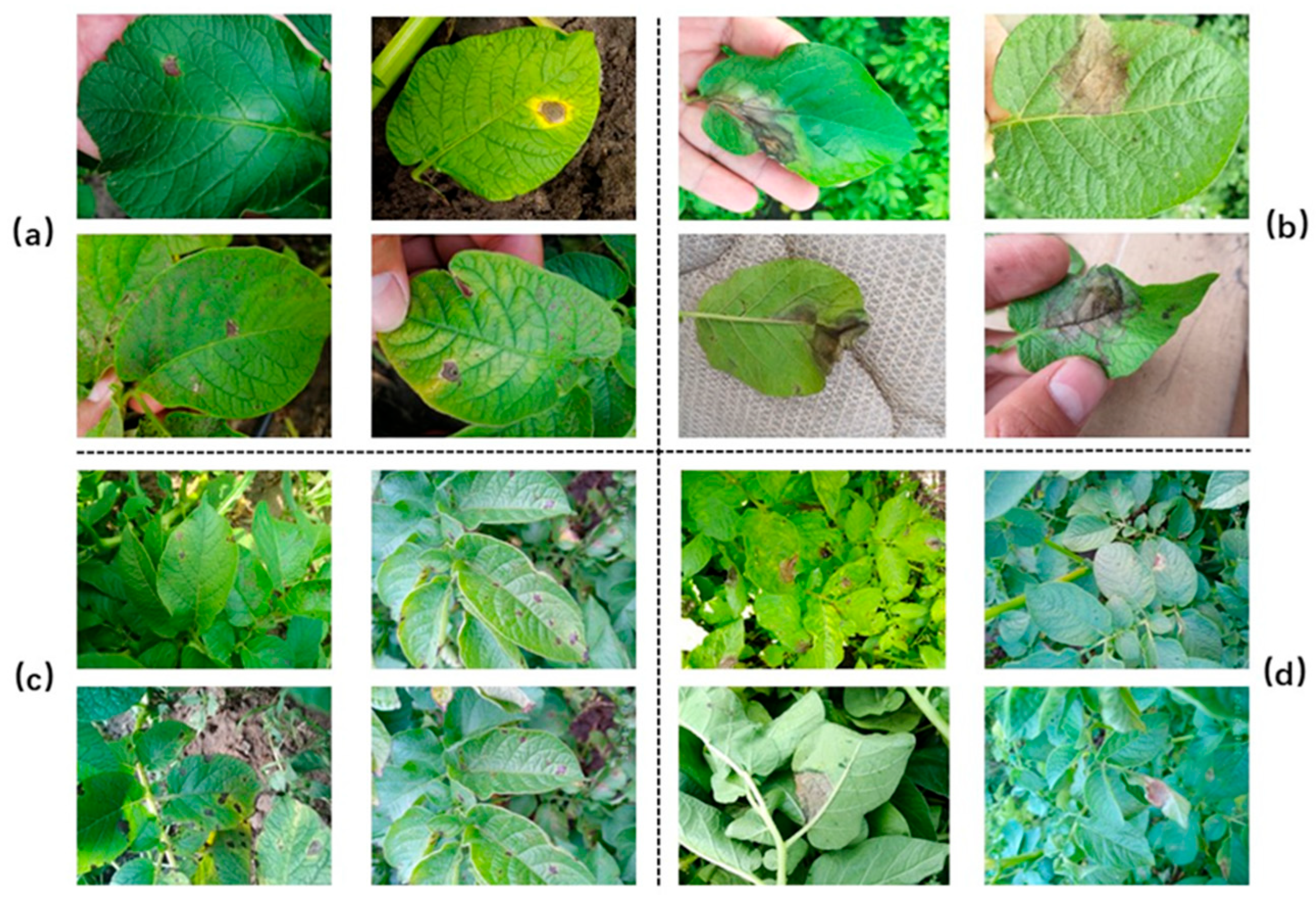

- Data collection and construction: We collected and photographed single leaves and multiple leaves under real complex backgrounds on site, and classified the collected data according to the real background. In addition to the conventional classification of early blight, late blight, and healthy leaves, we further mixed single leaves and multiple leaves under complex backgrounds to construct a mixed dataset. Through comparative analysis with the basic model, we verified the scientificity and effectiveness of the dataset, laying a solid foundation for subsequent research.

- (2)

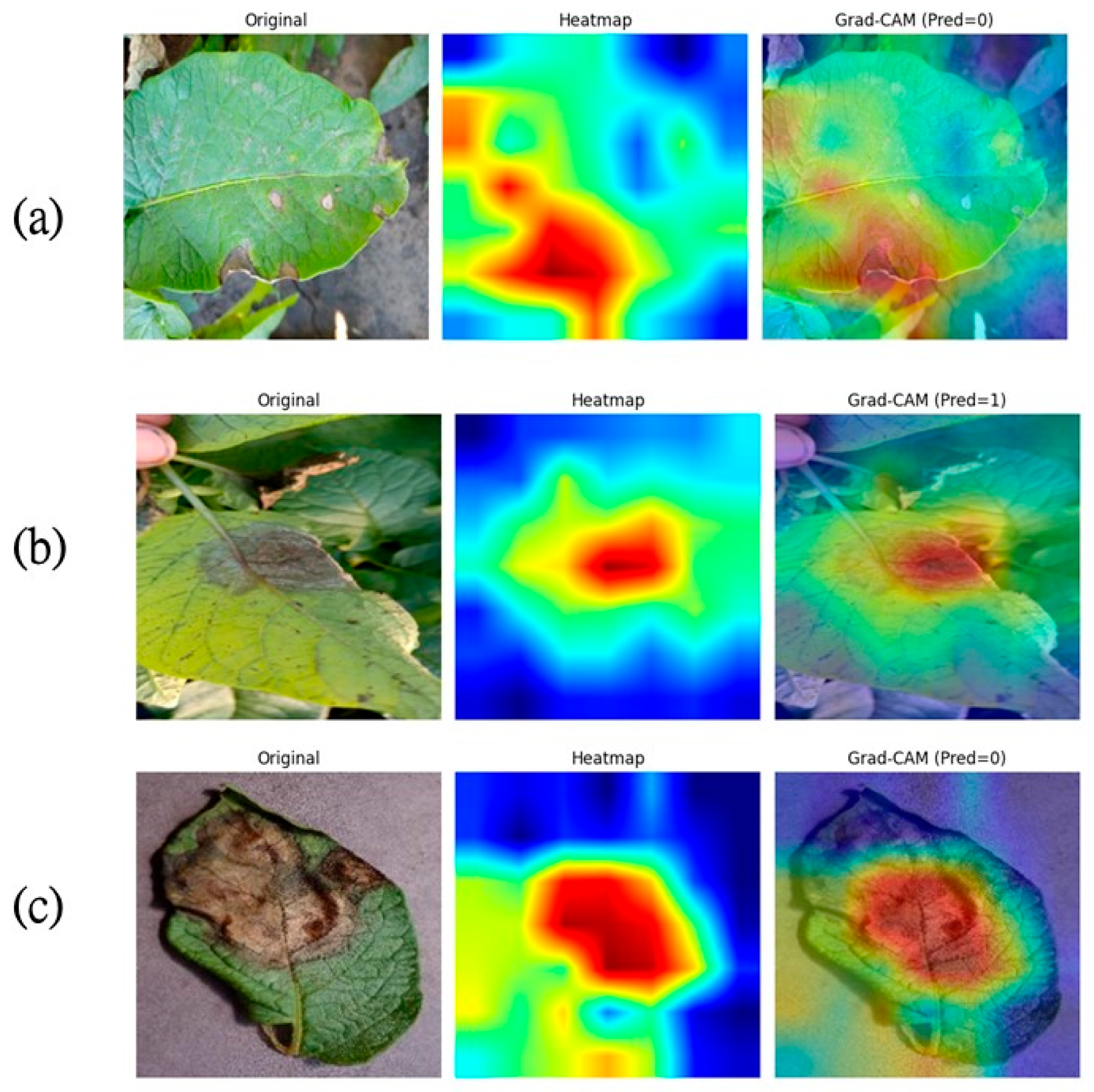

- Classification model design and optimization: Under complex backgrounds and multi-leaf conditions, transfer learning was used to load a pre-trained model, and a self-attention mechanism along with a feature-fusion module was introduced into the ResNet18 architecture, resulting in the proposed ResNet18-SAWF model. This model is capable of accurately extracting the color, texture, and specific spot features of the leaves, thereby significantly improving recognition performance. Relative to the ResNet18 baseline, accuracy is elevated. Moreover, substantial accuracy gains are achieved against existing models across both complex and simple background scenarios.
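Self-attention lets each spatial position of a feature map aggregate information from every other position. The layer listing for ResNet18-SAWF (two convolutions projecting to 32 channels, a 196 × 196 softmax over the 14 × 14 map, then a 256-channel convolution) is consistent with a SAGAN-style non-local attention block, so the sketch below assumes that form. It is a pure-Python illustration on a tiny map, not the authors' implementation; the 1 × 1 kernel size and the names `w_q`, `w_k`, `w_v`, and `gamma` are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows = spatial positions (H*W), columns = channels


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax_rows(m: Matrix) -> Matrix:
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix, gamma: float) -> Matrix:
    """Assumed SAGAN-style attention over N = H*W positions.

    x        : N x C flattened feature map
    w_q, w_k : C x C' query/key projections (assumed 1x1 convs, e.g. 256 -> 32)
    w_v      : C x C value projection (assumed 1x1 conv keeping C channels)
    gamma    : residual scale; gamma = 0 returns x unchanged
    """
    q = matmul(x, w_q)                    # N x C'
    k = matmul(x, w_k)                    # N x C'
    v = matmul(x, w_v)                    # N x C
    k_t = [list(col) for col in zip(*k)]  # C' x N
    attn = softmax_rows(matmul(q, k_t))   # N x N; row i weights every position for position i
    out = matmul(attn, v)                 # N x C; attention-weighted mix of values
    return [[gamma * o + xi for o, xi in zip(o_row, x_row)]
            for o_row, x_row in zip(out, x)]


if __name__ == "__main__":
    # Toy 2 x 2 map (N = 4 positions) with C = 2 channels and C' = 1.
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    y = self_attention(x, [[1.0], [0.0]], [[1.0], [0.0]], identity, gamma=1.0)
    print(len(y), len(y[0]))  # 4 2
```

Setting `gamma` to 0 returns the input unchanged; SAGAN initializes this scale to zero so the block starts as an identity mapping and gradually learns how much attention output to mix in. For the 14 × 14 stage, N = 196, which matches the 196 × 196 softmax in the listing.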

2. Data Sources and Processing

2.1. Sources of Research Data

2.2. Data Preprocessing

3. Methods

3.1. ResNet18 Network

3.2. The Overall Architecture of the ResNet18-SAWF Model

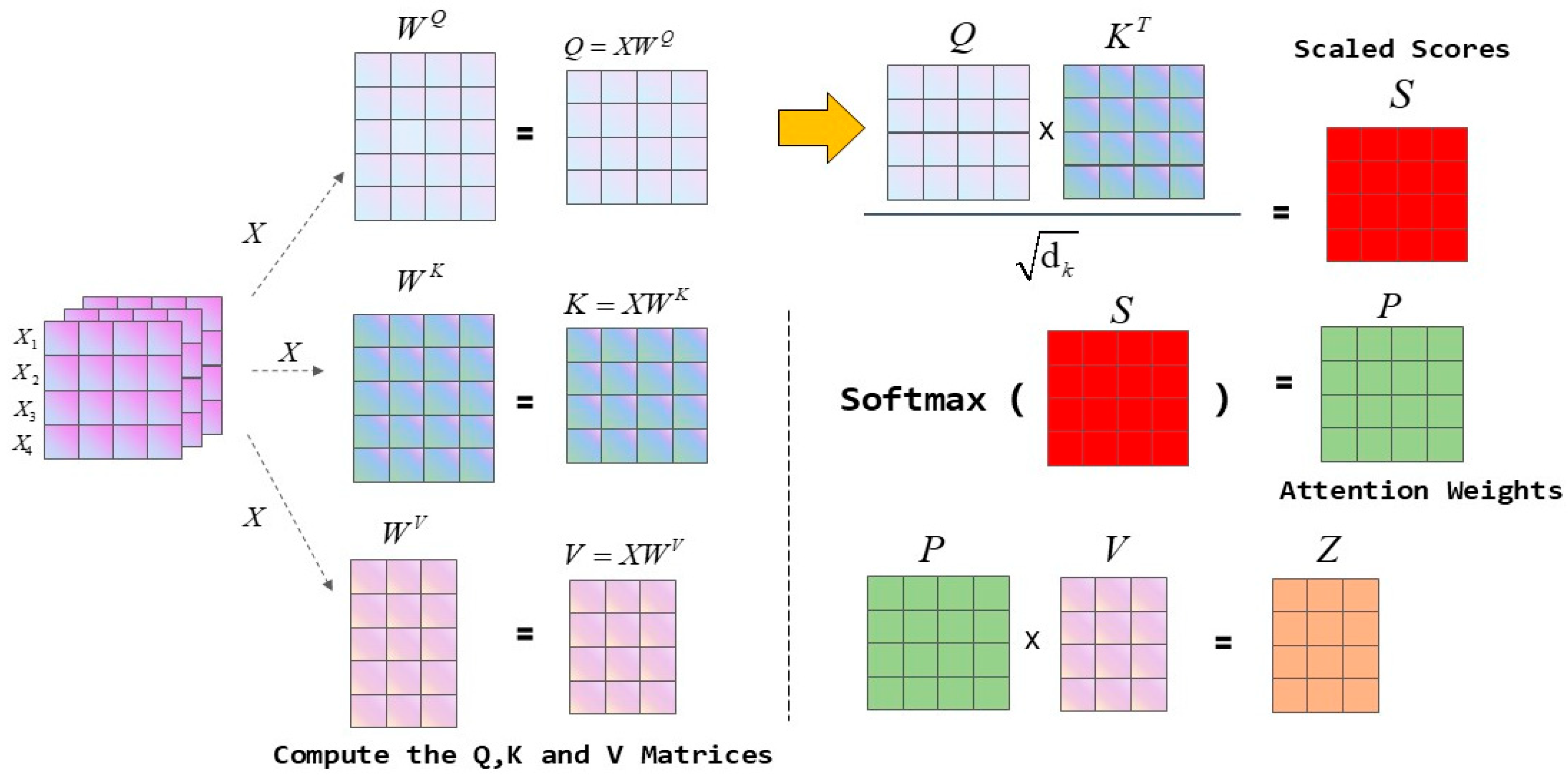

3.3. Self-Attention Mechanism Module

3.4. Multi-Scale Feature-Fusion Module

3.5. Evaluation Metrics

4. Results and Discussion

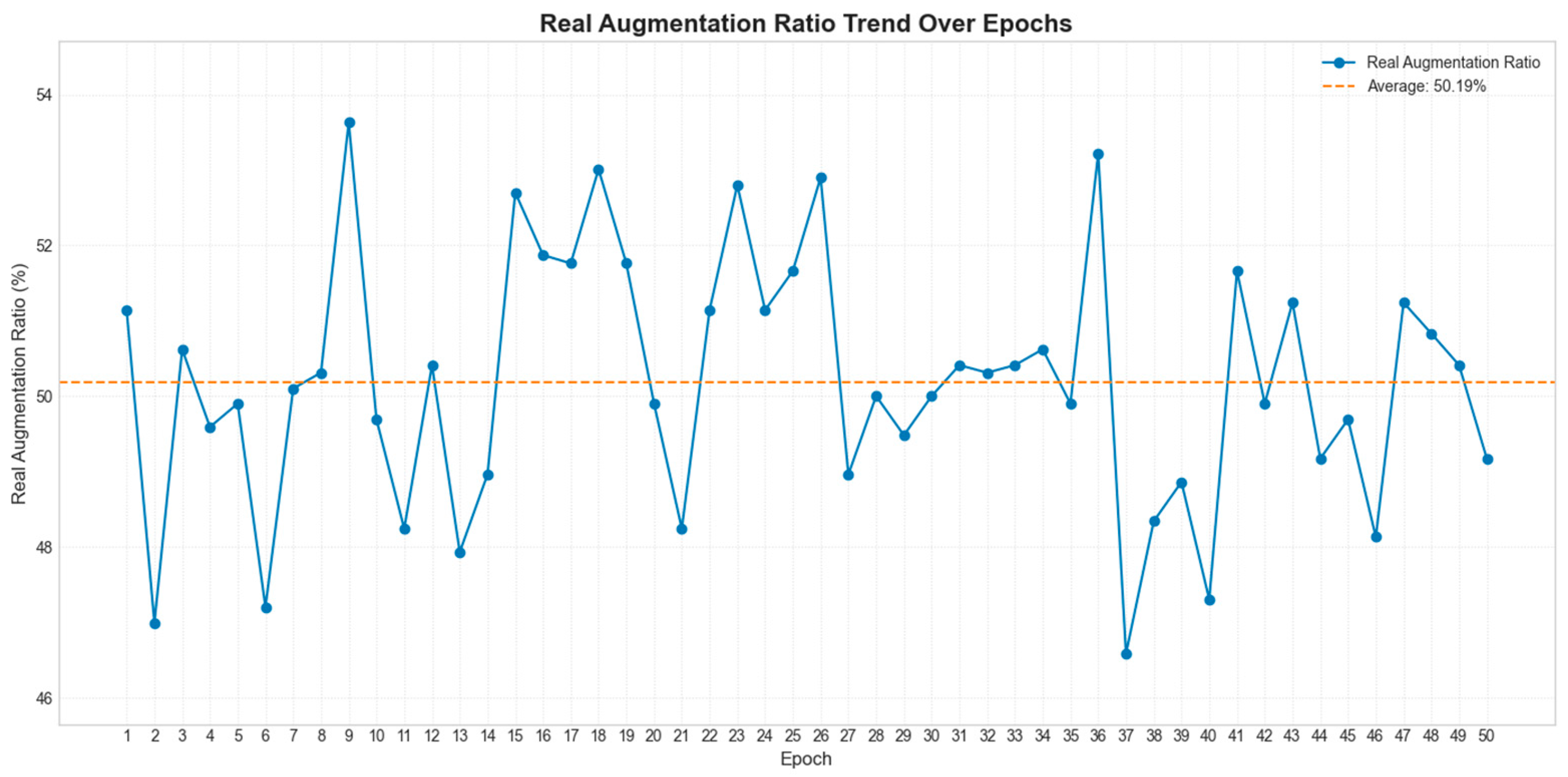

4.1. Experimental Setup

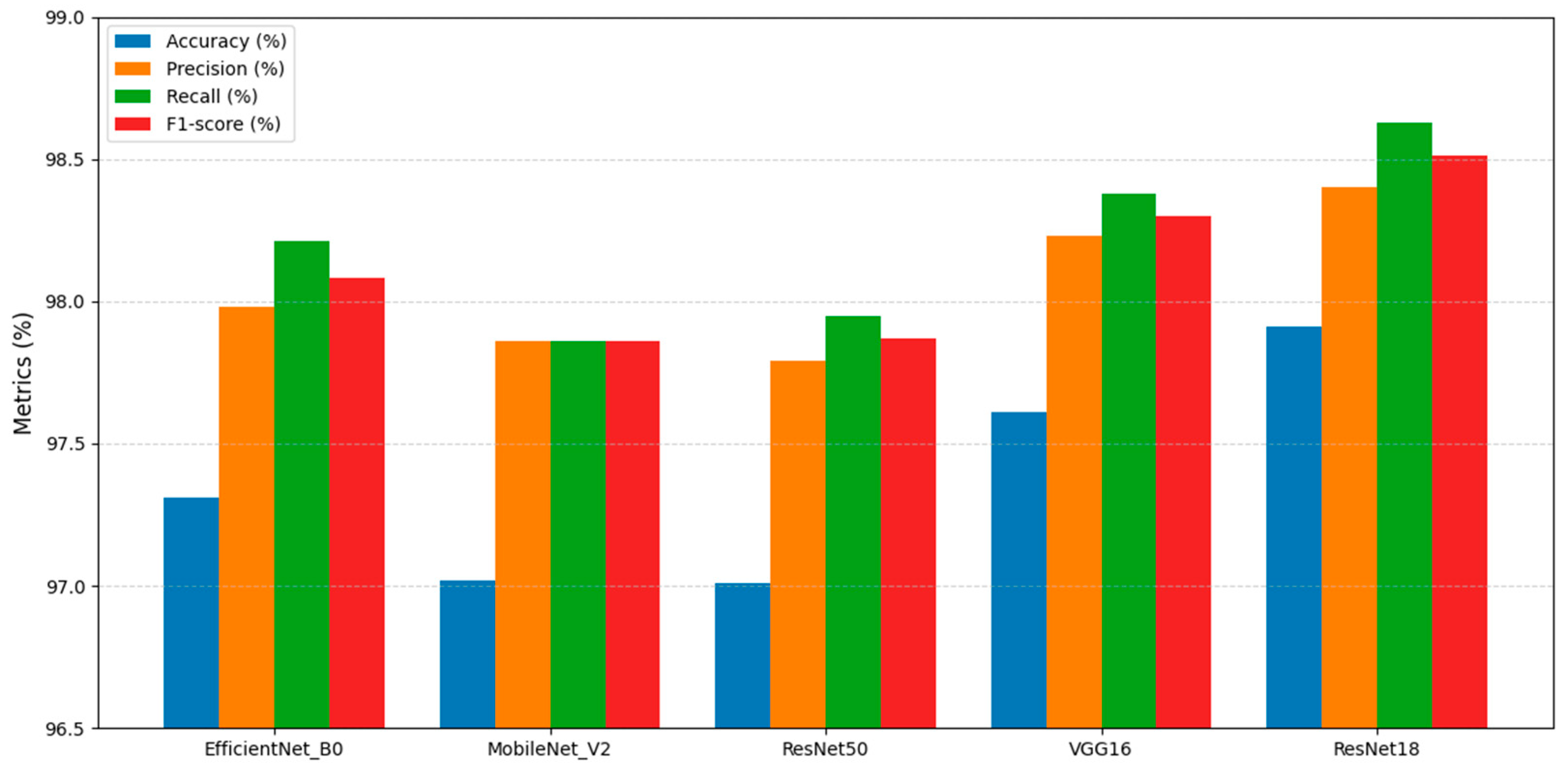

4.2. Base Models Comparison Experiment

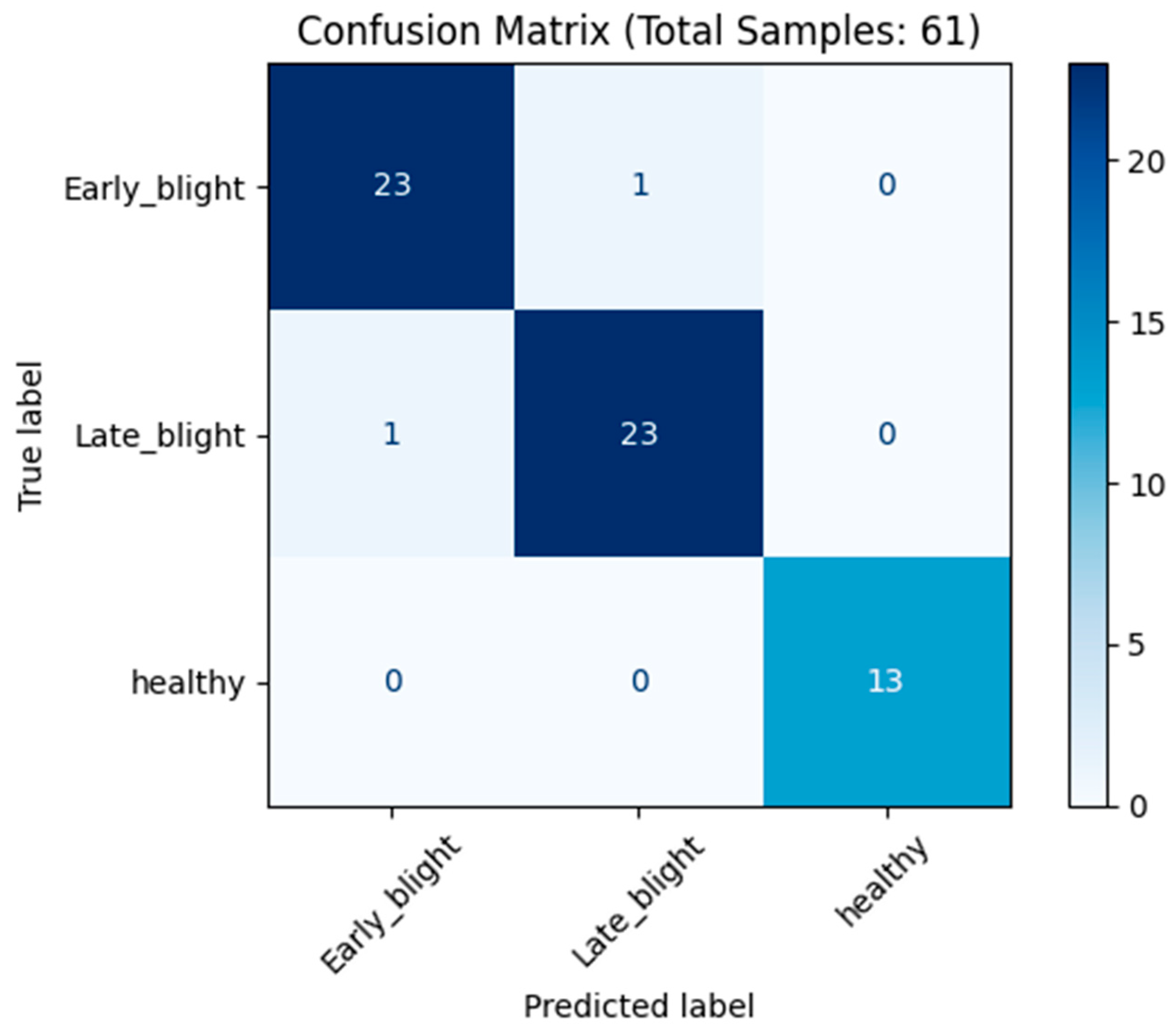

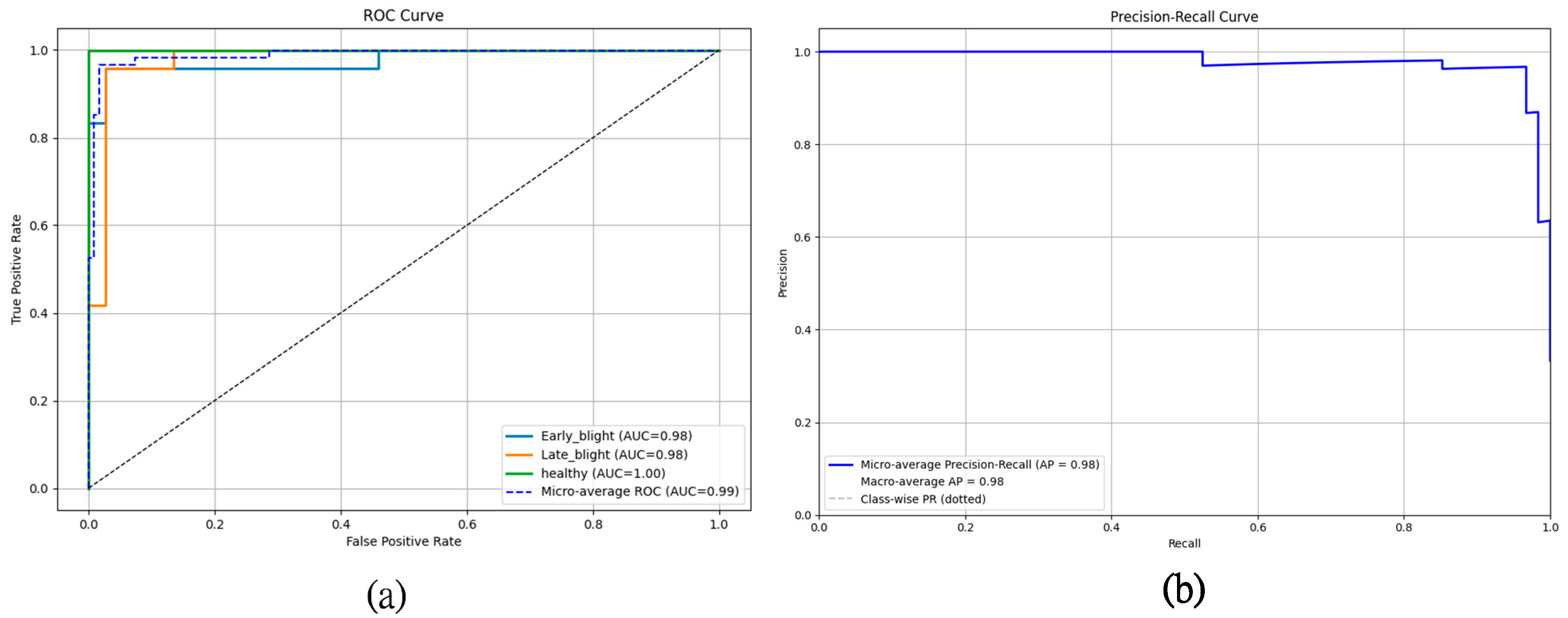

4.3. Ablation Experiments Under Complex Backgrounds

4.4. Comparison with Existing Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Su, W.; Wang, J. Potato and food security in China. Am. J. Potato Res. 2019, 96, 100–101.

2. Devaux, A.; Goffart, J.P.; Kromann, P.; Andrade-Piedra, J.; Polar, V.; Hareau, G. The potato of the future: Opportunities and challenges in sustainable agri-food systems. Potato Res. 2021, 64, 681–720.

3. Zeng, S.Q. The Ministry of Agriculture held an international seminar on potato staple food products and industrial development. Agric. Prod. Mark. Wkly. 2015, 31, 19.

4. Gold, K.M.; Townsend, P.A.; Chlus, A.; Herrmann, I.; Couture, J.J.; Larson, E.R.; Gevens, A.J. Hyperspectral measurements enable pre-symptomatic detection and differentiation of contrasting physiological effects of late blight and early blight in potato. Remote Sens. 2020, 12, 286.

5. Majeed, A.; Muhammad, Z.; Ullah, Z.; Ullah, R.; Ahmad, H. Late blight of potato (Phytophthora infestans) I: Fungicides application and associated challenges. Turk. J. Agric.-Food Sci. Technol. 2017, 5, 261–266.

6. Wang, S.; Xu, D.; Liang, H.; Bai, Y.; Li, X.; Zhou, J.; Su, C.; Wei, W. Advances in deep learning applications for plant disease and pest detection: A review. Remote Sens. 2025, 17, 698.

7. Franceschini, M.H.D.; Bartholomeus, H.; Van Apeldoorn, D.F.; Suomalainen, J.; Kooistra, L. Feasibility of unmanned aerial vehicle optical imagery for early detection and severity assessment of late blight in potato. Remote Sens. 2019, 11, 224.

8. Shin, J.; Mahmud, M.S.; Rehman, T.U.; Ravichandran, P.; Heung, B.; Chang, Y.K. Trends and prospect of machine vision technology for stresses and diseases detection in precision agriculture. AgriEngineering 2022, 5, 20–39.

9. Edan, Y.; Adamides, G.; Oberti, R. Agriculture automation. In Springer Handbook of Automation; Springer: Cham, Switzerland, 2023; pp. 1055–1078.

10. Nath, P.C.; Mishra, A.K.; Sharma, R.; Bhunia, B.; Mishra, B.; Tiwari, A.; Nayak, P.K.; Sharma, M.; Bhuyan, T.; Kaushal, S. Recent advances in artificial intelligence towards the sustainable future of agri-food industry. Food Chem. 2024, 447, 138945.

11. Tyagi, A.K.; Tiwari, S. The future of artificial intelligence in blockchain applications. In Machine Learning Algorithms Using Scikit and Tensorflow Environments; IGI Global Scientific Publishing: Hershey, PA, USA, 2024; pp. 346–373.

12. Hernandez, D.; Pasha, L.; Yusuf, D.A.; Nurfaizi, R.; Julianingsih, D. The role of artificial intelligence in sustainable agriculture and waste management: Towards a green future. Int. Trans. Artif. Intell. 2024, 2, 150–157.

13. Afzaal, H.; Farooque, A.A.; Schumann, A.W.; Hussain, N.; McKenzie-Gopsill, A.; Esau, T.; Abbas, F.; Acharya, B. Detection of a potato disease (early blight) using artificial intelligence. Remote Sens. 2021, 13, 411.

14. Dai, G.; Hu, L.; Fan, J.; Yan, S.; Wang, X.; Man, R.; Liu, T. Based on GLCM feature extraction and voting classification model Potato Early and Late Disease Detection of Type. Jiangsu Agric. Sci. 2023, 51, 185–192.

15. Sangar, G.; Rajasekar, V. Potato Leaf Disease Classification using Pre Trained Deep Learning Techniques-A Comparative Analysis. In Proceedings of the 2024 5th International Conference for Emerging Technology (INCET), Belgaum, India, 24–26 May 2024; pp. 1–6.

16. Mahmood ur Rehman, M.; Liu, J.; Nijabat, A.; Faheem, M.; Wang, W.; Zhao, S. Leveraging convolutional neural networks for disease detection in vegetables: A comprehensive review. Agronomy 2024, 14, 2231.

17. Arshad, F.; Mateen, M.; Hayat, S.; Wardah, M.; Al-Huda, Z.; Gu, Y.H.; Al-antari, M.A. PLDPNet: End-to-end hybrid deep learning framework for potato leaf disease prediction. Alex. Eng. J. 2023, 78, 406–418.

18. Munir, G.; Ansari, M.A.; Zai, S.; Bhacho, I. Deep Learning Based Multi Crop Disease Detection System. Int. J. Innov. Sci. Technol. 2024, 6, 1009–1020.

19. Panchal, A.V.; Patel, S.C.; Bagyalakshmi, K.; Kumar, P.; Khan, I.R.; Soni, M. Image-based plant diseases detection using deep learning. Mater. Today Proc. 2023, 80, 3500–3506.

20. Negi, A.S.; Rawat, A.; Mehra, P. Precision Agriculture: Enhancing Potato Disease Classification using Deep Learning. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kamand, India, 24–28 June 2024; pp. 1–6.

21. Sarfarazi, S.; Zefrehi, H.G.; Toygar, Ö. Potato leaf disease classification using fusion of multiple color spaces with weighted majority voting on deep learning architectures. Multimed. Tools Appl. 2024, 84, 27281–27310.

22. Upadhyay, S.; Jain, J.; Prasad, R. Early blight and late blight disease detection in potato using Efficientnetb0. Int. J. Exp. Res. Rev. 2024, 38, 15–25.

23. Liu, J.W.; Liu, J.W.; Luo, X.L. Research progress in attention mechanism in deep learning. Chin. J. Eng. 2021, 43, 1499–1511.

24. Yang, X. An overview of the attention mechanisms in computer vision. J. Phys. Conf. Ser. 2020, 1693, 012173.

25. Gupta, J.; Pathak, S.; Kumar, G. Deep learning (CNN) and transfer learning: A review. J. Phys. Conf. Ser. 2022, 2273, 012029.

26. Feng, J.; Hou, B.; Yu, C.; Yang, H.; Wang, C.; Shi, X.; Hu, Y. Research and validation of potato late blight detection method based on deep learning. Agronomy 2023, 13, 1659.

27. Natesan, B.; Singaravelan, A.; Hsu, J.-L.; Lin, Y.-H.; Lei, B.; Liu, C.-M. Channel–Spatial segmentation network for classifying leaf diseases. Agriculture 2022, 12, 1886.

28. Li, J.; Wu, J.; Liu, R.; Shu, G.; Liu, X.; Zhu, K.; Wang, C.; Zhu, T. Potato late blight leaf detection in complex environments. Sci. Rep. 2024, 14, 31046.

29. Zhang, C.; Wang, S.; Wang, C.; Wang, H.; Du, Y.; Zong, Z. Research on a Potato Leaf Disease Diagnosis System Based on Deep Learning. Agriculture 2025, 15, 424.

30. Chen, J.; Chen, J.; Zhang, D.; Sun, Y.; Nanehkaran, Y.A. Using deep transfer learning for image-based plant disease identification. Comput. Electron. Agric. 2020, 173, 105393.

31. Shafik, W.; Tufail, A.; De Silva Liyanage, C.; Apong, R.A.A.H.M. Using transfer learning-based plant disease classification and detection for sustainable agriculture. BMC Plant Biol. 2024, 24, 136.

32. Zhao, X.; Li, K.; Li, Y.; Ma, J.; Zhang, L. Identification method of vegetable diseases based on transfer learning and attention mechanism. Comput. Electron. Agric. 2022, 193, 106703.

33. Zhang, Y.; Sun, Q.; Chen, J.; Zhou, H. Deep learning-based classification and application test of multiple crop leaf diseases using transfer learning and the attention mechanism. Computing 2024, 106, 3063–3084.

34. Nazir, T.; Iqbal, M.M.; Jabbar, S.; Hussain, A.; Albathan, M. EfficientPNet—An optimized and efficient deep learning approach for classifying disease of potato plant leaves. Agriculture 2023, 13, 841.

35. Lespinats, S.; Colange, B.; Dutykh, D. Nonlinear Dimensionality Reduction Techniques; Springer International Publishing: Berlin, Germany, 2022.

36. Barman, U.; Sahu, D.; Barman, G.G.; Das, J. Comparative assessment of deep learning to detect the leaf diseases of potato based on data augmentation. In Proceedings of the 2020 International Conference on Computational Performance Evaluation (ComPE), Shillong, India, 2–4 July 2020; pp. 682–687.

37. Sinamenye, J.H.; Chatterjee, A.; Shrestha, R. Potato plant disease detection: Leveraging hybrid deep learning models. BMC Plant Biol. 2025, 25, 647.

38. Chen, W.; Chen, J.; Zeb, A.; Yang, S.; Zhang, D. Mobile convolution neural network for the recognition of potato leaf disease images. Multimed. Tools Appl. 2022, 81, 20797–20816.

| Leaf Type | Ground Dataset | Public Dataset | Multi-Leaf Dataset |
|---|---|---|---|
| Early blight | 609 | 1000 | 124 |
| Healthy | 60 | 152 | 56 |
| Late blight | 1000 | 1000 | 122 |
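The dataset table shows a pronounced class imbalance, especially for healthy leaves in the ground dataset. The short script below only redoes arithmetic on the counts in the table (the dictionary layout and the function names `class_shares` and `imbalance_ratio` are illustrative): it totals each dataset and reports each class's share and the largest-to-smallest class ratio.

```python
# Per-class image counts copied from the dataset table.
COUNTS = {
    "Ground Dataset": {"Early blight": 609, "Healthy": 60, "Late blight": 1000},
    "Public Dataset": {"Early blight": 1000, "Healthy": 152, "Late blight": 1000},
    "Multi-Leaf Dataset": {"Early blight": 124, "Healthy": 56, "Late blight": 122},
}


def class_shares(per_class):
    """Return (total images, {class: percentage of the total})."""
    total = sum(per_class.values())
    return total, {name: 100.0 * n / total for name, n in per_class.items()}


def imbalance_ratio(per_class):
    """Largest class size divided by smallest class size."""
    return max(per_class.values()) / min(per_class.values())


for dataset, per_class in COUNTS.items():
    total, shares = class_shares(per_class)
    pretty = ", ".join(f"{name} {pct:.1f}%" for name, pct in shares.items())
    print(f"{dataset}: {total} images ({pretty}); max/min = {imbalance_ratio(per_class):.1f}")
```

Running it shows, for example, that healthy leaves make up only about 3.6% of the 1669 ground-dataset images (a largest-to-smallest class ratio of about 16.7), versus about 18.5% of the 302 multi-leaf images.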

| Sr No. | Layer (Type) | Output Shape |
|---|---|---|
| 1 | Conv2d-1 | [−1, 64, 112, 112] |
| 2 | BatchNorm2d-2 | [−1, 64, 112, 112] |
| 3 | ReLU-3 | [−1, 64, 112, 112] |
| 4 | MaxPool2d-4 | [−1, 64, 56, 56] |
| 5 | Conv2d-5 | [−1, 64, 56, 56] |
| 6 | BatchNorm2d-6 | [−1, 64, 56, 56] |
| 7 | ReLU-7 | [−1, 64, 56, 56] |
| 8 | Conv2d-8 | [−1, 64, 56, 56] |
| 9 | BatchNorm2d-9 | [−1, 64, 56, 56] |
| 10 | ReLU-10 | [−1, 64, 56, 56] |
| 11 | BasicBlock-11 | [−1, 64, 56, 56] |
| 12 | Conv2d-12 | [−1, 64, 56, 56] |
| 13 | BatchNorm2d-13 | [−1, 64, 56, 56] |
| 14 | ReLU-14 | [−1, 64, 56, 56] |
| 15 | Conv2d-15 | [−1, 64, 56, 56] |
| 16 | BatchNorm2d-16 | [−1, 64, 56, 56] |
| 17 | ReLU-17 | [−1, 64, 56, 56] |
| 18 | BasicBlock-18 | [−1, 64, 56, 56] |
| 19 | Conv2d-19 | [−1, 128, 28, 28] |
| 20 | BatchNorm2d-20 | [−1, 128, 28, 28] |
| 21 | ReLU-21 | [−1, 128, 28, 28] |
| 22 | Conv2d-22 | [−1, 128, 28, 28] |
| 23 | BatchNorm2d-23 | [−1, 128, 28, 28] |
| 24 | Conv2d-24 | [−1, 128, 28, 28] |
| 25 | BatchNorm2d-25 | [−1, 128, 28, 28] |
| 26 | ReLU-26 | [−1, 128, 28, 28] |
| 27 | BasicBlock-27 | [−1, 128, 28, 28] |
| 28 | Conv2d-28 | [−1, 128, 28, 28] |
| 29 | BatchNorm2d-29 | [−1, 128, 28, 28] |
| 30 | ReLU-30 | [−1, 128, 28, 28] |
| 31 | Conv2d-31 | [−1, 128, 28, 28] |
| 32 | BatchNorm2d-32 | [−1, 128, 28, 28] |
| 33 | ReLU-33 | [−1, 128, 28, 28] |
| 34 | BasicBlock-34 | [−1, 128, 28, 28] |
| 35 | Conv2d-35 | [−1, 256, 14, 14] |
| 36 | BatchNorm2d-36 | [−1, 256, 14, 14] |
| 37 | ReLU-37 | [−1, 256, 14, 14] |
| 38 | Conv2d-38 | [−1, 256, 14, 14] |
| 39 | BatchNorm2d-39 | [−1, 256, 14, 14] |
| 40 | Conv2d-40 | [−1, 256, 14, 14] |
| 41 | BatchNorm2d-41 | [−1, 256, 14, 14] |
| 42 | ReLU-42 | [−1, 256, 14, 14] |
| 43 | BasicBlock-43 | [−1, 256, 14, 14] |
| 44 | Conv2d-44 | [−1, 256, 14, 14] |
| 45 | BatchNorm2d-45 | [−1, 256, 14, 14] |
| 46 | ReLU-46 | [−1, 256, 14, 14] |
| 47 | Conv2d-47 | [−1, 256, 14, 14] |
| 48 | BatchNorm2d-48 | [−1, 256, 14, 14] |
| 49 | ReLU-49 | [−1, 256, 14, 14] |
| 50 | BasicBlock-50 | [−1, 256, 14, 14] |
| 51 | Conv2d-51 | [−1, 32, 14, 14] |
| 52 | Conv2d-52 | [−1, 32, 14, 14] |
| 53 | Softmax-53 | [−1, 196, 196] |
| 54 | Conv2d-54 | [−1, 256, 14, 14] |
| 55 | Conv2d-55 | [−1, 256, 56, 56] |
| 56 | Upsample-56 | [−1, 256, 14, 14] |
| 57 | ReLU-57 | [−1, 256, 14, 14] |
| 58 | Conv2d-58 | [−1, 256, 28, 28] |
| 59 | Upsample-59 | [−1, 256, 14, 14] |
| 60 | ReLU-60 | [−1, 256, 14, 14] |
| 61 | Conv2d-61 | [−1, 256, 14, 14] |
| 62 | Upsample-62 | [−1, 256, 14, 14] |
| 63 | ReLU-63 | [−1, 256, 14, 14] |
| 64 | Conv2d-64 | [−1, 256, 14, 14] |
| 65 | SelfAttentionWithFeatureFusion-65 | [−1, 256, 14, 14] |
| 66 | Conv2d-66 | [−1, 512, 7, 7] |
| 67 | BatchNorm2d-67 | [−1, 512, 7, 7] |
| 68 | ReLU-68 | [−1, 512, 7, 7] |
| 69 | Conv2d-69 | [−1, 512, 7, 7] |
| 70 | BatchNorm2d-70 | [−1, 512, 7, 7] |
| 71 | Conv2d-71 | [−1, 512, 7, 7] |
| 72 | BatchNorm2d-72 | [−1, 512, 7, 7] |
| 73 | ReLU-73 | [−1, 512, 7, 7] |
| 74 | BasicBlock-74 | [−1, 512, 7, 7] |
| 75 | Conv2d-75 | [−1, 512, 7, 7] |
| 76 | BatchNorm2d-76 | [−1, 512, 7, 7] |
| 77 | ReLU-77 | [−1, 512, 7, 7] |
| 78 | Conv2d-78 | [−1, 512, 7, 7] |
| 79 | BatchNorm2d-79 | [−1, 512, 7, 7] |
| 80 | ReLU-80 | [−1, 512, 7, 7] |
| 81 | BasicBlock-81 | [−1, 512, 7, 7] |
| 82 | AdaptiveAvgPool2d-82 | [−1, 512, 1, 1] |
| 83 | Linear-83 | [−1, 3] |
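The spatial sizes in the layer listing follow the standard output-size formula for convolution and pooling layers, out = ⌊(in + 2p − k) / s⌋ + 1. The sketch below reproduces the 112 → 56 → 28 → 14 → 7 progression, assuming a 224 × 224 input and the standard ResNet18 settings (7 × 7 stride-2 stem convolution, 3 × 3 stride-2 max pooling, stride-2 first convolution in stages 2 to 4). The input size is inferred from the 112 × 112 output of Conv2d-1 and is not stated in this excerpt; `conv_out` is an illustrative helper.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Side length after a conv/pool layer without dilation: floor((in + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


INPUT = 224  # assumed input resolution (standard for ImageNet-pretrained ResNet18)

stem = conv_out(INPUT, kernel=7, stride=2, padding=3)     # Conv2d-1 -> 112
pool = conv_out(stem, kernel=3, stride=2, padding=1)      # MaxPool2d-4 -> 56
layer1 = conv_out(pool, kernel=3, stride=1, padding=1)    # stride-1 blocks keep 56
layer2 = conv_out(layer1, kernel=3, stride=2, padding=1)  # Conv2d-19 -> 28
layer3 = conv_out(layer2, kernel=3, stride=2, padding=1)  # Conv2d-35 -> 14
layer4 = conv_out(layer3, kernel=3, stride=2, padding=1)  # Conv2d-66 -> 7

print([stem, pool, layer1, layer2, layer3, layer4])  # [112, 56, 56, 28, 14, 7]

# Resize factor that brings each stage to the 14 x 14 attention resolution,
# matching the 56 -> 14, 28 -> 14 and 14 -> 14 Upsample rows (Sr No. 56, 59, 62).
factors = {size: size // layer3 for size in (layer1, layer2, layer3)}
print(factors)  # {56: 4, 28: 2, 14: 1}
```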

| Model | Accuracy (%) | Mean Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| EfficientNet_b0 | 97.31 | 95.69 ± 2.52 | 97.98 | 98.21 | 98.08 |
| MobileNet_V2 | 97.02 | 96.32 ± 1.22 | 97.86 | 97.86 | 97.86 |
| ResNet50 | 97.01 | 99.65 ± 1.96 | 97.79 | 97.95 | 97.87 |
| VGG16 | 97.61 | 96.31 ± 2.11 | 98.23 | 98.38 | 98.30 |
| ResNet18 | 97.91 | 96.51 ± 1.84 | 98.40 | 98.63 | 98.51 |
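The result tables report accuracy, precision, recall, and F1-score, but this excerpt does not say how per-class scores are averaged over the three classes. The sketch below assumes macro averaging (the unweighted mean over classes) computed from a confusion matrix; `macro_metrics` and the example matrix are hypothetical. Under macro averaging, F1 is the mean of per-class F1 scores, so it need not equal 2PR/(P + R) computed from the tabulated precision and recall.

```python
from typing import Dict, List


def macro_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall and F1 (as fractions).

    cm[i][j] counts samples whose true class is i and predicted class is j.
    A class with no predicted (or no true) samples contributes 0 to P (or R).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    precisions, recalls, f1s = [], [], []
    for c in range(n):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(n))  # column sum
        actual = sum(cm[c])                          # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,  # mean of per-class F1, not 2PR/(P+R) of the macro P and R
    }


if __name__ == "__main__":
    # Hypothetical confusion matrix (not from the paper);
    # class order: early blight, healthy, late blight.
    cm = [[8, 2, 0],
          [0, 5, 0],
          [1, 0, 9]]
    for name, value in macro_metrics(cm).items():
        print(f"{name}: {100 * value:.2f}%")
```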

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| ResNet18 | 91.80 | 93.26 | 91.88 | 92.45 |
| EfficientNet_b0 | 86.89 | 88.89 | 88.89 | 88.89 |
| MobileNet_V2 | 88.53 | 90.80 | 89.10 | 89.75 |
| ResNet18 + SA | 90.16 | 91.83 | 90.49 | 91.10 |
| ResNet18 + SA + DC | 42.62 | 42.62 | 36.11 | 30.94 |
| ResNet18 + GABM | 95.08 | 96.23 | 95.83 | 95.82 |
| ResNet18 + SAWF | 98.36 | 98.67 | 98.61 | 98.61 |
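The ablation separates self-attention alone (ResNet18 + SA, 90.16%) from self-attention with feature fusion (ResNet18 + SAWF, 98.36%). Reading the layer listing, the fusion branch appears to take features at 56 × 56, 28 × 28, and 14 × 14, project each to 256 channels (Conv2d-55, -58, -61), resize them to 14 × 14 (Upsample-56, -59, -62), apply ReLU, and merge them (Conv2d-64). This excerpt specifies neither the resize mode nor the merge operator, so the sketch below is a hypothetical single-channel illustration: it uses nearest-neighbour resizing (the default mode of PyTorch's `nn.Upsample`) and a per-scale weighted sum; `fuse`, `weights`, and `bias` are illustrative names, not the authors' code.

```python
from typing import List, Sequence

Grid = List[List[float]]  # one channel of a feature map, H x W


def resize_nearest(grid: Grid, out_h: int, out_w: int) -> Grid:
    """Nearest-neighbour resize: output (i, j) copies input (i*H//out_h, j*W//out_w)."""
    in_h, in_w = len(grid), len(grid[0])
    return [[grid[(i * in_h) // out_h][(j * in_w) // out_w] for j in range(out_w)]
            for i in range(out_h)]


def relu(grid: Grid) -> Grid:
    return [[max(0.0, v) for v in row] for row in grid]


def fuse(maps: Sequence[Grid], weights: Sequence[float], bias: float = 0.0, size: int = 14) -> Grid:
    """Resize every scale to size x size, apply ReLU, then merge per pixel.

    With one channel per scale, a 1x1 convolution over the concatenated maps
    reduces to this weighted sum plus a bias (hypothetical merge operator).
    """
    prepared = [relu(resize_nearest(m, size, size)) for m in maps]
    return [[bias + sum(w * p[i][j] for w, p in zip(weights, prepared)) for j in range(size)]
            for i in range(size)]


if __name__ == "__main__":
    s56 = [[float(i + j) for j in range(56)] for i in range(56)]  # stand-in for a layer1 map
    s28 = [[-1.0] * 28 for _ in range(28)]                        # negative, so ReLU zeroes it
    s14 = [[2.0] * 14 for _ in range(14)]                         # stand-in for a layer3 map
    fused = fuse([s56, s28, s14], weights=[0.5, 1.0, 0.25])
    print(len(fused), len(fused[0]), fused[1][2])  # 14 14 6.5
```

A weighted sum keeps the sketch short; a real implementation would operate on multi-channel tensors, where the merge convolution can also mix channels across scales.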

| Sr. No | Reference | Accuracy (%) |
|---|---|---|
| 1 | Barman et al. [36] | 96.98 |
| 2 | Zhang et al. [29] | 97.87 |
| 3 | Nazir et al. [34] | 98.12 |
| 4 | Li et al. [28] | 77.65 |
| 5 | Sinamenye et al. [37] | 85.06 |
| 6 | Chen et al. [38] | 97.73 |
| 7 | Proposed ResNet18-SAWF | 98.36 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xie, K.; Xu, D.; Chang, S. Self-Attention-Enhanced Deep Learning Framework with Multi-Scale Feature Fusion for Potato Disease Detection in Complex Multi-Leaf Field Conditions. Appl. Sci. 2025, 15, 10697. https://doi.org/10.3390/app151910697
