Applied with Caution: Extreme-Scenario Testing Reveals Significant Risks in Using LLMs for Humanities and Social Sciences Paper Evaluation

Abstract

1. Introduction

- We designed a controlled comparative experiment on HSS papers that probes the lower bounds of LLM evaluation through extreme scenarios and works around a core measurement problem: because HSS paper assessment is highly subjective, evaluation competence is otherwise hard to measure.

- The study exposes fundamental limitations in LLMs’ paper evaluation capabilities, particularly in assessing scientific rigor and logical coherence; these limitations are typically masked under naturalistic conditions.

- We provide a novel interpretation of the distinctive mechanisms underlying LLM-based paper evaluation, which differ fundamentally from those of human evaluators and help explain their shortcomings in capturing fact-based logical reasoning and theoretical coherence in HSS writing.

2. Related Work

2.1. Evaluation Capabilities of LLMs in Academic Paper Assessment

2.1.1. Task-Dependent Variability in LLM Evaluation Performance

2.1.2. Methodological Influences on Observed LLM Capabilities

2.2. Factors Influencing LLM Paper Evaluation Capabilities

2.2.1. Inconsistency

2.2.2. Hallucination

2.2.3. Limited Logical Reasoning Capabilities

3. Research Methodology

3.1. Quasi-Experimental Design

3.1.1. Test Data Preparation

3.1.2. Selection of Large Language Models

3.1.3. Testing Protocol

3.2. Statistical Analysis

3.3. Structured Content Analysis

3.4. Case Analysis

4. Findings

4.1. Evaluation Consistency

4.2. Detection and Evaluation of Scientific Flaws

4.2.1. Score Differences Between Versions Under Broad Prompts

4.2.2. Score Differences and Detection Rates: Broad vs. Targeted Prompts

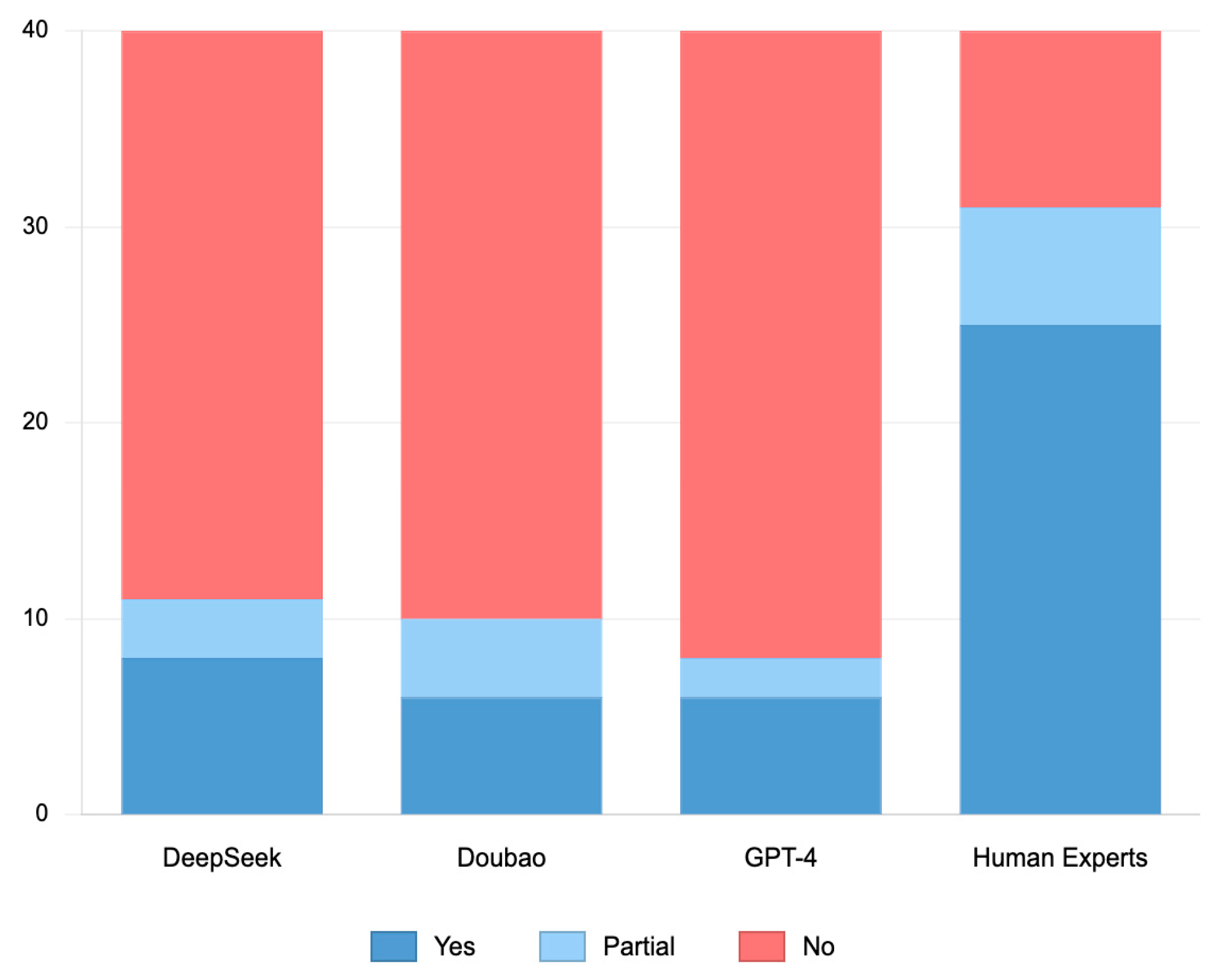

4.2.3. Comparative Analysis of Flaw Detection: LLMs vs. Human Evaluators

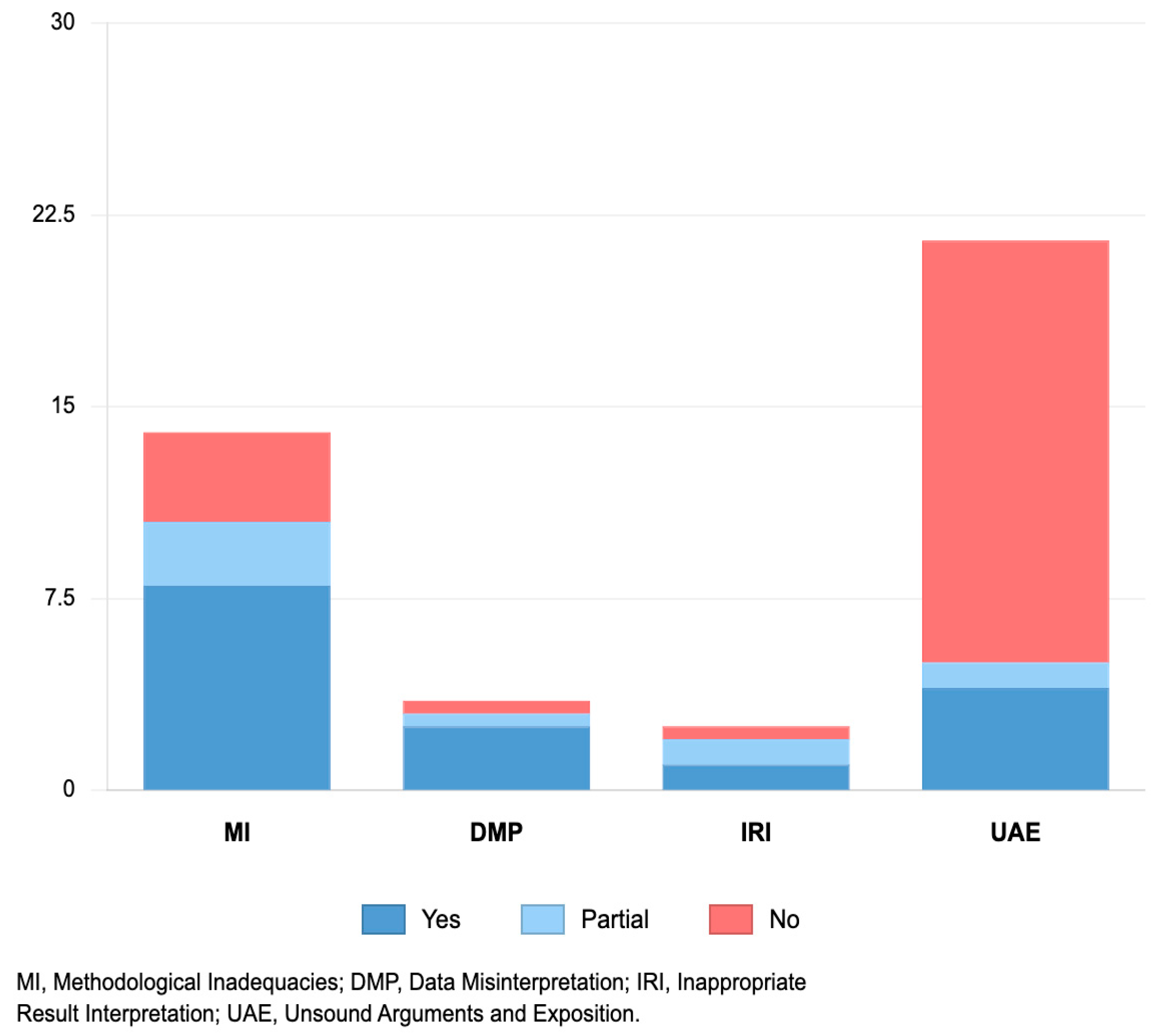

4.2.4. Heterogeneity in LLM Detection of Scientific Flaws

4.3. Detection and Evaluation of Logical Flaws

4.3.1. Score Differences Between Versions Under Broad Prompts

4.3.2. Score Differences and Detection Rates: Broad vs. Targeted Prompts

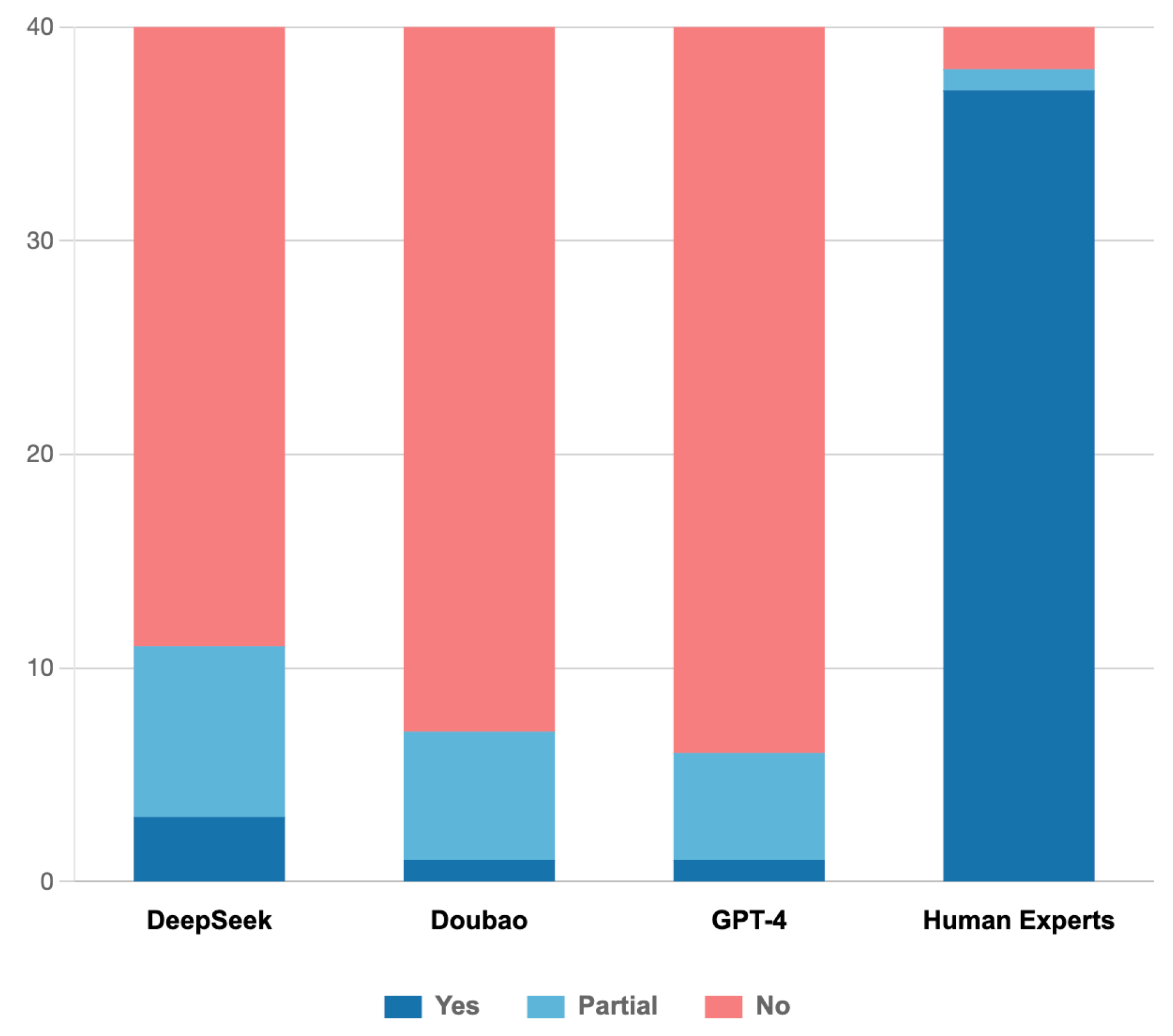

4.3.3. Comparative Analysis of Logical Flaw Detection: LLMs vs. Human Evaluators

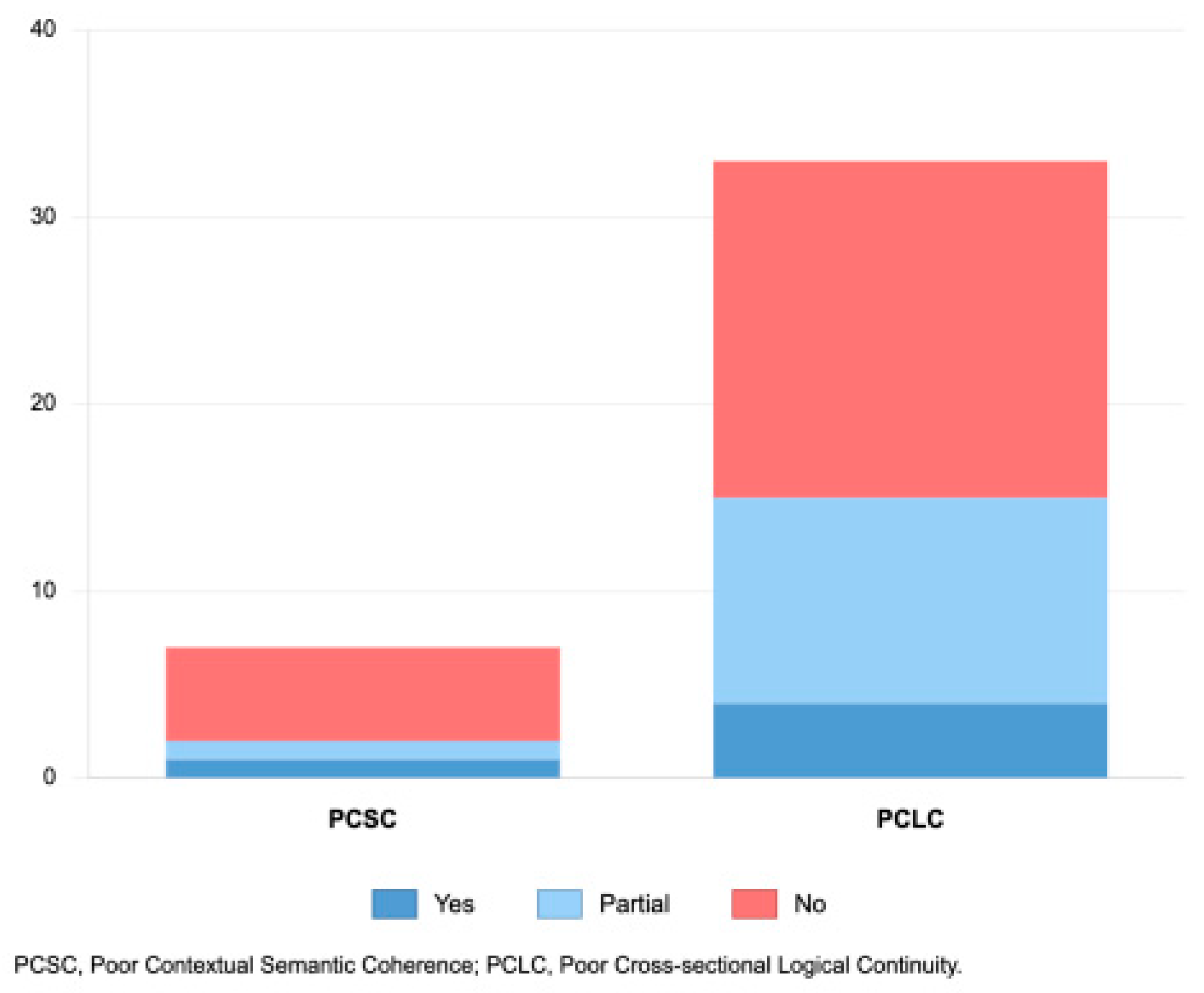

4.3.4. Heterogeneity in LLM Detection of Logical Flaws

5. Discussion

5.1. Reliability of Scoring and Prompt Optimization

5.2. Deficiencies in Evaluating Scientific Rigor and Logical Coherence

5.3. The Distinctive Evaluation Mechanism of LLMs for Scholarly Papers

5.4. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AES | Automated Essay Scoring |
| AI | Artificial Intelligence |
| CSSCI | Chinese Social Sciences Citation Index |
| DID | Difference-in-Differences |
| HSS | Humanities and Social Sciences |
| GPT | Generative Pre-trained Transformer |
| ICC | Intraclass Correlation Coefficient |
| ICLR | International Conference on Learning Representations |
| LLM | Large Language Model |
| NLI | Natural Language Inference |
| PSM-DID | Propensity Score Matching Difference-in-Differences |
| STEM | Science, Technology, Engineering, and Mathematics |

Appendix A

| Version | Flaw Type | Quantity | Example |
|---|---|---|---|
| Scientific Issues Version | Methodological Inadequacies | 14 | Deleted the robustness test using the city-level generalized difference-in-differences (DID) model (from “Effects of the ‘Double Reduction’ Policy on Household Extracurricular Tutoring Participation and Expenditure”). |
| Scientific Issues Version | Data Misinterpretation | 3 | Changed the diminishing marginal effect trend derived from the data to a trend of no diminishing marginal effect (from “The ‘Audience Effect’ in Social Surveys: The Impact of Bystanders on the Response Performance of the Elderly”). |
| Scientific Issues Version | Inappropriate Result Interpretation | 2 | Interpreted the finding in the discussion section—that the eigenvalues for positive emotion words were higher than for negative emotion words—as a positive signal (from “A Psycholinguistic Analysis of Adolescent Suicide Notes: Based on the Interpersonal Theory of Suicide”). |
| Scientific Issues Version | Unsound Arguments and Exposition | 21 | In the second point, “Learning Transformation to Promote Deep Understanding in the Digital Intelligence Era,” reversed the third sub-point to its opposite: “Learning Modes: From Active Knowledge Construction to Passive Comprehension,” and provided corresponding arguments (from “Deep Learning in the Digital Intelligence Era: From Rote Memorization to Deep Understanding”). |
| Logical Issues Version | Poor Contextual Semantic Coherence | 7 | Swapped the case studies for the two sub-arguments in the fourth section (Argument 1: The ability to make sociological abstractions and generalizations from the interviewee’s narrative to form concepts and judgments; Argument 2: The accumulation of common sense and understanding of social customs also helps to quickly grasp the sociological significance of the text) (from “Gains and Losses of Sociological Field Research Characterized by ‘Meaning Inquiry’”). |
| Logical Issues Version | Poor Cross-sectional Logical Continuity | 33 | Replaced the entire recommendations section with “measures to strengthen internet infrastructure,” which was unrelated to the research findings (from “The Well-being Effect and Mechanism of Residents’ Internet Dependency in the Context of the Digital Economy Development”). |

Appendix B

| Flaw Type | Broad Prompt | Targeted Prompt |
|---|---|---|
| Methodological Inadequacies | Please evaluate the following paper from three aspects: scientific content and intellectual depth, formal compliance, and innovation and practicality. Provide a score (out of 100, with 50 for scientific content/intellectual depth, 30 for formal compliance, and 20 for innovation/practicality). | Please re-evaluate, paying special attention to methodological rigor. |
| Data Misinterpretation | (same as above) | Please re-evaluate, paying special attention to the scientific validity of the data interpretation. |
| Inappropriate Result Interpretation | (same as above) | Please re-evaluate, paying special attention to the reasonableness of the interpretation. |
| Unsound Arguments and Exposition | (same as above) | Please re-evaluate, paying special attention to the soundness of the paper’s arguments and their exposition. |
| Poor Contextual Semantic Coherence | (same as above) | Please re-evaluate, paying special attention to the semantic coherence between different parts of the text. |
| Poor Cross-sectional Logical Continuity | (same as above) | Please re-evaluate, paying special attention to the logical continuity between different sections of the paper. |
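The prompt protocol in the table above can be sketched in code. The snippet below is a minimal illustration, not the authors' tooling: it stores the broad prompt and the targeted focus phrases verbatim and assembles them into a message list. The `role`/`content` message format, the function names `targeted_prompt` and `build_messages`, and the assumption that the targeted prompt is sent as a follow-up turn after the broad evaluation are all hypothetical.

```python
# Rubric weights stated in the broad prompt (points out of 100).
RUBRIC = {
    "scientific content and intellectual depth": 50,
    "formal compliance": 30,
    "innovation and practicality": 20,
}

BROAD_PROMPT = (
    "Please evaluate the following paper from three aspects: scientific content "
    "and intellectual depth, formal compliance, and innovation and practicality. "
    "Provide a score (out of 100, with 50 for scientific content/intellectual "
    "depth, 30 for formal compliance, and 20 for innovation/practicality)."
)

# Focus phrases copied from the targeted prompts, keyed by flaw type.
TARGETED_FOCUS = {
    "Methodological Inadequacies": "methodological rigor",
    "Data Misinterpretation": "the scientific validity of the data interpretation",
    "Inappropriate Result Interpretation": "the reasonableness of the interpretation",
    "Unsound Arguments and Exposition":
        "the soundness of the paper’s arguments and their exposition",
    "Poor Contextual Semantic Coherence":
        "the semantic coherence between different parts of the text",
    "Poor Cross-sectional Logical Continuity":
        "the logical continuity between different sections of the paper",
}


def targeted_prompt(flaw_type):
    """Return the targeted follow-up prompt for one flaw type."""
    return f"Please re-evaluate, paying special attention to {TARGETED_FOCUS[flaw_type]}."


def build_messages(paper_text, flaw_type=None, first_reply=None):
    """Assemble a broad-prompt turn and, optionally, a targeted follow-up turn.

    Hypothetical helper: the two-turn layout is an assumption for illustration.
    """
    messages = [{"role": "user", "content": f"{BROAD_PROMPT}\n\n{paper_text}"}]
    if flaw_type is not None:
        # The model's first evaluation is replayed before the follow-up request.
        messages.append({"role": "assistant", "content": first_reply or ""})
        messages.append({"role": "user", "content": targeted_prompt(flaw_type)})
    return messages
```

Keeping the focus phrases in one dictionary makes it easy to check that every flaw type has exactly one targeted prompt and that the rubric weights still sum to 100.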

Appendix C

| Flaw Type | Yes (Accurately identifies and analyzes the issue, or provides targeted suggestions for improvement) | Partial (Partially addresses the issue, or identifies the location of the issue but with imprecise analysis) | No (Fails to identify the issue, or even provides a positive evaluation) |
|---|---|---|---|
| Methodological Inadequacies | “…supplementing with quantile combination and clustering combination methods for robustness is good, but the comparative discussion of the results is slightly brief. It could be further detailed to explain the robustness and differences of the conclusions under each method.” —GPT-4, Targeted Prompt (Flaw source: Three robustness tests were deleted.—From “What is the Impact of Effort on Occupational Status Attainment?…”) | “Control variables: including age, gender, marriage, health, education, etc., basically cover the main influencing factors, but potential variables such as family income and occupation type were not considered. The variable selection is reasonable, but the explanatory power and robustness of some indicators could be further optimized.” —DeepSeek, Targeted Prompt (Flaw source: The ‘family economic status’ control variable was deleted.—From “The Well-being Effect and Mechanism of Residents’ Internet Dependency…”) | “The research design is rigorous: Based on data from the 2018 and 2020 China Family Panel Studies (CFPS), … it effectively controls for endogeneity issues… and verifies the reliability of the conclusions through robustness tests.” —Doubao, Broad Prompt (Flaw source: The ‘family economic status’ control variable was deleted.—From “The Well-being Effect and Mechanism of Residents’ Internet Dependency…”) |
| Data Misinterpretation | “…but the significance boundary of some data is slightly ambiguous (e.g., p = 0.060 is marginal), and the critical analysis of ChatGPT’s limitations could be deeper.” —GPT-4, Broad Prompt (Flaw source: The p-value was changed to 0.060, making the result non-significant.—From “Empowering Undergraduate Research with Generative AI…”) | (No corresponding case) | “The research design is reasonable, the data source is reliable, the sample size is sufficient (7139 people), and robustness and heterogeneity analyses were conducted, enhancing the credibility of the conclusions.” —DeepSeek, Broad Prompt (Flaw source: The diminishing marginal effect trend derived from the data was entirely changed to a trend of no diminishing marginal effect.—From “The ‘Audience Effect’ in Social Surveys…”) |
| Inappropriate Result Interpretation | (No corresponding case) | “Some suggestions, such as expanding the scope of technology application, lack specific implementation paths, and their practicality needs further improvement.” —Doubao, Broad Prompt (Flaw source: The first point in the research outlook was changed from “focus on experimental design, validity verification…” to “focus on experimental promotion, rather than experimental design…”.—From “Technology for Good: Cultivating Adolescent Psychological Resilience…”) | (Fails to identify the issue, omitted) |
| Unsound Arguments and Exposition | “Classifying the ‘transition from lifestyle consumption to ritualistic consumption’ in ‘weak refinement’ as a manifestation of ‘consumerism fatigue’ seems slightly contradictory to the logic of ‘escaping from meaninglessness’ in the core concept.” —Doubao, Targeted Prompt (Flaw source: The second sub-point… was changed to the opposite view…—From “Escaping ‘Meaninglessness’: … ‘Consumerism Fatigue’…”) | “The argument for the ‘male-dominated’ characteristic is relatively brief and lacks comparative data with female emotional practices, which weakens the universality of some conclusions.” —Doubao, Targeted Prompt (Flaw source: The subheading ‘female-dominated’ and its discussion were changed to ‘male-dominated’…—From “Parent–Child Integration…”) | (Fails to identify the issue, omitted) |
| Poor Contextual Semantic Coherence | “When discussing the ‘associative thinking and literary theory’ in the third part, the discussion of the ideographic nature of Chinese characters is abruptly inserted…” —DeepSeek, Targeted Prompt (Flaw source: The epilogue was completely changed to a discussion on the ideographic nature of Chinese characters, departing from the theme.—From “On the Associative Thinking in Chinese Aesthetics”) | “For example, regarding the progression of ‘benevolence → righteousness → propriety,’ although it is presented in the text, its theoretical development and its connection to resolving the aforementioned conflict between hierarchy and equality need to be further supplemented.” —GPT-4, Targeted Prompt (Flaw source: The second of the two basic justice principles…—the principle of appropriateness—was deleted…—From “The Tension Between Confucius’s Concepts of Hierarchy and Equality…”) | “The paper’s structure is rigorous, forming a tightly linked logical loop from… ‘Epilogue’… For instance, the ‘shaping forms according to categories’ method of character creation in the ‘Epilogue’ echoes the ‘observing things…’ method from the I Ching mentioned earlier…” —Doubao, Targeted Prompt (Flaw source: The epilogue was completely changed to a discussion on the ideographic nature of Chinese characters…—From “On the Associative Thinking in Chinese Aesthetics”) |
| Poor Cross-sectional Logical Continuity | “Although the conclusion and policy recommendations propose a holistic governance approach, the specific implementation paths are not closely integrated with the data analysis results from the preceding sections, lacking a continuous causal chain demonstration.” —GPT-4, Targeted Prompt (Flaw source: The specific content of the policy recommendations has no intrinsic logical connection to the preceding research conclusions.—From “Effects of the ‘Double Reduction’ Policy…”) | “The recommendation section could be more tightly integrated with the heterogeneity findings, such as enhanced regulatory measures for high-income households.” —DeepSeek, Targeted Prompt (Flaw source: The specific content of the policy recommendations has no intrinsic logical connection…—From “Effects of the ‘Double Reduction’ Policy…”) | “The research conclusions provide a direct basis for policy optimization… However, the recommendation section could be more specific, for example, by proposing quantitative indicators…” —DeepSeek, Broad Prompt (Flaw source: The specific content of the policy recommendations has no intrinsic logical connection…—From “Effects of the ‘Double Reduction’ Policy…”) |


| Reliability Type | Evaluators | Metric | ICC | 95% CI | p-Value |
|---|---|---|---|---|---|
| Intra-rater Reliability | DeepSeek | ICC(3, 1) | 0.219 | [0.042, 0.280] | 0.008 |
| Intra-rater Reliability | Doubao | ICC(3, 1) | 0.461 | [0.309, 0.591] | 0.000 |
| Intra-rater Reliability | GPT-4 | ICC(3, 1) | 0.192 | [0.013, 0.359] | 0.018 |
| Intra-rater Reliability | Human Experts | ICC(2, k) | 0.785 | [0.651, 0.852] | 0.000 |
| Inter-rater Reliability | DeepSeek; Doubao; GPT-4 | ICC(3, k) | 0.394 | [0.188, 0.556] | 0.000 |
| Inter-rater Reliability | DeepSeek; Human Experts | ICC(2, k) | 0.525 | [0.262, 0.687] | 0.000 |
| Inter-rater Reliability | Doubao; Human Experts | ICC(2, k) | 0.100 | [−0.145, 0.311] | 0.187 |
| Inter-rater Reliability | GPT-4; Human Experts | ICC(2, k) | 0.100 | [−0.215, 0.343] | 0.250 |
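For readers unfamiliar with the metric, the following is a minimal sketch of how an ICC(3, 1) value of the kind reported above can be computed from a papers-by-runs score matrix. It uses the standard two-way, consistency, single-measurement form, (MS_rows − MS_error) / (MS_rows + (k − 1) · MS_error). The function name `icc_3_1` and the toy score matrix are illustrative assumptions; the matrix is not data from the study, and the authors' actual software is not stated here.

```python
def icc_3_1(scores):
    """ICC(3,1): two-way mixed effects, consistency, single measurement.

    scores: list of rows, one row per rated item (paper),
            one column per rating occasion (run or rater).
    """
    n = len(scores)      # number of rated items
    k = len(scores[0])   # number of ratings per item
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Two-way ANOVA sums of squares without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)


# Toy ratings (6 items x 4 rating occasions); NOT data from the study.
toy_scores = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(round(icc_3_1(toy_scores), 3))
```

Because this form measures consistency rather than absolute agreement, a constant offset between runs does not lower the coefficient; only disagreement in how the papers are ranked and spaced does.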

| Pair | Version | Mean | N | SD | t | p | r | Cohen’s d |
|---|---|---|---|---|---|---|---|---|
| Pair 1 (DeepSeek) | Original | 88.85 | 40 | 2.551 | 0.723 | 0.474 | 0.193 | 0.114 |
| Pair 1 (DeepSeek) | Scientific flaws | 88.48 | 40 | 2.526 | | | | |
| Pair 2 (Doubao) | Original | 88.89 | 40 | 1.866 | −0.270 | 0.789 | 0.695 | 0.043 |
| Pair 2 (Doubao) | Scientific flaws | 89.95 | 40 | 1.884 | | | | |
| Pair 3 (GPT-4) | Original | 88.36 | 40 | 2.279 | −1.285 | 0.206 | 0.100 | 0.203 |
| Pair 3 (GPT-4) | Scientific flaws | 89.93 | 40 | 1.922 | | | | |
| Pair 4 (Human Experts) | Original | 89.83 | 40 | 2.427 | 7.343 | 0.000 | 0.541 | 1.161 |
| Pair 4 (Human Experts) | Scientific flaws | 85.89 | 40 | 4.023 | | | | |
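The relationship between the columns of the paired-samples table above can be illustrated with a short calculation. The sketch below rebuilds the t statistic and a paired effect size from the reported means, standard deviations, correlation r, and N, using the Human Experts row as input. It assumes that the reported Cohen’s d is the paired-differences variant (mean difference divided by the SD of the differences, equivalently |t| / √N); the table's values appear consistent with that reading, but the source does not state the formula. The function name `paired_stats` is hypothetical.

```python
import math


def paired_stats(mean_a, sd_a, mean_b, sd_b, r, n):
    """Return (t, d_z) for a paired comparison from summary statistics."""
    mean_diff = mean_a - mean_b
    # SD of the paired differences, using the correlation r between conditions.
    sd_diff = math.sqrt(sd_a ** 2 + sd_b ** 2 - 2 * r * sd_a * sd_b)
    t = mean_diff / (sd_diff / math.sqrt(n))
    d_z = mean_diff / sd_diff  # equivalently t / sqrt(n)
    return t, d_z


# Pair 4 (Human Experts): original vs. scientific-flaw version, values from the table.
t, d = paired_stats(89.83, 2.427, 85.89, 4.023, r=0.541, n=40)
print(f"t = {t:.2f}, d = {d:.2f}")
```

Under these assumptions the result agrees with the reported t = 7.343 and d = 1.161 to within rounding of the inputs.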

| Pair | Prompt Type | Mean | N | SD | t | p | r | Cohen’s d |
|---|---|---|---|---|---|---|---|---|
| Pair 1 (DeepSeek) | Broad | 88.48 | 40 | 2.526 | 10.243 | 0.000 | 0.520 | 1.620 |
| Pair 1 (DeepSeek) | Targeted | 82.78 | 40 | 4.098 | | | | |
| Pair 2 (Doubao) | Broad | 89.95 | 40 | 1.884 | 4.083 | 0.000 | 0.453 | 0.646 |
| Pair 2 (Doubao) | Targeted | 88.23 | 40 | 2.931 | | | | |
| Pair 3 (GPT-4) | Broad | 88.94 | 40 | 1.922 | 1.401 | 0.169 | 0.479 | 0.221 |
| Pair 3 (GPT-4) | Targeted | 88.20 | 40 | 3.791 | | | | |

| Pair | Version | Mean | N | SD | t | p | r | Cohen’s d |
|---|---|---|---|---|---|---|---|---|
| Pair 1 (DeepSeek) | Original | 88.85 | 40 | 2.551 | 1.491 | 0.144 | 0.433 | 0.236 |
| Pair 1 (DeepSeek) | Logical flaws | 88.14 | 40 | 3.066 | | | | |
| Pair 2 (Doubao) | Original | 88.89 | 40 | 1.866 | −1.032 | 0.309 | 0.563 | 0.163 |
| Pair 2 (Doubao) | Logical flaws | 90.15 | 40 | 1.511 | | | | |
| Pair 3 (GPT-4) | Original | 88.36 | 40 | 2.279 | −0.373 | 0.712 | 0.113 | 0.059 |
| Pair 3 (GPT-4) | Logical flaws | 88.54 | 40 | 2.182 | | | | |
| Pair 4 (Human Experts) | Original | 89.83 | 40 | 2.427 | 9.896 | 0.000 | 0.345 | 1.565 |
| Pair 4 (Human Experts) | Logical flaws | 83.83 | 40 | 3.922 | | | | |

| Pair | Prompt Type | Mean | N | SD | t | p | r | Cohen’s d |
|---|---|---|---|---|---|---|---|---|
| Pair 1 (DeepSeek) | Broad | 88.14 | 40 | 3.066 | 1.136 | 0.263 | 0.601 | 0.180 |
| Pair 1 (DeepSeek) | Targeted | 87.45 | 40 | 4.782 | | | | |
| Pair 2 (Doubao) | Broad | 90.15 | 40 | 1.511 | −6.042 | 0.000 | 0.594 | 0.955 |
| Pair 2 (Doubao) | Targeted | 91.63 | 40 | 1.849 | | | | |
| Pair 3 (GPT-4) | Broad | 88.54 | 40 | 2.182 | 0.856 | 0.397 | 0.296 | 0.135 |
| Pair 3 (GPT-4) | Targeted | 88.00 | 40 | 4.026 | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, H.; Dai, L.; Jiang, H. Applied with Caution: Extreme-Scenario Testing Reveals Significant Risks in Using LLMs for Humanities and Social Sciences Paper Evaluation. Appl. Sci. 2025, 15, 10696. https://doi.org/10.3390/app151910696
