Evaluation and Selection of Hardware and AI Models for Edge Applications: A Method and A Case Study on UAVs

Abstract

1. Introduction

2. Background

2.1. Edge AI Paradigm

2.2. Edge AI Hardware

2.3. Edge AI Software

2.4. Edge AI Model Architectures

2.5. ISO/IEC 25010:2011 Standard

- Functional Suitability: The standard defines it as the “degree to which a product or system provides functions that meet stated and implied needs when used under specified conditions”. This characteristic ensures that edge applications perform their intended functions despite the constraints of edge computing environments, such as limited processing power, memory, and energy resources.

- Reliability: The standard defines it as the “degree to which a system, product or component performs specified functions under specified conditions for a specified period of time”. It is a critical factor for edge systems, as they often operate in real time and in dynamic environments, such as vehicles, drones, or remote monitoring systems. These systems are expected to function consistently under specified conditions over an extended period without failure.

- Usability: The standard defines it as the “degree to which a product or system can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use”. The usability characteristic ensures that the system can be effectively and efficiently used by the intended stakeholders. For example, for end-users, systems like smart home devices should be intuitive, responsive, and easy to interact with.

- Performance Efficiency: The standard defines it as the “performance relative to the amount of resources used under stated conditions”. Performance efficiency is one of the most critical characteristics for Edge AI applications, because edge devices often have limited processing power, memory, and battery life. Ensuring that the system delivers the expected performance under these limited resources is essential for effective deployment in edge environments.

- Portability: The standard defines it as the “degree of effectiveness and efficiency with which a system, product or component can be transferred from one hardware, software or other operational or usage environment to another”. This characteristic ensures that AI models and hardware can be easily deployed across various devices, allowing for flexible and scalable Edge AI solutions.

- Maintainability: The standard defines it as the “degree of effectiveness and efficiency with which a product or system can be modified to improve it, correct it, or adapt it to changes in the environment, and in requirements”. This characteristic ensures that edge hardware and AI models can be easily updated or optimized, which is essential for adapting to evolving requirements and ensuring long-term system viability.

- Compatibility: The standard defines it as the “degree to which a product, system or component can exchange information with other products, systems or components, and/or perform its required functions while sharing the same hardware or software environment”. Compatibility ensures that edge devices and AI models can work cohesively within a larger ecosystem, enabling seamless data exchange, integration with other services, and interoperability across different technology stacks.

- Security: The standard defines it as the “degree to which a product or system protects information and data so that persons or other products or systems have the degree of data access appropriate to their types and levels of authorization”. Edge devices and AI models often process personal, medical, financial, or other confidential data, which makes robust security mechanisms essential. The security characteristic ensures that the system preserves the confidentiality and integrity of data by preventing unauthorized access or tampering.

2.6. Multi-Criteria Decision Analysis (MCDA) Methods

- Handling the complexity and uncertainty in decision parameters: Edge hardware selection involves assessing various criteria, many of which are subjective, uncertain, or difficult to quantify accurately. For example, while supported AI frameworks or cost might have clear numerical values, criteria like processing efficiency or power consumption may involve ranges of values. In this regard, fuzzy MCDA techniques allow decision-makers to handle these uncertainties by using fuzzy sets, such as Triangular Fuzzy Numbers (TFNs), which represent a value by a lower bound, a most likely value, and an upper bound instead of a single precise number [38].

- Weighting and Prioritizing Criteria: Different applications may prioritize certain criteria over others. For example, in battery-powered micro aerial vehicles (MAVs), power consumption may be weighted higher than processing power, whereas in an industrial setting, reliability and processing power may take precedence over cost. Fuzzy MCDA techniques enable decision-makers to assign a weight to each criterion and ensure that the chosen option aligns with the specific goals and requirements of the application.

- Multi-dimensional trade-offs: In selecting edge hardware, trade-offs often arise due to conflicting objectives (e.g., maximizing performance while minimizing power consumption and cost). These trade-offs can be difficult to handle with traditional methods since they require balancing multiple dimensions simultaneously. Fuzzy MCDA techniques are well suited for handling such multi-dimensional trade-offs. They provide a structured way to evaluate different alternatives, considering both profit criteria (those that should be maximized, like performance) and cost criteria (those that should be minimized, like power consumption or cost). In this way, decision-makers can ensure that the trade-offs are balanced appropriately based on the context.

- TOPSIS (Technique for Order of Preference by Similarity to Ideal Solution) [16]: This method ranks alternatives by how close they are to the ideal solution (the best possible outcome) and how far they are from the negative-ideal solution (the worst possible outcome). It is useful when many conflicting criteria must be optimized simultaneously, such as processing power vs. power consumption.

- MABAC (Multi-Attributive Border Approximation Area Comparison) [46]: This method ranks alternatives by their distance from the border approximation area, a reference boundary computed from all alternatives; alternatives lying above this border are preferred. It is suited to cases where the decision involves defining thresholds or boundaries for acceptable hardware performance under fuzzy conditions.

- COMET (Characteristic Objects Method) [47]: This method builds a set of characteristic objects from characteristic values chosen for each criterion, has the expert compare these objects pairwise to form a fuzzy rule base, and evaluates the alternatives by inference over that rule base. The fuzzy COMET method is well suited for representing subjective preferences and uncertainties in criteria such as performance stability or long-term reliability.

- SPOTIS (Stable Preference Ordering Towards Ideal Solution) [48]: This method compares alternatives with an ideal solution point defined within minimum and maximum bounds that are fixed in advance for each criterion, which makes the ranking free of rank reversal. It is advantageous when evaluating hardware that must satisfy certain threshold criteria, such as a maximum acceptable power consumption or a minimum processing speed, under uncertain conditions.

- COCOSO (Combined Compromise Solution) [49]: This method combines different compromise solutions to provide a more robust ranking of alternatives, which is beneficial in multi-criteria hardware selection problems where performance and cost must be balanced. Fuzzy COCOSO helps handle the uncertainty in performance and energy efficiency.

- VIKOR [17]: This method focuses on finding a compromise solution, which is particularly relevant when there are multiple conflicting criteria with trade-offs. It measures each alternative against the best and worst values of every criterion and selects a compromise that balances maximum group utility with minimum individual regret. Fuzzy VIKOR is useful in edge hardware selection because it addresses trade-offs between attributes like cost, power efficiency, and processing power under fuzzy conditions.
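To make the fuzzy MCDA ideas above concrete, the following is a minimal fuzzy TOPSIS sketch in Python. Criterion values are triangular fuzzy numbers (lower, most likely, upper); profit and cost criteria are normalized differently, following the linear scheme of Chen [16]; and alternatives are ranked by their closeness coefficient. It assumes crisp weights that sum to 1 and uses w_j·(1, 1, 1) and (0, 0, 0) as the positive- and negative-ideal points of each weighted criterion, a simple adaptation of Chen's (1, 1, 1) and (0, 0, 0) reference points. The function names and the board ratings are hypothetical, and this is not the implementation used in this study.

```python
import math

# A triangular fuzzy number (TFN): (lower, most likely, upper), lower <= ml <= upper.
TFN = tuple[float, float, float]


def normalize_column(column: list[TFN], profit: bool) -> list[TFN]:
    """Linear TFN normalization in the style of Chen's fuzzy TOPSIS."""
    if profit:
        # Profit criterion: divide by the largest upper bound in the column.
        u_max = max(u for _, _, u in column)
        return [(l / u_max, m / u_max, u / u_max) for l, m, u in column]
    # Cost criterion: divide the smallest lower bound by each value, which
    # reverses (l, m, u) so that smaller raw values map closer to 1.
    l_min = min(l for l, _, _ in column)
    return [(l_min / u, l_min / m, l_min / l) for l, m, u in column]


def vertex_distance(a: TFN, b: TFN) -> float:
    """Vertex-method distance between two TFNs."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / 3.0)


def fuzzy_topsis(matrix: list[list[TFN]], weights: list[float],
                 profit: list[bool]) -> list[float]:
    """Closeness coefficient per alternative (higher means better)."""
    n_alt, n_crit = len(matrix), len(weights)
    columns = [normalize_column([row[j] for row in matrix], profit[j])
               for j in range(n_crit)]
    scores = []
    for i in range(n_alt):
        d_pos = d_neg = 0.0
        for j in range(n_crit):
            v = tuple(weights[j] * x for x in columns[j][i])  # weighted TFN
            d_pos += vertex_distance(v, (weights[j],) * 3)   # ideal: w_j * (1, 1, 1)
            d_neg += vertex_distance(v, (0.0, 0.0, 0.0))     # negative ideal
        scores.append(d_neg / (d_pos + d_neg))
    return scores


# Hypothetical boards rated on processing power (TOPS, profit),
# power consumption (W, cost), and price (USD, cost).
boards = {
    "board_A": [(0.4, 0.5, 0.6), (1.0, 1.5, 2.0), (20.0, 25.0, 30.0)],
    "board_B": [(18.0, 21.0, 24.0), (7.0, 10.0, 15.0), (150.0, 200.0, 250.0)],
    "board_C": [(3.0, 4.0, 5.0), (4.0, 5.0, 6.0), (60.0, 70.0, 80.0)],
}
scores = fuzzy_topsis(list(boards.values()), [0.5, 0.25, 0.25], [True, False, False])
for name, cc in sorted(zip(boards, scores), key=lambda p: -p[1]):
    print(f"{name}: {cc:.3f}")
```

The cost-criterion normalization is what encodes the profit/cost distinction: a board with a lower power range receives a normalized TFN closer to (1, 1, 1), so it moves toward the ideal point.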

3. Related Work

- Only a limited number of studies rely on standards such as ISO 25010 for the evaluation and selection of edge hardware and AI models. Most studies have instead benchmarked specific hardware on selected performance metrics (e.g., inference time, power consumption) [53,54,55].

- A limited number of studies have utilized MCDA techniques for the selection of edge hardware or AI models. Moreover, these studies have considered a limited number of assessment criteria [9].

- We have not encountered studies that employ fuzzy MCDA techniques for the evaluation and selection of edge hardware and AI models, even though several of the assessment criteria involved may require handling uncertainty.

4. Method

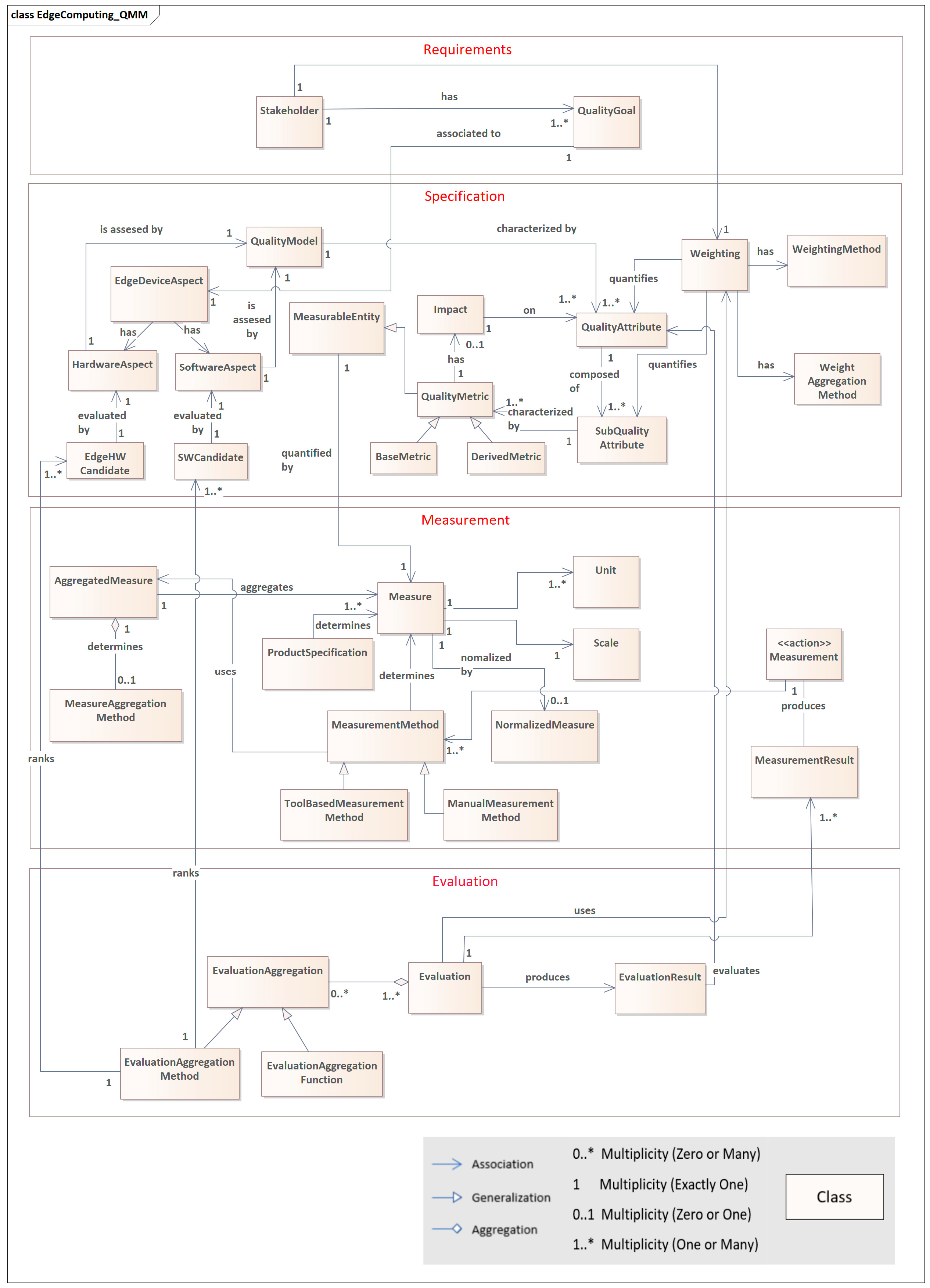

4.1. Quality Assessment Framework for Edge AI Applications

4.1.1. Requirements Layer (Stakeholder Goal Identification)

4.1.2. Specification Layer (Quality Attributes, Sub-Quality Attributes, and Metrics Definition)

4.1.3. Measurement Layer (Data Collection and Normalization)

4.1.4. Evaluation Layer (Evaluation and Ranking of Hardware and AI Model Candidates)

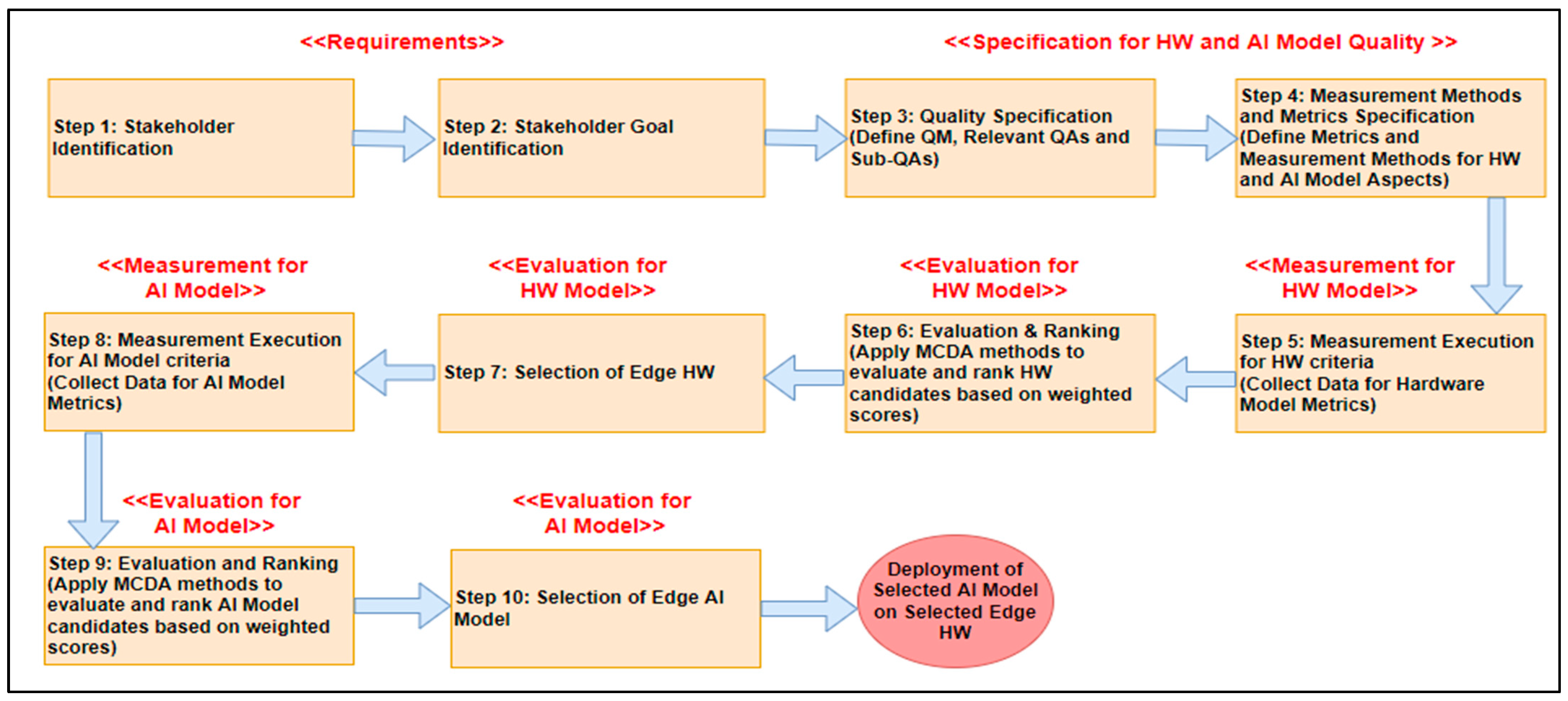

4.2. Selection of Suitable Edge Hardware and AI Models

4.2.1. Selection of Edge Hardware (Phase I)

4.2.2. Selection of AI Model (Phase II)

4.3. Process Flow to Adopt the Method

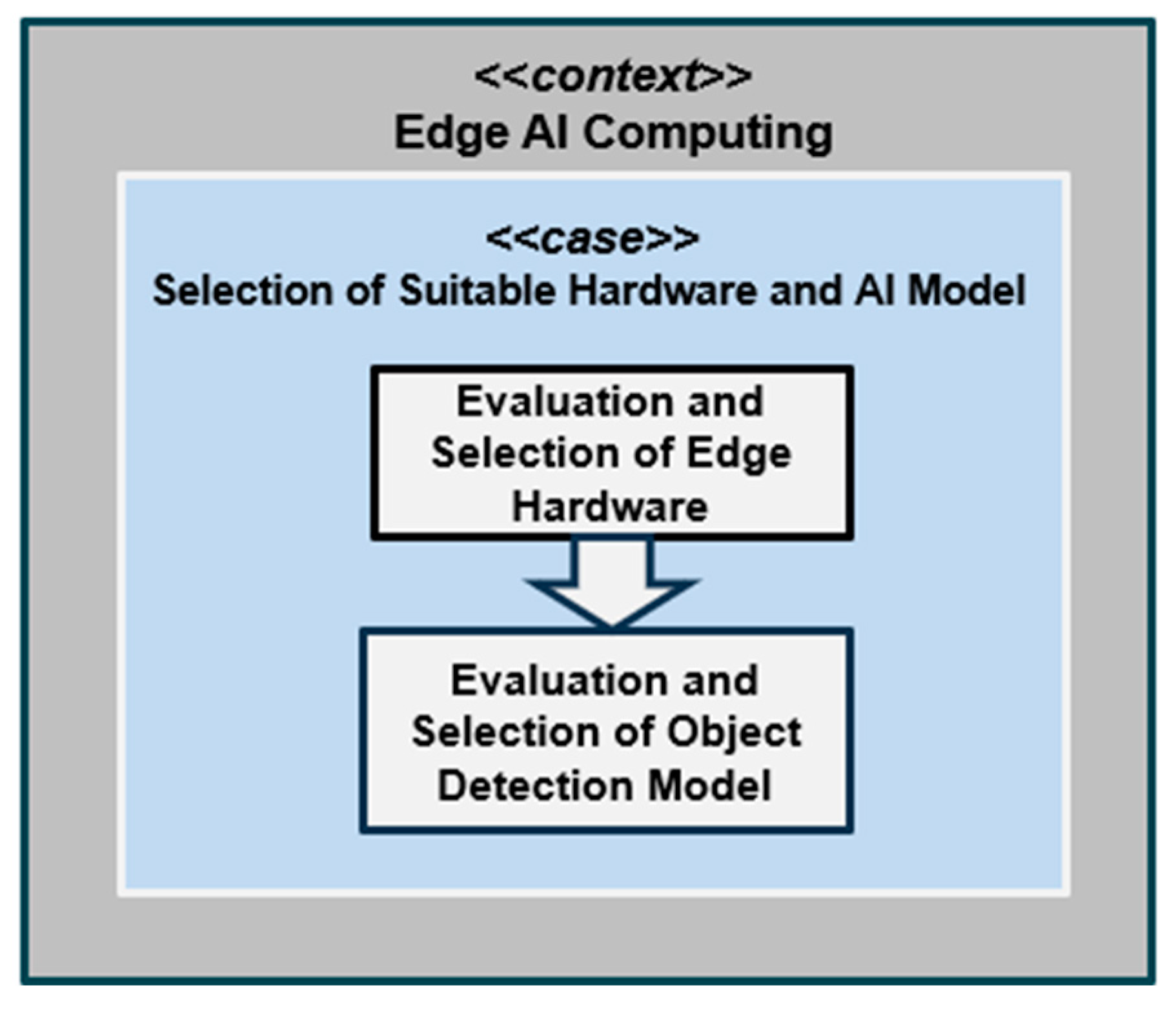

5. Experiments

5.1. Test of the Proposed Method

5.1.1. Overview of the Scenarios

Scenario I: Low-Cost, Low-Power, Lightweight MAV for Basic Object Detection Tasks

Scenario II: Mid-Level UAV for AI-Based Obstacle Detection

Scenario III: General-Purpose UAV for Outdoor Object Detection

Scenario IV: High-Performance UAV for Real-Time Advanced Object Detection and Tracking

5.1.2. Application of the Method to the Scenarios

Requirements Layer/Stakeholder Goal Identification

- Process Flow—Step 1, Step 2: Stakeholder and Stakeholder Goal Identification

Specification Layer/Hardware and AI Model Quality Specification

- Process Flow—Step 3: Quality Specification (Define QM, Relevant QAs, and Sub-QAs)

- Process Flow—Step 4: Measurement Metrics and Measurement Methods Specification (Define Metrics and Measurement Methods for HW and AI Model Aspects)

Measurement Layer/HW Model Metrics Measurement

- Process Flow—Step 5: Measurement Execution for HW Criteria (Collect Data for Hardware Model Criteria/Metrics)

Evaluation Layer/Evaluation and Ranking of Hardware Options

- Process Flow—Step 6: Evaluation & Ranking (Apply MCDA methods to evaluate and rank HW candidates based on weighted scores)

- Process Flow—Step 7: Selection of Edge HW

- Focus Session Setup: A focus session was conducted with six expert practitioners working for a civil aviation company. The experts were first given a detailed overview of the goals of each scenario described in Section 5.1.1, such as low-cost or high-performance objectives, and then discussed the relative importance of each criterion in achieving these goals.

- Expert Scoring: Following the focus session, each expert was asked to assign scores to each criterion, typically on a scale of 1 to 10, where 1 represents the least importance and 10 represents the highest importance. Experts provided their individual opinions on how critical each criterion is for the success of the UAV design in the specific scenario.

- Aggregation of Expert Scores: After the individual expert scores were collected, they were aggregated to obtain a consensus weight for each criterion. The aggregation involved calculating the mean and standard deviation (SD) of the expert scores for each criterion.

- Statistical Analysis: The mean score of each criterion was calculated across the experts. To gauge the level of agreement among the experts, the SD was calculated to measure how the scores varied around the mean: a low SD (<1) indicated strong agreement, while a high SD (>1) indicated variability in expert opinions. The population standard deviation was used, $\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}(x_i - \mu)^2}$, where $N$ is the total number of experts, $x_i$ is the score of the $i$-th expert, and $\mu$ is the mean of all the scores.

- Final Weight Assignment: After the statistical analysis, the final weights were assigned on the basis of consensus. Because criteria with low SD reflected strong consensus, their weights were based directly on the mean. In contrast, criteria with high SD (>1) required further discussion and adjustment of the scores so that the final weight reflected a more balanced perspective. The weight assignment for edge hardware was finalized after two rounds of discussion.

- Weight Derivation: Finally, the weights were derived by normalization: the mean scores were divided by the maximum score, and the resulting values were rescaled so that they sum to 1 while remaining proportional to the mean scores. The final weights of the HW criteria for each scenario are given in Appendix D, Table A6.
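The aggregation and normalization steps above can be sketched in a few lines of Python. The sketch computes each criterion's mean and population SD, flags criteria whose SD is 1 or more for another discussion round, and derives weights by dividing the means by the maximum score and rescaling them to sum to 1. Because the same maximum score divides every mean, this gives the same result as dividing each mean by the sum of the means. The function names and the expert scores are hypothetical illustrations, not the study's data.

```python
import statistics


def aggregate_scores(scores: dict[str, list[float]],
                     sd_threshold: float = 1.0) -> dict[str, tuple[float, float, bool]]:
    """Per criterion: (mean, population SD, consensus flag)."""
    summary = {}
    for criterion, values in scores.items():
        mu = statistics.fmean(values)
        sigma = statistics.pstdev(values)  # population SD: divides by N
        summary[criterion] = (mu, sigma, sigma < sd_threshold)
    return summary


def derive_weights(means: dict[str, float], max_score: float = 10.0) -> dict[str, float]:
    """Divide means by the maximum score, then rescale so the weights sum to 1."""
    scaled = {c: m / max_score for c, m in means.items()}
    total = sum(scaled.values())
    return {c: s / total for c, s in scaled.items()}


# Hypothetical scores from six experts on a 1-10 scale (not the study's data).
scores = {
    "Cost": [9, 8, 9, 10, 9, 9],
    "Power Consumption": [8, 8, 7, 8, 9, 8],
    "Processing Power": [4, 7, 5, 8, 6, 3],
}
summary = aggregate_scores(scores)
for criterion, (mu, sigma, agreed) in summary.items():
    status = "consensus" if agreed else "needs another discussion round"
    print(f"{criterion}: mean={mu:.2f}, SD={sigma:.2f} -> {status}")

# In the study, high-SD criteria were re-discussed before the final weights
# were fixed; here the first-round means are used directly for illustration.
weights = derive_weights({c: mu for c, (mu, _, _) in summary.items()})
print(weights)
```

With these illustrative scores, "Processing Power" has an SD of about 1.71 and would go back for discussion, while the other two criteria show consensus (SD of about 0.58).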

Measurement Layer/Measurement for AI Model

- Process Flow—Step 8: Measurement Execution for AI Model Criteria (Collect Data for AI Model Metrics/Criteria)

- Scenario 1, AI Model Candidates: Object detection models built on different object detection frameworks (e.g., Single Shot MultiBox Detector (SSD) [67]) and architectures (e.g., MobileNet v1 or MobileNet v2), trained on the COCO Person Dataset [68], are evaluated on the metrics given in Table A2 (input resolution, inference time, accuracy) to select the most suitable object detection model for the selected edge hardware. Table A2 lists these metrics for int8-quantized models running on STM32H7 series microcontrollers; they are obtained from the vendor’s GitHub page [69].

- Scenario 2, AI Model Candidates: For this scenario, Table A3 lists the metrics of object detection models with different architectures, measured on NVIDIA Jetson Nano. The experiments were conducted on the Microsoft COCO Dataset, which includes 80 object categories. The metrics are obtained from the GitHub page of Q-Engineering [70].

- Scenario 3, AI Model Candidates: In this scenario, object detection models with different frameworks and architectures, listed in Table A4, were tested on Raspberry Pi 4. All the experiments were conducted on the Microsoft COCO Dataset, which includes 80 object categories. The metrics are retrieved from the GitHub page of Q-Engineering [70].

- Scenario 4, AI Model Candidates: In this scenario, deep learning-based 3D object detection models were tested on the KITTI Dataset on NVIDIA Jetson Xavier NX. The metrics given in Table A5 are obtained from the benchmark study of 3D object detectors on NVIDIA Jetson platforms by Choe et al. [71].

Evaluation Layer/Evaluation for AI Models

- Process Flow—Step 9: Evaluation and Ranking (apply MCDA methods to evaluate and rank AI model candidates based on weighted scores)

- Process Flow—Step 10: Selection of Edge AI Model
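As an illustration of Step 9, the sketch below applies a crisp (non-fuzzy) simplification of SPOTIS to the Scenario IV candidates of Table A5, with every mAP value expressed in percent and with the Scenario IV AI model weights of Appendix D (mAP 0.4, FPS 0.4, power consumption 0.2). It is not the fuzzy SPOTIS extension [48], and its output is not the study's reported ranking (see Appendix G). The function name and the choice of bounds are assumptions: SPOTIS expects bounds fixed in advance by the decision-maker, whereas here they are taken from the observed data range.

```python
# Scenario IV candidates (Table A5): (BEV mAP in %, average FPS, average power in W).
CANDIDATES = {
    "PointRCNN": (88.0, 1.20, 12.0),
    "Part-A2": (89.0, 1.82, 12.5),
    "PV-RCNN": (88.0, 1.43, 12.1),
    "Complex-YOLOv3": (82.0, 2.95, 13.2),
    "Complex-YOLOv3 (Tiny)": (67.0, 17.93, 8.9),
    "Complex-YOLOv4": (83.3, 2.82, 13.8),
    "Complex-YOLOv4 (Tiny)": (68.0, 16.4, 9.3),
    "SECOND": (83.7, 2.6, 13.4),
    "PointPillars": (87.1, 5.73, 13.9),
    "CIA-SSD": (86.7, 3.12, 13.1),
    "SE-SSD": (88.3, 3.17, 13.1),
}
WEIGHTS = (0.4, 0.4, 0.2)     # Scenario IV weights for [mAP, FPS, power]
PROFIT = (True, True, False)  # power consumption is a cost criterion


def spotis(rows: list[tuple[float, ...]], weights: tuple[float, ...],
           bounds: list[tuple[float, float]], profit: tuple[bool, ...]) -> list[float]:
    """Crisp SPOTIS: weighted normalized distance to the ideal solution point.

    Lower scores are better. bounds[j] = (min_j, max_j) must enclose all values.
    """
    scores = []
    for row in rows:
        total = 0.0
        for x, w, (lo, hi), is_profit in zip(row, weights, bounds, profit):
            ideal = hi if is_profit else lo  # ideal point: best bound per criterion
            total += w * abs(x - ideal) / (hi - lo)
        scores.append(total)
    return scores


rows = list(CANDIDATES.values())
# Assumption: bounds taken from the observed data range. Fixing them in advance
# instead is what keeps SPOTIS free of rank reversal when candidates change.
bounds = [(min(col), max(col)) for col in zip(*rows)]
scores = spotis(rows, WEIGHTS, bounds, PROFIT)
ranking = sorted(zip(CANDIDATES, scores), key=lambda pair: pair[1])
for name, score in ranking:
    print(f"{name:<22} {score:.3f}")
```

Under these assumptions, the two Tiny Complex-YOLO variants rank first, because FPS and power consumption together carry 60% of the weight; with expert-defined bounds or fuzzy inputs, the ordering may differ.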

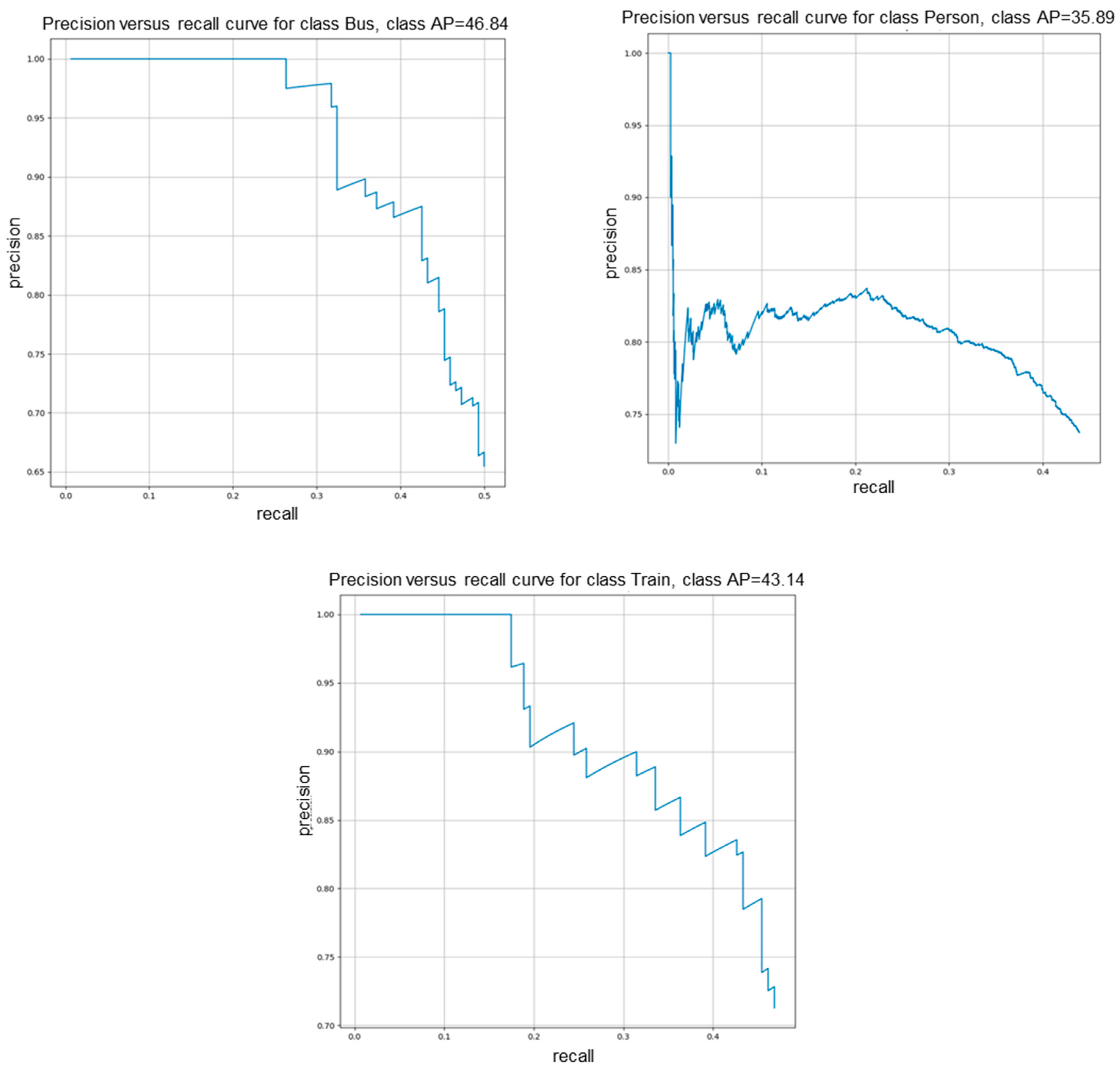

5.1.3. Deployment Phase and Results (Deployment of Selected AI Models on Selected Edge HWs)

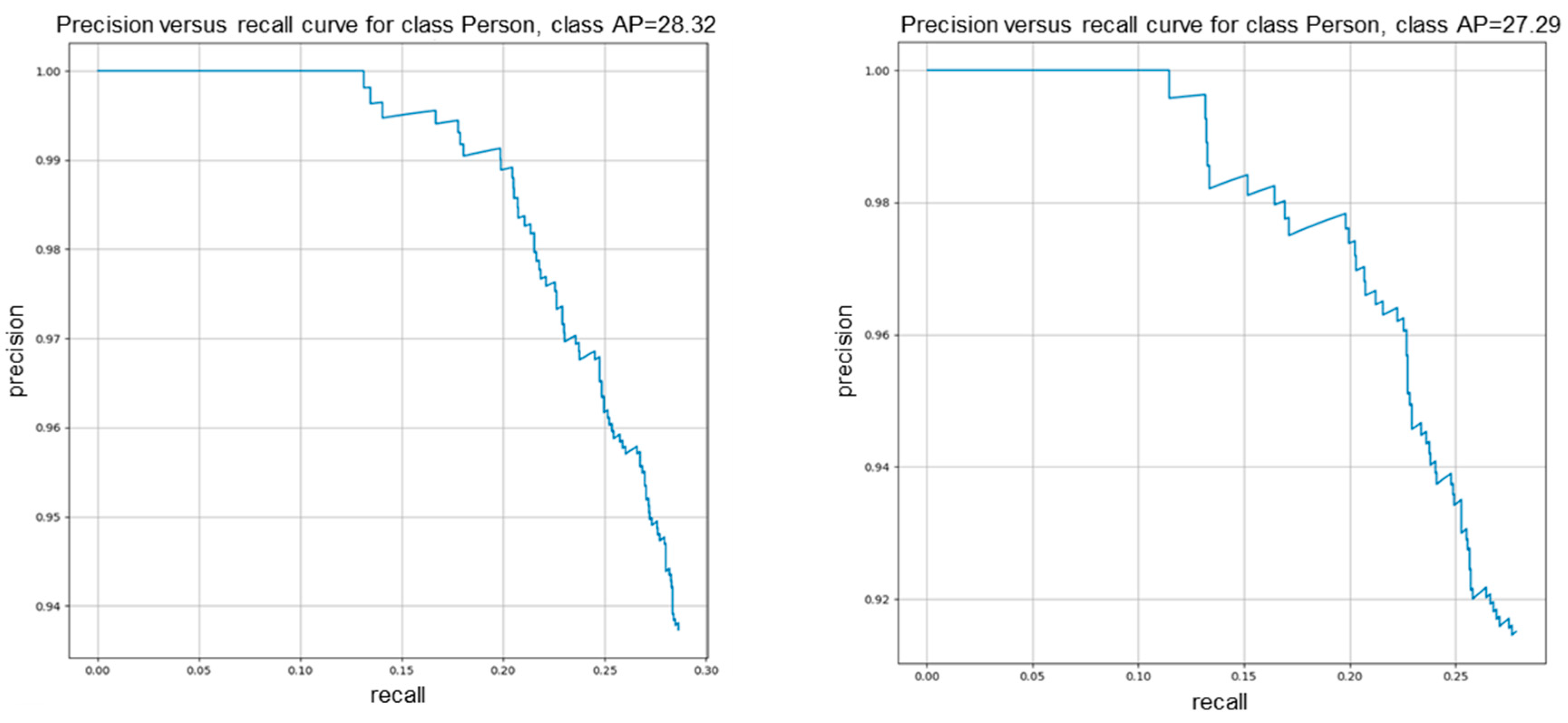

5.2. A TensorFlow Lite Model for Object Detection

5.2.1. Deployment Results of Scenario I

5.2.2. Additional TensorFlow Lite Model for Object Detection

6. Discussion

7. Threats to Validity

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Evaluation Indicators Across Application Scenarios

| Metric Category | Evaluation Indicator | Description | Application Scenarios | Device Constraints Addressed |
|---|---|---|---|---|
| Accuracy [75,76,77,78] | Model Accuracy (e.g., Top-1, Top-5, mAP, Prediction Accuracy, Precision/Recall/F1-Score, IoU, MAE, Latency-Adjusted Accuracy) [75,76] | Measures how well the AI model performs on a given task, such as object detection or classification. | Autonomous vehicles, Surveillance | Limited precision or inference errors |
| | Accuracy under Resource Constraints [75,76] | Tests accuracy with reduced precision (e.g., INT8 quantization) or limited memory. | Low-power IoT devices | Constrained computation and memory |
| | Accuracy under Noise/Adversarial Conditions [77,78] | Evaluates model performance with added input noise or adversarial attacks. | Drones in dynamic environments | Robustness to real-world interference |
| Computational Efficiency [79,80] | Frames Per Second (FPS) | Measures real-time processing speed of inference tasks. | Real-time video analytics | Processing under low latency |
| | Latency (End-to-End Inference Time) | Total time from data input to output prediction. | Healthcare AI systems, Robotics | Low-latency or time-sensitive tasks |
| | Model Throughput (Inferences per Second) | Number of inferences the model can handle per unit time. | Batch processing, industrial use | Scaling with computational constraints |
| Resource Utilization [79,80] | CPU/GPU/TPU Utilization | Tracks hardware usage during inference. | Resource monitoring in IoT | Avoids overloading hardware |
| | Power Consumption (Watts/Inferences per Watt) [71] | Measures energy efficiency of the device. | Battery-powered drones, Edge AI | Power-efficient operations |
| | Memory Utilization (RAM/Flash) | Evaluates memory footprint for models and inputs. | Embedded AI, wearable devices | Limited memory capacity |
| Adaptability | Transfer Learning Capability [81] | Assesses the ability to fine-tune models for new tasks with limited data. | Personalized recommendations | Adaptation to new environments or datasets |
| | Scalability [65] | Tests how models and hardware perform with varying workloads. | Distributed Edge AI systems | Dynamic workload adjustment |
| | Multi-Model Support [80] | Ability to run multiple models concurrently or in parallel. | Smart city applications | Support for diverse use cases |
| Robustness | Mean Time Between Failures (MTBF) [82] | Evaluates hardware reliability under prolonged usage. | Mission-critical drones, IoT devices | Reliability for long-term deployments |
| | Environmental Resilience (e.g., temperature, humidity, vibration) [83] | Performance under harsh environmental conditions. | Outdoor drones, industrial IoT | Operates in extreme conditions |
| | Fault Tolerance [84,85] | Ability to recover from hardware or software failures. | Autonomous vehicles | High reliability under unexpected failures |
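Several of the computational-efficiency indicators above can be derived from a single timing run. The sketch below is a hypothetical Python harness, not a tool used in this study: it times repeated calls of an inference callable after a few warm-up calls and derives mean and 95th-percentile latency, FPS, throughput, and inferences per joule (that is, inferences per second per watt). The code does not measure power; the average power in watts is assumed to come from an external measurement, and `fake_infer` is a placeholder for a real model invocation.

```python
import math
import statistics
import time
from typing import Callable


def measure_latencies(infer: Callable[[], object], runs: int = 100,
                      warmup: int = 10) -> list[float]:
    """Wall-clock latency (s) of `infer`, one entry per timed call."""
    for _ in range(warmup):  # discard warm-up calls (caches, lazy initialization)
        infer()
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies: list[float], avg_power_w: float,
              batch_size: int = 1) -> dict[str, float]:
    """Derive efficiency indicators from measured per-call latencies."""
    mean_s = statistics.fmean(latencies)
    ordered = sorted(latencies)
    p95_s = ordered[max(0, math.ceil(0.95 * len(ordered)) - 1)]  # nearest-rank p95
    throughput = batch_size / mean_s  # inferences per second
    return {
        "mean_latency_ms": mean_s * 1e3,
        "p95_latency_ms": p95_s * 1e3,
        "fps": 1.0 / mean_s,  # calls per second; equals throughput when batch_size == 1
        "throughput_ips": throughput,
        "inferences_per_joule": throughput / avg_power_w,  # inferences/s per W
    }


if __name__ == "__main__":
    def fake_infer() -> None:
        # Hypothetical stand-in for one real model invocation.
        time.sleep(0.005)

    lat = measure_latencies(fake_infer, runs=20, warmup=2)
    # avg_power_w must come from an external power measurement; 5.0 W is a placeholder.
    print(summarize(lat, avg_power_w=5.0))
```

Reporting a tail latency alongside the mean is a design choice aimed at real-time UAV workloads, where occasional slow frames matter more than the average.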

Appendix B. Simplified Object Model Diagram

Appendix C. Scenario AI Model Options

Table A2. AI model candidates for Scenario I (int8-quantized models for STM32H7 series microcontrollers).

| Model ID | Model | Resolution | Total RAM (KiB) | Total Flash (KiB) | Inference Time (ms) | Accuracy (AP) (%) |
|---|---|---|---|---|---|---|
| EdgeSW_Scenario1_1 | SSD Mobilenet v2 0.35 FPN-lite | 192 × 192 × 3 | 781.75 | 1174.51 | 512.33 | 40.73 |
| EdgeSW_Scenario1_2 | ST SSD Mobilenet v1 0.25 | 192 × 192 × 3 | 296.23 | 534.67 | 149.22 | 33.70 |
| EdgeSW_Scenario1_3 | ST SSD Mobilenet v1 0.25 | 224 × 224 × 3 | 413.93 | 702.81 | 218.68 | 44.45 |
| EdgeSW_Scenario1_4 | ST SSD Mobilenet v1 0.25 | 256 × 256 × 3 | 489.85 | 701.97 | 266.4 | 46.26 |
| EdgeSW_Scenario1_5 | st_yolo_lc_v1 | 192 × 192 × 3 | 174.38 | 330.23 | 179.35 | 31.61 |
| EdgeSW_Scenario1_6 | st_yolo_lc_v1 | 224 × 224 × 3 | 225.38 | 330.24 | 244.7 | 36.80 |
| EdgeSW_Scenario1_7 | st_yolo_lc_v1 | 256 × 256 × 3 | 286.38 | 330.23 | 321.23 | 40.58 |

Table A3. AI model candidates for Scenario II, measured on NVIDIA Jetson Nano.

| Model ID | Model | Resolution | Object Classes | mAP (%) | FPS |
|---|---|---|---|---|---|
| EdgeSW_Scenario2_1 | NanoDet | 320 × 320 | 80 | 20.6 | 26.2 |
| EdgeSW_Scenario2_2 | NanoDet Plus | 416 × 416 | 80 | 30.4 | 18.5 |
| EdgeSW_Scenario2_3 | YOLOFastestV2 | 352 × 352 | 80 | 24.1 | 38.4 |
| EdgeSW_Scenario2_4 | YOLOv2 | 416 × 416 | 20 | 19.2 | 10.1 |
| EdgeSW_Scenario2_5 | YOLOv3 | 352 × 352 (tiny) | 20 | 16.6 | 17.7 |
| EdgeSW_Scenario2_6 | YOLOv4 Darknet | 416 × 416 (tiny) | 80 | 21.7 | 16.5 |
| EdgeSW_Scenario2_7 | YOLOv4 | 608 × 608 (full) | 80 | 45.3 | 1.3 |
| EdgeSW_Scenario2_8 | YOLOv5 | 640 × 640 (small) | 80 | 22.5 | 5.0 |
| EdgeSW_Scenario2_9 | YOLOv6 | 640 × 640 (nano) | 80 | 35.0 | 10.5 |
| EdgeSW_Scenario2_10 | YOLOv7 | 640 × 640 (tiny) | 80 | 38.7 | 8.5 |
| EdgeSW_Scenario2_11 | YOLOX | 416 × 416 (nano) | 80 | 25.8 | 22.6 |
| EdgeSW_Scenario2_12 | YOLOX | 416 × 416 (tiny) | 80 | 32.8 | 11.35 |
| EdgeSW_Scenario2_13 | YOLOX | 640 × 640 (small) | 80 | 40.5 | 3.65 |

Table A4. AI model candidates for Scenario III, measured on Raspberry Pi 4.

| Model ID | Model | Resolution | Object Classes | mAP (%) | FPS (RPi 4, 64-bit OS, 1950 MHz) |
|---|---|---|---|---|---|
| EdgeSW_Scenario3_1 | NanoDet | 320 × 320 | 80 | 20.6 | 13.0 |
| EdgeSW_Scenario3_2 | NanoDet Plus | 416 × 416 | 80 | 30.4 | 5.0 |
| EdgeSW_Scenario3_3 | YOLOFastestV2 | 352 × 352 | 80 | 24.1 | 18.8 |
| EdgeSW_Scenario3_4 | YOLOv2 | 416 × 416 | 20 | 19.2 | 3.0 |
| EdgeSW_Scenario3_5 | YOLOv3 | 352 × 352 (tiny) | 20 | 16.6 | 4.4 |
| EdgeSW_Scenario3_6 | YOLOv4 Darknet | 416 × 416 (tiny) | 80 | 21.7 | 3.4 |
| EdgeSW_Scenario3_7 | YOLOv4 | 608 × 608 (full) | 80 | 45.3 | 0.2 |
| EdgeSW_Scenario3_8 | YOLOv5 | 640 × 640 (small) | 80 | 22.5 | 1.6 |
| EdgeSW_Scenario3_9 | YOLOv6 | 640 × 640 (nano) | 80 | 35.0 | 2.7 |
| EdgeSW_Scenario3_10 | YOLOv7 | 640 × 640 (tiny) | 80 | 38.7 | 2.1 |
| EdgeSW_Scenario3_11 | YOLOX | 416 × 416 (nano) | 80 | 25.8 | 7.0 |
| EdgeSW_Scenario3_12 | YOLOX | 416 × 416 (tiny) | 80 | 32.8 | 2.8 |
| EdgeSW_Scenario3_13 | YOLOX | 640 × 640 (small) | 80 | 40.5 | 0.9 |

Table A5. 3D object detection candidates for Scenario IV, measured on NVIDIA Jetson Xavier NX (KITTI Dataset).

| Model ID | Model | mAP, Bird’s Eye View (%) | Average FPS | Average Power Consumption (W) |
|---|---|---|---|---|
| EdgeSW_Scenario4_1 | PointRCNN | 88 | 1.2 | 12 |
| EdgeSW_Scenario4_2 | Part-A2 | 89 | 1.82 | 12.5 |
| EdgeSW_Scenario4_3 | PV-RCNN | 88 | 1.43 | 12.1 |
| EdgeSW_Scenario4_4 | Complex-YOLOv3 | 82 | 2.95 | 13.2 |
| EdgeSW_Scenario4_5 | Complex-YOLOv3 (Tiny) | 67 | 17.93 | 8.9 |
| EdgeSW_Scenario4_6 | Complex-YOLOv4 | 83.3 | 2.82 | 13.8 |
| EdgeSW_Scenario4_7 | Complex-YOLOv4 (Tiny) | 68.0 | 16.4 | 9.3 |
| EdgeSW_Scenario4_8 | SECOND | 83.7 | 2.6 | 13.4 |
| EdgeSW_Scenario4_9 | PointPillars | 87.1 | 5.73 | 13.9 |
| EdgeSW_Scenario4_10 | CIA-SSD | 86.7 | 3.12 | 13.1 |
| EdgeSW_Scenario4_11 | SE-SSD | 88.3 | 3.17 | 13.1 |

Appendix D. Edge HW and AI Model Criteria Weights for Scenarios

Table A6. Edge hardware criteria weights for each scenario.

| Criterion | Scenario I | Scenario II | Scenario III | Scenario IV |
|---|---|---|---|---|
| MTBF | 0.114 | 0.047 | 0.051 | 0.052 |
| Processing Power | 0.104 | 0.358 | 0.143 | 0.439 |
| Supported AI Frameworks | 0.056 | 0.052 | 0.048 | 0.054 |
| Power Consumption | 0.143 | 0.097 | 0.096 | 0.101 |
| Weight (mass) | 0.147 | 0.053 | 0.026 | 0.049 |
| Size | 0.138 | 0.057 | 0.024 | 0.051 |
| Flash Memory | 0.056 | 0.046 | 0.108 | 0.059 |
| RAM | 0.077 | 0.048 | 0.098 | 0.057 |
| Cost | 0.165 | 0.242 | 0.406 | 0.138 |

| Scenario | AI Model Criteria | AI Model Criteria Weights |
|---|---|---|
| Scenario I | [Resolution, Inference Time, Accuracy] | [0.18, 0.22, 0.60] |
| Scenario II | [Resolution, Objects, mAP, FPS] | [0.12, 0.08, 0.33, 0.47] |
| Scenario III | [Resolution, Objects, mAP, FPS] | [0.1, 0.15, 0.35, 0.40] |
| Scenario IV | [mAP, FPS, Power Consumption] | [0.4, 0.4, 0.2] |

Appendix E. Edge Hardware—Metrics Rating

Appendix F. Object Detection Models, Datasets, Results

Appendix G. Fuzzy MCDA Software, Evaluation Results for Edge Hardware and AI Models

References

- [1] Singh, R.; Gill, S.S. Edge AI: Internet of Things and Cyber-Physical Systems. Internet Things Cyber-Phys. Syst. 2023, 3, 71–92.

- [2] Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646.

- [3] Liu, S.; Liu, L.; Tang, J.; Yu, B.; Wang, Y.; Shi, W. Edge Computing for Autonomous Driving: Opportunities and Challenges. Proc. IEEE 2019, 107, 1697–1716.

- [4] Ke, R.; Zhuang, Y.; Pu, Z.; Wang, Y. A Smart, Efficient, and Reliable Parking Surveillance System with Edge Artificial Intelligence on IoT Devices. IEEE Trans. Intell. Transport. Syst. 2020, 22, 4962–4974.

- [5] Sharkov, G.; Asif, W.; Rehman, I. Securing Smart Home Environment Using Edge Computing. In Proceedings of the 2022 IEEE International Smart Cities Conference (ISC2), Pafos, Cyprus, 26–29 September 2022; pp. 1–7.

- [6] Shankar, V. Edge AI: A Comprehensive Survey of Technologies, Applications, and Challenges. In Proceedings of the 2024 1st International Conference on Advanced Computing and Emerging Technologies (ACET), Ghaziabad, India, 23–24 August 2024; pp. 1–6.

- [7] Hadidi, R.; Cao, J.; Xie, Y.; Asgari, B.; Krishna, T.; Kim, H. Characterizing the Deployment of Deep Neural Networks on Commercial Edge Devices. In Proceedings of the 2019 IEEE International Symposium on Workload Characterization (IISWC), Orlando, FL, USA, 3–5 November 2019; pp. 35–48.

- [8] Garcia-Perez, A.; Miñón, R.; Torre-Bastida, A.I.; Zulueta-Guerrero, E. Analysing Edge Computing Devices for the Deployment of Embedded AI. Sensors 2023, 23, 9495.

- [9] Contreras-Masse, R.; Ochoa-Zezzatti, A.; García, V.; Elizondo, M. Selection of IoT Platform with Multi-Criteria Analysis: Defining Criteria and Experts to Interview. Res. Comput. Sci. 2019, 148, 9–19.

- [10] Silva, E.M.; Agostinho, C.; Jardim-Goncalves, R. A multi-criteria decision model for the selection of a more suitable Internet-of-Things device. In Proceedings of the 2017 International Conference on Engineering, Technology and Innovation (ICE/ITMC), Madeira, Portugal, 27–29 June 2017; pp. 1268–1276.

- [11] Ilieva, G.; Yankova, T. IoT System Selection as a Fuzzy Multi-Criteria Problem. Sensors 2022, 22, 4110.

- [12] Gan, G.-Y.; Lee, H.-S.; Liu, J.-Y. A DEA Approach towards to the Evaluation of IoT Applications in Intelligent Ports. J. Mar. Sci. Technol. 2021, 29, 257–267.

- [13] Park, S.; Lee, K. Improved Mitigation of Cyber Threats in IIoT for Smart Cities: A New-Era Approach and Scheme. Sensors 2021, 21, 1976.

- [14] Zhang, X.; Geng, J.; Ma, J.; Liu, H.; Niu, S.; Mao, W. A hybrid service selection optimization algorithm in internet of things. EURASIP J. Wirel. Commun. Netw. 2021, 2021, 4.

- [15] Saaty, T.L. How to Make a Decision: The Analytic Hierarchy Process. Aestimum 1994, 24, 19–43.

- [16] Chen, C.T. Extensions of the TOPSIS for Group Decision-making under Fuzzy Environment. Fuzzy Sets Syst. 2000, 114, 1–9.

- [17] Opricovic, S. A Fuzzy Compromise Solution for Multicriteria Problems. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 2007, 15, 363–380.

- [18] Uslu, B.; Eren, T.; Gur, S.; Ozcan, E. Evaluation of the Difficulties in the Internet of Things (IoT) with Multi-Criteria Decision-Making. Processes 2019, 7, 164.

- [19] Soltani, S.; Martin, P.; Elgazzar, K. A hybrid approach to automatic IaaS service selection. J. Cloud Comput. 2018, 7, 12.

- [20] Alelaiwi, A. Evaluating distributed IoT databases for edge/cloud platforms using the analytic hierarchy process. J. Parallel Distrib. Comput. 2019, 124, 41–46.

- [21] Silva, E.M.; Jardim-Goncalves, R. Multi-criteria analysis and decision methodology for the selection of internet-of-things hardware platforms. In Doctoral Conference on Computing, Electrical and Industrial Systems; Springer: Berlin/Heidelberg, Germany, 2017; pp. 111–121.

- [22] Garg, S.K.; Versteeg, S.; Buyya, R. A framework for ranking of cloud computing services. Future Gener. Comput. Syst. 2013, 29, 1012–1023.

- [23] ISO/IEC 25010:2011; Systems and software engineering – Systems and Software Quality Requirements and Evaluation (SQuaRE) – System and software quality models. International Organization for Standardization: Geneva, Switzerland, 2011.

- [24] Sipola, T.; Alatalo, J.; Kokkonen, T.; Rantonen, M. Artificial Intelligence in the IoT Era: A Review of Edge AI Hardware and Software. In Proceedings of the 31st Conference of Open Innovations Association FRUCT, Helsinki, Finland, 27–29 April 2022; Volume 31, pp. 320–331.

- [25] Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- [26] Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- [27] Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level Accuracy with 50x Fewer Parameters and <0.5MB Model Size. arXiv 2016, arXiv:1602.07360.

- [28] Han, S.; Pool, J.; Tran, J.; Dally, W. Learning both Weights and Connections for Efficient Neural Networks. In Proceedings of the 28th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015.

- [29] Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.

- [30] Tan, M.; Le, Q.V. EfficientNetV2: Smaller Models and Faster Training. arXiv 2021, arXiv:2104.00298.

- [31] Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- [32] Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- [33] Greff, K.; Srivastava, R.K.; Koutník, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A Search Space Odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2222–2232.

- [34] Orsini, G.; Bade, D.; Lamersdorf, W. CloudAware: Empowering context-aware self-adaptation for mobile applications. Trans. Emerg. Telecommun. Technol. 2018, 29, e3210.

- [35] White, G.; Nallur, V.; Clarke, S. Quality of service approaches in IoT: A systematic mapping. J. Syst. Softw. 2017, 132, 186–203.

- [36] Ashouri, M.; Davidsson, P.; Spalazzese, R. Quality attributes in edge computing for the Internet of Things: A systematic mapping study. Internet Things 2021, 13, 100346.

- [37] ISO/IEC 25010:2011. Available online: https://www.iso.org/standard/35733.html (accessed on 17 December 2024).

- [38] Więckowski, J.; Kizielewicz, B.; Sałabun, W. pyFDM: A Python Library for Uncertainty Decision Analysis Methods. SoftwareX 2022, 20, 101271.

- [39] De Souza Melaré, A.V.; González, S.M.; Faceli, K.; Casadei, V. Technologies and Decision Support Systems to Aid Solid-waste Management: A Systematic Review. Waste Manag. 2017, 59, 567–584.

- [40] Maghsoodi, A.I.; Kavian, A.; Khalilzadeh, M.; Brauers, W.K. CLUS-MCDA: A Novel Framework based on Cluster Analysis and Multiple Criteria Decision Theory in a Supplier Selection Problem. Comput. Ind. Eng. 2018, 118, 409–422.

- [41] Ulutaş, A.; Balo, F.; Sua, L.; Demir, E.; Topal, A.; Jakovljević, V. A New Integrated Grey MCDM Model: Case of Warehouse Location Selection. Facta Univ. Ser. Mech. Eng. 2021, 19, 515–535.

- [42] Youssef, M.I.; Webster, B. A Multi-criteria Decision-making Approach to the New Product Development Process in Industry. Rep. Mech. Eng. 2022, 3, 83–93.

- [43] Dell’Ovo, M.; Capolongo, S.; Oppio, A. Combining Spatial Analysis with MCDA for the Siting of Healthcare Facilities. Land Use Policy 2018, 76, 634–644.

- [44] Chen, S.J.; Hwang, C.L. Fuzzy Multiple Attribute Decision Making: Methods and Applications; Lecture Notes in Economics and Mathematical Systems; Springer: Berlin/Heidelberg, Germany, 1992; Volume 375.

- [45] Zimmermann, H.J. Fuzzy Set Theory—And Its Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2001.

- [46] Bozanic, D.; Tešić, D.; Milićević, J. A Hybrid Fuzzy AHP-MABAC Model: Application in the Serbian Army–The selection of the location for deep wading as a technique of crossing the river by tanks. Decis. Mak. Appl. Manag. Eng. 2018, 1, 143–164.

- [47] Sałabun, W.; Karczmarczyk, A.; Wątróbski, J.; Jankowski, J. Handling Data Uncertainty in Decision Making with COMET. In Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Bangalore, India, 18–21 November 2018; pp. 1478–1484.

- [48] Shekhovtsov, A.; Paradowski, B.; Więckowski, J.; Kizielewicz, B.; Sałabun, W. Extension of the SPOTIS Method for the Rank Reversal Free Decision-making under Fuzzy Environment. In Proceedings of the 2022 IEEE 61st Conference on Decision and Control (CDC), Cancun, Mexico, 6–9 December 2022; pp. 5595–5600.

- [49] Ulutaş, A.; Popovic, G.; Radanov, P.; Stanujkic, D.; Karabasevic, D. A New Hybrid Fuzzy PSI-PIPRECIA-CoCoSo MCDM based Approach to Solving the Transportation Company Selection Problem. Technol. Econ. Dev. Econ. 2021, 27, 1227–1249.

- [50] Mandel, N.; Milford, M.; Gonzalez, F. A Method for Evaluating and Selecting Suitable Hardware for Deployment of Embedded System on UAVs. Sensors 2020, 20, 4420.

- [51] Imran, H.; Mujahid, U.; Wazir, S.; Latif, U.; Mehmood, K. Embedded Development Boards for Edge-AI: A Comprehensive Report. arXiv 2020, arXiv:2009.00803.

- Merenda, M.; Porcaro, C.; Iero, D. Edge Machine Learning for AI-enabled IoT devices: A review. Sensors 2020, 20, 2533. [Google Scholar] [CrossRef]

- Rajput, K.R.; Kulkarni, C.D.; Cho, B.; Wang, W.; Kim, I.K. EdgeFaaSBench: Benchmarking Edge Devices using Serverless Computing. In Proceedings of the 2022 IEEE International Conference on Edge Computing and Communications (EDGE), Barcelona, Spain, 11–15 July 2022; pp. 93–103.

- Feng, H.; Mu, G.; Zhong, S.; Zhang, P.; Yuan, T. Benchmark Analysis of Yolo Performance on Edge Intelligence Devices. Cryptography 2022, 6, 16.

- Cantero, D.; Esnaola-Gonzalez, I.; Miguel-Alonso, J.; Jauregi, E. Benchmarking Object Detection Deep Learning Models in Embedded Devices. Sensors 2022, 22, 4205.

- Yılmaz, N.; Tarhan, A.K. Matching terms of quality models and meta-models: Toward a unified meta-model of OSS quality. Softw. Qual. J. 2023, 31, 721–773.

- Yılmaz, N.; Tarhan, A.K. Quality Evaluation Meta-model for Open-source Software: Multi-method Validation Study. Softw. Qual. J. 2024, 32, 487–541.

- Boroujerdian, B.; Genc, H.; Krishnan, S.; Cui, W.; Faust, A.; Reddi, V. MAVBench: Micro Aerial Vehicle Benchmarking. In Proceedings of the 2018 51st Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Fukuoka, Japan, 20–24 October 2018; pp. 894–907.

- NVIDIA Series Products. Available online: https://developer.nvidia.com/ (accessed on 17 December 2024).

- Intel Atom x6000E Series Product. Available online: https://www.intel.com/content/www/us/en/content-details/635255/intel-atom-x6000e-series-processor-and-intel-pentium-and-celeron-n-and-j-series-processors-for-internet-of-things-iot-applications-datasheet-volume-2-book-1-of-3.html (accessed on 17 December 2024).

- Google Coral Products. Available online: https://coral.ai/products/ (accessed on 17 December 2024).

- Raspberry Pi Products. Available online: https://www.raspberrypi.com/ (accessed on 17 December 2024).

- STM32 Microcontroller Products. Available online: https://www.st.com/content/st_com/en.html (accessed on 17 December 2024).

- Xilinx Boards. Available online: https://www.xilinx.com/products/boards-and-kits.html (accessed on 17 December 2024).

- Wang, N.; Matthaiou, M.; Nikolopoulos, D.S.; Varghese, B. DYVERSE: DYnamic VERtical Scaling in multi-tenant Edge environments. Future Gener. Comput. Syst. 2020, 108, 598–612.

- BeagleBoard Products. Available online: https://www.beagleboard.org/ (accessed on 17 December 2024).

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot Multibox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016.

- COCO Person Dataset. 2017. Available online: https://cocodataset.org/#home (accessed on 17 December 2024).

- STMicroelectronics–Object Detection, STM32 Model Zoo. Available online: https://github.com/STMicroelectronics/stm32ai-modelzoo (accessed on 17 December 2024).

- QEngineering, Jetson Nano and Raspberry Pi 4 Metrics. Available online: https://github.com/Qengineering (accessed on 17 December 2024).

- Choe, C.; Choe, M.; Jung, S. Run Your 3D Object Detector on NVIDIA Jetson Platforms: A Benchmark Analysis. Sensors 2023, 23, 4005.

- ImageNet. Available online: https://www.image-net.org/ (accessed on 17 December 2024).

- PASCAL VOC Dataset. 2012. Available online: https://datasets.activeloop.ai/docs/ml/datasets/pascal-voc-2012-dataset/ (accessed on 17 December 2024).

- Rausand, M.; Høyland, A. System Reliability Theory: Models, Statistical Methods, and Applications. Wiley-Interscience; John Wiley & Sons Inc: New York, NY, USA, 2004.

- Fei-Fei, L.; Deng, J.; Russakovsky, O.; Berg, A.; Li, K. Common Evaluation Metrics Used in Computer Vision Tasks, Documented in ImageNet Benchmark Datasets. 2009. Available online: https://image-net.org/about.php (accessed on 17 December 2024).

- Lin, T.; Patterson, G.; Ronchi, M.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; et al. Common Evaluation Metrics Used in Computer Vision Tasks, Documented in COCO. 2014. Available online: https://www.picsellia.com/post/coco-evaluation-metrics-explained (accessed on 17 December 2024).

- Goodfellow, I.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. arXiv 2014, arXiv:1412.6572.

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards Deep Learning Models Resistant to Adversarial Attacks. arXiv 2017, arXiv:1706.06083. Available online: https://arxiv.org/abs/1706.06083 (accessed on 17 December 2024).

- Jetson Benchmarks. 2024. Available online: https://developer.nvidia.com/embedded/jetson-benchmarks (accessed on 17 December 2024).

- MLPerf Inference: Edge. 2024. Available online: https://mlcommons.org/benchmarks/inference-edge/ (accessed on 17 December 2024).

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Reliability Prediction of Electronic Equipment. MIL-HDBK-217F. 1991. Available online: https://www.navsea.navy.mil/Portals/103/Documents/NSWC_Crane/SD-18/Test%20Methods/MILHDBK217.pdf (accessed on 17 December 2024).

- International Electrotechnical Commission. Environmental Testing-Part 2: Tests (IEC 60068-2 Series); IEC: Geneva, Switzerland, 2013.

- Laprie, J.C. Dependability: Basic Concepts and Terminology in English, French, German, Italian and Japanese; Dependable Computing and Fault-Tolerant Systems; Springer: Vienna, Austria, 1991; Volume 5.

- Bloomfield, R.; Rushby, J. Assurance of AI Systems from a Dependability Perspective. arXiv 2024, arXiv:2407.13948.

| Scenario ID, Name | Stakeholder | Goal | Priority, Impact | Trade-Offs |
|---|---|---|---|---|
| Scenario I: Low-Cost, Low-Power MAV for Simple Object Detection | Small-scale Users: Organizations or individuals using MAVs in controlled environments (e.g., farms, industrial facilities). | Build a lightweight, low-cost, reliable MAV capable of performing simple object detection tasks, such as detecting a person or large static obstacles (e.g., cars, buses) in controlled environments. | Lightweight design, cost-efficiency, and low power consumption are critical to enhance agility and extend operational time in constrained environments. | The MAV does not need advanced AI processing capabilities, but it must be agile, power-efficient, and reliable, and it operates in environments where basic detection algorithms are sufficient. |
| Scenario II: Mid-Level UAV for AI-Based Obstacle Detection | Urban Surveillance Operators: Organizations deploying UAVs for traffic monitoring or public safety, requiring detection of moving obstacles. | Build a UAV with moderate performance capable of real-time AI-based obstacle detection for dynamic objects such as pedestrians, cars, and other moving obstacles. | Balanced performance, energy efficiency, and cost. The UAV must perform moderately complex tasks such as running machine learning models (YOLO, SSD) while keeping power consumption in check to maintain flight time. | The UAV should handle real-time object detection without requiring high-end AI processing. Balancing power consumption against AI performance is critical for efficiency in tasks such as monitoring or surveillance. |
| Scenario III: Cost-Effective, General-Purpose UAV for Outdoor Object Detection | Environmental Monitoring Operators: Organizations using UAVs to inspect areas such as forests, agricultural land, or urban landscapes for environmental changes, wildlife monitoring, or security. | Build a cost-efficient UAV that performs general-purpose outdoor object detection. | Cost-efficiency is the highest priority. Flash memory and RAM are next in importance, to handle basic processing and data storage needs. | The system should balance computational power and cost, being capable of processing moderate amounts of data from standard-resolution cameras and sensors while maintaining low power consumption. |
| Scenario IV: High-Performance UAV for Real-Time Advanced Object Detection and Tracking | Search and Rescue Teams: Organizations using UAVs for lifesaving missions, where precise object tracking (e.g., people, vehicles) is critical. | Build a high-performance UAV for real-time advanced object detection and tracking under challenging conditions. | High performance and precision are paramount. The UAV must support advanced AI models (e.g., Faster R-CNN, Complex-YOLOv3) for real-time, accurate detection and tracking in complex scenarios. | Processing power takes priority, as the UAV must perform heavy-duty AI processing for real-time tracking. Cost and power consumption are secondary, provided they still allow moderate-duration operations in critical applications. |
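
The trade-offs in Scenarios II and IV pair real-time detection with power and flight-time limits, and the quality models express speed either as inference time in milliseconds or as FPS. The short Python sketch below, offered only as an illustration, converts a per-frame latency into sustained FPS and into energy per frame (E = P × t). It assumes frames are processed back to back without pipelining and that the board draws a constant average power during inference; the class and function names and all numbers are hypothetical, not measurements from this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceProfile:
    """Hypothetical per-frame measurements for one model on one board."""
    latency_ms: float   # mean inference time per frame, in milliseconds
    avg_power_w: float  # mean board power while inferring, in watts


def fps(profile: InferenceProfile) -> float:
    """Frames per second when frames are processed back to back (no pipelining)."""
    return 1000.0 / profile.latency_ms


def energy_per_frame_j(profile: InferenceProfile) -> float:
    """Energy per frame in joules: E = P * t, with t converted from ms to s."""
    return profile.avg_power_w * profile.latency_ms / 1000.0


def meets_realtime(profile: InferenceProfile, target_fps: float) -> bool:
    """True if the sustained frame rate reaches the mission's target rate."""
    return fps(profile) >= target_fps


if __name__ == "__main__":
    # Illustrative numbers only; they are not results reported in this study.
    candidate = InferenceProfile(latency_ms=40.0, avg_power_w=10.0)
    print(f"{fps(candidate):.1f} FPS")                     # 25.0 FPS
    print(f"{energy_per_frame_j(candidate):.2f} J/frame")  # 0.40 J/frame
    print(meets_realtime(candidate, target_fps=15.0))      # True
```

Energy per frame, rather than power alone, is what accumulates against the battery budget over a mission, which is why the sketch reports both quantities.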

| Scenario ID | Quality Models | ISO 25010 Quality Attribute | ISO 25010 Quality Sub-Attribute | Related Evaluation Indicators/Metrics | Measurement Method of the Indicator/Metric |
|---|---|---|---|---|---|
| Scenario I | MAV_Hardware QM_1; MAV_Software QM_1 | Functional Suitability | Functional Correctness | AI Model: Accuracy (mean average precision, recall vs. precision across specified object categories) | Accuracy: STM32 firmware (STM32Cube.AI) |
| | | Performance Efficiency | Time Behavior | HW: Processing power; AI Model: Inference time (ms) | Processing power: product specification. Inference time: STM32 firmware |
| | | | Resource Utilization | HW: Average power consumption (W) | Average power consumption: product specification |
| | | | Capacity | HW: Flash memory, RAM capacity; AI Model: Required flash and RAM for the model | HW flash memory, RAM capacity: product specification. AI model’s required flash and RAM: STM32CubeMX firmware |
| | | Reliability | Availability | HW: MTBF | MTBF: product specification |
| | | Portability | Adaptability | HW: Number of supported AI environments | Number of supported AI environments: product specification |
| | | | Replaceability | HW: Cost | Cost: product specification |
| | | Usability | Operability | HW: Weight, size | Weight, size: product specification |
| Scenario II | UAV_Hardware QM_2; UAV_Software QM_2 | Functional Suitability | Functional Correctness | AI Model: Object detection precision, mean average precision, localization accuracy, precision, recall, Intersection over Union (IoU) | AI model metrics: benchmarking specifications |
| | | Performance Efficiency | Time Behavior | HW: Processing power; AI Model: Inference time (ms) | Processing power: product specification. Inference time: benchmarking specification |
| | | | Resource Utilization | HW: Average power consumption (W) | Average power consumption: product specification |
| | | | Capacity | HW: Flash memory, RAM capacity | Flash memory, RAM capacity: product specification |
| | | Reliability | Availability | HW: MTBF | MTBF: product specification |
| | | Portability | Adaptability | HW: Number of supported AI environments | Number of supported AI environments: product specification |
| | | | Replaceability | HW: Cost | Cost: product specification |
| | | Usability | Operability | HW: Weight, size | Weight, size: product specification |
| Scenario III | UAV_Hardware QM_3; UAV_Software QM_3 | Functional Suitability | Functional Correctness | AI Model: Object detection accuracy (mean average precision), recall vs. precision across specified categories | AI model metrics: benchmarking specifications |
| | | Performance Efficiency | Time Behavior | HW: Processing power; AI Model: Inference time (ms) | Processing power: product specification. Inference time: benchmarking specifications |
| | | | Resource Utilization | HW: Power consumption (W) | Power consumption: product specification |
| | | | Capacity | HW: Flash memory, RAM | Flash memory, RAM: product specification |
| | | Reliability | Availability | HW: MTBF | MTBF: product specification |
| | | Portability | Adaptability | HW: Number of supported AI frameworks | Number of supported AI frameworks: product specification |
| | | | Replaceability | HW: Cost | Cost: product specification |
| | | Usability | Operability | HW: Weight, size | Weight, size: product specification |
| Scenario IV | UAV_Hardware QM_4; UAV_Software QM_4 | Functional Suitability | Functional Correctness | AI Model: Mean average precision | Mean average precision: benchmarking specifications |
| | | Performance Efficiency | Time Behavior | AI Model: FPS | FPS: benchmarking specifications |
| | | | Resource Utilization | AI Model: Average power consumption | Average power consumption: benchmarking specifications |
| | | | Capacity | HW: Flash memory, RAM capacity | Flash memory, RAM capacity: product specification |
| | | Reliability | Availability | HW: MTBF | MTBF: product specification |
| | | Portability | Adaptability | HW: Number of supported AI environments | Number of supported AI environments: product specification |
| | | | Replaceability | HW: Cost | Cost: product specification |
| | | Usability | Operability | HW: Weight, size | Weight, size: product specification |
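
Several Functional Correctness rows in these quality models rely on IoU, precision, recall, and (mean) average precision. As a minimal, single-class sketch of how these indicators relate, the Python code below computes IoU for axis-aligned boxes and all-point interpolated AP in the PASCAL VOC style, starting from detections already sorted by confidence and matched to ground truth. It is a simplification under stated assumptions: the matching step that produces `is_tp` is left out, and COCO-style mAP additionally averages AP over IoU thresholds from 0.50 to 0.95 and samples 101 recall points. All function names are illustrative.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class (PASCAL VOC style).

    is_tp: one flag per detection, sorted by descending confidence; True if the
    detection matched a not-yet-matched ground-truth box (e.g., IoU >= 0.5).
    num_gt: number of ground-truth objects of this class.
    """
    if num_gt == 0:
        return 0.0
    precisions: List[float] = []
    recalls: List[float] = []
    tp = fp = 0
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Precision envelope: each point takes the best precision at equal or higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the step-shaped precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap: Sequence[float]) -> float:
    """mAP as the unweighted mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


if __name__ == "__main__":
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))           # 1/7, about 0.143
    print(average_precision([True, False, True], 2))  # 5/6, about 0.833
```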

| Product ID | Product Name | MTBF (Hours) | Processing Power | Supported AI Frameworks | Power Consumption (W) | Weight (g) | Size (mm) | Flash Memory | RAM | Approx. Cost |
|---|---|---|---|---|---|---|---|---|---|---|
| EdgeHW_1 | NVIDIA Jetson Nano [59] | 1836 K+ | 472 GFLOPS, Quad-core ARM Cortex-A57 1.43 GHz | TensorFlow, PyTorch, Caffe, Darknet, MXNet | 5–10 | 174 | 100 × 80 × 29 | 16 GB eMMC | 4 GB | $100 |
| EdgeHW_2 | NVIDIA Jetson Xavier NX [59] | 1634 K+ | 21 TOPS, 6-core NVIDIA Carmel ARM v8.2 | TensorFlow, PyTorch, MXNet, Caffe/Caffe2, Keras, Darknet, ONNX Runtime | 10–20 | 174 | 100 × 80 × 29 | 16 GB eMMC | 8 GB | $479 |
| EdgeHW_3 | NVIDIA Jetson AGX Orin [59] | 1381 K+ | Up to 275 TOPS (Ampere GPU + ARM) | PyTorch, MXNet, Caffe/Caffe2, Keras, Darknet, ONNX Runtime | 15–75 | 306 | 105 × 87 × 40 | 64 GB eMMC | 64 GB | $2349 |
| EdgeHW_4 | Intel Atom x6000E Series [60] | 400 K+ | 3.0 GHz (Intel Tremont x86 cores) | TensorFlow, OpenVINO | 4.5–12 | 700 | 25 × 25 (system-on-module) | Up to 64 GB eMMC | Up to 8 GB | $460+ |
| EdgeHW_5 | Google Coral Dev Board [61] | 50 K+ | 4 TOPS (Edge TPU) + Quad-core Cortex-A53 | TensorFlow Lite | 4 | 139 | 88 × 60 × 22 | 8 GB eMMC | 4 GB | $129 |
| EdgeHW_6 | Raspberry Pi 4 [62] | 50 K+ | Quad-core Cortex-A72 1.8 GHz | TensorFlow, Keras, PyTorch, Darknet (YOLO), Edge Impulse, ML Kit, Scikit-learn | ~15 | 46 | 85.6 × 56.5 × 19.5 | MicroSD (ext.) | 8 GB | $35–$75 |
| EdgeHW_7 | Raspberry Pi Zero 2 W [62] | 50 K+ | Quad-core Cortex-A53 1.0 GHz | TensorFlow, Keras, PyTorch, Darknet (YOLO), Edge Impulse, ML Kit, Scikit-learn | ~12.5 | 16 | 65 × 30 × 5 | MicroSD (ext.) | 512 MB | $15 |
| EdgeHW_8 | Raspberry Pi 3 Model B+ [62] | 50 K+ | Quad-core Cortex-A53 1.4 GHz | TensorFlow, Keras, PyTorch, Darknet (YOLO), Edge Impulse, ML Kit, Scikit-learn | 12.5 | 100 | 85.6 × 56.5 × 17 | MicroSD (ext.) | 1 GB | $40 |
| EdgeHW_9 | STM32F4 Series (STM32F429ZI) [63] | 1000 K+ | ARM Cortex-M4 @180 MHz | TensorFlow, Keras, PyTorch, and Scikit-learn via ONNX | ~0.1 | <10 | 20 × 20 (LQFP 144) | Up to 2 MB | 256 KB | $5–$15 |
| EdgeHW_10 | STM32F7 Series (STM32F746ZG) [63] | 1000 K+ | ARM Cortex-M7 @216 MHz | TensorFlow, Keras, PyTorch, and Scikit-learn via ONNX | ~0.2 | <10 | 14 × 14 (LQFP 100) | Up to 1 MB | 320 KB | $10–$20 |
| EdgeHW_11 | STM32H7 Series (STM32H747XI) [63] | 1000 K+ | ARM Cortex-M7 @480 MHz | TensorFlow, Keras, PyTorch, and Scikit-learn via ONNX | ~0.3 | <10 | 24 × 24 (LQFP 176) | 2 MB | 1 MB | $15 |
| EdgeHW_12 | STM32MP1 Series (STM32MP153C) [63] | 1000 K+ | Dual-core Cortex-A7 + Cortex-M4 | TensorFlow, Keras, PyTorch, and Scikit-learn via ONNX | 2 | <10 | 14 × 14 (LQFP 100) | 512 MB | 8 GB | $15 |
| EdgeHW_13 | Xilinx Zynq ZCU102 [64] | 1000 K+ | Quad-core Cortex-A53 + FPGA | Caffe, PyTorch, TensorFlow [65] | ~20 | 300 | 200 × 160 × 20 | 512 MB Flash | 4 GB | $3250 |
| EdgeHW_14 | Xilinx Zynq ZCU104 [64] | 1000 K+ | Quad-core Cortex-A53 + FPGA | Caffe, PyTorch, TensorFlow [65] | ~25 | 400 | 200 × 160 × 20 | 512 MB Flash | 4 GB | $1700 |
| EdgeHW_15 | BeagleBone Black [66] | 50 K+ | ARM Cortex-A8 1 GHz | TensorFlow Lite, PyTorch, OpenVINO, Darknet (YOLO), Keras, Scikit-learn, OpenCV | 2–3 | 40 | 90 × 60 × 22 | 4 GB eMMC | 512 MB | $55 |
| EdgeHW_16 | BeagleBone AI-64 [66] | 50 K+ | Quad-core Cortex-A72 2.0 GHz | TensorFlow Lite, PyTorch, OpenVINO, Darknet (YOLO), Keras, Scikit-learn, OpenCV, TIDL, Edge Impulse | 7–10 | 60 | 100 × 60 × 20 | 16 GB eMMC | 4 GB | $145 |
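
The MTBF column can be turned into mission-level figures once a failure model is chosen. The Python sketch below assumes a constant failure rate λ = 1/MTBF (the exponential model that handbook predictions such as MIL-HDBK-217F also assume), reads entries such as "1836 K+" as lower bounds in thousands of hours, and takes an assumed mean time to repair (MTTR), which the table does not provide. The function names and the fleet example are hypothetical.

```python
import math


def parse_mtbf_hours(label: str) -> float:
    """Read an entry such as '1836 K+' as a lower bound in hours (assumes K = 1000 h)."""
    return float(label.replace("K+", "").strip()) * 1000.0


def steady_state_availability(mtbf_h: float, mttr_h: float) -> float:
    """A = MTBF / (MTBF + MTTR), the long-run fraction of time the unit is up."""
    return mtbf_h / (mtbf_h + mttr_h)


def mission_reliability(mtbf_h: float, mission_h: float) -> float:
    """R(t) = exp(-t / MTBF): probability of no failure during a mission of t hours."""
    return math.exp(-mission_h / mtbf_h)


def expected_failures(mtbf_h: float, fleet_size: int, hours_each: float) -> float:
    """Expected failures across a fleet under the same constant-rate model."""
    return fleet_size * hours_each / mtbf_h


if __name__ == "__main__":
    mtbf = parse_mtbf_hours("50 K+")  # EdgeHW_6 entry, read as 50,000 h
    print(mission_reliability(mtbf, mission_h=0.5))         # one 30-minute flight
    print(steady_state_availability(mtbf, mttr_h=2.0))      # hypothetical 2 h board swap
    print(expected_failures(mtbf, fleet_size=10, hours_each=500.0))
```

Because the MTBF figures are vendor-reported lower bounds obtained under differing conditions, results from this sketch are best read comparatively rather than as absolute predictions.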

| Scenario | Edge Hardware ID | Suggested HW Option |
|---|---|---|
| Scenario I | EdgeHW_11 | STM32H7 Series Microcontrollers |
| Scenario II | EdgeHW_1 | NVIDIA Jetson Nano |
| Scenario III | EdgeHW_6 | Raspberry Pi 4 |
| Scenario IV | EdgeHW_2, EdgeHW_3 | NVIDIA Jetson Xavier NX, NVIDIA Jetson AGX Orin |
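
To illustrate how scenario priorities can turn the hardware table into a ranking, the following Python sketch applies a plain weighted-sum (simple additive weighting) model with min-max normalization to four numeric columns. It is not presented as the selection procedure used in this study; the subset of boards and criteria, the weights, and the reading of ranges as their upper bounds (e.g., "$35–$75" as 75, "<10" g as 10) are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Options = Dict[str, Dict[str, float]]

# Criterion -> True if larger is better (benefit), False if smaller is better (cost).
CRITERIA: Dict[str, bool] = {
    "cost_usd": False,
    "power_w": False,
    "weight_g": False,
    "ram_mb": True,
}

# Transcribed from the hardware table; ranges are read as their upper bound and
# "<10" g as 10 g. These readings are illustrative assumptions.
HARDWARE: Options = {
    "Jetson Nano": {"cost_usd": 100, "power_w": 10, "weight_g": 174, "ram_mb": 4096},
    "Raspberry Pi 4": {"cost_usd": 75, "power_w": 15, "weight_g": 46, "ram_mb": 8192},
    "STM32H7": {"cost_usd": 15, "power_w": 0.3, "weight_g": 10, "ram_mb": 1},
}

# Hypothetical weights for a low-cost, low-power scenario; they sum to 1.
LOW_POWER_WEIGHTS: Dict[str, float] = {
    "cost_usd": 0.4, "power_w": 0.3, "weight_g": 0.2, "ram_mb": 0.1,
}


def normalize(options: Options, criterion: str, benefit: bool) -> Dict[str, float]:
    """Min-max normalize one criterion to [0, 1] so that 1 is always best."""
    values = [opt[criterion] for opt in options.values()]
    lo, hi = min(values), max(values)
    span = hi - lo
    scores: Dict[str, float] = {}
    for name, opt in options.items():
        if span == 0:
            scores[name] = 1.0  # all options tie on this criterion
        elif benefit:
            scores[name] = (opt[criterion] - lo) / span
        else:
            scores[name] = (hi - opt[criterion]) / span
    return scores


def rank(options: Options, weights: Dict[str, float]) -> List[Tuple[str, float]]:
    """Weighted sum of normalized criteria, sorted from best to worst."""
    totals = {name: 0.0 for name in options}
    for criterion, benefit in CRITERIA.items():
        normalized = normalize(options, criterion, benefit)
        for name in options:
            totals[name] += weights[criterion] * normalized[name]
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, score in rank(HARDWARE, LOW_POWER_WEIGHTS):
        print(f"{name}: {score:.3f}")
```

With these hypothetical low-power weights the STM32H7 entry scores highest (0.9) and the Jetson Nano lowest, while moving most of the weight to RAM puts the Raspberry Pi 4 first. Because min-max normalization depends on which alternatives are included, adding or removing a board can reorder the others (rank reversal), which is one motivation for rank-reversal-free methods such as SPOTIS.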

| Scenario | Selected HW | Suggested AI Model Option for Deployment |
|---|---|---|
| Scenario I | STM32H7 Series Microcontrollers | EdgeSW_Scenario1_4, SSD MobileNet v1 0.25 (256 × 256) |
| Scenario II | NVIDIA Jetson Nano | EdgeSW_Scenario2_3, YOLOFastestV2 |
| Scenario III | Raspberry Pi 4 | EdgeSW_Scenario3_3, YOLOFastestV2 |
| Scenario IV | NVIDIA Jetson Xavier NX | EdgeSW_Scenario4_5, Complex-YOLOv3 (Tiny) |
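
Deploying a model on a selected board presupposes that its footprint fits; the Scenario I quality model captures this by comparing the AI model's required flash and RAM with the hardware's capacity. A minimal check is sketched below in Python. The reserved share of memory for other firmware, the `ModelFootprint` figures, and all names are hypothetical; only the STM32H7 capacities (2 MB flash, 1 MB RAM) come from the hardware table, and in practice the required sizes would come from the STM32 tooling named in the quality model.

```python
from dataclasses import dataclass
from typing import Dict

KIB = 1024
MIB = 1024 * KIB  # the table's "MB" is read here as MiB (an assumption)


@dataclass(frozen=True)
class Device:
    name: str
    flash_bytes: int
    ram_bytes: int


@dataclass(frozen=True)
class ModelFootprint:
    name: str
    flash_bytes: int  # weights and inference code stored in flash
    ram_bytes: int    # peak activation/working memory during inference


def fits(model: ModelFootprint, device: Device, reserve_fraction: float = 0.25) -> bool:
    """True if the model fits after reserving a share of each memory for other firmware."""
    usable_flash = device.flash_bytes * (1.0 - reserve_fraction)
    usable_ram = device.ram_bytes * (1.0 - reserve_fraction)
    return model.flash_bytes <= usable_flash and model.ram_bytes <= usable_ram


def headroom(model: ModelFootprint, device: Device) -> Dict[str, float]:
    """Fraction of each memory left free once the model is loaded."""
    return {
        "flash": 1.0 - model.flash_bytes / device.flash_bytes,
        "ram": 1.0 - model.ram_bytes / device.ram_bytes,
    }


if __name__ == "__main__":
    stm32h7 = Device("STM32H747XI", flash_bytes=2 * MIB, ram_bytes=1 * MIB)
    # Hypothetical footprint, not a measured value for any model in this study.
    detector = ModelFootprint("hypothetical_detector", flash_bytes=600 * KIB, ram_bytes=700 * KIB)
    print(fits(detector, stm32h7))                        # True with a 25% reserve
    print(fits(detector, stm32h7, reserve_fraction=0.4))  # False: RAM becomes the bottleneck
    print(headroom(detector, stm32h7))
```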

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Canpolat Şahin, M.; Kolukısa Tarhan, A. Evaluation and Selection of Hardware and AI Models for Edge Applications: A Method and A Case Study on UAVs. Appl. Sci. 2025, 15, 1026. https://doi.org/10.3390/app15031026