Abstract

Existing methods for detecting road damage depend mainly on manual inspections or sensor-equipped vehicles, which are inefficient, have limited coverage, and are susceptible to errors and delays. These traditional methods also struggle to detect minor damage, such as small cracks and incipient potholes, which makes real-time road monitoring challenging. To address these issues and improve real-time road damage detection on the Street View Image Dataset for Automated Road Damage Detection (SVRDD), this study proposes DAPONet, a new deep learning model. DAPONet introduces three main innovations: (1) a dual attention mechanism that combines global context and local attention, (2) a multi-scale partial overparameterization module (CPDA), and (3) an efficient downsampling module (MCD). Experimental results on the public SVRDD dataset show that DAPONet reaches a mAP50 of 70.1%, surpassing YOLOv10n (an optimized version of YOLO) by 10.4%, while reducing the model size to 1.6 M parameters and the computational cost to 1.7 G FLOPs, decreases of 41% and 80%, respectively. Furthermore, the model achieves a mAP50-95 of 33.4% on the MS COCO2017 dataset, a 0.8% improvement over EfficientDet-D1, while reducing parameters and FLOPs by 74%.

1. Introduction

Road maintenance and management are vital for ensuring the safety and operational efficiency of urban areas []. However, existing road damage detection techniques depend primarily on manual inspections or vehicle-mounted sensors for data collection [,], which suffer from low detection efficiency, high costs, and complex data processing. Moreover, traditional approaches are less accurate in identifying minor damage (e.g., small cracks or early-stage potholes), which hampers timely detection and repair and raises the risk of traffic accidents. Meanwhile, as urbanization accelerates, road networks grow more complex, and vehicular traffic increases, the demands on road quality keep rising. Timely and accurate road damage detection can not only extend the service life of roads and reduce the frequency and cost of maintenance, but also prevent traffic accidents caused by road damage and protect the safety of public travel. At the same time, the construction of modern smart cities places higher requirements on real-time data processing and efficient response, which traditional detection methods have difficulty meeting.

In this context, road damage detection techniques based on artificial intelligence have significant advantages [,,]. These techniques can detect road damage more quickly and accurately, reduce manual intervention, and improve the efficiency of road maintenance, and they have therefore attracted the attention of many researchers. Existing AI methods for road damage detection can be roughly divided into four categories. (1) Methods based on traditional image processing [] use techniques such as image preprocessing, edge detection, and feature extraction to analyze road images and recognize damage. This type of method relies on hand-designed features and is sensitive to image quality and environmental conditions. (2) Techniques driven by machine learning [] perform classification and detection by extracting hand-crafted features and applying machine learning algorithms (e.g., support vector machines and random forests). Such methods offer greater flexibility in feature selection and algorithm optimization, but usually require large amounts of labeled data for training. (3) Deep learning-based detection models [,,,,,] comprise single-stage and two-stage detectors. Single-stage models, of which the You Only Look Once (YOLO) series is the classic example, integrate object localization and classification into a single network and complete detection in one forward pass, making them highly efficient for real-time tasks. Two-stage models (e.g., Faster R-CNN) automatically learn features and perform end-to-end damage detection and classification. Deep learning methods perform well in complex scenarios, with high detection accuracy and robustness. (4) Hybrid models that incorporate lightweight design and robustness enhancement [,,,,,,,,,,] aim to reduce model complexity and computational resource requirements while maintaining detection accuracy. Such models are suitable for resource-constrained devices and real-time detection applications, enhancing the feasibility and efficiency of practical deployment. Together, these methods greatly improve road damage detection and classification capabilities in diverse scenarios.

Although these methods have made significant progress in detection accuracy, model robustness, and computational efficiency, several challenges remain. For instance, processing complex contextual information and maintaining real-time detection speed while ensuring high accuracy continue to be significant obstacles. These issues highlight the need for further advances in the field, particularly in the efficient fusion of global and local contextual information to improve model performance in real-time applications. In response to these challenges, this paper proposes DAPONet, a Dual Attention and Partially Overparameterized Network for road damage detection. DAPONet is designed to process multi-scale features efficiently and to enhance the fusion of global and local information. The proposed model significantly improves detection speed and efficiency while maintaining high accuracy, making it suitable for real-time road damage detection in complex scenarios. The main contributions of this study are as follows:

1. A novel Global Localization and Context Attention (GLCA) mechanism is proposed, which enhances the model’s ability to handle complex backgrounds and multi-scale targets by integrating local and global attention.

2. A Cross-Stage Partial Depthwise Overparameterized Attention (CPDA) module is proposed, which combines partially overparameterized convolution with global and local context attention to process multi-scale features efficiently, significantly improving the detection accuracy and computational efficiency of the model.

3. A Mixed Convolutional Downsampling (MCD) module is proposed, which downsamples and processes feature maps via multiple parallel paths, enhancing both the diversity and efficiency of feature extraction.

4. A real-time detection model, the Dual Attention and Partially Overparameterized Network (DAPONet), is designed for road damage detection in complex scenes. By incorporating the dual attention mechanism, partially overparameterized convolution, and parallel downsampling, it significantly improves multi-scale feature extraction and fusion. The model is validated on the public SVRDD dataset and the MS COCO dataset, demonstrating its superiority.

The paper is organized as follows. Section 2 provides an overview of related work in object detection and road damage detection. Section 3 details the architecture and components of the proposed DAPONet model, including its dual attention mechanism, multi-scale overparameterization, and downsampling techniques. Section 4 describes the datasets used, the experimental environment, and the evaluation metrics. Section 5 presents the experimental results and evaluates the performance of DAPONet in relation to existing models. Finally, Section 6 concludes the paper and suggests directions for future research.

2. Related Work

2.1. Object Detection

In recent years, object detection techniques have developed rapidly, moving from traditional methods to deep learning, with significant progress driven by lightweight design, multi-scale feature optimization, and the Transformer []. Traditional methods rely on hand-crafted features and classifiers, which are inefficient and cope poorly with complex scenes. The rise of deep learning has led to breakthroughs in detection accuracy and speed, both in two-stage methods based on CNNs [] (e.g., the R-CNN series []) and in single-stage methods (e.g., the YOLO series []). Lightweight models such as MobileNet [,,] and YOLOv5 [] drastically reduce the number of parameters by introducing techniques such as depthwise separable convolution [], which improves real-time detection capability on edge devices. In addition, multi-scale feature fusion techniques (e.g., FPN [], NAS-FPN []) and attention mechanisms (e.g., ECA [], CBAM []) significantly improve the performance of small object detection. More recently, the application of the Transformer to object detection (e.g., DETR [], Swin Transformer [,]) has further enhanced multitasking capability and adaptation to complex scenes through multi-head attention and global modeling.

2.2. Attention Mechanism

In convolutional neural networks (CNNs), the attention mechanism improves model performance by strengthening the network’s focus on important features. Common attention modules such as SE [], CBAM [], ECA [], CA [], SimAM [], and GAM [] each optimize the feature representation in a different way, but they often suffer from incomplete information fusion, high computational overhead, and the separation of global and local information. To address these limitations, this paper proposes the GLCA module, which fuses global and local information simultaneously and enhances channel and spatial interactions to overcome the shortcomings of traditional mechanisms.

2.3. Lightweight Object Detection Models

To meet the requirements of edge devices for low computational complexity and high detection performance, researchers have proposed a variety of efficient lightweight object detection models. For example, Tiny-DSOD [] introduces Depthwise Dense blocks and a lightweight feature pyramid network (Depthwise FPN). ThunderNet [], a two-stage detector suitable for resource-constrained devices, uses a context enhancement module and a spatial attention module to improve feature representation. In addition, the lightweight versions of the YOLO series (such as YOLOv3-Tiny, YOLOv4-Tiny, and YOLObile) [] significantly reduce hardware resource consumption while maintaining high detection efficiency by optimizing the network structure, which promotes the development of object detection in edge computing environments. However, these methods still face challenges in detection stability and versatility in complex scenes, especially when dealing with multi-scale objects and complex background information. To solve these problems, we propose DAPONet, which combines a global and local attention mechanism, multi-scale partially overparameterized convolution, and a mixed convolutional downsampling module. This combination significantly enhances the model’s multi-scale feature extraction and fusion while reducing computational overhead, and it performs well in real-time detection tasks in complex scenes.

2.4. Road Damage Detection

In recent years, road damage detection methods have developed rapidly, moving from traditional image processing techniques to deep learning. YOLO-based detection methods include YOLO-DC proposed by Ren, X. et al. [], YOLOv7 BiFPN-G proposed by He, J. et al. [], MED-YOLOv8s proposed by Zhao, M. et al. [], MN-YOLOv5 proposed by Guo, G. et al. [], the optimized YOLOv8 model proposed by Jiang, Y. et al. [], the improved YOLOv8 model proposed by Wang, J. et al. [], YOLOv5s-DSG proposed by Xiang, W. et al. [], FG_YOLO proposed by Xie, X. et al. [], SCD proposed by Ding, K. et al. [], SCD-YOLO, YOLO9tr proposed by Youwai, S. et al. [], YOLO-LRDD proposed by Wan, F. et al. [], and YOLOv8-PD proposed by Zeng, J. et al. []. By improving the YOLO architecture and combining attention mechanisms, lightweight design, and feature fusion techniques, these methods significantly improve the accuracy and efficiency of road damage detection. However, many YOLO-based methods [] improve detection performance while their model complexity and computational cost remain high, limiting their application on resource-constrained devices. In addition, significant progress has been made in road damage detection methods based on traditional CNNs. Xu, H. et al. [] proposed CrdNet, a CNN-based cascade detection network; Safaei, N. et al. [] proposed an automatic image processing algorithm based on the pixel density of cracks; and Yan, K. et al. [] proposed a detection algorithm based on deformable SSDs []. Roul, R.K. et al. [] proposed an intelligent integrated detection method combining CNN and Extreme Learning Machine [], Cha, Y.-J. et al. [] proposed a CNN method without feature extraction, and He, Q. et al. [,] proposed LSF-RDD and LMFE-RDD, respectively. These methods improve the accuracy and robustness of detection by improving the network structure and introducing multi-scale feature fusion and innovative loss functions.
However, some of the methods still have limitations in dealing with complex backgrounds and diverse damage types, and the detection speed and real-time performance need to be further improved. In addition, lightweight and efficient road damage detection models have also become a research hotspot. Many scholars are committed to reducing the model parameters and computation volume while ensuring the detection performance so as to achieve real-time detection on edge devices. For example, Ren, X. et al. [] and He, J. et al. [] achieved efficient road crack detection by designing a lightweight YOLO model; MED-YOLOv8s proposed by Zhao, M. et al. [] and YOLO-LRDD proposed by Wan, F. et al. [] achieved efficient road crack detection by introducing a lightweight attention mechanism and optimizing the network structure, which substantially reduces the model complexity; YOLO9tr proposed by Youwai, S. et al. [] realizes real-time pavement damage detection by using an efficient feature aggregation network [] and attention mechanism. These lightweight methods significantly improve the inference speed of the model and the ease of deployment while ensuring higher detection accuracy, but the detection stability and generalization ability in extremely complex environments still need to be further optimized.

Although existing road damage detection methods have made significant progress in terms of detection accuracy and efficiency, they still have many shortcomings. First, while many YOLO-based models improve the detection performance, the model complexity and computation amount are still high, which limits their wide application on resource-constrained devices. Second, traditional deep learning methods have not yet reached the desired level of detection stability and generalization ability when dealing with complex backgrounds and diverse damage types, especially in extremely complex environments, and the performance of the models still needs to be improved. In addition, although the existing lightweight model reduces the number of parameters and the demand for computational resources to a certain extent, how to further improve the detection speed and real-time performance while ensuring high accuracy is still an urgent problem.

3. Methodology

This section describes the proposed model. We first explain the overall architecture and then detail the structure and function of each module, namely GLCA, CPDA, and MCD.

3.1. Overview

DAPONet achieves efficient and accurate feature extraction and analysis by combining multiple layers and modules. The network consists of three parts: the Backbone and the Neck, which perform initial feature extraction and further feature fusion, respectively, and the Head, which generates the final detection results.

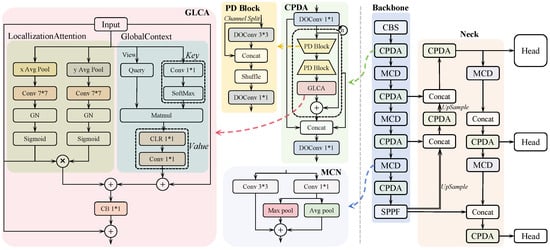

As shown in Figure 1, in the Backbone of DAPONet, the network extracts and processes key features from the input image layer by layer by combining multiple CPDA and MCD modules. The CPDA module captures multi-scale features through partial overparameterization to enhance the model representation. The MCD module compresses the feature map through downsampling to save computational resources for subsequent processing. In the Neck, DAPONet uses multiple CPDA and MCD modules to perform repeated up- and down-sampling and feature fusion on the features extracted by the Backbone, so that the model can analyze the image content accurately at different levels. The feature concatenation and up-sampling operations in the Neck further integrate features from different scales to generate a more detailed high-level feature map. This process ensures that the network can handle different levels of features simultaneously, providing strong support for object detection in complex scenarios. Finally, the Head of DAPONet transforms the high-level feature maps from the Neck into concrete detection results. With its multi-scale design, the Head processes feature maps from different scales simultaneously to generate detection results such as the location and category of the target.

Figure 1.

Overall framework of DAPONet.

3.2. Global Localization and Context Attention

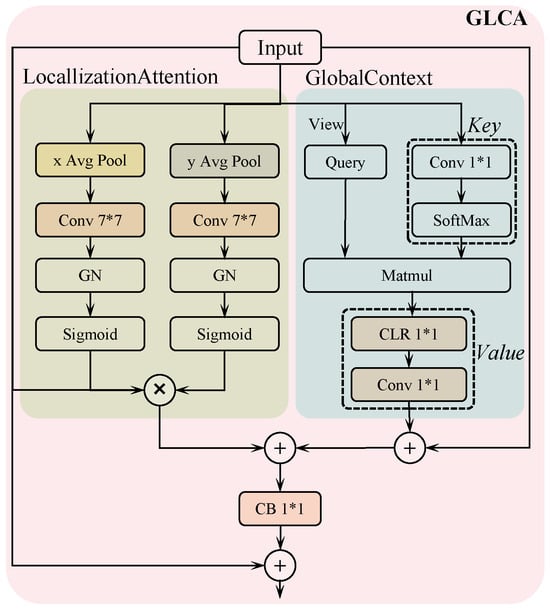

The GLCA module consists of two sub-modules: Efficient Localization Attention (ELA) [] for handling local context and the Global Context (GC) block for processing global context information.

As shown in Figure 2, the ELA module is responsible for capturing local salient features in the input feature maps and generating local attention weights by pooling and convolution operations in the X and Y directions, respectively, to ensure that the model can accurately focus on key regions in the image. Meanwhile, the GC module focuses on the capture and integration of global contextual information, generates a weighted feature map through matrix multiplication operations to represent the important information in the global scope, and further enhances the expressive capability of the feature map through nonlinear activation and normalization. Subsequently, the feature maps generated by the ELA and GC modules are fused by element-by-element summation to ensure the organic combination of local and global information, so that the model is able to capture detailed features while maintaining an overall grasp of the global structure. Finally, the fused feature maps are further processed by a 1 × 1 convolutional layer, which integrates and compresses the feature information, enhances the expressive ability of the feature maps and effectively reduces the dimensionality, thus making the subsequent computation more efficient.

Figure 2.

Global Localization and Context Attention.
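As a rough, framework-free illustration of the ELA branch, the sketch below applies directional average pooling, a one-dimensional convolution along each direction, and sigmoid gating to a single-channel feature map. It is a simplification under stated assumptions rather than the authors' implementation: a fixed averaging kernel stands in for the learned convolution weights, Group Normalization is omitted, and the names `conv1d_same` and `ela_attention` are hypothetical.

```python
import math

def conv1d_same(signal, kernel):
    """1D convolution with zero padding so the output length equals the input length."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def ela_attention(fmap, kernel_size=7):
    """Gate a single-channel H x W map with row-wise and column-wise attention."""
    h, w = len(fmap), len(fmap[0])
    # Directional average pooling: one value per row and one per column.
    row_pool = [sum(row) / w for row in fmap]
    col_pool = [sum(fmap[i][j] for i in range(h)) / h for j in range(w)]
    # Assumption: a fixed averaging kernel replaces the learned convolution,
    # and the normalization step is skipped.
    kernel = [1.0 / kernel_size] * kernel_size
    row_att = [sigmoid(v) for v in conv1d_same(row_pool, kernel)]
    col_att = [sigmoid(v) for v in conv1d_same(col_pool, kernel)]
    # Element-wise reweighting of the input by both directional attention maps.
    return [[fmap[i][j] * row_att[i] * col_att[j] for j in range(w)] for i in range(h)]
```

Because both gates lie in (0, 1), the output never exceeds the input in magnitude; rows and columns with stronger pooled responses are attenuated less.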

Specifically, in the ELA module, firstly, the input feature maps are average pooled in the X and Y directions, respectively, which reduces the data dimensions while retaining the global information by compressing the features along specific dimensions. Then, after 7 × 7 convolution operation, rich local features are extracted in specific directions, followed by normalization through Group Normalization (GN) [] to improve feature stability, and attention weights are generated through Sigmoid activation function to highlight important feature regions. Finally, the module multiplies the initial input feature map with the processed feature maps in the X- and Y-directions element by element, and uses the local attention mechanism in the spatial dimension to strengthen the salient regions in the input feature maps, so as to improve the model’s ability to identify key features. In the GC module, firstly, the input feature map is transformed into two different representations, Query and Key, where the Query part performs shape transformation on the input feature map, and the Key part extracts the features through a 1 × 1 convolutional layer and maps them into weight distributions by SoftMax function to ensure that the significant regions are effectively attended to on a global scale. Then, through a matrix multiplication (Matmul) operation, Query and Key interact to generate a weighted feature map that represents the global contextual information in the input feature map. This weighted feature map is then fed into a network consisting of the CLR module, which enhances the representation of the feature map through nonlinear activation and normalization, and the 1 × 1 convolution, which further refines the feature map. 
Finally, the Global Context Block sums the original input feature map and the generated Value feature map element by element, combining the original features with the global context information and thus improving the overall detection and recognition capability of the model. Within this block, CLR first applies a convolution to the input $X$ to obtain $\mathrm{Conv}(X) = W * X + b$, and then applies layer normalization to the result: the mean $\mu$ and standard deviation $\sigma$ of $\mathrm{Conv}(X)$ are computed, and $\mathrm{LN} = \gamma \cdot \frac{\mathrm{Conv}(X) - \mu}{\sigma} + \beta$. Finally, the ReLU activation function is applied, $\mathrm{CLR}(X) = \max(0, \mathrm{LN})$,

where LN refers to layer normalization, Conv refers to the convolution operation, $X$ is the input data, $W$ is the weight matrix of the convolutional layer, and $b$ is the bias term added after convolution. $\gamma$ and $\beta$ are the scale and shift parameters of the normalization.
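The GC branch can be sketched in plain Python as follows. This is a minimal illustration assuming the usual global-context formulation: a 1 × 1 convolution and SoftMax produce one attention weight per spatial position (Key), a matrix product with the reshaped input (Query) gives one context value per channel, and a convolution, layer normalization, ReLU, and a further 1 × 1 convolution produce the Value that is added back to the input. Bias terms are omitted, the normalization scale and shift default to 1 and 0, and all function names and weight arguments are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def layer_norm_relu(vec, gamma=1.0, beta=0.0, eps=1e-5):
    """Layer normalization followed by ReLU: max(0, gamma * (v - mu) / sigma + beta)."""
    mu = sum(vec) / len(vec)
    var = sum((v - mu) ** 2 for v in vec) / len(vec)
    sigma = math.sqrt(var + eps)
    return [max(0.0, gamma * (v - mu) / sigma + beta) for v in vec]

def matvec(weights, vec):
    """A 1 x 1 convolution on a C x 1 x 1 tensor is a matrix-vector product."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]

def global_context_block(x, key_w, w1, w2):
    """x holds C channels, each a flat list of N spatial positions."""
    c, n = len(x), len(x[0])
    # Key: 1 x 1 convolution to one score per position, then softmax over positions.
    scores = [sum(key_w[ch] * x[ch][p] for ch in range(c)) for p in range(n)]
    attn = softmax(scores)
    # Matmul of the reshaped input (Query) with the weights: one context value per channel.
    context = [sum(x[ch][p] * attn[p] for p in range(n)) for ch in range(c)]
    # Conv -> layer norm -> ReLU, then a second 1 x 1 convolution gives the Value.
    value = matvec(w2, layer_norm_relu(matvec(w1, context)))
    # Residual connection: broadcast-add the per-channel Value to every position.
    return [[x[ch][p] + value[ch] for p in range(n)] for ch in range(c)]
```

With an all-zero second convolution the block reduces to the identity, which is a convenient sanity check when wiring it into a larger network.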

3.3. Cross-Stage Partial Depthwise Overparameterized Attention Module

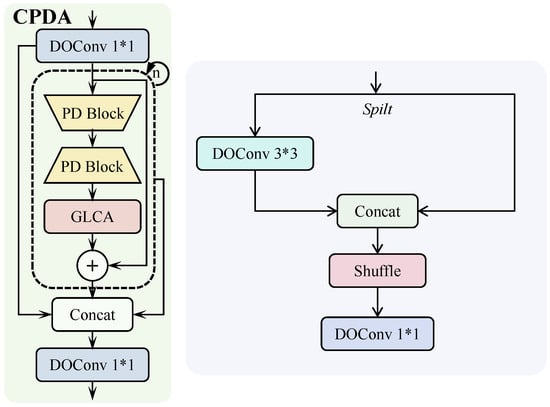

The CPDA is a core module in the DAPONet architecture designed for efficient processing of multi-scale feature extraction in images.

As shown in Figure 3, the CPDA module first processes the input feature map through a 1 × 1 DOConv convolutional layer [], which enhances the diversity and richness of the features using depthwise separable convolution and partial overparameterization. Then, two PD Blocks (Partial Depthwise Partially Overparameterized Convolutional Blocks) are connected in series within the module to capture additional contextual information by expanding the receptive field, which is particularly beneficial for handling complex-shaped features. Each PD Block focuses primarily on extracting local features using a 3 × 3 DOConv convolution. Additionally, the PD Block incorporates Split, Concat, and Shuffle operations to enhance feature interaction between channels. Finally, feature integration is achieved through a 1 × 1 DOConv convolution, ensuring that the extracted features are effectively combined and enhanced.

Figure 3.

Cross-Stage Partial Depthwise Partially Overparameterized Attention module.

Subsequently, the feature map enters the GLCA module, which further strengthens the expressive power of the feature map by combining the attention mechanism of global and local context information, ensuring that the output contains rich details, as well as reflecting the global contextual relationships. The processed feature map is fused with the previous feature information by element-by-element summation, which preserves and integrates the important features at each stage. Finally, the fused feature map is further processed by feature concatenation and another DOConv 1 × 1 convolutional layer to compress the feature dimensions and integrate the information.
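The Split, Concat, and Shuffle operations inside a PD Block can be illustrated with a small sketch that treats a feature map as a list of channels. The text does not state the split ratio or which part is convolved, so the flow below (convolve the first half, pass the rest through, then concatenate and shuffle) is an assumption in the style of partial convolution, and `transform` is a hypothetical stand-in for the 3 × 3 DOConv.

```python
def channel_split(channels, ratio=0.5):
    """Split the channel list into an active part and a pass-through part."""
    k = int(len(channels) * ratio)
    return channels[:k], channels[k:]

def channel_shuffle(channels, groups=2):
    """Interleave channels from `groups` equal groups so information mixes across them."""
    n = len(channels)
    if n % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    # Equivalent to reshaping to (groups, per_group), transposing, and flattening.
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]

def pd_block(channels, transform):
    """Assumed PD Block flow: split, transform one part, concatenate, shuffle."""
    active, passive = channel_split(channels)
    processed = [transform(ch) for ch in active]
    return channel_shuffle(processed + passive)
```

For example, `channel_shuffle([0, 1, 2, 3, 4, 5], groups=2)` returns `[0, 3, 1, 4, 2, 5]`, so transformed and untouched channels alternate and the next block's split sees a mix of both.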

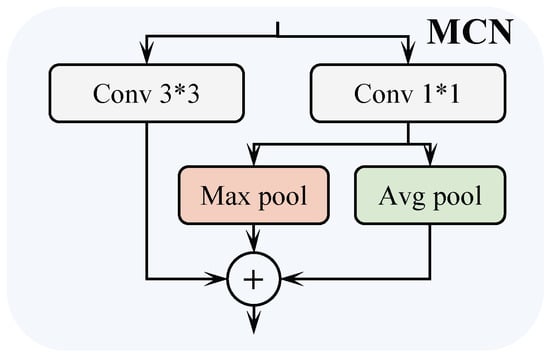

3.4. Mixed Convolutional Downsampling

As illustrated in Figure 4, the MCD module processes the input feature maps through several parallel paths, employing 3 × 3 and 1 × 1 convolutions, max pooling, and average pooling to capture information across different scales. In the first path, the 3 × 3 convolution has a larger receptive field, which captures the details and edge information in the feature map. The second path applies a linear transformation to the input channels with a 1 × 1 convolution, which preserves local information while reducing computational complexity. The max pooling operation in the third path reduces the resolution of the feature map by selecting the maximum value within each pooling window, which preserves salient features and removes redundant information. The fourth path uses average pooling to preserve the overall information of the feature map, which suits features with smooth variations.

Figure 4.

Mixed convolutional downsampling.
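The four parallel paths can be sketched on a single-channel map as follows. The text does not specify the stride or how the path outputs are merged, so this sketch assumes stride 2 on every path and returns the four results as separate output channels (that is, channel concatenation). The kernel values are placeholders for learned weights, and all function names are hypothetical.

```python
def pool2x2(fmap, reduce_fn):
    """Non-overlapping 2 x 2 pooling (stride 2) on an H x W map with even H and W."""
    h, w = len(fmap), len(fmap[0])
    return [
        [reduce_fn([fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1]])
         for j in range(0, w, 2)]
        for i in range(0, h, 2)
    ]

def conv_stride2(fmap, kernel):
    """k x k convolution with zero padding and stride 2."""
    h, w, k = len(fmap), len(fmap[0]), len(kernel)
    pad = k // 2

    def at(i, j):
        return fmap[i][j] if 0 <= i < h and 0 <= j < w else 0.0

    return [
        [sum(kernel[a][b] * at(i + a - pad, j + b - pad)
             for a in range(k) for b in range(k))
         for j in range(0, w, 2)]
        for i in range(0, h, 2)
    ]

def mean(values):
    return sum(values) / len(values)

def mixed_conv_downsample(fmap, k3, k1):
    """Return the four half-resolution maps, one per path, as output channels."""
    return [
        conv_stride2(fmap, k3),      # path 1: 3 x 3 convolution
        conv_stride2(fmap, [[k1]]),  # path 2: 1 x 1 convolution
        pool2x2(fmap, max),          # path 3: max pooling
        pool2x2(fmap, mean),         # path 4: average pooling
    ]
```

Each path halves the spatial resolution, so a 4 × 4 input yields four 2 × 2 maps that a subsequent layer can fuse.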

3.5. Loss Function

In this subsection, the loss function used in this paper will be introduced.

The object detection loss function combines several terms. $\lambda_{box}$, $\lambda_{cls}$, and $\lambda_{obj}$ are the weight coefficients of each part of the loss function, which balance the impact of the bounding box regression, classification, and objectness losses. $y_i$ is the true class label for the $i$th class (taking the value 0 or 1), and $p_i$ is the probability that the model predicts for this class. $o_j$ indicates the presence of the $j$th target (1 if present, 0 if absent), and $\hat{o}_j$ is the probability with which the model predicts the presence of the target. $(x, y)$ is the center coordinate of the predicted box, and $(x^{gt}, y^{gt})$ is the center coordinate of the true box. $w^{gt}$ and $h^{gt}$ are the width and height of the true box, respectively, while $w$ and $h$ are the width and height of the predicted box. Together, they are used to calculate the aspect ratio loss and intersection over union (IoU) metrics, which help the model better locate the boundary of the object.
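To make these quantities concrete, the sketch below computes a weighted sum of a box regression loss, a classification loss, and an objectness loss. It assumes that the regression term is the Complete IoU (CIoU) loss, which is consistent with the center coordinates, widths, heights, and aspect ratio term described above, and that the classification and objectness terms are binary cross-entropy. The exact formulation is not given in the text, so the function names and the default weights of 1.0 are illustrative assumptions only.

```python
import math

def bce(targets, probs, eps=1e-9):
    """Mean binary cross-entropy between 0/1 targets and predicted probabilities."""
    return -sum(
        t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
        for t, p in zip(targets, probs)
    ) / len(targets)

def ciou_loss(pred, truth, eps=1e-9):
    """CIoU loss for two boxes given as (center_x, center_y, width, height)."""
    x, y, w, h = pred
    xg, yg, wg, hg = truth
    # Overlap of the two boxes along each axis.
    inter_w = max(0.0, min(x + w / 2, xg + wg / 2) - max(x - w / 2, xg - wg / 2))
    inter_h = max(0.0, min(y + h / 2, yg + hg / 2) - max(y - h / 2, yg - hg / 2))
    inter = inter_w * inter_h
    iou = inter / (w * h + wg * hg - inter + eps)
    # Squared center distance, normalized by the squared diagonal of the enclosing box.
    enclose_w = max(x + w / 2, xg + wg / 2) - min(x - w / 2, xg - wg / 2)
    enclose_h = max(y + h / 2, yg + hg / 2) - min(y - h / 2, yg - hg / 2)
    center_term = ((x - xg) ** 2 + (y - yg) ** 2) / (enclose_w ** 2 + enclose_h ** 2 + eps)
    # Aspect ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(wg / hg) - math.atan(w / h)) ** 2
    alpha = v / (1 - iou + v + eps)
    return 1 - iou + center_term + alpha * v

def total_loss(box, cls, obj, w_box=1.0, w_cls=1.0, w_obj=1.0):
    """Weighted sum of the three loss components (weights are placeholders)."""
    return w_box * box + w_cls * cls + w_obj * obj
```

The CIoU term is close to zero for identical boxes and exceeds 1 for disjoint boxes, because the center distance penalty still provides a signal when the IoU itself is zero.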

4. Experimental Details

This section provides a brief overview of the experimental setup and associated resources. Following this, the experimental dataset, setup, and evaluation metrics are discussed in sequence.

4.1. Datasets

Table 1 summarizes the SVRDD and MS COCO datasets, and each dataset is described in detail below.

Table 1.

Details of SVRDD and MS COCO datasets.

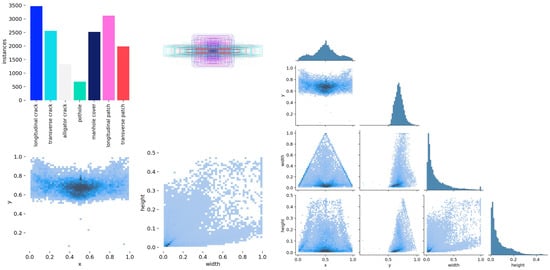

(1) The Street View Image Dataset for Automated Road Damage Detection (SVRDD) [] is a dataset designed for road damage detection using street view images. It is the first publicly available street view image dataset dedicated to road damage detection, and consists of 8000 street view images from the Dongcheng, Xicheng, Haidian, Chaoyang, and Fengtai Districts of Beijing. These images cover a wide range of urban road types and pavement conditions and contain a total of 20,804 damage instances annotated with bounding boxes. Seven categories of pavement damage are included: longitudinal cracks, transverse cracks, alligator cracks, potholes, longitudinal patches, transverse patches, and manhole covers. The images were captured across multiple seasons, weather conditions, and lighting conditions, and the backgrounds contain pedestrians, vehicles, buildings, viaducts, trees, and their shadows, adding to the challenge of detection. A detailed analysis of the dataset is shown in Figure 5. Potholes and alligator cracks are less common, as they typically occur after severe road aging. Among the five regions, Chaoyang District has the lowest average number of damage instances. Damage centers are mainly located in the lower half of the images, while the upper half generally serves as the background. Due to perspective effects, the road areas in the upper half are narrower, making damage detection more challenging. The height-width distribution shows that damage widths can span the entire image, whereas lengths reach up to half the image height. Longitudinal and transverse cracks and patches are similarly distributed, occupying up to 10% of the image area. Alligator cracks cover larger areas, up to 50%, while potholes and manhole covers mostly occupy less than 0.5% of the image area.

Figure 5.

Visualization of detailed analysis of the SVRDD dataset.

(2) The Microsoft Common Objects in Context (MS COCO) dataset [] is a crucial benchmark in the field of computer vision, widely utilized for tasks such as object detection, image segmentation, and image annotation. The COCO dataset encompasses 80 common object classes across more than 330,000 images, with approximately 200,000 images providing detailed annotation information. These annotations include object bounding boxes, pixel-level segmentation masks, human keypoints, and image descriptions. Due to its complex scenarios, diverse annotation types, and high-quality data, COCO has become a standard platform for evaluating the performance of deep learning models, and has significantly contributed to the advancement of computer vision technology.

4.2. Experimental Environment

The experiments were conducted on a Windows 11 system with an NVIDIA GeForce RTX 3090 graphics card (24 GB). The deep learning framework was PyTorch 2.0.1 with CUDA 11.8, Jupyter Notebook served as the development environment, and Python 3.8 was the programming language. All algorithms used for comparison were executed in the same computational environment for consistency. Images were resized to 640 × 640 × 3, with a batch size of 32. The optimizer was SGD, the learning rate was set to 0.0937, and the model was trained for 300 epochs.

4.3. Evaluation Metrics

In this study, we used four key metrics to evaluate the performance of the detection model: Precision (P), Recall (R), mAP50, and mAP50:95. In addition, to measure how lightweight the model is, we compare the number of model parameters (Params), FLOPs, and Model Size (Size).

where TP refers to true positives, i.e., the number of samples that the model correctly predicts as positive; FP refers to false positives, i.e., the number of samples that the model incorrectly predicts as positive; and FN refers to false negatives, i.e., the number of samples that the model incorrectly predicts as negative. N is the total number of categories in the detection task. $AP_t$ represents the average precision (AP) at a specific recall (R) or intersection over union (IoU) threshold t. R is the recall. L denotes the number of layers of the model. The weights refer to the weight parameters associated with each layer, and the bias refers to the bias parameters in each layer. The Model Size is the total size of all parameters in bytes, where each parameter is represented as a floating-point number.
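A minimal sketch of how these quantities are commonly computed is given below. It uses the standard definitions of precision and recall, an all-point interpolated area under the precision-recall curve for AP, and a mean over classes for mAP. The parameter count sums weights and biases over layers, and the model size assumes 32-bit (4-byte) floating-point parameters, which is an assumption since the text does not state the precision. Function names are illustrative.

```python
def precision(tp, fp):
    """P = TP / (TP + FP)."""
    return tp / (tp + fp) if tp + fp else 0.0

def recall(tp, fn):
    """R = TP / (TP + FN)."""
    return tp / (tp + fn) if tp + fn else 0.0

def average_precision(recalls, precisions):
    """Area under the precision-recall curve with all-point interpolation.

    `recalls` must be sorted in increasing order, with matching `precisions`.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))

def mean_average_precision(ap_per_class):
    """mAP = (1 / N) * sum of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)

def count_params(layers):
    """Sum of weights and biases over layers given as (num_weights, num_biases)."""
    return sum(weights + biases for weights, biases in layers)

def model_size_bytes(num_params, bytes_per_param=4):
    """Storage size assuming each parameter is a 32-bit float by default."""
    return num_params * bytes_per_param
```

Under the 4-byte assumption, a model with 1.6 M parameters occupies about 6.4 MB of parameter storage. mAP50 averages the per-class AP at an IoU threshold of 0.5, while mAP50:95 additionally averages over IoU thresholds from 0.5 to 0.95.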

5. Experimental Results, Discussion, and Analysis

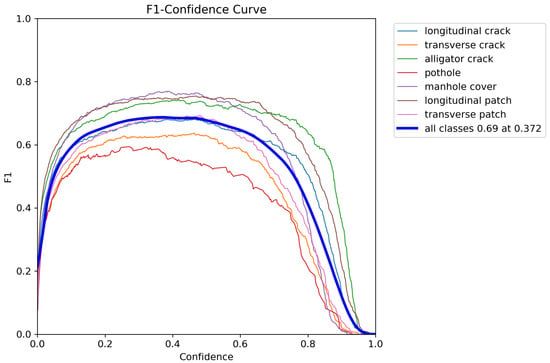

To test the effectiveness of the proposed DAPONet, we first compare its road damage identification on the SVRDD dataset with that of the current mainstream object detection models YOLOv5 [], YOLOv8 [], YOLOv9 [], and YOLOv10 [], analyzing the advantages of DAPONet in detecting various types of damage. Comparison tests are then conducted on the SVRDD dataset to evaluate the model through seven metrics, namely P, R, mAP50, mAP50-95, Params, FLOPs, and Model Size, to further demonstrate its advantages in detection accuracy and computational efficiency. Subsequently, the confusion matrix is used to evaluate the classification performance of the model across the damage types and to identify its strengths and room for improvement. The performance of the model under different confidence thresholds is further analyzed through the F1-Confidence curve to find the confidence level at which the model achieves the best balance between precision and recall. The general object detection performance of DAPONet is then validated on the MS COCO2017 dataset and compared with other lightweight models to assess its adaptability and robustness in resource-constrained environments. Finally, ablation studies are conducted to verify the validity of the individual modules proposed in this paper and their specific contributions to model performance.

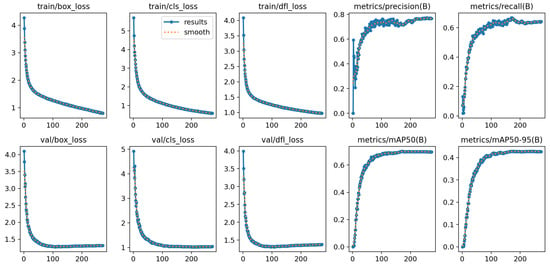

Figure 6 records the bounding-box loss, classification loss, and focal loss, together with the precision, recall, and average precision of the model, for each iteration on the training and test sets. At the beginning of training, all losses are high and decrease quickly while the metrics rise rapidly, indicating that the model is learning efficiently at this stage. As the number of iterations increases, the rate of change in each curve gradually decreases; after about 40 iterations the curves begin to flatten, and by about 300 iterations they remain essentially stable.

Figure 6.

Training losses and evaluation metrics of DAPONet on the SVRDD dataset over the training iterations.

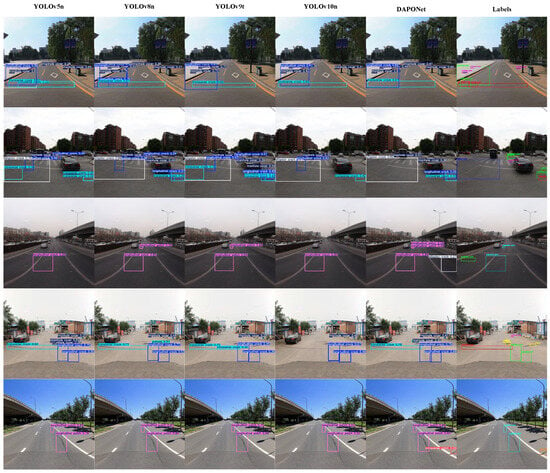

5.1. Comparative Experiments

Figure 7 shows several representative sets of detection results of each model in real-world scenarios, which make the differences between the models in detecting road damage clearly visible. YOLOv5n is relatively stable in detecting cracks and manhole covers, but it has limited ability to identify small cracks in some complex scenes and misses many of them. This indicates that its network structure has limitations in handling small objects, particularly because its feature extraction and fusion are not fine-grained enough. YOLOv8n improves detection accuracy, especially for long cracks and patches, but it still misses targets against some complex backgrounds, which indicates that its generalization ability needs further improvement. YOLOv9t detects faster, but its accuracy decreases in some scenes, especially for fine cracks, which indicates that it sacrifices part of its accuracy in exchange for speed. YOLOv10n performs better on cracks and patches covering larger areas, but false detections and missed detections are more noticeable in some cases.

Compared with these models, DAPONet performs markedly better. Its CPDA module captures multi-scale information about the detection target and thus accurately identifies small cracks, patches, and other fine damage, which compensates for the limitations of YOLOv5n on small objects. At the same time, the efficient downsampling of MCD preserves the model’s accuracy while improving inference speed, which compensates for the shortcomings of YOLOv9t. Moreover, DAPONet accurately identifies various types of road damage in a range of complex scenes, and its generalization ability and stability are noticeably better than those of YOLOv8n and YOLOv10n.

Figure 7.

Visual detection results of the compared models on the SVRDD dataset.

To assess the performance of the proposed model, we compare it with YOLOv5, YOLOv8, YOLOv9, and YOLOv10 models trained on SVRDD in terms of P, R, mAP50, mAP50-95, Params, FLOPs, and Model Size; the experimental results are shown in Table 2. DAPONet’s P, R, mAP50, and mAP50-95 are better than those of the other models, reaching 71.6%, 66.6%, 70.1%, and 42.8%, respectively. The advantage in mAP is the most obvious: the mAP50 of DAPONet is 8.4, 5.6, 9.3, and 10.4 percentage points higher than that of YOLOv5n, YOLOv8n, YOLOv9t, and YOLOv10n, respectively, and its mAP50-95 is 7, 5, 6.7, and 7 percentage points higher. This indicates that DAPONet maintains high detection performance across different IoU thresholds, which gives it a strong advantage in complex, multi-scale damage detection tasks. While maintaining high accuracy, DAPONet also has significantly lower complexity than the other models, with only 1.6 M parameters, 1.7 G FLOPs, and a 3.7 MB model size, the lowest among all compared models.

These metrics also translate into practical advantages for real-world deployment. The high mAP50 matters for applications that require precise identification of road damage, such as autonomous vehicles and mobile-based inspections, where detection accuracy directly affects reliability. The reduced FLOPs and small model size enable real-time processing on devices with limited computational resources, such as mobile phones, edge devices, and on-site inspection tools. Consequently, DAPONet is well suited to diverse road damage detection scenarios, including edge computing and mobile deployments.

Table 2.

Comparative experimental results on the SVRDD test set.

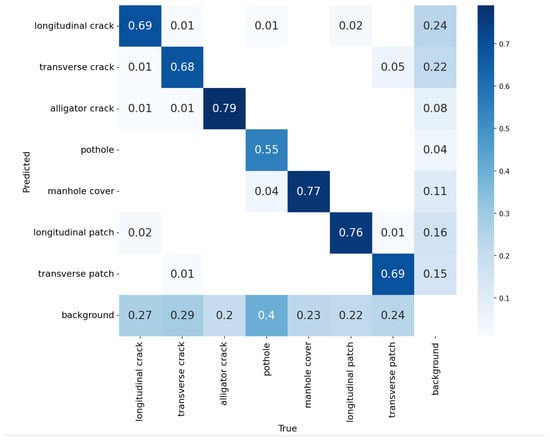

The confusion matrix in Figure 8 illustrates the model’s classification performance across the defect types. The model performs strongly on alligator cracks, manhole covers, and longitudinal patches, with accuracies of 0.79, 0.77, and 0.76, respectively. The accuracy for longitudinal cracks, transverse cracks, and transverse patches is slightly lower, at 0.69, 0.68, and 0.69, respectively, but the model still performs reliably on these classes. Overall, these results indicate that the model effectively identifies both prominent and subtle defects such as cracks, patches, and manhole covers. Pothole detection is weaker, however, with an accuracy of only 0.55, and a large portion of potholes are incorrectly identified as background. A likely reason is that pothole features are difficult for the model to extract, especially when a pothole closely resembles its surroundings in shape, size, texture, and color. Background regions are also misclassified as damage to varying degrees, which indicates that the model still has considerable room for improvement in both pothole identification and background discrimination.

Figure 8.

The confusion matrix demonstrates the classification performance of the model in identifying various types of defects.
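As a rough illustration of how per-class accuracies and background confusions like those discussed above can be read from a confusion matrix, the following Python sketch normalizes each true-class row by its total. The three-class layout, the label names, and all counts are hypothetical toy values, not the paper’s data, and the row-equals-true-class orientation is an assumption (some tools transpose it).

```python
from typing import Dict, List


def per_class_rates(confusion: List[List[int]], labels: List[str]) -> Dict[str, float]:
    """Fraction of each true class predicted correctly (diagonal / row total).

    confusion[i][j] counts samples whose true class is labels[i] and whose
    predicted class is labels[j].
    """
    rates = {}
    for i, name in enumerate(labels):
        total = sum(confusion[i])
        rates[name] = confusion[i][i] / total if total else 0.0
    return rates


def most_common_confusion(confusion: List[List[int]], labels: List[str], cls: str) -> str:
    """Return the wrong label most often predicted for true class `cls`."""
    i = labels.index(cls)
    off_diagonal = [(count, labels[j]) for j, count in enumerate(confusion[i]) if j != i]
    return max(off_diagonal)[1]


# Hypothetical counts; "background" as a predicted class means a missed detection.
labels = ["crack", "pothole", "background"]
confusion = [
    [70, 5, 25],   # true crack
    [3, 55, 42],   # true pothole
    [10, 8, 0],    # true background (false detections)
]

print(per_class_rates(confusion, labels))
print(most_common_confusion(confusion, labels, "pothole"))
```

In this toy matrix the pothole row loses most of its mass to the background column, which is the same qualitative pattern the text describes for Figure 8.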

As shown in Figure 9, the F1-Confidence Curve describes the performance of the model in identifying the various types of road damage under different confidence thresholds. As the confidence threshold increases, the F1 score first rises and then falls. A higher threshold makes the model select predictions more strictly, so precision gradually increases; however, some correct predictions are also excluded, so recall decreases, and F1 eventually drops. The curve shows that at a confidence level of 0.321, the average F1 score over all categories reaches its maximum value of 0.62. At this point the model achieves the best balance between precision and recall, and therefore the best overall trade-off between false detections and missed detections.

Figure 9.

F1-Confidence Curve of DAPONet on SVRDD dataset.
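The threshold selection behind an F1-Confidence Curve can be sketched as a simple sweep. The Python sketch below assumes each detection has already been matched against the ground truth and is represented as a `(confidence, is_true_positive)` pair; the detections, the ground-truth count, and the threshold grid are hypothetical toy values, not the paper’s data.

```python
from typing import List, Sequence, Tuple

Detection = Tuple[float, bool]  # (confidence, is_true_positive)


def f1_at_threshold(detections: List[Detection], num_ground_truth: int, threshold: float) -> float:
    """F1 score when only detections with confidence >= threshold are kept."""
    kept = [is_tp for conf, is_tp in detections if conf >= threshold]
    tp = sum(kept)
    fp = len(kept) - tp
    p = tp / (tp + fp) if kept else 0.0
    r = tp / num_ground_truth if num_ground_truth else 0.0
    return 2 * p * r / (p + r) if (p + r) > 0 else 0.0


def best_threshold(detections: List[Detection], num_ground_truth: int,
                   thresholds: Sequence[float]) -> float:
    """Threshold on the grid with the highest F1 (first one wins on ties)."""
    return max(thresholds, key=lambda t: f1_at_threshold(detections, num_ground_truth, t))


# Hypothetical matched detections for 5 ground-truth objects.
detections = [
    (0.9, True), (0.8, True), (0.7, False), (0.6, True),
    (0.4, False), (0.3, True), (0.2, False), (0.1, False),
]
thresholds = [i / 10 for i in range(1, 10)]

t_best = best_threshold(detections, 5, thresholds)
print(t_best, f1_at_threshold(detections, 5, t_best))
```

A real evaluation would sweep a much finer grid and average the per-class curves, which is how a single operating point such as the one reported in Figure 9 is typically obtained.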

As shown in Table 3, to verify the performance of the proposed model in general-purpose scenarios, we compare DAPONet trained on the MS COCO2017 dataset with the lightweight models NanoDet-Plus [], DPNet [], PP-PicoDet [], EfficientDet [], and YOLOv5n on the MS COCO2017 validation set. The results demonstrate the strong performance of the model on generic object detection.

Table 3.

Experimental results on the MS COCO2017 validation set.

5.2. General Object Detection Experiments

On the MS COCO2017 validation set, DAPONet performs well on all metrics. Its mAP50 and mAP50-95 are the highest among the compared models, reaching 48.3% and 33.4%, respectively. Although its 1.6 M parameters and 1.7 G FLOPs are slightly higher than those of some lightweight models, DAPONet delivers higher accuracy. Additionally, DAPONet’s model size of just 3.6 MB is smaller than that of most models, which improves its suitability for resource-limited environments such as mobile devices and embedded systems. In contrast, models such as NanoDet-Plus-m-1.5x and DPNet have fewer parameters and FLOPs but cannot match the detection accuracy of DAPONet. Meanwhile, EfficientDet-D1, although much larger than DAPONet in terms of FLOPs and model size, achieves a mAP50-95 of only 32.6%, indicating limited performance gains despite significantly higher computational demands.

5.3. Ablation Study

To further verify the effectiveness of the two modules (CPDA and MCD), we conducted ablation studies on the MS COCO2017 and SVRDD datasets using YOLOv8n as the baseline, and the results are shown in Table 4. The Recall (R) and mAP50 of the original YOLOv8n model are 59.3% and 64.5%, respectively. When only the CPDA module is introduced, the Precision decreases slightly, but the Recall improves from 59.3% to 62.1%, an increase of 2.8 percentage points, and the mAP50 improves from 64.5% to 66.1%, an increase of 1.6 percentage points. Params, FLOPs, and Model Size are all reduced; in particular, FLOPs fall from 8.1 G to 4.6 G, a reduction of about 43%. This verifies the efficient multi-scale extraction capability of the CPDA module. When only the MCD module is introduced, the Recall and mAP50 of the model also improve: the Recall increases from 59.3% to 60.8%, a gain of 1.5 percentage points, and the mAP50 increases from 64.5% to 65.2%, a gain of 0.7 percentage points, while Params, FLOPs, and Model Size are again reduced. This demonstrates the efficient downsampling effect of the MCD module. When the two modules are combined, the model reaches its best performance: precision rises from 70.7% for the YOLOv8n baseline to 71.6%, recall improves from 59.3% to 66.6%, an increase of 7.3 percentage points, and mAP50 and mAP50-95 increase from 64.5% and 37.8% to 70.1% and 42.8%, respectively. Params, FLOPs, and Model Size are all reduced significantly, with FLOPs falling from 8.1 G to 1.7 G, a reduction of about 79%. It can be inferred that both CPDA and MCD play an indispensable role in DAPONet.

The combination of the two enables DAPONet to effectively capture the multi-scale features of the detected targets while processing these features efficiently and fusing global and local information, which in turn significantly improves the detection speed while preserving the accuracy of the model.

Table 4.

Ablation study results on the SVRDD test set, where the baseline model is YOLOv8n.
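The gains and reductions quoted in the ablation discussion are simple arithmetic on the reported values, which the following Python sketch reproduces. It distinguishes absolute gains in percentage points from relative reductions in percent; the helper names and dictionary layout are assumptions for illustration, while the numbers are those quoted in the text.

```python
def point_gain(baseline: float, new: float) -> float:
    """Absolute change between two percentages, in percentage points."""
    return new - baseline


def relative_reduction(baseline: float, new: float) -> float:
    """Relative decrease from baseline to new, in percent."""
    return (baseline - new) / baseline * 100.0


# Values quoted in the ablation discussion (YOLOv8n baseline vs. full DAPONet).
baseline = {"P": 70.7, "R": 59.3, "mAP50": 64.5, "mAP50-95": 37.8, "GFLOPs": 8.1}
daponet = {"P": 71.6, "R": 66.6, "mAP50": 70.1, "mAP50-95": 42.8, "GFLOPs": 1.7}

for metric in ("P", "R", "mAP50", "mAP50-95"):
    gain = point_gain(baseline[metric], daponet[metric])
    print(f"{metric}: +{gain:.1f} percentage points")

# FLOPs reduction for the full model and for the CPDA-only variant (4.6 G).
print(f"FLOPs (CPDA + MCD): -{relative_reduction(baseline['GFLOPs'], daponet['GFLOPs']):.1f}%")
print(f"FLOPs (CPDA only):  -{relative_reduction(baseline['GFLOPs'], 4.6):.1f}%")
```

Keeping the two kinds of change separate avoids ambiguity, since a "7.3% increase" in recall could otherwise be read as either an absolute or a relative change.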

5.4. Discussion on Dataset Biases and Limitations

The SVRDD dataset used in this study is heavily based on urban road conditions, particularly those in specific regions such as Beijing districts. As a result, a model trained on this dataset may exhibit biases toward the types of road damage and environmental conditions prevalent in urban areas, such as the road cracks, potholes, and surface wear common on heavily trafficked city streets. This could limit the model’s ability to generalize effectively to rural roads or less-developed regions, where road conditions and types of damage might differ significantly. For example, rural roads often have different surface types, wear patterns, and environmental factors (e.g., less frequent maintenance, varying weather conditions) that may not be adequately represented in the SVRDD dataset. Additionally, the dataset may not fully cover seasonal variations in road damage, such as cracks caused by freezing and thawing or damage due to extreme weather conditions like heavy rains or flooding.

5.5. Error Analysis

Although DAPONet performs well in terms of detection performance and computational efficiency, surpassing many mainstream object detection models in the balance of accuracy and speed, it still has some shortcomings. First, missed detections and false detections are more prominent for small objects (e.g., potholes and cracks) and complex backgrounds. This is especially true for pothole detection, where the model has difficulty distinguishing potholes from similar-looking background regions, resulting in lower detection accuracy. In addition, although DAPONet’s multi-scale feature extraction and efficient downsampling modules (CPDA and MCD) improve precision and efficiency, its ability to separate background from targets still needs to be strengthened in some complex scenarios. The analysis of the F1-Confidence Curve shows that balancing precision and recall across confidence levels remains challenging, and improving recall while maintaining high precision is still a focus of future optimization. Meanwhile, DAPONet’s computational cost is higher than that of some less accurate models, so further optimizing its computational efficiency on resource-constrained devices remains an open problem.

To address the challenges identified in the error analysis, we propose several concrete strategies. First, to enhance the detection of small and background-similar objects such as potholes, we propose applying a class-weighted loss function that gives higher importance to the pothole class during training, which encourages the model to focus more on correctly identifying and localizing such objects. Second, transfer learning from models trained on large-scale datasets such as MS COCO or ImageNet can help DAPONet learn more generalized features and improve its ability to recognize diverse types of damage; fine-tuning a pre-trained model specifically for road damage detection lets DAPONet leverage knowledge from a broader dataset while adapting to the specific characteristics of road distress. Third, pruning the model (removing less significant weights and parameters) and applying techniques such as quantization can reduce the size and computational demands of DAPONet without significantly compromising performance, making the model more suitable for deployment on resource-constrained devices.
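To make the class-weighted loss idea concrete, the following Python sketch implements a weighted cross-entropy for the classification term only, normalized by the sum of the sample weights. It is an illustrative assumption rather than DAPONet’s actual loss: the two-class setup, the logits, and the weight of 3.0 for the pothole class are all hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one sample's class logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_cross_entropy(batch_logits: Sequence[Sequence[float]],
                           targets: Sequence[int],
                           class_weights: Sequence[float]) -> float:
    """Cross-entropy in which each sample is scaled by the weight of its true class.

    The result is normalized by the sum of the applied weights, so uniform
    weights reduce to the ordinary mean cross-entropy.
    """
    total_loss = 0.0
    total_weight = 0.0
    for logits, target in zip(batch_logits, targets):
        w = class_weights[target]
        total_loss += -w * math.log(softmax(logits)[target])
        total_weight += w
    return total_loss / total_weight


# Hypothetical batch: class 0 = crack, class 1 = pothole.
batch_logits = [[2.0, 0.0], [2.0, 0.0]]
targets = [0, 1]  # the second sample is a pothole scored as a crack

uniform = weighted_cross_entropy(batch_logits, targets, [1.0, 1.0])
pothole_heavy = weighted_cross_entropy(batch_logits, targets, [1.0, 3.0])
print(uniform, pothole_heavy)
```

With the heavier pothole weight, the misclassified pothole dominates the batch loss, so gradient updates would push harder on that class; suitable weights would need to be tuned, for example from class frequencies.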

6. Conclusions

In this paper, we propose the Dual Attention and Partially Overparameterized Network (DAPONet), a real-time model for road damage detection on street view images (SVRDD). To enhance detection accuracy and computational efficiency, we propose several innovative modules: the Global Context and Local Attention module (GLCA), the Partially Overparameterized module (CPDA), and the Mixed Convolutional Downsampling module (MCD). Experimental results show that DAPONet significantly outperforms other leading object detection models on the SVRDD dataset in both accuracy and efficiency. Our model achieves 70.1% mAP50, 10.4 percentage points higher than YOLOv10n, while reducing model parameters to 1.6 M and FLOPs to 1.7 G, reductions of 41% and 80%, respectively. Additionally, DAPONet performs well on the MS COCO2017 dataset, with a mAP50-95 of 33.4%, 0.8 percentage points higher than the lightweight EfficientDet-D1 model. Although DAPONet performs well across multiple tasks, there is still room for further optimization. In the future, we will incorporate the Transformer architecture to improve the performance of DAPONet in small object detection and complex scenes, and introduce domain adaptation techniques to improve its cross-region generalization ability and further enhance the accuracy and practicality of the model.

Author Contributions

Conceptualization, W.P.; Methodology, W.P.; Software, W.P.; Validation, W.P.; Formal analysis, W.P. and C.L. (Chengze Lv); Investigation, W.P. and J.L.; Resources, J.L. and C.L. (Chong Li); Data curation, W.P. and J.L.; Writing—original draft, W.P., X.W. and C.L. (Chengze Lv); Writing—review & editing, W.P., J.L., C.L. (Chengze Lv) and C.L. (Chong Li); Visualization, X.W. and G.W.; Supervision, X.W. and C.L. (Chong Li); Project administration, J.L. and C.L. (Chong Li); Funding acquisition, C.L. (Chong Li). All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by the Research on Automotive Electronic Software Code Quality Control Technology and Tool Development of the 2022 Municipal Key Research and Development Program Project (grant number: 0001KTSJ20230741-01).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this study are publicly available. The SVRDD dataset can be found at https://zenodo.org/records/10100129, and its original article is at https://www.nature.com/articles/s41597-024-03263-7. The COCO dataset can be found at https://cocodataset.org/, and its original article is at https://link.springer.com/chapter/10.1007/978-3-319-10602-1_48 (all accessed on 1 November 2024).

Conflicts of Interest

Authors Jianmei Lei and Chong Li were employed by the company China Automotive Engineering Research Institute Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Fan, L.; Cao, D.; Zeng, C.; Li, B.; Li, Y.; Wang, F.-Y. Cognitive-Based Crack Detection for Road Maintenance: An Integrated System in Cyber-Physical-Social Systems. IEEE Trans. Syst. Man Cybern. Syst. 2023, 53, 3485–3500.

- Zhang, T. Toward Automated Vehicle Teleoperation: Vision, Opportunities, and Challenges. IEEE Internet Things J. 2020, 7, 11347–11354.

- Iparraguirre, O.; Iturbe-Olleta, N.; Brazalez, A.; Borro, D. Road Marking Damage Detection Based on Deep Learning for Infrastructure Evaluation in Emerging Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22378–22385.

- Khan, M.W.; Obaidat, M.S.; Mahmood, K.; Batool, D.; Badar, H.S.S.; Aamir, M.; Gao, W. Real-Time Road Damage Detection and Infrastructure Evaluation Leveraging Unmanned Aerial Vehicles and Tiny Machine Learning. IEEE Internet Things J. 2024, 11, 21347–21358.

- Silva, L.A.; Leithardt, V.R.Q.; Batista, V.F.L.; Villarrubia González, G.; De Paz Santana, J.F. Automated Road Damage Detection Using UAV Images and Deep Learning Techniques. IEEE Access 2023, 11, 62918–62931.

- Yin, T.; Zhang, W.; Kou, J.; Liu, N. Promoting Automatic Detection of Road Damage: A High-Resolution Dataset, a New Approach, and a New Evaluation Criterion. IEEE Trans. Autom. Sci. Eng. 2024, 1–13.

- Safaei, N.; Smadi, O.; Masoud, A.; Safaei, B. An Automatic Image Processing Algorithm Based on Crack Pixel Density for Pavement Crack Detection and Classification. Int. J. Pavement Res. Technol. 2022, 15, 159–172.

- Roul, R.K.; Rani, R. Cultivating road safety: A comprehensive examination of intelligent ensemble-based road crack detection. Multimed. Tools Appl. 2024.

- Xu, H.; Chen, B.; Qin, J. A CNN-Based Length-Aware Cascade Road Damage Detection Approach. Sensors 2021, 21, 689.

- Yan, K.; Zhang, Z. Automated Asphalt Highway Pavement Crack Detection Based on Deformable Single Shot Multi-Box Detector Under a Complex Environment. IEEE Access 2021, 9, 150925–150938.

- Cha, Y.-J.; Choi, W.; Büyüköztürk, O. Deep Learning-Based Crack Damage Detection Using Convolutional Neural Networks. Comput.-Aided Civ. Infrastruct. Eng. 2017, 32, 361–378.

- He, Q.; Li, Z.; Yang, W. Lsf-rdd: A local sensing feature network for road damage detection. Pattern Anal. Appl. 2024, 27, 99.

- Jiang, Y. Road damage detection and classification using deep neural networks. Discov. Appl. Sci. 2024, 6, 421.

- Ding, K.; Ding, Z.; Zhang, Z.; Yuan, M.; Ma, G.; Lv, G. SCD-YOLO: A Novel Object Detection Method for Efficient Road Crack Detection. Multimed. Syst. 2024, 30, 351.

- Ren, X.; Shaolin, H.; Hou, Y.; Ye, K.; Zhengquan, C.; Wu, Z. A lightweight convolutional neural network for detecting road cracks. Signal Image Video Process. 2024, 18, 6729–6743.

- He, J.; Wang, Y.; Wang, Y.; Li, R.; Zhang, D.; Zheng, Z. A lightweight road crack detection algorithm based on improved YOLOv7 model. Signal Image Video Process. 2024, 18, 847–860.

- He, Q.; Li, Z.; Yang, W. LMFE-RDD: A road damage detector with a lightweight multi-feature extraction network. Multimed. Syst. 2024, 30, 176.

- Zhao, M.; Su, Y.; Wang, J.; Liu, X.; Wang, K.; Liu, Z.; Liu, M.; Guo, Z. MED-YOLOv8s: A new real-time road crack, pothole, and patch detection model. J. Real-Time Image Process. 2024, 21, 26.

- Guo, G.; Zhang, Z. Road damage detection algorithm for improved YOLOv5. Sci. Rep. 2022, 12, 15523.

- Wang, J.; Meng, R.; Huang, Y.; Zhou, L.; Huo, L.; Qiao, Z.; Niu, C. Road defect detection based on improved YOLOv8s model. Sci. Rep. 2024, 14, 16758.

- Xiang, W.; Wang, H.; Xu, Y.; Zhao, Y.; Zhang, L.; Duan, Y. Road disease detection algorithm based on YOLOv5s-DSG. J. Real-Time Image Process. 2023, 20, 56.

- Xie, X. Road Surface Defect Detection Based on Partial Convolution and Global Attention. Int. J. Pavement Res. Technol. 2024.

- Youwai, S.; Chaiyaphat, A.; Chaipetch, P. YOLO9tr: A lightweight model for pavement damage detection utilizing a generalized efficient layer aggregation network and attention mechanism. J. Real-Time Image Process. 2024, 21, 163.

- Wan, F.; Sun, C.; He, H.; Lei, G.; Xu, L.; Xiao, T. YOLO-LRDD: A lightweight method for road damage detection based on improved YOLOv5s. EURASIP J. Adv. Signal Process. 2022, 2022, 98.

- Zeng, J.; Zhong, H. YOLOv8-PD: An improved road damage detection algorithm based on YOLOv8n model. Sci. Rep. 2024, 14, 12052.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6999–7019.

- Jiao, L.; Zhang, F.; Liu, F.; Yang, S.; Li, L.; Feng, Z.; Qu, R. A Survey of Deep Learning-Based Object Detection. IEEE Access 2019, 7, 128837–128868.

- Aziz, L.; Haji Salam, M.S.B.; Sheikh, U.U.; Ayub, S. Exploring Deep Learning-Based Architecture, Strategies, Applications and Current Trends in Generic Object Detection: A Comprehensive Review. IEEE Access 2020, 8, 170461–170495.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; IEEE: New York, NY, USA, 2018; pp. 4510–4520.

- Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.-C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Jocher, G. YOLOv5 by Ultralytics; Version 7.0. Available online: https://github.com/ultralytics/yolov5 (accessed on 1 November 2024).

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Ghiasi, G.; Lin, T.-Y.; Le, Q.V. NAS-FPN: Learning Scalable Feature Pyramid Architecture for Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7029–7038.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV 2018), Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 3–19.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the 2020 European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 213–229.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin Transformer V2: Scaling Up Capacity and Resolution. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 11999–12009.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Zhang, C.; Lin, G.; Liu, F.; Yao, R.; Shen, C. CANet: Class-Agnostic Segmentation Networks with Iterative Refinement and Attentive Few-Shot Learning. arXiv 2019, arXiv:1903.02351.

- Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; Meila, M., Zhang, T., Eds.; 2021; Volume 139, pp. 11863–11874. Available online: http://proceedings.mlr.press/v139/yang21o (accessed on 1 November 2024).

- Liu, Y.; Shao, Z.; Hoffmann, N. Global Attention Mechanism: Retain Information to Enhance Channel-Spatial Interactions. arXiv 2021, arXiv:2112.05561.

- Li, Y.; Li, J.; Lin, W.; Li, J. Tiny-DSOD: Lightweight Object Detection for Resource-Restricted Usages. arXiv 2018, arXiv:1807.11013.

- Qin, Z.; Li, Z.; Zhang, Z.; Bao, Y.; Yu, G.; Peng, Y.; Sun, J. ThunderNet: Towards Real-Time Generic Object Detection on Mobile Devices. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6717–6726.

- Mittal, P. A comprehensive survey of deep learning-based lightweight object detection models for edge devices. Artif. Intell. Rev. 2024, 57, 242.

- He, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. Extreme Learning Machine for Regression and Multiclass Classification. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2022, 42, 513–529.

- Jiao, L.; Zhang, F.; Liu, F.; Yang, S.; Li, L.; Feng, Z.; Qu, R. SSD: Single Shot MultiBox Detector. In Proceedings of the 2016 European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Xu, W.; Wan, Y. ELA: Efficient Local Attention for Deep Convolutional Neural Networks. arXiv 2024, arXiv:2403.01123.

- Wu, Y.; He, K. Group Normalization. In Computer Vision–ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–19. ISBN 978-3-030-01261-8.

- Cao, J.; Li, Y.; Sun, M.; Chen, Y.; Lischinski, D.; Cohen-Or, D.; Chen, B.; Tu, C. DO-Conv: Depthwise Over-Parameterized Convolutional Layer. IEEE Trans. Image Process. 2022, 31, 3726–3736.

- Ren, M.; Zhang, X.; Zhi, X.; Wei, Y.; Feng, Z. An annotated street view image dataset for automated road damage detection. Sci. Data 2024, 11, 407.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2015, arXiv:1405.0312.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

- Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Computer Vision–ECCV 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer Nature: Cham, Switzerland, 2025; pp. 1–21. Available online: https://github.com/WongKinYiu/yolov9 (accessed on 1 November 2024). ISBN 978-3-031-72751-1.

- Lyu, R. NanoDet-Plus: Super Fast and High Accuracy Lightweight Anchor-Free Object Detection Model. 2021. Available online: https://github.com/RangiLyu/nanodet (accessed on 1 November 2024).

- Shi, H.; Zhou, Q.; Ni, Y.; Wu, X.; Latecki, L.J. DPNET: Dual-Path Network for Efficient Object Detection with Lightweight Self-Attention. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 771–775.

- Yu, G.; Chang, Q.; Lv, W.; Xu, C.; Cui, C.; Ji, W.; Dang, Q.; Deng, K.; Wang, G.; Du, Y.; et al. PP-PicoDet: A Better Real-Time Object Detector on Mobile Devices. arXiv 2021, arXiv:2111.00902.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).