Artificial Intelligence in Ophthalmology: Advantages and Limits

Abstract

1. General Introduction to AI for Clinicians

- Autonomy

- Adaptability

- the availability of large amounts of data (big data) for various applications; and

- increased computing power at lower cost through hardware such as graphics processing units (GPUs).

1.1. Should a Clinician Worry About AI?

1.2. How to Read and Understand a Medical Paper with AI?

- Has there been an independent, blind comparison with a reference standard?

- Did the patient sample include an adequate spectrum of patients, with the diagnostic tests performed in clinical settings?

- Are the datasets adequate and described in sufficient detail?

- Was the reference standard used to train the algorithm adequate and well-founded?

- Did the results of the test being evaluated influence the decision to apply the “gold” standard?

- Were the test methods described in sufficient detail to allow replication?

- Is the algorithm development methodology described in sufficient detail to allow replication?

- Are the algorithm/datasets used available for external validation?

- Are likelihood ratios presented for the test results, or are the data required for their calculation provided?

- Are appropriate performance metrics reported? [6]

- Will the reproducibility of the test result and its interpretation be satisfactory for me?

- Are the results applicable to my patients?

- Will the results change my usual management?

- Will patients be better off as a result of the new test?

- Are the conclusions of the method accountable and explainable?

- Does the method generalize (can it be readily adapted to other input data)?

- Was the reported performance of the initial method overly optimistic?

- Has the method been validated in my region?

- Is there a cost-effectiveness evaluation for using the method?

- Will applying the AI method have a relevant effect on the patient’s quality of life?

- Is there any attempt to quantify this effect?

- Has there been an independent, blind comparison with a reference standard?

- Are the datasets adequate and described in sufficient detail?

- Did the patient sample include an adequate range of patients to whom the diagnostic test would be applied in a clinical setting?

- Was the benchmark for training/testing the algorithm adequate and reliable?

- Did the results of the test in question influence the decision to apply the reference (“gold”) standard?

- Were the methods for performing the test described in sufficient detail to allow replication?

- Is the algorithm development explained in enough detail to permit its replication?

- Are the algorithm and datasets used available for external validation?

- Are the likelihood ratios of the results given, or at least the data needed for their computation?

- Are performance metrics adequately reported? [6]

- Will the test’s reproducibility and its interpretation be adequate for the attending physician?

- Are the outcomes of the method explainable?

- Does the method generalize?

- Was the reported performance of the original method overly optimistic?

- Are the results applicable to the patients? Equivalently, are we in the setting of personalized medicine (currently a very popular approach)?

- Has the algorithm been validated in the local population?

- Will the results change my management?

- Is there any comparison of the new method with current standards of healthcare?

- Is there any cost-effectiveness analysis to justify using the algorithm?

- Will patients be better cared for because of the test?

- Will there be a relevant impact on the patient’s health after implementing the new method?

- Is there any attempt to estimate or calculate its impact?
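The checklist asks whether likelihood ratios are reported or can be computed. As a minimal sketch, assuming only that a paper reports sensitivity and specificity, the two ratios and the resulting post-test probability can be derived as follows. The function names and the numeric values (sensitivity 0.90, specificity 0.80, pre-test probability 0.10) are illustrative assumptions, not figures taken from a particular study.

```python
import math


def likelihood_ratios(sensitivity, specificity):
    """Return (LR+, LR-) for a binary test; specificity must be > 0."""
    # LR+ = P(test positive | disease) / P(test positive | no disease)
    lr_pos = math.inf if specificity == 1.0 else sensitivity / (1.0 - specificity)
    # LR- = P(test negative | disease) / P(test negative | no disease)
    lr_neg = (1.0 - sensitivity) / specificity
    return lr_pos, lr_neg


def post_test_probability(pre_test_probability, likelihood_ratio):
    """Update a pre-test probability with a finite likelihood ratio via odds."""
    pre_odds = pre_test_probability / (1.0 - pre_test_probability)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1.0 + post_odds)


# Illustrative values only (assumed, not from a cited study).
lr_pos, lr_neg = likelihood_ratios(sensitivity=0.90, specificity=0.80)
p_after_positive = post_test_probability(0.10, lr_pos)
p_after_negative = post_test_probability(0.10, lr_neg)
print(lr_pos, lr_neg, p_after_positive, p_after_negative)
```

Working in odds rather than probabilities keeps the update a single multiplication, which is why likelihood ratios are convenient for judging whether a test result would actually change management.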

1.3. Partial Conclusions

- Exciting times lie ahead in medicine because of the great potential of AI as a tool for clinical decision support.

- Yet possible legal and ethical problems can hold back the implementation and acceptance of AI methods [9]: liability management, a decline in clinical expertise due to overreliance on algorithms, inadequate representation in the data (mainly of various minorities), the loss of individual privacy, “biomarking” because of intensive testing, and an inadequate understanding of outcomes (with AI seen as a black box).

- AI algorithms are not biased in themselves. Yet bias in the training data, the inherent biases of software developers, or differences between medical schools can ultimately create complex ethical issues.

- A critical assessment by all stakeholders before any acquisition of new technologies will help separate reality from “hype”.

- It should be kept in mind that the main goal of the medical world is always to provide patients with the best available care.

- The accessibility, availability, and reach of the “health care model”, together with its social impact, should also be taken into consideration when doing so.

2. Technical Introduction to AI for Clinicians

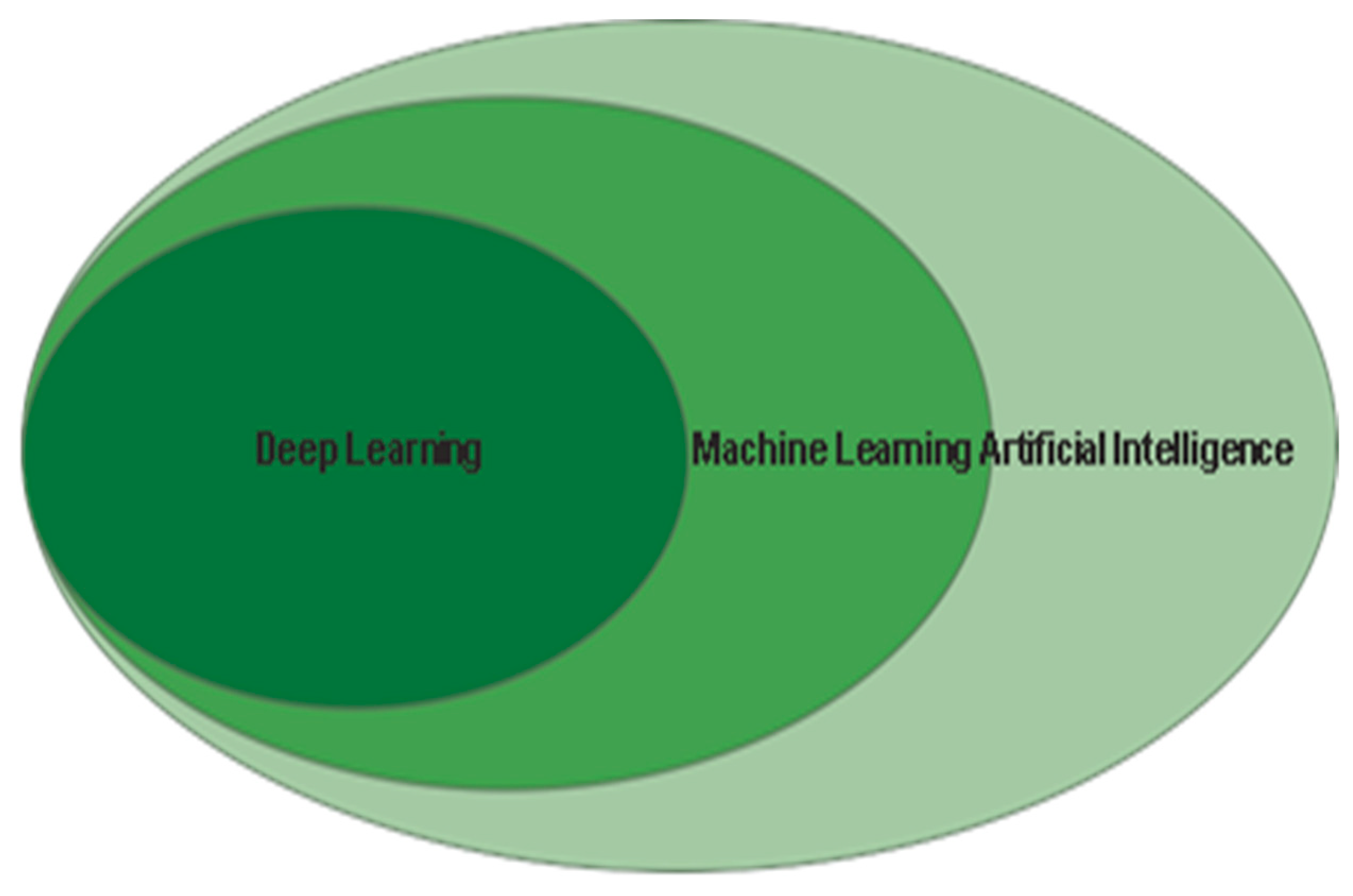

2.1. Brief Introduction to AI

- Artificial neural networks (ANNs), built on connectionist paradigms, which seek to imitate the human brain;

- Evolutionary algorithms, which use bio-inspired optimization methods such as the mechanism of natural selection;

- Fuzzy logic, which extends classical logic in order to emulate the imprecision of human natural language.

2.2. Difference Between Machine Learning and Deep Learning

2.3. Machine Learning (ML)

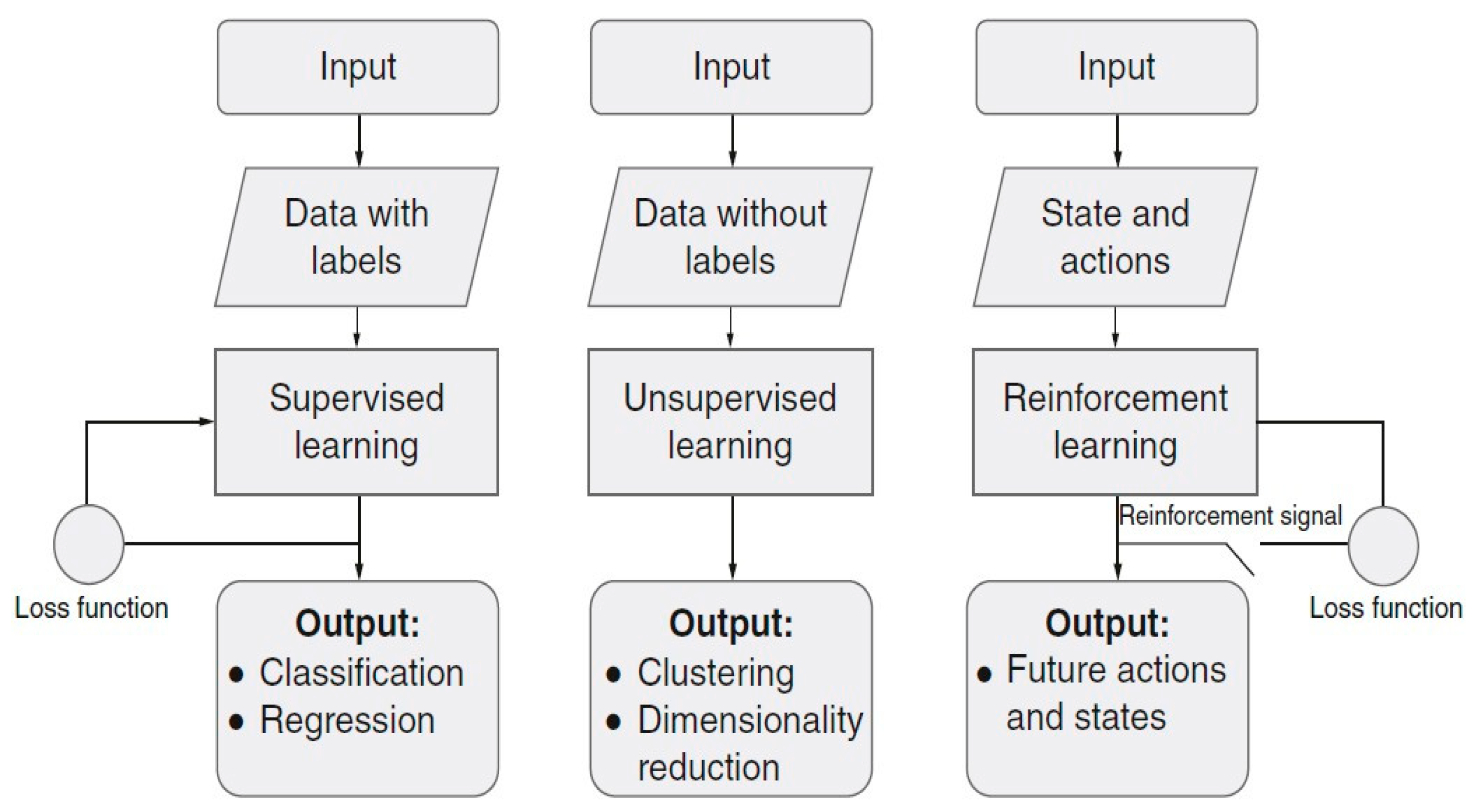

Types of Learning Methods for ML

- Supervised learning: The network is given inputs together with the desired outputs and learns the mapping between them;

- Unsupervised learning: The network is given only input data and must group them into n classes based on the similarity between the data;

- Reinforcement learning: Actions and states are fed as inputs, and the network finds a policy that specifies which action to take in a particular state.
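The difference between the first two learning types can be sketched on a toy example. The code below is an illustration under stated assumptions, not a method from the cited studies: the six feature values (a hypothetical one-dimensional measurement per eye) and their labels are invented, the supervised part assumes class 1 has the larger mean, and reinforcement learning is left out for brevity.

```python
from statistics import mean

# Hypothetical 1-D feature for six eyes (invented values).
values = [0.20, 0.30, 0.35, 0.70, 0.80, 0.75]
labels = [0, 0, 0, 1, 1, 1]  # desired outputs, used only by the supervised part


def fit_threshold(values, labels):
    """Supervised: learn a cut-off halfway between the two class means."""
    class0 = [v for v, y in zip(values, labels) if y == 0]
    class1 = [v for v, y in zip(values, labels) if y == 1]
    return (mean(class0) + mean(class1)) / 2


def classify(value, threshold):
    """Predict class 1 when the value lies above the learned threshold."""
    return int(value > threshold)


def nearest_center(value, centers):
    """Index (0 or 1) of the closer of two cluster centers."""
    return 0 if abs(value - centers[0]) <= abs(value - centers[1]) else 1


def two_means(values, n_iter=10):
    """Unsupervised: group values into 2 clusters without using any labels."""
    centers = [min(values), max(values)]
    for _ in range(n_iter):
        groups = [[], []]
        for v in values:
            groups[nearest_center(v, centers)].append(v)
        # Keep the old center if a cluster happens to be empty.
        centers = [mean(g) if g else c for g, c in zip(groups, centers)]
    return [nearest_center(v, centers) for v in values]


threshold = fit_threshold(values, labels)
predictions = [classify(v, threshold) for v in values]
clusters = two_means(values)
print(threshold, predictions, clusters)
```

The supervised function cannot run without `labels`, whereas `two_means` never sees them; on this well-separated toy data both recover the same grouping, which will not generally hold for real clinical measurements.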

- In [14], retinal blood vessels are detected by means of an extreme learning machine (ELM) method and probabilistic neural networks;

- Gurudath et al. [13] analyzed fundus images with a three-layer ANN and a support vector machine (SVM) to classify retinal images;

- Priyadarshini et al. applied a data mining classification technique to provide useful predictions for diabetic patients diagnosed with diabetic retinopathy (DR) [15].

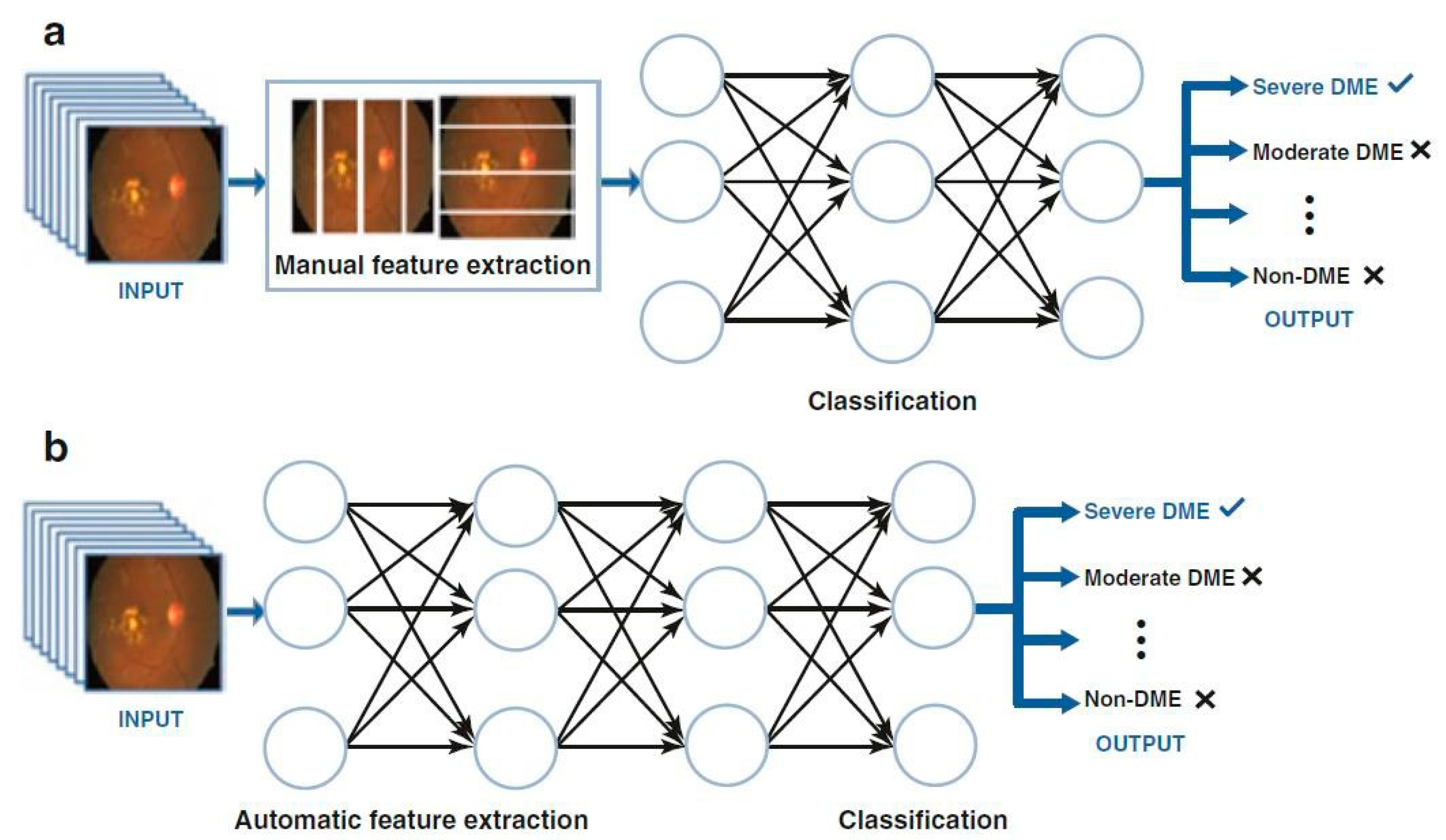

2.4. Deep Learning (DL)

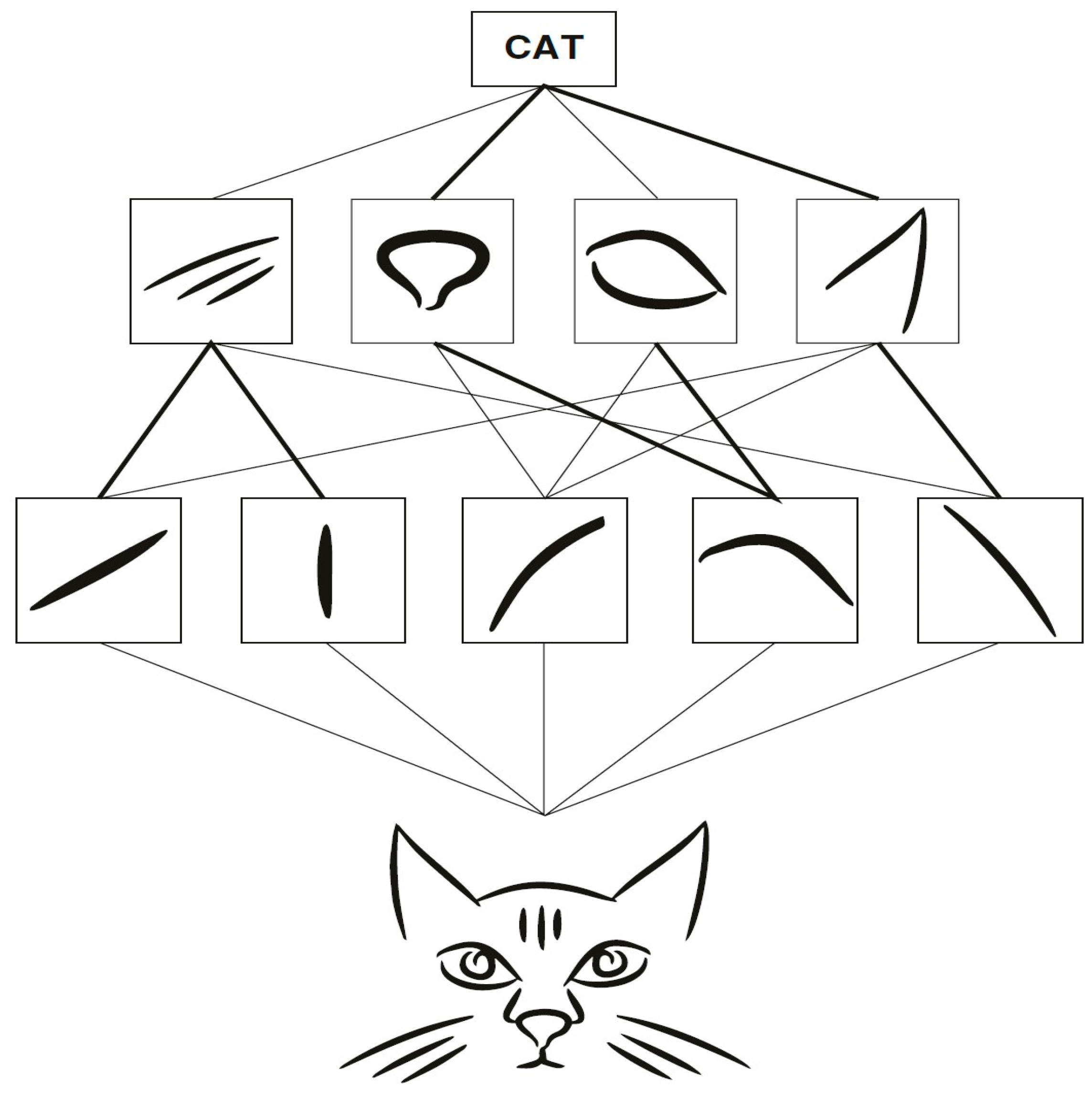

2.4.1. Convolutional Neural Network (CNN)

2.4.2. Transfer Learning

- Natural and medical images have different and particular statistical characteristics;

- Moreover, training these deep NNs from scratch on medical image datasets is not efficient because these datasets are often small. For fundus images, for instance, one of the largest freely available databases is EyePACS, which has just 35,126 training images;

- Because of the large number of parameters that deep NNs have to optimize, their success relies on large volumes of available data [21];

- Transfer learning takes models with parameters learned from ImageNet (or another natural image set such as CIFAR) and then fine-tunes them on the target medical dataset.
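The idea can be sketched in a few lines of plain Python. This is a deliberately tiny illustration of the feature-extraction variant of transfer learning (the pretrained layer is frozen and only a new classification head is trained), not the full fine-tuning of a deep network. The "pretrained" weights, the two-pixel inputs, and the labels are all hypothetical stand-ins for an ImageNet-trained backbone and a small medical dataset.

```python
import math

# Hypothetical "pretrained" weights; in practice these would come from a
# network trained on a large natural-image set. They are never updated here.
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5]]


def frozen_features(x):
    """Frozen layer: ReLU(W x) using the fixed pretrained weights."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, lr=0.5, epochs=500):
    """Train only a new logistic-regression head on the frozen features."""
    head_w, head_b = [0.0, 0.0], 0.0
    feats = [frozen_features(x) for x in samples]
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)
            err = p - y  # gradient of the log-loss with respect to the logit
            head_w = [w - lr * err * fi for w, fi in zip(head_w, f)]
            head_b -= lr * err
    return head_w, head_b


def predict(head, x):
    """Probability of class 1 for input x under the trained head."""
    head_w, head_b = head
    f = frozen_features(x)
    return sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)


# Small hypothetical target dataset (four samples, two classes).
samples = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = [1, 1, 0, 0]
head = train_head(samples, labels)
print(predict(head, [1.0, 0.0]), predict(head, [0.0, 1.0]))
```

Because only the three head parameters are optimized, four samples are enough here; this mirrors, on a very small scale, why reusing pretrained parameters helps when the medical dataset is small.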

2.4.3. Classification

2.4.4. Segmentation

2.4.5. Multimodal Learning

3. AI and Ophthalmology: An Overview

3.1. Introduction

- The semantic (or syntactic) features, expressed by specialists;

- The numerical characteristics, represented by mathematical formulas.

3.2. AI and Anterior Segment Diseases

3.2.1. AI and Corneal Ectasia

3.2.2. AI and Keratoconus

3.3. AI and Posterior Segment Diseases

3.3.1. AI and Diabetic Retinopathy

3.3.2. AI and Retinal Vein Occlusion

3.3.3. AI and Retinopathy of Prematurity

3.3.4. AI and Age-Related Macular Degeneration (ARMD)

3.3.5. AI and Glaucoma

3.3.6. AI and Retinal Detachment

3.4. AI and Various Eye Diseases

3.4.1. Ocular Oncology

3.4.2. Pediatric Ophthalmology

4. Representative Areas of Application of AI in Ophthalmology

4.1. Artificial Intelligence and Cataract

4.1.1. Scope of the Problem

4.1.2. Limitations of Current Clinical Procedures

- Case Detection Programs

- Calculation of Intraocular Lens (IOL) Power

- Workforce Training and Surgical Evaluation

- Postoperative Care and Quality of Life (QoL)

4.1.3. AI and Cataract Detection

Cataract Detection Based on Slit Lamp Photographs

Based on Color Fundus Photographs

4.1.4. Calculation of the Power of Intraocular Lenses

4.1.5. AI and Post-Operative Care: Quality of Life (QoL)

4.2. AI and Glaucoma

4.2.1. Introduction

4.2.2. Overview of AI in Glaucoma

Classical Machine Learning (ML) Models

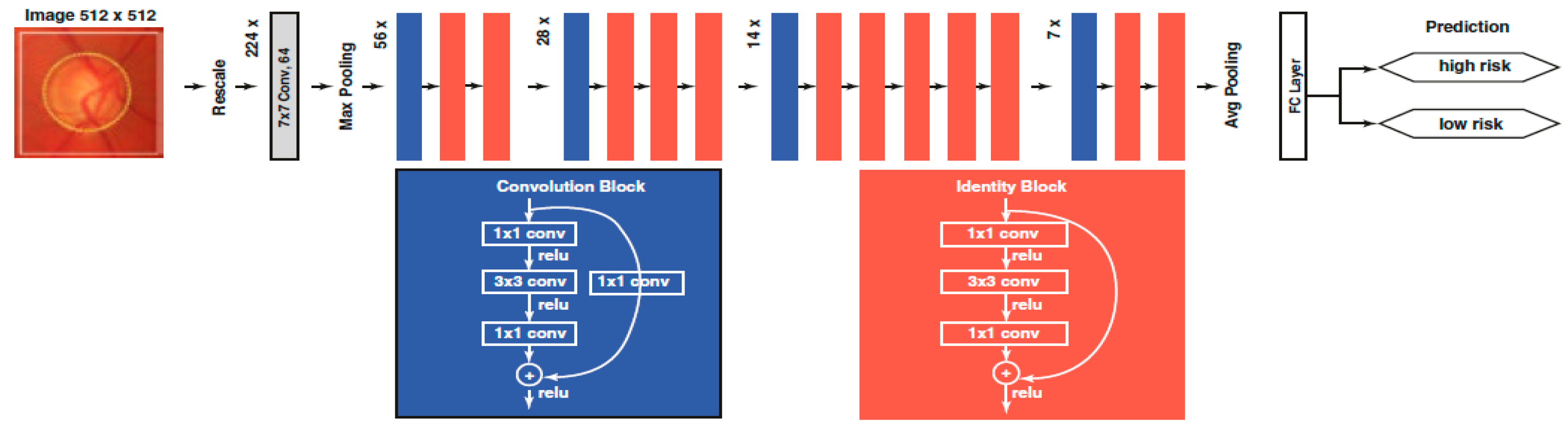

Deep Learning (DL) Models

- DL networks can detect complicated patterns in input data by automatically tuning the internal model parameters used to compute data representations (without hand-crafted feature extraction) and to perform classification, rather than treating feature extraction and classification as a two-step process.

4.3. AI-Based Systems in Glaucoma

4.3.1. Detection of Glaucomatous Characteristics

4.3.2. Diagnosis of Glaucoma

| Authors | Year | Dataset | Modality | Model | Application | AUC | Sensitivity | Specificity |
|---|---|---|---|---|---|---|---|---|
| Goldbaum et al. [83] | 1994 | Local dataset | Visual fields | A two-layer FNN | Diagnosis | – | 0.65 | 0.72 |
| Brigatti et al. [84] | 1996 | Local dataset | Visual fields; OD and RNFL measurements | A four-layer BPNN | Diagnosis: Functional | – | 0.84 | 0.86 |
| | | | | | Diagnosis: Structural | – | 0.87 | 0.56 |
| | | | | | Diagnosis: Multimodal | – | 0.90 | 0.84 |
| Chen et al. [85] | 2015 | ORIGA; SCES* | Fundus photos | A six-layer CNN | Diagnosis | 0.898 | – | – |
| Asaoka et al. [86] | 2016 | Local dataset | Visual fields | A four-layer FNN | Diagnosis | 0.926 | 0.749 | 1.000 |
| Ting et al. [54] | 2017 | SiDRP 14–15# | Fundus photos | VGG-19 | Diagnosis | 0.942 | 0.964 | 0.932 |
| Liu et al. [75] | 2018 | Local dataset; HRF; RIM-ONE | Fundus photos | ResNet50 | Diagnosis: Local dataset | 0.970 | 0.893 | 0.971 |
| | | | | | Diagnosis: HRF | 0.890 | 0.867 | 0.867 |
| Li et al. [53] | 2018 | LabelMe | Fundus photos | Inception_v3 | Diagnosis | 0.986 | 0.956 | 0.920 |
| Li et al. [87] | 2018 | Local dataset | Visual fields | VGG-16 | Diagnosis | 0.966 | 0.932 | 0.826 |
| Shibata et al. [88] | 2018 | Local dataset | Fundus photos | ResNet | Diagnosis | 0.965 | – | – |
| Christopher et al. [89] | 2018 | ADAGES$; DIGS~ | Fundus photos | ResNet50 | Diagnosis | 0.910 | 0.840 | 0.830 |
| Medeiros et al. [82] | 2019 | Local dataset | OCT scans; fundus photos | ResNet34 | Diagnosis | 0.944 | 0.900 | 0.800 |
| Liu et al. [90] | 2019 | CGSA^ | Fundus photos | ResNet | Diagnosis | 0.996 | 0.962 | 0.977 |
| Asaoka et al. [91] | 2019 | Local dataset | OCT scans | A 12-layer CNN | Diagnosis | 0.937 | 0.825 | 0.939 |
| Fu et al. [92] | 2019 | Local dataset | AS-OCT scans | VGG-16 | Diagnosis | 0.96 | 0.90 | 0.92 |
| Normando et al. [93] | 2020 | Local dataset | OCT scans | MobileNet_v2 | Diagnosis | – | 0.911 | 0.971 |
| | | | | | Prognosis | 0.890 | 0.857 | 0.917 |
| Thakur et al. [94] | 2020 | OHTS+ | Fundus photos | MobileNet_v2 | Diagnosis | 0.94 | – | – |
| | | | | | Prognosis: 1–3 years | 0.88 | – | – |
| | | | | | Prognosis: 4–7 years | 0.77 | – | – |

4.3.3. Progress and Prognosis of Glaucoma

4.4. Challenges of AI in Glaucoma

4.4.1. Dataset Dependency

4.4.2. Lack of Agreement Among Ophthalmologists

4.4.3. Early Glaucoma

4.5. Possible Evolution

4.5.1. Wearable Equipment and Cloud Computing

4.5.2. Explainable AI

4.5.3. Convergent Technologies

4.6. Final Remarks

4.7. AI in Retinal Diseases: Other Applications

4.7.1. Introduction

4.7.2. Applications of AI in Retinal Diseases

AI in Diabetic Retinopathy

AI in Age-Related Macular Degeneration

AI in Choroidal Neovascularization and Macular Diseases

AI in Retinopathy of Prematurity

Retinal Vein Occlusions (RVOs)

4.8. Pearls and Pitfalls in Using AI Applications in Ophthalmology

4.9. Conclusions

4.10. AI in Neuro-Ophthalmology

4.10.1. Neuro-Ophthalmology

- diseases impacting the afferent visual system (the retina–optic nerve pathway, chiasm, retro-chiasmal pathways, and occipital lobes), which are sources of higher-order visual abnormalities;

- diseases that affect the efferent visual system, which are sources of central ocular motor disorders (at the cortical or brainstem level), gaze instability, ocular motor cranial neuropathies, pupillary conditions, and several peripheral abnormalities impacting the neuromuscular junction as well as the muscles themselves.

4.10.2. AI in Optic Nerve Head (Optic Disc) Anomalies

4.11. AI in Eye Movement Disorders

AI and Ocular Motor Characteristics

- (1) lack of agreement among specialists regarding the reference standard;

- (2) limited ability to reproduce and compare results when researchers do not use publicly accessible databases;

- (3) absence of time-related assessments;

- (4) the uninterpretable outputs of DL applications (black boxes), which make them untrustworthy for some healthcare providers.

4.12. Conclusions

5. AI and Different Applications in Ophthalmology and Other Fields

5.1. AI in Pathology

5.1.1. Models

5.1.2. AI in Ocular Oncology

Choroidal Melanoma

Retinoblastoma and Leukocoria

5.1.3. AI in Ocular Genetics

Precedents

Inherited Retinal Disorders (IRD)

5.1.4. AI in Pediatric Ophthalmology

Pediatric Cataract

Strabismus

5.1.5. Supplementary AI Applications in Eye Healthcare

Cardiovascular Risk Factors

Multiple Sclerosis

Other Neurodegenerative Diseases

5.1.6. AI in Tele-Ophthalmology

- Asynchronous: This “store-and-forward” method uses clinical imaging information and sends it to the ophthalmologist for evaluation. This method is currently applied for diabetic retinopathy [141].

- Synchronous: This approach may provide real-time telemedicine between patients and ophthalmologists through certain communication channels (e.g., video chat, phone calls, and smartphone and internet apps). This method has been implemented with success in many intensive care units (ICUs) where remote eye care is offered to centers that do not have such services.

- Remote monitoring: This type of service allows providers to monitor patients at home or remotely. Intraocular pressure (IOP)-measuring contact lenses, home IOP monitors, IOP sensors, visual field devices, and age-related macular degeneration (AMD) devices are examples of instruments that automatically send acquired data to providers in order to enhance clinical services.

- Administrative work: AI programs reduce the administrative workload through intelligent scheduling, automatic invoicing, patient tracking, and claims management;

- Robotics and procedures: AI software is embedded in many devices, for example to guide automatic alignment, focusing, and data acquisition. In addition, AI has been used in minimally invasive surgery, remote surgery, and slit-lamp examinations. Robotic tools such as co-manipulators, tele-manipulators, and steady-hand devices are being developed;

- Diagnosis and screening: Integrating the interpretive and predictive capabilities of AI into tele-diagnosis can significantly increase access to healthcare, reduce unnecessary ophthalmologist consultations, and save money and time for patients, providers, and the healthcare system. Tele-ophthalmology combined with AI has so far proven effective for diabetic retinopathy and is also being used, for instance, for glaucoma screening, AMD monitoring, identification of abnormal corneal topography, and pediatric cataract screening;

- Remote monitoring: Telemonitoring remains an important challenge in tele-ophthalmology. AI programs can provide personalized information to a large number of patients and assist providers in making clinical decisions.

5.1.7. Limitations

6. Ethics and AI: Pandora’s Box

6.1. Introduction

6.2. Key Ethical Principles

6.2.1. Beneficence and Non-Maleficence

6.2.2. Respect for Autonomy

6.2.3. Justice

6.3. The Black Box Problem: The AI Enigma

6.4. Explainable AI (XAI) in Ophthalmology

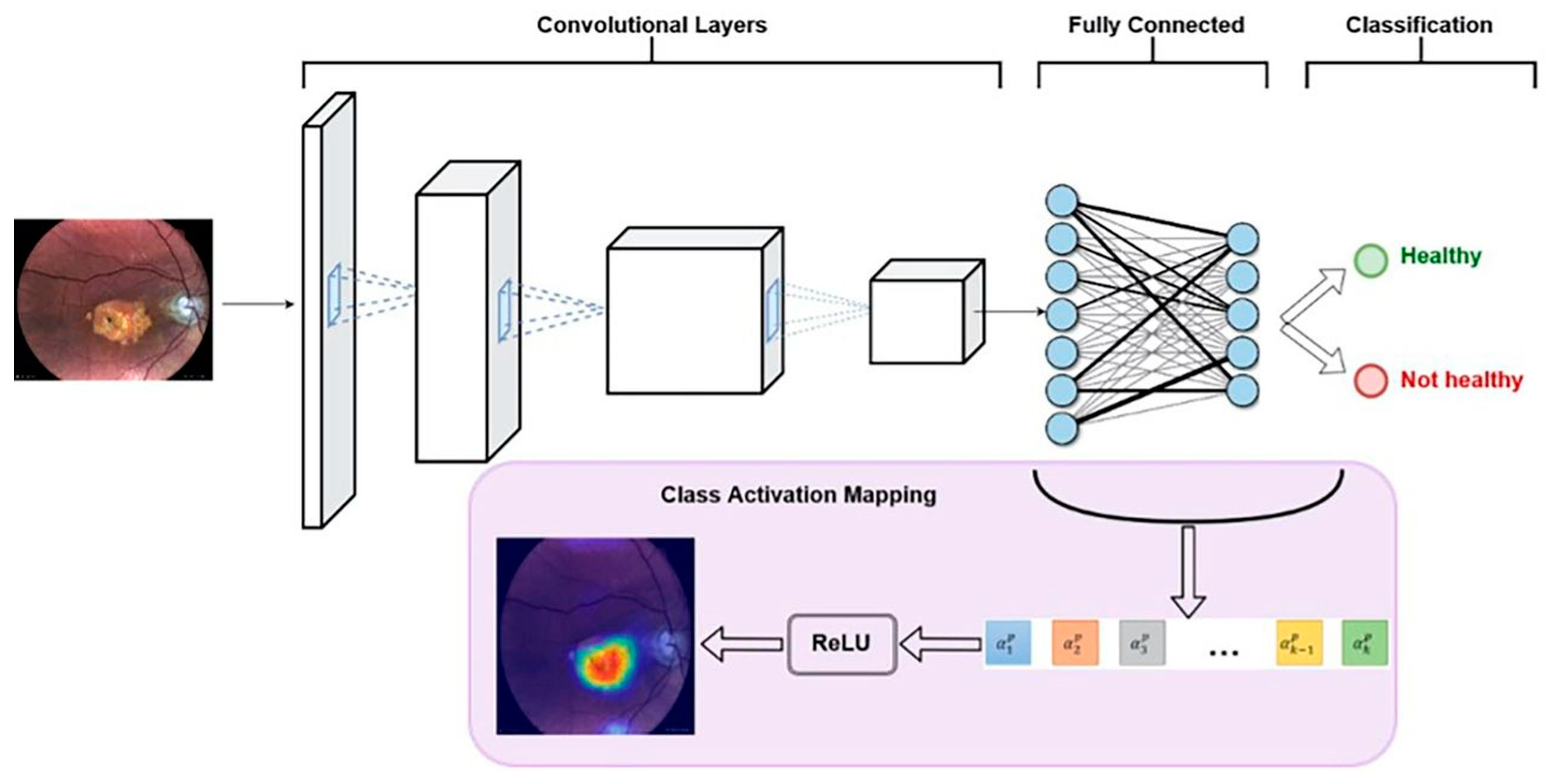

- Outcomes: A total of 540 color retinal images were collected: 300 were used to train the AI model, 120 for validation, and 120 for testing. In distinguishing eyes with GA from healthy eyes, the model achieved 100% sensitivity, 97.5% specificity, and 98.4% overall diagnostic accuracy. Other performance values were an area under the ROC curve (AUC-ROC) of 0.988 and an area under the precision-recall curve (AUC-PR) of 0.952, both indicating very good performance.

- The explainable AI model provides automatic GA screening, with very good results, using easily accessible retinal imaging.

- Because of its high performance and explainability, the above architecture can support clinical validation and promote the acceptance of AI for GA diagnosis.

- With such optimized screening, GA can be detected early, and this AI-based method can increase patient access to innovative, possibly vision-preserving treatments.
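Sensitivity, specificity, and accuracy of the kind quoted above all derive from a single confusion matrix. The sketch below shows the arithmetic; the counts are an assumption made purely for illustration (a 120-image test set split into 40 GA and 80 healthy eyes with 2 false positives), since the actual class split is not given here. They were chosen only so that the results land near the quoted figures.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Basic diagnostic metrics from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),   # diseased eyes correctly flagged
        "specificity": tn / (tn + fp),   # healthy eyes correctly cleared
        "precision": tp / (tp + fp),     # flagged eyes that are truly diseased
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical counts (assumed, not reported in the text above).
metrics = diagnostic_metrics(tp=40, fp=2, tn=78, fn=0)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Note that accuracy depends on the mix of diseased and healthy eyes in the test set, while sensitivity and specificity do not, which is one reason the checklist in Section 1.2 asks for the patient spectrum to be described.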

7. General Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix B

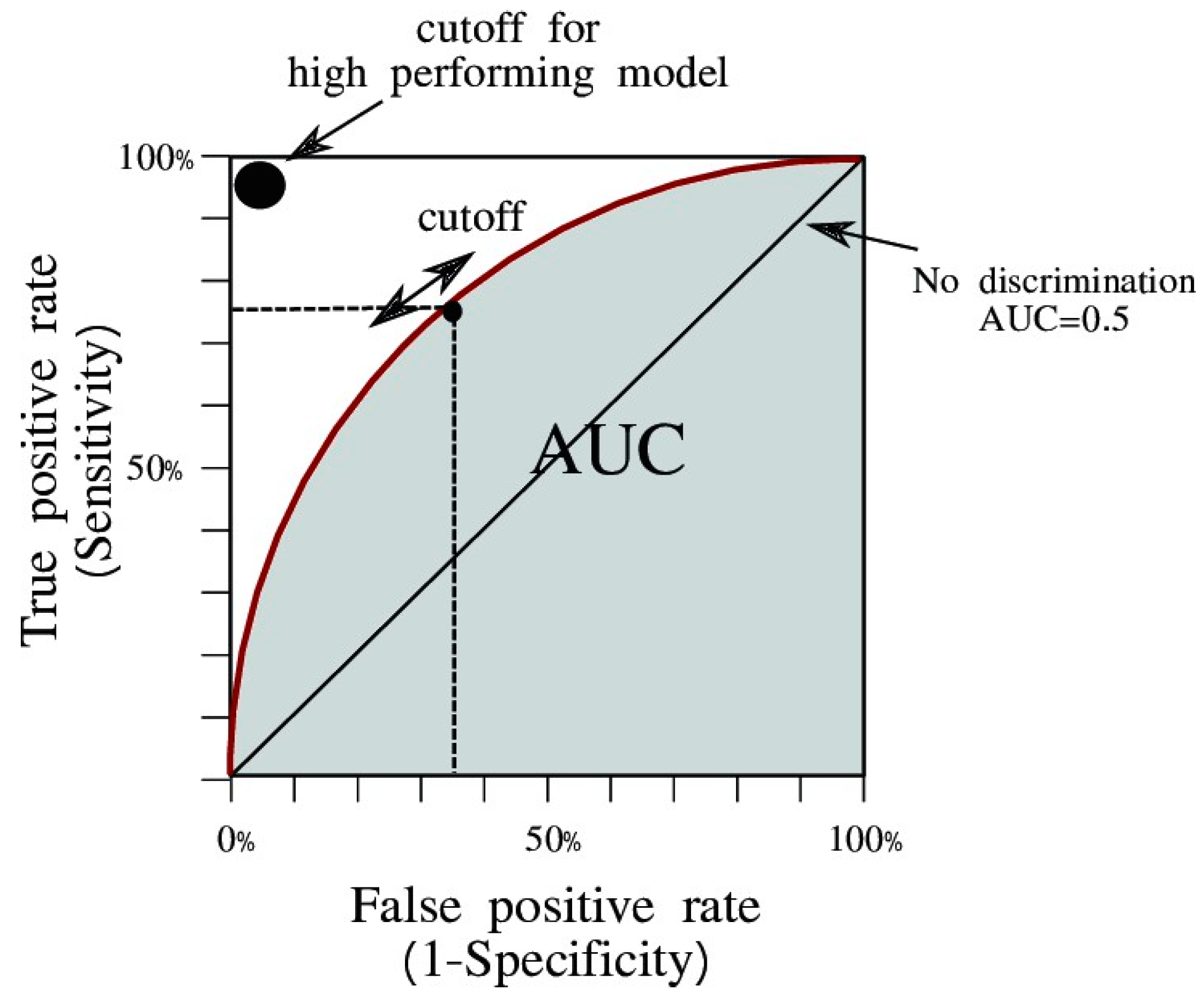

- Area under the ROC curve (AUC)

- AUC is widely used to measure the accuracy of diagnostic tests. The closer the ROC (Receiver Operating Characteristic) curve is to the upper-left corner of the graph, the higher the accuracy of the test, because in the upper left corner, sensitivity = 1, and false positive rate = 0 (specificity = 1).

- Area under the curve (AUC) is the measure of a binary classifier’s ability to distinguish between classes and is used as a summary of the ROC curve. The higher the AUC, the better the performance of the model in distinguishing between positive and negative classes.

- In general, an AUC of 0.5 suggests no discrimination (i.e., no ability to distinguish patients with a disease or condition from those without it based on the test), 0.7 to 0.8 is considered acceptable, 0.8 to 0.9 is considered excellent, and greater than 0.9 is considered outstanding.

- Cohen’s Kappa score (κ) is a statistical measure used to quantify the level of agreement between two raters (or judges, observers, etc.) who each classify items into categories. It is especially useful in situations where decisions are subjective and the categories are nominal (i.e., they do not have a natural order).
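Both measures described above can be computed directly from their definitions. The following is a minimal sketch: AUC is computed as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one (ties count as one half), and Cohen's kappa as observed agreement corrected for chance agreement. The scores, labels, and rater gradings are invented for illustration.

```python
def auc_from_scores(scores, labels):
    """AUC as P(score of a positive > score of a negative), ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e) for two raters, nominal categories."""
    n = len(rater_a)
    categories = set(rater_a) | set(rater_b)
    # Observed agreement: fraction of items both raters labelled identically.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected chance agreement from each rater's marginal frequencies.
    p_e = sum((rater_a.count(c) / n) * (rater_b.count(c) / n) for c in categories)
    if p_e == 1.0:  # both raters always use the same single category
        return 1.0
    return (p_o - p_e) / (1.0 - p_e)


# Invented model scores and ground-truth labels (1 = disease, 0 = no disease).
scores = [0.90, 0.80, 0.35, 0.60, 0.20, 0.10]
truth = [1, 1, 1, 0, 0, 0]
auc = auc_from_scores(scores, truth)

# Invented gradings of eight eyes by two hypothetical graders.
grader_a = ["glaucoma", "glaucoma", "normal", "normal",
            "glaucoma", "normal", "normal", "normal"]
grader_b = ["glaucoma", "normal", "normal", "normal",
            "glaucoma", "normal", "glaucoma", "normal"]
kappa = cohen_kappa(grader_a, grader_b)
print(round(auc, 3), round(kappa, 3))
```

The pairwise definition of AUC used here is equivalent to the area under the ROC curve but is quadratic in the number of cases; for large datasets a rank-based implementation from an established statistics library would be the more practical choice.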

References

- Available online: https://pubmed.ncbi.nlm.nih.gov/?term=artificial+intelligence&filter=years.2018-2024&Timeline=expanded (accessed on 4 November 2024).

- Hype Cycle Research Methodology. Available online: https://www.gartner.com/en/research/methodologies/gartner-hype-cycle (accessed on 4 November 2024).

- Sevakula, R.; Au-yeung, W.-T.S.; Jagmeet, H.; Isselbacher, E.; Armoundas, E. State-of-the-Art Machine Learning Techniques Aiming to Improve Patient Outcomes Pertaining to the Cardiovascular System. J. Am. Heart Assoc. 2020, 9, e013924.

- Kang, D.; Wu, H.; Yuan, L.; Shi, Y.; Jin, K.; Grzybowski, A. A beginner’s guide to artificial intelligence for ophthalmologists. Ophthalmol. Ther. 2024, 13, 1841–1855.

- Jaeschke, R.; Guyatt, G.H.; Sackett, D.L. Users’ guides to the medical literature: III. How to use an article about a diagnostic test B. what are the results and will they help me in caring for my patients? JAMA 1994, 271, 703–707.

- Yu, M.; Tham, Y.-C.; Rim, T.H.; Ting, D.S.W.; Wong, T.Y.; Cheng, C.-Y. Reporting on deep learning algorithms in health care. Lancet Digit. Health 2019, 1, e328–e329.

- De Fauw, J.; Ledsam, J.R.; Romera-Paredes, B.; Nikolov, S.; Tomasev, N.; Blackwell, S.; Askham, H.; Glorot, X.; O’Donoghue, B.; Visentin, D.; et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat. Med. 2018, 24, 1342–1350.

- Moraes, G.; Fu, D.J.; Wilson, M.; Khalid, H.; Wagner, S.K.; Korot, E.; Ferraz, D.; Faes, L.; Kelly, C.J.; Spitz, T.; et al. Quantitative analysis of OCT for neovascular age-related macular degeneration using deep learning. Ophthalmology 2021, 128, 693–705.

- Cabitza, F.; Rasoini, R.; Gensini, G.F. Unintended consequences of machine learning in medicine. JAMA 2017, 318, 517–518.

- Raví, D.; Wong, C.; Deligianni, F.; Berthelot, M.; Andreu-Perez, J.; Lo, B.; Yang, G.Z. Deep learning for health informatics. IEEE J. Biomed. Health Inform. 2017, 21, 4–21.

- Ichhpujani, P.; Thakur, S. (Eds.) Artificial Intelligence and Ophthalmology. Perks, Perils and Pitfalls; Springer: Berlin/Heidelberg, Germany, 2021.

- Gurudath, N.; Celenk, M.; Riley, H.B. Machine Learning Identification of Diabetic Retinopathy from Fundus Images. In Proceedings of the IEEE Signal Processing in Medicine and Biology Symposium (SPMB), Philadelphia, PA, USA, 13 December 2014; pp. 1–7.

- Decencière, E.; Cazuguel, G.; Zhang, X.; Thibault, G.; Klein, J.-C.; Meyer, F.; Marcotegui, B.; Quellec, G.; Lamard, M.; Danno, R.; et al. TeleOphta: Machine learning and image processing methods for teleophthalmology. IRBM 2013, 34, 196–203.

- Vandarkuhali, T.; Ravichandran, D.R.C.S. ELM based detection of abnormality in retinal image of eye due to diabetic retinopathy. J. Theor. Appl. Inf. Technol. 2014, 6, 423–428.

- Priyadarshini, R.; Dash, N.; Mishra, R. A Novel Approach to Predict Diabetes Mellitus Using Modified Extreme Learning Machine. In Proceedings of the Electronics and Communication Systems (ICECS), Coimbatore, India, 13–14 February 2014; pp. 1–5.

- Bietti, A.; Mairal, J. Group invariance stability to deformations, and complexity of deep convolutional representations. J. Mach. Learn. Res. 2019, 20, 1–49.

- Claro, M.; Santos, L.; Silva, W.; Araújo, F.; Moura, N.; Macedo, A. Automatic glaucoma detection based on optic disc segmentation and texture feature extraction. CLEI Electron. J. 2016, 19, 1–10.

- Litjens, G.; Kooi, T.; Ehteshami Bejnordi, B.; Adiyoso Setio, A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Perdomo, O.; González, F. A systematic review of deep learning methods applied to ocular images. Cienc. Ing. Neogranadina 2019, 30, 9–26.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; FeiFei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

- Toledo-Cortés, S.; de la Pava, M.; Perdomo, O.; González, F.A. Hybrid Deep Learning Gaussian Process for Diabetic Retinopathy Diagnosis and Uncertainty Quantification. In Proceedings of the 7th International Workshop, OMIA 2020, Lima, Peru, 8 October 2020.

- Müller, H.; Unay, D. Medical decision support using increasingly large multimodal data sets. In Big Data Analytics for Large-Scale Multimedia Search; Wiley: Hoboken, NJ, USA, 2019; pp. 317–336.

- Zhou, S.K.; Greenspan, H.; Shen, D. Deep Learning for Medical Image Analysis; Springer: Berlin/Heidelberg, Germany, 2017; pp. 1–433.

- Voets, M.; Møllersen, K.; Bongo, L.A. Reproduction study using public data of: Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. PLoS ONE 2019, 14, e0217541.

- Kumar, S.A.; Satheesh Kumar, J. A Review On Recent Developments for the Retinal Vessel Segmentation Methodologies and Exudate Detection in Fundus Images Using Deep Learning Algorithms. In Computational Vision and Bio-Inspired Computing; Smys, S., Tavares, J., Balas, V.E., Iliyasu, A.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 1363–1370.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for Biomedical Image Segmentation. In Proceedings of the MICCAI 2015 – 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Hesamian, M.H.; Jia, W.; He, X.; Kennedy, P. Deep learning techniques for medical image segmentation: Achievements and challenges. J. Digit. Imaging 2019, 32, 582–596.

- Andrearczyk, V.; Müller, H. Deep Multimodal Classification of Image Types in Biomedical Journal Figures. In Proceedings of the 9th International Conference of the CLEF Association, CLEF 2018, Avignon, France, 10–14 September 2018; pp. 3–14.

- Yoo Tae, K.; Choi Joon, Y.; Seo Jeong, G.; Ramasubramanian, B.; Selvaperumal, S.; Kim, D.W. The possibility of the combination of OCT and fundus images for improving the diagnostic accuracy of deep learning for age-related macular degeneration: A preliminary experiment. Med. Biol. Eng. Comput. 2019, 57, 677–687.

- Golabbakhsh, M.; Rabbani, H. Vessel-based registration of fundus and optical coherence tomography projection images of retina using a quadratic registration model. IET Image Process 2013, 7, 768–776.

- Schlegl, T.; Waldstein, S.; Vogl, W.D.; Schmidt Erfurth, U.; Langs, G. Predicting semantic descriptions from medical images with convolutional neural networks. Inf. Process. Med. Imaging 2015, 9123, 733–745.

- Perdomo, O.J.; Arevalo, J.; González, F.A. Combining morphometric features and convolutional networks fusion for glaucoma diagnosis. In Proceedings of the 13th International Symposium on Medical Information Processing and Analysis, San Andres Island, Colombia, 5–7 October 2017.

- Lopes, B.T.; Ramos, I.C.; Salomão, M.Q.; Guerra, F.P.; Schallhorn, S.C.; Schallhorn, J.M.; Vinciguerra, R.; Vinciguerra, P.; Price, F.W., Jr.; Price, M.O.; et al. Enhanced tomographic assessment to detect corneal ectasia based on artificial intelligence. Am. J. Ophthalmol. 2018, 195, 223–232.

- Yoo, T.K.; Ryu, I.H.; Lee, G.; Kim, Y.; Kim, J.K.; Lee, I.S.; Kim, J.S.; Rim, T.H. Adopting machine learning to automatically identify candidate patients for corneal refractive surgery. NPJ Digit. Med. 2019, 2, 59.

- Lin, S.R.; Ladas, J.G.; Bahadur, G.G.; Al-Hashimi, S.; Pineda, R. A review of machine learning techniques for keratoconus detection and refractive surgery screening. Semin. Ophthalmol. 2019, 34, 317–326.

- Kamiya, K.; Ayatsuka, Y.; Kato, Y.; Fujimura, F.; Takahashi, M.; Shoji, N.; Mori, Y.; Miyata, K. Keratoconus detection using deep learning of colour-coded maps with anterior segment optical coherence tomography: A diagnostic accuracy study. BMJ Open 2019, 9, e031313.

- Valdés-Mas, M.A.; Martín-Guerrero, J.D.; Rupérez, M.J.; Pastor, F.; Dualde, C.; Monserrat, C.; Peris-Martínez, C. A new approach based on machine learning for predicting corneal curvature (K1) and astigmatism in patients with keratoconus after intracorneal ring implantation. Comput. Methods Programs Biomed. 2014, 116, 39–47.

- Yousefi, S.; Takahashi, H.; Hayashi, T.; Tampo, H.; Inoda, S.; Arai, Y.; Tabuchi, H.; Asbell, P. Predicting the likelihood of need for future keratoplasty intervention using artificial intelligence. Ocul. Surf. 2020, 18, 320–325.

- Yau, J.W.; Rogers, S.L.; Kawasaki, R.; Lamoureux, E.L.; Kowalski, J.W.; Bek, T.; Chen, S.J.; Dekker, J.M.; Fletcher, A.; Grauslund, J.; et al. Global prevalence and major risk factors of diabetic retinopathy. Diabetes Care 2012, 35, 556–564.

- ElTanboly, A.; Ismail, M.; Shalaby, A.; Switala, A.; El-Baz, A.; Schaal, S.; Gimel’farb, G.; El-Azab, M. A computer-aided diagnostic system for detecting diabetic retinopathy in optical coherence tomography images. Med. Phys. 2017, 44, 914–923.

- Faes, L.; Bodmer, N.S.; Locher, S.; Keane, P.A.; Balaskas, K.; Bachmann, L.M.; Schlingemann, R.O.; Schmid, M.K. Test performance of optical coherence tomography angiography in detecting retinal diseases: A systematic review and meta-analysis. Eye 2019, 33, 1327–1338.

- Food and Drug Administration. FDA Permits Marketing of Artificial Intelligence-Based Device to Detect Certain Diabetes-Related Eye Problems. 2018. Available online: https://www.fda.gov/news-events/press-announcements/fda-permits-marketing-artificial-intelligence-based-device-detect-certain-diabetes-related-eye (accessed on 6 November 2024).

- Zhang, H.; Chen, Z.; Chi, Z.; Fu, H. Hierarchical local binary pattern for branch retinal vein occlusion recognition with fluorescein angiography images. Electron. Lett. 2014, 50, 1902–1904.

- Nagasato, D.; Tabuchi, H.; Masumoto, H.; Enno, H.; Ishitobi, N.; Kameoka, M.; Niki, M.; Mitamura, Y. Automated detection of a nonperfusion area caused by retinal vein occlusion in optical coherence tomography angiography images using deep learning. PLoS ONE 2019, 14, e0223965.

- Waldstein, S.M.; Montuoro, A.; Podkowinski, D.; Philip, A.M.; Gerendas, B.S.; Bogunovic, H.; Schmidt-Erfurth, U. Evaluating the impact of vitreomacular adhesion on anti-VEGF therapy for retinal vein occlusion using machine learning. Sci. Rep. 2017, 7, 2928.

- Brown, J.M.; Campbell, J.P.; Beers, A.; Chang, K.; Ostmo, S.; Chan, R.V.P.; Dy, J.; Erdogmus, D.; Ioannidis, S.; Kalpathy-Cramer, J.; et al. Automated diagnosis of plus disease in retinopathy of prematurity using deep convolutional neural networks. JAMA Ophthalmol. 2018, 136, 803–810.

- Lee, C.S.; Baughman, D.M.; Lee, A.Y. Deep learning is effective for classifying normal versus age-related macular degeneration OCT images. Ophthalmol. Retin. 2017, 1, 322–327.

- Burlina, P.M.; Joshi, N.; Pacheco, K.D.; Freund, D.E.; Kong, J.; Bressler, N.M. Use of deep learning for detailed severity characterization and estimation of 5-year risk among patients with age-related macular degeneration. JAMA Ophthalmol. 2018, 136, 1359–1366.

- Grassmann, F.; Mengelkamp, J.; Brandl, C.; Harsch, S.; Zimmermann, M.E.; Linkohr, B.; Peters, A.; Heid, I.M.; Palm, C.; Weber, B.H.F. A deep learning algorithm for prediction of age-related eye disease study severity scale for age-related macular degeneration from color fundus photography. Ophthalmology 2018, 125, 1410–1420.

- Peng, Y.; Dharssi, S.; Chen, Q.; Keenan, T.D.; Agrón, E.; Wong, W.T.; Chew, E.Y.; Lu, Z. DeepSeeNet: A deep learning model for automated classification of patient-based age-related macular degeneration severity from color fundus photographs. Ophthalmology 2019, 126, 565–575.

- Stuart, A. Harnessing AI for Glaucoma. Eyenet Magazine, 1 May 2023; 40–45.

- Salam, A.A.; Khalil, T.; Akram, M.U.; Jameel, A.; Basit, I. Automated detection of glaucoma using structural and nonstructural features. Springerplus 2016, 5, 1519.

- Li, Z.; He, Y.; Keel, S.; Meng, W.; Chang, R.T.; He, M. Efficacy of a deep learning system for detecting glaucomatous optic neuropathy based on color fundus photographs. Ophthalmology 2018, 125, 1199–1206.

- Ting, D.S.W.; Cheung, C.Y.; Lim, G.; Tan, G.S.W.; Quang, N.D.; Gan, A.; Hamzah, H.; Garcia-Franco, R.; San Yeo, I.Y.; Lee, S.Y.; et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA 2017, 318, 2211–2223.

- Ohsugi, H.; Tabuchi, H.; Enno, H.; Ishitobi, N. Accuracy of deep learning, a machine-learning technology, using ultra-wide-field fundus ophthalmoscopy for detecting rhegmatogenous retinal detachment. Sci. Rep. 2017, 7, 9425.

- Damato, B.; Eleuteri, A.; Fisher, A.C.; Coupland, S.E.; Taktak, A.F. Artificial neural networks estimating survival probability after treatment of choroidal melanoma. Ophthalmology 2008, 115, 1598–1607.

- Nguyen, H.G.; Pica, A.; Hrbacek, J.; Weber, D.C.; La Rosa, F.; Schalenbourg, A.; Sznitman, R.; Bach Cuadra, M. A novel segmentation framework for uveal melanoma based on magnetic resonance imaging and class activation maps. In Proceedings of the 2nd International Conference on Medical Imaging with Deep Learning, London, UK, 8–10 July 2019; Volume 102, pp. 370–379.

- Sun, M.; Zhou, W.; Qi, X.; Zhang, G.; Girnita, L.; Seregard, S.; Grossniklaus, H.E.; Yao, Z.; Zhou, X.; Stålhammar, G. Prediction of BAP1 expression in uveal melanoma using densely-connected deep classification networks. Cancers 2019, 11, 1579.

- Reid, J.E.; Eaton, E. Artificial intelligence for pediatric ophthalmology. Curr. Opin. Ophthalmol. 2019, 30, 337–346.

- Ma, M.K.I.; Saha, C.; Poon, S.H.L.; Yiu, R.S.W.; Shih, K.C.; Chan, Y.K. Virtual reality and augmented reality – Emerging screening and diagnostic techniques in ophthalmology: A systematic review. Surv. Ophthalmol. 2022, 67, 1516–1530.

- Mohammadi, S.-F.; Sabbaghi, M.; Z-Mehrjardi, H.; Hashemi, H.; Alizadeh, S.; Majdi, M.; Taee, F. Using artificial intelligence to predict the risk for posterior capsule opacification after phacoemulsification. J. Cataract. Refract. Surg. 2012, 38, 403–408.

- Li, H.; Lim, J.H.; Liu, J.; Wong, D.W.; Tan, N.M.; Lu, S.; Zhang, Z.; Wong, T.Y. An automatic diagnosis system of nuclear cataract using slit-lamp images. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009; pp. 3693–3696.

- Xu, Y.; Gao, X.; Lin, S.; Wong, D.W.K.; Liu, J.; Xu, D.; Cheng, C.Y.; Cheung, C.Y.; Wong, T.Y. Automatic Grading of Nuclear Cataracts from Slit-Lamp Lens Images Using Group Sparsity Regression; Springer: Berlin/Heidelberg, Germany, 2013.

- Gao, X.; Lin, S.; Wong, T.Y. Automatic feature learning to grade nuclear cataracts based on deep learning. IEEE Trans. Biomed. Eng. 2015, 62, 2693–2701.

- Wu, X.; Huang, Y.; Liu, Z.; Lai, W.; Long, E.; Zhang, K.; Jiang, J.; Lin, D.; Chen, K.; Yu, T.; et al. Universal artificial intelligence platform for collaborative management of cataracts. Br. J. Ophthalmol. 2019, 103, 1553–1560.

- Xiao Lian, J.; Gangwani, R.A.; McGhee, S.M.; Chan, C.K.W.; Lam, C.L.K.; Wong, D.S.H. Systematic screening for diabetic retinopathy (DR) in Hong Kong: Prevalence of DR and visual impairment among diabetic population. Br. J. Ophthalmol. 2016, 100, 151–155.

- Dong, Y.; Zhang, Q.; Qiao, Z.; Yang, J.-J. Classification of Cataract Fundus Image Based on Deep Learning. In Proceedings of the 2017 IEEE International Conference on Imaging Systems and Techniques (IST), Beijing, China, 18–20 October 2017.

- Pratap, T.; Kokil, P. Computer-aided diagnosis of cataract using deep transfer learning. Biomed. Signal Process. Control 2019, 53, 101533.

- Hee, M.R. State-of-the-art of intraocular lens power formulas. JAMA Ophthalmol. 2015, 133, 1436–1437.

- Melles, R.B.; Kane, J.X.; Olsen, T.; Chang, W.J. Update on intraocular lens calculation formulas. Ophthalmology 2019, 126, 1334–1335.

- Nemeth, G.; Modis, L., Jr. Accuracy of the Hill-radial basis function method and the Barrett Universal II formula. Eur. J. Ophthalmol. 2020, 31, 566–571.

- Long, E.; Chen, J.; Wu, X.; Liu, Z.; Wang, L.; Jiang, J.; Li, W.; Zhu, Y.; Chen, C.; Lin, Z.; et al. Artificial intelligence manages congenital cataract with individualized prediction and telehealth computing. NPJ Digit. Med. 2020, 3, 112.

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016, 316, 2402–2410.

- Devalla, S.K.; Liang, Z.; Pham, T.H.; Boote, C.; Strouthidis, N.G.; Thiery, A.H.; Girard, M.J.A. Glaucoma management in the era of artificial intelligence. Br. J. Ophthalmol. 2020, 104, 301–311.

- Liu, S.; Graham, S.L.; Schulz, A.; Kalloniatis, M.; Zangerl, B.; Cai, W.; Gao, Y.; Chua, B.; Arvind, H.; Grigg, J.; et al. A deep learning-based algorithm identifies glaucomatous discs using monoscopic fundus photographs. Ophthalmol. Glaucoma 2018, 1, 15–22.

- Fu, H.; Cheng, J.; Xu, Y.; Wong, D.W.K.; Liu, J.; Cao, X. Joint optic disc and cup segmentation based on multi-label deep network and polar transformation. IEEE Trans. Med. Imaging 2018, 37, 1597–1605.

- Alawad, M.; Aljouie, A.; Alamri, S.; Alghamdi, M.; Alabdulkader, B.; Alkanhal, N.; Almazroa, A. Machine Learning and Deep Learning Techniques for Optic Disc and Cup Segmentation—A Review. Clin. Ophthalmol. 2022, 16, 747–764.

- Orlando, J.I.; Fu, H.; Breda, J.B.; van Keer, K.; Bathula, D.R.; Diaz-Pinto, A.; Fang, R.; Heng, P.-A.; Kim, J.; Lee, J.; et al. REFUGE challenge: A unified framework for evaluating automated methods for glaucoma assessment from fundus photographs. Med. Image Anal. 2020, 59, 101570.

- Elze, T.; Pasquale, L.R.; Shen, L.Q.; Chen, T.C.; Wiggs, J.L.; Bex, P.J. Patterns of functional vision loss in glaucoma determined with archetypal analysis. J. R. Soc. Interface 2015, 12, 20141118.

- Mayro, E.L.; Wang, M.; Elze, T.; Pasquale, L.R. The impact of artificial intelligence in the diagnosis and management of glaucoma. Eye 2020, 34, 1–11.

- Abràmoff, M.D.; Lavin, P.T.; Birch, M.; Shah, N.; Folk, J.C. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digit. Med. 2018, 1, 39.

- Medeiros, F.A.; Jammal, A.A.; Thompson, A.C. From machine to machine: An OCT-trained deep learning algorithm for objective quantification of glaucomatous damage in fundus photographs. Ophthalmology 2019, 126, 513–521.

- Goldbaum, M.H.; Sample, P.A.; White, H.; Côlt, B.; Raphaelian, P.; Fechtner, R.D.; Weinreb, R.N. Interpretation of automated perimetry for glaucoma by neural network. Investig. Ophthalmol. Vis. Sci. 1994, 35, 3362–3373.

- Brigatti, L.; Hoffman, D.; Caprioli, J. Neural networks to identify glaucoma with structural and functional measurements. Am. J. Ophthalmol. 1996, 121, 511–521.

- Chen, X.; Xu, Y.; Yan, S.; Wong, D.W.K.; Wong, T.Y.; Liu, J. Automatic feature learning for glaucoma detection based on deep learning. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015—18th International Conference, Munich, Germany, 5–9 October 2015; pp. 669–677.

- Asaoka, R.; Murata, H.; Iwase, A.; Araie, M. Detecting preperimetric glaucoma with standard automated perimetry using a deep learning classifier. Ophthalmology 2016, 123, 1974–1980.

- Li, F.; Wang, Z.; Qu, G.; Song, D.; Yuan, Y.; Xu, Y.; Gao, K.; Luo, G.; Xiao, Z.; Lam, D.S.C.; et al. Automatic differentiation of Glaucoma visual field from non-glaucoma visual filed using deep convolutional neural network. BMC Med. Imaging 2018, 18, 35.

- Shibata, N.; Tanito, M.; Mitsuhashi, K.; Fujino, Y.; Matsuura, M.; Murata, H.; Asaoka, R. Development of a deep residual learning algorithm to screen for glaucoma from fundus photography. Sci. Rep. 2018, 8, 14665.

- Christopher, M.; Belghith, A.; Bowd, C.; Proudfoot, J.A.; Goldbaum, M.H.; Weinreb, R.N.; Girkin, C.A.; Liebmann, J.M.; Zangwill, L.M. Performance of deep learning architectures and transfer learning for detecting glaucomatous optic neuropathy in fundus photographs. Sci. Rep. 2018, 8, 16685.

- Liu, H.; Li, L.; Wormstone, I.M.; Qiao, C.; Zhang, C.; Liu, P.; Li, S.; Wang, H.; Mou, D.; Pang, R.; et al. Development and validation of a deep learning system to detect glaucomatous optic neuropathy using fundus photographs. JAMA Ophthalmol. 2019, 137, 1353–1360.

- Asaoka, R.; Murata, H.; Hirasawa, K.; Fujino, Y.; Matsuura, M.; Miki, A.; Kanamoto, T.; Ikeda, Y.; Mori, K.; Iwase, A.; et al. Using deep learning and transfer learning to accurately diagnose early-onset glaucoma from macular optical coherence tomography images. Am. J. Ophthalmol. 2019, 198, 136–145.

- Fu, H.; Baskaran, M.; Xu, Y.; Lin, S.; Wong, D.W.K.; Liu, J.; Tun, T.A.; Mahesh, M.; Perera, S.A.; Aung, T. A deep learning system for automated angle-closure detection in anterior segment optical coherence tomography images. Am. J. Ophthalmol. 2019, 203, 37–45.

- Normando, E.M.; Yap, T.E.; Maddison, J.; Miodragovic, S.; Bonetti, P.; Almonte, M.; Mohammad, N.G.; Ameen, S.; Crawley, L.; Ahmed, F.; et al. A CNN-aided method to predict glaucoma progression using DARC (Detection of Apoptosing Retinal Cells). Expert Rev. Mol. Diagn. 2020, 20, 737–748.

- Thakur, A.; Goldbaum, M.; Yousefi, S. Predicting glaucoma before onset using deep learning. Ophthalmol. Glaucoma 2020, 3, 262–268.

- Weinreb, R.N.; Garway-Heath, D.F.; Leung, C.; Medeiros, F.A.; Liebmann, J. (Eds.) 10th Consensus Meeting: Diagnosis of Primary Open Angle Glaucoma; Kugler Publications: Amsterdam, The Netherlands, 2016.

- Keel, S.; Wu, J.; Lee, P.Y.; Scheetz, J.; He, M. Visualizing deep learning models for the detection of referable diabetic retinopathy and glaucoma. JAMA Ophthalmol. 2019, 137, 288–292.

- Khawaja, A.P.; Cooke Bailey, J.N.; Wareham, N.J.; Scott, R.A.; Simcoe, M.; Igo, R.P., Jr.; Song, Y.E.; Wojciechowski, R.; Cheng, C.Y.; Khaw, P.T.; et al. Genome-wide analyses identify 68 new loci associated with intraocular pressure and improve risk prediction for primary open-angle glaucoma. Nat. Genet. 2018, 50, 778–782.

- Margeta, M.A.; Letcher, S.M.; Igo, R.P.; for the NEIGHBORHOOD Consortium. Association of APOE with primary open-angle glaucoma suggests a protective effect for APOE ε4. Investig. Ophthalmol. Vis. Sci. 2020, 61, 3.

- Raman, R.; Srinivasan, S.; Virmani, S.; Sivaprasad, S.; Rao, C.; Rajalakshmi, R. Fundus photograph-based deep learning algorithms in detecting diabetic retinopathy. Eye 2019, 33, 97–109.

- Venhuizen, F.G.; van Ginneken, B.; Liefers, B.; van Asten, F.; Schreur, V.; Fauser, S.; Hoyng, C.; Theelen, T.; Sánchez, C.I. Deep learning approach for the detection and quantification of intraretinal cystoid fluid in multivendor optical coherence tomography. Biomed. Opt. Express 2018, 9, 1545–1569.

- Ting, D.S.W.; Pasquale, L.R.; Peng, L.; Campbell, J.P.; Lee, A.Y.; Raman, R.; Tan, G.S.W.; Schmetterer, L.; Keane, P.A.; Wong, T.Y. Artificial intelligence and deep learning in ophthalmology. Br. J. Ophthalmol. 2019, 103, 167–175.

- Abràmoff, M.D.; Lou, Y.; Erginay, A.; Clarida, W.; Amelon, R.; Folk, J.C.; Niemeijer, M. Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Investig. Ophthalmol. Vis. Sci. 2016, 57, 5200–5206.

- Hogarty, D.T.; Mackey, D.A.; Hewitt, A.W. Current state and future prospects of artificial intelligence in ophthalmology: A review. Clin. Exp. Ophthalmol. 2019, 47, 128–139.

- Liu, T.A.; Ting, D.S.; Paul, H.Y.; Wei, J.; Zhu, H.; Subramanian, P.S.; Li, T.; Hui, F.K.; Hager, G.D.; Miller, N.R. Deep learning and transfer learning for optic disc laterality detection: Implications for machine learning in neuro-ophthalmology. J. Neuroophthalmol. 2020, 40, 178–184.

- Milea, D.; Singhal, S.; Najjar, R.P. Artificial intelligence for detection of optic disc abnormalities. Curr. Opin. Neurol. 2020, 33, 106–110.

- Akbar, S.; Akram, M.U.; Sharif, M.; Tariq, A.; Yasin, U.U. Decision support system for detection of papilledema through fundus retinal images. J. Med. Syst. 2017, 41, 66.

- Prashanth, R.; Dutta Roy, S.; Mandal, P.K.; Ghosh, S. High-accuracy detection of early Parkinson’s disease through multimodal features and machine learning. Int. J. Med. Inform. 2016, 90, 13–21.

- Nam, U.; Lee, K.; Ko, H.; Lee, J.-Y.; Lee, E.C. Analyzing facial and eye movements to screen for Alzheimer’s disease. Sensors 2020, 20, 5349.

- Miranda, Â.; Lavrador, R.; Júlio, F.; Januário, C.; Castelo-Branco, M.; Caetano, G. Classification of Huntington’s disease stage with support vector machines: A study on oculomotor performance. Behav. Res. 2016, 48, 1667–1677.

- Mao, Y.; He, Y.; Liu, L.; Chen, X. Disease classification based on eye movement features with decision tree and random forest. Front. Neurosci. 2020, 14, 798.

- Zhu, G.; Jiang, B.; Tong, L.; Xie, Y.; Zaharchuk, G.; Wintermark, M. Applications of deep learning to neuro-imaging techniques. Front. Neurol. 2019, 10, 869.

- Viikki, K.; Isotalo, E.; Juhola, M.; Pyykkö, I. Using decision tree induction to model oculomotor data. Scand. Audiol. 2001, 30, 103–105.

- D’Addio, G.; Ricciardi, C.; Improta, G.; Bifulco, P.; Cesarelli, M. Feasibility of Machine Learning in Predicting Features Related to Congenital Nystagmus. In XV Mediterranean Conference on Medical and Biological Engineering and Computing—MEDICON 2019; Henriques, J., Neves, N., de Carvalho, P., Eds.; Springer: Cham, Switzerland, 2020; pp. 907–913.

- Fisher, A.C.; Chandna, A.; Cunningham, I.P. The differential diagnosis of vertical strabismus from prism cover test data using an artificially intelligent expert system. Med. Biol. Eng. Comput. 2007, 45, 689–693.

- Chen, Z.; Fu, H.; Lo, W.-L.; Chi, Z. Strabismus recognition using eye-tracking data and convolutional neural networks. J. Healthc. Eng. 2018, 2018, e7692198.

- Lu, J.; Fan, Z.; Zheng, C.; Feng, J.; Huang, L.; Li, W.; Goodman, E.D. Automated Strabismus Detection for Telemedicine Applications. arXiv 2018, arXiv:1809.02940.

- Chandna, A.; Fisher, A.C.; Cunningham, I.; Stone, D.; Mitchell, M. Pattern recognition of vertical strabismus using an artificial neural network (StrabNet©). Strabismus 2009, 17, 131–138.

- Bloem, B.R.; Dorsey, E.R.; Okun, M.S. The coronavirus disease 2019 crisis as catalyst for telemedicine for chronic neurological disorders. JAMA Neurol. 2020, 77, 927–928.

- Ko, M.W.; Busis, N.A. Tele-neuro-ophthalmology: Vision for 20/20 and beyond. J. Neuroophthalmol. 2020, 40, 378–384.

- Kann, B.H.; Aneja, S.; Loganadane, G.V.; Kelly, J.R.; Smith, S.M.; Decker, R.H.; Yu, J.B.; Park, H.S.; Yarbrough, W.G.; Malhotra, A.; et al. Pretreatment identification of head and neck Cancer nodal metastasis and Extranodal extension using deep learning neural networks. Sci. Rep. 2018, 8, 14036.

- Coudray, N.; Ocampo, P.S.; Sakellaropoulos, T.; Narula, N.; Snuderl, M.; Fenyö, D.; Moreira, A.L.; Razavian, N.; Tsirigos, A. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. Nat. Med. 2018, 24, 1559–1567.

- Kann, B.H.; Thompson, R.; Thomas, C.R., Jr.; Dicker, A.; Aneja, S. Artificial intelligence in oncology: Current applications and future directions. Oncology 2019, 33, 46–53.

- Munson, M.C.; Plewman, D.L.; Baumer, K.M.; Henning, R.; Zahler, C.T.; Kietzman, A.T.; Beard, A.A.; Mukai, S.; Diller, L.; Hamerly, G.; et al. Autonomous early detection of eye disease in childhood photographs. Sci. Adv. 2019, 5, eaax6363.

- Akkara, J.D.; Kuriakose, A. Role of artificial intelligence and machine learning in ophthalmology. Kerala J. Ophthalmol. 2019, 31, 150–160.

- Fujinami-Yokokawa, Y.; Pontikos, N.; Yang, L.; Tsunoda, K.; Yoshitake, K.; Iwata, T.; Miyata, H.; Fujinami, K.; Japan Eye Genetics Consortium OBO. Prediction of causative genes in inherited retinal disorders from spectral-domain optical coherence tomography utilizing deep learning techniques. J. Ophthalmol. 2019, 2019, 1691064.

- Medsinge, A.; Nischal, K.K. Pediatric cataract: Challenges and future directions. Clin. Ophthalmol. 2015, 9, 77–90.

- Sarao, V.; Veritti, D.; De Nardin, A.; Misciagna, M.; Foresti, G.; Lanzetta, P. Explainable artificial intelligence model for the detection of geographic atrophy using colour retinal photographs. BMJ Open Ophthalmol. 2023, 8, e001411.

- Van Eenwyk, J.; Agah, A.; Giangiacomo, J.; Cibis, G. Artificial intelligence techniques for automatic screening of amblyogenic factors. Trans. Am. Ophthalmol. Soc. 2008, 106, 64–73.

- de Figueiredo, L.A.; Debert, I.; Dias, J.V.P.; Polati, M. An artificial intelligence app for strabismus. Investig. Ophthalmol. Vis. Sci. 2020, 61, 2129.

- Ting, D.S.W.; Wong, T.Y. Eyeing cardiovascular risk factors. Nat. Biomed. Eng. 2018, 2, 140–141.

- Cheung, C.Y.; Tay, W.T.; Ikram, M.K.; Ong, Y.T.; De Silva, D.A.; Chow, K.Y.; Wong, T.Y. Retinal microvascular changes and risk of stroke: The Singapore Malay eye study. Stroke 2013, 44, 2402–2408.

- Pérez Del Palomar, A.; Cegoñino, J.; Montolío, A.; Orduna, E.; Vilades, E.; Sebastián, B.; Pablo, L.E.; Garcia-Martin, E. Swept source optical coherence tomography to early detect multiple sclerosis disease. The use of machine learning techniques. PLoS ONE 2019, 14, e0216410.

- Cavaliere, C.; Vilades, E.; Alonso-Rodríguez, M.C.; Rodrigo, M.J.; Pablo, L.E.; Miguel, J.M.; López-Guillén, E.; Morla, E.M.S.; Boquete, L.; Garcia-Martin, E. Computer-aided diagnosis of multiple sclerosis using a support vector machine and optical coherence tomography features. Sensors 2019, 19, 5323.

- He, Y.; Carass, A.; Liu, Y.; Jedynak, B.M.; Solomon, S.D.; Saidha, S.; Calabresi, P.A.; Prince, J.L. Deep learning-based topology guaranteed surface and MME segmentation of multiple sclerosis subjects from retinal OCT. Biomed. Opt. Express 2019, 10, 5042–5058.

- Lee, C.S.; Apte, R.S. Retinal biomarkers of Alzheimer’s disease. Am. J. Ophthalmol. 2020, 218, 337–341.

- Nunes, A.; Silva, G.; Duque, C.; Januário, C.; Santana, I.; Ambrósio, A.F.; Castelo-Branco, M.; Bernardes, R. Retinal texture biomarkers may help to discriminate between Alzheimer’s, Parkinson’s, and healthy controls. PLoS ONE 2019, 14, e0218826.

- Cai, J.H.; He, Y.; Zhong, X.L.; Lei, H.; Wang, F.; Luo, G.H.; Zhao, H.; Liu, J.C. Magnetic resonance texture analysis in Alzheimer’s disease. Acad. Radiol. 2020, 27, 1774–1783.

- Sharafi, S.M.; Sylvestre, J.P.; Chevrefils, C.; Soucy, J.P.; Beaulieu, S.; Pascoal, T.A.; Arbour, J.D.; Rhéaume, M.A.; Robillard, A.; Chayer, C.; et al. Vascular retinal biomarkers improve the detection of the likely cerebral amyloid status from hyperspectral retinal images. Alzheimers Dement. 2019, 5, 610–617.

- Available online: https://www.prnewswire.com/news-releases/optina-diagnostics-receives-breakthrough-device-designation-from-us-fda-for-a-retinal-imaging-platform-to-aid-in-the-diagnosis-of-alzheimers-disease-300846450.html (accessed on 7 November 2024).

- Available online: https://www.fda.gov/medical-devices/how-study-and-market-your-device/breakthrough-devices-program (accessed on 7 November 2024).

- ASCRS. Guide to Teleophthalmology. Available online: https://ascrs.org/advocacy/regulatory/telemedicine (accessed on 7 November 2024).

- Kapoor, R.; Walters, S.P.; Al-Aswad, L.A. The current state of artificial intelligence in ophthalmology. Surv. Ophthalmol. 2019, 64, 233–240.

- Heinke, A.; Radgoudarzi, N.; Huang, B.B.; Baxter, S.L. A review of ophthalmology education in the era of generative artificial intelligence. Asia-Pac. J. Ophthalmol. 2024, 13, 100089.

- Luxton, D.D. Recommendations for the ethical use and design of artificial intelligent care providers. Artif. Intell. Med. 2014, 62, 1–10.

- Johnson, S.L.J. AI, machine learning, and ethics in health care. J. Leg. Med. 2019, 39, 427–441.

- Tom, E.; Keane, P.A.; Blazes, M.; Pasquale, L.R.; Chiang, M.F.; Lee, A.Y.; Lee, C.S. AAO Artificial Intelligence Task Force. Protecting Data Privacy in the Age of AI-Enabled Ophthalmology. Transl. Vis. Sci. Technol. 2020, 9, 36.

| Year | Author | Data Source | Method | Definition of Gold Standard/Ground Truth | Performance |
|---|---|---|---|---|---|
| 2009 | Li et al. [62] | Singapore Malay eye study (SiMES), 10,000 training, 5490 testing | Modified ASM: lens structure detection; HSV model: feature extraction; SVM: automatic grading | Wisconsin cataract grading system | Accuracy: 95%; MAE (grading): 0.36 |
| 2013 | Xu et al. [63] | ACHIKO-NC (subset of SiMES), 10,000 training, 5278 testing | Modified ASM: lens structure detection; BOF model: feature extraction; GSR: automatic grading | Wisconsin cataract grading system | MAE: 0.336 |
| 2015 | Gao et al. [64] | ACHIKO-NC (subset of SiMES), 10,000 training, 5278 testing | CRNN: feature learning; SVM regression: automatic grading | Wisconsin cataract grading system | MAE: 0.304 |
| 2019 | Wu X. et al. [65] | Chinese Medical Alliance for Artificial Intelligence (CMAAI), 30,132 training, 7506 testing | ResNet | LOCS II | Capture mode recognition AUC = 99.36% a. AUC = 99.28% b. AUC = 99.68% c. AUC = 99.71% Cataract diagnosis Cataract AUC = 99.93% a. AUC = 99.96% b. AUC = 99.19% c. AUC = 99.38%d Post-operative eye AUC = 99.93% a. AUC = 99.93% b. AUC = 98.99% c. AUC = 99.74% Detection of referable cataracts a. Adult cataract: AUC = 94.88% b. Pediatric cataract with VA involvement: AUC = 100% c. PCO with VA involvement: AUC = 91.90% |

| Year | Author | Data Source | Method | Definition of Gold Standard/Ground Truth | Performance |
|---|---|---|---|---|---|
| 2017 | Dong et al. [67] | 5495 training, 2355 testing | Caffe: feature extraction; SoftMax: detection and grading | Labelled fundus images by ophthalmologists | Accuracy a = 94.07% b; Accuracy a = 90.82% c |
| 2017 | Zhang et al. | Beijing Tongren Eye Centre’s clinical database, 4004 training, 1606 testing | DCNN: detection and grading | Labelled fundus images by graders | AUC = 0.935 b; AUC = 0.867 c |
| 2018 | Ran et al. | Not described | DCNN: feature extraction; Random forest: detection and grading | Labelled fundus images by ophthalmologists, crosschecked by graders | AUC = 0.970 b; Sensitivity = 97.26% b; Specificity = 96.92% b |
| 2018 | Li et al. [53] | Beijing Tongren Eye Centre’s clinical database, 7030 training, 1000 testing | ResNet 18: detection; ResNet 50: grading | Labelled fundus images by graders | AUC = 0.972 b; AUC = 0.877 c |
| 2019 | Pratap and Kokil [68] | Multiple online databases, 400 training, 400 testing | Pre-trained CNN: feature extraction; SVM: detection and grading | Labelled fundus images by ophthalmologists | Accuracy a = 100% b; Accuracy a = 92.91% c |

| | Kane Formula | Hill-RBF |
|---|---|---|
| Short axial length (≤22.0 mm) | 0.441 | 0.440 |
| Intermediate axial length (>22.0 mm to <26.0 mm) | 0.322 | 0.340 |
| Long axial length (≥26.0 mm) | 0.326 | 0.358 |

| Author | Study Type | Fundus Camera/AI Algorithm | Dataset | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|---|
| Abràmoff et al. [102] (2016) | Retrospective (DR) | Topcon TRC NW6 non-mydriatic fundus camera/IDx-DR X2 | MESSIDOR-2 | 96.8 | 87 |
| Gulshan et al. [73] (2016) | Retrospective (DR) | Topcon TRC NW6 non-mydriatic camera/Inception-V3 | MESSIDOR-2 | 87 | 98.50 |
| Ting et al. [54] (2017) | Retrospective (DR) | FundusVue, Canon, Topcon, and Carl Zeiss/VGG-19 | SiDRP 14–15 | 90.5 | 91.6 |
| | | | Guangdong | 98.7 | 81.6 |
| | | | SIMES | 97.1 | 82.0 |
| | | | SINDI | 99.3 | 73.3 |
| | | | SCES | 100 | 76.3 |
| | | | BES | 94.4 | 88.5 |
| | | | AFEDS | 98.8 | 86.5 |
| | | | RVEEH | 98.9 | 92.2 |
| | | | Mexican | 91.8 | 84.8 |
| | | | CUHK | 99.3 | 83.1 |
| Brown et al. [46] (2018) | Retrospective (ROP) | Inception-V1 and U-Net | AREDS | 100 | 94 |
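Sensitivity and specificity pairs such as those in the table above can be converted into likelihood ratios, which summarize how much a positive or negative result shifts the probability of disease. The following is a minimal Python sketch, assuming the standard definitions LR+ = sensitivity / (1 − specificity) and LR− = (1 − sensitivity) / specificity. The names `LikelihoodRatios` and `likelihood_ratios` are illustrative and do not come from any of the cited studies; the two example rows are the MESSIDOR-2 values listed for Abràmoff et al. and Gulshan et al.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LikelihoodRatios:
    positive: float  # LR+ = sensitivity / (1 - specificity)
    negative: float  # LR- = (1 - sensitivity) / specificity


def likelihood_ratios(sensitivity_pct: float, specificity_pct: float) -> LikelihoodRatios:
    """Convert sensitivity and specificity (both in percent) to likelihood ratios."""
    sens = sensitivity_pct / 100.0
    spec = specificity_pct / 100.0
    if not (0.0 <= sens <= 1.0 and 0.0 <= spec <= 1.0):
        raise ValueError("sensitivity and specificity must lie between 0 and 100 percent")
    # A zero denominator is reported as infinity here (an illustrative convention).
    positive = float("inf") if spec == 1.0 else sens / (1.0 - spec)
    negative = float("inf") if spec == 0.0 else (1.0 - sens) / spec
    return LikelihoodRatios(positive, negative)


# Sensitivity/specificity pairs copied from the table (MESSIDOR-2 rows).
table_rows = {
    "Abràmoff et al. (2016)": (96.8, 87.0),
    "Gulshan et al. (2016)": (87.0, 98.5),
}

for study, (sens_pct, spec_pct) in table_rows.items():
    lr = likelihood_ratios(sens_pct, spec_pct)
    print(f"{study}: LR+ = {lr.positive:.2f}, LR- = {lr.negative:.3f}")
```

A larger LR+ means a positive result is stronger evidence for disease, and an LR− closer to zero means a negative result is stronger evidence against it. The two studies trade these off differently on the same dataset, which a single accuracy figure would hide.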

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Costin, H.-N.; Fira, M.; Goraș, L. Artificial Intelligence in Ophthalmology: Advantages and Limits. Appl. Sci. 2025, 15, 1913. https://doi.org/10.3390/app15041913
