Privacy Illusion: Subliminal Channels in Schnorr-like Blind-Signature Schemes

Abstract

Featured Application

1. Introduction

1.1. Cryptographic Schemes Versus Black-Box Implementations

1.2. Our Contribution

1.3. Impact of the Findings

1.4. Structure of the Article

2. Related Work

3. Methods

3.1. Notation

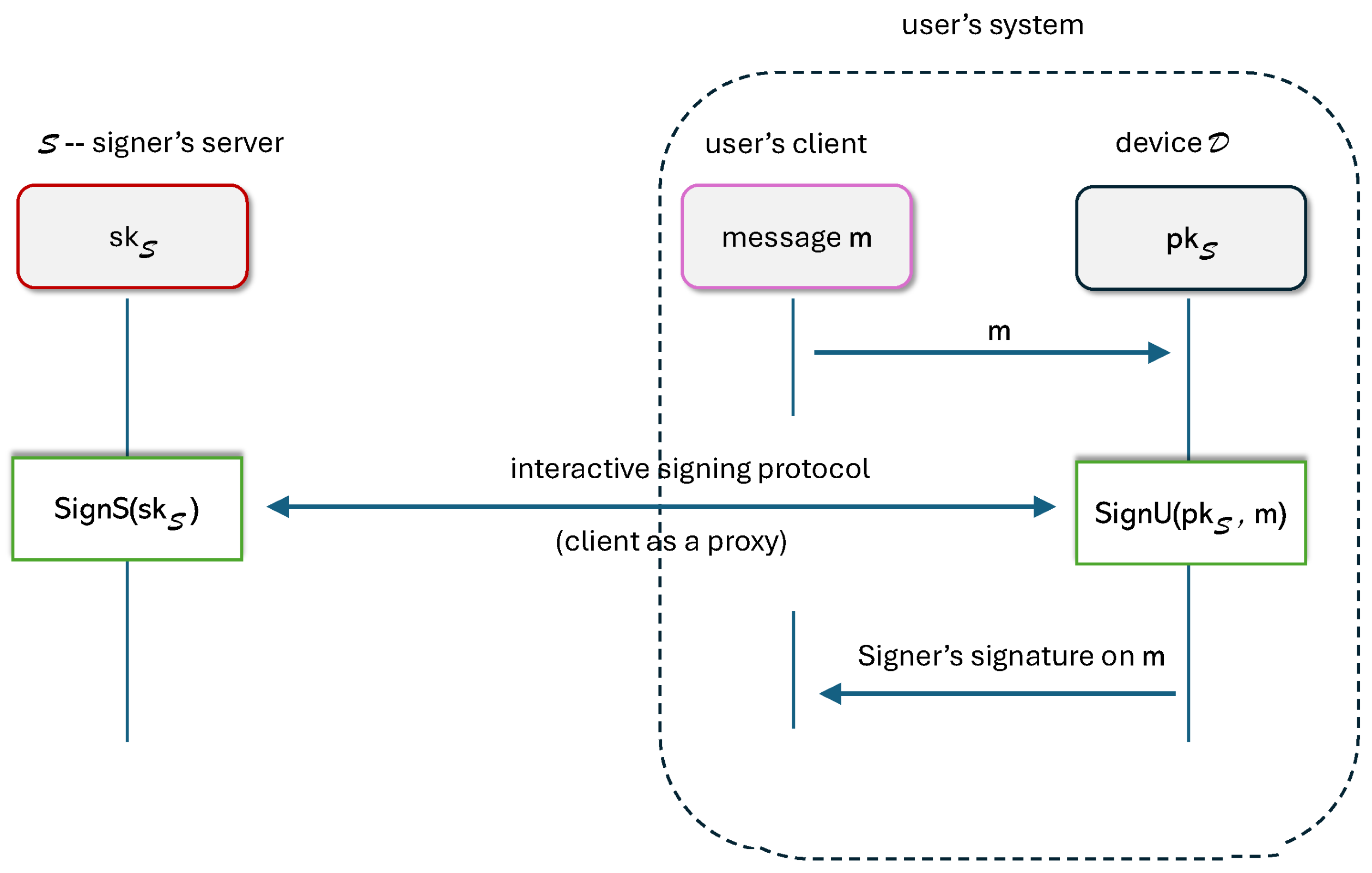

3.2. Blind Signatures

- Signer is a party that holds private signing keys and blindly signs messages at the request of Users;

- User is a party that requests signatures over chosen messages; all cryptographic operations of the scheme are executed by a black-box device of the User (we assume that the device may have a pair of keys used, e.g., for authentication);

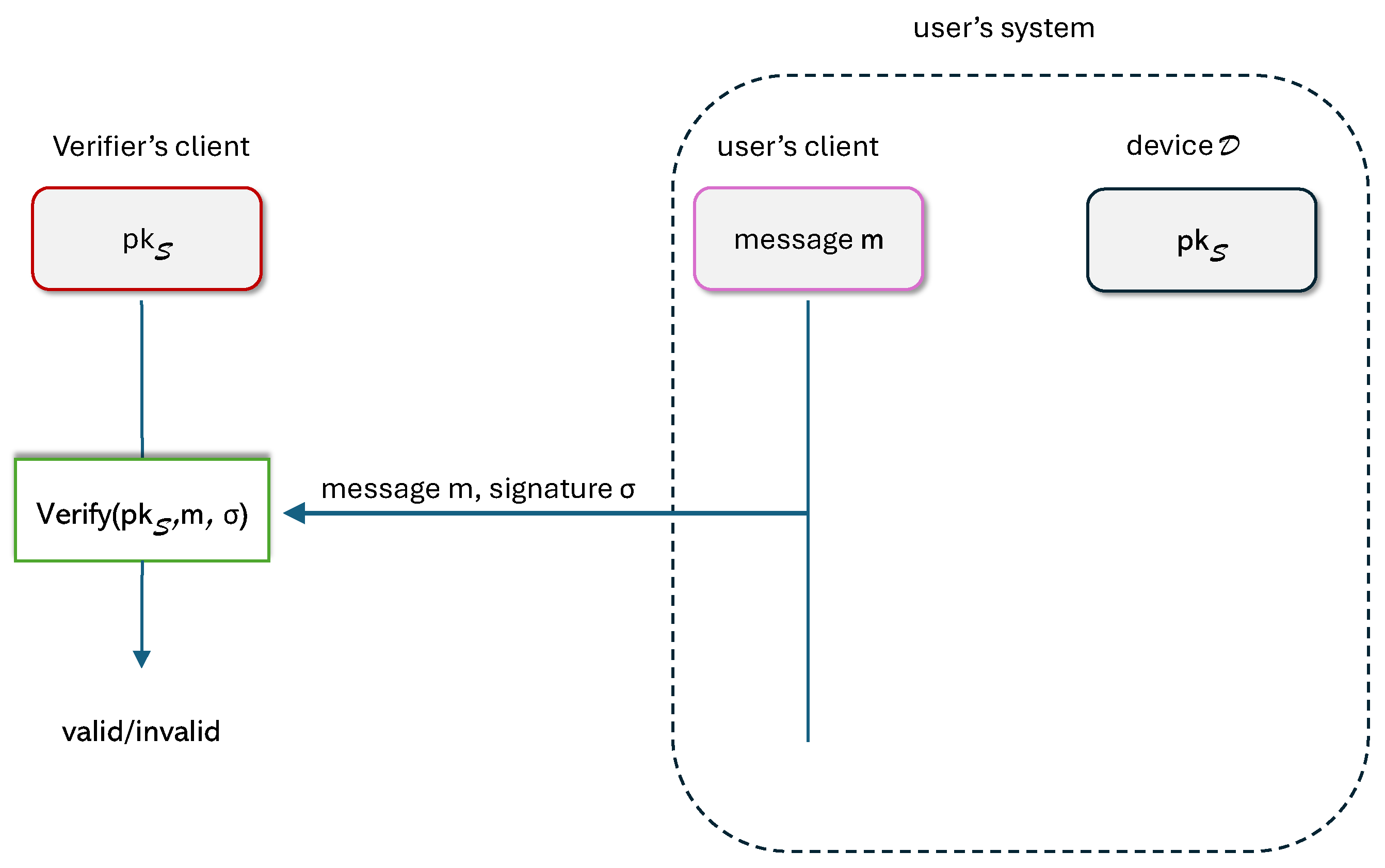

- A Verifier checks the signatures allegedly created by the Signer.

1. The key-generation procedure takes as input the security parameter n and outputs a pair of signing keys for the Signer.

2. The signing procedure is an interactive protocol in which the Signer runs its part and the device runs its part on behalf of a User. For a message from the set of possible messages to be signed, the joint execution outputs a signature σ on the side of the User. A transcript of the interaction (all messages exchanged between the Signer and the device during the joint execution) is visible to all parties (including, in particular, the User).

3. The verification procedure takes as input a public key, a message, and a signature σ. It returns a bit, where 1 stands for ‘the signature is valid’.

- One-more unforgeability: This property captures the unforgeability of signatures: no signature can be created without running the interactive procedure with the Signer. Namely, in the security game, the Adversary can interact with the Signer and receive valid signatures on messages of their choice. The Adversary wins if they can present one more valid signature than the number of signing sessions they completed. The scheme satisfies the one-more unforgeability property if the probability of the Adversary winning the game is negligible.

- Blindness: This property captures the fact that the Signer cannot link the signatures with the sessions executed with the User. It is expressed by the following game: first, the Adversary chooses messages $m_0$, $m_1$, generates signing keys, and sends the public key and the messages to the User. Next, the User chooses a bit b at random. Then, two instances of the blind signing procedure are started (and possibly run concurrently) with the Adversary being the Signer. In the first instance, the User obtains a valid signature of $m_b$ under the Adversary's key; in the second, it obtains a valid signature of $m_{1-b}$ under the same key. The resulting valid unblinded signatures are presented to the Adversary. The Adversary presents a bit $b'$ and wins the game if $b' = b$. The scheme satisfies the blindness property if the probability of the Adversary winning the game is at most $\frac{1}{2} + \epsilon$, where $\epsilon$ is a negligibly small value.
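The three procedures listed above (key generation, interactive signing, verification) can be illustrated with a minimal sketch of the textbook blind Schnorr protocol. This is a hedged illustration and not the article's own pseudocode: the toy group parameters `P`, `Q`, `G`, the hash construction `H`, and all function names are assumptions introduced here, and the group is far too small for any real use.

```python
import hashlib
import secrets

# Toy parameters (assumption): P = 2*Q + 1 with Q prime; G generates the subgroup of order Q.
P, Q, G = 2039, 1019, 4

def H(R: int, m: bytes) -> int:
    """Hash a commitment and a message to a challenge in Z_Q."""
    digest = hashlib.sha256(R.to_bytes(2, "big") + m).digest()
    return int.from_bytes(digest, "big") % Q

def keygen():
    """Key generation: private key x, public key y = G^x."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)

def signer_commit():
    """Signer, step 1: pick ephemeral r and send R = G^r."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)

def user_blind(y: int, R: int, m: bytes):
    """User device: blind R and derive the challenge c sent to the Signer."""
    alpha, beta = secrets.randbelow(Q), secrets.randbelow(Q)
    R_blind = (R * pow(G, alpha, P) * pow(y, beta, P)) % P
    c_blind = H(R_blind, m)
    c = (c_blind + beta) % Q
    return (alpha, R_blind), c

def signer_respond(x: int, r: int, c: int) -> int:
    """Signer, step 2: answer the (blinded) challenge."""
    return (r + c * x) % Q

def user_unblind(state, s: int):
    """User device: turn the Signer's response into the final signature."""
    alpha, R_blind = state
    return R_blind, (s + alpha) % Q

def verify(y: int, m: bytes, sig) -> bool:
    """Verification: check G^s == R * y^H(R, m)."""
    R, s = sig
    return pow(G, s, P) == (R * pow(y, H(R, m), P)) % P

def blind_sign_session(x: int, y: int, m: bytes):
    """Run one complete signing session between Signer and User device."""
    r, R = signer_commit()
    state, c = user_blind(y, R, m)
    s = signer_respond(x, r, c)
    return user_unblind(state, s)
```

In this sketch the Signer sees only `R`, `c`, and `s`, while the published signature consists of the blinded commitment and the shifted response; the values `alpha` and `beta` are exactly the kind of device-chosen randomness that a black-box implementation could choose non-uniformly.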

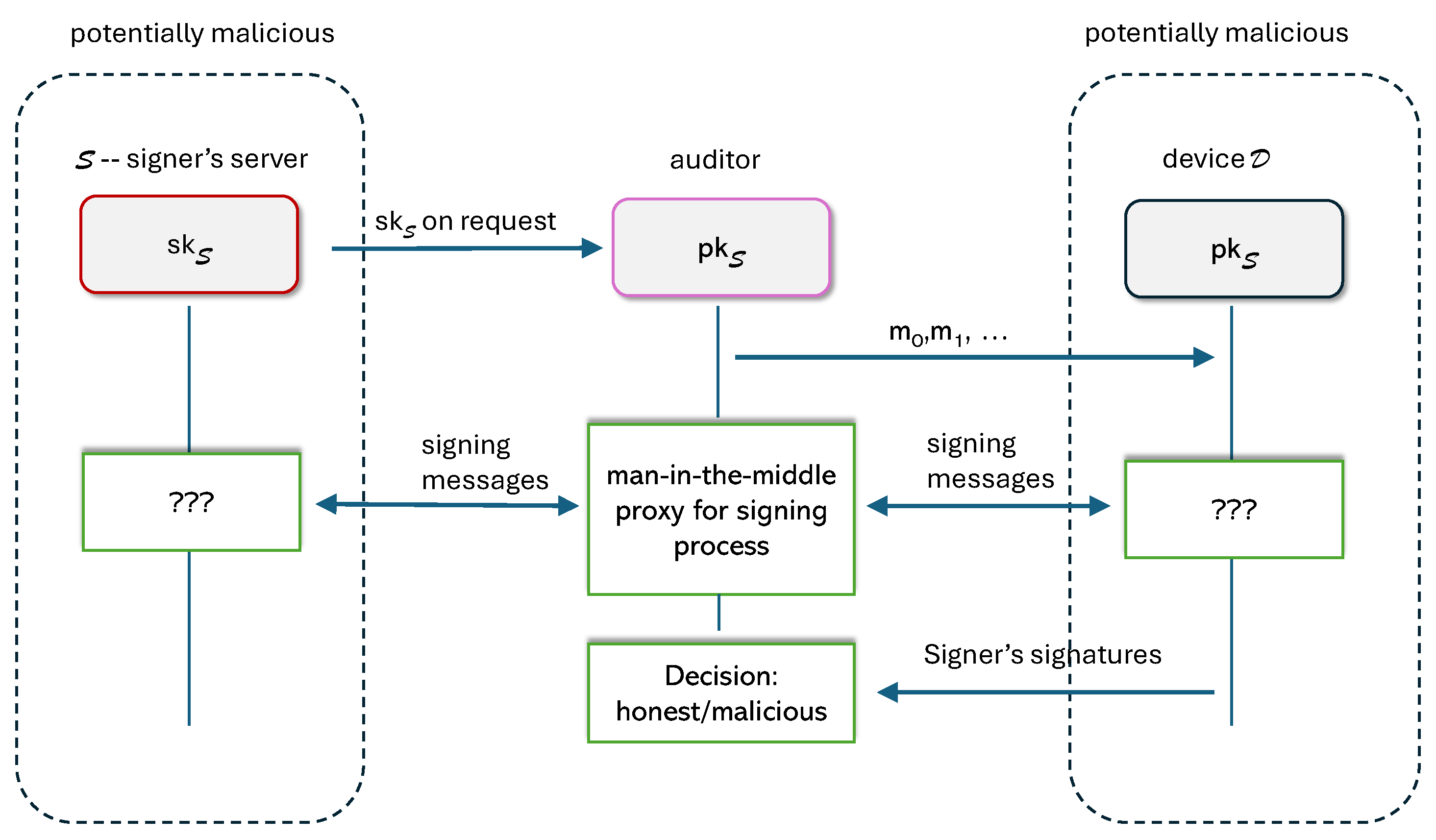

3.3. Audit and Threat Model

- Unblinding known signatures: This attack enables the Signer to decide whether a given signature has been created during a given session with a device (effectively showing which device has derived the signature); the attack may require knowledge of the signed message. If effective, this attack dismantles privacy protection for signatures that emerge on the market.

- Immediate unblinding: This attack enables the Signer to derive the same unblinded signature as that obtained by the device while running the blind-signature creation procedure.

- Covert transmission to Signer: In this attack, the device sends a covert message to the Signer during blind-signature creation. In particular, the covert message can contain the message to be signed blindly for immediate unblinding.

- Covert transmission to device: In this attack, the Signer sends a covert message to the device during blind-signature creation. In particular, the covert message can contain secret instructions for the device.
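A covert transmission of the kind listed above can be illustrated with a generic rejection-sampling channel. The sketch below is an assumption-laden illustration rather than the article's construction: it presumes that sender and receiver already share a secret `key`, that `sample` produces a fresh protocol value on each call, and the helper names `tag_bit`, `send_with_covert_bit`, and `receive_covert_bit` are hypothetical.

```python
import hashlib
import hmac
import secrets

def tag_bit(key: bytes, value: int) -> int:
    """Keyed one-bit tag of a protocol value (HMAC-SHA256, lowest bit of the first byte)."""
    mac = hmac.new(key, value.to_bytes(32, "big"), hashlib.sha256).digest()
    return mac[0] & 1

def send_with_covert_bit(key: bytes, covert_bit: int, sample) -> int:
    """Resample the outgoing value until its keyed tag equals the covert bit."""
    while True:
        value = sample()
        if tag_bit(key, value) == covert_bit:
            return value

def receive_covert_bit(key: bytes, value: int) -> int:
    """The receiver recomputes the tag of the observed value to read the bit."""
    return tag_bit(key, value)
```

Each transmitted value is still a legitimately sampled one, so an observer without `key` sees only a slight increase in sampling time; on average two samples are needed per covert bit.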

3.3.1. Auditor Model

3.3.2. Indistinguishability

- and choose jointly a bit uniformly at random,

- If , they install the original blind-signature scheme Π. If , they install a modified scheme implementing the attack.

- inspects and as described in Definition 2.

- outputs a bit .
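The game steps above can be sketched as a small simulation harness that estimates how often an auditor guesses the installed variant. This is only an illustration under stated assumptions: the devices are modelled as parameterless functions returning one byte-sized output, the "modified" device is deliberately and blatantly biased, and all names (`run_game`, `original_device`, `biased_device`, `parity_auditor`, `blind_auditor`) are hypothetical.

```python
import secrets

def run_game(original, modified, auditor, trials=2000):
    """Return the fraction of trials in which the auditor's bit equals the hidden bit."""
    wins = 0
    for _ in range(trials):
        b = secrets.randbits(1)                 # hidden choice of the installed variant
        installed = modified if b else original
        wins += int(auditor(installed) == b)    # the auditor outputs its guess
    return wins / trials

def original_device():
    """Stand-in for the honest scheme: a uniform output."""
    return secrets.randbelow(256)

def biased_device():
    """Stand-in for a crude modification: outputs are always even."""
    return secrets.randbelow(128) * 2

def parity_auditor(device, samples=64):
    """Guess 'modified' (1) if every sampled output is even."""
    return int(all(device() % 2 == 0 for _ in range(samples)))

def blind_auditor(device):
    """An auditor that learns nothing and always guesses 'original' (0)."""
    return 0
```

A win rate close to 1/2 corresponds to an attack the auditor cannot detect, whereas the crude bias above is caught almost always; a keyed rejection-sampling channel aims for the former outcome.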

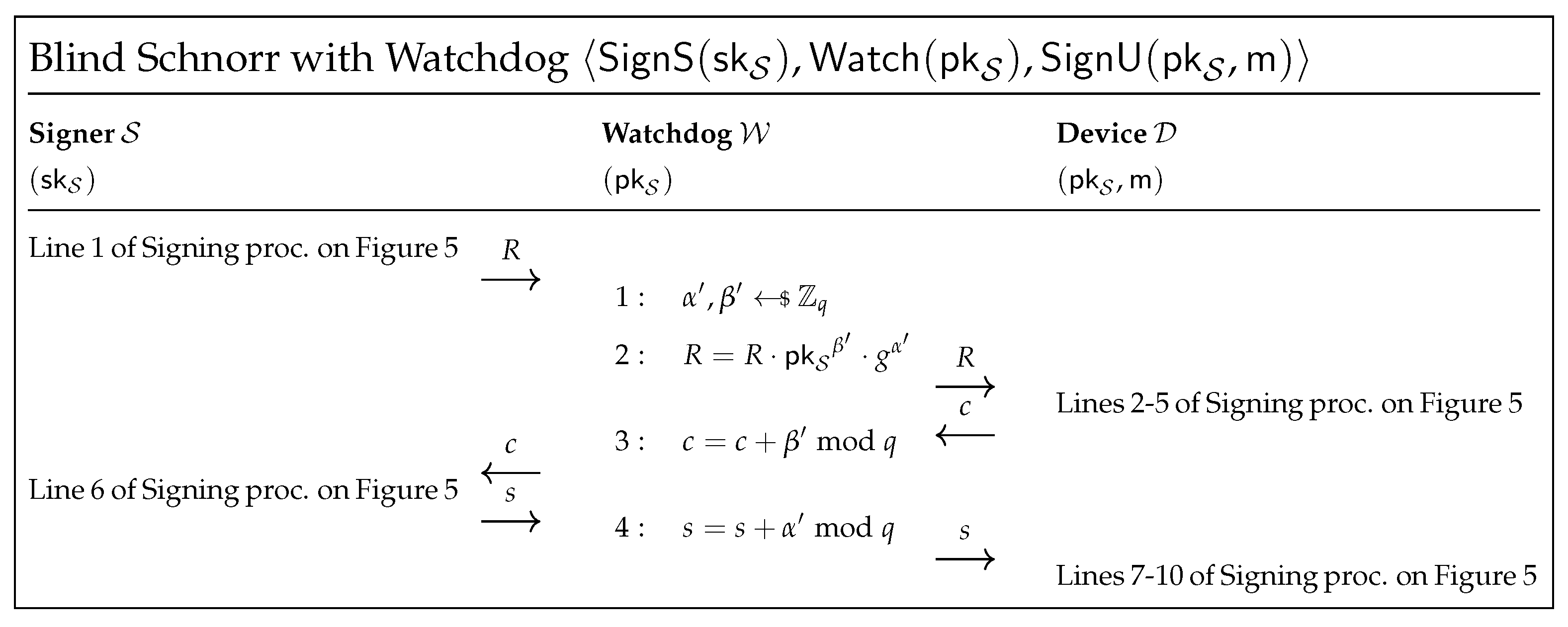

3.4. Watchdog Defense

4. Blind Schnorr Signatures—Results and Discussion

4.1. Establishing a Shared Secret by the Signer and the Device

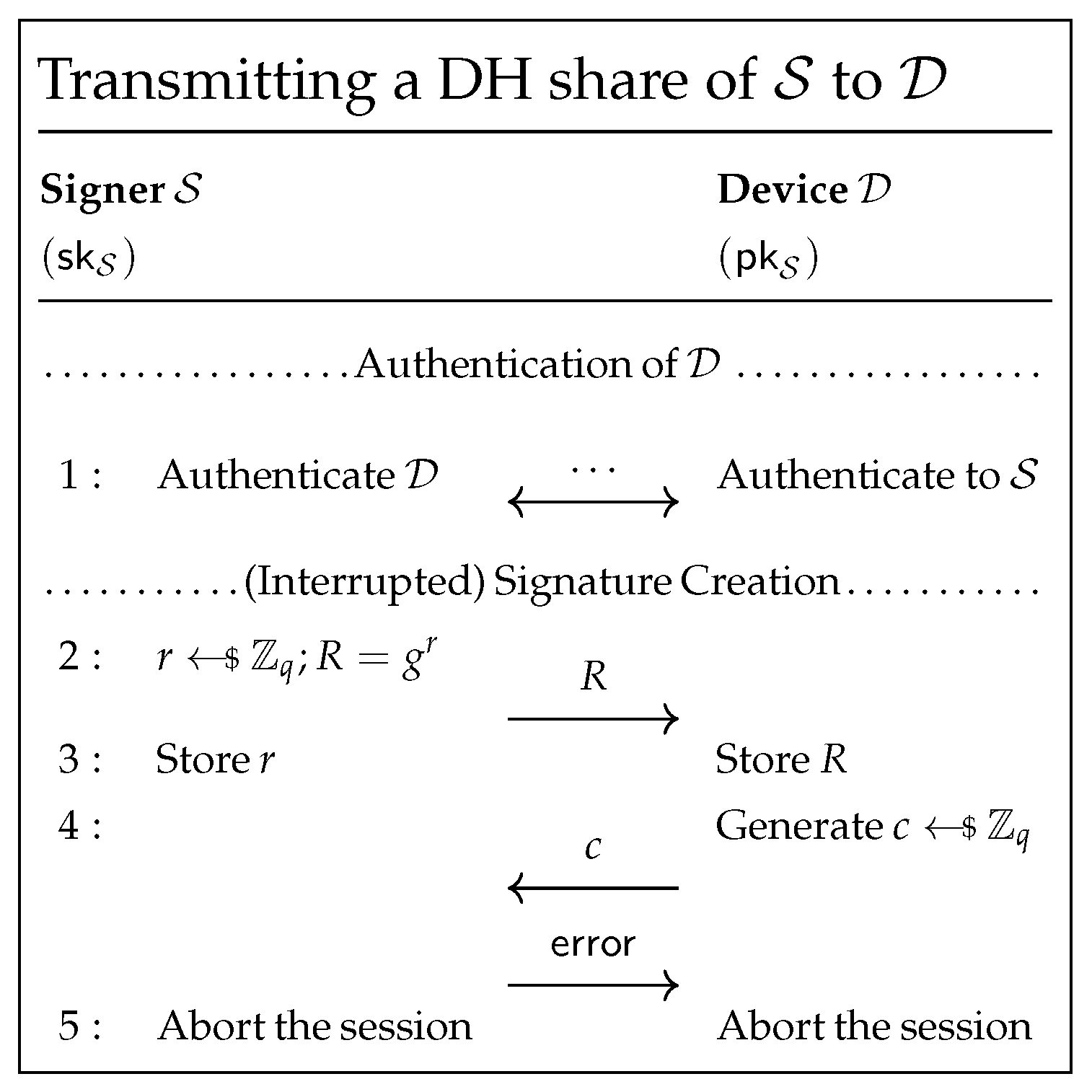

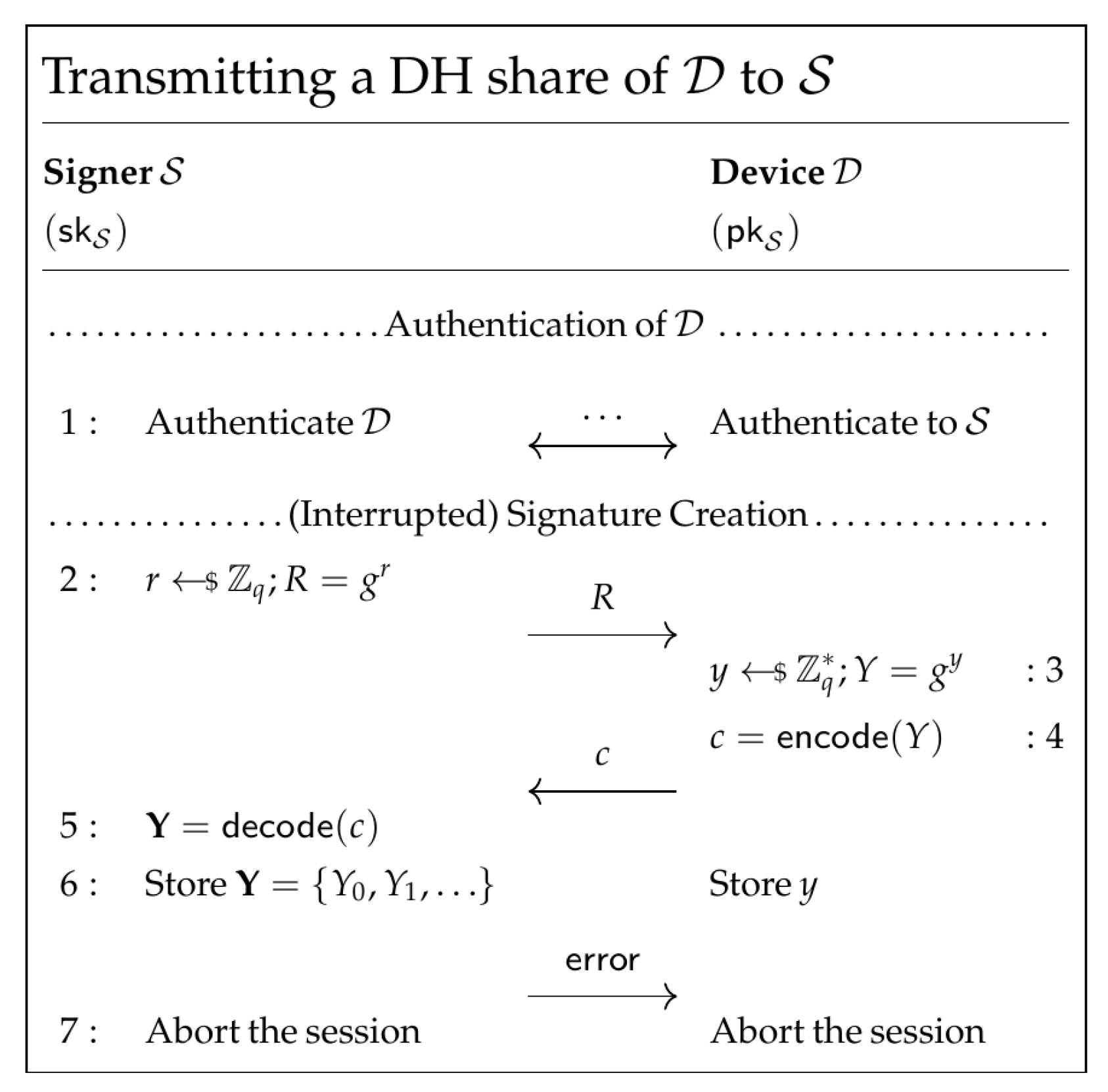

4.1.1. Transmitting a Diffie–Hellman Ephemeral Share from to

4.1.2. Transmitting a DH Ephemeral Share from to

4.1.3. Generating

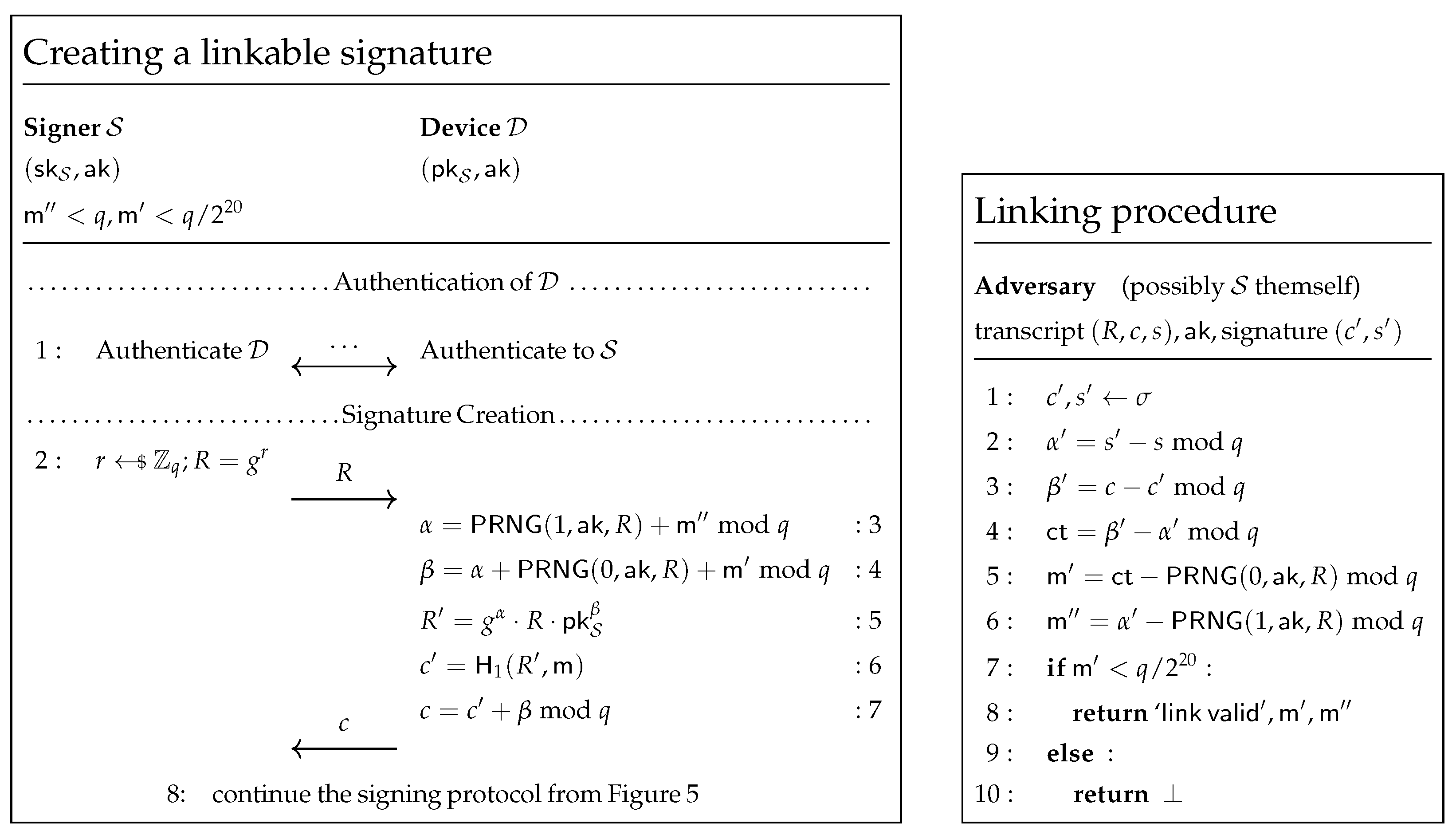

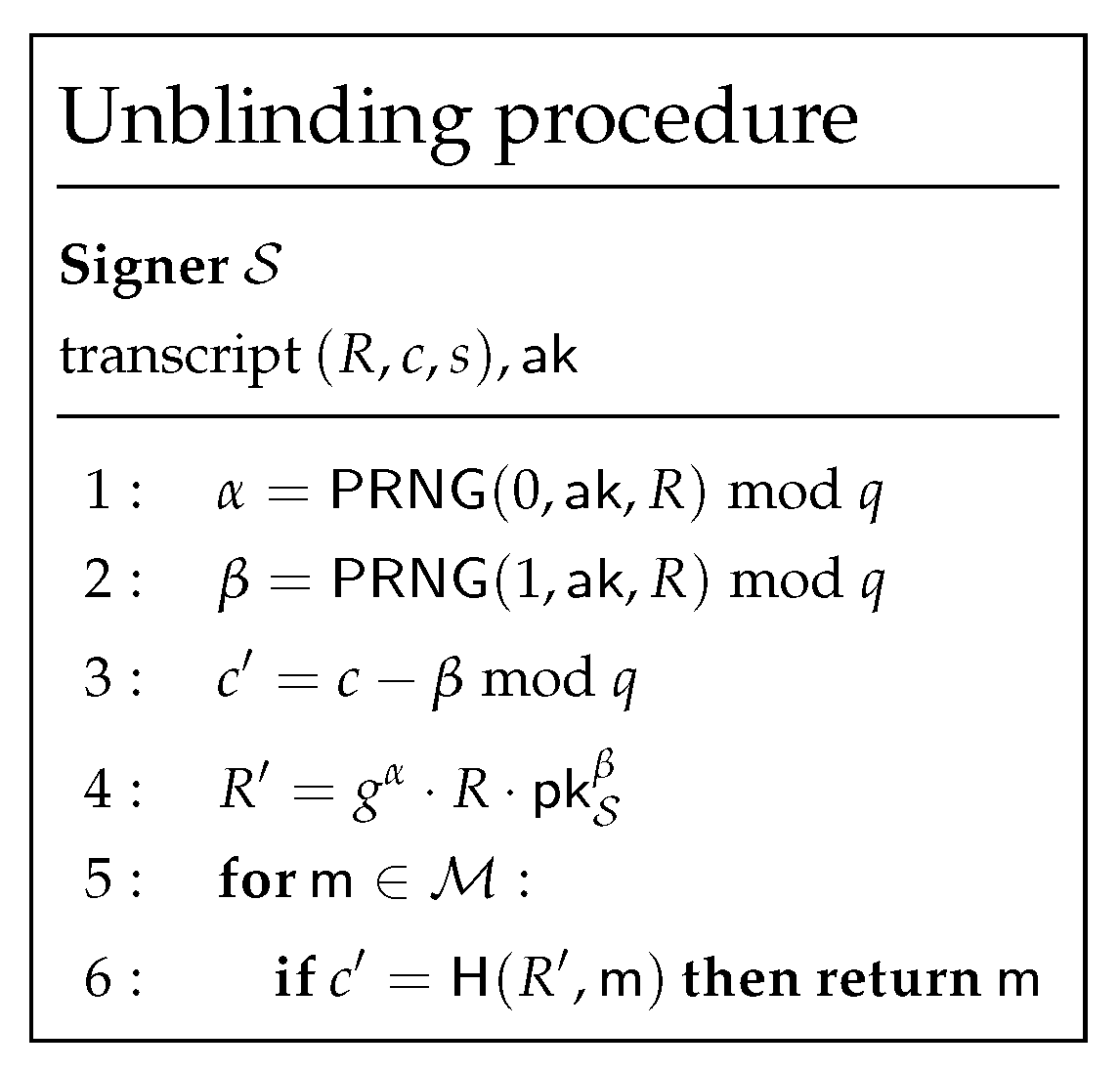

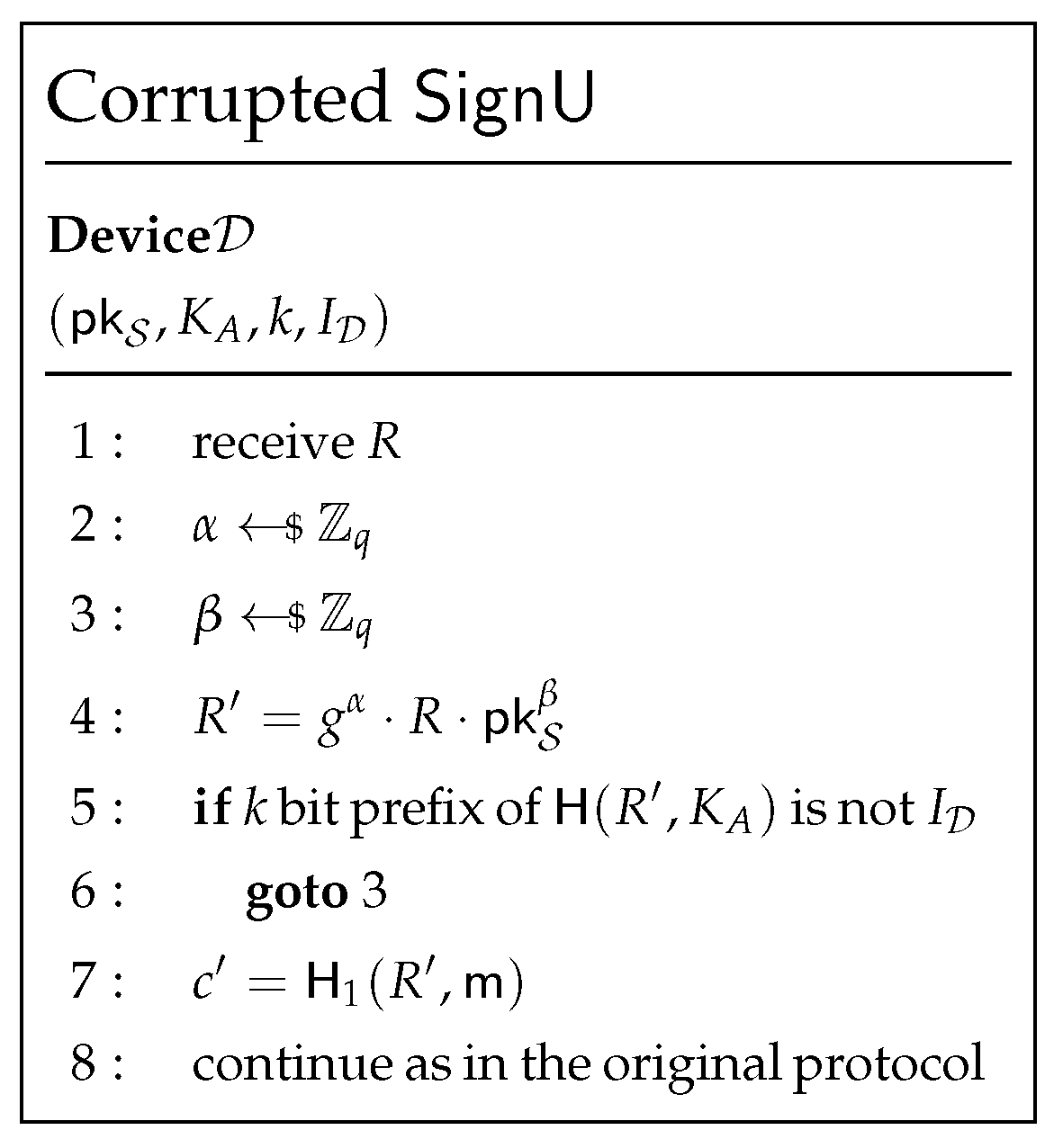

4.2. Linking with the Shared Key

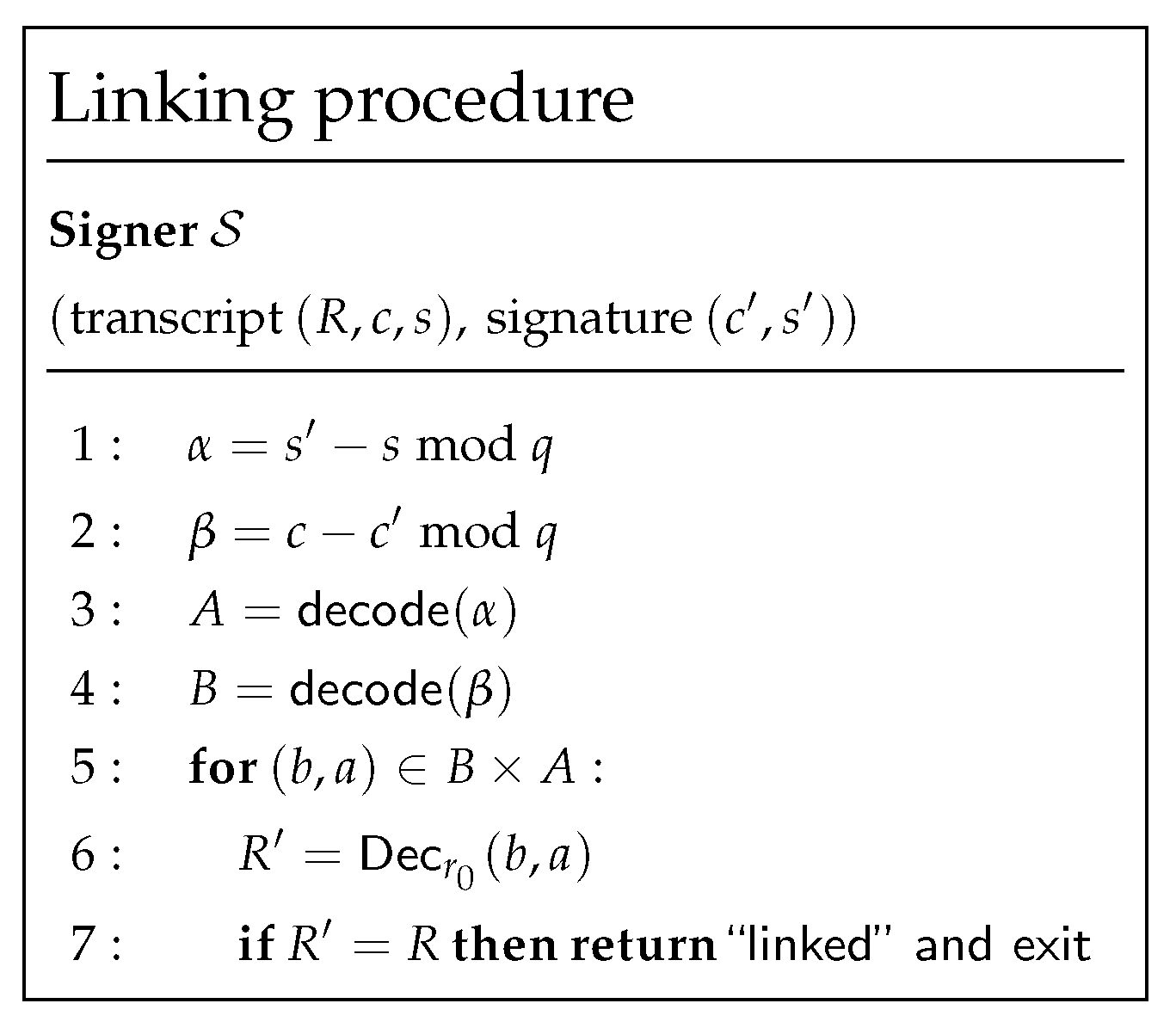

4.3. Linking Without the Shared Key

4.4. Countermeasures

- instead of passing R, the watchdog delivers ;

- instead of passing c, the watchdog delivers ;

- instead of passing s, the watchdog delivers .

4.5. The Remaining Threat—Rejection Sampling Attack

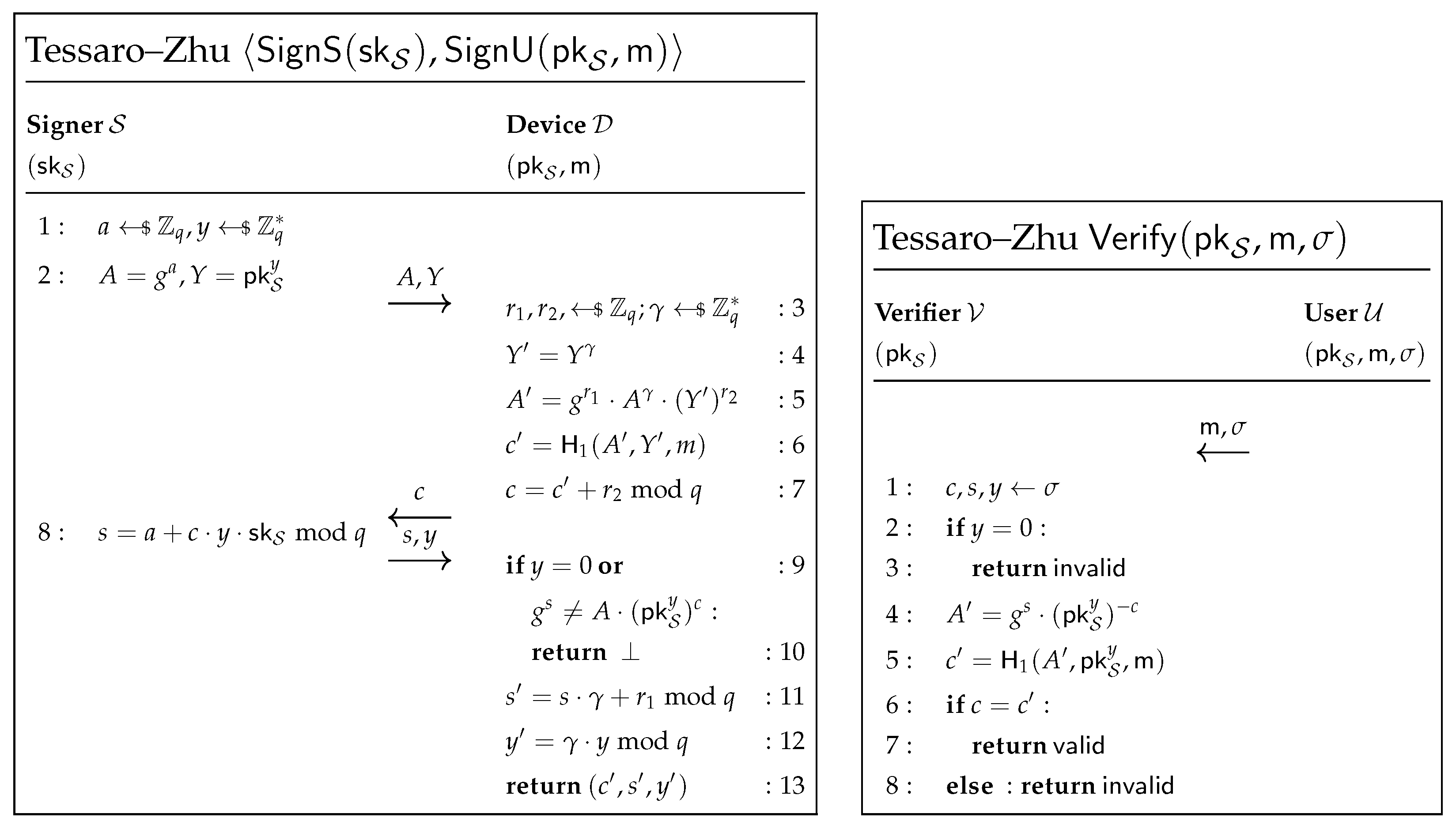

5. Tessaro–Zhu Blind Signatures—Results and Discussion

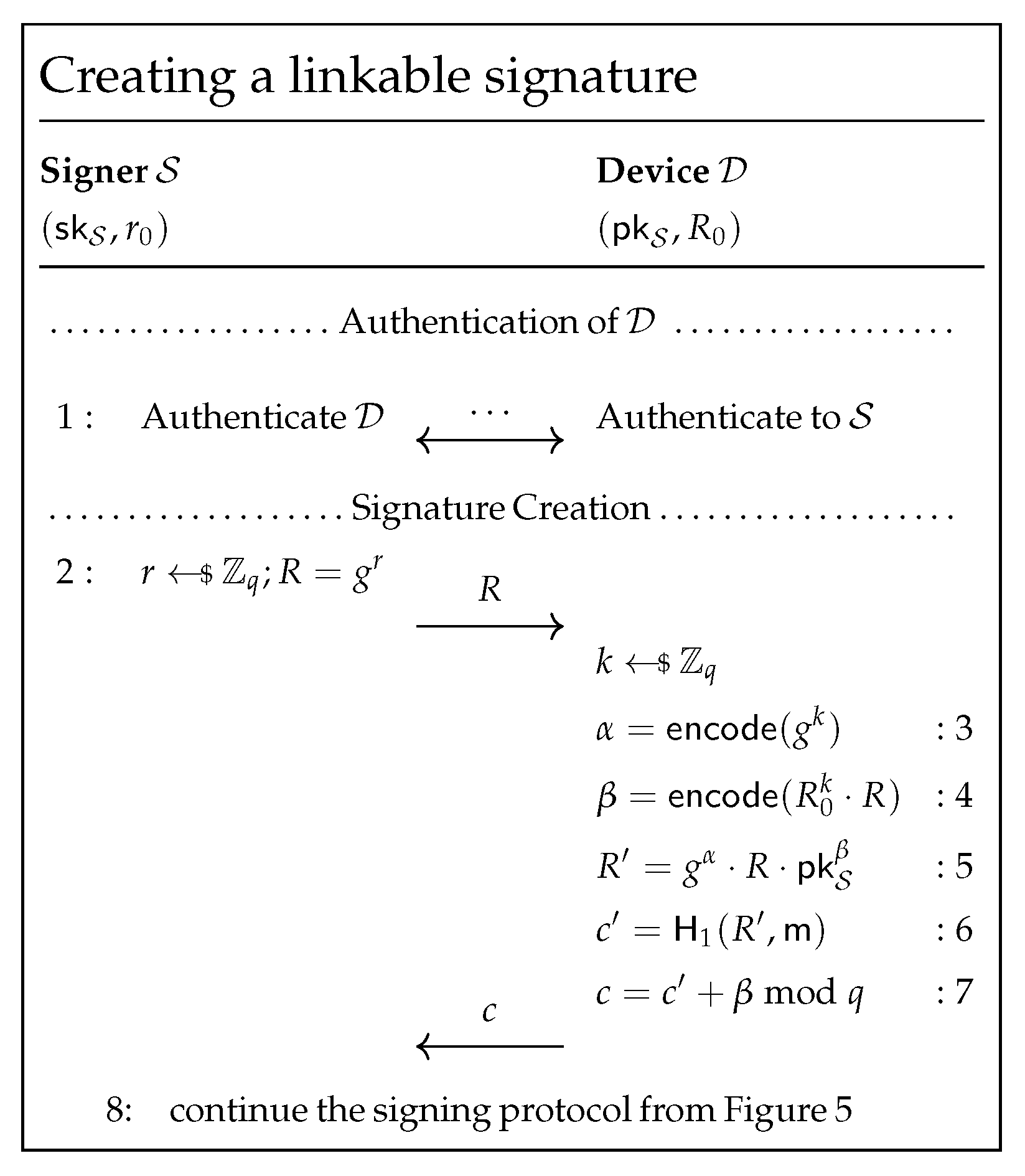

5.1. Establishing a Shared Key

5.1.1. Transmitting the Share of

5.1.2. Transmitting the Share of

5.1.3. Generating

5.2. Linking with a Shared Secret

5.3. Linking with No Shared Key

1. Eliminate wrong guesses for the identity of (for wrong keys of , after decryption we would obtain random elements of , hence not in the required range with high probability);

2. Eliminate wrong elements delivered by .

5.4. Attacks on Related Schemes

1. Scheme BS3 from the same paper [19];

2. Schemes BS and BSr3 from [34];

3. Scheme BS3 from [35].

5.4.1. Establishing a Public Key of Dedicated for

5.4.2. Linkable Signature Creation Details

- Scheme BS3 from [19]: selected by during execution of (compare page 22 of [19]). They can be recalculated by as follows:

- –

- (compare );

- –

- (compare );

- –

- (compare );

- –

- (compare ).

Note that are the components of the signature compared with an interaction with messages containing (message sent by as the output of ) and c (message sent by as the output of ).

- Scheme BS from [34]: (in some cases, ) selected by (compare and on page 13 of [34]). They can be recovered by as follows (compare , and , on page 13 of [34]):

- –

- ,

- –

- (the version with ) or (the version with ),

- –

- (the version with ) or (the version with ),

- Scheme BSr3 from [34]: the elements . Note that the random s is sent in the clear during blind-signature creation, so it can be used to calculate the shared ephemeral secret used to derive . In turn, can be derived by as , where is recalculated according to the regular verification procedure (compare page 14 of [34]);

- Scheme BS3 from [35]: the elements and selected by during the execution of (compare page 34 of [35]). They can be recalculated by during the linking test as:

- –

- .

- –

- ;

- –

- ;

- –

- (these are component-wise calculations on vectors of length 2);

- –

- (again, these are vector operations)

(compare on page 34 of [35]). Note that these calculations can be performed by as are the components of the final signature (see the output of ), while are contained in the message sent by in the last step of .

5.5. Countermeasures

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| PET | Privacy-Enhancing Technology |
| RFC | Request For Comments |
| RSA | Rivest–Shamir–Adleman (cryptosystem) |
| VPN | Virtual Private Network |
| EDIW | European Digital Identity Wallet |
| GDPR | General Data Protection Regulation |
| DH | Diffie–Hellman (key exchange protocol) |
| DDH | Decisional Diffie–Hellman (assumption) |
| SSI | Self-Sovereign Identity |
| PRNG | Pseudorandom Number Generator |
| FDH | Full Domain Hash |
| KDF | Key Derivation Function |
| ROS | Random inhomogeneities in an Overdetermined Solvable system of linear equations (problem) |
| Proc. | Procedure |

References

- Chaum, D. Blind Signatures for Untraceable Payments. In Proceedings of the Advances in Cryptology – CRYPTO ’82, Santa Barbara, CA, USA, 23–25 August 1982; Chaum, D., Rivest, R.L., Sherman, A.T., Eds.; Plenum Press: New York, NY, USA, 1983; pp. 199–203.

- Baldimtsi, F.; Lysyanskaya, A. Anonymous credentials light. In Proceedings of the 2013 ACM SIGSAC Conference on Computer & Communications Security, CCS ’13, Berlin, Germany, 4–8 November 2013; Sadeghi, A.-R., Gligor, V.D., Yung, M., Eds.; ACM: New York, NY, USA, 2013; pp. 1087–1098.

- Davidson, A.; Goldberg, I.; Sullivan, N.; Tankersley, G.; Valsorda, F.; Holloway, R. Privacy Pass: Bypassing Internet Challenges Anonymously. Proc. Priv. Enhancing Technol. 2018, 2018, 164–180.

- Google. How the VPN by Google One Works | Google One. 2024. Available online: https://web.archive.org/web/20231226211122/https://one.google.com/about/vpn/howitworks (accessed on 3 January 2024).

- Apple. iCloud Private Relay Overview; Technical Report; Apple: Cupertino, CA, USA, 2021; Available online: https://www.apple.com/icloud/docs/iCloud_Private_Relay_Overview_Dec2021.pdf (accessed on 20 January 2025).

- Schmitt, P.; Raghavan, B. Pretty Good Phone Privacy. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Online, 11–13 August 2021; Bailey, M., Greenstadt, R., Eds.; pp. 1737–1754. Available online: https://www.usenix.org/conference/usenixsecurity21/presentation/schmitt (accessed on 20 January 2025).

- European Parliament and the Council. Regulation (EU) 2024/1183 of the European Parliament and of the Council. 2024. Available online: https://eur-lex.europa.eu/eli/reg/2024/1183/oj (accessed on 4 March 2025).

- Baum, C.; Blazy, O.; Camenisch, J.; Hoepman, J.H.; Lee, E.; Lehmann, A.; Lysyanskaya, A.; Mayrhofer, R.; Montgomery, H.; Nguyen, N.K.; et al. Cryptographers’ Feedback on the EU Digital Identity’s ARF. 2024. Available online: https://github.com/eu-digital-identity-wallet/eudi-doc-architecture-and-reference-framework/discussions/211 (accessed on 20 January 2025).

- European Parliament and the Council. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of Such Data, and Repealing Directive 95/46/EC (General Data Protection Regulation) (Text with EEA Relevance). 2016. Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj/eng (accessed on 20 January 2025).

- Apple; Google. Exposure Notification Privacy-Preserving Analytics (ENPA) White Paper. 2021. Available online: https://covid19-static.cdn-apple.com/applications/covid19/current/static/contact-tracing/pdf/ENPA_White_Paper.pdf (accessed on 20 January 2025).

- Nemec, M.; Sýs, M.; Svenda, P.; Klinec, D.; Matyas, V. The Return of Coppersmith’s Attack: Practical Factorization of Widely Used RSA Moduli. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, CCS ’17, Dallas, TX, USA, 30 October–3 November 2017; Thuraisingham, B., Evans, D., Malkin, T., Xu, D., Eds.; ACM: New York, NY, USA, 2017; pp. 1631–1648.

- Simmons, G.J. The Prisoners’ Problem and the Subliminal Channel. In Proceedings of the Advances in Cryptology, Proceedings of CRYPTO ’83, Santa Barbara, CA, USA, 21–24 August 1983; Chaum, D., Ed.; Plenum Press: New York, NY, USA, 1983; pp. 51–67.

- Young, A.L.; Yung, M. Malicious Cryptography: Exposing Cryptovirology; John Wiley & Sons: Hoboken, NJ, USA, 2004.

- Persiano, G.; Phan, D.H.; Yung, M. Anamorphic Encryption: Private Communication Against a Dictator. In Proceedings of the Advances in Cryptology – EUROCRYPT 2022, Trondheim, Norway, 30 May–3 June 2022; Proceedings, Part II. Dunkelman, O., Dziembowski, S., Eds.; Springer: Cham, Switzerland, 2022. Lecture Notes in Computer Science. Volume 13276, pp. 34–63.

- Kutyłowski, M.; Lauks-Dutka, A.; Kubiak, P.; Zawada, M. FIDO2 Facing Kleptographic Threats By-Design. Appl. Sci. 2024, 14, 11371.

- Kubiak, P.; Kutyłowski, M. Supervised Usage of Signature Creation Devices. In Proceedings of the Information Security and Cryptology INSCRYPT’2013, Guangzhou, China, 27–30 November 2013; Lin, D., Xu, S., Yung, M., Eds.; Springer: Cham, Switzerland, 2013. Lecture Notes in Computer Science. Volume 8567, pp. 132–149.

- Chaum, D.; Pedersen, T.P. Wallet Databases with Observers. In Proceedings of the Advances in Cryptology, Proceedings of CRYPTO ’92, Santa Barbara, CA, USA, 16–20 August 1992; Brickell, E.F., Ed.; Springer: Berlin/Heidelberg, Germany, 1992. Lecture Notes in Computer Science. Volume 740, pp. 89–105.

- Schnorr, C.P. Efficient Signature Generation by Smart Cards. J. Cryptol. 1991, 4, 161–174.

- Tessaro, S.; Zhu, C. Short Pairing-Free Blind Signatures with Exponential Security. In Proceedings of the Advances in Cryptology – EUROCRYPT 2022, Trondheim, Norway, 30 May–3 June 2022; Proceedings, Part II. Dunkelman, O., Dziembowski, S., Eds.; Springer: Cham, Switzerland, 2022. Lecture Notes in Computer Science. Volume 13276, pp. 782–811.

- Chairattana-Apirom, R.; Tessaro, S.; Zhu, C. Pairing-Free Blind Signatures from CDH Assumptions. In Proceedings of the Advances in Cryptology – CRYPTO 2024, Santa Barbara, CA, USA, 18–22 August 2024; Proceedings, Part I. Stebila, D., Ed.; Springer: Cham, Switzerland, 2024. Lecture Notes in Computer Science. Volume 14920, pp. 174–209.

- Crites, E.C.; Komlo, C.; Maller, M.; Tessaro, S.; Zhu, C. Snowblind: A Threshold Blind Signature in Pairing-Free Groups. In Proceedings of the Advances in Cryptology – CRYPTO 2023, Santa Barbara, CA, USA, 20–24 August 2023; Proceedings, Part I. Handschuh, H., Lysyanskaya, A., Eds.; Springer: Cham, Switzerland, 2023. Lecture Notes in Computer Science. Volume 14081, pp. 710–742.

- Juels, A.; Luby, M.; Ostrovsky, R. Security of blind digital signatures. In Proceedings of the Advances in Cryptology – CRYPTO ’97, Santa Barbara, CA, USA, 17–21 August 1997; Kaliski, B.S., Ed.; Springer: Berlin/Heidelberg, Germany, 1997. Lecture Notes in Computer Science. Volume 1294, pp. 150–164.

- Pointcheval, D.; Stern, J. Provably secure blind signature schemes. In Proceedings of the Advances in Cryptology – ASIACRYPT ’96, Kyongju, Republic of Korea, 3–7 November 1996; Kim, K., Matsumoto, T., Eds.; Springer: Berlin/Heidelberg, Germany, 1996. Lecture Notes in Computer Science. Volume 1163, pp. 252–265.

- Hanzlik, L. Non-interactive Blind Signatures for Random Messages. In Proceedings of the Advances in Cryptology – EUROCRYPT 2023, Lyon, France, 23–27 April 2023; Proceedings, Part V. Hazay, C., Stam, M., Eds.; Springer: Cham, Switzerland, 2023. Lecture Notes in Computer Science. Volume 14008, pp. 722–752.

- Baldimtsi, F.; Cheng, J.; Goyal, R.; Yadav, A. Non-Interactive Blind Signatures: Post-Quantum and Stronger Security. In Proceedings of the Advances in Cryptology – ASIACRYPT 2024, Kolkata, India, 9–13 December 2024; Proceedings, Part II. Chung, K.M., Sasaki, Y., Eds.; Springer: Singapore, 2025. Lecture Notes in Computer Science. Volume 15485, pp. 70–104.

- Kastner, J.; Loss, J.; Xu, J. The Abe-Okamoto Partially Blind Signature Scheme Revisited. In Proceedings of the Advances in Cryptology – ASIACRYPT 2022, Taipei, Taiwan, 5–9 December 2022; Proceedings, Part IV. Agrawal, S., Lin, D., Eds.; Springer: Cham, Switzerland, 2022. Lecture Notes in Computer Science. Volume 13794, pp. 279–309.

- Kutyłowski, M.; Persiano, G.; Phan, D.H.; Yung, M.; Zawada, M. Anamorphic Signatures: Secrecy from a Dictator Who Only Permits Authentication! In Proceedings of the Advances in Cryptology – CRYPTO 2023, Santa Barbara, CA, USA, 20–24 August 2023; Handschuh, H., Lysyanskaya, A., Eds.; Springer: Cham, Switzerland, 2023. Lecture Notes in Computer Science. Volume 14082, pp. 759–790.

- Russell, A.; Tang, Q.; Yung, M.; Zhou, H.S. Generic Semantic Security against a Kleptographic Adversary. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, CCS ’17, Dallas, TX, USA, 30 October–3 November 2017; Thuraisingham, B., Evans, D., Malkin, T., Xu, D., Eds.; ACM: New York, NY, USA, 2017; pp. 907–922.

- Bemmann, P.; Chen, R.; Jager, T. Subversion-Resilient Public Key Encryption with Practical Watchdogs. In Proceedings of the Public-Key Cryptography – PKC 2021, Virtual, 10–13 May 2021; Garay, J.A., Ed.; Springer: Cham, Switzerland, 2021. Lecture Notes in Computer Science. Volume 12710, pp. 627–658.

- Schnorr, C.P. Security of Blind Discrete Log Signatures against Interactive Attacks. In Proceedings of the Information and Communications Security, Xi’an, China, 13–16 November 2001; Qing, S., Okamoto, T., Zhou, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2001. Lecture Notes in Computer Science. Volume 2229, pp. 1–12.

- Benhamouda, F.; Lepoint, T.; Loss, J.; Orrù, M.; Raykova, M. On the (in)Security of ROS. In Proceedings of the Advances in Cryptology – EUROCRYPT 2021, Zagreb, Croatia, 17–21 October 2021; Proceedings, Part I. Canteaut, A., Standaert, F., Eds.; Springer: Cham, Switzerland, 2021. Lecture Notes in Computer Science. Volume 12696, pp. 33–53.

- Bernstein, D.J.; Hamburg, M.; Krasnova, A.; Lange, T. Elligator: Elliptic-Curve Points Indistinguishable from Uniform Random Strings. In Proceedings of the 2013 ACM SIGSAC Conference on Computer & Communications Security, CCS ’13, Berlin, Germany, 4–8 November 2013; Sadeghi, A.-R., Gligor, V.D., Yung, M., Eds.; ACM: New York, NY, USA, 2013; pp. 967–980.

- Goyal, V.; O’Neill, A.; Rao, V. Correlated-Input Secure Hash Functions. In Proceedings of the Theory of Cryptography – 8th Theory of Cryptography Conference, TCC 2011, Providence, RI, USA, 28–30 March 2011; Proceedings. Ishai, Y., Ed.; Springer: Berlin/Heidelberg, Germany, 2011. Lecture Notes in Computer Science. Volume 6597, pp. 182–200.

- Crites, E.C.; Komlo, C.; Maller, M.; Tessaro, S.; Zhu, C. Snowblind: A Threshold Blind Signature in Pairing-Free Groups. IACR Cryptol. ePrint Arch. 2023, 14081, 1228.

- Chairattana-Apirom, R.; Tessaro, S.; Zhu, C. Pairing-Free Blind Signatures from CDH Assumptions. IACR Cryptol. ePrint Arch. 2023, 14920, 1780.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kutyłowski, M.; Sobolewski, O. Privacy Illusion: Subliminal Channels in Schnorr-like Blind-Signature Schemes. Appl. Sci. 2025, 15, 2864. https://doi.org/10.3390/app15052864
