Enhanced Detection of Small Unmanned Aerial System Using Noise Suppression Super-Resolution Detector for Effective Airspace Surveillance

Abstract

1. Introduction

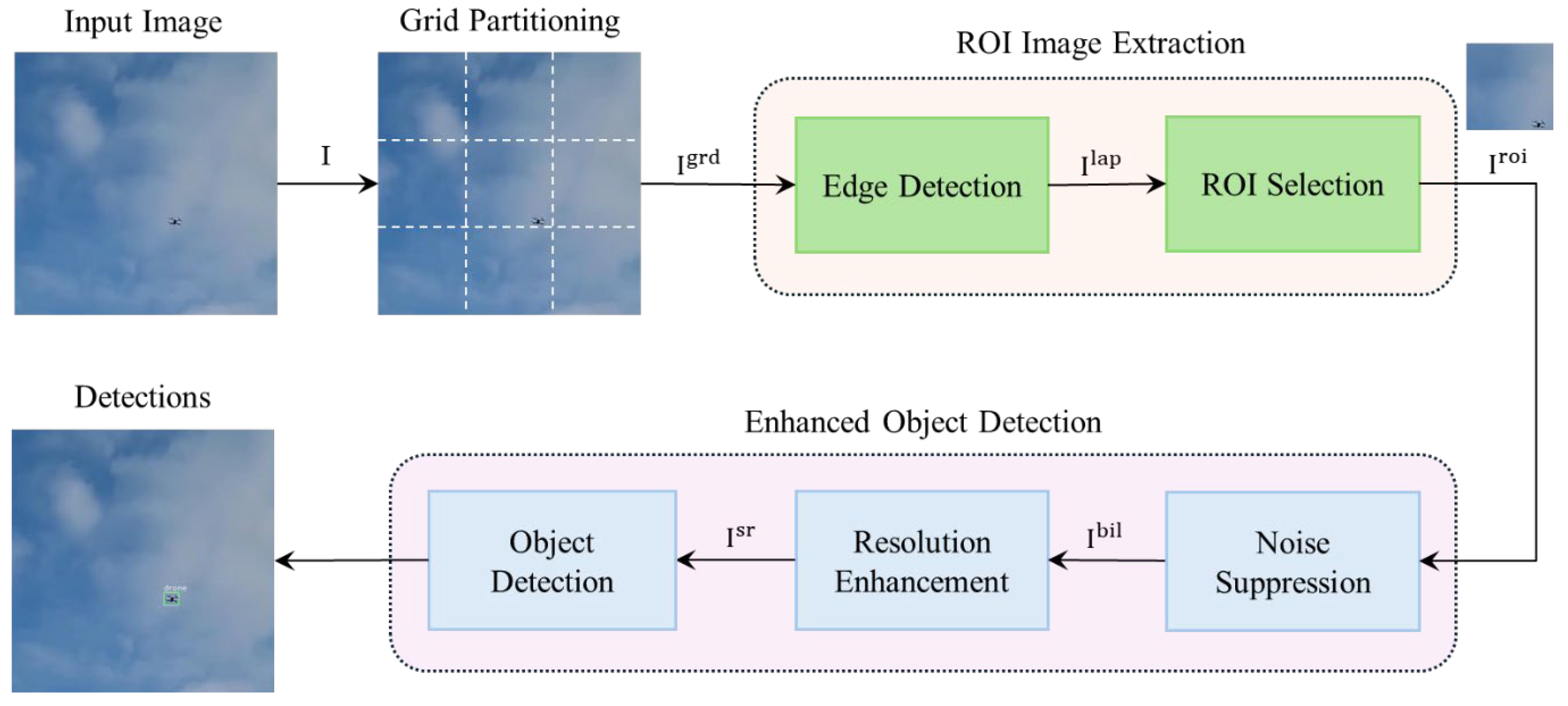

- A detection model integrating image partitioning, noise suppression, and super-resolution image enhancement was developed to improve detection of small drones while preserving as much of the drone's critical silhouette information as possible.

- A preprocessing method using image partitioning and bilateral filtering was proposed to suppress noise and distortion occurring during image magnification, thereby improving the reliability of drone detection.

- An ROI exploration process based on Laplacian filtering was introduced to reduce unnecessary computations in image partitioning, thereby enhancing overall computational efficiency and detection speed, and meeting real-time processing requirements.

- The performance and adaptability of the proposed model were validated in real-world scenarios covering a range of drone altitudes, drone-to-sensor distances, and drone sizes.
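
The bilateral-filtering step named in the contributions above can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function name `bilateral_filter`, the window radius, and the two sigma values are assumptions chosen for illustration, and a practical system would more likely call an optimized library routine. The sketch shows why this kind of filter suits the task: each neighbour is weighted by both its spatial distance and its intensity difference from the centre pixel, so noise inside a flat region is averaged out while a sharp boundary, such as a drone silhouette against the sky, is left largely intact.

```python
import math


def bilateral_filter(img, radius=1, sigma_space=1.0, sigma_range=25.0):
    """Edge-preserving smoothing of a 2-D grayscale image given as a list of rows.

    All parameter names and default values are illustrative assumptions.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            center = img[y][x]
            acc = 0.0   # weighted sum of neighbour intensities
            norm = 0.0  # sum of weights, used for normalisation
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    # Clamp coordinates so border pixels reuse the nearest valid neighbour.
                    ny = min(max(y + dy, 0), h - 1)
                    nx = min(max(x + dx, 0), w - 1)
                    value = img[ny][nx]
                    # Spatial weight: nearby pixels count more.
                    w_space = math.exp(-(dx * dx + dy * dy) / (2 * sigma_space ** 2))
                    # Range weight: pixels with similar intensity count more.
                    w_range = math.exp(-((value - center) ** 2) / (2 * sigma_range ** 2))
                    weight = w_space * w_range
                    acc += weight * value
                    norm += weight
            out[y][x] = acc / norm
    return out


# A noisy tile with a dark region on the left and a bright region on the right.
tile = [
    [10, 14, 8, 200, 204, 198],
    [12, 9, 11, 202, 197, 201],
    [8, 13, 10, 199, 203, 200],
]
smoothed = bilateral_filter(tile)
```

In this toy tile the fluctuations within each region shrink, while the step between the third and fourth columns stays sharp because the range weight all but ignores neighbours on the other side of the edge.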

2. Materials and Methods

| Algorithm 1. Noise Suppression Super-Resolution Detection (NSSRD) |
| --- |
| Input: input image, image width, image height, maximum integer by which the image can be partitioned, binarization threshold, number of ROI images |
| Require: pretrained detector YOLO, weights, biases |
| Output: detections |
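
As a rough illustration of how the inputs listed in Algorithm 1 might interact during ROI exploration, the sketch below partitions a grayscale image into a grid, applies a Laplacian filter, binarizes the response with a threshold, and keeps the grid cells that contain the most edge pixels. The paper's exact scoring rule is not reproduced in this text, so ranking cells by their count of above-threshold pixels is an assumption, as are all function and parameter names (`laplacian`, `grid_cells`, `select_roi_cells`, `n`, `threshold`, `k`) and the use of a fixed `n` x `n` grid.

```python
def laplacian(gray):
    """Absolute 4-neighbour Laplacian response; border pixels are left at 0."""
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = abs(
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    return out


def grid_cells(width, height, n):
    """Split a width x height image into an n x n grid of (x0, y0, x1, y1) boxes."""
    xs = [width * i // n for i in range(n + 1)]
    ys = [height * j // n for j in range(n + 1)]
    return [(xs[i], ys[j], xs[i + 1], ys[j + 1])
            for j in range(n) for i in range(n)]


def select_roi_cells(gray, n, threshold, k):
    """Return up to k grid cells ranked by their number of binarized edge pixels.

    Assumption: a cell is a candidate ROI if at least one Laplacian response
    inside it exceeds the binarization threshold.
    """
    h, w = len(gray), len(gray[0])
    edges = laplacian(gray)
    scored = []
    for (x0, y0, x1, y1) in grid_cells(w, h, n):
        count = sum(
            1
            for y in range(y0, y1)
            for x in range(x0, x1)
            if edges[y][x] > threshold
        )
        scored.append((count, (x0, y0, x1, y1)))
    # Cells with the most edge pixels come first; empty cells are discarded.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [box for count, box in scored[:k] if count > 0]


# An 8 x 8 dark frame with a single bright pixel standing in for a distant drone.
frame = [[0] * 8 for _ in range(8)]
frame[5][6] = 255
rois = select_roi_cells(frame, n=2, threshold=100, k=1)
```

Only the cells returned by `select_roi_cells` would then be passed on to the later stages, which is how skipping featureless sky regions could reduce computation.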

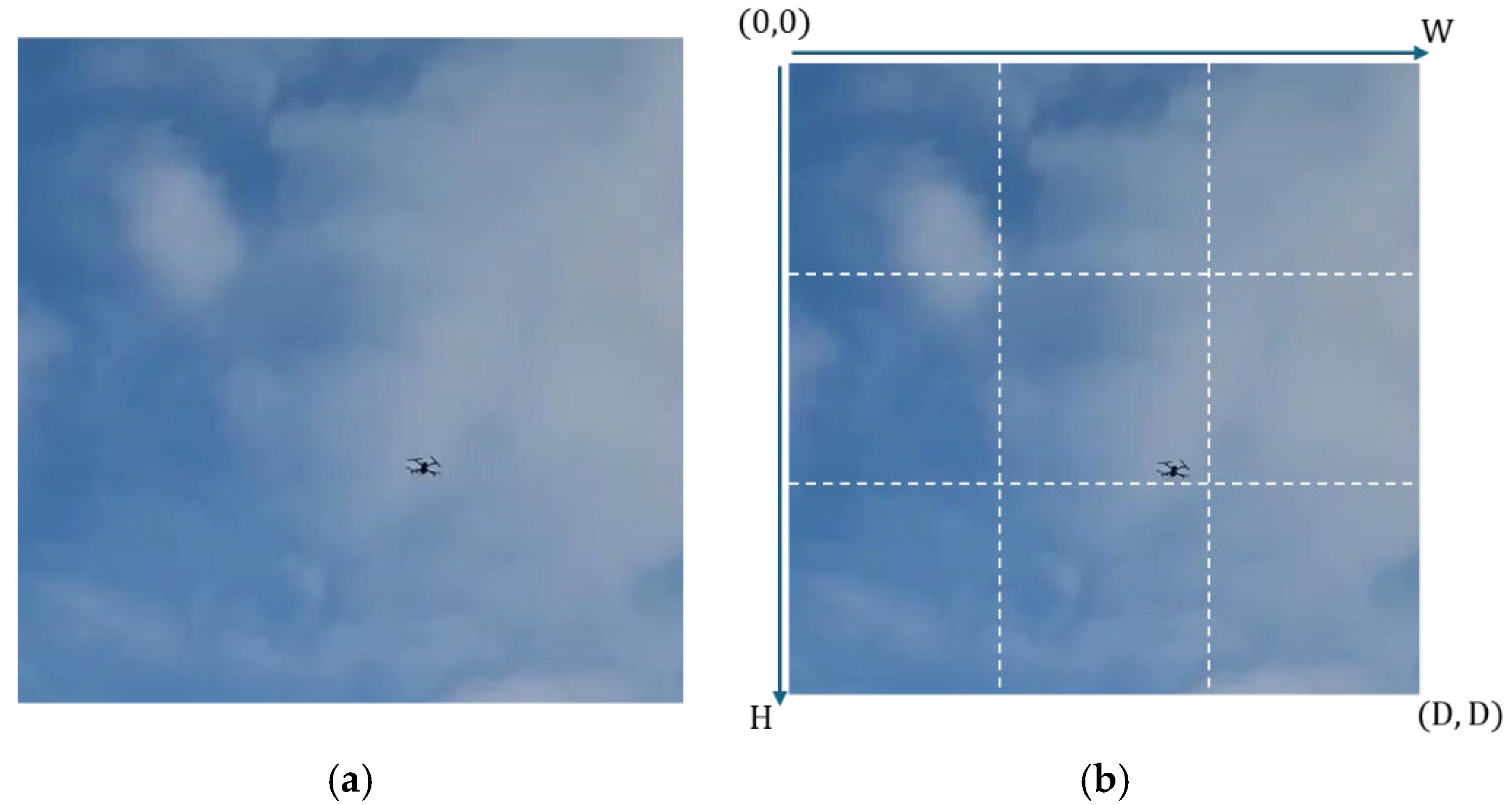

2.1. Grid Partitioning

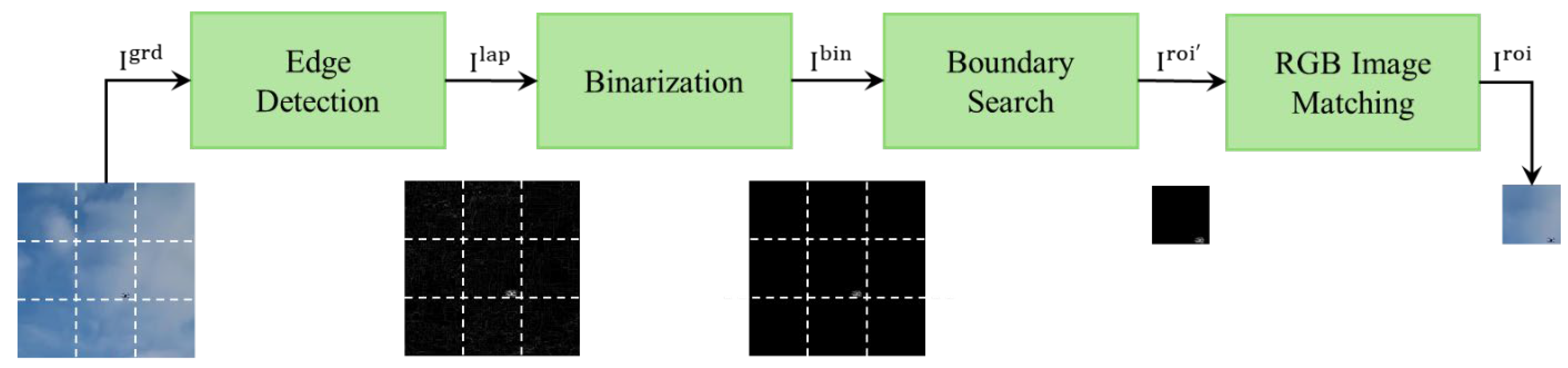

2.2. ROI Image Extraction

2.2.1. Edge Detection

2.2.2. Binarization and RGB Image Matching

2.3. Enhanced Object Detection

2.3.1. Noise Suppression

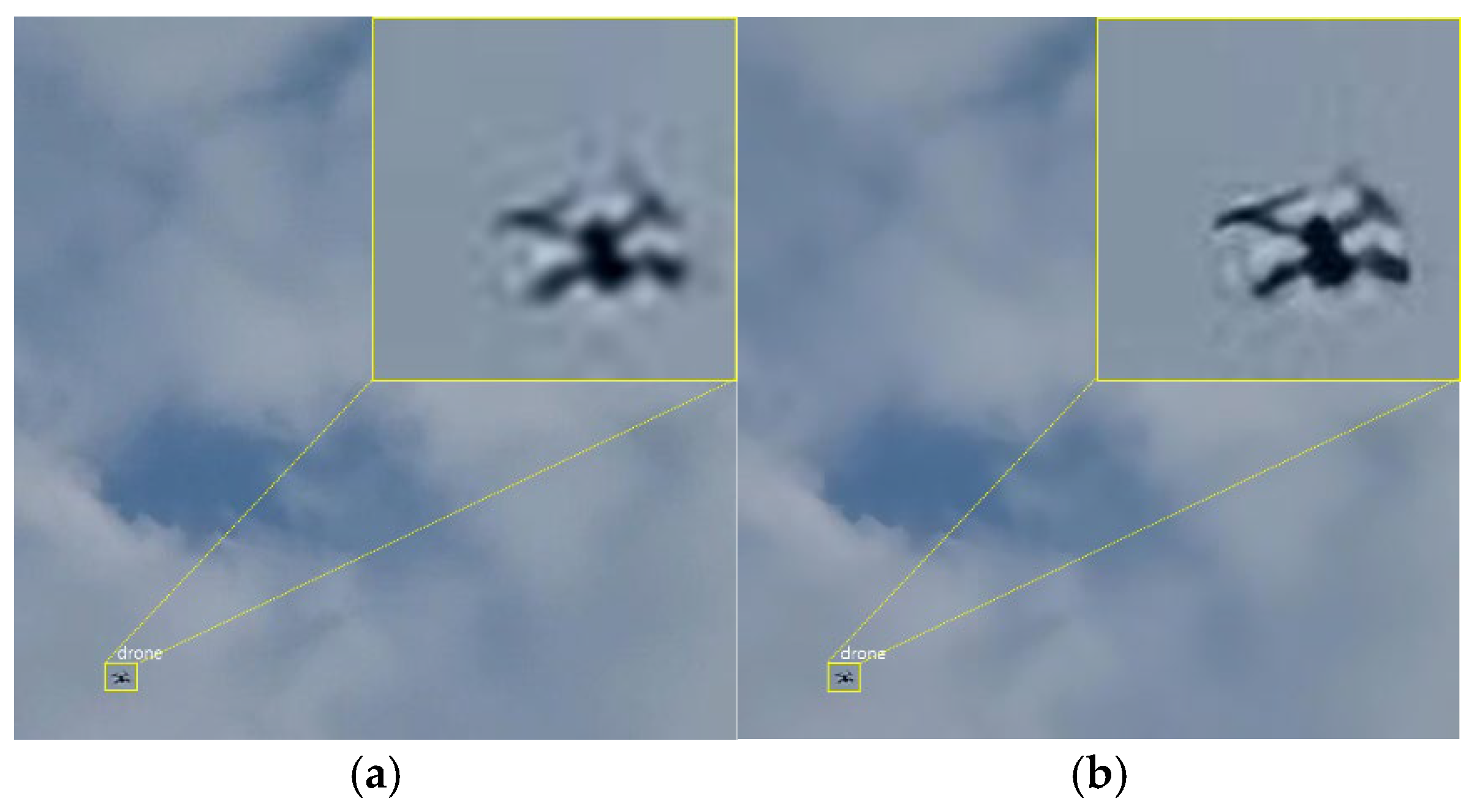

2.3.2. Image Enhancement

2.3.3. Object Detection

3. Experimental Results

3.1. Experimental Setup

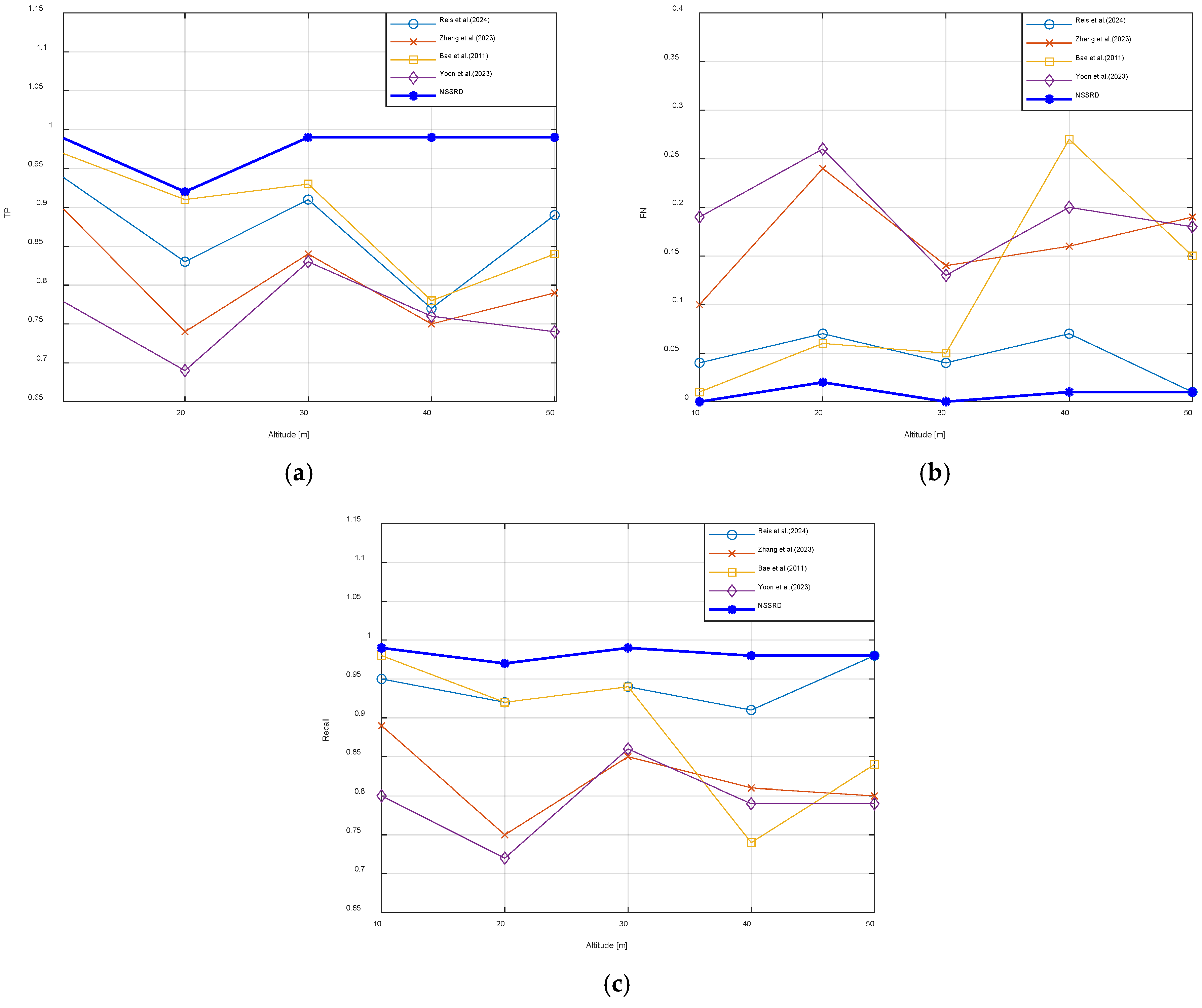

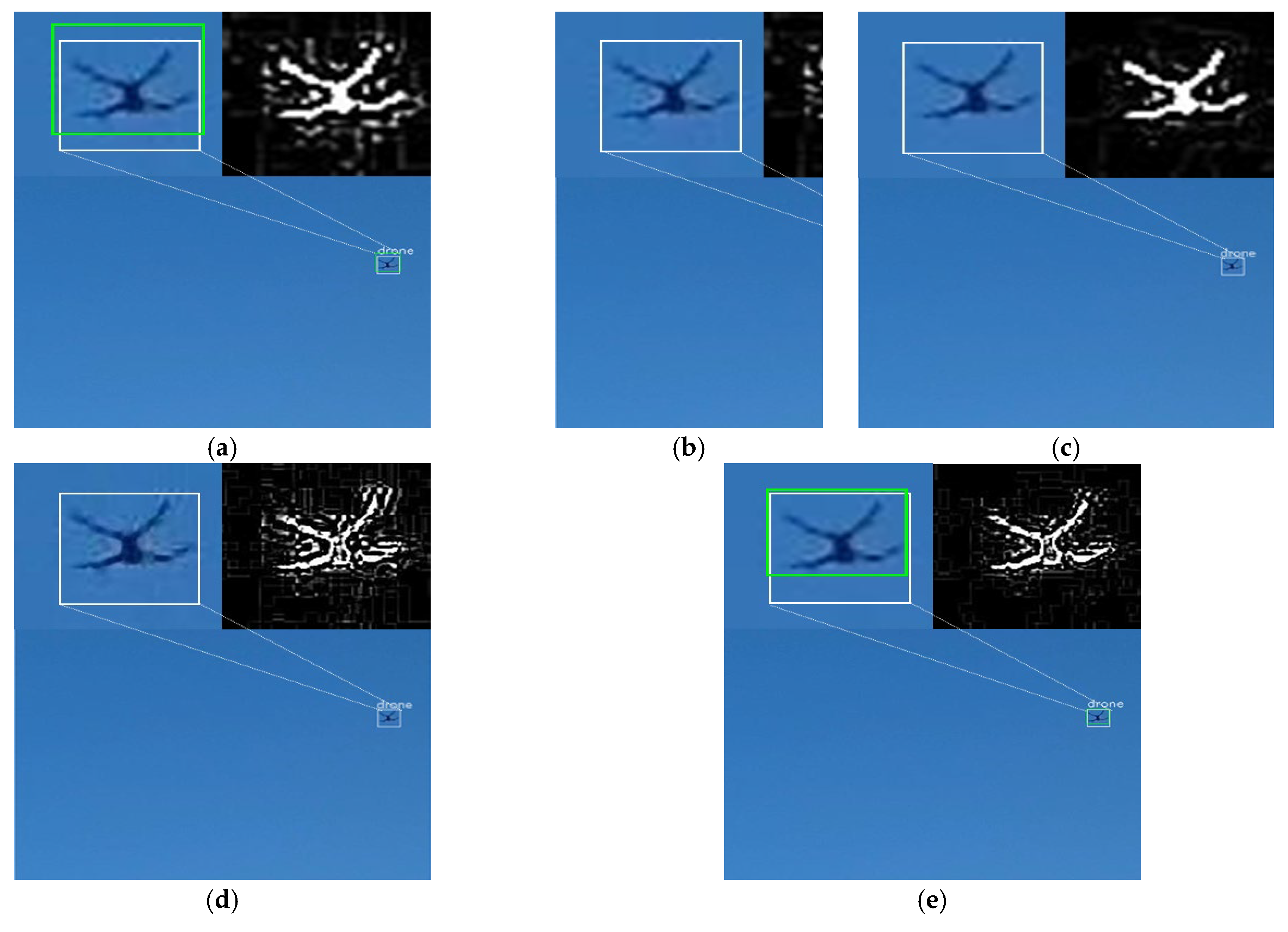

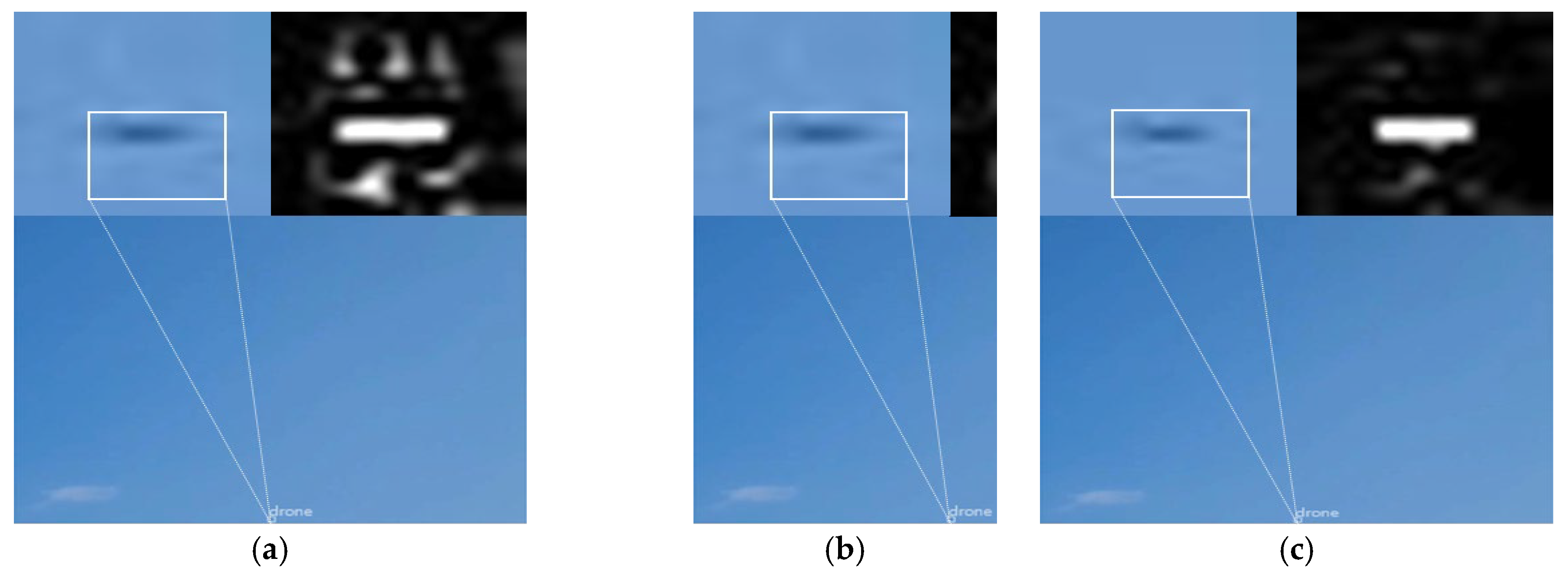

3.2. Comparative Evaluation of Detection Performance

3.3. Comparative Evaluation of Inference Speed

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yoo, J.; Cho, J. Enhanced Detection of Small Unmanned Aerial System Using Noise Suppression Super-Resolution Detector for Effective Airspace Surveillance. Appl. Sci. 2025, 15, 3076. https://doi.org/10.3390/app15063076
