Object Detection in Complex Traffic Scenes Based on Environmental Perception Attention and Three-Scale Feature Fusion

Abstract

1. Introduction

2. Related Work

2.1. Attention Mechanism

2.2. Traffic Scene Object Detection

3. Method

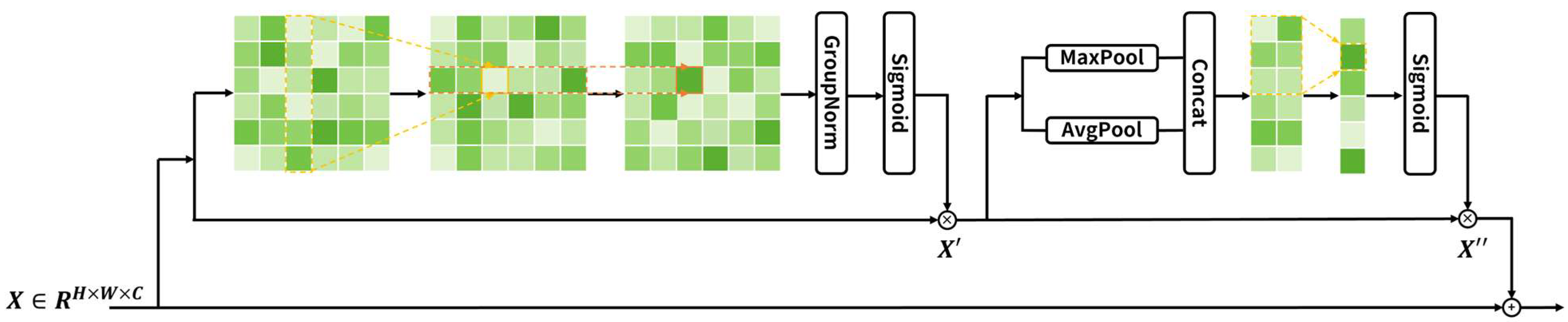

3.1. Environmental Perception Attention (EPA)

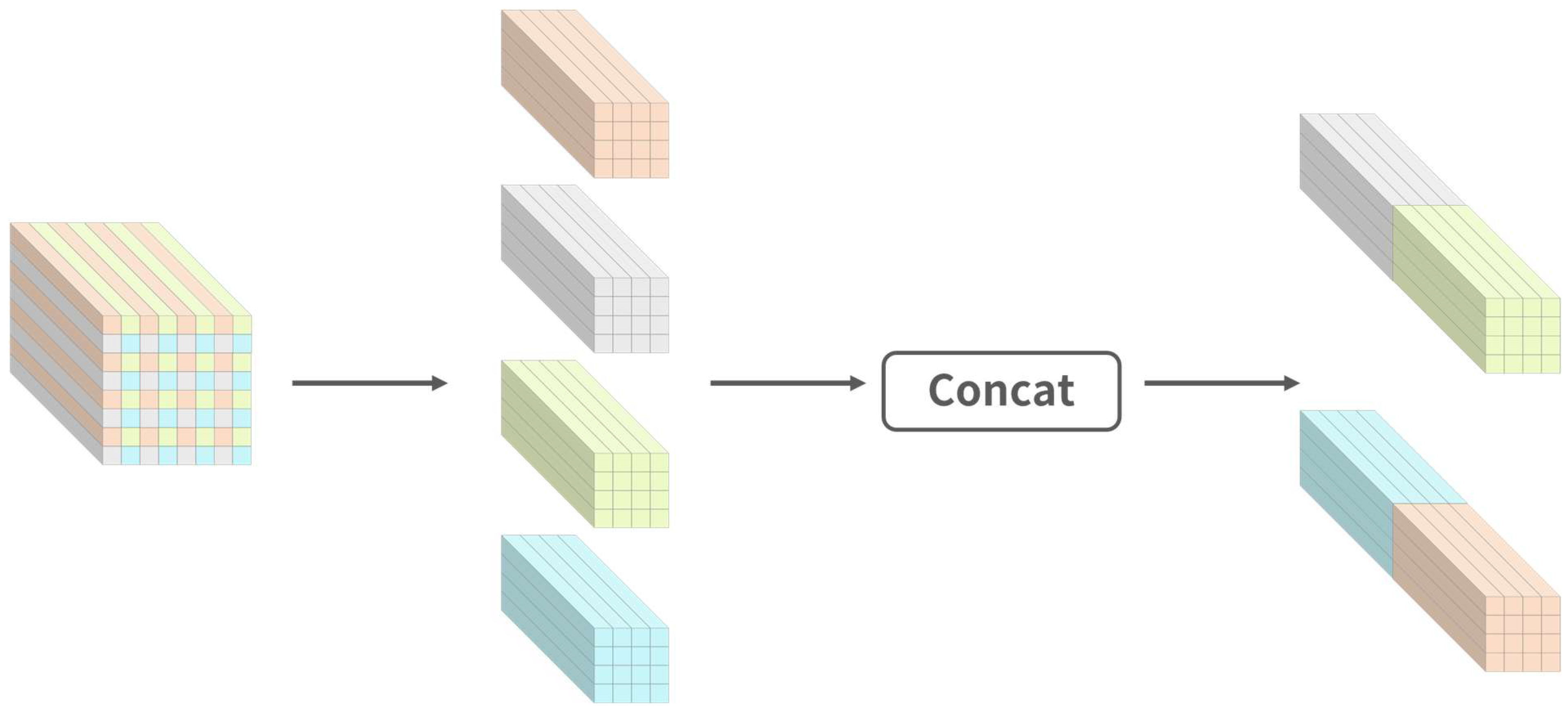

3.2. Three-Scale Fusion Module (TSFM)

4. Experimental Results

4.1. Dataset and Experimental Environment

4.2. Comparative Experiment

4.3. Ablation Study

4.3.1. Environmental Perception Attention (EPA)

4.3.2. Three-Scale Fusion Module (TSFM)

4.3.3. Ablation Experiment

4.4. Visualization Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91. [Google Scholar] [CrossRef] [PubMed]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar]

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO; Version 8.0.0; Ultralytics: UK, 2023; Available online: https://github.com/ultralytics/ultralytics (accessed on 1 March 2024).

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 213–229. [Google Scholar]

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974. [Google Scholar]

- Ding, X.; Zhang, X.; Han, J.; Ding, G. Scaling up your kernels to 31 × 31: Revisiting large kernel design in cnns. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11963–11975. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022. [Google Scholar]

- Vaswani, A. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30. [Google Scholar] [CrossRef]

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803. [Google Scholar]

- Zhu, Z.; Xu, M.; Bai, S.; Huang, T.; Bai, X. Asymmetric non-local neural networks for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 593–602. [Google Scholar]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768. [Google Scholar]

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164. [Google Scholar]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Park, J. Bam: Bottleneck attention module. arXiv 2018, arXiv:1807.06514. [Google Scholar]

- Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE/CVF Conference On computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 510–519. [Google Scholar]

- Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. Gcnet: Non-local networks meet squeeze-excitation networks and beyond. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019. [Google Scholar]

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference On computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154. [Google Scholar]

- Hou, Q.; Zhang, L.; Cheng, M.M.; Feng, J. Strip pooling: Rethinking spatial pooling for scene parsing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 4003–4012. [Google Scholar]

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 13713–13722. [Google Scholar]

- Xu, W.; Wan, Y. ELA: Efficient Local Attention for Deep Convolutional Neural Networks. arXiv 2024, arXiv:2403.01123. [Google Scholar]

- Wang, X.; Wang, S.; Cao, J.; Wang, Y. Data-driven based tiny-YOLOv3 method for front vehicle detection inducing SPP-net. IEEE Access 2020, 8, 110227–110236. [Google Scholar] [CrossRef]

- Wu, T.H.; Wang, T.W.; Liu, Y.Q. Real-time vehicle and distance detection based on improved YOLOv5 network. In Proceedings of the 2021 3rd World Symposium on Artificial Intelligence (WSAI), Online, 18–20 June 2021; pp. 24–28. [Google Scholar]

- Carrasco, D.P.; Rashwan, H.A.; García, M.Á.; Puig, D. T-YOLO: Tiny vehicle detection based on YOLO and multi-scale convolutional neural networks. IEEE Access 2021, 11, 22430–22440. [Google Scholar] [CrossRef]

- Guo, X.; Liu, Q.; Qin, Z.; Xu, Y. Target Detection of Forward Vehicle Based on Improved SSD. In Proceedings of the 2021 IEEE 6th International Conference on Cloud Computing and Big Data Analytics (ICCCBDA), Chengdu, China, 24–26 April 2021. [Google Scholar]

- Mao, Q.C.; Sun, H.M.; Zuo, L.Q.; Jia, R.S. Finding every car: A traffic surveillance multi-scale vehicle object detection method. Appl. Intell. 2020, 50, 3125–3136. [Google Scholar] [CrossRef]

- Alsanabani, A.A.; Saeed, S.A.; Al-Mkhlafi, M.; Albishari, M. A low cost and real time vehicle detection using enhanced YOLOv4-tiny. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 28–30 June 2021; pp. 372–377. [Google Scholar]

- Benjumea, A.; Teeti, I.; Cuzzolin, F.; Bradley, A. YOLO-Z: Improving small object detection in YOLOv5 for autonomous vehicles. arXiv 2021, arXiv:2112.11798. [Google Scholar]

- Bie, M.; Liu, Y.; Li, G.; Hong, J.; Li, J. Real-time vehicle detection algorithm based on a lightweight You-Only-Look-Once (YOLOv5n-L) approach. Expert Syst. Appl. 2023, 213, 119108. [Google Scholar] [CrossRef]

- Ghosh, R. On-road vehicle detection in varying weather conditions using faster R-CNN with several region proposal networks. Multimed. Tools Appl. 2021, 80, 25985–25999. [Google Scholar] [CrossRef]

- Zhao, M.; Zhong, Y.; Sun, D.; Chen, Y. Accurate and efficient vehicle detection framework based on SSD algorithm. IET Image Process. 2021, 15, 3094–3104. [Google Scholar] [CrossRef]

- Zhao, H.; Zhang, S.; Peng, X.; Lu, Z.; Li, G. Improved object detection method for autonomous driving based on DETR. Front. Neurorobot. 2025, 18, 1484276. [Google Scholar] [CrossRef]

- Tang, Y.; Han, K.; Guo, J.; Xu, C.; Xu, C.; Wang, Y. GhostNetv2: Enhance cheap operation with long-range attention. Adv. Neural Inf. Process. Syst. 2022, 35, 9969–9982. [Google Scholar]

- Guo, M.H.; Lu, C.Z.; Liu, Z.N.; Cheng, M.M.; Hu, S.M. Visual attention network. Comput. Vis. Media 2023, 9, 733–752. [Google Scholar] [CrossRef]

- Lau, K.W.; Po, L.M.; Rehman, Y.A.U. Large separable kernel attention: Rethinking the large kernel attention design in cnn. Expert Syst. Appl. 2024, 236, 121352. [Google Scholar] [CrossRef]

- Yu, F. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122. [Google Scholar]

- Wu, Y.; He, K. Group normalization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Gao, Z.; Xie, J.; Wang, Q.; Li, P. Global Second-Order Pooling Convolutional Networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019. [Google Scholar]

- Lin, T.Y.; RoyChowdhury, A.; Maji, S. Bilinear CNN models for fine-grained visual recognition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1449–1457. [Google Scholar]

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11534–11542. [Google Scholar]

- Sunkara, R.; Luo, T. No more strided convolutions or pooling: A new CNN building block for low-resolution images and small objects. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Grenoble, France, 19–23 September 2022; Springer Nature: Cham, Switzerland, 2022; pp. 443–459. [Google Scholar]

- Kang, M.; Ting, C.M.; Ting, F.F.; Phan, R.C.W. ASF-YOLO: A novel YOLO model with attentional scale sequence fusion for cell instance segmentation. Image Vis. Comput. 2024, 147, 105057. [Google Scholar] [CrossRef]

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. Bdd100k: A diverse driving dataset for heterogeneous multitask learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2636–2645. [Google Scholar]

- Luo, W.; Li, Y.; Urtasun, R.; Zemel, R. Understanding the effective receptive field in deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2016, 29, 4898–4906. [Google Scholar]

- Im Choi, J.; Tian, Q. Visual-saliency-guided channel pruning for deep visual detectors in autonomous driving. In Proceedings of the 2023 IEEE Intelligent Vehicles Symposium (IV), Anchorage, AK, USA, 4–7 June 2023; pp. 1–6. [Google Scholar]

- Lan, Q.; Tian, Q. Gradient-guided knowledge distillation for object detectors. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 1–6 January 2024; pp. 424–433. [Google Scholar]

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237. [Google Scholar] [CrossRef]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar]

| Model | Backbone | Input Size | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) | Flops (G) |

|---|---|---|---|---|---|---|

| Faster-RCNN | ResNet-50 | 800 × 800 | 50.4 | 28.2 | 41.389 | 86.1 |

| SSD | VGG-16 | 800 × 800 | 48.7 | 24.0 | 25.566 | 88.6 |

| TOOD | ResNet-50 | 800 × 800 | 57.0 | 31.4 | 32.037 | 74.1 |

| VFNet | ResNet-50 | 800 × 800 | 54.5 | 29.6 | 32.728 | 70.9 |

| YOLOv3-tiny | Darknet53 | 640 × 640 | 40.4 | 22.3 | 12.136 | 19.1 |

| YOLOv5s | CSPDarknet53+SPPF | 640 × 640 | 59.1 | 33.7 | 9.153 | 24.2 |

| YOLOv10s | CSPDarknet53+SPPF | 640 × 640 | 58.8 | 33.9 | 8.042 | 24.5 |

| YOLO11s | CSPDarknet53+SPPF | 640 × 640 | 59.7 | 33.9 | 9.416 | 21.3 |

| Ours | CSPDarknet53+SPPF | 640 × 640 | 60.9 | 34.9 | 11.193 | 30.1 |

| Setting | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) | Flops (G) |

|---|---|---|---|---|

| Baseline | 59.6 | 34.0 | 11.139 | 28.7 |

| +EPA (k = 5) | 60.1 | 34.5 | 11.152 | 28.7 |

| +EPA (k = 7) | 60.0 | 34.4 | 11.156 | 28.8 |

| +EPA (k = 9) | 60.2 | 34.5 | 11.160 | 28.8 |

| +EPA (k = 17) | 60.5 | 34.6 | 11.175 | 28.9 |

| +EPA (k = 19) | 60.2 | 34.6 | 11.179 | 28.9 |

| +EPA (k = 21) | 60.2 | 34.5 | 11.183 | 28.9 |

| +EPA (k = 23) | 60.1 | 34.4 | 11.187 | 29.0 |

| Setting | r | |||

|---|---|---|---|---|

| t = 20% | t = 30% | t = 50% | t = 99% | |

| Baseline | 3.11% | 5.13% | 10.46% | 76.28% |

| +EPA (k = 5) | 3.34% | 5.42% | 11.07% | 89.36% |

| +EPA (k = 7) | 3.22% | 5.13% | 10.66% | 92.94% |

| +EPA (k = 9) | 3.22% | 5.13% | 10.66% | 91.14% |

| +EPA (k = 17) | 3.34% | 5.27% | 10.86% | 90.54% |

| +EPA (k = 19) | 3.34% | 5.27% | 11.07% | 94.75% |

| +EPA (k = 21) | 3.11% | 5.13% | 10.66% | 90.54% |

| +EPA (k = 23) | 3.22% | 5.27% | 10.86% | 89.95% |

| Description | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) | Flops (G) |

|---|---|---|---|---|

| Baseline | 59.6 | 34.0 | 11.139 | 28.7 |

| +TSFM (P3~P5) | 59.7 | 34.1 | 11.271 | 29.9 |

| +TSFM (P2~P4) | 59.9 | 34.4 | 11.172 | 30.0 |

| +TSFM (P2~P4)+TSFM (P3~P5) | 60.2 | 34.4 | 11.304 | 31.2 |

| Method | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) | Flops (G) | FPS | ||

|---|---|---|---|---|---|---|---|

| Baseline | EPA (k = 9) | TSFM (P2~P4) | |||||

| √ | 59.6 | 34.0 | 11.139 | 28.7 | 160 | ||

| √ | √ | 60.2 | 34.5 | 11.160 | 28.8 | 142 | |

| √ | √ | 59.9 | 34.4 | 11.172 | 30.0 | 153 | |

| √ | √ | √ | 60.9 | 34.9 | 11.193 | 30.1 | 134 |

| Model | mAP@0.5 (%) | mAP@0.5:0.95 (%) |

|---|---|---|

| Baseline | 94.5 | 76.3 |

| Ours | 95.1 | 76.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yuan, C.; Liu, J.; Wang, H.; Yang, Q. Object Detection in Complex Traffic Scenes Based on Environmental Perception Attention and Three-Scale Feature Fusion. Appl. Sci. 2025, 15, 3163. https://doi.org/10.3390/app15063163

Yuan C, Liu J, Wang H, Yang Q. Object Detection in Complex Traffic Scenes Based on Environmental Perception Attention and Three-Scale Feature Fusion. Applied Sciences. 2025; 15(6):3163. https://doi.org/10.3390/app15063163

Chicago/Turabian StyleYuan, Chunmiao, Jinlong Liu, Haobo Wang, and Qingyong Yang. 2025. "Object Detection in Complex Traffic Scenes Based on Environmental Perception Attention and Three-Scale Feature Fusion" Applied Sciences 15, no. 6: 3163. https://doi.org/10.3390/app15063163

APA StyleYuan, C., Liu, J., Wang, H., & Yang, Q. (2025). Object Detection in Complex Traffic Scenes Based on Environmental Perception Attention and Three-Scale Feature Fusion. Applied Sciences, 15(6), 3163. https://doi.org/10.3390/app15063163