Optimizing Methanol Injection Quantity for Gas Hydrate Inhibition Using Machine Learning Models

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Generation

2.2. Data Preparation

2.3. Machine Learning Models

2.4. Performance Evaluation Metrics

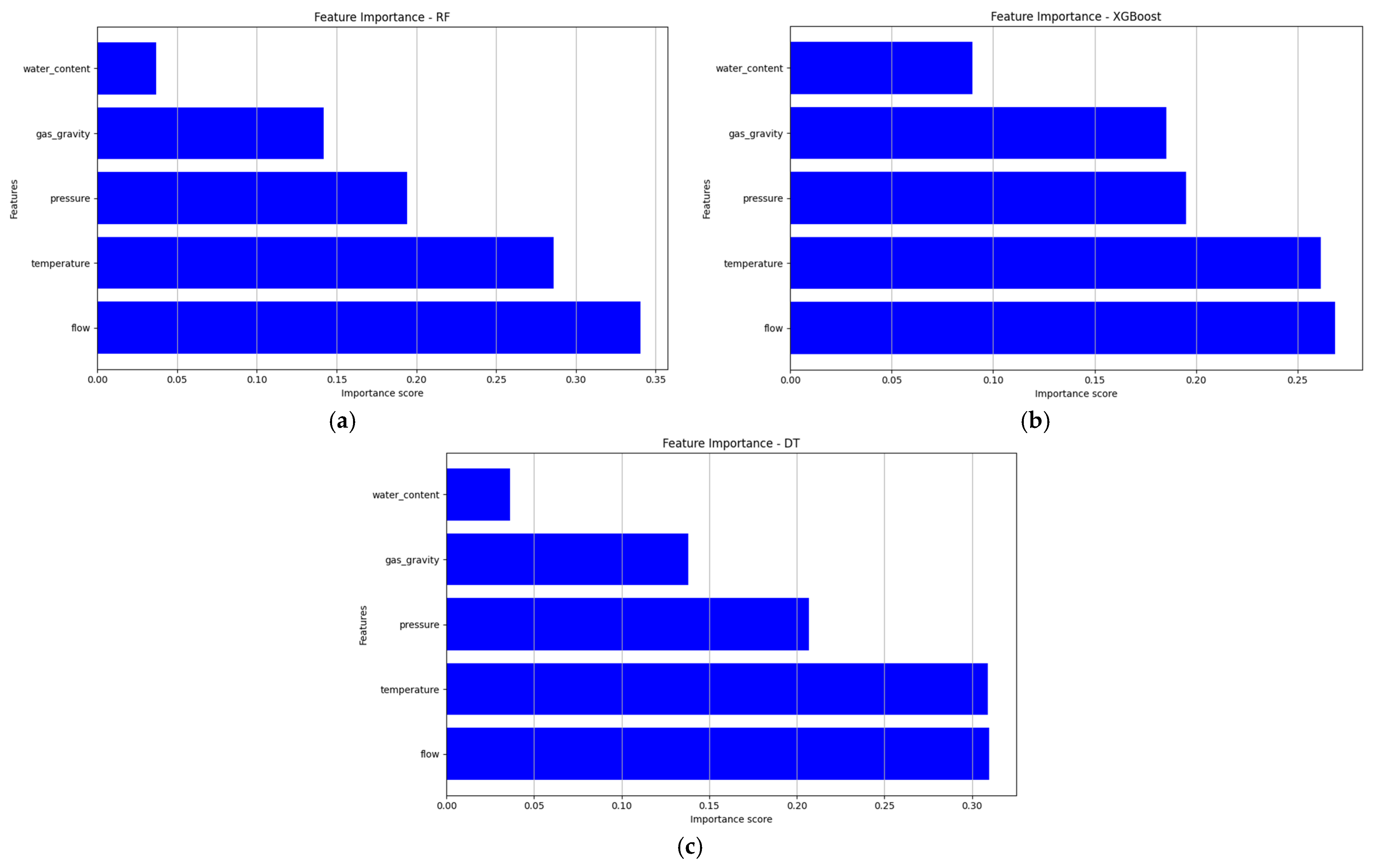
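Section 2.4 evaluates the models with the three metrics named in the abbreviations list (Adj. R², MAE, RMSE) and reported in the results table. The paper's own formulas survive here only as an image, so this is a minimal sketch that assumes the standard textbook definitions. Adjusted R² is computed as 1 − (1 − R²)(n − 1)/(n − p − 1) for n samples and p input features. If all five features in the input-range table were used, p would be 5. The function names and toy data are hypothetical and do not come from the paper.

```python
import math
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error: the average magnitude of the residuals."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error: square root of the mean squared residual."""
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def adjusted_r2(y_true: Sequence[float], y_pred: Sequence[float], n_features: int) -> float:
    """Adjusted R^2: penalises R^2 for the number of predictors p (n_features)."""
    n = len(y_true)
    dof = n - n_features - 1  # residual degrees of freedom, n - p - 1
    if dof <= 0:
        raise ValueError("adjusted R^2 needs more samples than n_features + 1")
    return 1.0 - (1.0 - r2(y_true, y_pred)) * (n - 1) / dof


if __name__ == "__main__":
    # Hypothetical methanol-rate targets and predictions (not data from the paper).
    y_true = [10.0, 20.0, 30.0, 40.0, 50.0, 60.0, 70.0, 80.0]
    y_pred = [11.0, 19.0, 31.0, 39.0, 52.0, 58.0, 71.0, 79.0]
    print(f"MAE     = {mae(y_true, y_pred):.4f}")
    print(f"RMSE    = {rmse(y_true, y_pred):.4f}")
    print(f"Adj. R2 = {adjusted_r2(y_true, y_pred, n_features=5):.4f}")
```

With the toy data, the script prints MAE = 1.2500, RMSE = 1.3229 and Adj. R2 = 0.9883. RMSE can never be smaller than MAE, and the same ordering holds for every model in the results table. Plain Python keeps the sketch free of dependencies. In practice, scikit-learn's `mean_absolute_error`, `mean_squared_error` and `r2_score` give the same unadjusted quantities.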

2.5. Feature Importance

3. Results and Discussion

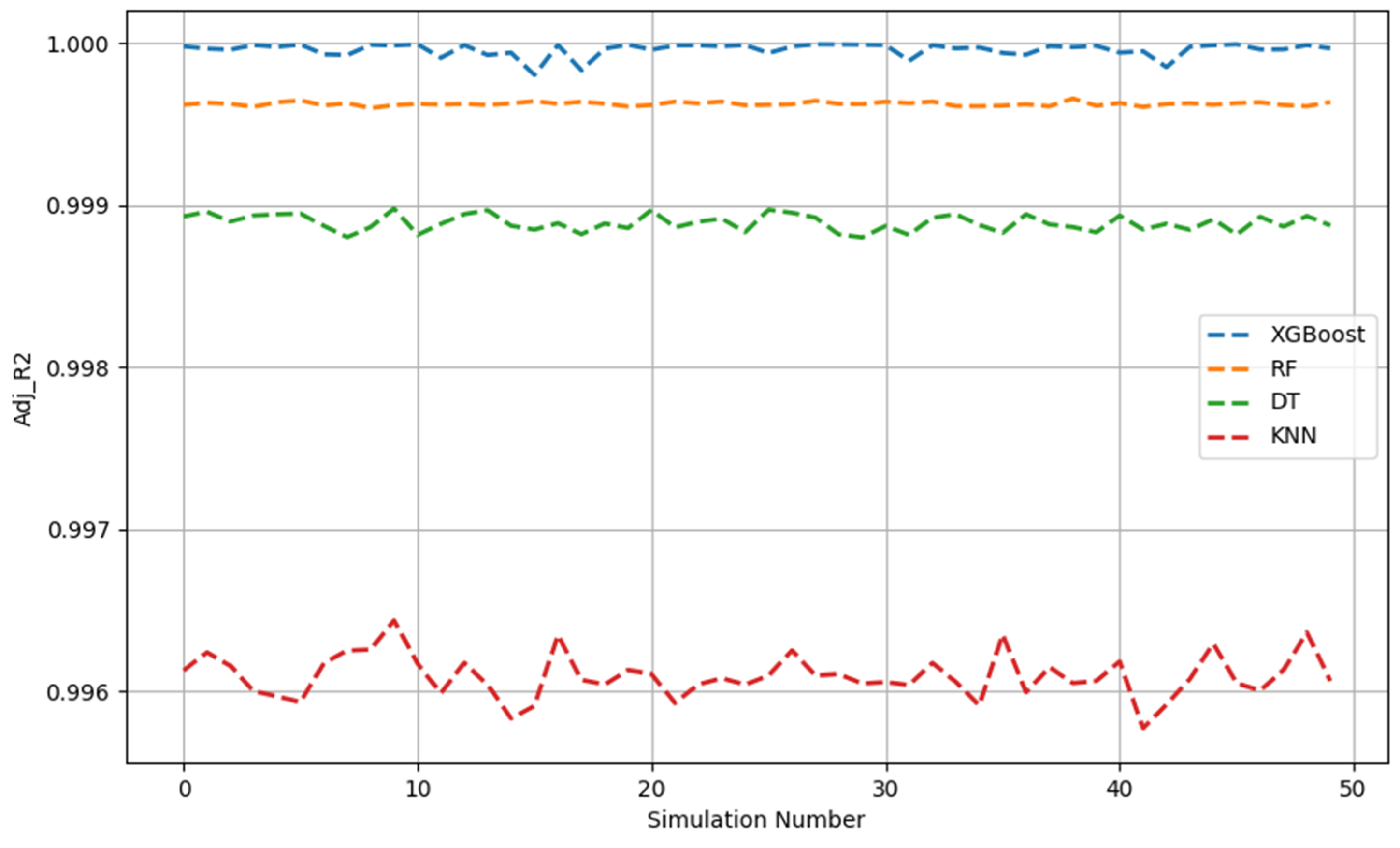

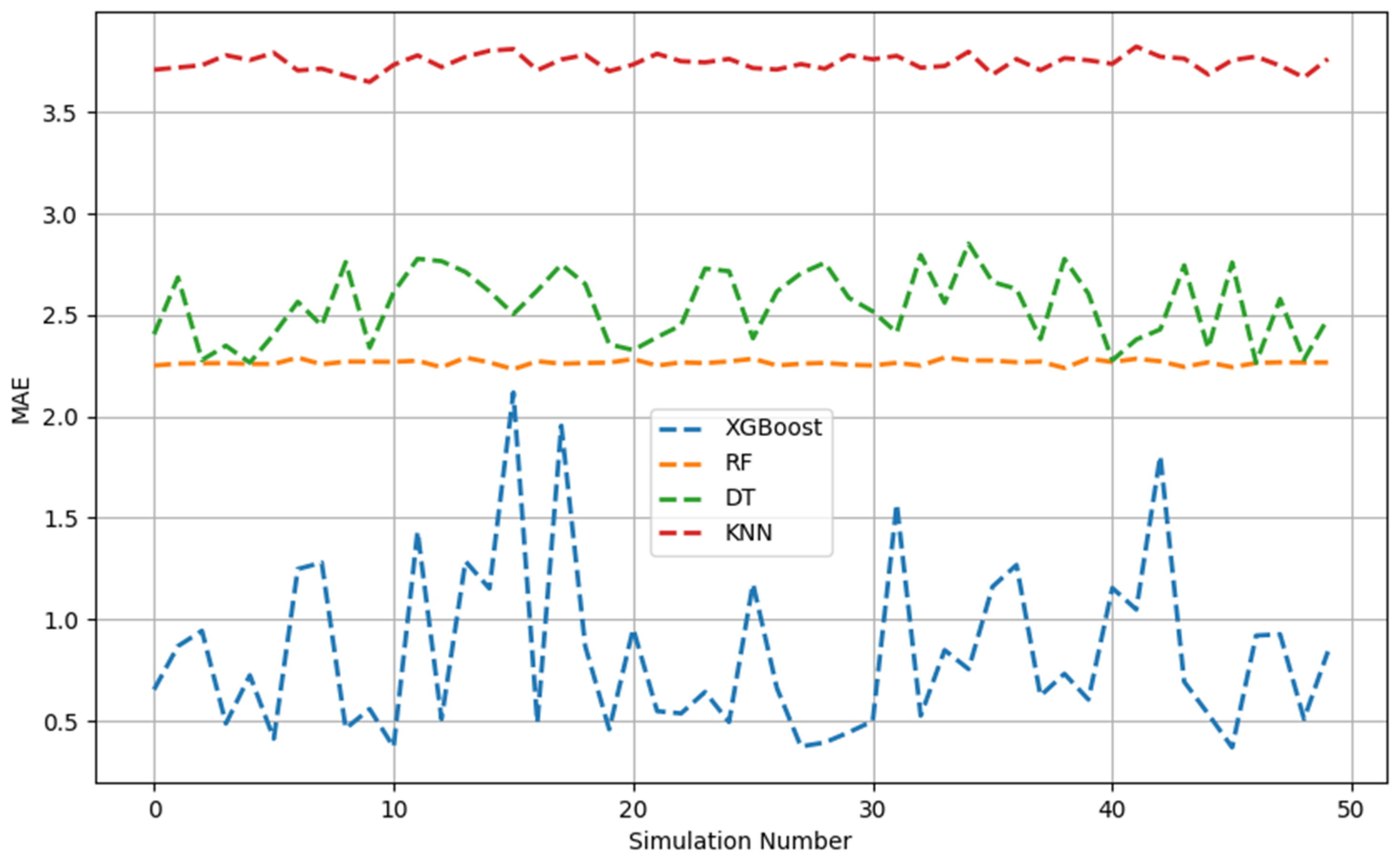

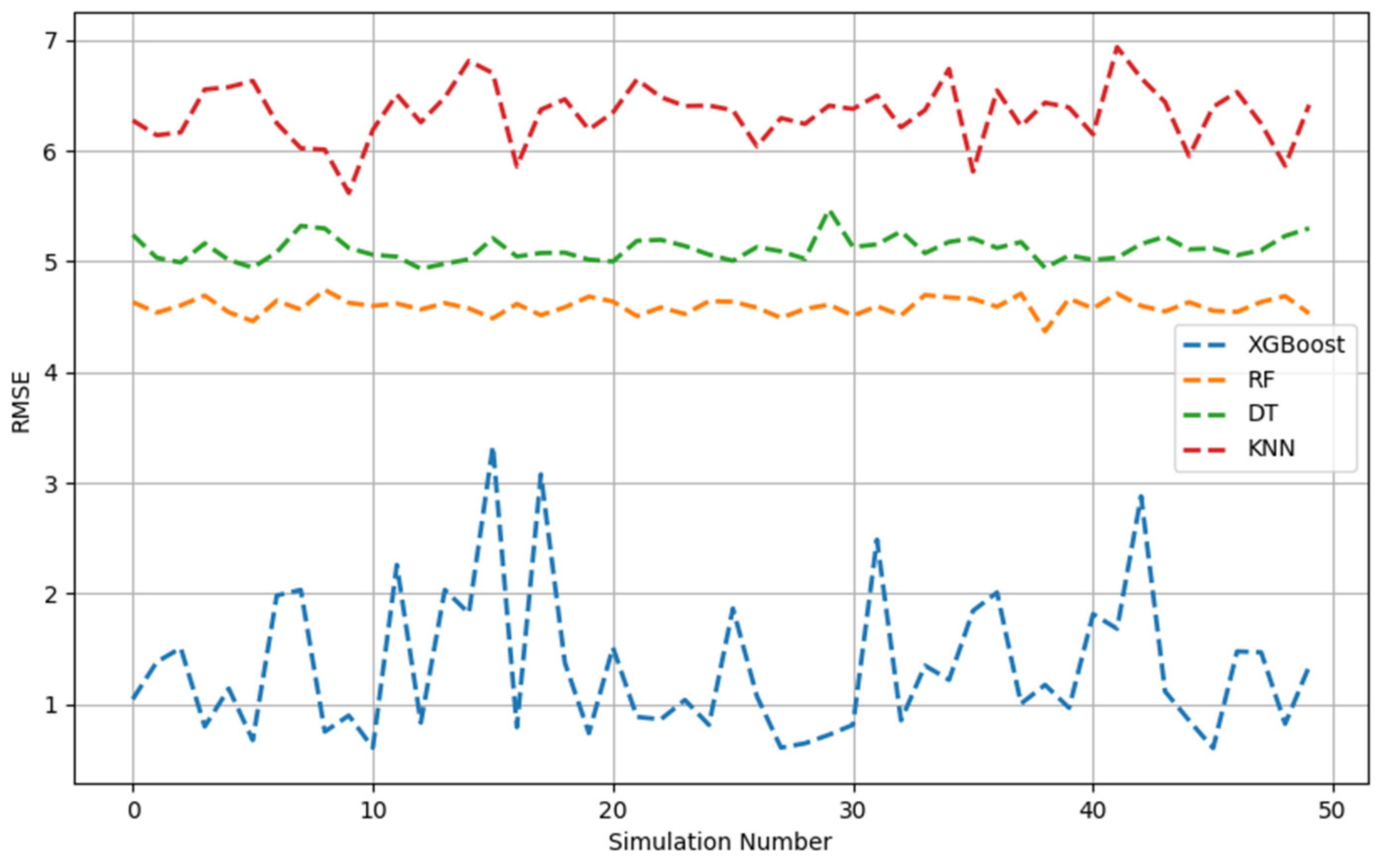

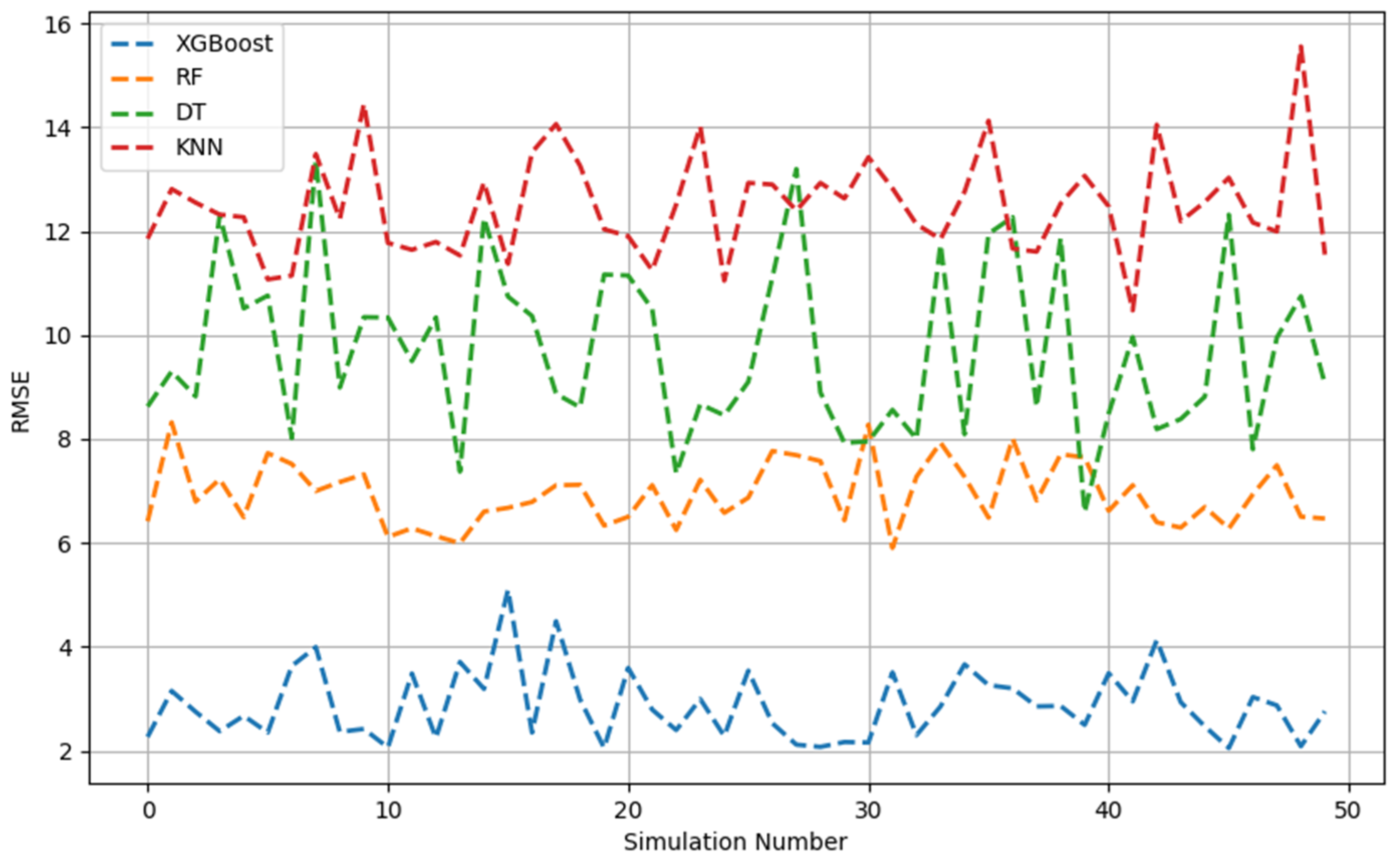

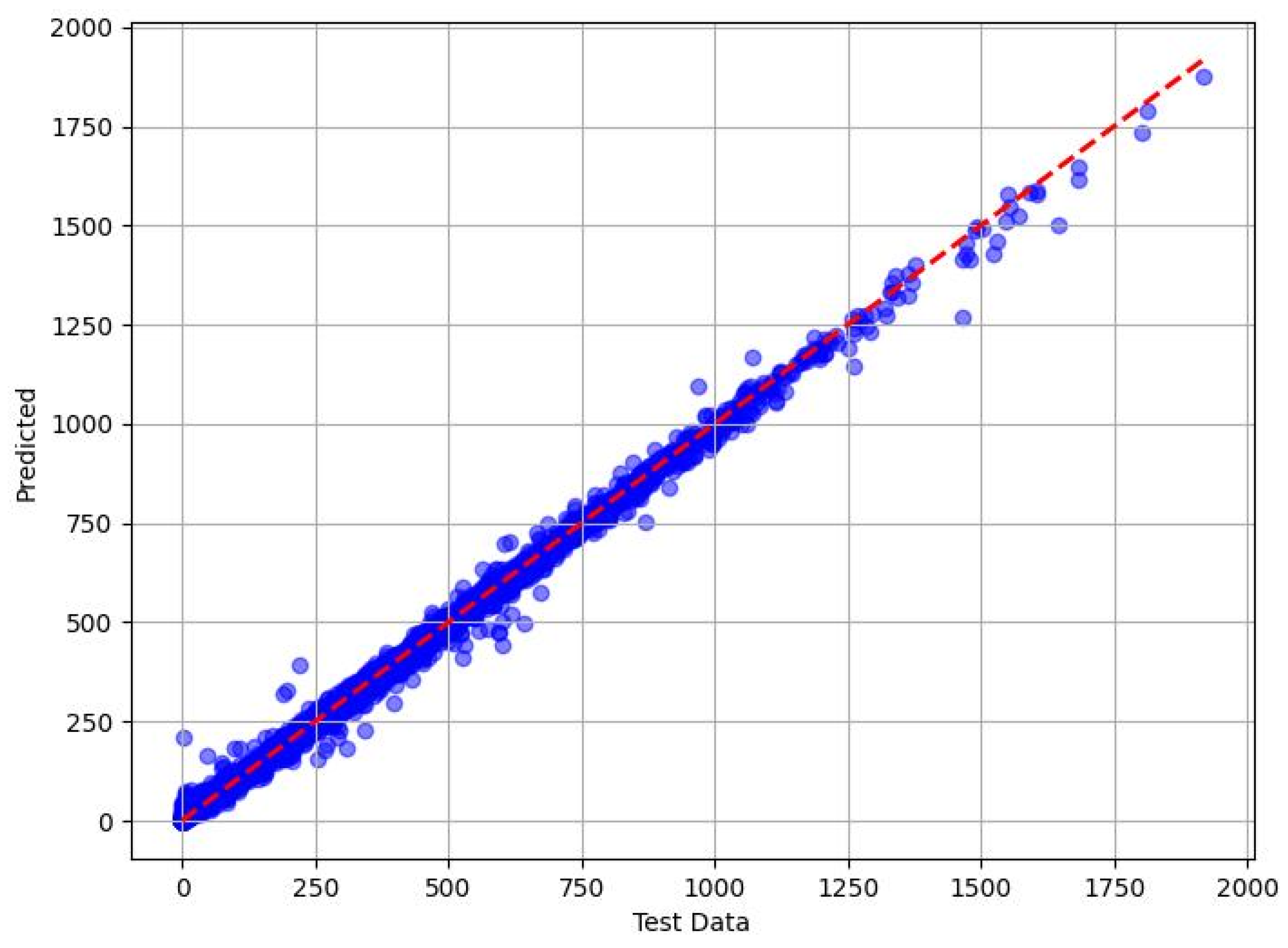

3.1. Analysis of Model Results

3.2. Feature Importance Analysis

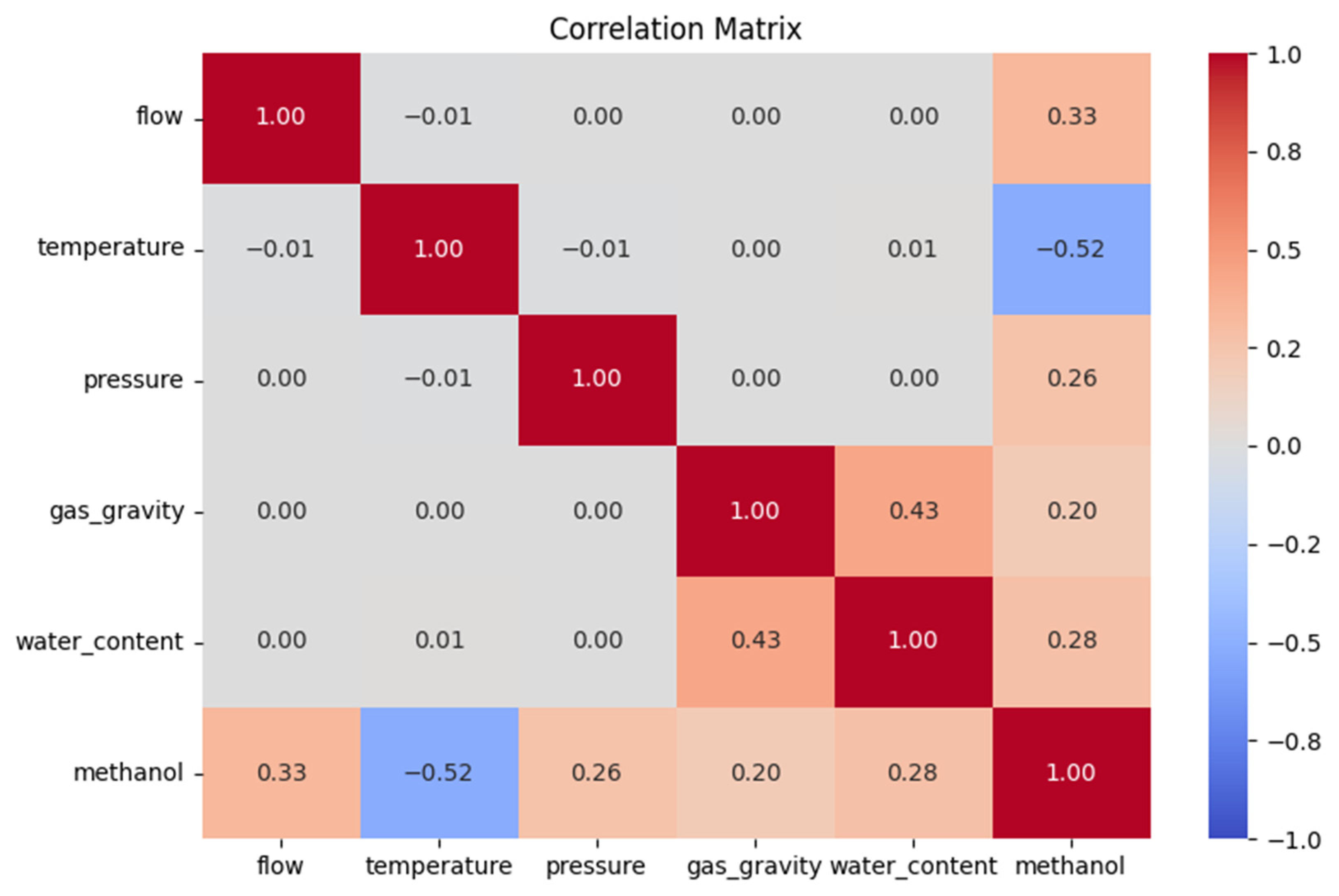

3.3. Correlation Matrix of Input Features and Methanol Injection Rate

4. Conclusions

5. Limitations and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| XGBoost | Extreme Gradient Boosting |
| RF | Random Forest |
| DT | Decision Tree |
| KNN | K-Nearest Neighbors |
| Adj. R² | Adjusted R-Squared |
| MAE | Mean Absolute Error |
| RMSE | Root Mean Square Error |
| ML | Machine Learning |
| HFT | Hydrate Formation Temperature |
| HFP | Hydrate Formation Pressure |
| ANN | Artificial Neural Network |
| SVM | Support Vector Machine |


| Feature | Unit | Minimum | Maximum |
|---|---|---|---|
| Temperature | °C | −15 | 25 |
| Pressure | bar | 5 | 100 |
| Flow rate | kg/h | 10,000 | 500,000 |
| Water content | mol% | 0.0007 | 0.004 |
| Specific gravity | - | 0.55 | 0.85 |

| Metric | XGBoost (training) | XGBoost (testing) | RF (training) | RF (testing) | DT (training) | DT (testing) | KNN (training) | KNN (testing) |
|---|---|---|---|---|---|---|---|---|
| Adj. R² | 0.999 | 0.999 | 0.999 | 0.997 | 0.998 | 0.994 | 0.996 | 0.992 |
| MAE | 0.369 | 0.943 | 2.238 | 5.680 | 2.339 | 5.695 | 3.648 | 6.07 |
| RMSE | 0.602 | 2.06 | 4.369 | 6.13 | 5.12 | 6.594 | 5.619 | 10.475 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mukhsaf, M.H.; Li, W.; Jani, G.H. Optimizing Methanol Injection Quantity for Gas Hydrate Inhibition Using Machine Learning Models. Appl. Sci. 2025, 15, 3229. https://doi.org/10.3390/app15063229