Autonomous Mobile Station for Artificial Intelligence Monitoring of Mining Equipment and Risks

Abstract

1. Introduction

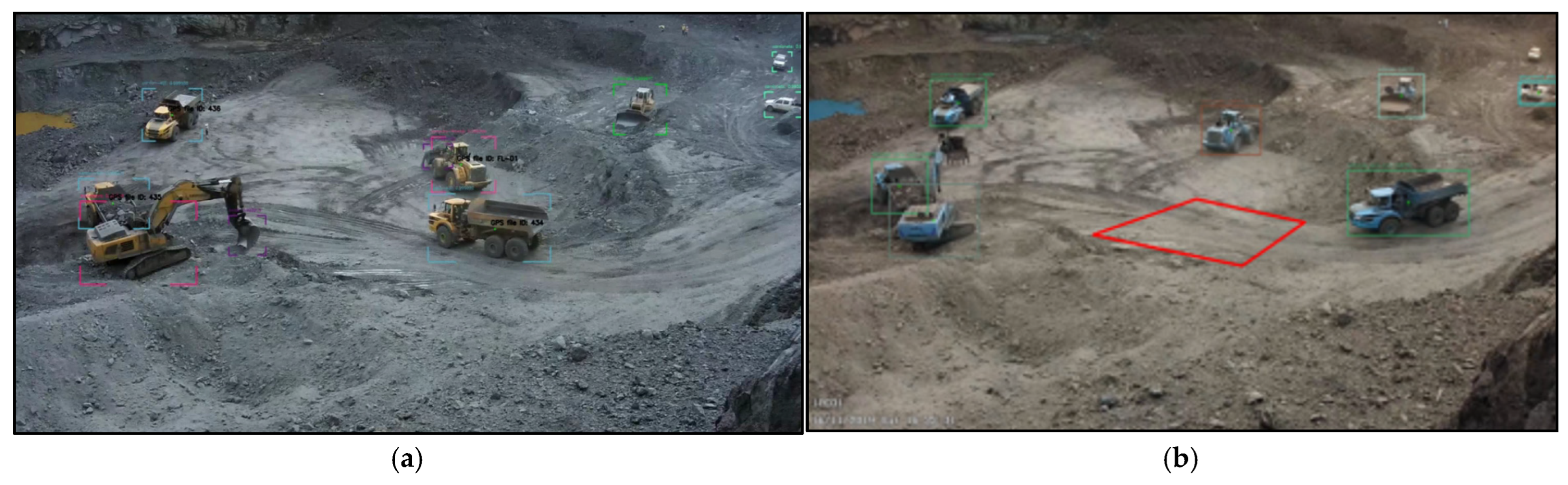

- Development of an autonomous mobile station equipped with computer vision and artificial intelligence, capable of identifying equipment, people, and animals in critical operational areas to improve safety and surveillance.

- Implementation of deep learning algorithms to analyze equipment movements and operating times, improving the allocation of mining equipment and correcting inefficiencies that traditional systems overlook.

- Integration of virtual risk-zone boundaries with automatic real-time alerts whenever an unauthorized presence is detected, to significantly reduce occupational accidents.

- Application of convolutional neural networks (CNNs) trained with data augmentation, which achieved 100% accuracy in identifying key mining equipment during validation tests in real environments.
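The risk-zone alerting listed among the contributions can be illustrated with a point-in-polygon test applied to each detection. The following is a minimal sketch and not the authors' implementation. It assumes that a risk zone is a polygon in image pixel coordinates and that a detection counts as "inside" when the bottom-centre of its bounding box (roughly its ground contact point) falls within that polygon. The `Detection` type, `check_detections` helper, zone coordinates, and class names are all hypothetical.

```python
from dataclasses import dataclass

Point = tuple[float, float]


@dataclass
class Detection:
    """Hypothetical detector output: class label plus box corners in pixels."""
    label: str
    x1: float
    y1: float
    x2: float
    y2: float


def point_in_polygon(pt: Point, polygon: list[Point]) -> bool:
    """Ray-casting test: toggle 'inside' each time a rightward ray from pt crosses an edge."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        xi, yi = polygon[i]
        xj, yj = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through pt can be crossed.
        if (yi > y) != (yj > y):
            # x-coordinate where this edge meets the horizontal line at height y
            x_cross = xi + (y - yi) * (xj - xi) / (yj - yi)
            if x < x_cross:
                inside = not inside
    return inside


def check_detections(detections, zone, restricted_labels):
    """Return the labels of restricted detections whose ground point lies in the zone."""
    alerts = []
    for det in detections:
        if det.label not in restricted_labels:
            continue
        # Bottom-centre of the box approximates where the object touches the ground.
        foot = ((det.x1 + det.x2) / 2, det.y2)
        if point_in_polygon(foot, zone):
            alerts.append(det.label)
    return alerts
```

Using the bottom-centre rather than the box centre is a design choice. It helps avoid false alerts from tall objects, such as a truck bed, that overlap the zone in the image while standing outside it on the ground.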

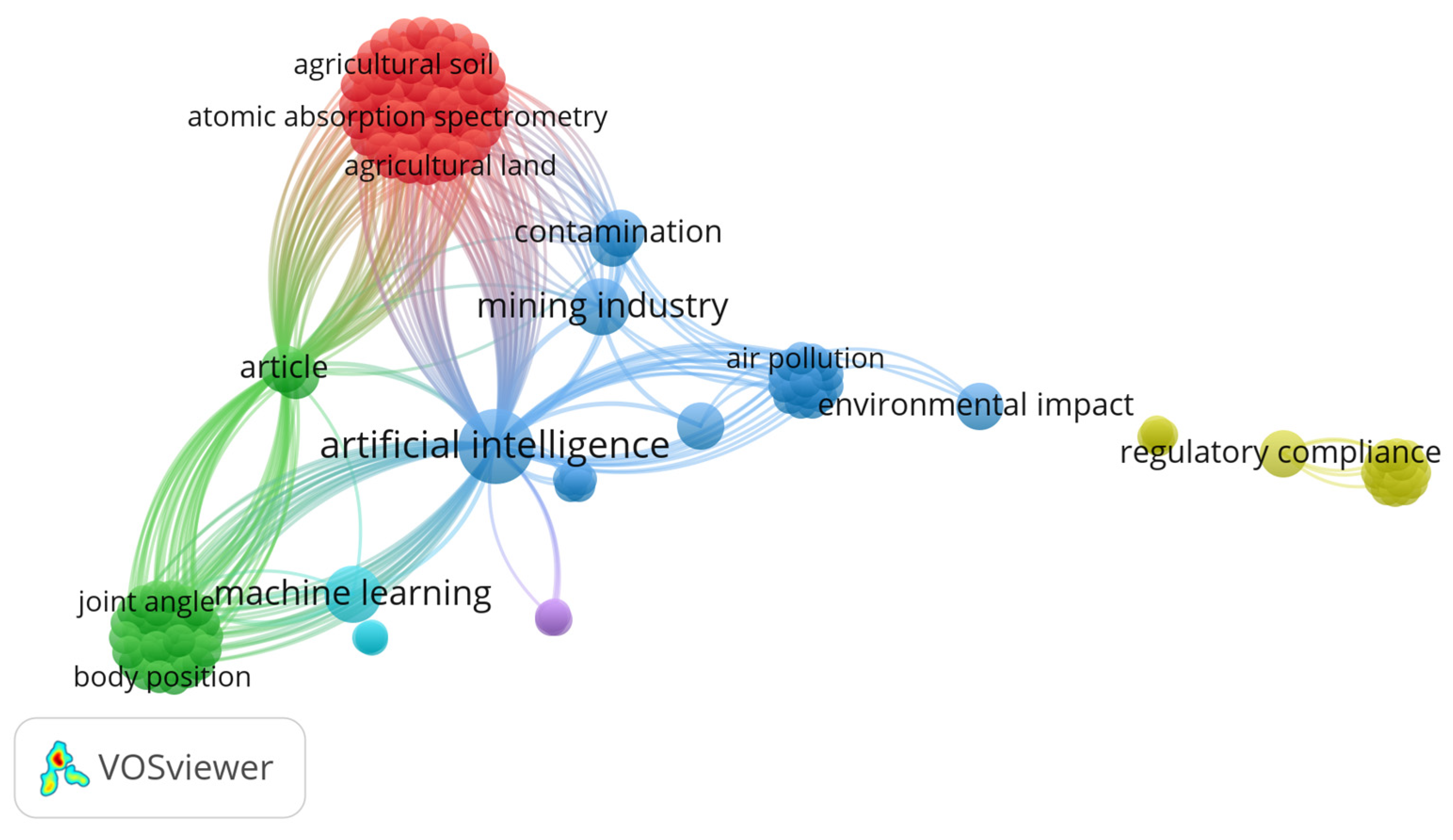

2. Literature Review

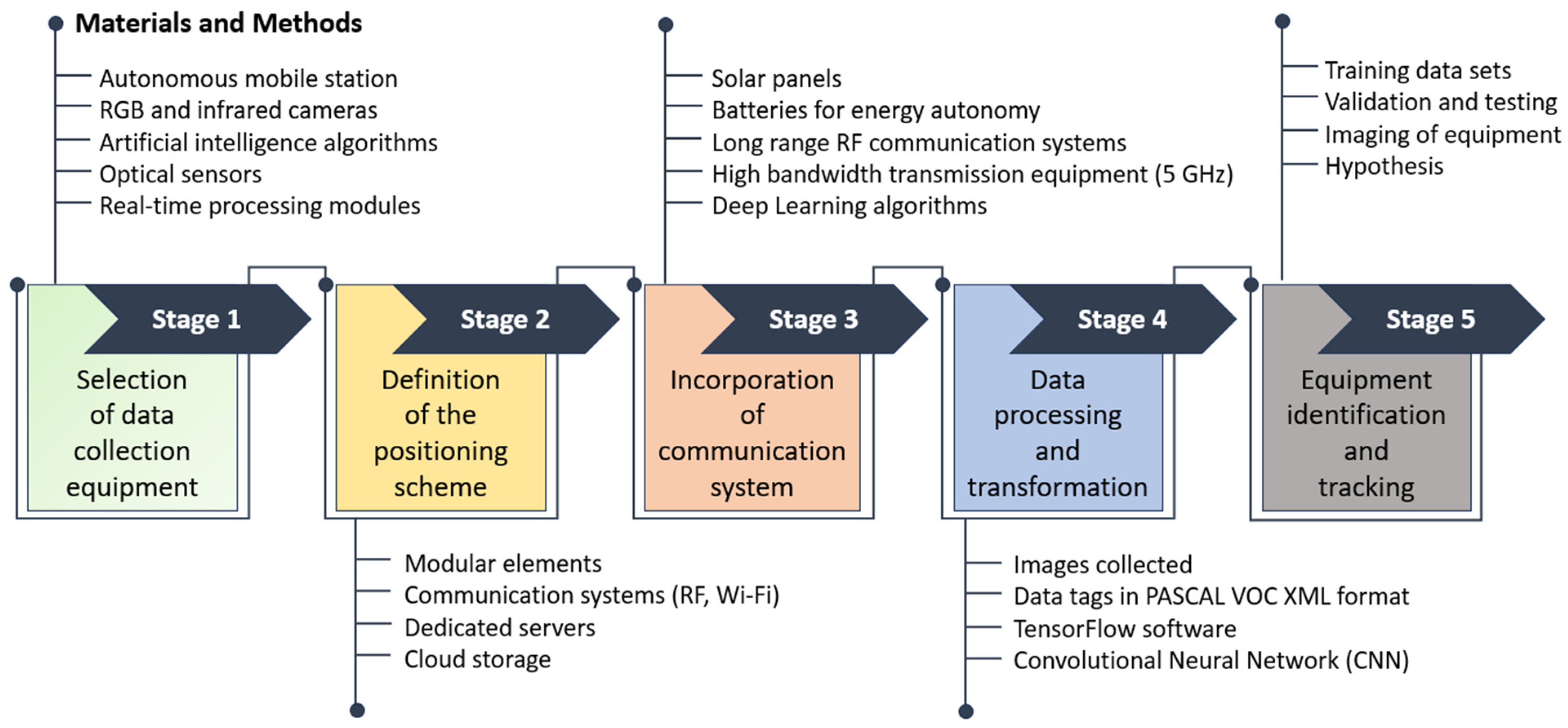

3. Materials and Methods

3.1. Selection of Data Collection Equipment

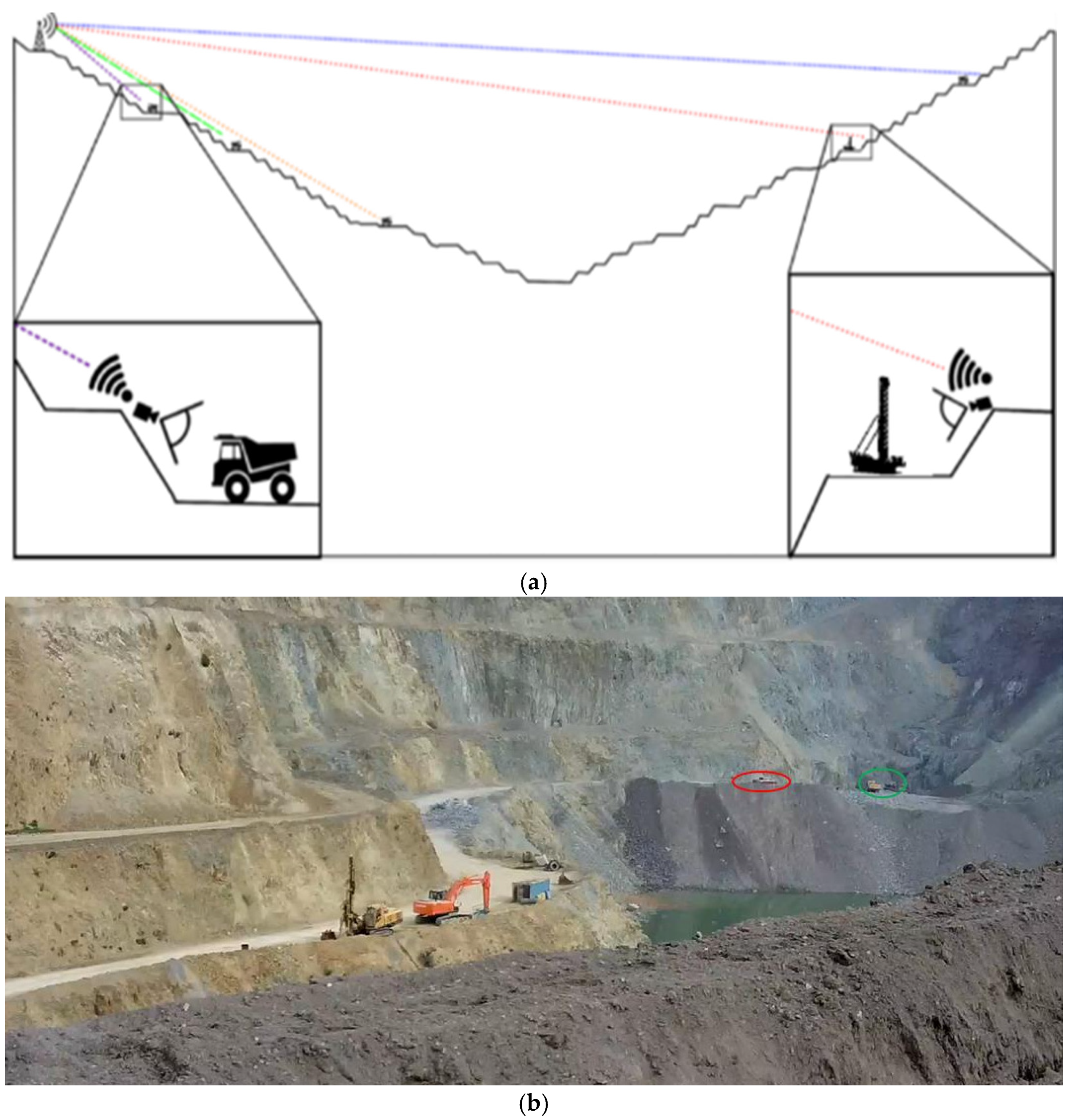

3.2. Definition of the Positioning Scheme

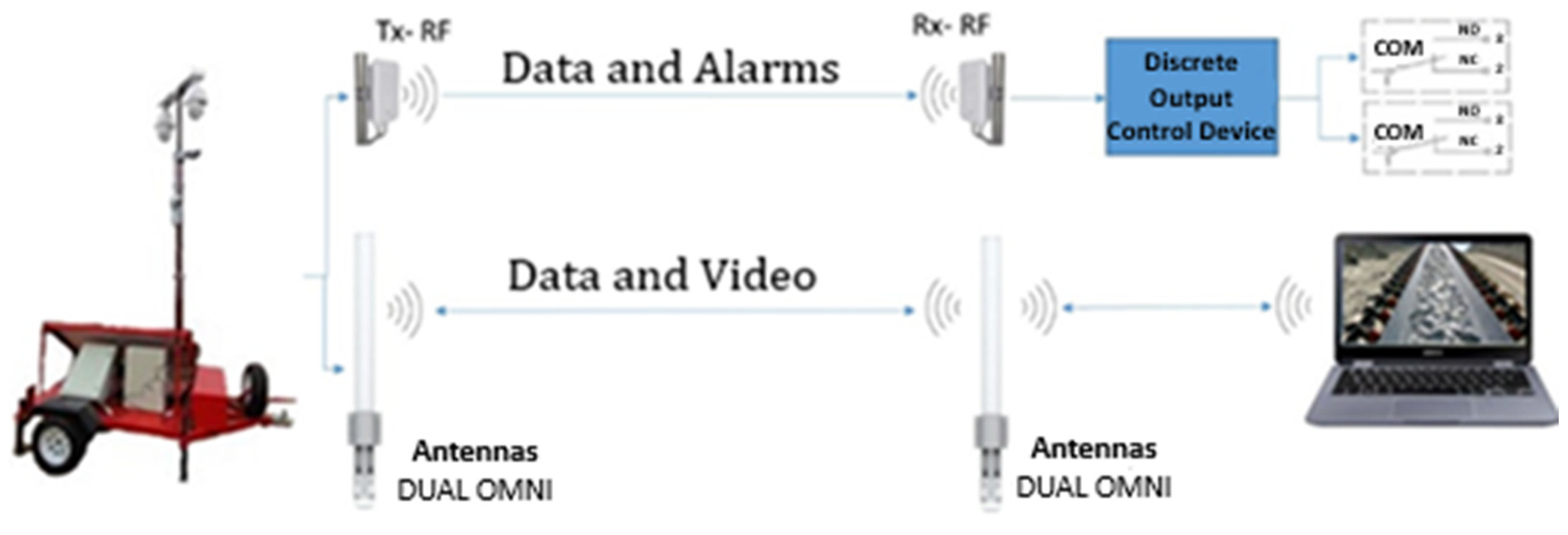

3.3. Incorporation of the Communication System

3.3.1. RF Transmission System (Tx-RF/Rx-RF)

- Transmit power: +20 dBm (100 mW)

- Receiver sensitivity: −139 dBm

- Transmission rate: 0.3 to 62.5 kbps

- Latency: <10 ms

- Antennas: Dual 5 dBi omnidirectional, IP67-rated for outdoor operation
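Together, these specifications define a link budget: the maximum path loss the channel can introduce before the received signal drops below the receiver sensitivity. The sketch below works through that arithmetic. This section does not state the carrier frequency, so the 915 MHz value is a hypothetical ISM-band choice. The sketch also assumes a 5 dBi antenna at each end, and it uses the free-space (Friis) model, which ignores terrain, obstructions, fading, and any fade margin. Real margins at a mine site will be smaller.

```python
import math


def dbm_to_mw(dbm: float) -> float:
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (dbm / 10)


def max_path_loss_db(tx_dbm: float, tx_gain_dbi: float, rx_gain_dbi: float,
                     sensitivity_dbm: float) -> float:
    """Largest channel loss that still leaves the received power at or above sensitivity."""
    return tx_dbm + tx_gain_dbi + rx_gain_dbi - sensitivity_dbm


def fspl_db(distance_km: float, freq_mhz: float) -> float:
    """Free-space path loss in dB, with distance in km and frequency in MHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.44


budget = max_path_loss_db(20, 5, 5, -139)   # values from the RF specification list
loss_8km = fspl_db(8, 915)                  # hypothetical 915 MHz carrier, 8 km range
margin = budget - loss_8km
print(f"Tx power {dbm_to_mw(20):.0f} mW, budget {budget:.0f} dB, "
      f"FSPL at 8 km {loss_8km:.1f} dB, margin {margin:.1f} dB")
```

Under these assumptions the budget is 169 dB and free-space loss at 8 km is roughly 110 dB. The large remaining margin is what leaves room for the non-line-of-sight losses typical of open-pit terrain.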

3.3.2. High Bandwidth (5 GHz) Wi-Fi Link

- Channel width: 80 MHz

- Latency: <50 ms

- Security protocol: WPA2-PSK with 128-bit AES encryption

- Range: 500–800 m (line of sight, LoS)

- Antennas: 5 dBi dual-band omnidirectional, with low-interference shielding
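With WPA2-PSK, every station derives the same 256-bit pairwise master key from the shared passphrase and the network name (SSID). That key then seeds the 4-way handshake, and data frames are encrypted with CCMP, which uses 128-bit AES. The sketch below shows only the key-derivation step defined by IEEE 802.11i (PBKDF2 with HMAC-SHA1, the SSID as salt, 4096 iterations). The passphrase and SSID are hypothetical placeholders, not the station's configuration.

```python
import hashlib


def wpa2_pmk(passphrase: str, ssid: str) -> bytes:
    """Derive the WPA2-PSK pairwise master key (PBKDF2-HMAC-SHA1, 4096 rounds, 32 bytes)."""
    # WPA2 passphrases must be 8 to 63 printable ASCII characters.
    if not 8 <= len(passphrase) <= 63:
        raise ValueError("WPA2 passphrase must be 8-63 characters")
    return hashlib.pbkdf2_hmac(
        "sha1",
        passphrase.encode("ascii"),  # non-ASCII input raises UnicodeEncodeError (a ValueError)
        ssid.encode("utf-8"),        # the SSID acts as the salt
        4096,
        32,
    )


pmk = wpa2_pmk("example-passphrase", "MINE-STATION-01")  # hypothetical credentials
print(pmk.hex())
```

Because the SSID is the salt, the same passphrase yields a different key on each network. The derivation offers no protection against a weak passphrase, so a long random passphrase is the main safeguard in a PSK deployment.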

3.4. Data Processing and Transformation

3.5. Main Features of the Model

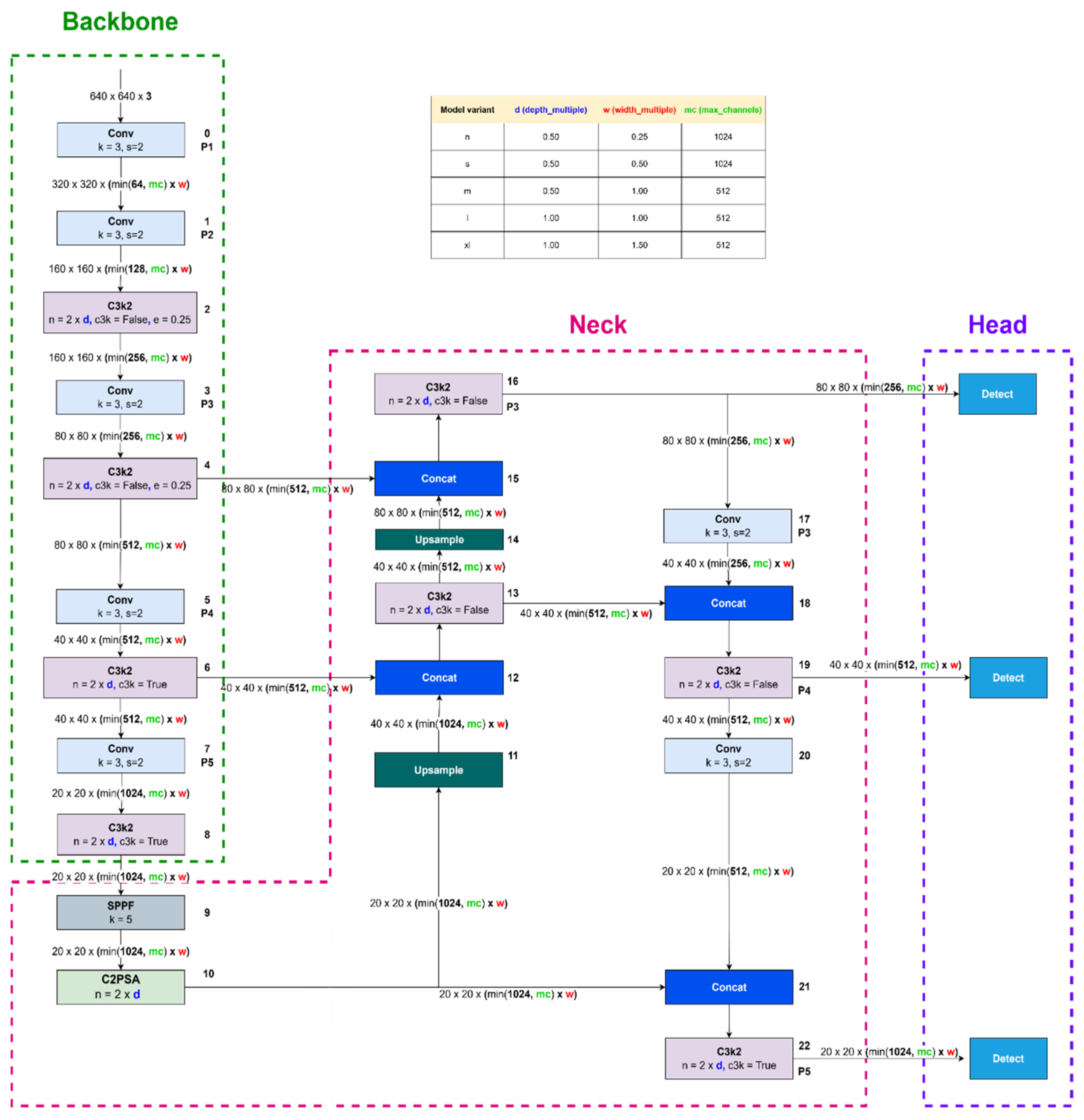

3.5.1. Architecture Details

- Convolutional layers: The model includes a deep CNN with a variable number of convolutional layers depending on the version (n, s, m, l, x), ranging from 2.6 M to 56.9 M parameters [75].

- Size and number of filters: Convolutional layers use kernels of sizes 3 × 3 and 5 × 5, optimizing feature extraction at different spatial scales.

- Activation functions: The activation function used in all convolutional layers is Leaky ReLU, which introduces nonlinearity and helps maintain stable gradient flow.

- Pooling strategy: The model employs spatial pyramid pooling (SPP) to retain spatial information while efficiently reducing dimensionality.

- Regularization techniques: The training process combines several regularization strategies, including dropout (p = 0.1) and label smoothing (ε = 0.1), which help prevent overfitting and improve generalization [76].

- Backbone: The module responsible for visual feature extraction. It consists of multiple deep convolutional blocks with CSP (Cross Stage Partial) connections that improve gradient flow and reduce computational cost. The convolutional layers use 3 × 3 and 5 × 5 filters, with variable strides and appropriate padding to preserve spatial resolution. In the base versions, the backbone contains approximately 30–40 convolutional layers. Every convolutional layer is followed by batch normalization and a Leaky ReLU activation (α = 0.1).

- Neck: The middle section of the model implements an optimized FPN (Feature Pyramid Network) and PANet mechanism, which allows effective feature combination at multiple scales. Operations such as concatenation, bilinear upsampling, and 1 × 1 convolutions are included to adjust the dimensionality of the features. In addition, SPP (Spatial Pyramid Pooling) is incorporated to retain contextual information at different resolutions.

- Head: The final prediction layer performs simultaneous inference at three scales (P3, P4, P5), adjusted for small, medium, and large objects. The model employs anchor-free detection, which improves flexibility and speed of inference. Each prediction includes box coordinates, confidence score, and classification. The total number of predictions per image varies according to the size of the feature map, with outputs generated through 1 × 1 convolutions and sigmoid activation functions.

- Regularization and optimization: During training, dropout (p = 0.1) and label smoothing (ε = 0.1) are applied. The loss combines three components: CIoU loss for bounding boxes, binary cross-entropy for classification, and an objectness loss. The AdamW optimizer was used with an initial learning rate of 0.001 and a cosine learning-rate scheduler.

- Implementation: The model was trained with PyTorch 2.0 and the Ultralytics YOLO11 framework on an NVIDIA RTX 3090 GPU with 24 GB of VRAM, using a batch size of 16 for 300 epochs. The final model was selected using early stopping and cross-validation.
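The CIoU box loss named in the list above adds two penalties to plain IoU: one for the distance between box centres and one for differences in aspect ratio. As a result, a box that does not overlap its target still receives a useful training signal. The sketch below applies the commonly used CIoU formulation to a single pair of boxes in plain Python, for readability. It is an illustration of the formula, not the vectorized PyTorch code that Ultralytics uses. Boxes are assumed to be `(x1, y1, x2, y2)` tuples.

```python
import math


def ciou_loss(pred, target, eps=1e-7):
    """CIoU loss = 1 - (IoU - rho^2 / c^2 - alpha * v) for two (x1, y1, x2, y2) boxes."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target

    # Intersection-over-union
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1
    union = pw * ph + tw * th - inter + eps
    iou = inter / union

    # rho^2: squared distance between box centres
    rho2 = ((px1 + px2 - tx1 - tx2) ** 2 + (py1 + py2 - ty1 - ty2) ** 2) / 4

    # c^2: squared diagonal of the smallest box enclosing both boxes
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps

    # v: aspect-ratio inconsistency; alpha: its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (1 - iou + v + eps)

    return 1 - (iou - rho2 / c2 - alpha * v)


print(ciou_loss((0, 0, 10, 10), (0, 0, 10, 10)))   # identical boxes: loss near 0
print(ciou_loss((0, 0, 10, 10), (20, 0, 30, 10)))  # disjoint boxes: loss above 1
```

For the disjoint pair, IoU alone is 0 no matter how far apart the boxes are. The centre-distance term (0.4 here) is what makes the loss grow with separation.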

3.5.2. Dataset and Training Details

3.5.3. Training Setup

- Epochs: 300

- Image size: 640 px

- Batch size: 16

- Patience: 100 epochs

- Optimizer: AdamW with a learning rate of 7.7 × 10⁻⁴ and momentum of 0.9
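The training setup above, together with the augmentation values in Section 3.5.4, can be expressed as arguments to the Ultralytics `train()` method. This is a hypothetical reconstruction, not the authors' script. It uses the learning rate listed here (7.7 × 10⁻⁴, whereas Section 3.5.1 quotes 0.001) and interprets "horizontal rotations (0.5 probability)" as horizontal flips (`fliplr`). The mosaic probability, the starting weights `yolo11n.pt`, and the dataset YAML path are assumptions, since the section does not specify them.

```python
# Reported hyperparameters mapped onto Ultralytics train() argument names.
TRAIN_ARGS = {
    "epochs": 300,
    "imgsz": 640,
    "batch": 16,
    "patience": 100,        # early stopping after 100 epochs without improvement
    "optimizer": "AdamW",
    "lr0": 7.7e-4,          # initial learning rate from the training setup list
    "momentum": 0.9,        # used as AdamW's beta1 by Ultralytics
    "cos_lr": True,         # cosine learning-rate schedule (Section 3.5.1)
    # Augmentation values from Section 3.5.4
    "hsv_h": 0.015,
    "hsv_s": 0.7,
    "hsv_v": 0.4,
    "translate": 0.1,
    "scale": 0.5,
    "shear": 0.1,
    "fliplr": 0.5,          # assumption: "horizontal rotations" read as horizontal flips
    "mosaic": 1.0,          # assumption: mosaic probability is not reported
}


def train(data_yaml: str, weights: str = "yolo11n.pt"):
    """Hypothetical training entry point; data_yaml and weights are placeholders."""
    # Imported inside the function so the configuration can be inspected without ultralytics.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=data_yaml, **TRAIN_ARGS)
```

Calling `train("mining.yaml")` would launch training, assuming a dataset description file with that hypothetical name exists.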

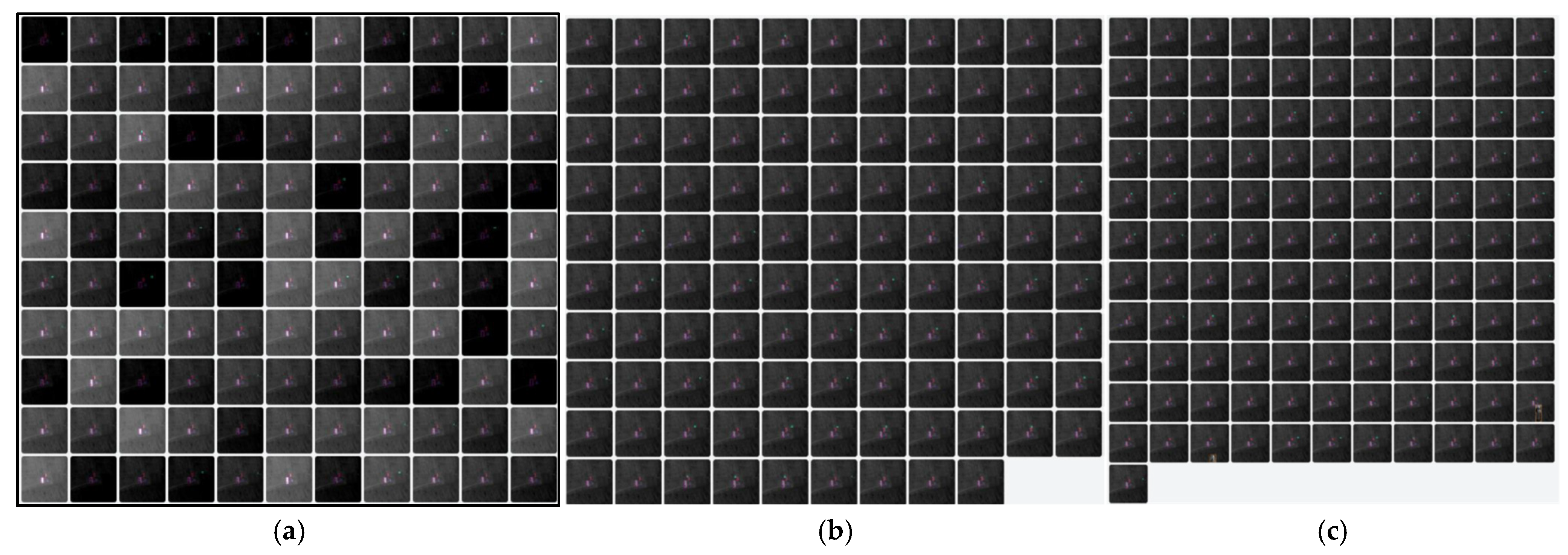

3.5.4. Data Augmentation

- HSV modifications (hue: 0.015, saturation: 0.7, value: 0.4)

- Geometric transformations such as translation (0.1), scaling (0.5), and shearing (0.1)

- Horizontal flips (probability 0.5)

- Mosaic augmentation, which combines several training images (typically four) into a single composite image to improve generalization

- Model performance and computational efficiency
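A horizontal flip must update the labels as well as the pixels. In the normalized YOLO label format `(class, x_center, y_center, width, height)`, only the x-centre changes, becoming `1 - x_center`. The sketch below assumes that format and uses a list-of-rows image purely for illustration. Ultralytics applies this transform internally, and the function names here are hypothetical.

```python
import random


def hflip_yolo(image, labels):
    """Mirror an image left-right and update normalized YOLO labels.

    image:  list of pixel rows (each row a list).
    labels: list of (cls, x_center, y_center, width, height) in [0, 1].
    """
    flipped = [row[::-1] for row in image]
    # Width, height, and y-centre are unchanged by a horizontal mirror.
    new_labels = [(c, 1.0 - xc, yc, w, h) for c, xc, yc, w, h in labels]
    return flipped, new_labels


def random_hflip(image, labels, p=0.5, rng=random):
    """Apply hflip_yolo with probability p (0.5 matches the reported setting)."""
    if rng.random() < p:
        return hflip_yolo(image, labels)
    return image, labels
```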

3.6. Identification and Tracking

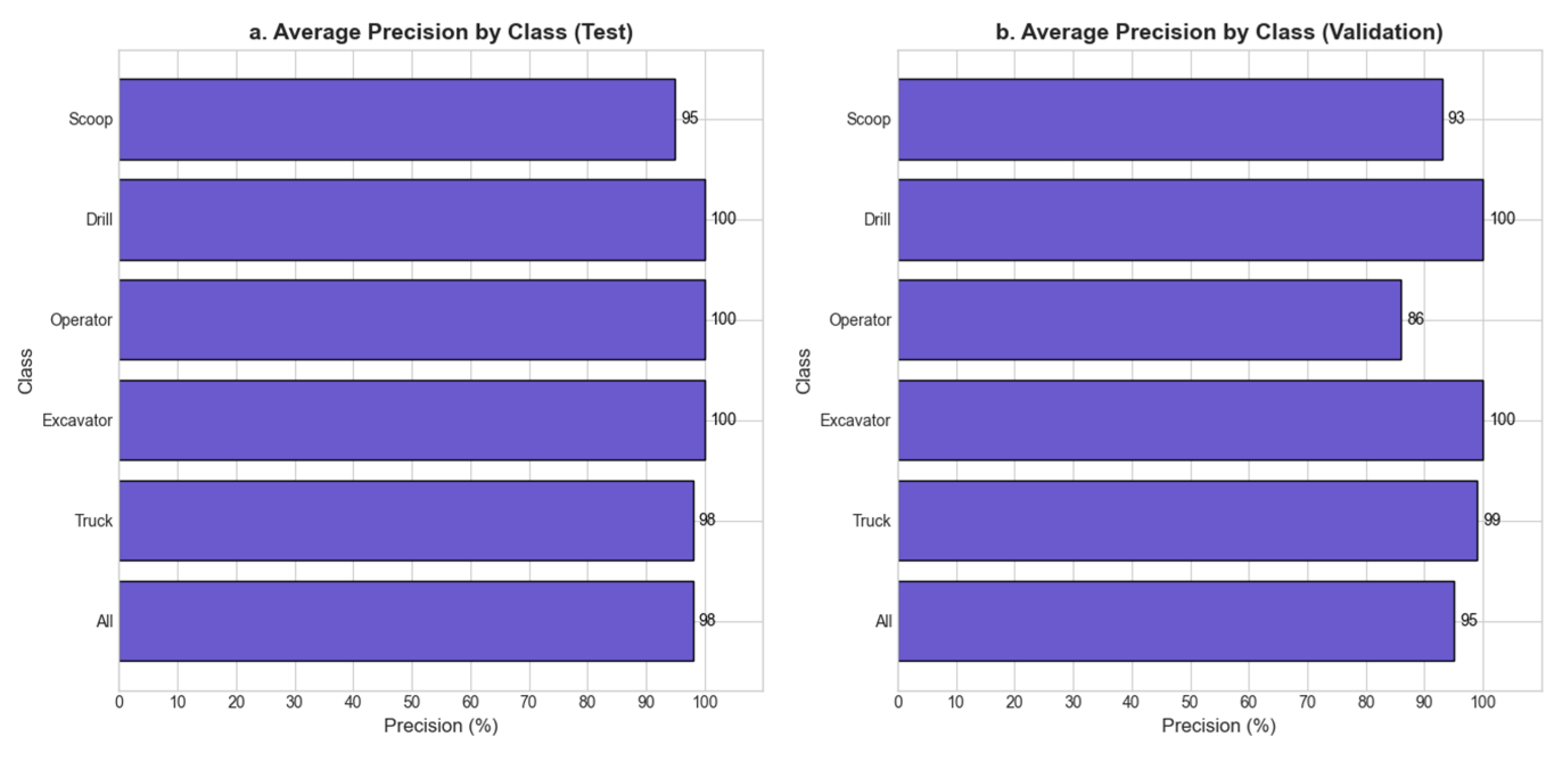

- H1₀: The trained neural network does not achieve an average accuracy higher than 95% in classifying mining equipment (pickup truck, excavator, operator, drill, scoop) in both the validation and test sets.

- H1₁: The trained neural network achieves an average accuracy higher than 95% in classifying mining equipment (pickup truck, excavator, operator, drill, scoop) in both the validation and test sets.

- H2₀: No specific class (such as excavator or drill) reaches 100% accuracy in the validation and test stages.

- H2₁: Specific classes (such as excavator or drill) reach 100% accuracy in the validation and test stages.
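Hypotheses H1 and H2 reduce to simple decision rules on per-class accuracy. H1₀ is rejected only if the mean exceeds 95% in both the validation and test sets. H2₀ is rejected if at least one class is perfect in both. The section does not name a statistical test, so this sketch encodes only the point-estimate rule. The function name and the per-class values in the usage line are hypothetical illustrations, not results from the study.

```python
def evaluate_h1_h2(val_acc, test_acc, threshold=0.95):
    """Apply the H1/H2 decision rules to dicts mapping class name -> accuracy in [0, 1]."""
    mean_val = sum(val_acc.values()) / len(val_acc)
    mean_test = sum(test_acc.values()) / len(test_acc)
    # H1: the mean must exceed the threshold in BOTH sets to reject the null.
    reject_h1_null = mean_val > threshold and mean_test > threshold
    # H2: classes that reach 100% in both validation and test.
    perfect = sorted(c for c in val_acc if val_acc[c] == 1.0 and test_acc.get(c) == 1.0)
    return {
        "mean_val": mean_val,
        "mean_test": mean_test,
        "reject_H1_null": reject_h1_null,
        "perfect_classes": perfect,
        "reject_H2_null": len(perfect) > 0,
    }


# Illustrative values only
result = evaluate_h1_h2(
    {"excavator": 1.0, "drill": 1.0, "scoop": 0.97, "pickup truck": 0.96, "operator": 0.93},
    {"excavator": 1.0, "drill": 0.98, "scoop": 0.96, "pickup truck": 0.95, "operator": 0.94},
)
print(result)
```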

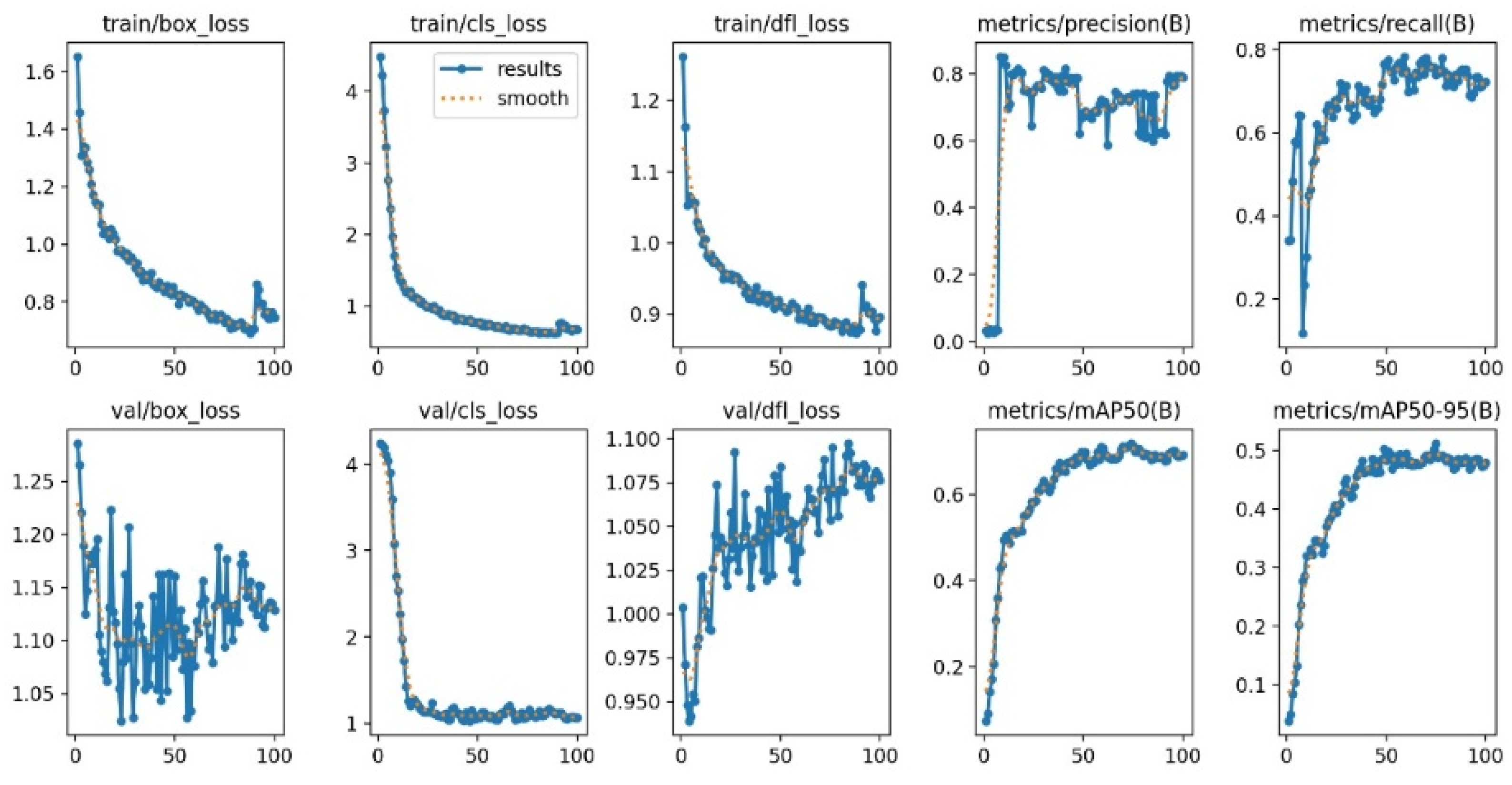

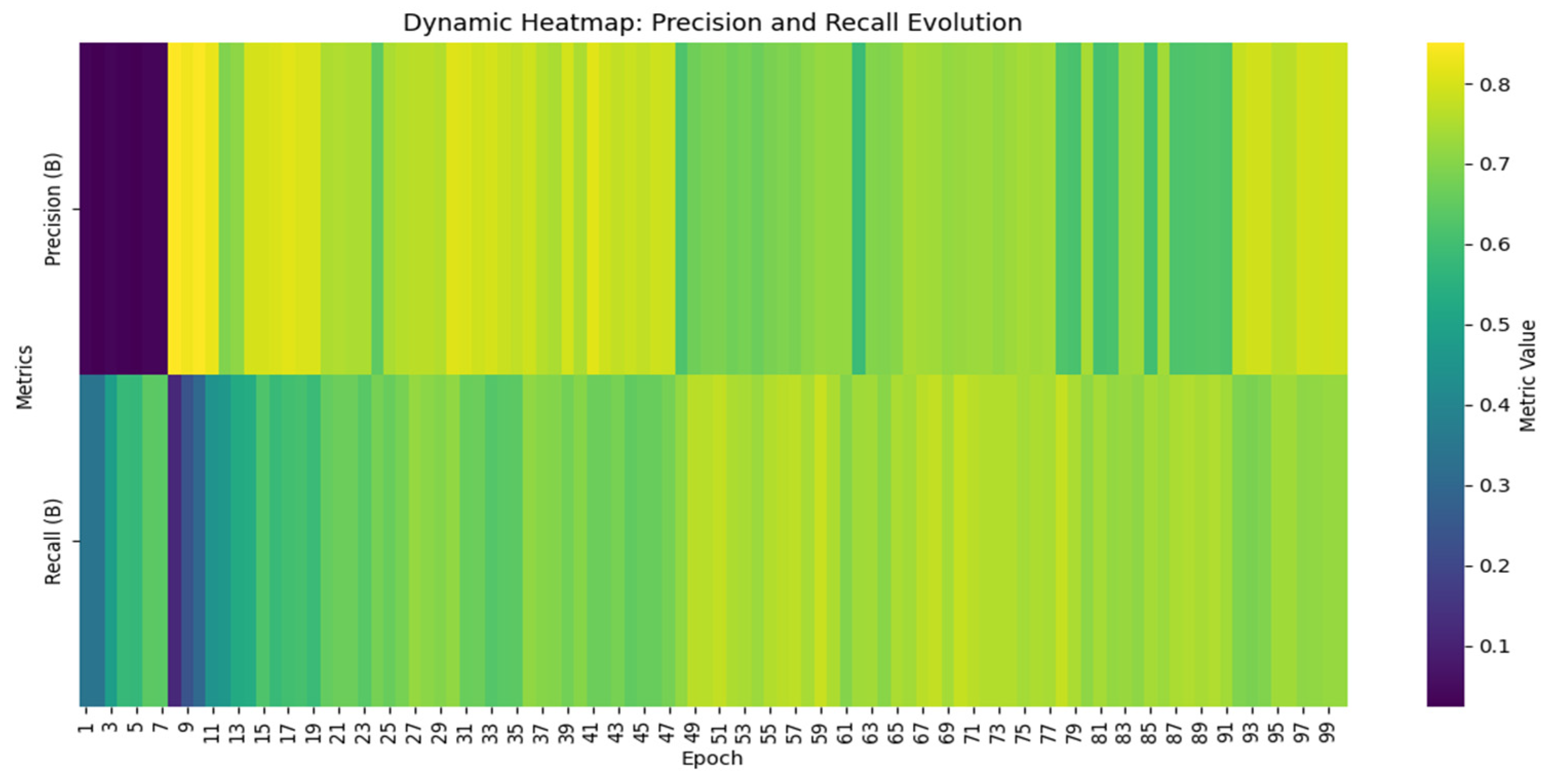

- H3₀: The evolution of precision and recall across epochs shows no significant stabilization patterns between majority and minority classes.

- H3₁: The evolution of precision and recall shows significant stabilization towards the later epochs, and is more consistent in the majority classes than in the minority classes.

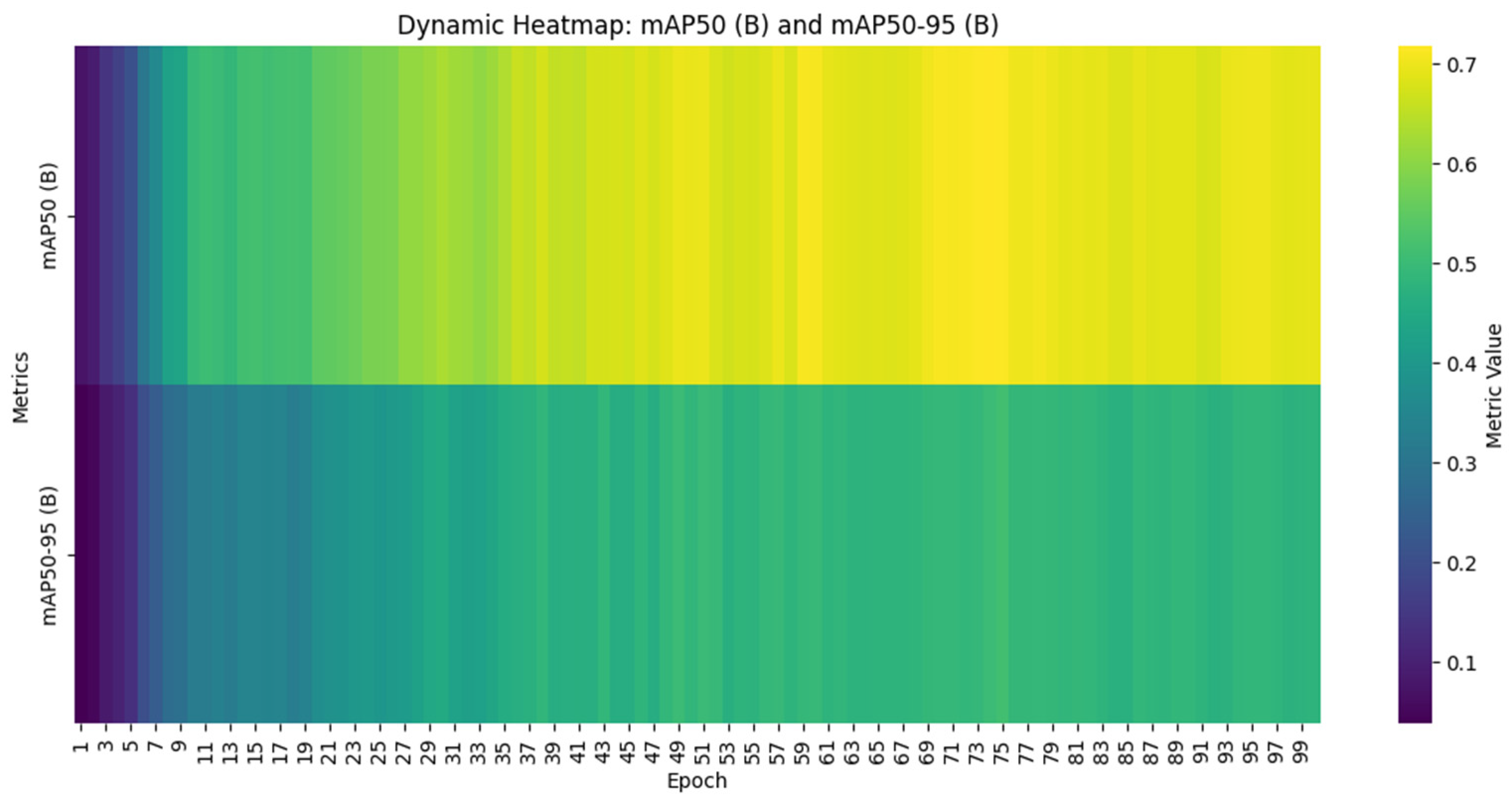

- H4₀: There is no significant trend of convergence between the metrics across training epochs.

- H4₁: There is a significant trend of convergence between the metrics across training epochs.
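One way to test H4 is to track the gap |precision − recall| per epoch and check for a significant decreasing trend. The sketch below uses the Mann–Kendall trend test (normal approximation, with no tie correction) for that check. The choice of test, the gap metric, and the function names are assumptions, since the section does not say how convergence was assessed. The series in the usage lines are synthetic.

```python
import math


def mann_kendall(series):
    """Mann-Kendall trend test. Returns (S, z, two-sided p); S < 0 means a decreasing trend."""
    n = len(series)
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)  # sign of each later-minus-earlier difference
    var_s = n * (n - 1) * (2 * n + 5) / 18  # variance of S without ties
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)      # continuity correction
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    p = math.erfc(abs(z) / math.sqrt(2))    # two-sided p-value under N(0, 1)
    return s, z, p


def precision_recall_convergence(precision, recall, alpha=0.05):
    """Hypothetical H4 check: does the per-epoch |precision - recall| gap shrink significantly?"""
    gaps = [abs(p - r) for p, r in zip(precision, recall)]
    s, z, p = mann_kendall(gaps)
    return {"S": s, "z": z, "p": p, "converging": s < 0 and p < alpha}


# Synthetic per-epoch metrics, for illustration only
res = precision_recall_convergence(
    [0.50, 0.60, 0.70, 0.75, 0.80, 0.85, 0.88, 0.90, 0.91, 0.92],
    [0.20, 0.35, 0.48, 0.57, 0.65, 0.73, 0.78, 0.82, 0.84, 0.87],
)
print(res)
```

Rejecting H4₀ then corresponds to `converging` being true at the chosen significance level.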

4. Results

4.1. Stage 1: Selection of Data Collection Equipment

4.2. Stage 2: Definition of the Positioning Scheme

4.3. Stage 3: Incorporation of the Communication System

- RF system: Allows the transmission of simple data and the activation of discrete signals at distances of up to 8 km, with response times on the order of milliseconds. This enables fast actuation to prevent accidents or trigger emergency stops.

- High-bandwidth transmission (5 GHz): Enables real-time video transmission from the high-risk sector being monitored.

4.4. Stage 4: Data Processing and Transformation

4.5. Stage 5: Identification and Tracking

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Marimuthu, R.; Sankaranarayanan, B.; Ali, S.M.; de Sousa Jabbour, A.B.L.; Karuppiah, K. Assessment of key socio-economic and environmental challenges in the mining industry: Implications for resource policies in emerging economies. Sustain. Prod. Consum. 2021, 27, 814–830.

- Ranjith, P.G.; Zhao, J.; Ju, M.; De Silva, R.V.; Rathnaweera, T.D.; Bandara, A.K. Opportunities and challenges in deep mining: A brief review. Engineering 2017, 3, 546–551.

- Jämsä-Jounela, S.L. Future automation systems in context of process systems and minerals engineering. IFAC-PapersOnLine 2019, 52, 403–408.

- Nguyen, N.M.; Pham, D.T. Tendencies of mining technology development in relation to deep mines. Gorn. Nauk. Tekhnol. Min. Sci. Technol. 2019, 4, 16–22.

- Ediriweera, A.; Wiewiora, A. Barriers and enablers of technology adoption in the mining industry. Resour. Policy 2021, 73, 102188.

- Lumadi, V.W.; Nyasha, S. Technology and growth in the South African mining industry: An assessment of critical success factors and challenges. J. S. Afr. Inst. Min. Metall. 2024, 124, 163–171.

- Hamraoui, L.; Bergani, A.; Ettoumi, M.; Aboulaich, A.; Taha, Y.; Khalil, A.; Neculita, C.M.; Benzaazoua, M. Towards a Circular Economy in the Mining Industry: Possible Solutions for Water Recovery through Advanced Mineral Tailings Dewatering. Minerals 2024, 14, 319.

- Qi, C.C. Big data management in the mining industry. Int. J. Miner. Metall. Mater. 2020, 27, 131–139.

- Calzada Olvera, B. Innovation in mining: What are the challenges and opportunities along the value chain for Latin American suppliers? Miner. Econ. 2022, 35, 35–51.

- Singh, G.; Singh, S.K.; Chaurasia, R.C.; Jain, A.K. The Present and Future Prospect of Artificial Intelligence in the Mining Industry. Mach. Learn. 2024, 53, 1.

- Mutovina, N.; Nurtay, M.; Kalinin, A.; Tomilov, A.; Tomilova, N. Application of Artificial Intelligence and Machine Learning in Expert Systems for the Mining Industry: Literature Review of Modern Methods and Technologies. Preprints 2024.

- Minbaleev, A.V.; Berestnev, M.; Evsikov, A.K.S. Regulating the use of artificial intelligence in the mining industry-Web of Science Core Collection. Proc. Tula States Univ. Sci. Earth 2022, 2, 509–525.

- Ghosh, R. Applications, promises and challenges of artificial intelligence in mining industry: A review. TechRxiv 2023.

- Sardjono, W.; Perdana, W.G. Adoption of Artificial Intelligence in Response to Industry 4.0 in the Mining Industry. In Proceedings of the Conference on Innovative Technologies in Intelligent Systems and Industrial Applications, Sydney, Australia, 16–18 November 2022; Springer Nature: Cham, Switzerland, 2022; pp. 699–707.

- Soofastaei, A. The application of artificial intelligence to reduce greenhouse gas emissions in the mining industry. Green Technol. Improv. Environ. Earth 2018, 25, 234–245.

- Matloob, S.; Li, Y.; Khan, K.Z. Safety measurements and risk assessment of coal mining industry using artificial intelligence and machine learning. Open J. Bus. Manag. 2021, 9, 1198–1209.

- Muchowe, R.M. Artificial Intelligence and Training: Opportunities and Challenges in The Zimbabwean Mining Industry. Met Manag. Rev. 2024, 11, 20–31.

- Pimpalkar, A.S.; Gote, A.C. Utilization of artificial intelligence and machine learning in the coal mining industry. AIP Conf. Proc. 2024, 3188, 040002.

- Bendaouia, A.; Qassimi, S.; Boussetta, A.; Benzakour, I.; Amar, O.; Hasidi, O. Artificial intelligence for enhanced flotation monitoring in the mining industry: A ConvLSTM-based approach. Comput. Chem. Eng. 2024, 180, 108476.

- Kaushal, H.; Bhatnagar, A. Application of Artificial Intelligence in Drones in the Mining Industry: A Case Study. Res. Highlights Sci. Technol. 2023, 9, 98–107.

- Gordan, M.; Sabbagh-Yazdi, S.R.; Ghaedi, K.; Ismail, Z. A Damage Detection Approach in the Era of Industry 4.0 Using the Relationship between Circular Economy, Data Mining, and Artificial Intelligence. Adv. Civ. Eng. 2023, 2023, 3067824.

- Saputra, N.; Putri, A.M.; Aryanto, A.D.; Maharani, D.; Aritonang, I.J.; Ladhuny, M.; Garcia, A. Utilizing Artificial Intelligence to Analyze Technological Trends in Indonesia’s Mining and Quarrying Industry. In Proceedings of the 2024 3rd International Conference on Creative Communication and Innovative Technology (ICCIT), Tangerang, Indonesia, 7–8 August 2024; pp. 1–6.

- Hasan, A.N. Potential Use of Artificial Intelligence in the Mining Industry: South African Case Studies; University of Johannesburg: Johannesburg, South Africa, 2013.

- Cho, I.; Ju, Y. Text mining method to identify artificial intelligence technologies for the semiconductor industry in Korea. World Pat. Inf. 2023, 74, 102212.

- Kaur, N.; Mahajan, N.; Singh, V.; Gupta, A. Artificial Intelligence Revolutionizing The Restaurant Industry-Analyzing Customer Experience Through Data Mining and Thematic Content Analysis. In Proceedings of the 2023 3rd International Conference on Innovative Practices in Technology and Management (ICIPTM), Uttar Pradesh, India, 22–24 February 2023; pp. 1–5.

- Bolanos, F.; Salatino, A.; Osborne, F.; Motta, E. Artificial intelligence for literature reviews: Opportunities and challenges. Artif. Intell. Rev. 2024, 57, 259.

- Chen, L.; Chen, P.; Lin, Z. Artificial intelligence in education: A review. IEEE Access 2020, 8, 75264–75278.

- Acypreste, R.D.; Paraná, E. Artificial Intelligence and employment: A systematic review. Braz. J. Political Econ. 2022, 42, 1014–1032.

- Atkinson, C.F. Cheap, quick, and rigorous: Artificial intelligence and the systematic literature review. Soc. Sci. Comput. Rev. 2024, 42, 376–393.

- Herrera-Vidal, G.; Coronado-Hernández, J.R.; Paredes, B.P.M.; Ramos, B.O.S.; Sierra, D.M. Systematic configurator for complexity management in manufacturing systems. Entropy 2024, 26, 747.

- Herrera-Vidal, G.; Coronado-Hernández, J.R.; Derpich-Contreras, I.; Paredes, B.P.M.; Gatica, G. Measuring Complexity in Manufacturing: Integrating Entropic Methods, Programming and Simulation. Entropy 2025, 27, 50.

- Damsgaard, H.J.; Ometov, A.; Nurmi, J. Approximation opportunities in edge computing hardware: A systematic literature review. ACM Comput. Surv. 2023, 55, 1–49.

- Abimannan, S.; El-Alfy, E.S.M.; Hussain, S.; Chang, Y.S.; Shukla, S.; Satheesh, D.; Breslin, J.G. Towards federated learning and multi-access edge computing for air quality monitoring: Literature review and assessment. Sustainability 2023, 15, 13951.

- Rahman, A.; Street, J.; Wooten, J.; Marufuzzaman, M.; Gude, V.G.; Buchanan, R.; Wang, H. MoistNet: Machine vision-based deep learning models for wood chip moisture content measurement. Expert Syst. Appl. 2025, 259, 125363.

- Ji, A.; Fan, H.; Xue, X. Vision-Based Body Pose Estimation of Excavator Using a Transformer-Based Deep-Learning Model. J. Comput. Civ. Eng. 2025, 39, 04024064.

- Zhou, H.; Cong, H.; Wang, Y.; Dou, Z. A computer-vision-based deep learning model of smoke diffusion. Process Saf. Environ. Prot. 2024, 187, 721–735.

- Paniego, S.; Shinohara, E.; Cañas, J. Autonomous driving in traffic with end-to-end vision-based deep learning. Neurocomputing 2024, 594, 127874.

- Liang, T.; Liu, T.; Wang, J.; Zhang, J.; Zheng, P. Causal deep learning for explainable vision-based quality inspection under visual interference. J. Intell. Manuf. 2025, 36, 1363–1384.

- Cheng, M.Y.; Cao, M.T.; Nuralim, C.K. Computer vision-based deep learning for supervising excavator operations and measuring real-time earthwork productivity. J. Supercomput. 2023, 79, 4468–4492.

- Prunella, M.; Scardigno, R.M.; Buongiorno, D.; Brunetti, A.; Longo, N.; Carli, R.; Dotoli, M.; Bevilacqua, V. Deep learning for automatic vision-based recognition of industrial surface defects: A survey. IEEE Access 2023, 11, 43370–43423.

- Chen, Z.; Santhakumar, P.; Granland, K.; Troeung, C.; Chen, C.; Tang, Y. Predicting Future Warping from the First Layer: A vision-based deep learning method for 3D Printing Monitoring. In Proceedings of the 2023 IEEE 19th International Conference on Automation Science and Engineering (CASE), Auckland, New Zealand, 26–30 August 2023; pp. 1–6.

- Xi, J.; Gao, L.; Zheng, J.; Wang, D.; Tu, C.; Jiang, J.; Miao, Y.; Zhong, J. Automatic spacing inspection of rebar spacers on reinforcement skeletons using vision-based deep learning and computational geometry. J. Build. Eng. 2023, 79, 107775.

- Chen, Y.; Yuan, X.; Wang, J.; Wu, R.; Li, X.; Hou, Q.; Cheng, M.M. YOLO-MS: Rethinking multi-scale representation learning for real-time object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2025; early access.

- Wang, Z.; Li, C.; Xu, H.; Zhu, X. Mamba YOLO: SSMs-based YOLO for object detection. arXiv 2024, arXiv:2406.05835.

- Zhou, Y. A YOLO-NL object detector for real-time detection. Expert Syst. Appl. 2024, 238, 122256.

- Oreski, G. YOLO* C—Adding context improves YOLO performance. Neurocomputing 2023, 555, 126655.

- Yu, Z.; Huang, H.; Chen, W.; Su, Y.; Liu, Y.; Wang, X. Yolo-facev2: A scale and occlusion aware face detector. Pattern Recognit. 2024, 155, 110714.

- Kang, M.; Ting, C.M.; Ting, F.F.; Phan, R.C.W. ASF-YOLO: A novel YOLO model with attentional scale sequence fusion for cell instance segmentation. Image Vis. Comput. 2024, 147, 105057.

- Zhao, J.; Du, C.; Li, Y.; Mudhsh, M.; Guo, D.; Fan, Y.; Wu, X.; Wang, X.; Almodfer, R. YOLO-Granada: A lightweight attentioned Yolo for pomegranates fruit detection. Sci. Rep. 2024, 14, 16848.

- Wang, Z.; Hua, Z.; Wen, Y.; Zhang, S.; Xu, X.; Song, H. E-YOLO: Recognition of estrus cow based on improved YOLOv8n model. Expert Syst. Appl. 2024, 238, 122212.

- Zhao, C.; Shu, X.; Yan, X.; Zuo, X.; Zhu, F. RDD-YOLO: A modified YOLO for detection of steel surface defects. Measurement 2023, 214, 112776.

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Wang, Y.; Han, K. Gold-YOLO: Efficient object detector via gather-and-distribute mechanism. Adv. Neural Inf. Process. Syst. 2023, 36, 51094–51112.

- Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

- Hussain, M. YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines 2023, 11, 677.

- Kanagamalliga, S.; Jayashree, R.; Guna, R. Fast R-CNN approaches for transforming dental caries detection: An in-depth investigation. In Proceedings of the 2024 International Conference on Wireless Communications Signal Processing and Networking (WiSPNET), Hefei, China, 24–26 October 2024; pp. 1–5.

- Dwivedi, P.; Khan, A.A.; Gawade, A.; Deolekar, S. A deep learning based approach for automated skin disease detection using Fast R-CNN. In Proceedings of the 2021 Sixth International Conference on Image Information Processing (ICIIP), Shimla, India, 26–28 November 2021; Volume 6, pp. 116–120.

- Huang, H.N.; Zhang, T.; Yang, C.T.; Sheen, Y.J.; Chen, H.M.; Chen, C.J.; Tseng, M.W. Image segmentation using transfer learning and Fast R-CNN for diabetic foot wound treatments. Front. Public Health 2022, 10, 969846.

- Lee, Y.S.; Park, W.H. Diagnosis of depressive disorder model on facial expression based on fast R-CNN. Diagnostics 2022, 12, 317.

- Chen, X.; Lian, C.; Deng, H.H.; Kuang, T.; Lin, H.-Y.; Xiao, D.; Gateno, J.; Shen, D.; Xia, J.J.; Yap, P.-T. Fast and accurate craniomaxillofacial landmark detection via 3D faster R-CNN. IEEE Trans. Med. Imaging 2021, 40, 3867–3878.

- Zhang, J.; Wang, X.; Ni, G.; Liu, J.; Hao, R.; Liu, L.; Liu, Y.; Du, X.; Xu, F. Fast and accurate automated recognition of the dominant cells from fecal images based on Faster R-CNN. Sci. Rep. 2021, 11, 10361.

- Tian, X.; Bi, C.; Han, J.; Yu, C. EasyRP-R-CNN: A fast cyclone detection model. Vis. Comput. 2024, 40, 4829–4841.

- Fu, R.; He, J.; Liu, G.; Li, W.; Mao, J.; He, M.; Lin, Y. Fast seismic landslide detection based on improved mask R-CNN. Remote Sens. 2022, 14, 3928.

- Chen, Z.Y.; Liao, I.Y.; Liao, I.Y. Improved fast r-cnn with fusion of optical and 3d data for robust palm tree detection in high resolution uav images. Int. J. Mach. Learn. Comput. 2020, 10, 122–127.

- Zhang, H.; Tan, J.; Zhao, C.; Liang, Z.; Liu, L.; Zhong, H.; Fan, S. A fast detection and grasping method for mobile manipulator based on improved faster R-CNN. Ind. Robot. Int. J. Robot. Res. Appl. 2020, 47, 167–175.

- Sasirekha, R.; Surya, V.; Nandhini, P.; Preethy Jemima, P.; Bhanushree, T.; Hanitha, G. Ensemble of Fast R-CNN with Bi-LSTM for Object Detection. In Proceedings of the 2025 6th International Conference on Mobile Computing and Sustainable Informatics (ICMCSI), Goathgaun, Nepal, 7–8 January 2025; pp. 1200–1206.

- Chaudhuri, A. Hierarchical modified Fast R-CNN for object detection. Informatica 2021, 45, 67–82.

- Eversberg, L.; Lambrecht, J. Combining synthetic images and deep active learning: Data-efficient training of an industrial object detection model. J. Imaging 2024, 10, 16.

- Ouarab, S.; Boutteau, R.; Romeo, K.; Lecomte, C.; Laignel, A.; Ragot, N.; Duval, F. Industrial Object Detection: Leveraging Synthetic Data for Training Deep Learning Models. In Proceedings of the International Conference on Industrial Engineering and Applications, Nice, France, 10–12 January 2024; Springer Nature: Cham, Switzerland, 2024; pp. 200–212.

- Ouarab, S. Enhancing Industrial Object Detection with Synthetic Data for Deep Learning Model Training. Ph.D. Thesis, Higher School of Computer Science, Sidi Bel Abbès, Algeria, 2023.

- Kapusi, T.P.; Erdei, T.I.; Husi, G.; Hajdu, A. Application of deep learning in the deployment of an industrial scara machine for real-time object detection. Robotics 2022, 11, 69.

- Puttemans, S.; Callemein, T.; Goedemé, T. Building robust industrial applicable object detection models using transfer learning and single pass deep learning architectures. arXiv 2020, arXiv:2007.04666.

- Rhee, J.; Park, J.; Lee, J.; Ahn, H.; Pham, L.H.; Jeon, J. A Safety System for Industrial Fields using YOLO Object Detection with Deep Learning. In Proceedings of the 2023 International Technical Conference on Circuits/Systems, Computers, and Communications (ITC-CSCC), Grand Hyatt Jeju, Republic of Korea, 25–28 June 2023; pp. 1–6.

- IEEE 802.11ad-2012; IEEE Standard for Information Technology—Telecommunications and Information Exchange Between Systems—Local and Metropolitan Area Networks—Specific Requirements—Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications Amendment 3: Enhancements for Very High Throughput in the 60 GHz Band. IEEE Standards Association: Piscataway, NJ, USA, 2012.

- Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO11; Version 11.0.0; Ultralytics: Frederick, MD, USA, 2024.

- Hidayatullah, P.; Syakrani, N.; Sholahuddin, M.R.; Gelar, T.; Tubagus, R. YOLOv8 to YOLO11: A Comprehensive Architecture In-depth Comparative Review. arXiv 2025, arXiv:2501.13400.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO (Version 8.0.0) [Software]; Ultralytics: Frederick, MD, USA, 2023.

- Tzutalin, D. Tzutalin/Labelimg; Github: Online, 2015.

- Cao, X.; Su, Y.; Geng, X.; Wang, Y. YOLO-SF: YOLO for fire segmentation detection. IEEE Access 2023, 11, 111079–111092.

- Zhou, J.; Zhang, B.; Yuan, X.; Lian, C.; Ji, L.; Zhang, Q.; Yue, J. YOLO-CIR: The network based on YOLO and ConvNeXt for infrared object detection. Infrared Phys. Technol. 2023, 131, 104703.

- Su, P.; Han, H.; Liu, M.; Yang, T.; Liu, S. MOD-YOLO: Rethinking the YOLO architecture at the level of feature information and applying it to crack detection. Expert Syst. Appl. 2024, 237, 121346.

| Class | Train | Validation |
|---|---|---|
| Person | 65 | 15 |
| Truck-haul | 427 | 164 |
| Excavator | 38 | 12 |
| Bulldozer | 178 | 80 |
| Front-loader | 173 | 80 |
| Pickup-truck | 165 | 57 |
| Motor-grader | 22 | 3 |
| Rock breaker | 7 | 4 |
| Shovel | 164 | 79 |
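The training counts in the table above show strong class imbalance: the largest class (Truck-haul, 427) is about 61 times the smallest (Rock breaker, 7). This is the majority/minority contrast that H3 refers to. The sketch below quantifies the imbalance and computes inverse-frequency class weights, one common way to compensate for it. The weighting scheme is an illustration, not something the authors report using.

```python
# Training counts per class, copied from the table above.
TRAIN_COUNTS = {
    "Person": 65, "Truck-haul": 427, "Excavator": 38, "Bulldozer": 178,
    "Front-loader": 173, "Pickup-truck": 165, "Motor-grader": 22,
    "Rock breaker": 7, "Shovel": 164,
}


def inverse_frequency_weights(counts):
    """w_c = N / (K * n_c): a class with exactly the average count gets weight 1."""
    total = sum(counts.values())
    k = len(counts)
    return {c: total / (k * n) for c, n in counts.items()}


weights = inverse_frequency_weights(TRAIN_COUNTS)
imbalance_ratio = max(TRAIN_COUNTS.values()) / min(TRAIN_COUNTS.values())
for cls, w in sorted(weights.items(), key=lambda kv: -kv[1]):
    print(f"{cls:<13} n={TRAIN_COUNTS[cls]:>3}  weight={w:.2f}")
print(f"imbalance ratio: {imbalance_ratio:.1f}")
```

With these weights, each class contributes the same total weight, N / K, across its samples. Rare classes such as Rock breaker and Motor-grader are therefore not swamped by Truck-haul during training.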

| Model | Size (px) | mAP val 50–95 | Parameters (M) | FLOPs (B) |
|---|---|---|---|---|
| YOLO11n | 640 | 39.5 | 2.6 | 6.5 |
| YOLO11s | 640 | 47.0 | 9.4 | 21.5 |
| YOLO11m | 640 | 51.5 | 20.1 | 68.0 |
| YOLO11l | 640 | 53.4 | 25.3 | 86.9 |
| YOLO11x | 640 | 54.7 | 56.9 | 194.9 |
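For an edge-deployed station, the table above can guide the choice of variant: pick the most accurate model whose compute cost fits the onboard hardware. The sketch below encodes that rule using the table's values, which match the COCO benchmark figures Ultralytics publishes for YOLO11 rather than results on the mining dataset. The FLOPs budget is a hypothetical input, since this section does not specify the station's inference hardware. Real throughput also depends on memory bandwidth and runtime, not only FLOPs.

```python
# (name, mAP val 50-95, parameters in millions, FLOPs in billions), from the table above
YOLO11_VARIANTS = [
    ("YOLO11n", 39.5, 2.6, 6.5),
    ("YOLO11s", 47.0, 9.4, 21.5),
    ("YOLO11m", 51.5, 20.1, 68.0),
    ("YOLO11l", 53.4, 25.3, 86.9),
    ("YOLO11x", 54.7, 56.9, 194.9),
]


def best_variant_under_budget(variants, max_gflops):
    """Return the name of the highest-mAP variant whose FLOPs fit the budget, or None."""
    feasible = [v for v in variants if v[3] <= max_gflops]
    if not feasible:
        return None
    return max(feasible, key=lambda v: v[1])[0]


print(best_variant_under_budget(YOLO11_VARIANTS, max_gflops=70))  # hypothetical budget
```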

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cerna, G.P.; Herrera-Vidal, G.; Coronado-Hernández, J.R. Autonomous Mobile Station for Artificial Intelligence Monitoring of Mining Equipment and Risks. Appl. Sci. 2025, 15, 4197. https://doi.org/10.3390/app15084197

Cerna GP, Herrera-Vidal G, Coronado-Hernández JR. Autonomous Mobile Station for Artificial Intelligence Monitoring of Mining Equipment and Risks. Applied Sciences. 2025; 15(8):4197. https://doi.org/10.3390/app15084197

Chicago/Turabian Style: Cerna, Gabriel País, Germán Herrera-Vidal, and Jairo R. Coronado-Hernández. 2025. "Autonomous Mobile Station for Artificial Intelligence Monitoring of Mining Equipment and Risks" Applied Sciences 15, no. 8: 4197. https://doi.org/10.3390/app15084197

APA Style: Cerna, G. P., Herrera-Vidal, G., & Coronado-Hernández, J. R. (2025). Autonomous Mobile Station for Artificial Intelligence Monitoring of Mining Equipment and Risks. Applied Sciences, 15(8), 4197. https://doi.org/10.3390/app15084197