Human Reliability Analysis in Acetylene Filling Operations: Risk Assessment and Mitigation Strategies

Abstract

1. Introduction

- Offering guidance on potential and likely decision-making pathways for operators, based on mental process models from cognitive psychology;

- Expanding the understanding of errors beyond the typical binary classification (omission-commission), acknowledging the significance of “cognitive errors”;

- Considering the dynamic nature of human–machine interaction, which can serve as a foundation for developing simulators to assess operator performance.

- Skill-based errors;

- Knowledge-based errors;

- Rule-based errors.

- Task Analysis (TA);

- Human Error Identification (HEI);

- Quantification of human factor reliability, or Human Error Probability (HEP).

- Problem definition.

- Task analysis.

- Identification of human errors.

- Representation.

- Quantification of human error.

- Impact assessment.

- Error reduction analysis.

- Documentation and quality assurance [24].

2. Materials and Methods

- Activity errors A;

- Control errors C;

- Information retrieval errors R;

- Tracking/checking errors T;

- Selection errors S;

- Planning errors P.

- Low (L): The occurrence of the error is practically unforeseeable at the current safety level.

- Medium (M): The error has been detected in the past, but the current safety level limits its recurrence.

- High (H): The error has occurred multiple times (by different team members) or repeatedly by the same employee, and its occurrence must be expected at the current safety level of the system.

3. Results

3.1. Human Reliability Assessment for Activities with the Potential for Human Failure

3.2. TESEO Method (Technique to Estimate Operator’s Errors)

| Factor | Condition | Value |
|---|---|---|
| K1: Activity typological factor | Simple, routine | 0.001 |
| | Requiring attention, routine | 0.01 |
| | Not routine | 0.1 |
| K2: Time available, routine activities | >20 s | 0.5 |
| | >10 s | 1 |
| | >2 s | 10 |
| K2: Time available, non-routine activities | >60 s | 0.1 |
| | >45 s | 0.3 |
| | >30 s | 1 |
| | >3 s | 10 |
| K3: Operator qualities | Carefully selected, expert, well trained | 0.5 |
| | Average knowledge and training | 1 |
| | Little knowledge, poorly trained | 3 |
| K4: State of anxiety | Situation of grave emergency | 3 |
| | Situation of potential emergency | 2 |
| | Normal situation | 1 |
| K5: Environmental ergonomic factor | Excellent microclimate, excellent interface with plant | 0.7 |
| | Good microclimate, good interface with plant | 1 |
| | Discrete microclimate, discrete interface with plant | 3 |
| | Discrete microclimate, poor interface with plant | 7 |
| | Worse microclimate, poor interface with plant | 11 |
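In TESEO the five factors are multiplied, P(EO) = K1 × K2 × K3 × K4 × K5, and the product is capped at 1 because it is a probability; the K1 to K5 columns of the step table at the end of the article are combined in the same way. The Python sketch below encodes the factor table above and reproduces that calculation. The shortened dictionary keys and the names `time_factor` and `teseo_probability` are hypothetical, chosen for illustration only, and raising an error for times at or below the shortest tabulated threshold is an assumption, since the table gives no value there.

```python
from math import prod

# TESEO factor values transcribed from the table above (keys are shortened row labels).
K1 = {"simple, routine": 0.001, "requiring attention, routine": 0.01, "not routine": 0.1}
# K2: time available as {threshold in seconds: factor}; a row applies when time > threshold.
K2_ROUTINE = {20: 0.5, 10: 1.0, 2: 10.0}
K2_NON_ROUTINE = {60: 0.1, 45: 0.3, 30: 1.0, 3: 10.0}
K3 = {"expert": 0.5, "average": 1.0, "poorly trained": 3.0}
K4 = {"grave emergency": 3.0, "potential emergency": 2.0, "normal": 1.0}
# K5 keyed by (microclimate, interface with plant).
K5 = {
    ("excellent", "excellent"): 0.7,
    ("good", "good"): 1.0,
    ("discrete", "discrete"): 3.0,
    ("discrete", "poor"): 7.0,
    ("worse", "poor"): 11.0,
}


def time_factor(seconds: float, routine: bool) -> float:
    """Return K2 for the largest threshold that the available time exceeds."""
    table = K2_ROUTINE if routine else K2_NON_ROUTINE
    for threshold in sorted(table, reverse=True):
        if seconds > threshold:
            return table[threshold]
    # Assumption: the table gives no value at or below the shortest threshold.
    raise ValueError(f"no K2 value tabulated for {seconds} s")


def teseo_probability(k1: float, k2: float, k3: float, k4: float, k5: float) -> float:
    """P(EO) = K1*K2*K3*K4*K5, capped at 1 because the result is a probability."""
    return min(1.0, prod((k1, k2, k3, k4, k5)))


if __name__ == "__main__":
    # Factor combination used for step 1 ("Empty bottles check") in the step table:
    # simple routine task, more than 20 s available (25 s is an arbitrary value),
    # average operator, normal situation, discrete microclimate with a poor interface.
    p = teseo_probability(
        K1["simple, routine"],
        time_factor(25, routine=True),
        K3["average"],
        K4["normal"],
        K5[("discrete", "poor")],
    )
    print(round(p, 6))  # 0.0035
```

Keeping the time thresholds as data rather than as a chain of `if` statements makes the routine and non-routine rows of K2 share one lookup function.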

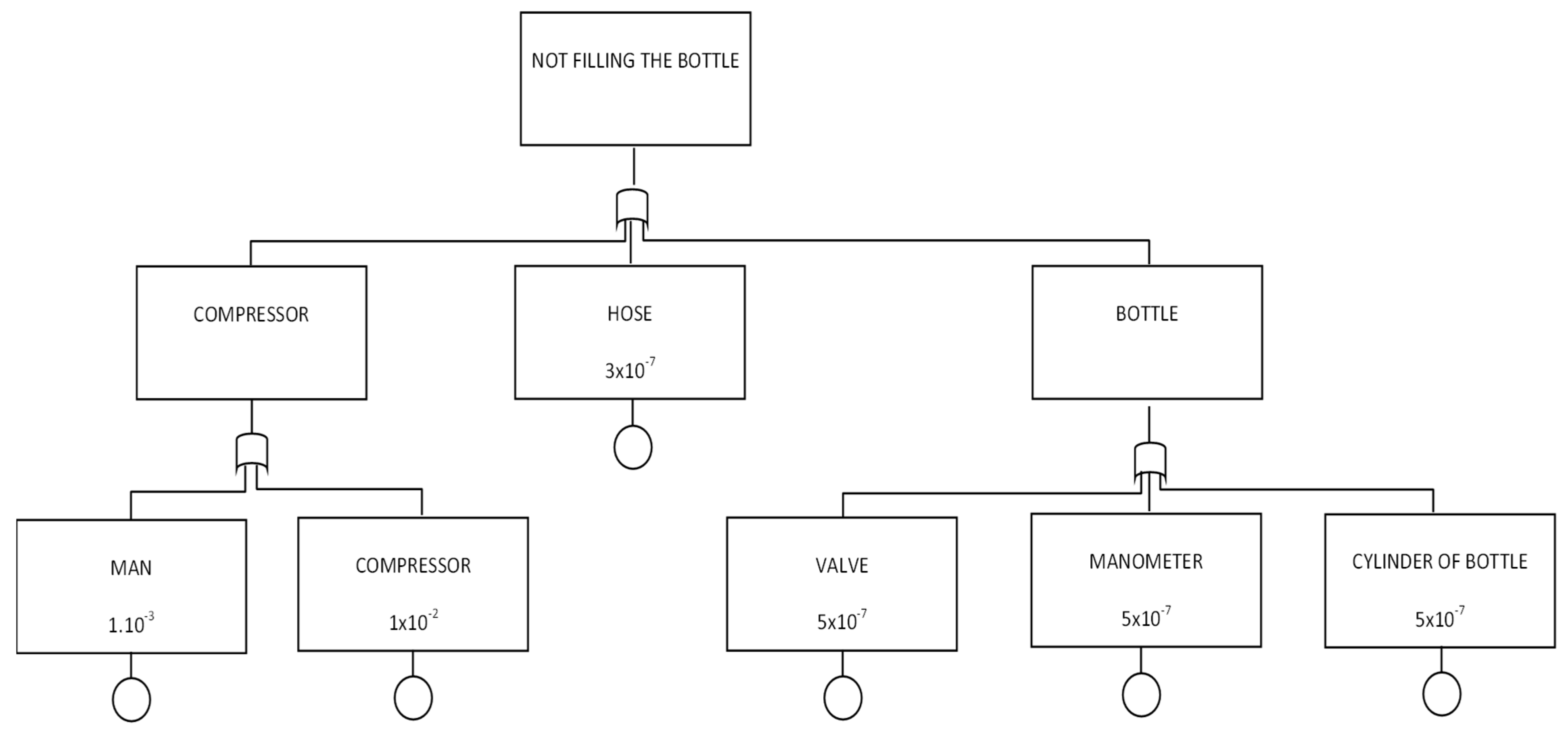

3.3. Fault Tree Analysis (FTA)

- Represent the causal dependency model to investigate the reliability and safety of the monitored system, in order to understand the input–output interactions;

- Determine the failure frequency of the system or of any of its components;

- Provide a clear analytical representation of the logical operations within the monitored system;

- Present the monitored system as a graphical model in which both quantitative and qualitative data are recorded.

- Define the system under analysis, the purpose and scope of the analysis, and the basic assumptions made.

- Define the undesired fault (the top event). This involves identifying the onset of unsafe conditions or the system’s inability to perform its required functions.

- Create a graphical representation consisting of individual elements connected by logical operations, describing the monitored process. It is important to distinguish between conditioned and unconditioned states during this step.

- Evaluate the fault tree.

- Conduct a logical (qualitative) analysis of the system.

- Perform a numerical (quantitative) analysis of the system.

- Assess the plausibility of the information for critical system elements.
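For the numerical (quantitative) step, a fault tree built from AND and OR gates can be evaluated bottom-up when the basic events are independent and no basic event appears more than once: an AND gate multiplies its input probabilities, P = ∏ p_i, and an OR gate gives P = 1 − ∏(1 − p_i). The Python sketch below implements only that simple case; trees with repeated events would need minimal cut sets instead. The class and function names are hypothetical, and the example tree is purely illustrative rather than a tree from this study: a top event that occurs if all barriers of either step 4 or step 5 fail, using the probabilities from the per-step HEP table at the end of the article.

```python
import math
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class BasicEvent:
    name: str
    p: float  # probability of the basic event


@dataclass
class Gate:
    kind: str  # "AND" or "OR"
    inputs: List["Node"] = field(default_factory=list)


Node = Union[BasicEvent, Gate]


def probability(node: Node) -> float:
    """Evaluate a fault tree bottom-up, assuming independent, non-repeated basic events."""
    if isinstance(node, BasicEvent):
        return node.p
    probs = [probability(child) for child in node.inputs]
    if node.kind == "AND":
        return math.prod(probs)
    if node.kind == "OR":
        if any(p >= 1.0 for p in probs):
            return 1.0
        # 1 - prod(1 - p), computed via log1p/expm1 to stay accurate for very small p.
        return -math.expm1(sum(math.log1p(-p) for p in probs))
    raise ValueError(f"unknown gate type: {node.kind}")


def step(name: str, operator: float, secondary: float, technical: float) -> Gate:
    """AND gate: a step fails only if the operator and both inspections all fail."""
    return Gate("AND", [
        BasicEvent(f"{name}: operator error", operator),
        BasicEvent(f"{name}: secondary inspection misses it", secondary),
        BasicEvent(f"{name}: technical inspection fails", technical),
    ])


# Hypothetical top event: OR over steps 4 and 5 of the filling process.
top = Gate("OR", [
    step("step 4", 0.001, 0.001, 0.001),
    step("step 5", 0.0021, 0.001, 0.001),
])
print(f"{probability(top):.2e}")  # 3.10e-09
```

For probabilities this small, the OR result is practically the sum of its inputs; the `log1p`/`expm1` form avoids the cancellation error of computing `1 - (1 - a) * (1 - b)` directly.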

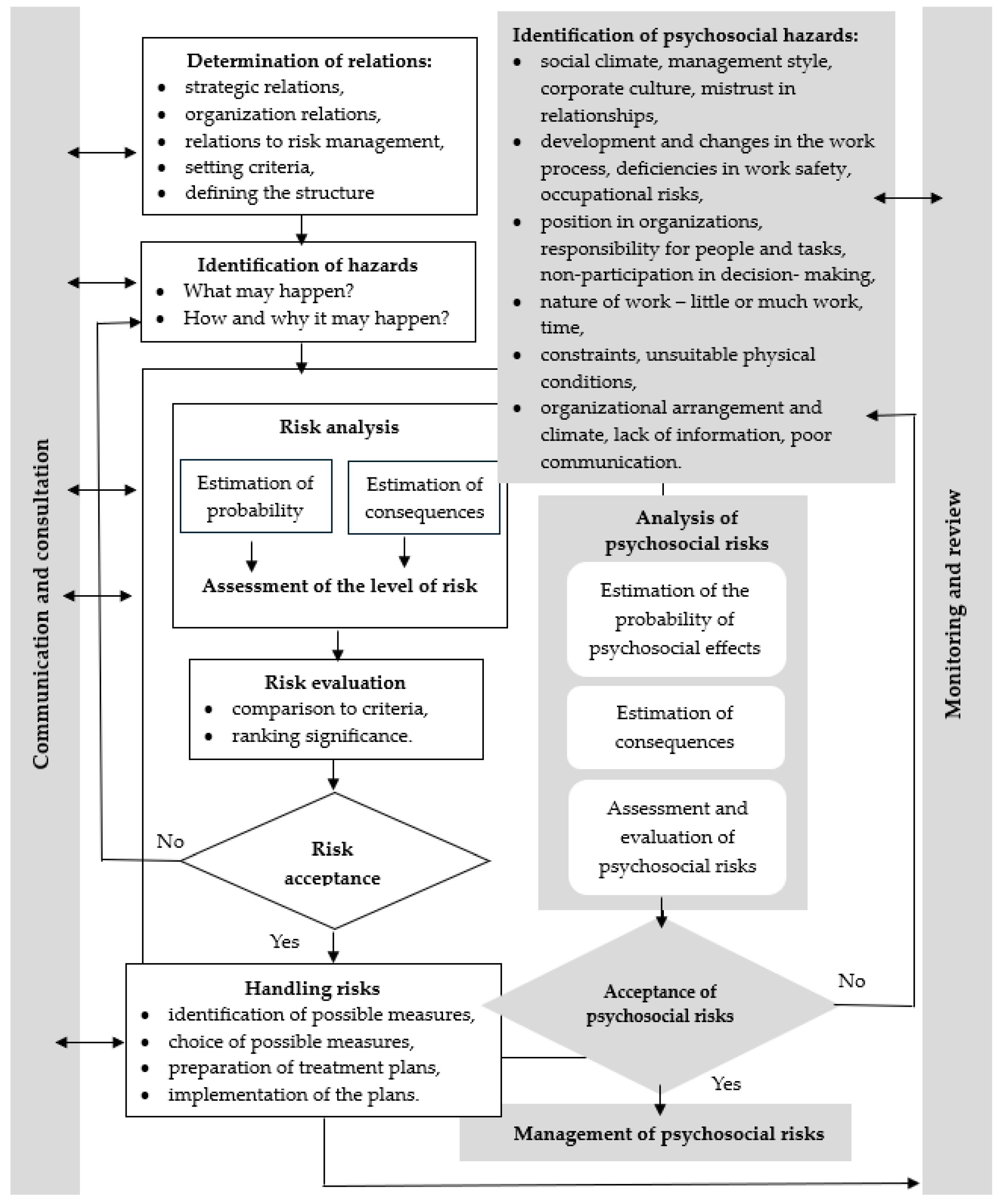

4. Discussion

- The severity of the damage and its consequences;

- The probability of the occurrence of such damage, which depends on the following:

  - (a) The frequency and duration of the threat;

  - (b) The probability of an adverse event occurring;

  - (c) Measures (technical, organizational, human) to eliminate the risk.
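The two components above are often combined semi-quantitatively as R = S × P on ordinal scales. The Python sketch below is a hypothetical illustration, not the authors' scoring scheme: it maps the likelihood levels L, M and H from Section 2 to the scores 1 to 3, assumes an invented three-level severity scale, and uses made-up acceptability bands for the resulting 3 × 3 matrix. Measures of type (c) enter the model by lowering the likelihood level.

```python
from enum import IntEnum


class Likelihood(IntEnum):
    # Scores for the L/M/H levels of Section 2 (the numeric values are an assumption).
    L = 1
    M = 2
    H = 3


class Severity(IntEnum):
    # Hypothetical severity scale; the source does not define one.
    MINOR = 1
    SERIOUS = 2
    MAJOR = 3


def risk_score(severity: Severity, likelihood: Likelihood) -> int:
    """Semi-quantitative risk R = S x P on 1..3 ordinal scales (result 1..9)."""
    return int(severity) * int(likelihood)


def risk_class(score: int) -> str:
    """Hypothetical acceptability bands for the 3 x 3 matrix."""
    if score >= 6:
        return "unacceptable"
    if score >= 3:
        return "tolerable with additional measures"
    return "acceptable"


# Lowering the likelihood of a major-severity error from H to M to L
# moves its score from 9 to 6 to 3.
for level in (Likelihood.H, Likelihood.M, Likelihood.L):
    score = risk_score(Severity.MAJOR, level)
    print(level.name, score, risk_class(score))
```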

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Reason, J. Human error: Models and management. BMJ 2000, 320, 768–770.

- Adhikari, S.; Bayley, C.; Bedford, T.; Busby, J.; Cliffe, A.; Devgun, G.; Eid, M.; French, S.; Keshvala, R.; Pollard, S.J.T.; et al. Human Reliability Analysis: A Review and Critique; Technical Report; University of Manchester: Manchester, UK, 2021.

- Bell, J.; Holroyd, J. Review of Human Reliability Assessment Methods; Health & Safety Laboratory: Buxton, UK, 2009.

- Dougherty, E. Human reliability Analysis—Where Shouldst Thou Turn? Reliab. Eng. Syst. Saf. 1990, 29, 283–299.

- Ji, C.; Gao, F.; Liu, W. Dependence assessment in human reliability analysis based on cloud model and best-worst method. Reliab. Eng. Syst. Saf. 2024, 242, 109770.

- Perera, H.; De Silva, C. Human reliability analysis using machine learning: A review. Saf. Sci. 2021, 134, 105072.

- Ioffe, A.; Muscarella, A. Virtual reality simulations for human reliability analysis in high-risk environments. Int. J. Hum.-Comput. Stud. 2021, 146, 102552.

- Hollnagel, E. The Cognitive Reliability and Error Analysis Method (CREAM): A framework for human reliability analysis. Reliab. Eng. Syst. Saf. 2019, 183, 58–67.

- Shi, H.; Xu, X. A review of Bayesian network-based human reliability analysis methods. Saf. Sci. 2020, 124, 104602.

- Cooper, M.D. Towards a model of safety culture. Saf. Sci. 2000, 36, 111–136.

- Patriarca, R.; Ramos, M.; Paltrinieri, N.; Massaiu, S.; Costantino, F.; Di Gravio, G.; Boring, R.L. Human reliability analysis: Exploring the intellectual structure of a research field. Reliab. Eng. Syst. Saf. 2020, 203, 107102.

- Cross, R.; Youngblood, R. Probabilistic Risk Assessment Procedures Guide for Offshore Applications; Technical Report; Bureau of Safety and Environmental Enforcement: Washington, DC, USA, 2018.

- Rasmussen, J. Risk management in a dynamic society: A modelling problem. Saf. Sci. 1997, 27, 183–213.

- Skrehot, P.; Trpiš, J. Analýza Chybování Lidského Činitele Pomocí Integrované Metody HTA-PHEA [Analysis of Human Factor Errors Using the Integrated HTA-PHEA Method]; Metodická Příručka [Methodological Handbook]; Výzkumný Ústav Bezpečnosti Práce, v.v.i.: Prague, Czech Republic, 2009.

- Swain, A.D.; Guttmann, H.E. Handbook of Human-Reliability Analysis with Emphasis on Nuclear Power Plant Applications; Final Report; Sandia National Labs: Albuquerque, NM, USA, 1983.

- Park, J.; Boring, R.L. An Approach to Dependence Assessment in Human Reliability Analysis: Application of Lag and Linger Effects. In Proceedings of the ESREL 2020 PSAM 15, Venice, Italy, 1–5 November 2020.

- Fargnoli, M.; Lombardi, M. Preliminary Human Safety Assessment (PHSA) for the improvement of the behavioral aspects of safety climate in the construction industry. Buildings 2019, 9, 69.

- Fargnoli, M.; Lombardi, M.; Puri, D. Applying Hierarchical Task Analysis to Depict Human Safety Errors during Pesticide Use in Vineyard Cultivation. Agriculture 2019, 9, 158.

- Jung, W.; Park, J.; Kim, Y.; Choi, S.Y.; Kim, S. HuREX—A framework of HRA data collection from simulators in nuclear power plants. Reliab. Eng. Syst. Saf. 2020, 194, 106235.

- IOGP Risk Assessment Data Directory: Human Factors in QRA 66. 2010. Available online: https://www.iogp.org/bookstore/product/risk-assessment-data-directory-human-factors-in-qra/ (accessed on 5 July 2024).

- Porthin, M.; Liinasuo, M.; Kling, T. Effects of digitalization of nuclear power plant control rooms on human reliability analysis—A review. Reliab. Eng. Syst. Saf. 2020, 194, 106415.

- Ade, N.; Peres, S.C. A review of human reliability assessment methods for proposed application in quantitative risk analysis of offshore industries. Int. J. Ind. Ergonom. 2022, 87, 103238.

- De Felice, F.; Petrillo, A. Human Factors and Reliability Engineering for Safety and Security in Critical Infrastructures. Decision Making, Theory, and Practice; Springer International Publishing: Berlin/Heidelberg, Germany, 2018.

- Kirwan, B. A Guide to Practical Human Reliability Assessment; CRC Press: London, UK, 1994; p. 606.

- Sandom, C.; Harvey, R.S. Human Factors for Engineers; The Institution of Engineering & Technology: London, UK, 2004.

- Harris, D.; Stanton, N.A.; Marshall, A.; Young, M.S.; Demagalski, J.; Salmon, P. Using SHERPA to predict design-induced error on the flight deck. Aerosp. Sci. Technol. 2005, 9, 525–532.

- IEEE. IEEE Guide for Incorporating Human Reliability Analysis into Probabilistic Risk Assessments for Nuclear Power Generating Stations and Other Nuclear Facilities. In IEEE Std 1082-2017 (Revision of IEEE Std 1082-1997); IEEE: New York, NY, USA, 2018; pp. 1–34.

- Hannaman, G.W.; Spurgin, A.J. Systematic Human Action Reliability Procedure (SHARP); EPRI NP-3583; NUS Corp.: San Diego, CA, USA, 1984.

- Oddone, I. Validation of the TESEO Human Reliability Assessment Technique for the Analysis of Aviation Occurrences, Politecnico di Milano, 2017–2018. Available online: https://www.politesi.polimi.it/retrieve/a81cb05d-03fb-616b-e053-1605fe0a889a/2018_12_Cassis.pdf (accessed on 5 July 2024).

- Schneeweiss, W.G. The Fault Tree Method: In the Fields of Reliability and Safety Technology; LiLoLe-Verlag: Hagen, Germany, 1999.

- Mueller, M.; Polinder, H. Electrical Drives for Direct Drive Renewable Energy Systems; Woodhead Publishing: Sawston, UK, 2013; ISBN 978-1-84569-783-9.

- Haag, U. Reference Manual Bevi Risk Assessment; National Institute of Public Health and the Environment (RIVM): Bilthoven, The Netherlands, 2009.

- Vaurio, J.K. Modelling and quantification of dependent repeatable human errors in system analysis and risk assessment. Reliab. Eng. Syst. Saf. 2001, 71, 179–188.

- Strand, G.O.; Haskins, C. On Linking of Task Analysis in the HRA Procedure: The Case of HRA in Offshore Drilling Activities. Safety 2018, 4, 39.

- Steijn, W.M.P.; Van Gulijk, C.; Van der Beek, D.; Sluijs, T. A System-Dynamic Model for Human–Robot Interaction; Solving the Puzzle of Complex Interactions. Safety 2023, 9, 1.

- Li, Y.; Guldenmund, F.W.; Aneziris, O.N. Delivery systems: A systematic approach for barrier management. Saf. Sci. 2017, 121, 679–694.

- Winge, S.; Albrechtsen, E. Accident types and barrier failures in the construction industry. Saf. Sci. 2018, 105, 158–166.

- Nnaji, C.; Karakhan, A.A. Technologies for safety and health management in construction: Current use, implementation benefits and limitations, and adoption barriers. J. Build. Eng. 2020, 29, 101212.

- Selleck, R.; Hassall, M.; Cattani, M. Determining the Reliability of Critical Controls in Construction Projects. Safety 2022, 8, 64.

- Yang, C.W.; Lin, C.J.; Jou, Y.T.; Yenn, T.C. A review of current human reliability assessment methods utilized in high hazard human-system interface design. In Engineering Psychology and Cognitive Ergonomics: 7th International Conference, EPCE 2007, Held as Part of HCI International 2007, Beijing, China, July 22–27, 2007, Proceedings; LNAI 4562; Springer: Berlin/Heidelberg, Germany, 2007; pp. 212–221.

- Hollnagel, E. Human reliability assessment in context. Nucl. Eng. Technol. 2005, 37, 2.

| Process | Acetylene Bottling | Date | | |
|---|---|---|---|---|
| Step | Activity Description | Responsibility | Co-Responsibility | Technical Inspection |
| Preparatory phase | | | | |
| 1 | Empty bottles check | Bottling plant operator | Production technologist, operations master | Flame arresters, safety caps |
| 2 | Checking the amount of solvent (acetone) in the bottles | Bottling plant operator | Production technologist, operations master | |
| 3 | Reacetonation | Bottling plant operator | Production technologist, operations master | |
| 4 | Connecting the bottles to filling points | Bottling plant operator | Production technologist, operations master | |
| 5 | Checking the tightness of connections | Bottling plant operator | Production technologist, operations master | |
| 6 | Recording in the production record | Bottling plant operator | Production technologist, operations master | |
| Filling | | | | |
| 7 | Securing bottles with fall-arrest chains | Bottling plant operator | Production technologist, operations master | Flame arresters, safety caps |
| 8 | Bottling | Bottling plant operator | Production technologist, operations master | |
| 9 | During filling, the bottle cooling function and bottle temperature are checked | Bottling plant operator | Production technologist, operations master | |
| 10 | Closing the filling valves at the acetylene inlet on the ramp | Bottling plant operator | Production technologist, operations master | |
| 11 | When the compressors are switched off, the inlet valves on the ramps are closed | Bottling plant operator | Production technologist, operations master | |
| 12 | Closing the bottles | Bottling plant operator | Production technologist, operations master | |
| 13 | Opening the degassing valves | Bottling plant operator | Production technologist, operations master | |
| 14 | Ramp degassing | Bottling plant operator | Production technologist, operations master | |
| 15 | Degassing valves closing | Bottling plant operator | Production technologist, operations master | |
| 16 | Bottle securing | Bottling plant operator | Production technologist, operations master | |
| 17 | Tightness inspection | Bottling plant operator | Production technologist, operations master | |
| 18 | Bottle weighing | Bottling plant operator | Production technologist, operations master | |
| 19 | Bottle and ramp valve closing | Bottling plant operator | Production technologist, operations master | |
| Carrying away the acetylene bottles | | | | |
| 20 | Transport to the handling area | Transport team | Production technologist, operations master | Flame arresters, safety caps |
| 21 | Record in the production record | Transport team | Production technologist, operations master | |
| 22 | Records of imported empty bottles, dispatched bottles and full bottles | Warehouse keeper or foreman (production and dispatch management) | Production technologist, operations master | |

| Process | HEP | | | | |
|---|---|---|---|---|---|
| Step | Activity Description | Responsible Person | Secondary Inspection | Technical Inspection | Resulting HEP |
| 1 | Empty bottles check | 0.005 | 0.001 | 0.001 | 0.000000005 |
| 2 | Checking the amount of solvent (acetone) in the bottles | 0.005 | 0.001 | 0.001 | 0.000000005 |
| 3 | Reacetonation | 0.01 | 0.001 | 0.001 | 0.00000001 |
| 4 | Connecting the bottles to filling points | 0.001 | 0.001 | 0.001 | 0.000000001 |
| 5 | Checking the tightness of connections | 0.0021 | 0.001 | 0.001 | 0.0000000021 |
| 6 | Recording in the production record | 0.001 | 0.001 | 0.001 | 0.000000001 |
| 7 | Securing bottles with fall-arrest chains | 0.005 | 0.001 | 0.001 | 0.000000005 |
| 8 | Bottling | 0.001 | 0.001 | 0.001 | 0.000000001 |
| 9 | During filling, the bottle cooling function and bottle temperature are checked | 0.005 | 0.001 | 0.001 | 0.000000005 |
| 10 | Closing the filling valves at the acetylene inlet on the ramp | 0.001 | 0.001 | 0.001 | 0.000000001 |
| 11 | When the compressors are switched off, the inlet valves on the ramps are closed | 0.001 | 0.001 | 0.001 | 0.000000001 |
| 12 | Closing the bottles | 0.001 | 0.001 | 0.001 | 0.000000001 |
| 13 | Opening the degassing valves | 0.001 | 0.001 | 0.001 | 0.000000001 |
| 14 | Ramp degassing | 0.01 | 0.001 | 0.001 | 0.00000001 |
| 15 | Degassing valves closing | 0.001 | 0.001 | 0.001 | 0.000000001 |
| 16 | Bottle securing | 0.01 | 0.001 | 0.001 | 0.00000001 |
| 17 | Tightness inspection | 0.005 | 0.001 | 0.001 | 0.000000005 |
| 18 | Bottle weighing | 0.003 | 0.001 | 0.001 | 0.000000003 |
| 19 | Bottle and ramp valve closing | 0.001 | 0.001 | 0.001 | 0.000000001 |
| 20 | Transport to the handling area | 0.001 | 0.001 | 0.001 | 0.000000001 |
| 21 | Record in the production record | 0.001 | 0.001 | 0.001 | 0.000000001 |
| 22 | Records of imported empty bottles, dispatched bottles and full bottles | 0.001 | 0.001 | 0.001 | 0.000000001 |
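Each Resulting HEP value in the table above equals the product of the responsible-person, secondary-inspection and technical-inspection probabilities, which amounts to treating the three as independent barriers that must all fail. The short Python sketch below reproduces a few rows under that independence assumption; the function name `resulting_hep` is hypothetical. If the checker's performance were positively correlated with the operator's (as THERP-style dependence models assume for successive human actions), the joint failure probability would be higher than this simple product.

```python
from math import prod


def resulting_hep(*barrier_failure_probs: float) -> float:
    """Probability that every barrier fails, assuming the failures are independent."""
    return prod(barrier_failure_probs)


# (responsible person, secondary inspection, technical inspection), copied from the table above
rows = {
    1: (0.005, 0.001, 0.001),
    3: (0.01, 0.001, 0.001),
    5: (0.0021, 0.001, 0.001),
    18: (0.003, 0.001, 0.001),
}

for step, probs in rows.items():
    print(f"step {step}: {resulting_hep(*probs):.1e}")
# step 1: 5.0e-09
# step 3: 1.0e-08
# step 5: 2.1e-09
# step 18: 3.0e-09
```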

| Process | | Factors | | | | | P(EO) |
|---|---|---|---|---|---|---|---|
| Step | Activity Description | K1 | K2 | K3 | K4 | K5 | |
| 1 | Empty bottles check | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |
| 2 | Checking the amount of solvent (acetone) in the bottles | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |
| 3 | Reacetonation | 0.01 | 1 | 0.5 | 1 | 1 | 0.005 |
| 4 | Connecting the bottles to filling points | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |
| 5 | Checking the tightness of connections | 0.001 | 1 | 0.5 | 1 | 3 | 0.0015 |
| 6 | Recording in the production record | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |
| 7 | Securing bottles with fall-arrest chains | 0.001 | 1 | 0.5 | 1 | 3 | 0.0015 |
| 8 | Bottling | | | | | | |
| 9 | During filling, the bottle cooling function and bottle temperature are checked | 0.001 | 1 | 0.5 | 1 | 3 | 0.0015 |
| 10 | Closing the filling valves at the acetylene inlet on the ramp | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |
| 11 | When the compressors are switched off, the inlet valves on the ramps are closed | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |
| 12 | Closing the bottles | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |
| 13 | Opening the degassing valves | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |
| 14 | Ramp degassing | 0.01 | 1 | 0.5 | 1 | 1 | 0.005 |
| 15 | Degassing valves closing | 0.001 | 1 | 0.5 | 1 | 3 | 0.0015 |
| 16 | Bottle securing | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |
| 17 | Tightness inspection | 0.001 | 1 | 0.5 | 1 | 3 | 0.0015 |
| 18 | Bottle weighing | 0.001 | 0.5 | 1 | 1 | 3 | 0.0015 |
| 19 | Bottle and ramp valve closing | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |
| 20 | Transport to the handling area | 0.001 | 1 | 0.5 | 1 | 7 | 0.0035 |
| 21 | Record in the production record | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |
| 22 | Records of imported empty bottles, dispatched bottles and full bottles | 0.001 | 0.5 | 1 | 1 | 7 | 0.0035 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Balazikova, M.; Kotianova, Z. Human Reliability Analysis in Acetylene Filling Operations: Risk Assessment and Mitigation Strategies. Appl. Sci. 2025, 15, 4558. https://doi.org/10.3390/app15084558
