Cork as a Unique Object: Device, Method, and Evaluation

Abstract

1. Introduction

- Avoiding storing secret digital keys on vulnerable hardware;

- Natural feature disorder is very hard to clone or falsify. As a consequence, replicating this kind of feature becomes very expensive;

- The existence of this randomness can be a valuable asset, allowing the use of some cryptographic protocols.

- Disorder—The generated fingerprint should be based only on the unique disorder of the object under study;

- Operability—It is imperative that the fingerprint is calculated in a timely manner, can be detected with different measurements, and is robust to ageing and environmental conditions. Simultaneously, it should be economically viable to manufacture several instances of the measurement equipment;

- Unclonable—It should be prohibitively expensive or impossible for any entity to reproduce an object with the same features as the fingerprint extracted from the physical object.

- Proposing cork as a UNO, part of the Physical Unclonability and Disorder systems approach;

- Proposing a low-cost hand-held illumination device for wine anti-counterfeiting applications;

2. Related Works

- Unique—The output of a PUF is unpredictable as a result of the unique micro-structural variations.

- Unclonable—Because of its inherent physical properties, cloning a PUF should be unfeasible or extremely difficult. Two PUFs cannot produce the same response via cloning.

- Unpredictable—Given a set of known challenges and the responses they produce, it should not be possible to predict the correct response to a new challenge.

- One-way—It must not be possible to calculate the challenge that triggers a PUF to generate a given response.

- Tamper-evident—Any attempt to recover the structural aspect of a PUF should alter the original structure and therefore the initial challenge-response pair.

3. Proposed Approach

3.1. Illumination Device

3.2. RIOTA

| Algorithm 1 RIOTA’s Implementation |


4. Experimental Evaluation—RIOTA’s Implementation and Cork as a UNO

4.1. Databases Description

4.1.1. CASIA-IrisV1 Database

4.1.2. MMU1 Database

4.1.3. IIT Delhi Iris Database

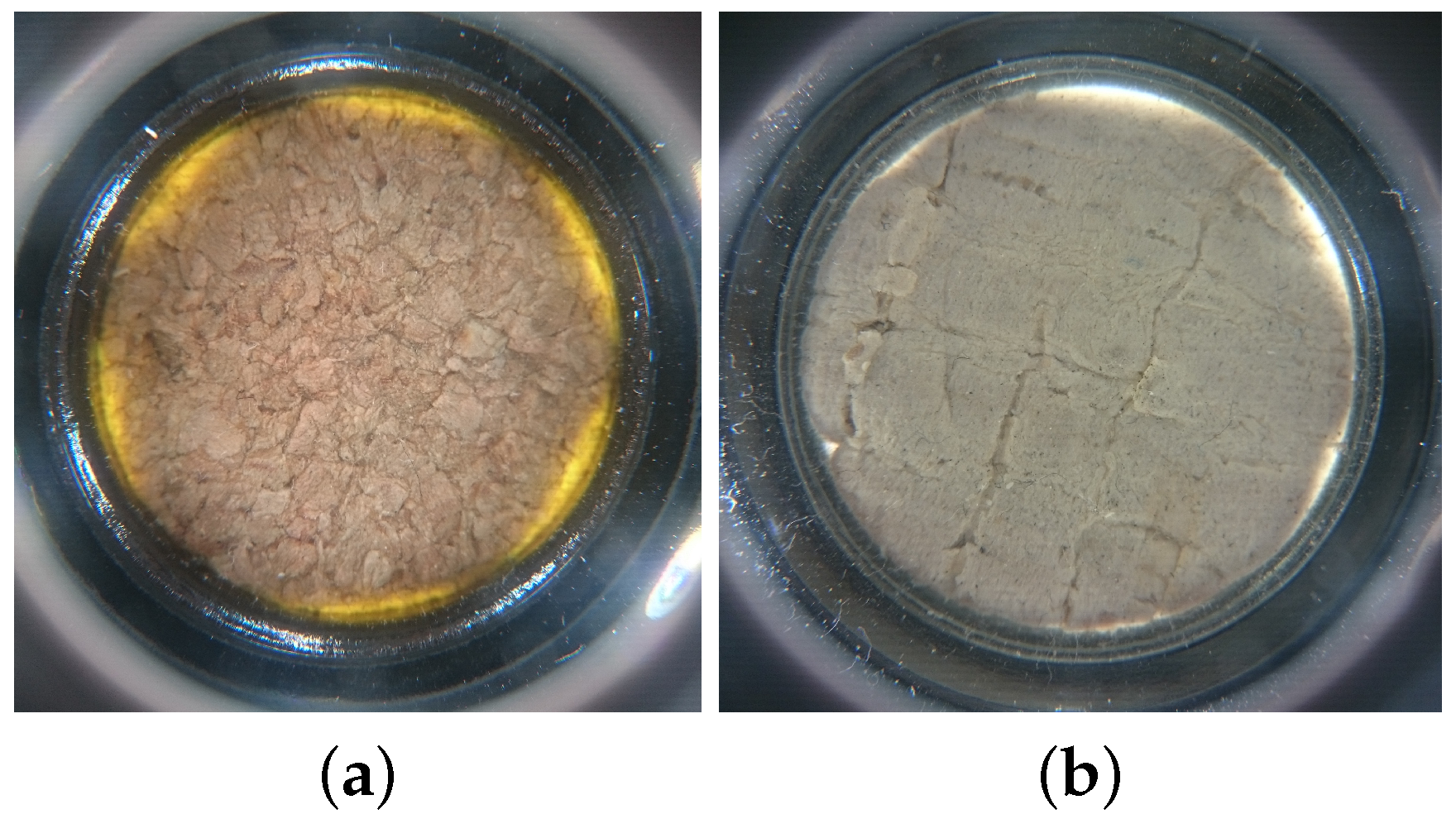

4.1.4. Cork Database

4.2. Evaluation Metrics
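The result tables in this article report accuracy (Acc), equal error rate (EER), false acceptance rate (FAR), and false rejection rate (FRR). As a minimal sketch of how such metrics are commonly derived from match scores, the following assumes that a higher score means a better match and that a comparison is accepted when its score reaches a threshold. The function names, the score lists, and the threshold scan are illustrative assumptions, not the authors' evaluation code.

```python
from typing import List, Tuple


def far_frr(genuine: List[float], impostor: List[float], threshold: float) -> Tuple[float, float]:
    """Return (FAR, FRR) in percent; a comparison is accepted when score >= threshold."""
    false_accepts = sum(1 for s in impostor if s >= threshold)
    false_rejects = sum(1 for s in genuine if s < threshold)
    return 100.0 * false_accepts / len(impostor), 100.0 * false_rejects / len(genuine)


def equal_error_rate(genuine: List[float], impostor: List[float]) -> Tuple[float, float]:
    """Approximate the EER by trying every observed score as a threshold.

    Returns (eer_percent, threshold) where |FAR - FRR| is smallest.
    """
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2.0, t)
    return best[1], best[2]


def accuracy(genuine: List[float], impostor: List[float], threshold: float) -> float:
    """Percentage of comparisons (genuine and impostor) decided correctly."""
    correct = sum(1 for s in genuine if s >= threshold)
    correct += sum(1 for s in impostor if s < threshold)
    return 100.0 * correct / (len(genuine) + len(impostor))


# Hypothetical scores: same-object comparisons vs. different-object comparisons.
genuine_scores = [42, 38, 51, 29, 45]
impostor_scores = [3, 7, 5, 12, 30]

eer, eer_threshold = equal_error_rate(genuine_scores, impostor_scores)
print(far_frr(genuine_scores, impostor_scores, 20))   # (20.0, 0.0)
print(eer, eer_threshold)                             # 20.0 30
print(accuracy(genuine_scores, impostor_scores, 30))  # 80.0
```

Scanning only the observed scores keeps the sketch short; a finer threshold grid or interpolation between neighbouring thresholds would give a smoother EER estimate.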

4.3. Testing Procedures

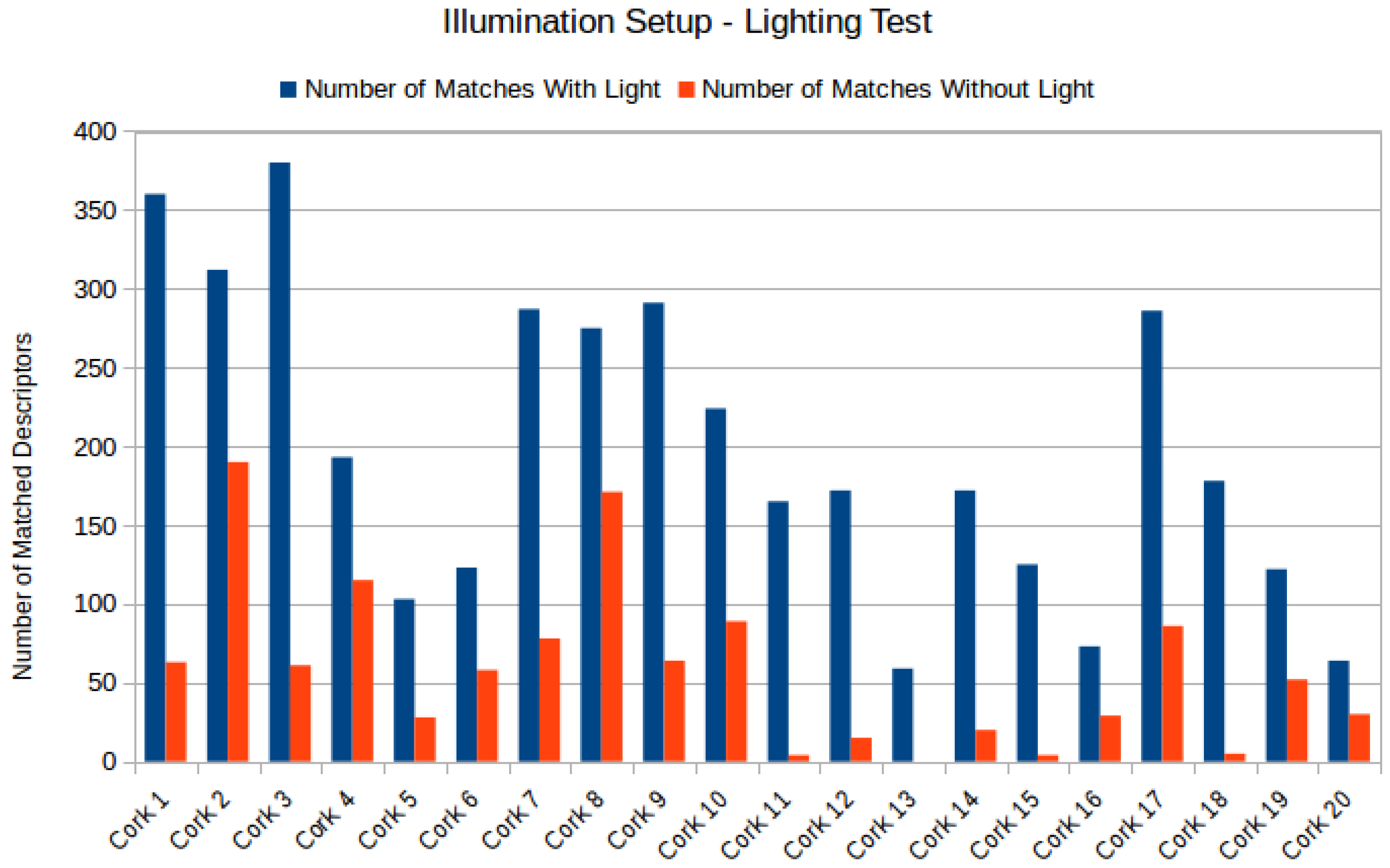

4.3.1. Illumination Setup

- Randomly select 20 cork stoppers from the database (10 cork stoppers from each type of cork: agglomerated and natural);

- Capture four images per cork using the same smartphone camera: two images with the light turned on, and two images with the light turned off;

- Match the images with and without light using RIOTA’s implementation;

- Store the matching results for further analysis.
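The steps above can be sketched as a small test harness. This is only an illustration under stated assumptions: the database records, the image naming scheme, and the `match` callable are hypothetical stand-ins, and the sketch assumes that every lit image of a cork is compared with every unlit image of the same cork.

```python
import random
from itertools import product


def select_corks(database, per_type, rng):
    """Randomly pick `per_type` stoppers of each cork type."""
    selected = []
    for cork_type in ("agglomerated", "natural"):
        candidates = [c for c in database if c["type"] == cork_type]
        selected.extend(rng.sample(candidates, per_type))
    return selected


def lit_unlit_pairs(cork_id):
    """Pair each of the two lit images with each of the two unlit images."""
    lit = [f"{cork_id}_on_{i}" for i in (1, 2)]
    unlit = [f"{cork_id}_off_{i}" for i in (1, 2)]
    return list(product(lit, unlit))


def run_protocol(database, match, per_type=10, seed=0):
    """Select corks, match lit against unlit images, and collect the results."""
    rng = random.Random(seed)
    results = []
    for cork in select_corks(database, per_type, rng):
        for lit_img, unlit_img in lit_unlit_pairs(cork["id"]):
            results.append({
                "cork": cork["id"],
                "type": cork["type"],
                "lit": lit_img,
                "unlit": unlit_img,
                "score": match(lit_img, unlit_img),
            })
    return results


# Hypothetical database of 100 stoppers, half of each type.
database = [
    {"id": f"cork{i:03d}", "type": "natural" if i % 2 else "agglomerated"}
    for i in range(100)
]


def dummy_match(image_a, image_b):
    """Placeholder for the real matcher; always returns 0.0."""
    return 0.0


results = run_protocol(database, dummy_match)
print(len(results))  # 80 comparisons: 20 corks x 4 lit/unlit pairs
```

Keeping the stored records as plain dictionaries makes it easy to write them to a CSV file or group them by cork type for the later analysis.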

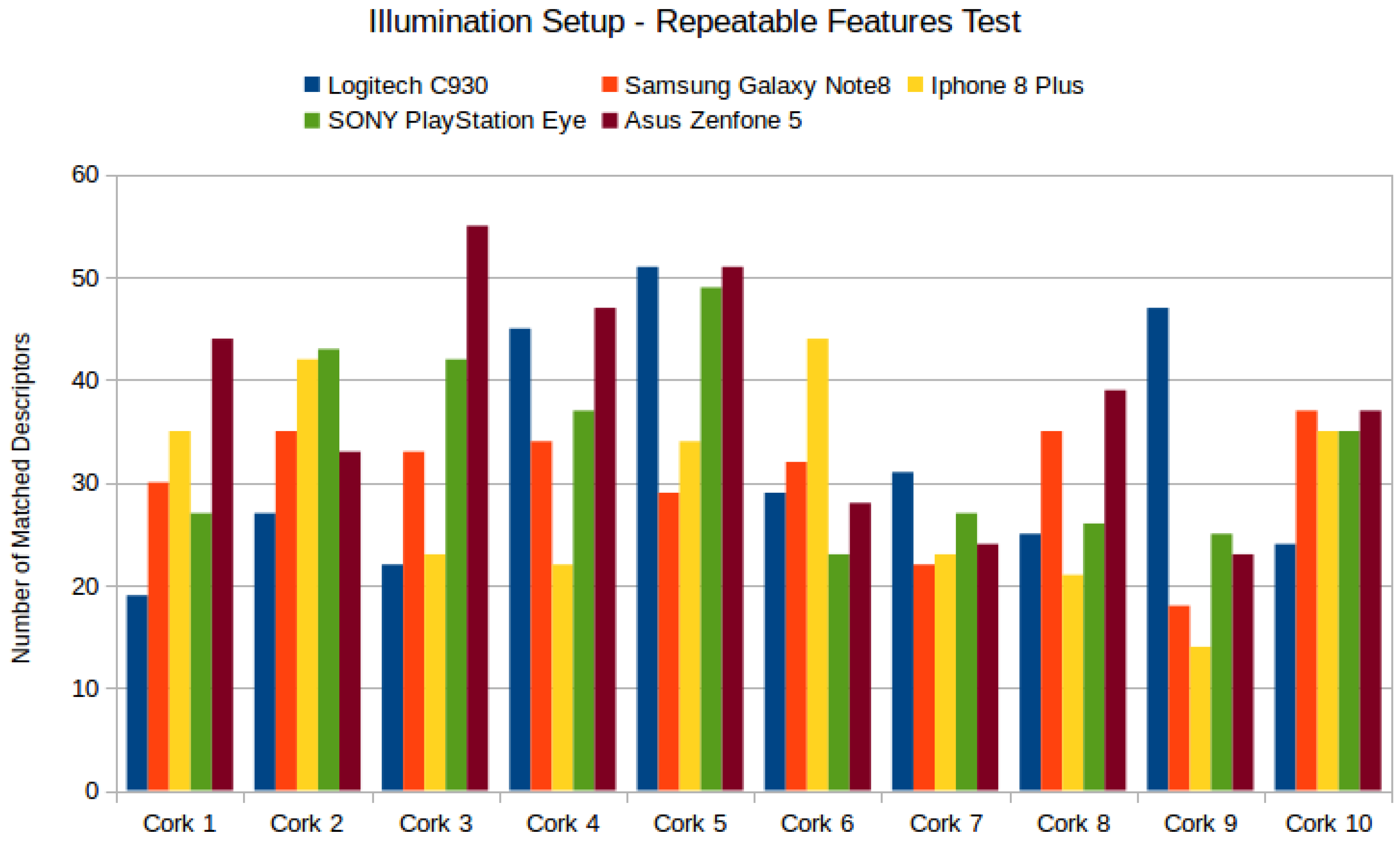

- Randomly select 10 cork stoppers from the database (5 cork stoppers from each type of cork: agglomerated and natural);

- Capture six images per cork using different cameras: the rear camera of a Sony Z3 Compact, a Logitech C930 USB camera, the rear camera of a Samsung Galaxy Note8, a PlayStation Eye USB camera, and an Asus Zenfone 5 A500CG;

- Match the images using the tuned version of RIOTA’s implementation;

- Store the matching results for further analysis.
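The matching step in these procedures relies on RIOTA, whose internals are not spelled out in the list above. ORB appears among the article's abbreviations, and ORB descriptors are binary strings that are normally compared with the Hamming distance. The following is a minimal sketch of brute-force binary descriptor matching with a nearest-neighbour ratio test; the 8-bit toy descriptors, the function names, and the 0.75 ratio are assumptions chosen for illustration and are not taken from RIOTA.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two descriptors stored as integers."""
    return bin(a ^ b).count("1")


def match_descriptors(query, train, ratio=0.75):
    """Brute-force matching with a ratio test.

    For each query descriptor, find its two nearest train descriptors and keep
    the best one only if it is clearly closer than the second best.
    Returns a list of (query_index, train_index, distance).
    """
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        if len(ranked) < 2:
            continue  # the ratio test needs two candidates
        (best_dist, best_idx), (second_dist, _) = ranked[0], ranked[1]
        if best_dist < ratio * second_dist:
            matches.append((qi, best_idx, best_dist))
    return matches


# Toy 8-bit descriptors (real ORB descriptors are 256 bits long).
query = [0b10110010, 0b01001101, 0b11110000]
train = [0b10110011, 0b01001111, 0b11111111]

matches = match_descriptors(query, train)
print(matches)  # [(0, 0, 1), (1, 1, 1)]
```

The third query descriptor is discarded because its best and second-best distances (3 and 4) are too similar, which is the kind of ambiguous correspondence the ratio test is meant to filter out.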

4.3.2. RIOTA’s Implementation

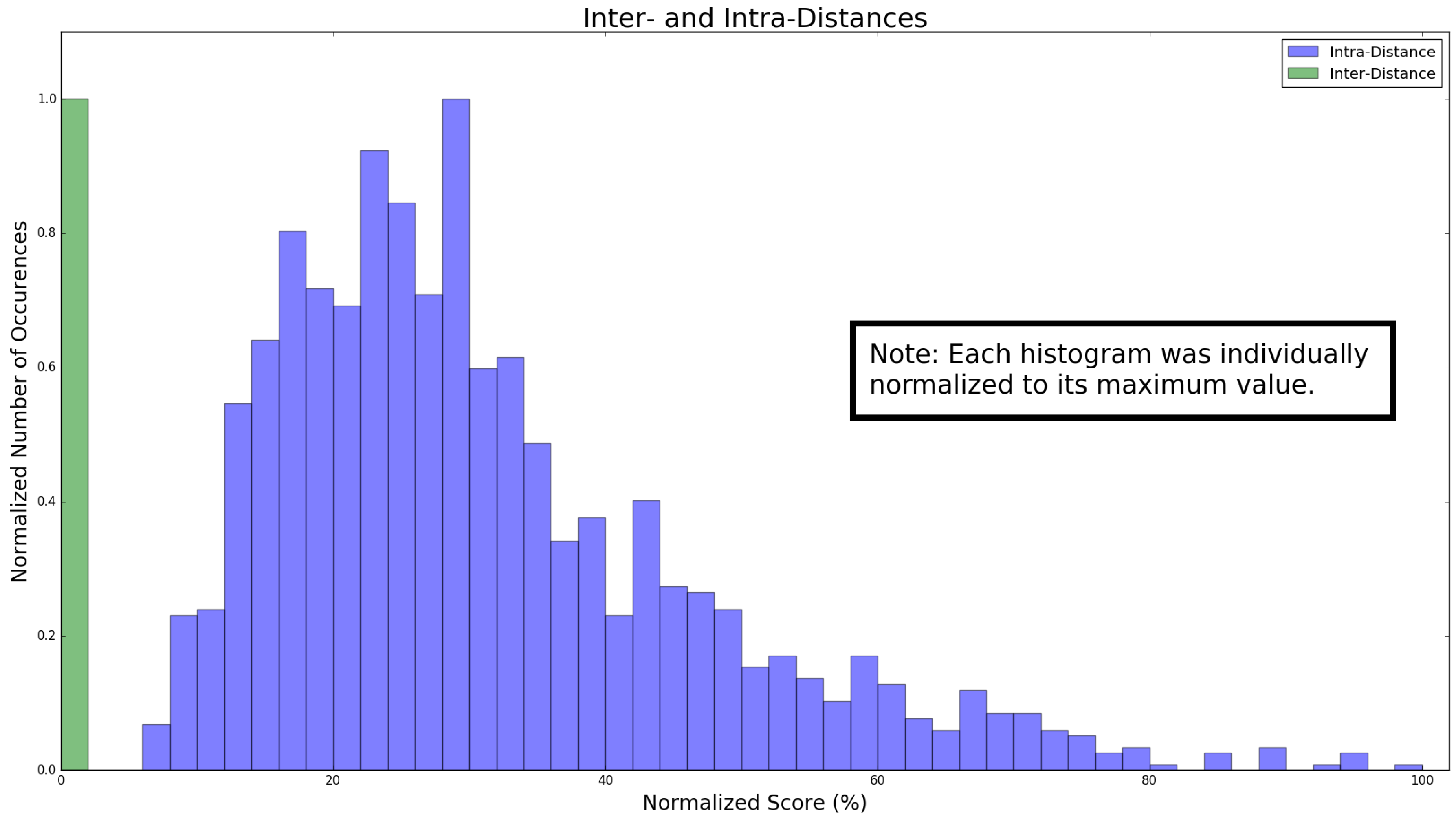

4.3.3. UNO Evaluation

4.4. Results

4.4.1. Discussion

4.4.2. Cork as Unique Object

5. Conclusions

6. Patent

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RIOTA | Recognition of Individual Objects using Tagless Approaches |
| ORB | Oriented FAST and Rotated BRIEF |
| PUD | Physical Unclonability and Disorder |
| PUF | Physical Unclonable Function |
| UNO | Unique Object |
| FAR | False Acceptance Rate |
| FRR | False Rejection Rate |

References

1. Costa, V.; Sousa, A.; Reis, A. Preventing Wine Counterfeiting by Individual Cork Stopper Recognition Using Image Processing Technologies. J. Imag. 2018, 4, 54.

2. Joaquim Ramos Costa, V.; Jorge Miranda De Sousa, A.; Rosanete Lourenço Reis, A.; Gerard Celina Robert Loyens, D. Device And Method For Identifying A Cork Stopper, And Respective Kit. WO 2018/078600 A1, 2018. Available online: https://patents.google.com/patent/WO2018078600A1/en (accessed on 31 October 2018).

3. Lee, P.S.; Ewe, H.T. Individual Recognition Based on Human Iris Using Fractal Dimension Approach. In Biometric Authentication; Springer: Berlin/Heidelberg, Germany, 2004; pp. 467–474.

4. Kumar, A.; Passi, A. Comparison and combination of iris matchers for reliable personal authentication. Pattern Recog. 2010, 43, 1016–1026.

5. Rührmair, U.; Devadas, S.; Koushanfar, F. Security Based on Physical Unclonability and Disorder. In Introduction to Hardware Security and Trust; Springer: New York, NY, USA, 2012; pp. 65–102.

6. European Observatory on Infringements of Intellectual Property Rights. Infringement of Protected Geographical Indications for Wine, Spirits, Agricultural Products and Foodstuffs in the European Union; Technical Report; European Observatory on Infringements of Intellectual Property Rights; EUIPO: Alicante, Spain, 2016.

7. Medasani, S.; Srinivasa, N.; Owechko, Y. Active learning system for object fingerprinting. In Proceedings of the 2004 IEEE International Joint Conference on Neural Networks (IEEE Cat. No.04CH37541), Budapest, Hungary, 25–29 July 2004; Volume 1, pp. 345–350.

8. Buchanan, J.D.R.; Cowburn, R.P.; Jausovec, A.V.; Petit, D.; Seem, P.; Xiong, G.; Atkinson, D.; Fenton, K.; Allwood, D.A.; Bryan, M.T. Forgery: ‘fingerprinting’ documents and packaging. Nature 2005, 436, 475.

9. Sharma, A.; Subramanian, L.; Brewer, E.A. PaperSpeckle: Microscopic fingerprinting of paper. In Proceedings of the 18th ACM Conference on Computer and Communications Security—CCS ’11, New York, NY, USA, 17–21 October 2011; p. 99.

10. Takahashi, T.; Ishiyama, R. FIBAR: Fingerprint Imaging by Binary Angular Reflection for Individual Identification of Metal Parts. In Proceedings of the 2014 Fifth International Conference on Emerging Security Technologies, Lisbon, Portugal, 16–20 November 2014; pp. 46–51.

11. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

12. Takahashi, T.; Kudo, Y.; Ishiyama, R. Mass-produced parts traceability system based on automated scanning of “Fingerprint of Things”. In Proceedings of the 2017 Fifteenth IAPR International Conference on Machine Vision Applications (MVA), Nagoya, Japan, 8–12 May 2017; pp. 202–206.

13. Ishiyama, R.; Kudo, Y.; Takahashi, T. mIDoT: Micro identifier dot on things—A tiny, efficient alternative to barcodes, tags, or marking for industrial parts traceability. In Proceedings of the 2016 IEEE International Conference on Industrial Technology (ICIT), Taibei, Taiwan, 14–17 March 2016; pp. 781–786.

14. Kudo, Y.; Zwaan, H.; Takahashi, T.; Ishiyama, R.; Jonker, P. Tip-on-a-chip: Automatic Dotting with Glitter Ink Pen for Individual Identification of Tiny Parts. In Proceedings of the 9th ACM Multimedia Systems Conference on—MMSys ’18, New York, NY, USA, 12–15 June 2018; pp. 502–505.

15. Wigger, B.; Meissner, T.; Winkler, M.; Foerste, A.; Jetter, V.; Buchholz, A.; Zimmermann, A. Label-/tag-free traceability of electronic PCB in SMD assembly based on individual inherent surface patterns. Int. J. Adv. Manuf. Technol. 2018.

16. Pappu, R. Physical One-Way Functions. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2001.

17. Maes, R.; Verbauwhede, I. Physically Unclonable Functions: A Study on the State of the Art and Future Research Directions. In Towards Hardware-Intrinsic Security; Sadeghi, A.R., Naccache, D., Eds.; Number 71369 in Information Security and Cryptography; Springer: Berlin/Heidelberg, Germany, 2010; pp. 3–37.

18. Dolev, S.; Krzywiecki, L.; Panwar, N.; Segal, M. Optical PUF for Non Forwardable Vehicle Authentication. In Proceedings of the 2015 IEEE 14th International Symposium on Network Computing and Applications, Cambridge, MA, USA, 28–30 September 2015; pp. 204–207.

19. Pappu, R. Physical One-Way Functions. Science 2002, 297, 2026–2030.

20. Rührmair, U.; Hilgers, C.; Urban, S.; Weiershäuser, A.; Dinter, E.; Forster, B.; Jirauschek, C. Optical PUFs Reloaded. 2013. Available online: http://www.crypto.rub.de/imperia/md/crypto/kiltz/ulrich_paper_48.pdf (accessed on 31 October 2018).

21. Shariati, S.; Koeune, F.; Standaert, F.X. Security Analysis of Image-Based PUFs for Anti-counterfeiting. In Communications and Multimedia Security; De Decker, B., Chadwick, D.W., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 26–38.

22. Shariati, S.; Standaert, F.X.; Jacques, L.; Macq, B. Analysis and experimental evaluation of image-based PUFs. J. Cryptogr. Eng. 2012, 2, 189–206.

23. Tuyls, P.; Schrijen, G.J.; Škorić, B.; van Geloven, J.; Verhaegh, N.; Wolters, R. Read-Proof Hardware from Protective Coatings. Proc. Cryptogr. Hardw. Embed. Syst. 2006, 369–383.

24. Škorić, B.; Maubach, S.; Kevenaar, T.; Tuyls, P. Information-theoretic analysis of capacitive physical unclonable functions. J. Appl. Phys. 2006, 100.

25. Gassend, B.; Clarke, D.; van Dijk, M.; Devadas, S. Silicon Physical Random Functions. In Proceedings of the 9th ACM Conference on Computer and Communications Security, New York, NY, USA, 17–20 May 2002; pp. 148–160.

26. Guajardo, J.; Kumar, S.S.; Schrijen, G.J.; Tuyls, P. FPGA Intrinsic PUFs and Their Use for IP Protection. Lect. Notes Comput. Sci. 2007, 4727, 63–80.

27. Holcomb, D. Initial SRAM state as a fingerprint and source of true random numbers for RFID tags. In Proceedings of the Conference on RFID Security, Graz, Austria, 11–13 July 2007; pp. 1–12.

28. Arjona, R.; Prada-Delgado, M.; Arcenegui, J.; Baturone, I. A PUF- and Biometric-Based Lightweight Hardware Solution to Increase Security at Sensor Nodes. Sensors 2018, 18, 2429.

29. Gong, M.; Liu, H.; Min, R.; Liu, Z. Pitfall of the Strongest Cells in Static Random Access Memory Physical Unclonable Functions. Sensors 2018, 18, 1776.

30. Bulens, P.; Standaert, F.X.; Quisquater, J.J. How to strongly link data and its medium: The paper case. IET Inf. Secur. 2010, 4, 125–136.

31. Lee, J.; Lim, D.L.D.; Gassend, B.; Suh, G.; Dijk, M.V.; Devadas, S. A technique to build a secret key in integrated circuits for identification and authentication applications. In Proceedings of the 2004 Symposium on VLSI Circuits, Tokyo, Japan, 17–19 June 2004; pp. 176–179.

32. Kursawe, K.; Sadeghi, A.R.; Schellekens, D.; Skoric, B.; Tuyls, P. Reconfigurable physical unclonable functions—Enabling technology for tamper-resistant storage. In Proceedings of the 2009 IEEE International Workshop on Hardware-Oriented Security and Trust, HOST, San Francisco, CA, USA, 29 October–5 November 2009; pp. 22–29.

33. Lu, Z.; Li, D.; Liu, H.; Gong, M.; Liu, Z. An Anti-Electromagnetic Attack PUF Based on a Configurable Ring Oscillator for Wireless Sensor Networks. Sensors 2017, 17, 2118.

34. Cao, Y.; Zhao, X.; Ye, W.; Han, Q.; Pan, X. A Compact and Low Power RO PUF with High Resilience to the EM Side-Channel Attack and the SVM Modelling Attack of Wireless Sensor Networks. Sensors 2018, 18, 322.

35. Xu, H.; Ding, J.; Li, P.; Zhu, F.; Wang, R. A Lightweight RFID Mutual Authentication Protocol Based on Physical Unclonable Function. Sensors 2018, 18, 760.

36. Shariati, S. Image-Based Physical Unclonable Functions for Anti-Counterfeiting. Ph.D. Thesis, Catholic University of Louvain, Louvain-la-Neuve, Belgium, 2013.

37. Valehi, A.; Razi, A.; Cambou, B.; Yu, W.; Kozicki, M. A graph matching algorithm for user authentication in data networks using image-based physical unclonable functions. In Proceedings of the 2017 Computing Conference, London, UK, 15–17 May 2017; pp. 863–870.

38. Wigger, B.; Meissner, T.; Förste, A.; Jetter, V.; Zimmermann, A. Using unique surface patterns of injection moulded plastic components as an image based Physical Unclonable Function for secure component identification. Sci. Rep. 2018, 8, 4738.

39. Dachowicz, A.; Chaduvula, S.C.; Atallah, M.; Panchal, J.H. Microstructure-Based Counterfeit Detection in Metal Part Manufacturing. JOM 2017, 69, 2390–2396.

40. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

41. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

42. Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Fourth Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 147–151.

43. Punithavathi, P.; Geetha, S.; Sasikala, S. Generation of Cancelable Iris Template Using Bi-level Transformation. In Proceedings of the 6th International Conference on Bioinformatics and Biomedical Science—ICBBS ’17, New York, NY, USA, 22–24 June 2017; pp. 94–100.

44. Awalkar, K.V.; Kanade, S.G.; Jadhav, D.V.; Ajmera, P.K. A multi-modal and multi-algorithmic biometric system combining iris and face. In Proceedings of the 2015 International Conference on Information Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 496–501.

45. Mohamed, E.; Ahmed, F.; Rehan, S.E.; Mohamed, A.A. Rough set analysis and cloud model algorithm to automated knowledge acquisition for classification Iris to achieve high security. In Proceedings of the 2011 11th International Conference on Hybrid Intelligent Systems (HIS), Malacca, Malaysia, 5–8 December 2011; pp. 55–60.

46. Belcher, C.; Du, Y. Region-based SIFT approach to iris recognition. Opt. Lasers Eng. 2009, 47, 139–147.

47. Marciniak, T.; Dąbrowski, A.; Chmielewska, A.; Krzykowska, A. Analysis of Particular Iris Recognition Stages. In Multimedia Communications, Services and Security; Springer: Berlin/Heidelberg, Germany, 2011; pp. 198–206.

48. Taur, J. Iris recognition based on relative variation analysis with feature selection. Opt. Eng. 2008, 47, 097202.

49. Wang, Y.; Han, J. Iris Recognition Using Support Vector Machines. In Advances in Neural Networks—ISNN; Springer: Berlin/Heidelberg, Germany, 2004; pp. 622–628.

50. Umer, S.; Dhara, B.C.; Chanda, B. Texture code matrix-based multi-instance iris recognition. Pattern Anal. Appl. 2016, 19, 283–295.

51. Rahulkar, A.D.; Holambe, R.S. Half-Iris Feature Extraction and Recognition Using a New Class of Biorthogonal Triplet Half-Band Filter Bank and Flexible k-out-of-n: A Postclassifier. IEEE Trans. Inf. Forensics Secur. 2012, 7, 230–240.

52. Kumar, A.; Hanmandlu, M.; Das, A.; Gupta, H.M. Biometric based personal authentication using fuzzy binary decision tree. In Proceedings of the 2012 5th IAPR International Conference on Biometrics (ICB), New Delhi, India, 29 March–1 April 2012; pp. 396–401.

53. Zhou, Y.; Kumar, A. Personal Identification from Iris Images Using Localized Radon Transform. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2840–2843.

54. Costa, V.; Sousa, A.; Reis, A. CBIR for a wine anti-counterfeiting system using imagery from cork stoppers. In Proceedings of the Iberian Conference on Information Systems and Technologies, CISTI, Cáceres, Spain, 13–16 June 2018; Volume 2018, pp. 1–6.

55. Shariati, S.; Jacques, L.; Standaert, F.X.; Macq, B.; Salhi, M.A.; Antoine, P. Randomly driven fuzzy key extraction of unclonable images. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 4329–4332.

56. Shariati, S.; Standaert, F.X.; Jacques, L.; Macq, B.; Salhi, M.A.; Antoine, P. Random Profiles of Laser Marks. In Proceedings of the 31st WIC Symposium on Information Theory in the Benelux, Rotterdam, The Netherlands, 11–12 May 2010; pp. 27–34.

57. Costa, M.; Sanchez, E.; Sanchez, C. Caracterização de espumas plásticas e cortiça para aplicação em um sistema de segurança acoplado ao parachoque frontal veicular. Rev. Ciência Tecnol. 2017, 20, 36.

58. G154-16. Standard Practice for Operating Fluorescent Ultraviolet (UV) Lamp Apparatus for Exposure of Nonmetallic Materials; Technical Report; ASTM: West Conshohocken, PA, USA, 2016.

| Methods | Acc (%) | EER (%) | FAR (%) | FRR (%) |
|---|---|---|---|---|
| Proposed approach: RIOTA’s implementation | 100.0 | 0.00 | 0.00 | 0.00 |

| Methods | Acc (%) | EER (%) | FAR (%) | FRR (%) |
|---|---|---|---|---|
| Discrete Fourier Transform (DFT) + Hadamard Transform (HT) [43] | - | 1.2 | - | - |
| Gabor [44] | - | 9.81 | - | - |
| Decision Tree Construction based on Rough Set Theory under Characteristic Relation (DTCCRSCR) [45] | 98.649 | - | - | - |
| Scale-Invariant Feature Transform (SIFT) [46] | - | 2.1 | - | - |
| Logarithmic Gabor filters [47] | - | - | 3.25 | 3.03 |
| Robust Principal Component Analysis (PCA) [48] | - | 0.02 | - | - |
| Support Vector Machine (SVM) [49] | - | 2.63 | - | - |
| Proposed approach: RIOTA’s (Recognition of Individual Objects using Tagless Approaches) implementation | 98.14 | 1.89 | - | - |

| Methods | Acc (%) | EER (%) | FAR (%) | FRR (%) |
|---|---|---|---|---|
| Texture code matrix [50] | 99.96 | 0.00 | - | - |
| Triplet Half-Band Filter Bank (THFB) + k-out-of-n [51] | 99.84 | - | 0.16 | 0.15 |
| Fuzzy Binary Decision Tree (FBDT) [52] | - | - | 0.0250 | 8.1081 |
| Localized Radon Transform (LRT) [53] | - | 0.53 | - | - |
| Log-Gabor + Haar wavelet [4] | - | 2.59 | - | - |
| Discrete Fourier Transform (DFT) + Hadamard Transform (HT) [43] | - | 3.3 | - | - |
| Proposed approach: RIOTA’s (Recognition of Individual Objects using Tagless Approaches) implementation | 99.76 | 0.311 | - | - |

| Methods | Acc (%) | EER (%) | FAR (%) | FRR (%) |
|---|---|---|---|---|
| Texture code matrix [50] | 100 | 0.00 | - | - |
| Triplet Half-Band Filter Bank (THFB) + k-out-of-n [51] | 98.06 | - | 1.99 | 1.88 |
| Proposed approach: RIOTA’s (Recognition of Individual Objects using Tagless Approaches) implementation | 97.59 | 2.45 | - | - |

| Databases | Detection Time (ms) | Extraction Time (ms) | Matching Time (ms) | Total Time (ms) |
|---|---|---|---|---|
| Cork database | 7.88 | 5.99 | 56.1 | 70.0 |
| CASIA-IrisV1 database | 2.60 | 3.64 | 32.3 | 35.3 |
| IITD database | 4.17 | 4.38 | 37.5 | 46.5 |
| MMU1 database | 2.34 | 3.40 | 12.5 | 18.2 |
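The timing table splits each comparison into detection, extraction, and matching. As a minimal sketch of how such per-stage timings might be collected, the following wraps three stage functions with a millisecond timer. The `detect`, `extract`, and `match` callables are hypothetical stand-ins, and the sketch assumes the total is the plain sum of the three stages, which the rows of the table follow only approximately.

```python
import time


def timed(stage, *args):
    """Run one stage and return (result, elapsed time in milliseconds)."""
    start = time.perf_counter()
    result = stage(*args)
    return result, (time.perf_counter() - start) * 1000.0


def profile_comparison(image, reference_descriptors, detect, extract, match):
    """Time the three stages of one comparison (hypothetical stage callables)."""
    keypoints, detection_ms = timed(detect, image)
    descriptors, extraction_ms = timed(extract, image, keypoints)
    score, matching_ms = timed(match, descriptors, reference_descriptors)
    return {
        "detection_ms": detection_ms,
        "extraction_ms": extraction_ms,
        "matching_ms": matching_ms,
        # Assumption: total time is the sum of the three measured stages.
        "total_ms": detection_ms + extraction_ms + matching_ms,
        "score": score,
    }


# Stand-in stages so the sketch runs without an image library.
def detect(image):
    """Toy detector: indices of 'bright' pixels act as keypoints."""
    return [i for i, pixel in enumerate(image) if pixel > 128]


def extract(image, keypoints):
    """Toy extractor: the pixel value at each keypoint acts as its descriptor."""
    return [image[i] for i in keypoints]


def match(descriptors, reference):
    """Toy matcher: count descriptors shared with the reference set."""
    return len(set(descriptors) & set(reference))


report = profile_comparison([10, 200, 130, 90, 255], [200, 255, 7], detect, extract, match)
print(report["score"])  # 2
```

In practice each measurement would be averaged over many comparisons, since a single `perf_counter` reading for a millisecond-scale stage is noisy.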

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Costa, V.; Sousa, A.; Reis, A. Cork as a Unique Object: Device, Method, and Evaluation. Appl. Sci. 2018, 8, 2150. https://doi.org/10.3390/app8112150
