FMnet: Iris Segmentation and Recognition by Using Fully and Multi-Scale CNN for Biometric Security

Abstract

Featured Application


1. Introduction

Why Deep Learning Is Used in Iris Recognition

2. Background

3. Related Work

3.1. Convolutional Neural Networks (CNNs)

3.1.1. Convolution Layer

3.1.2. Transfer Learning

3.1.3. Max-Aggregation Layer

3.1.4. Classic Neural Layer

3.2. Different Architectures of Convolutional Neural Networks (CNNs)

4. Proposed Method

4.1. Pre-Processing of Data

4.1.1. Segmentation Using FCN

4.1.2. Normalization

4.1.3. MCNN Feature Extraction

- Input layer: an image of size 28 × 28;

- First convolution layer: 6 convolution kernels (filters) of size 5 × 5, producing 6 convolutional maps of size 24 × 24;

- Subsampling layer: 6 maps; kernel size 2 × 2; map size 12 × 12;

- Second convolution layer: 6 convolution kernels (filters) of size 5 × 5, producing 6 convolutional maps of size 8 × 8;

- Subsampling layer: 6 maps; kernel size 2 × 2; map size 4 × 4;

- Third convolution layer: 6 convolution kernels (filters) of size 4 × 4, producing 6 convolutional maps of size 1 × 1.
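The 28 × 28 branch listed above can be sketched in plain Python to show how each layer shrinks the maps. This is a minimal illustration under stated assumptions, not the authors' implementation: the weights are random, no bias or activation function is applied (the list does not specify them), and every filter in the second and third convolution layers is assumed to span all six input maps. The helper names `conv2d_valid`, `max_pool`, `conv_layer` and `mcnn_28` are hypothetical.

```python
import random

def conv2d_valid(maps, kernels):
    """Valid convolution (stride 1, no padding) of C input maps with C kernels.

    As in most CNN code this is cross-correlation (kernels are not flipped).
    Each map is filtered by its own kernel and the results are summed,
    giving one output map of size (H - k + 1) x (W - k + 1).
    """
    k = len(kernels[0])
    h, w = len(maps[0]), len(maps[0][0])
    out = [[0.0] * (w - k + 1) for _ in range(h - k + 1)]
    for m, ker in zip(maps, kernels):
        for i in range(h - k + 1):
            for j in range(w - k + 1):
                out[i][j] += sum(m[i + a][j + b] * ker[a][b]
                                 for a in range(k) for b in range(k))
    return out

def max_pool(m, p):
    """Non-overlapping p x p max-pooling (stride p)."""
    return [[max(m[i + a][j + b] for a in range(p) for b in range(p))
             for j in range(0, len(m[0]) - p + 1, p)]
            for i in range(0, len(m) - p + 1, p)]

def conv_layer(maps, n_filters, k, rng):
    """n_filters random k x k filters; ASSUMPTION: each filter spans all input maps."""
    layer = []
    for _ in range(n_filters):
        kernels = [[[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(k)]
                   for _ in maps]
        layer.append(conv2d_valid(maps, kernels))
    return layer

def shape(maps):
    """(number of maps, height, width)."""
    return (len(maps), len(maps[0]), len(maps[0][0]))

def mcnn_28(image, rng):
    """Hypothetical 28 x 28 branch; no bias or activation (not given in the text)."""
    steps = [
        lambda x: conv_layer(x, 6, 5, rng),       # first convolution: 6 maps, 24 x 24
        lambda x: [max_pool(m, 2) for m in x],    # subsampling: 6 maps, 12 x 12
        lambda x: conv_layer(x, 6, 5, rng),       # second convolution: 6 maps, 8 x 8
        lambda x: [max_pool(m, 2) for m in x],    # subsampling: 6 maps, 4 x 4
        lambda x: conv_layer(x, 6, 4, rng),       # third convolution: 6 maps, 1 x 1
    ]
    x, trace = [image], []
    for step in steps:
        x = step(x)
        trace.append(shape(x))
    return x, trace

rng = random.Random(0)
image = [[rng.random() for _ in range(28)] for _ in range(28)]
features, trace = mcnn_28(image, rng)
print(trace)  # [(6, 24, 24), (6, 12, 12), (6, 8, 8), (6, 4, 4), (6, 1, 1)]
```

Because the last layer yields six 1 × 1 maps, the branch output can be read as a 6-dimensional feature vector, e.g. `[m[0][0] for m in features]`.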

5. Experimental Results and Discussion

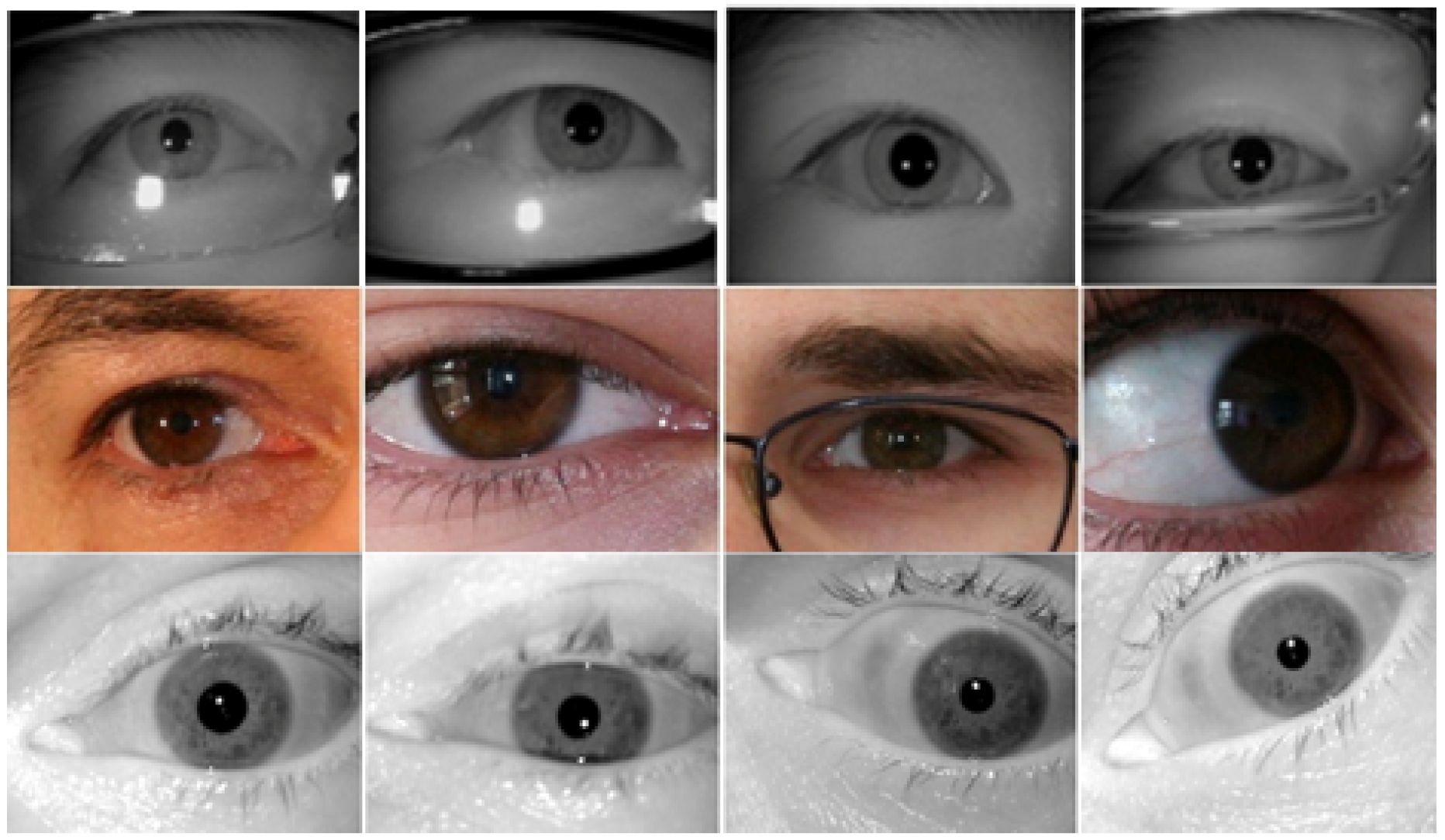

5.1. Databases

5.1.1. CASIA-Iris-Thousand

5.1.2. UBIRIS.v2

5.1.3. LG2200

5.2. Discussion

- The feature-extraction design is robust and inexpensive to compute.

- It performs the selection, computation and evaluation of the relevant features, and of their relevance for class separation, at different levels.

- It avoids the difficulties that arise in the delicate feature-design step.

5.3. Results

5.4. Performance Metric and Baseline Method

5.5. Performance Analyses

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| DNN | Deep Neural Network |
| DCNN | Deep Convolutional Neural Network |
| FCN | Fully Convolutional Network |
| FCDNN | Fully Convolutional Deep Neural Network |
| GPUs | Graphics Processing Units |
| HMM | Hidden Markov Model |
| HCNNs | Heterogeneous Convolutional Neural Networks |
| ILSVRC | ImageNet Large Scale Visual Recognition Challenge |
| MCNN | Multi-scale Convolutional Neural Network |
| RNN | Recurrent Neural Network |
| SVM | Support Vector Machine |

References

1. Zhu, C.; Sheng, W. Multi-sensor fusion for human daily activity recognition in robot-assisted living. In Proceedings of the 4th ACM/IEEE International Conference on Human Robot Interaction, La Jolla, CA, USA, 9–13 March 2009; pp. 303–304.
2. Ghosh, A.; Riccardi, G. Recognizing human activities from smartphone sensor signals. In Proceedings of the ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 865–868.
3. Rahman, S.A.; Merck, C.; Huang, Y.; Kleinberg, S. Unintrusive eating recognition using Google Glass. In Proceedings of the 9th International Conference on Pervasive Computing Technologies for Healthcare, Istanbul, Turkey, 20–23 May 2015; pp. 108–111.
4. Bengio, Y.; Goodfellow, I.; Courville, A. Deep Learning; MIT Press: Cambridge, London, UK, 2015; in preparation.
5. Ouchi, K.; Doi, M. Smartphone-based monitoring system for activities of daily living for elderly people and their relatives etc. In Proceedings of the 2013 ACM Conference on Pervasive and Ubiquitous Computing Adjunct Publication, Zurich, Switzerland, 8–12 September 2013; pp. 103–106.
6. Aparicio, M., IV; Levine, D.S.; McCulloch, W.S. Why are neural networks relevant to higher cognitive function. In Neural Network Knowledge Represent Inference; Lawrence Erlbaum Associates, Inc.: Hillsdale, NJ, USA, 1994; pp. 1–26.
7. Parizeau, M. Neural Networks; GIF-21140 and GIF-64326; Laval University: Québec, QC, Canada, 2004.
8. Karpathy, A. Neural Networks Part 1: Setting Up the Architecture. In Notes for CS231n Convolutional Neural Networks for Visual Recognition; Stanford University: Stanford, CA, USA, 2016.
9. Ng, A. Machine Learning Yearning. In Technical Strategy for AI Engineers, In the Era of Deep Learning; Stanford University: Stanford, CA, USA, 2016.
10. LeCun, Y.; Jackel, L.D.; Bottou, L.; Cortes, C.; Denker, J.S.; Drucker, H.; Guyon, I.; Muller, U.A.; Sackinger, E.; Simard, P.; et al. Learning algorithms for classification: A comparison on handwritten digit recognition. Neural Netw. Stat. Mech. Perspect. 1995, 261–276.
11. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.
12. Krizhevsky, A.; Hinton, G.E. Convolutional Deep Belief Networks on CIFAR-10. Available online: https://www.cs.toronto.edu/~kriz/conv-cifar10-aug2010.pdf (accessed on 1 April 2017).
13. Penatti, O.A.B.; Nogueira, K.; dos Santos, A.J. Do deep features generalize from everyday objects to remote sensing and aerial scenes domains? In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 44–51.
14. Lagrange, A.; Le Saux, B.; Beaupere, A.; Boulch, A.; Chan-Hon-Tong, A.; Herbin, S.; Randrianarivo, H.; Ferecatu, M. Benchmarking classification of Earth-observation data: From learning explicit features to convolutional networks. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 4173–4176.
15. Vo, A.V.; Truong-Hong, L.; Laefer, D.F.; Tiede, D.; d’Oleire-Oltmanns, S.; Baraldi, A.; Shimoni, M.; Moser, G.; Tuia, D. Processing of Extremely High Resolution LiDAR and RGB Data: Outcome of the 2015 IEEE GRSS Data Fusion Contest, Part B: 3-D Contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 5560–5575.
16. Zhao, W.; Du, S. Learning multiscale and deep representations for classifying remotely sensed imagery. ISPRS J. Photogramm. Remote Sens. 2016, 113, 155–165.
17. Marmanis, D.; Wegner, J.D.; Galliani, S.; Schindler, K.; Datcu, M.; Stilla, U. Semantic Segmentation of Aerial Images with an Ensemble of CNNs. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 473–480.
18. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.
19. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.
20. Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.
21. Zhao, J.; Mathieu, M.; Goroshin, R.; LeCun, Y. Stacked What-Where Auto-encoders. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, USA, 2–4 May 2016.
22. Noh, H.; Hong, S.; Han, B. Learning Deconvolution Network for Semantic Segmentation. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Washington, DC, USA, 7–13 December 2015; pp. 1520–1528.
23. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017.
24. Zheng, S.; Jayasumana, S.; Romera-Paredes, B.; Vineet, V.; Su, Z.; Du, D.; Huang, C.; Torr, P.H. Conditional Random Fields as Recurrent Neural Networks. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Washington, DC, USA, 7–13 December 2015; pp. 1529–1537.
25. Arnab, A.; Jayasumana, S.; Zheng, V.; Torr, P. Higher Order Conditional Random Fields in Deep Neural Networks. Eur. Conf. Comput. Vis. 2016.
26. Hasan, S.A.; Ling, Y.; Liu, J.; Sreenivasan, R.; Anand, S.; Arora, T.R.; Datla, V.V.; Lee, K.; Qadir, A.; Swisher, C.; et al. PRNA at ImageCLEF 2017 Caption Prediction and Concept Detection Tasks. In Proceedings of the Working Notes of CLEF 2017–Conference and Labs of the Evaluation Forum, Dublin, Ireland, 11–14 September 2017.
27. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR, Boston, MA, USA, 7–12 June 2015; pp. 1–9.
28. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
29. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
30. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.
31. Donahue, J.; Jia, Y.; Vinyals, O.; Hoffman, J.; Zhang, N.; Tzeng, E.; Darrell, T. DeCAF: A deep convolutional activation feature for generic visual recognition. In Proceedings of the 31st International Conference on Machine Learning, Beijing, China, 21–26 June 2014.
32. Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 818–833.
33. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 142–158.
34. Nebauer, C. Evaluation of convolutional neural networks for visual recognition. IEEE Trans. Neural Netw. 1998, 9, 685–696.
35. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.
36. Hubel, D.H.; Wiesel, T. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. 1962, 160, 106–154.
37. Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? In Proceedings of the NIPS 2014: Neural Information Processing Systems Conference, Montreal, QC, Canada, 8–13 December 2014; pp. 3320–3328.
38. Razavian, A.S.; Azizpour, H.; Sullivan, J.; Carlsson, S. CNN features off-the-shelf: An astounding baseline for recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 24–27 June 2014; pp. 806–813.
39. Shankar, S.; Garg, V.K.; Cipolla, R. Deep-carving: Discovering visual attributes by carving deep neural nets. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3403–3412.
40. Huval, B.; Coates, A.; Ng, A. Deep learning for class-generic object detection. arXiv 2013, arXiv:1312.6885.
41. Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.
42. Scherer, D.; Müller, A.; Behnke, S. Evaluation of pooling operations in convolutional architectures for object recognition. In International Conference on Artificial Neural Networks; Springer: Berlin/Heidelberg, Germany, 2010; pp. 92–101.
43. Bowyer, K.W.; Hollingsworth, K.; Flynn, P.J. Image understanding for iris biometrics: A survey. Comput. Vis. Image Understand. 2008, 110, 281–307.
44. Bowyer, K.W.; Hollingsworth, K.; Flynn, P.J. A survey of iris biometrics research 2008–2010. In Handbook of Iris Recognition; Springer: London, UK, 2013.
45. Daugman, J. The importance of being random: Statistical principles of iris recognition. Pattern Recognit. 2003, 36, 279–291.
46. Meier, U.; Claudiu Ciresan, D.; Gambardella, L.M.; Schmidhuber, J. Better digit recognition with a committee of simple neural nets. In Proceedings of the 2011 International Conference on Document Analysis and Recognition, Beijing, China, 18–21 September 2011; pp. 1250–1254.
47. Ueda, N. Optimal linear combination of neural networks for improving classification performance. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 18–21.
48. Chinese Academy of Sciences; Institute of Automation. Biometrics Ideal Test. CASIA Iris Database. Available online: http://biometrics.idealtest.org/ (accessed on 1 April 2017).
49. Proença, H.; Alexandre, L.A. UBIRIS: A noisy iris image database. In Proceedings of Image Analysis and Processing (ICIAP 2005); Springer: Berlin/Heidelberg, Germany, 2005; Volume 3617, pp. 970–977.
50. Proença, H.; Filipe, S.; Santos, R.; Oliveira, J.; Alexandre, L.A. The UBIRIS.v2: A database of visible wavelength iris images captured on-the-move and at-a-distance. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1529–1535.
51. Phillips, P.J.; Scruggs, W.T.; O’Toole, A.J.; Flynn, P.J.; Bowyer, K.W.; Schott, C.L.; Sharpe, M. FRVT 2006 and ICE 2006 large-scale experimental results. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 831–846.
52. Tang, Y. Deep Learning using Linear Support Vector Machines. In Proceedings of the International Conference on Machine Learning (ICML), Atlanta, GA, USA, 16–21 June 2013.
53. Tobji, R.; Di, W.; Ayoub, N.; Haouassi, S. Efficient Iris Pattern Recognition method by using Adaptive Hamming Distance and 1D Log-Gabor Filter. (IJACSA) Int. J. Adv. Comput. Sci. Appl. 2018, 9, 662–669.
54. Liu, N.; Zhang, M.; Li, H.; Sun, Z.; Tan, T. DeepIris: Learning pairwise filter bank for heterogeneous iris verification. Pattern Recognit. Lett. 2016, 82, 154–161.
55. Zhao, Z.; Kumar, A. Towards More Accurate Iris Recognition Using Deeply Learned Spatially Corresponding Features. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3809–3818.
56. Bazrafkan, S.; Thavalengal, S.; Corcoran, P. An End to End Deep Neural Network for Iris Segmentation in Unconstrained Scenarios. Neural Netw. 2018, 106, 79–95.
57. Nguyen, K.; Fookes, C.; Ross, A.; Sridharan, S. Iris Recognition With Off-the-Shelf CNN Features: A Deep Learning Perspective. IEEE Access 2018, 6, 18848–18855.

| Layer | Type | 80 × 80 input | 56 × 56 input | 40 × 40 input | 28 × 28 input |
|---|---|---|---|---|---|
| C1 | Convolution kernel | 7 × 7 | 7 × 7 | 5 × 5 | 5 × 5 |
| | Output map size | 74 × 74 | 50 × 50 | 36 × 36 | 24 × 24 |
| P2 | Max-pooling kernel | 2 × 2 | 2 × 2 | 2 × 2 | 2 × 2 |
| | Output map size | 37 × 37 | 25 × 25 | 18 × 18 | 12 × 12 |
| C3 | Convolution kernel | 6 × 6 | 6 × 6 | 5 × 5 | 5 × 5 |
| | Output map size | 32 × 32 | 20 × 20 | 14 × 14 | 8 × 8 |
| P4 | Max-pooling kernel | 4 × 4 | 4 × 4 | 2 × 2 | 2 × 2 |
| | Output map size | 8 × 8 | 5 × 5 | 7 × 7 | 4 × 4 |
| C5 | Convolution kernel | 8 × 8 | 5 × 5 | 7 × 7 | 4 × 4 |
| | Output map size | 1 × 1 | 1 × 1 | 1 × 1 | 1 × 1 |
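Each column of the table follows from two size rules: a valid convolution with a k × k kernel maps an n × n map to (n − k + 1) × (n − k + 1), and non-overlapping p × p max-pooling maps it to (n / p) × (n / p). The sketch below checks all four input scales with those rules. It assumes stride 1 and no padding for convolutions and a stride equal to the window size for pooling; `SCALES` and `trace` are hypothetical names, and the kernel sizes are copied from the table.

```python
# Kernel sizes copied from the table: (C1, P2, C3, P4, C5) for each input scale.
SCALES = {
    80: (7, 2, 6, 4, 8),
    56: (7, 2, 6, 4, 5),
    40: (5, 2, 5, 2, 7),
    28: (5, 2, 5, 2, 4),
}
OPS = ("conv", "pool", "conv", "pool", "conv")

def trace(size, kernels):
    """Return the square map size after each layer, starting with the input size."""
    sizes = [size]
    for op, k in zip(OPS, kernels):
        if op == "conv":
            size = size - k + 1          # valid convolution, stride 1
        else:
            assert size % k == 0, "pooling window does not tile the map"
            size = size // k             # non-overlapping max-pooling, stride k
        sizes.append(size)
    return sizes

for scale, kernels in SCALES.items():
    print(scale, trace(scale, kernels))
# 80 [80, 74, 37, 32, 8, 1]
# 56 [56, 50, 25, 20, 5, 1]
# 40 [40, 36, 18, 14, 7, 1]
# 28 [28, 24, 12, 8, 4, 1]
```

Every pooling window divides its input evenly, and the C5 kernel in each column equals the P4 output size, which is why all four scales end in a 1 × 1 map.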

Error Rate (%)

| Method | UBIRIS.v2 | LG2200 | CASIA-Iris-Thousand |
|---|---|---|---|
| | 1.07 | 1.35 | 1.21 |
| | 1.02 | 1.29 | 1.10 |
| | 0.98 | 1.17 | 1.02 |
| | 0.89 | 1.11 | 0.93 |
| SVM | 0.95 | 1.07 | 0.79 |
| MCNN | 0.85 | 1.00 | 0.71 |
| FCN | 0.56 | 0.96 | 0.63 |
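The error-rate table can be read more easily as a relative reduction against one row. The sketch below is only arithmetic on the published numbers: it uses the SVM row as the reference (an arbitrary choice for illustration), leaves out the rows that have no method label, and the helper names `best_method` and `relative_reduction` are hypothetical.

```python
# Error rates (%) copied from the table; only rows with a method label are included.
ERROR_RATES = {
    "SVM":  {"UBIRIS.v2": 0.95, "LG2200": 1.07, "CASIA-Iris-Thousand": 0.79},
    "MCNN": {"UBIRIS.v2": 0.85, "LG2200": 1.00, "CASIA-Iris-Thousand": 0.71},
    "FCN":  {"UBIRIS.v2": 0.56, "LG2200": 0.96, "CASIA-Iris-Thousand": 0.63},
}
DATABASES = ("UBIRIS.v2", "LG2200", "CASIA-Iris-Thousand")

def best_method(db):
    """Method with the lowest error rate on database db."""
    return min(ERROR_RATES, key=lambda method: ERROR_RATES[method][db])

def relative_reduction(db, method, baseline="SVM"):
    """Error reduction of method relative to baseline, in percent of the baseline error."""
    base = ERROR_RATES[baseline][db]
    return 100.0 * (base - ERROR_RATES[method][db]) / base

for db in DATABASES:
    m = best_method(db)
    print(f"{db}: {m} {ERROR_RATES[m][db]:.2f}% error, "
          f"{relative_reduction(db, m):.1f}% lower than SVM")
```

With these figures FCN has the lowest error on all three databases, roughly 41%, 10% and 20% below the SVM row on UBIRIS.v2, LG2200 and CASIA-Iris-Thousand respectively.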

Recognition Accuracy (%)

| Authors | Method | UBIRIS.v2 | LG2200 | CASIA-Iris-Thousand |
|---|---|---|---|---|
| Liu et al. [54] | HCNNs and MFCNs | 98.40 | - | - |
| Zhao et al. [55] | AttNet+FCN-peri | 98.79 | - | - |
| Bazrafkan et al. [56] | End-to-end FCDNN | 99.30 | - | - |
| Nguyen et al. [57] | CNN | - | 90.7 | 91.1 |
| Proposed method | FCN + MCNN | 99.41 | 93.17 | 95.63 |
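Small accuracy gaps near 100% can hide large differences in error. The sketch below computes, for each database, the margin in percentage points over the best prior result listed in the table and the corresponding relative drop in error, taking error as 100 minus accuracy. It is illustrative arithmetic on the table's numbers only and does not account for any differences in evaluation protocol between the cited works; `PRIOR_BEST`, `margin_pp` and `error_reduction` are hypothetical names.

```python
# Recognition accuracy (%) copied from the table; "-" entries are simply absent.
PRIOR_BEST = {
    "UBIRIS.v2": max(98.40, 98.79, 99.30),   # Liu [54], Zhao [55], Bazrafkan [56]
    "LG2200": 90.7,                           # Nguyen [57]
    "CASIA-Iris-Thousand": 91.1,              # Nguyen [57]
}
PROPOSED = {"UBIRIS.v2": 99.41, "LG2200": 93.17, "CASIA-Iris-Thousand": 95.63}

def margin_pp(db):
    """Accuracy gain over the best listed prior result, in percentage points."""
    return PROPOSED[db] - PRIOR_BEST[db]

def error_reduction(db):
    """Relative drop in error (100 - accuracy), in percent of the prior error."""
    prior_err = 100.0 - PRIOR_BEST[db]
    new_err = 100.0 - PROPOSED[db]
    return 100.0 * (prior_err - new_err) / prior_err

for db in PROPOSED:
    print(f"{db}: +{margin_pp(db):.2f} pp, {error_reduction(db):.1f}% fewer errors")
```

On UBIRIS.v2 the 0.11-point gain corresponds to about 16% fewer errors, while on CASIA-Iris-Thousand the 4.53-point gain roughly halves the error of the listed baseline.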

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tobji, R.; Di, W.; Ayoub, N. FMnet: Iris Segmentation and Recognition by Using Fully and Multi-Scale CNN for Biometric Security. Appl. Sci. 2019, 9, 2042. https://doi.org/10.3390/app9102042
