Landmark Generation in Visual Place Recognition Using Multi-Scale Sliding Window for Robotics

Abstract

1. Introduction

2. Related Work

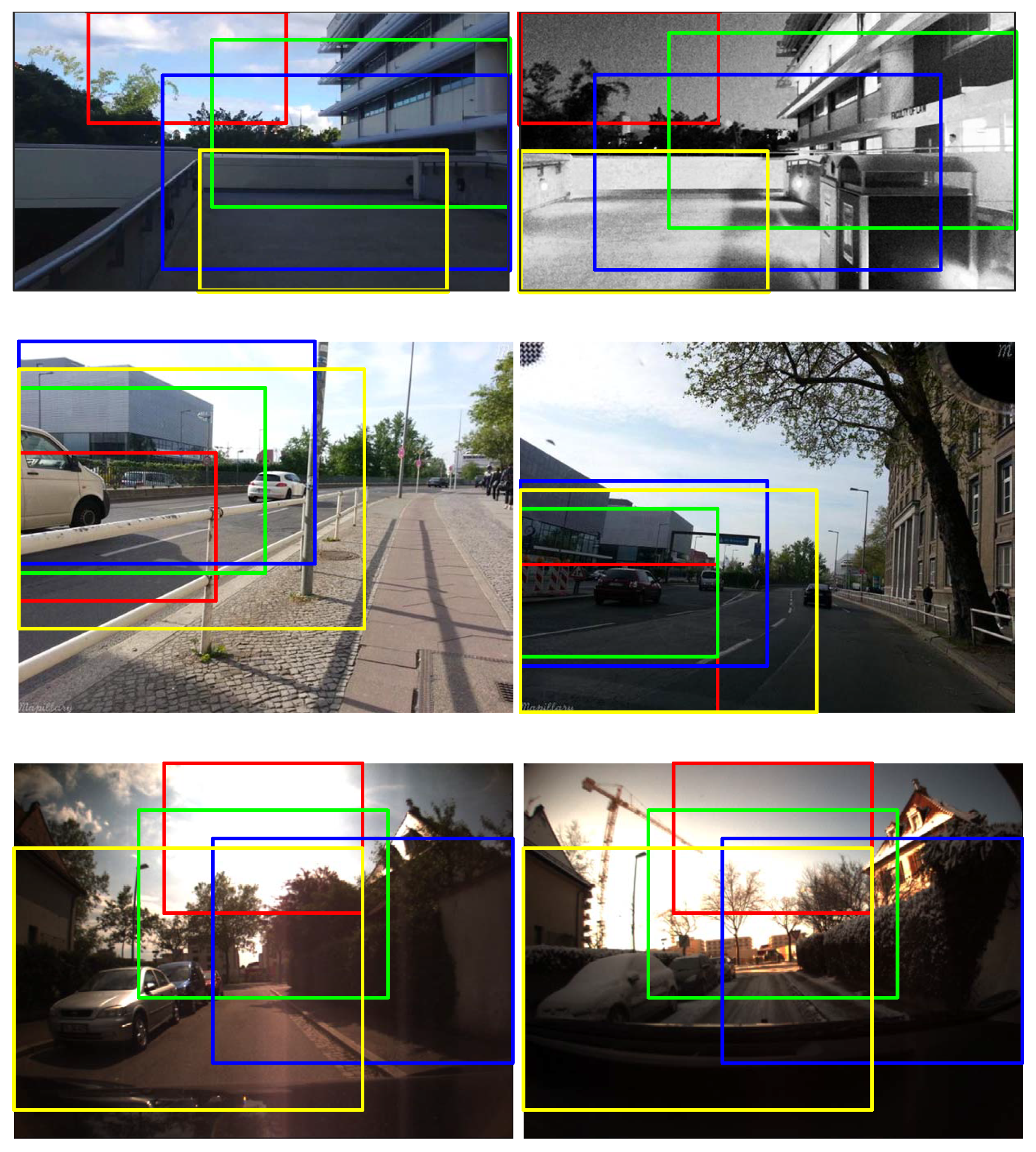

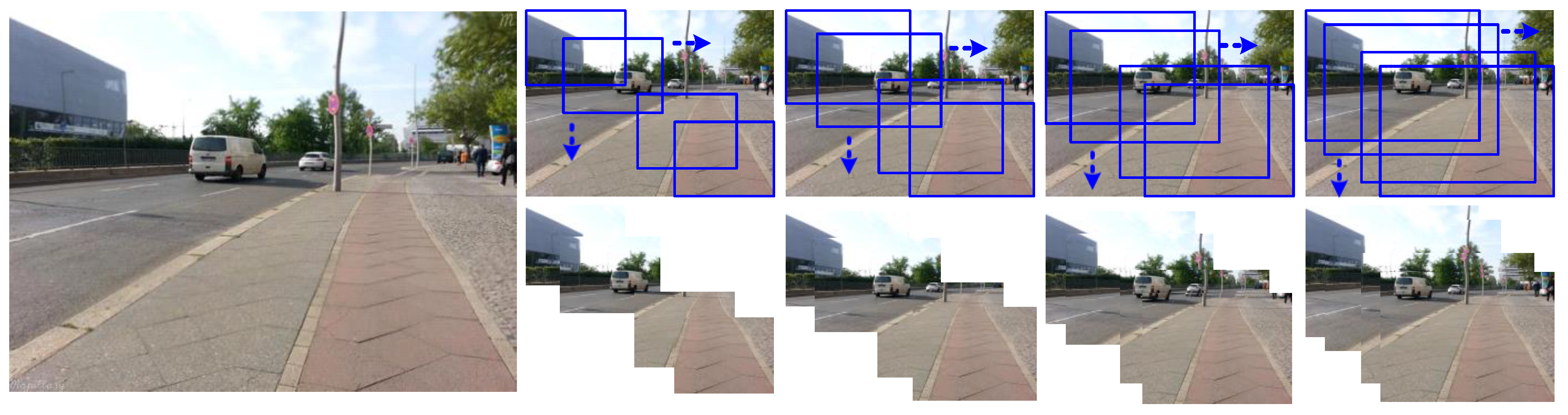

3. Proposed Method of Landmark Generation via MSW

| Algorithm 1: MSW based landmark extraction. |
|---|
| Input: Image width W and height H, landmark scales s |
| Output: Bounding boxes of extracted landmarks L |
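The body of Algorithm 1 is not reproduced here, so the following is only a minimal sketch of a generic multi-scale sliding window that matches the stated interface (inputs W, H, and scales s; output a list of bounding boxes L). It should not be read as the authors' exact procedure. The function name `msw_boxes`, the `overlap` parameter, and the reading of each scale as a fraction of the image width and height are assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h) in pixels, top-left origin


def msw_boxes(W: int, H: int, scales: Sequence[float],
              overlap: float = 0.5) -> List[Box]:
    """Enumerate sliding-window boxes at several scales.

    Assumptions (not taken from the paper): each scale s in (0, 1] gives a
    window of size (s * W) x (s * H), and neighbouring windows overlap by
    the fraction `overlap` along each axis.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    boxes: List[Box] = []
    for s in scales:
        if not 0.0 < s <= 1.0:
            raise ValueError("each scale must be in (0, 1]")
        w = max(1, round(s * W))
        h = max(1, round(s * H))
        step_x = max(1, round(w * (1.0 - overlap)))
        step_y = max(1, round(h * (1.0 - overlap)))
        xs = list(range(0, W - w + 1, step_x))
        ys = list(range(0, H - h + 1, step_y))
        # Add a final window flush with the right/bottom border if the
        # regular stride did not land there.
        if xs[-1] != W - w:
            xs.append(W - w)
        if ys[-1] != H - h:
            ys.append(H - h)
        boxes.extend((x, y, w, h) for y in ys for x in xs)
    return boxes


if __name__ == "__main__":
    L = msw_boxes(640, 480, scales=[1.0, 0.5])
    print(len(L), L[:3])
```

Because the boxes depend only on W, H, and s, they can be computed once and reused for every image of the same size; that property follows from the stated inputs, while the stride and border handling above are illustrative choices.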

4. Experiments

4.1. Experimental Settings

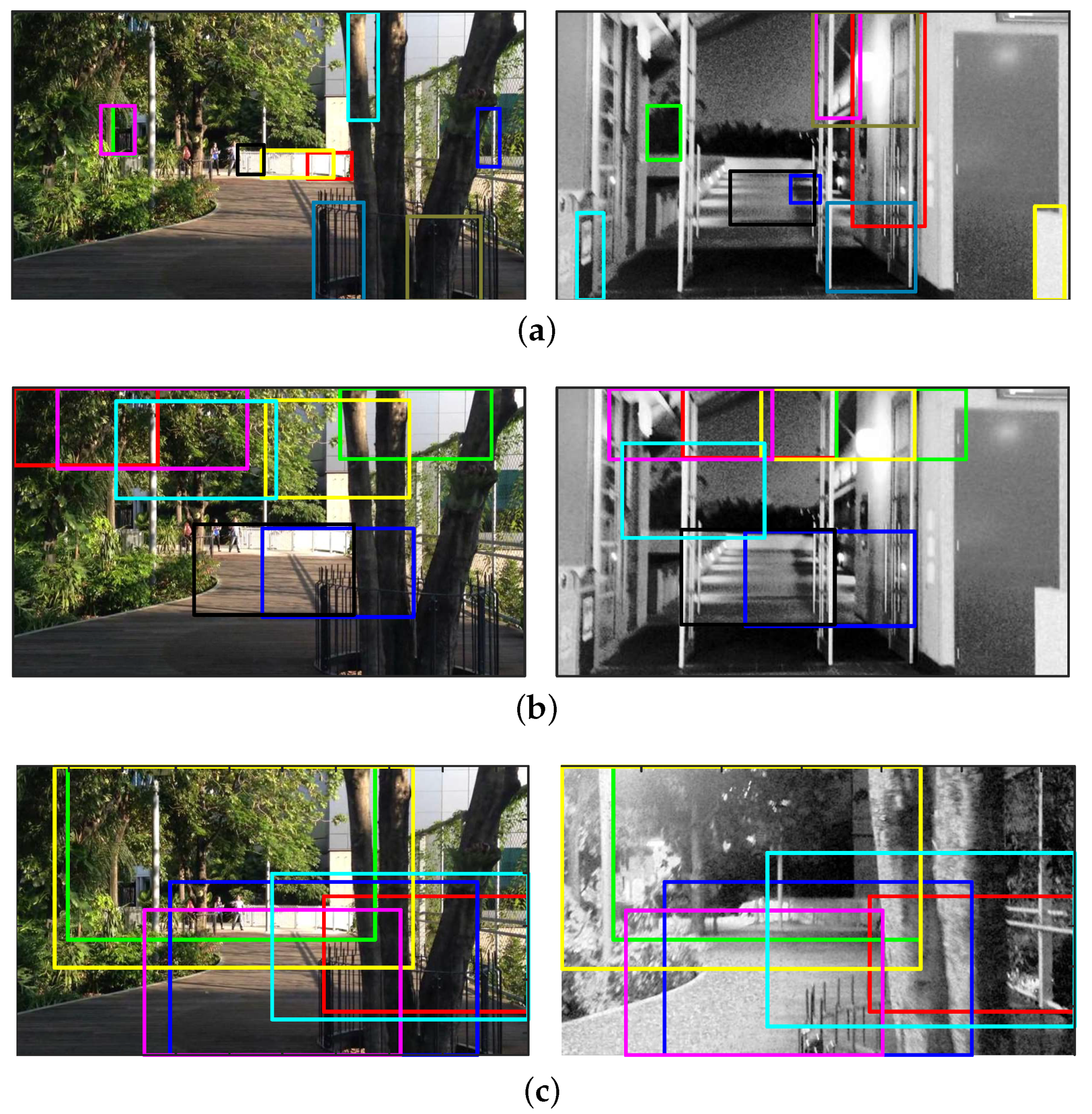

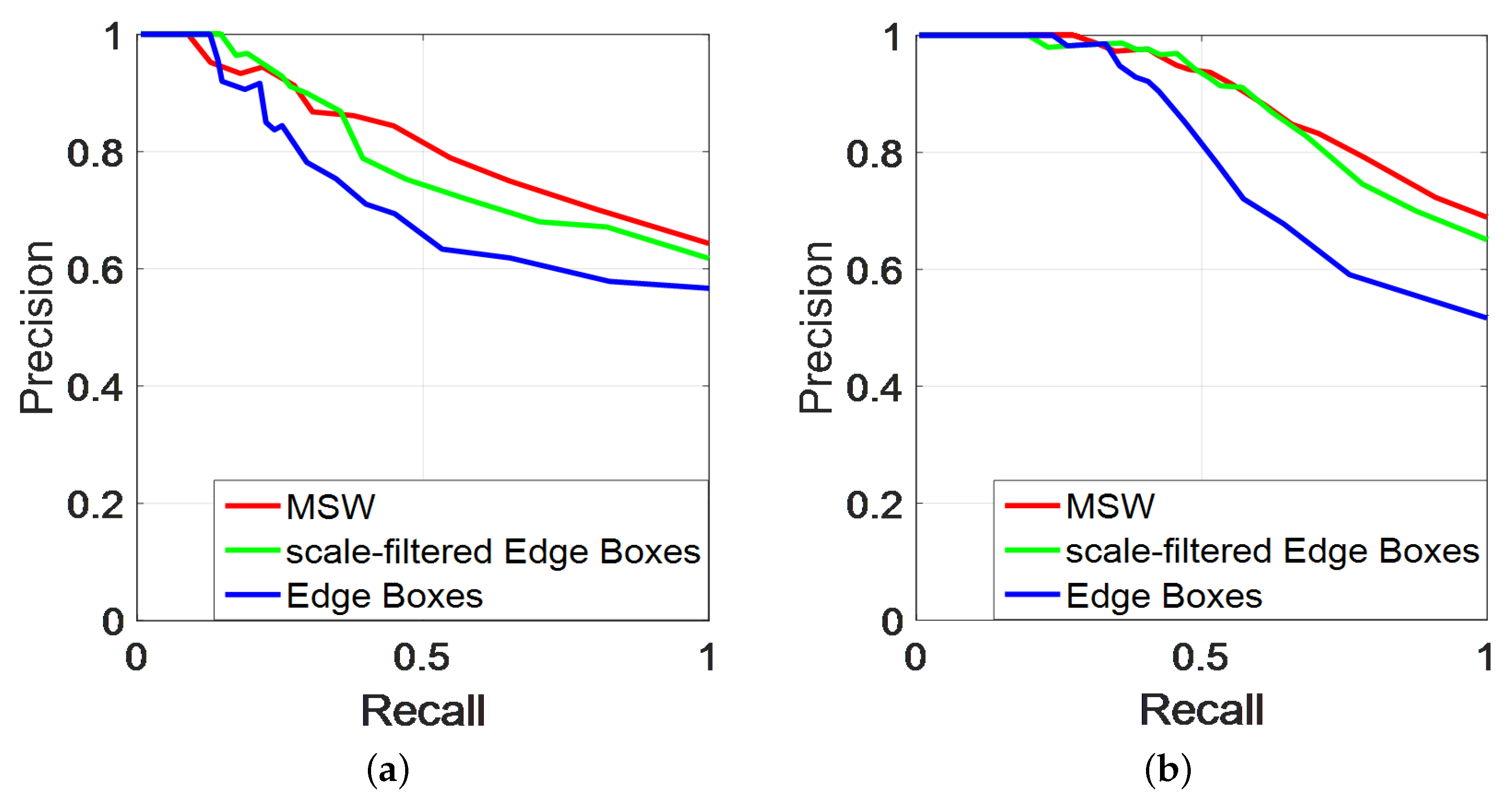

4.2. Results

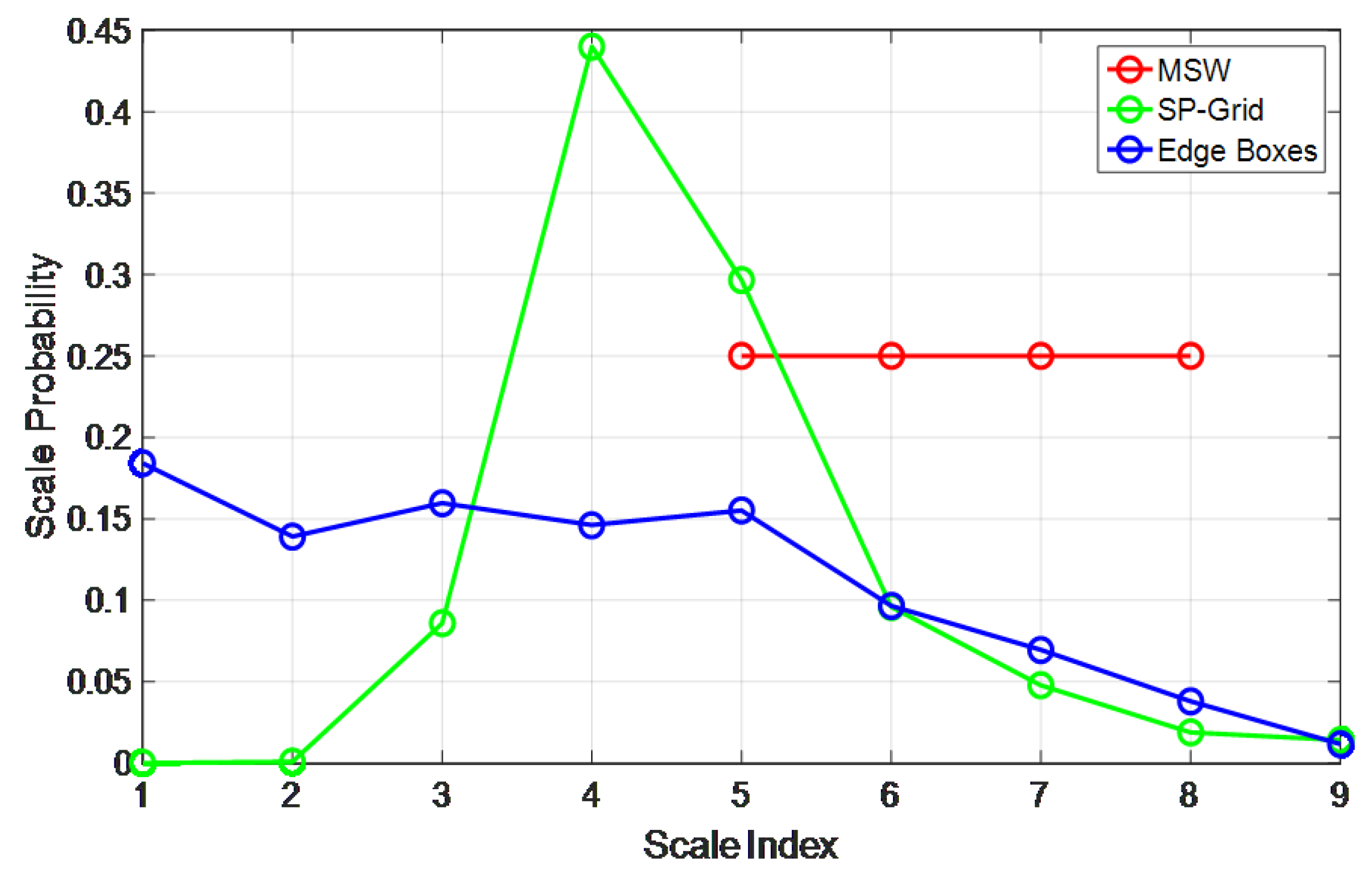

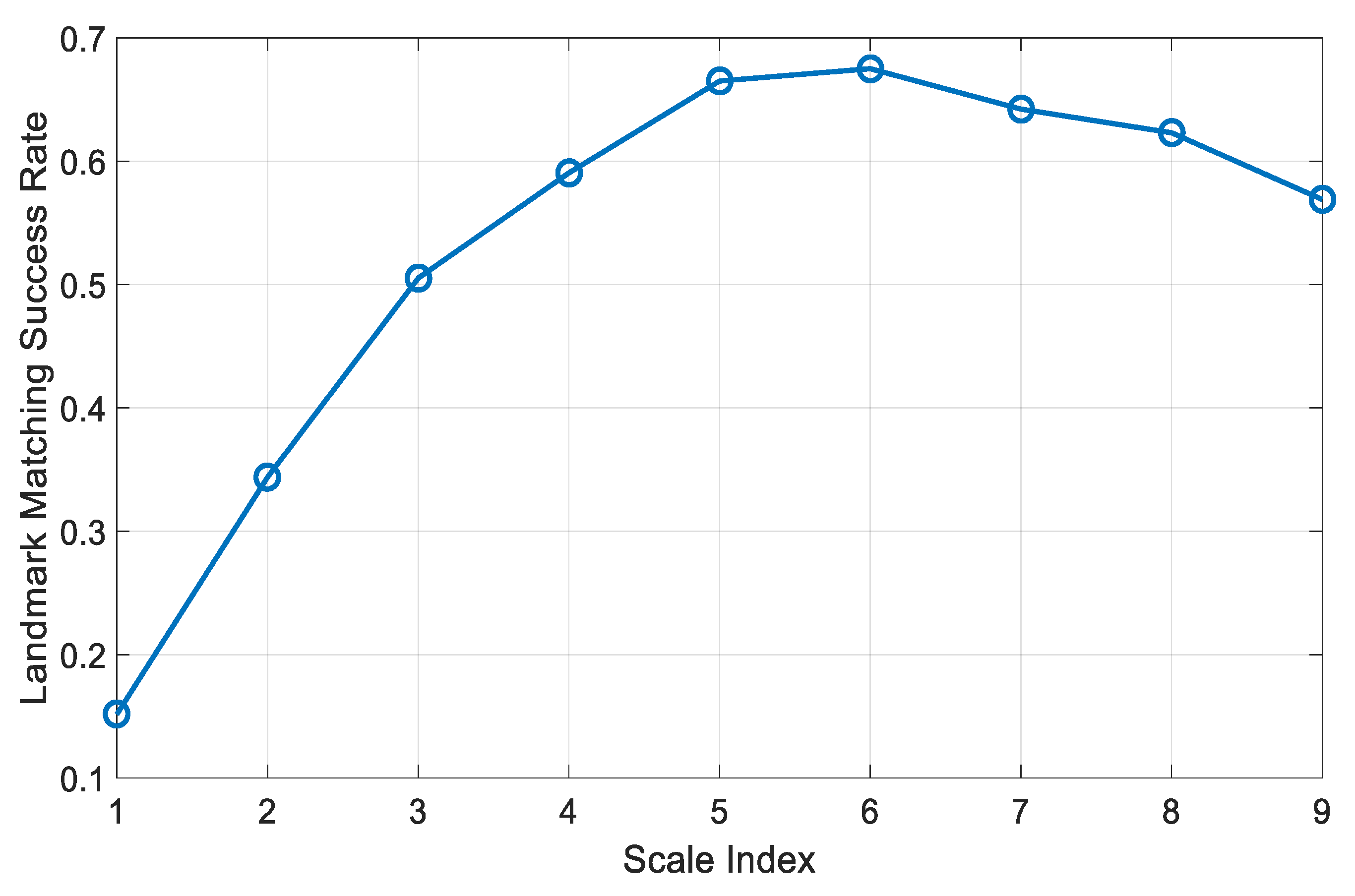

4.3. Detailed Analysis of Landmark Scale and Space Distributions

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Index | Scale Range |
|---|---|
| 1 | [0, 0.02) |
| 2 | [0.02, 0.05) |
| 3 | [0.05, 0.09) |
| 4 | [0.09, 0.14) |
| 5 | [0.14, 0.23) |
| 6 | [0.23, 0.34) |
| 7 | [0.34, 0.48) |
| 8 | [0.48, 0.70) |
| 9 | [0.70, 1] |
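As a hedged illustration of how the scale ranges in the table above could be applied, the sketch below maps a scale value to its bin index. The bin edges come from the table. The helper `box_scale`, which treats scale as the ratio of box area to image area, is an assumption for illustration, since the definition of scale is not given in this text.

```python
from bisect import bisect_right

# Upper edges of bins 1..8, taken from the table; bin 9 is [0.70, 1].
SCALE_EDGES = [0.02, 0.05, 0.09, 0.14, 0.23, 0.34, 0.48, 0.70]


def scale_index(scale: float) -> int:
    """Return the 1-based bin index for a scale value in [0, 1]."""
    if not 0.0 <= scale <= 1.0:
        raise ValueError("scale must be in [0, 1]")
    # Bins are half-open [lo, hi), so a value equal to an edge belongs to
    # the next bin; bisect_right gives exactly that behaviour.
    return bisect_right(SCALE_EDGES, scale) + 1


def box_scale(w: int, h: int, W: int, H: int) -> float:
    """Hypothetical scale definition: box area divided by image area."""
    return (w * h) / (W * H)


if __name__ == "__main__":
    s = box_scale(320, 240, 640, 480)
    print(s, scale_index(s))
```

Using `bisect_right` keeps the lookup short and makes the half-open interval convention explicit at the bin edges.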

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, B.; Xu, X.; Li, J.; Zhang, H. Landmark Generation in Visual Place Recognition Using Multi-Scale Sliding Window for Robotics. Appl. Sci. 2019, 9, 3146. https://doi.org/10.3390/app9153146
