Applying Eye-Tracking Technology to Measure Interactive Experience Toward the Navigation Interface of Mobile Games Considering Different Visual Attention Mechanisms

Abstract

1. Introduction

2. Method

2.1. Participants

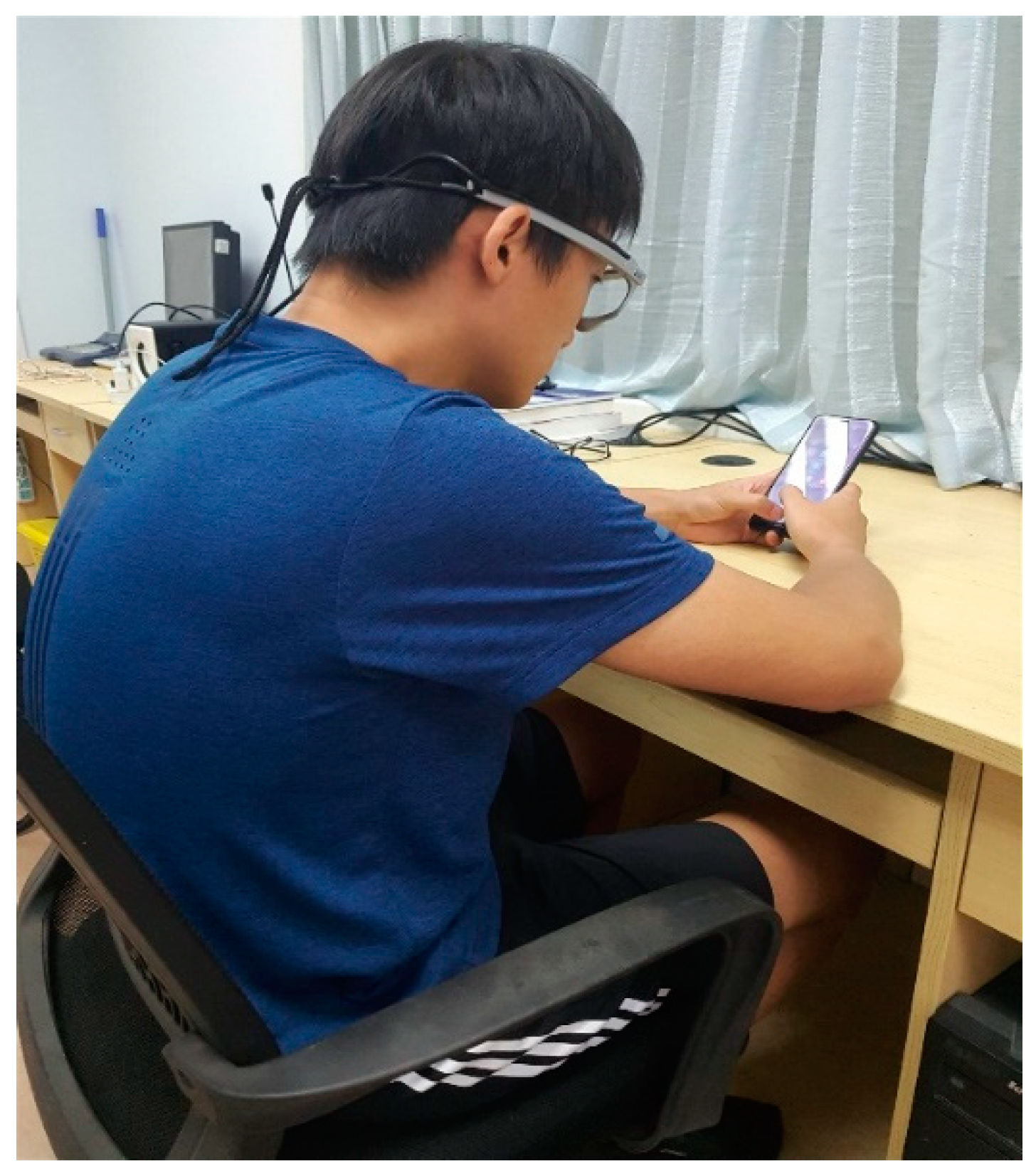

2.2. Apparatus

2.3. Stimuli

2.4. Eye-Movement Measures

2.5. Procedure

3. Results

3.1. Subjective Evaluation

3.2. Eye-Movement Outcomes

3.3. Regression Analysis of Eye-Movement Outcomes and Subjective Evaluation

4. Discussion

4.1. The Eye-Movement Indicator Reflecting Interactive Experience in the Free Browsing Mode

4.2. The Eye-Movement Indicator Reflecting Interactive Experience in the Task-Oriented Mode

4.3. The Prediction of Interactive Experience through Eye-Tracking Indicators Should Consider Attention Patterns

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Mode | High Level, M (SD) | Low Level, M (SD) | F | p | η² |
|---|---|---|---|---|---|
| Free browsing | 82.6250 (7.6389) | 50.6042 (6.2814) | 156.059 | <0.001 | 0.872 |
| Task-oriented | 85.9792 (8.1019) | 51.3958 (8.0595) | 127.014 | <0.001 | 0.847 |
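The effect-size column can be cross-checked against the F ratios with the standard identity for partial eta squared, η²ₚ = F·df₁ / (F·df₁ + df₂). The degrees of freedom are not reported in these tables; the sketch below assumes df₁ = 1 and df₂ = 23 (for example, a two-level within-subjects comparison with 24 participants). Under that assumption the reported η² values are reproduced to three decimals.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio: F*df1 / (F*df1 + df2)."""
    return (f * df_effect) / (f * df_effect + df_error)


# Assumed degrees of freedom (not stated in the tables): one effect df and
# 23 error df, e.g. a two-level within-subjects factor with 24 participants.
DF_EFFECT, DF_ERROR = 1, 23

# (F, reported eta squared) pairs copied from the subjective-evaluation table
reported = {
    "Free browsing": (156.059, 0.872),
    "Task-oriented": (127.014, 0.847),
}

for mode, (f, eta_reported) in reported.items():
    eta = partial_eta_squared(f, DF_EFFECT, DF_ERROR)
    print(f"{mode}: F = {f}, eta^2 = {eta:.3f} (reported {eta_reported})")
```

The same assumed degrees of freedom also reproduce the η² values in the eye-movement tables (e.g. F = 11.995 gives 0.343 and F = 10.895 gives 0.321), which suggests a common design across analyses, though the tables alone do not confirm it.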

| Metric | High Interactive Experience, M (SD) | Low Interactive Experience, M (SD) | F | p | η² |
|---|---|---|---|---|---|
| Fixation count | 49.3958 (10.6791) | 53.0000 (10.2438) | 4.638 | 0.042 | 0.168 |
| First fixation duration | 235.0688 (97.7865) | 221.7333 (84.4442) | 0.612 | 0.442 | 0.026 |
| Dwell time ratio | 6.0208 (1.1232) | 5.8479 (0.8698) | 0.976 | 0.334 | 0.041 |
| Saccade count | 43.9583 (7.9754) | 49.6875 (7.1183) | 11.995 | 0.002 | 0.343 |
| Average saccade amplitude | 3.0188 (0.9161) | 3.6917 (1.6101) | 7.576 | 0.011 | 0.248 |

| Metric | High Interactive Experience, M (SD) | Low Interactive Experience, M (SD) | F | p | η² |
|---|---|---|---|---|---|
| Fixation count | 66.2292 (19.1748) | 54.5417 (19.7082) | 5.370 | 0.030 | 0.189 |
| First fixation duration | 260.5417 (135.1768) | 343.3083 (155.3116) | 4.383 | 0.048 | 0.160 |
| Dwell time ratio | 7.8167 (1.7653) | 6.2438 (1.5612) | 10.895 | 0.003 | 0.321 |
| Saccade count | 59.8333 (16.6640) | 51.6458 (15.8947) | 5.398 | 0.029 | 0.190 |
| Average saccade amplitude | 3.2292 (0.9098) | 3.4458 (2.4137) | 0.383 | 0.542 | 0.016 |
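The five indicators in the eye-movement tables are per-trial aggregates over the fixation and saccade events an eye tracker exports. The sketch below shows one way to compute them; the event format, the `in_aoi` flag, and the helper name `eye_movement_metrics` are hypothetical. It also assumes definitions that this section does not state: first fixation duration is taken as the first fixation of the trial (an AOI-relative definition is equally plausible), dwell time ratio as AOI fixation time as a percentage of trial time, and saccade amplitude as the start-to-end distance in degrees of visual angle.

```python
from dataclasses import dataclass
from math import hypot
from statistics import mean


@dataclass
class Fixation:
    start_ms: float      # fixation onset within the trial
    duration_ms: float   # fixation duration
    in_aoi: bool         # hypothetical flag: fixation falls on the area of interest


@dataclass
class Saccade:
    start_deg: tuple[float, float]  # (x, y) start point in degrees of visual angle
    end_deg: tuple[float, float]    # (x, y) end point in degrees of visual angle

    @property
    def amplitude_deg(self) -> float:
        # Euclidean distance between the saccade's start and end points
        return hypot(self.end_deg[0] - self.start_deg[0],
                     self.end_deg[1] - self.start_deg[1])


def eye_movement_metrics(fixations: list[Fixation], saccades: list[Saccade],
                         trial_ms: float) -> dict[str, float]:
    """Aggregate per-trial metrics from tracker-exported event lists (assumed format)."""
    ordered = sorted(fixations, key=lambda f: f.start_ms)
    aoi_time = sum(f.duration_ms for f in ordered if f.in_aoi)
    return {
        "fixation_count": len(ordered),
        # Assumed definition: duration of the first fixation in the trial
        "first_fixation_duration": ordered[0].duration_ms if ordered else 0.0,
        # Assumed definition: AOI fixation time as a percentage of trial time
        "dwell_time_ratio": 100.0 * aoi_time / trial_ms,
        "saccade_count": len(saccades),
        "average_saccade_amplitude": (mean(s.amplitude_deg for s in saccades)
                                      if saccades else 0.0),
    }


# Toy trial with made-up events, for illustration only
fixations = [Fixation(0, 250, False), Fixation(300, 200, True), Fixation(550, 150, True)]
saccades = [Saccade((0.0, 0.0), (3.0, 4.0)), Saccade((3.0, 4.0), (3.0, 6.0))]
metrics = eye_movement_metrics(fixations, saccades, trial_ms=1000)
print(metrics)
```

Saccades are taken as a separate event list rather than derived from consecutive fixations, because the tables report saccade counts that differ from fixation count minus one, which indicates the tracker detected them independently.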

| Interactive Experience | Actual Value, M (SD) | Predicted Value, M (SD) | t | df | p |
|---|---|---|---|---|---|
| Monster elimination | 69.7500 (8.5000) | 68.2341 (9.0139) | 1.080 | 3 | 0.359 |
| Animal elimination | 58.7500 (10.7199) | 58.6961 (12.1273) | 0.057 | 3 | 0.958 |
| Love everyday | 66.2500 (6.2383) | 65.1508 (6.8686) | 1.508 | 3 | 0.229 |
| Seaside entertainment | 54.5000 (7.3258) | 53.6606 (6.4975) | 1.678 | 3 | 0.192 |

| Interactive Experience | Actual Value, M (SD) | Predicted Value, M (SD) | t | df | p |
|---|---|---|---|---|---|
| Monster elimination | 72.2500 (14.9304) | 71.4581 (15.9040) | 1.245 | 3 | 0.301 |
| Animal elimination | 61.7500 (10.5948) | 61.3745 (11.0715) | 1.000 | 3 | 0.391 |
| Love everyday | 73.2500 (13.4505) | 72.5496 (14.3451) | 1.104 | 3 | 0.350 |
| Seaside entertainment | 61.0000 (4.5461) | 60.7878 (4.9255) | 0.589 | 3 | 0.597 |
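The agreement between actual and predicted interactive-experience scores is reported as t tests with df = 3. Assuming these are paired-samples t tests over four held-out participants per game (the section does not state the test type or the sample), the statistic can be sketched as below. The helper `paired_t` and the scores are hypothetical, not data from the study, and significance is judged against the tabulated two-tailed critical value t(0.975, 3) ≈ 3.182 rather than by computing an exact p value.

```python
from math import sqrt
from statistics import mean, stdev

# Two-tailed critical value of Student's t for alpha = 0.05 and df = 3
T_CRIT_DF3 = 3.182


def paired_t(actual: list[float], predicted: list[float]) -> tuple[float, int]:
    """Paired-samples t statistic and its degrees of freedom (n - 1)."""
    if len(actual) != len(predicted) or len(actual) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - p for a, p in zip(actual, predicted)]
    n = len(diffs)
    # Standard error of the mean difference uses the sample SD (n - 1 denominator);
    # identical differences (SD = 0) would raise ZeroDivisionError here.
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Hypothetical scores for four held-out participants (not data from the study)
actual = [60, 70, 75, 74]
predicted = [58, 71, 72, 74]
t, df = paired_t(actual, predicted)
print(f"t({df}) = {t:.3f}, significant at 0.05: {abs(t) > T_CRIT_DF3}")
# prints: t(3) = 1.095, significant at 0.05: False
```

A non-significant result here is the desired outcome: it means the predicted scores do not differ detectably from the actual ones, which is how the tables above read, although with only four pairs per game the test has little power to detect a real difference.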

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jiang, J.-Y.; Guo, F.; Chen, J.-H.; Tian, X.-H.; Lv, W. Applying Eye-Tracking Technology to Measure Interactive Experience Toward the Navigation Interface of Mobile Games Considering Different Visual Attention Mechanisms. Appl. Sci. 2019, 9, 3242. https://doi.org/10.3390/app9163242
