Blind Image Deblurring Based on Local Edges Selection

Abstract

1. Introduction

- (1) The proposed deblurring model is built on the maximum a posteriori (MAP) framework. Unlike traditional MAP-based methods, it incorporates novel local image edges into the model; these local edges are selected from the bright and dark channels of the image.

- (2) Most blind image deblurring methods first estimate the blurring kernel and then recover the clear image with a non-blind deblurring method. In contrast, the proposed method obtains the clear image and the blurring kernel by alternating iteration.

- (3) Experiments are carried out on datasets of grayscale images, color images, and natural color images. The results show that the proposed method can effectively deblur different kinds of images. Comparisons with other state-of-the-art methods, in terms of both visual results and quantitative metrics for the recovered clear images and blurring kernels, verify the effectiveness of the proposed method.
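The bright and dark channels in contribution (1) can be illustrated with a short sketch. It assumes the standard definitions from the dark channel and extreme channels papers in the references (He et al.; Yan et al.): the dark channel takes the minimum over color channels and then over a local patch, and the bright channel takes the corresponding maximum. The rule the paper uses to select local edges from these channels is not given here, so `local_edge_mask` is a hypothetical stand-in (gradient magnitude above a threshold). The patch size and threshold are illustrative values, not the authors' settings.

```python
import numpy as np


def _patch_filter(img, size, reduce):
    """Min- or max-filter a 2-D array over size x size patches (edge-padded, odd size)."""
    r = size // 2
    padded = np.pad(img, r, mode="edge")
    h, w = img.shape
    # Stack every shifted window view, then reduce along the stack axis.
    views = [padded[i:i + h, j:j + w] for i in range(size) for j in range(size)]
    return reduce(np.stack(views), axis=0)


def dark_bright_channels(img, patch=15):
    """Dark channel (local min of per-pixel min) and bright channel (local max of per-pixel max)."""
    img = np.asarray(img, dtype=float)
    if img.ndim == 3:                      # H x W x C color image
        lo, hi = img.min(axis=2), img.max(axis=2)
    else:                                  # H x W grayscale image
        lo = hi = img
    return _patch_filter(lo, patch, np.min), _patch_filter(hi, patch, np.max)


def local_edge_mask(channel, threshold):
    """HYPOTHETICAL selection rule: forward-difference gradient magnitude above threshold."""
    gx = np.diff(channel, axis=1, append=channel[:, -1:])
    gy = np.diff(channel, axis=0, append=channel[-1:, :])
    return np.hypot(gx, gy) > threshold
```

A candidate edge map from both channels could then be formed as `local_edge_mask(dark, t) | local_edge_mask(bright, t)` for some threshold `t`; taking the union is again an assumption.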

2. Method

2.1. Local Edges Selection Method

2.2. Image Deblurring Model

| Algorithm 1. The blind image deblurring process |
|---|
| 1. Input: blurred image y, kernel size [m, n] |
| 2. Initialization: x = y; H = zeros(m, n), H(1,1) = 1; KS = 0 |
| 3. While KS ≤ 0.95, repeat: |
| Estimate the intermediate clear image x by the method in Section 2.4; |
| Estimate the intermediate blurring kernel H by the method in Section 2.3; |
| Update the kernel similarity (KS) by the method in Section 2.5; |
| 4. Otherwise, stop iterating; |
| 5. Output: x, H |
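Algorithm 1 alternates between the two sub-problems until the kernel estimate settles. A minimal Python sketch of that control flow follows, assuming that KS compares the kernels of two successive iterations. `estimate_x`, `estimate_h`, and `similarity` are hypothetical placeholders for the methods of Sections 2.4, 2.3, and 2.5, and `max_iter` is an added safeguard that Algorithm 1 does not specify.

```python
import numpy as np


def blind_deblur(y, ksize, estimate_x, estimate_h, similarity,
                 ks_threshold=0.95, max_iter=50):
    """Alternating estimation of the clear image x and the kernel H.

    estimate_x(y, x, H) -> x        hypothetical placeholder for Section 2.4
    estimate_h(y, x, H) -> H        hypothetical placeholder for Section 2.3
    similarity(H_new, H_old) -> KS  hypothetical placeholder for Section 2.5
    max_iter is an added safeguard, not part of Algorithm 1.
    """
    m, n = ksize
    x = np.array(y, dtype=float)           # x = y
    H = np.zeros((m, n))
    H[0, 0] = 1.0                          # H(1,1) = 1 in 1-based indexing
    ks = 0.0
    for _ in range(max_iter):
        if ks > ks_threshold:              # stop once successive kernels agree
            break
        x = estimate_x(y, x, H)
        H_new = estimate_h(y, x, H)
        ks = similarity(H_new, H)          # assumed: KS between successive kernels
        H = H_new
    return x, H
```

Passing the sub-solvers in as functions keeps the loop independent of the particular image and kernel priors, so the skeleton can be checked with simple stand-ins before the full model is plugged in.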

2.3. Estimation of Blurring Kernel
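As a point of reference for this step, the sketch below uses a kernel update that is common in MAP-based deblurring (for example in the dark channel work of Pan et al. listed in the references), not necessarily the model of this paper. Given the current image x, it minimizes the sum over horizontal and vertical derivatives of ||∂x ⊗ k − ∂y||² plus γ||k||², which has a closed-form solution in the Fourier domain, then clips negative entries and normalizes the kernel to sum to one. Circular boundaries, the value of `gamma`, and the helper names are assumptions.

```python
import numpy as np


def blur(x, k):
    """Circularly convolve image x with kernel k (kernel centre at k[kh//2, kw//2])."""
    kh, kw = k.shape
    padded = np.zeros(x.shape)
    padded[:kh, :kw] = k
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(x) * np.fft.fft2(padded)))


def _grads(img):
    """Horizontal and vertical forward differences with circular boundary."""
    return np.roll(img, -1, axis=1) - img, np.roll(img, -1, axis=0) - img


def estimate_kernel(x, y, ksize, gamma=1e-2):
    """min_k sum_d ||d(x) * k - d(y)||^2 + gamma * ||k||^2, solved with FFTs."""
    fx = [np.fft.fft2(g) for g in _grads(x)]
    fy = [np.fft.fft2(g) for g in _grads(y)]
    num = sum(np.conj(a) * b for a, b in zip(fx, fy))
    den = sum(np.abs(a) ** 2 for a in fx) + gamma
    k_full = np.real(np.fft.ifft2(num / den))
    kh, kw = ksize
    # Undo blur()'s centring: move the kernel centre from (0, 0) back to (kh//2, kw//2).
    k = np.roll(k_full, (kh // 2, kw // 2), axis=(0, 1))[:kh, :kw]
    k = np.clip(k, 0.0, None)      # project onto non-negative kernels
    total = k.sum()
    return k / total if total > 0 else k
```

Because derivatives have no DC component, the fit cannot recover the kernel's sum; the final normalization restores it.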

2.4. Estimation of Clear Image

| Algorithm 2. The calculation of the clear image |
|---|
| 1. Initialization: x0, H (intermediate results from the previous iteration); a0, b0, c0, d0 |
| 2. for k = 1:20 |
| 3. Calculate |
| 4. Obtain xk by Equation (16) |
| 5. end |
| 6. x = x20 |
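Equation (16) and the update rules for the auxiliary variables a, b, c, and d are not reproduced here, so the sketch below swaps in a simpler, well-known scheme with the same structure as Algorithm 2: 20 rounds of half-quadratic splitting for an L0 gradient prior, min_x ||h ⊗ x − y||² + λ||∇x||₀, with a single auxiliary gradient field in place of a, b, c, and d. Each round hard-thresholds the auxiliary field and then solves for x in closed form with FFTs under circular boundary conditions. The values of λ and the β schedule, and all function names, are illustrative assumptions.

```python
import numpy as np


def psf2otf(psf, shape):
    """Zero-pad psf to shape, move its centre to index (0, 0), and take the 2-D FFT."""
    padded = np.zeros(shape)
    kh, kw = psf.shape
    padded[:kh, :kw] = psf
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(padded)


def estimate_clear_image(y, h, x0, lam=2e-3, n_iter=20, kappa=2.0):
    """Hypothetical stand-in for Algorithm 2 (not Equation (16)).

    Half-quadratic splitting for  min_x ||h * x - y||^2 + lam * ||grad x||_0.
    """
    fh = psf2otf(h, y.shape)
    fdx = psf2otf(np.array([[1.0, -1.0]]), y.shape)    # x(i, j+1) - x(i, j)
    fdy = psf2otf(np.array([[1.0], [-1.0]]), y.shape)  # x(i+1, j) - x(i, j)
    num_data = np.conj(fh) * np.fft.fft2(y)
    den_data = np.abs(fh) ** 2
    den_grad = np.abs(fdx) ** 2 + np.abs(fdy) ** 2
    x = np.array(x0, dtype=float)
    beta = 2.0 * lam
    for _ in range(n_iter):
        # g-step: keep a gradient only where |grad x|^2 >= lam / beta (L0 hard threshold).
        gx = np.roll(x, -1, axis=1) - x
        gy = np.roll(x, -1, axis=0) - x
        keep = gx ** 2 + gy ** 2 >= lam / beta
        gx, gy = gx * keep, gy * keep
        # x-step: closed-form least squares for ||h*x - y||^2 + beta ||grad x - g||^2.
        num = num_data + beta * (np.conj(fdx) * np.fft.fft2(gx)
                                 + np.conj(fdy) * np.fft.fft2(gy))
        x = np.real(np.fft.ifft2(num / (den_data + beta * den_grad)))
        beta *= kappa                      # tighten the splitting each round
    return x
```

With a delta kernel and a constant image the gradient field stays zero and the FFT step returns the input unchanged, which gives a quick sanity check of the closed-form update.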

2.5. Stopping Criterion
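Algorithm 1 stops once the kernel similarity (KS) exceeds 0.95. Assuming KS follows the definition used in "Good regions to deblur" (listed in the references), it is the maximum normalized cross-correlation between two kernels over all relative shifts, so it equals 1 for identical or merely shifted kernels and never exceeds 1 (by the Cauchy-Schwarz inequality). The sketch computes it with zero-padded FFTs; applying it to the kernels of two successive iterations is an assumption about how this criterion is used.

```python
import numpy as np


def kernel_similarity(k1, k2):
    """Maximum normalized cross-correlation of two kernels over all 2-D shifts."""
    k1 = np.asarray(k1, dtype=float)
    k2 = np.asarray(k2, dtype=float)
    norm = np.linalg.norm(k1) * np.linalg.norm(k2)
    if norm == 0.0:
        return 0.0
    # Zero-pad to the full linear-correlation size so that no shift wraps around.
    shape = (k1.shape[0] + k2.shape[0] - 1, k1.shape[1] + k2.shape[1] - 1)
    corr = np.fft.ifft2(np.fft.fft2(k1, s=shape) * np.conj(np.fft.fft2(k2, s=shape))).real
    return float(corr.max() / norm)
```

Because the maximum is taken over shifts, a kernel that is correct up to a translation is not penalized, which suits a stopping test on successive estimates.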

3. Results and Discussion

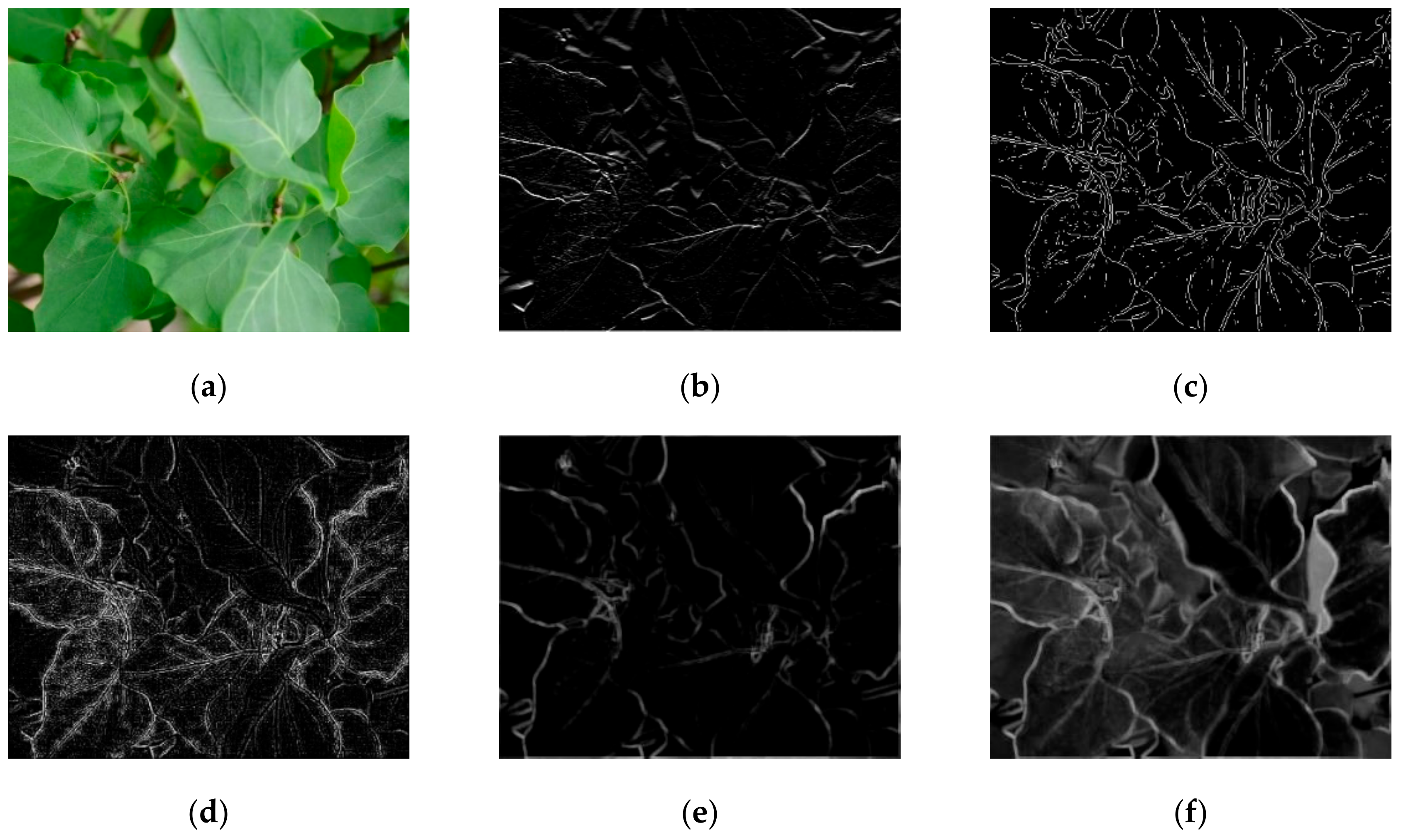

3.1. The Results of Image Edges Selection

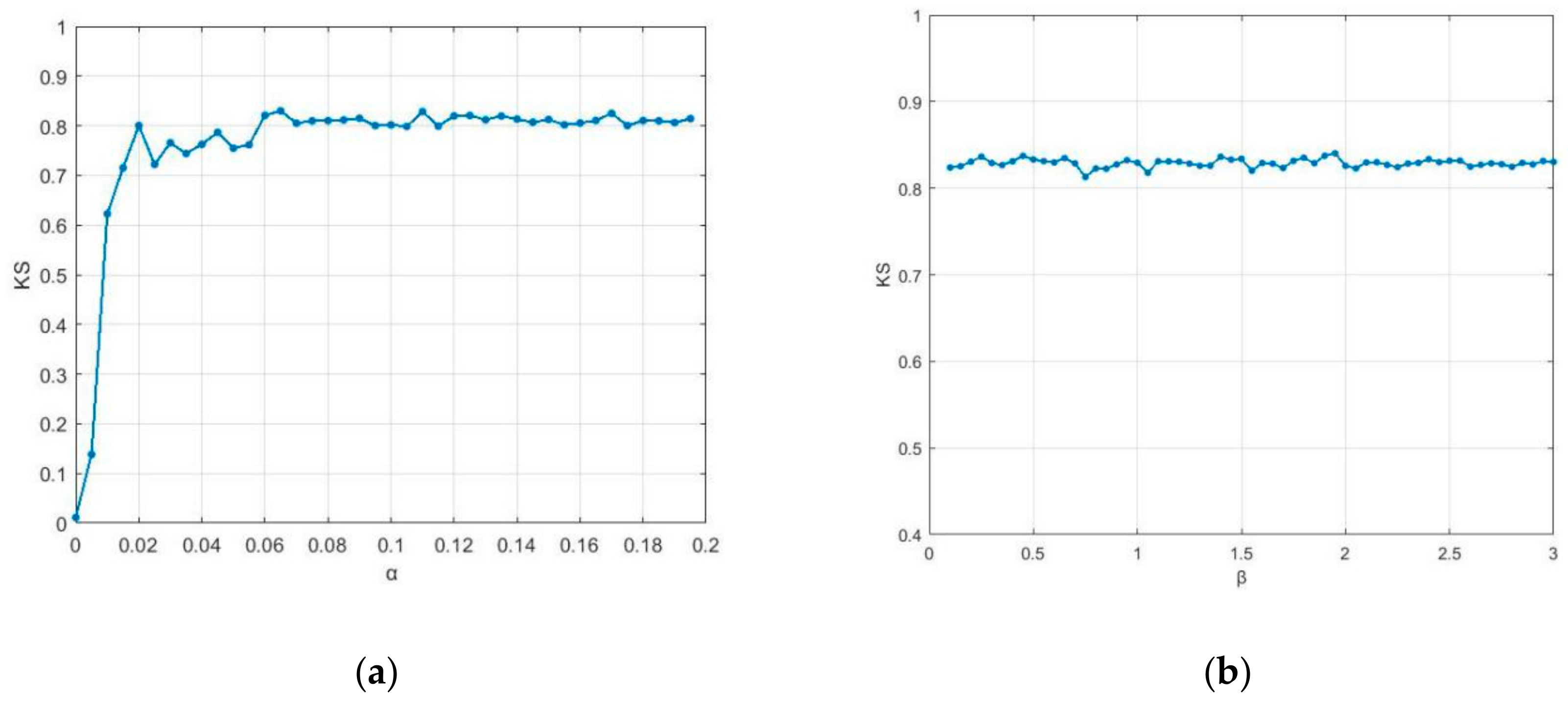

3.2. Discussion of the Parameters in the Deblurring Model

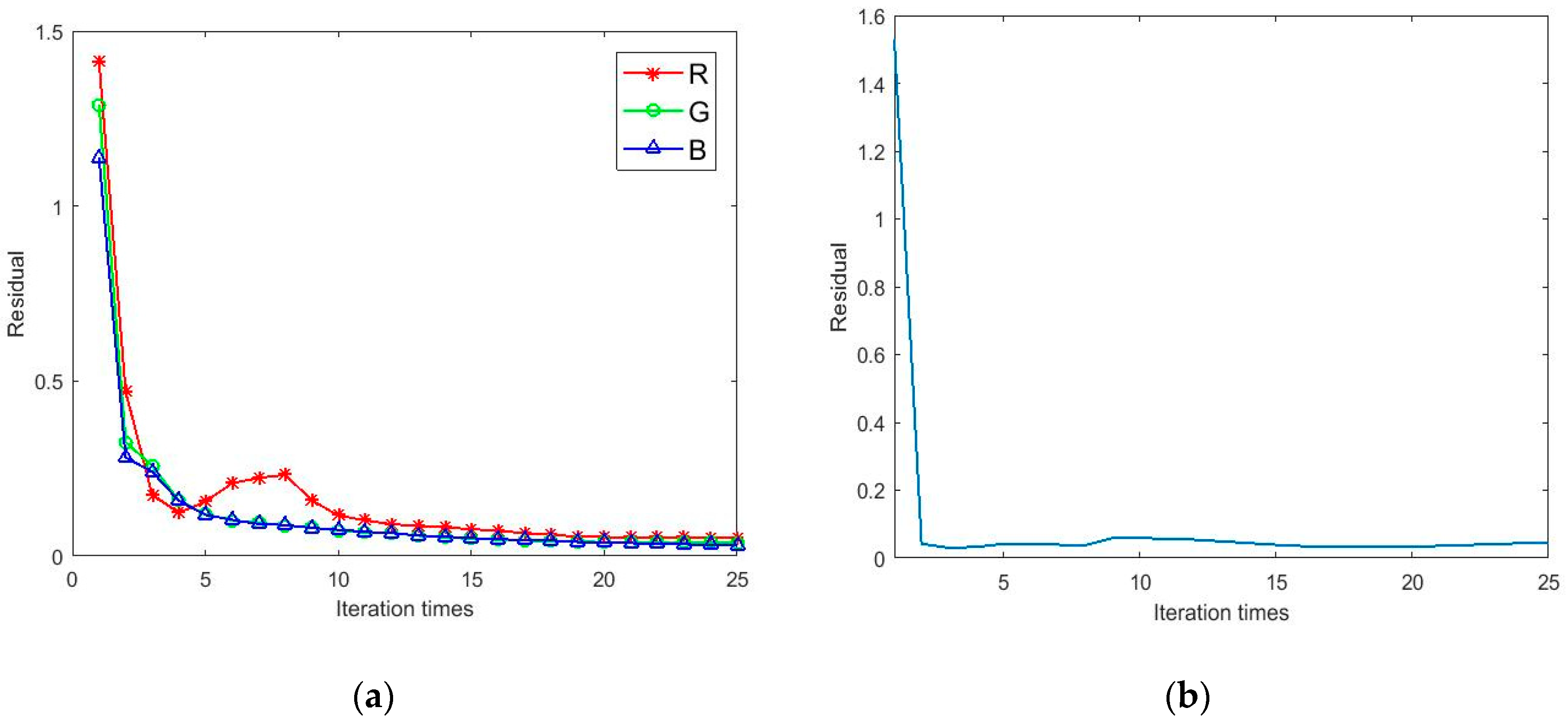

3.3. The Convergence

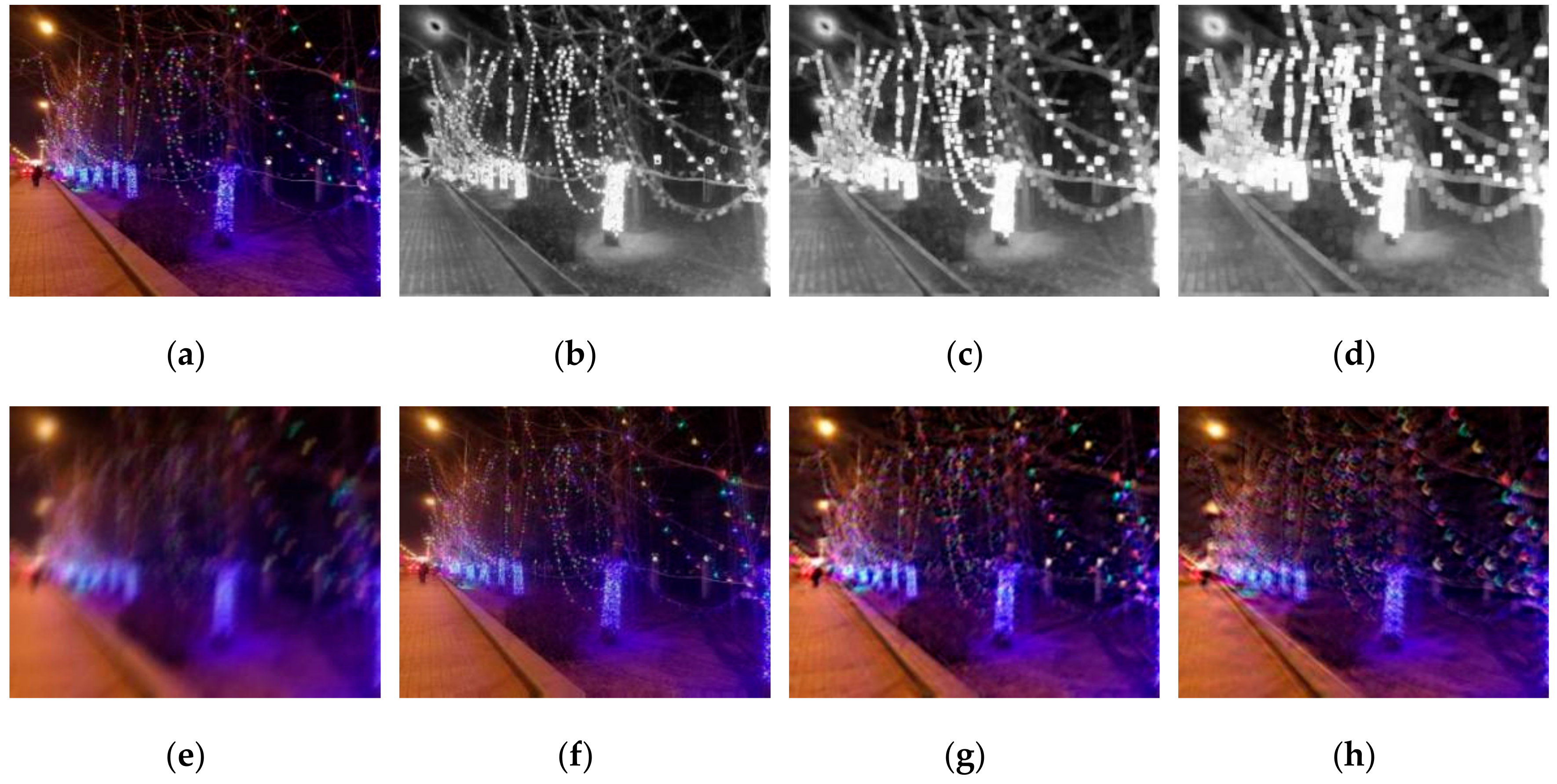

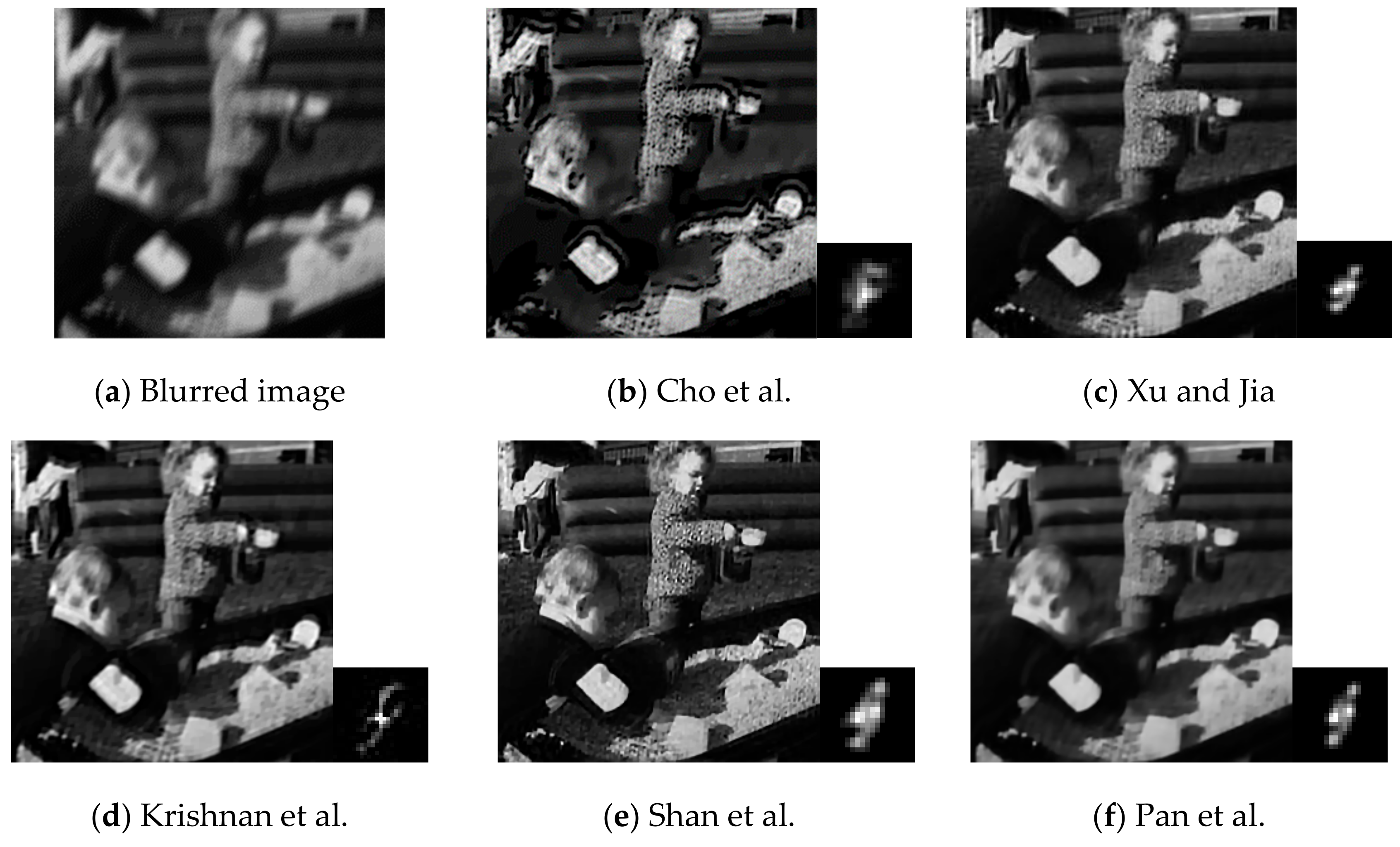

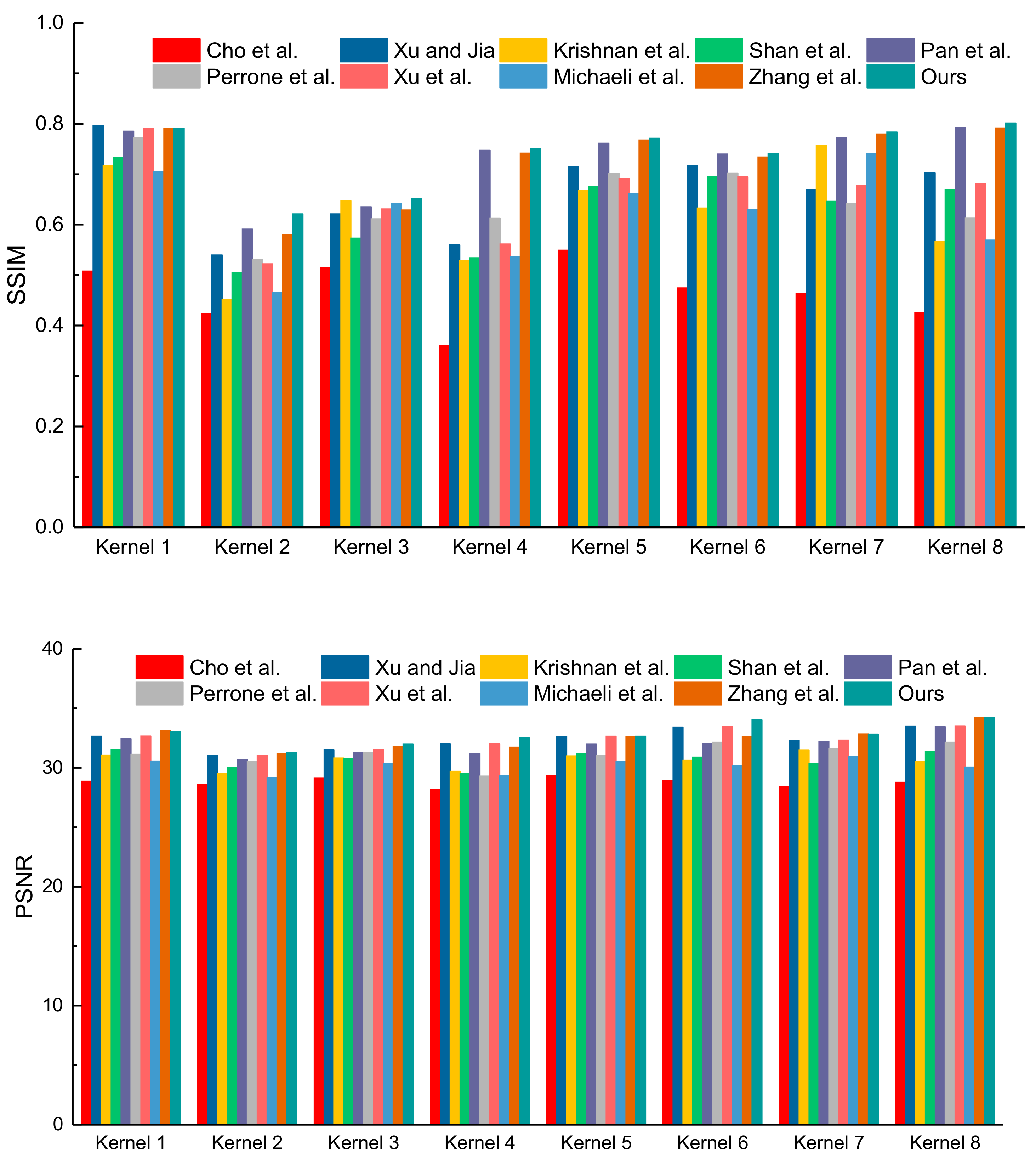

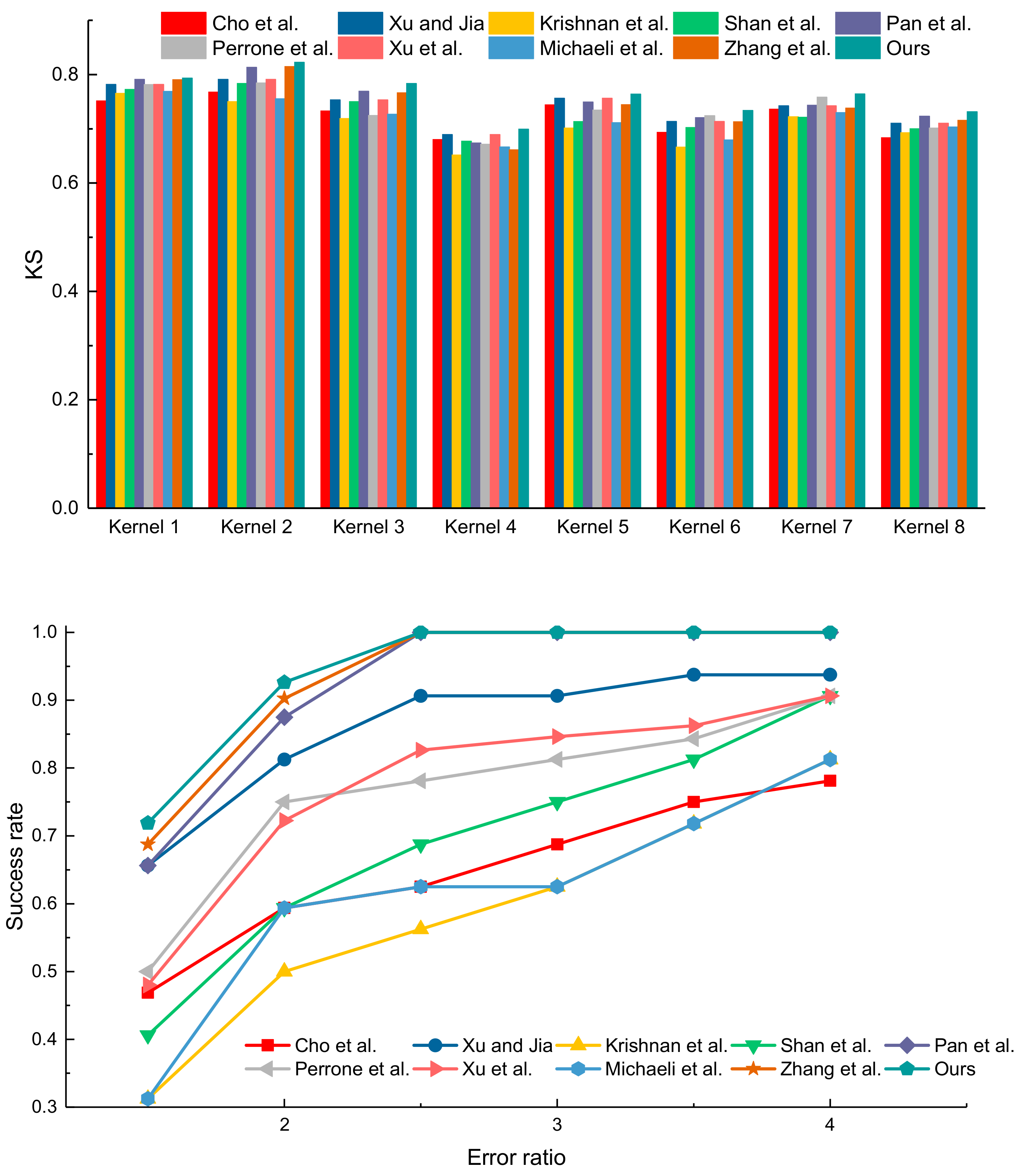

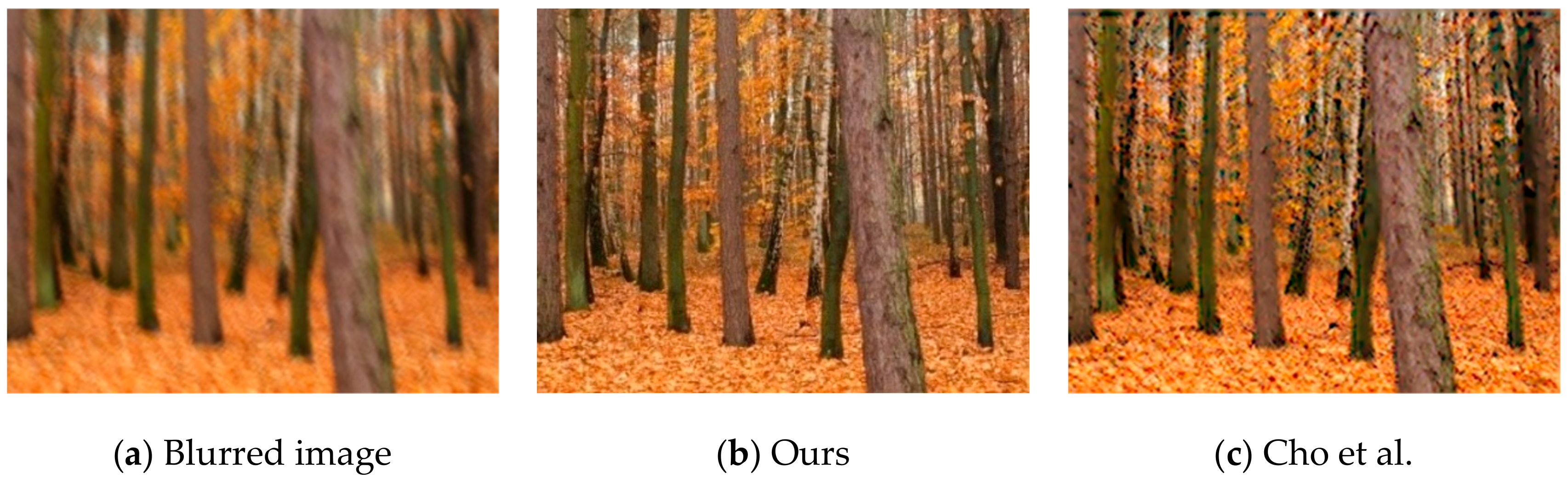

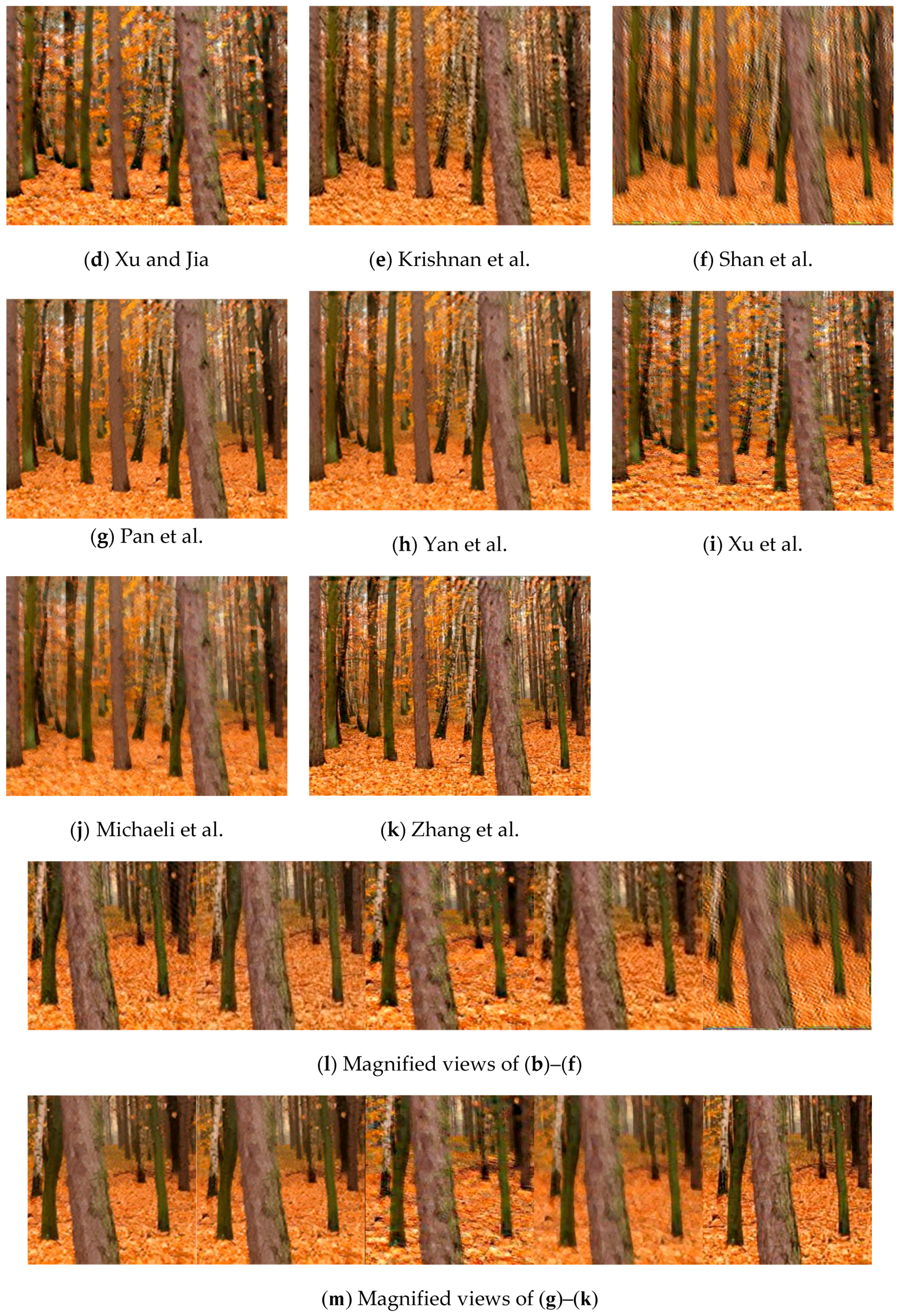

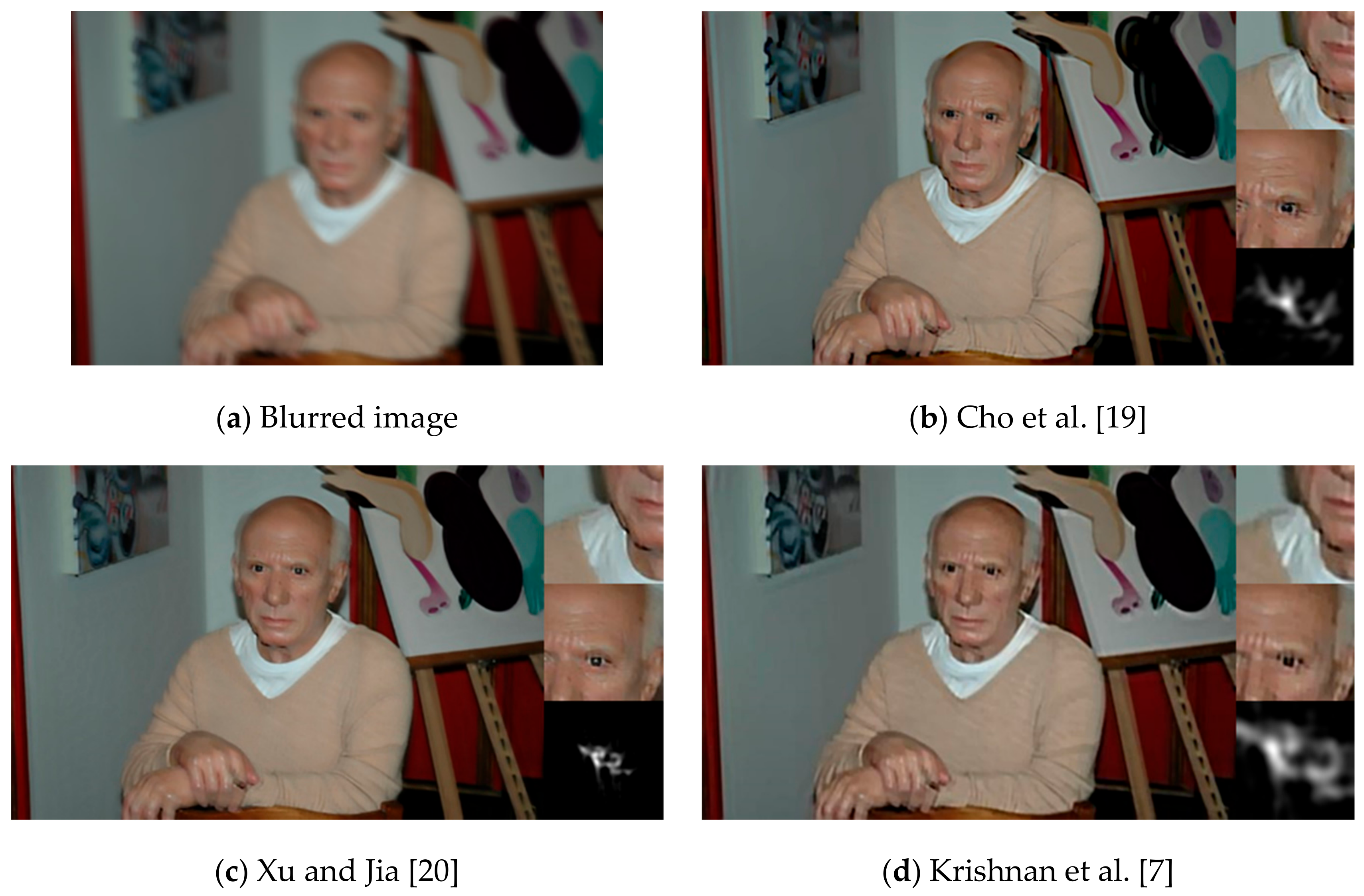

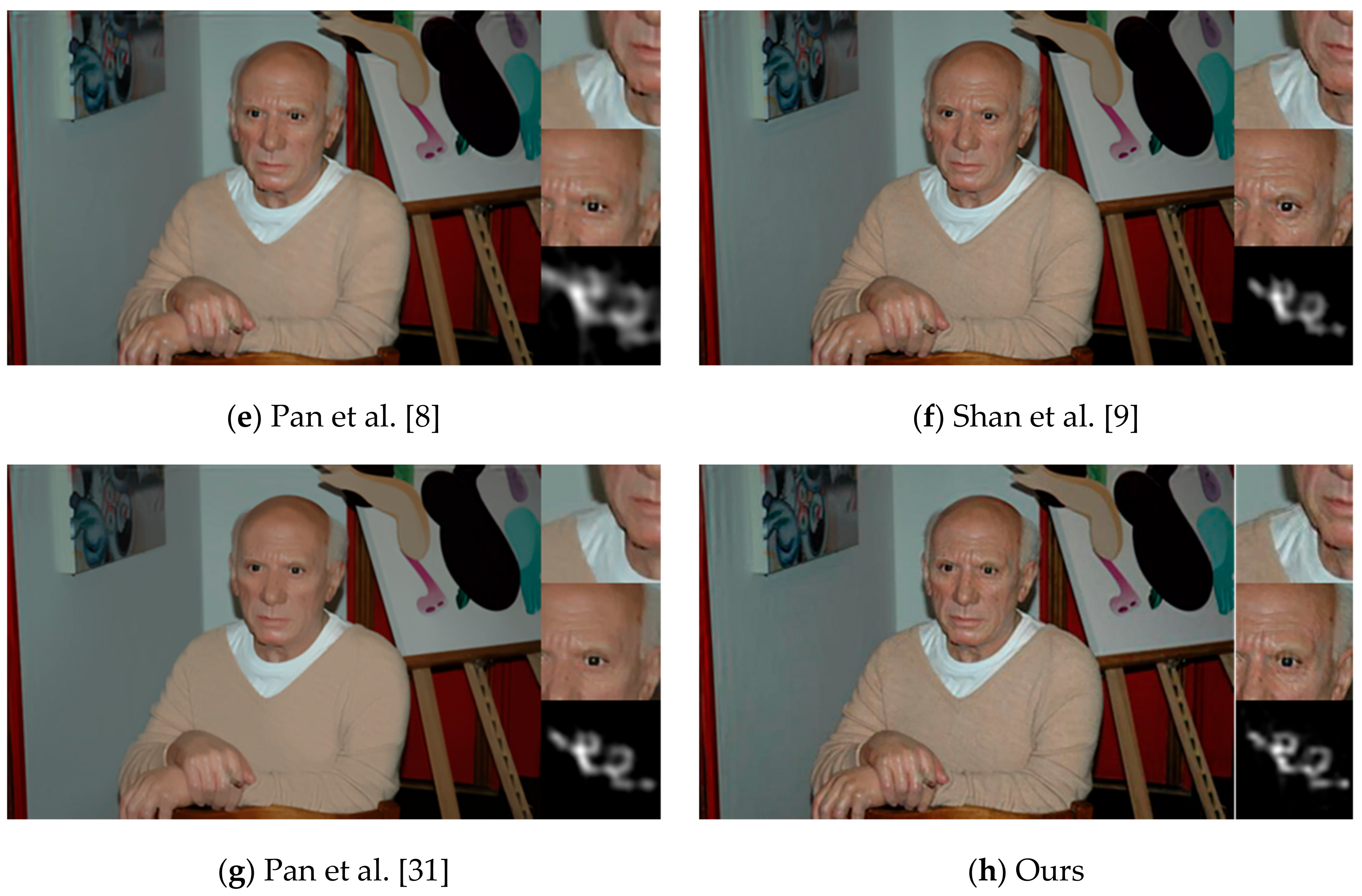

3.4. Image Deblurring Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Whyte, O.; Sivic, J.; Zisserman, A. Deblurring shaken and partially saturated images. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Barcelona, Spain, 6–13 November 2011; pp. 185–201.

- Krishnan, D.; Fergus, R. Fast image deconvolution using hyper-Laplacian priors. In Advances in Neural Information Processing Systems 22, Proceedings of the 2009 Conference, Vancouver, BC, Canada, 7–10 December 2009; Curran Associates, Inc.: New York, NY, USA; pp. 1033–1041.

- Cho, S.; Wang, J.; Lee, S. Handling outliers in non-blind image deconvolution. In Proceedings of the International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 495–502.

- Sun, L.; Cho, S.; Wang, J.; Hays, J. Edge-based blur kernel estimation using patch priors. In Proceedings of the IEEE International Conference on Computational Photography, Cambridge, MA, USA, 19–21 April 2013; pp. 1–8.

- Levin, A.; Weiss, Y.; Durand, F.; Freeman, W.T. Understanding and evaluating blind deconvolution algorithms. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2009), Miami, FL, USA, 20–25 June 2009; Volume 8, pp. 1964–1971.

- Mignotte, M. A non-local regularization strategy for image deconvolution. Pattern Recognit. Lett. 2008, 29, 2206–2212.

- Krishnan, D.; Tay, T.; Fergus, R. Blind deconvolution using a normalized sparsity measure. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 233–240.

- Pan, J.; Hu, Z.; Su, Z.; Yang, M.H. L0-regularized intensity and gradient prior for deblurring text images and beyond. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 342–355.

- Shan, Q.; Jia, J.; Agarwala, A. High-quality motion deblurring from a single image. ACM Trans. Graph. 2008, 27, 73.

- Mai, L.; Liu, F. Kernel fusion for better image deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; Volume 1, pp. 371–380.

- Levin, A.; Weiss, Y.; Durand, F.; Freeman, W.T. Efficient marginal likelihood optimization in blind deconvolution. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 2657–2664.

- Goldstein, A.; Fattal, R. Blur-kernel estimation from spectral irregularities. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 622–635.

- Cai, J.F.; Ji, H.; Liu, C.; Shen, Z. Blind motion deblurring from a single image using sparse approximation. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 104–111.

- Almeida, M.S.C.; Almeida, L.B. Blind and semi-blind deblurring of natural images. IEEE Trans. Image Process. 2010, 19, 36–52.

- Joshi, N.; Szeliski, R.; Kriegman, D.J. PSF estimation using sharp edge prediction. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 24–26.

- Jia, J. Single image motion deblurring using transparency. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007.

- Hu, Z.; Yang, M.H. Good regions to deblur. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 59–72.

- Javaran, T.A.; Hassanpour, H.; Abolghasemi, V. Local motion deblurring using an effective image prior based on both the first- and second-order gradients. Mach. Vis. Appl. 2017, 28, 431–444.

- Cho, S.; Lee, S. Fast motion deblurring. In Proceedings of the ACM SIGGRAPH Asia, Yokohama, Japan, 16–19 December 2009; p. 145.

- Xu, L.; Jia, J. Two-phase kernel estimation for robust motion deblurring. In Proceedings of the European Conference on Computer Vision (ECCV), Heraklion, Greece, 5–11 September 2010; pp. 157–170.

- Han, Y.; Kan, J. Blind color-image deblurring based on color image gradients. Signal Process. 2019, 155, 14–24.

- Zhang, K.; Zuo, W.; Gu, S.; Zhang, L. Learning deep CNN denoiser prior for image restoration. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; Volume 1, pp. 2808–2817.

- Xu, L.; Ren, J.S.J.; Liu, C.; Jia, J. Deep convolutional neural network for image deconvolution. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; Volume 1, pp. 1790–1798.

- Ghosh, D.; Xiong, F.; Sirsi, S.R.; Shaul, P.W.; Mattrey, R.F.; Hoyt, K. Toward optimization of in vivo super-resolution ultrasound imaging using size-selected microbubble contrast agents. Med. Phys. 2017, 44, 6304–6313.

- Ghosh, D.; Kaabouch, N.; Hu, W.-C. A robust iterative super-resolution mosaicking algorithm using an adaptive and directional Huber-Markov regularization. J. Vis. Commun. Image Represent. 2016, 40, 98–110.

- Ghosh, D.; Peng, J.; Brown, K.; Sirsi, S.; Mineo, C.; Shaul, P.W.; Hoyt, K. Super-resolution ultrasound imaging of skeletal muscle microvascular dysfunction in an animal model of type 2 diabetes. J. Ultrasound Med. 2019.

- Michaeli, T.; Irani, M. Blind deblurring using internal patch recurrence. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 783–798.

- Danielyan, A.; Katkovnik, V.; Egiazarian, K. BM3D frames and variational image deblurring. IEEE Trans. Image Process. 2012, 21, 1715–1728.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1956–1963.

- Yan, Y.; Ren, W.; Guo, Y.; Wang, R.; Cao, X. Image deblurring via extreme channels prior. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6978–6986.

- Pan, J.; Sun, D.; Pfister, H.; Yang, M.H. Blind image deblurring using dark channel prior. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1628–1636.

- Almeida, M.S.C.; Figueiredo, M.A.T. Blind image deblurring with unknown boundaries using the alternating direction method of multipliers. In Proceedings of the IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 586–590.

- Perrone, D.; Favaro, P. Total variation blind deconvolution: The devil is in the details. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2909–2916.

- Bao, P.; Zhang, L.; Wu, X. Canny edge detection enhancement by scale multiplication. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1485–1490.

- Almeida, M.S.C.; Figueiredo, M.A.T. New stopping criteria for iterative blind image deblurring based on residual whiteness measures. In Proceedings of the IEEE Statistical Signal Processing Workshop, Nice, France, 28–30 June 2011; pp. 337–340.

- Xu, L.; Zheng, S.; Jia, J. Unnatural L0 sparse representation for natural image deblurring. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1107–1114.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Ghosh, D.; Park, S.; Kaabouch, N.; Semke, W. Quantitative evaluation of image mosaicing in multiple scene categories. In Proceedings of the 2012 IEEE International Conference on Electro/Information Technology, Indianapolis, IN, USA, 6–8 May 2012; pp. 1–6.

- Köhler, R.; Hirsch, M.; Mohler, B.; Schölkopf, B.; Harmeling, S. Recording and playback of camera shake: Benchmarking blind deconvolution with a real-world database. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 27–40.

- Hirsch, M.; Schuler, C.J.; Harmeling, S.; Schölkopf, B. Fast removal of non-uniform camera shake. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1439–1451.

- Fergus, R.; Singh, B.; Hertzmann, A.; Roweis, S.T.; Freeman, W.T. Removing camera shake from a single photograph. ACM Trans. Graph. 2006, 25, 787–794.

- Li, L.; Pan, J.; Lai, W.S.; Gao, C.; Sang, N.; Yang, M.H. Learning a discriminative prior for blind image deblurring. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1077–1085.

| Method | SSIM | PSNR (dB) | KS |
|---|---|---|---|
| Cho et al. | 0.485 | 28.57 | 0.556 |
| Xu and Jia | 0.789 | 26.53 | 0.623 |
| Krishnan et al. | 0.654 | 31.69 | 0.583 |
| Shan et al. | 0.697 | 30.77 | 0.633 |
| Pan et al. | 0.800 | 31.72 | 0.699 |
| Perrone et al. | 0.732 | 26.86 | 0.691 |
| Xu et al. | 0.588 | 26.33 | 0.621 |
| Michaeli et al. | 0.624 | 30.10 | 0.612 |
| Zhang et al. | 0.769 | 30.53 | 0.705 |
| Ours | 0.823 | 31.82 | 0.713 |
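PSNR is conventionally defined as PSNR = 10 log10(peak² / MSE), measured between a deblurred image and its ground-truth sharp image. A minimal sketch of that standard definition, assuming intensities scaled to [0, 1] (`peak=1.0`); the peak value behind the reported numbers is not stated here, so `peak=255.0` would be the choice for 8-bit data.

```python
import numpy as np


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak**2 / MSE)."""
    ref = np.asarray(reference, dtype=float)
    est = np.asarray(estimate, dtype=float)
    mse = np.mean((ref - est) ** 2)
    if mse == 0.0:
        return float("inf")        # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))
```

A uniform error of 0.1 on a [0, 1] image gives an MSE of 0.01 and therefore a PSNR of 20 dB.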

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Han, Y.; Kan, J. Blind Image Deblurring Based on Local Edges Selection. Appl. Sci. 2019, 9, 3274. https://doi.org/10.3390/app9163274
