FnnmOS-ELM: A Flexible Neural Network Mixed Online Sequential Elm

Abstract

1. Introduction

- (1) The proposed fnnmOS-ELM fully exploits the feature representations that CNNs and RNNs learn on different datasets, and takes advantage of the strong classification ability of OS-ELM.

- (2) We extend the application of OS-ELM to more datasets, and our studies show that fnnmOS-ELM can improve the performance of CNNs or RNNs without changing the original network structure.

- (3) We explore in detail how various hyper-parameters affect the performance of the mixed model, and explain how adjusting these parameters improves its performance.

2. Related Work

3. Proposal and Implementation of FnnmOS-ELM

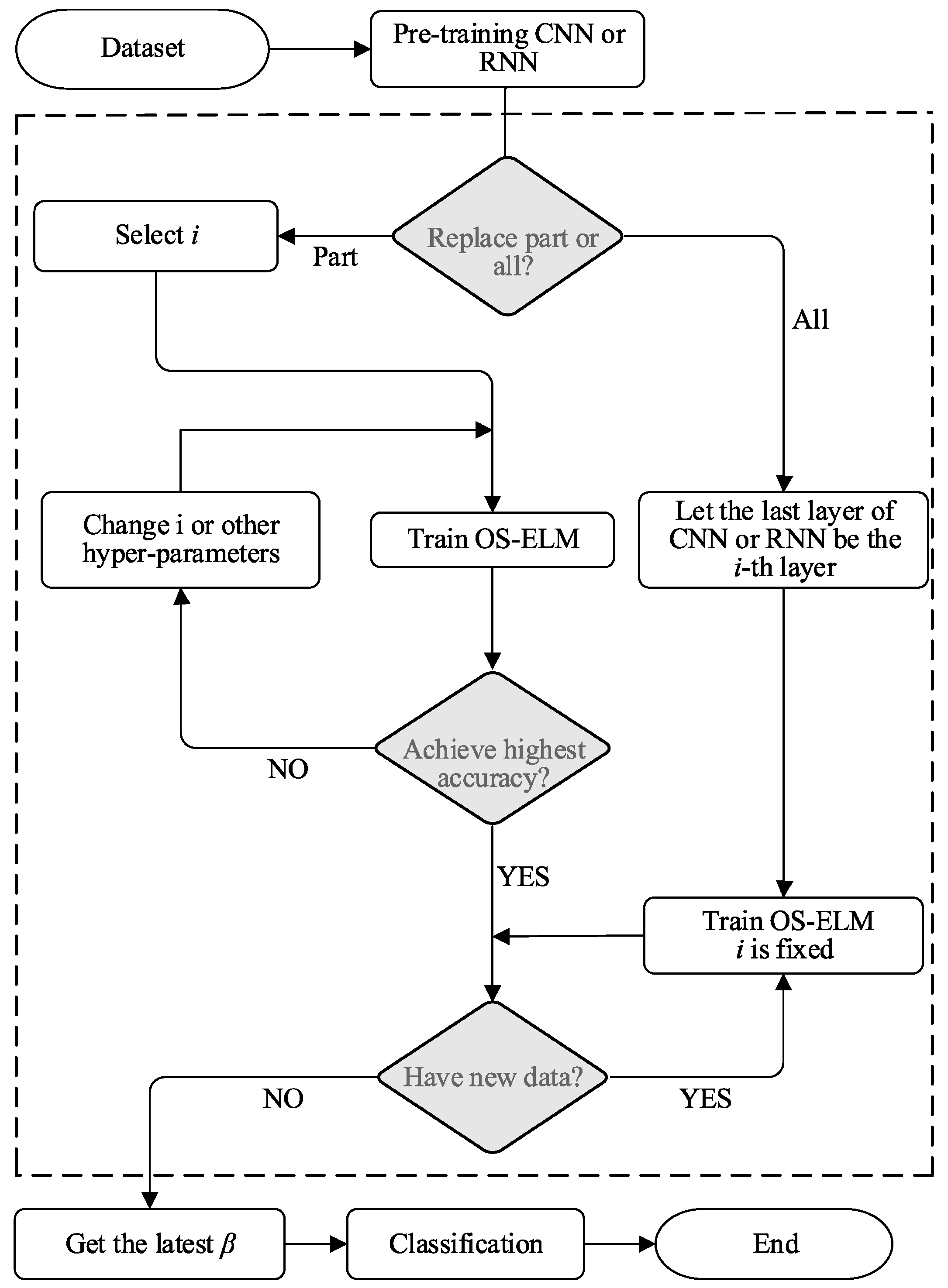

3.1. Proposal of FnnmOS-ELM

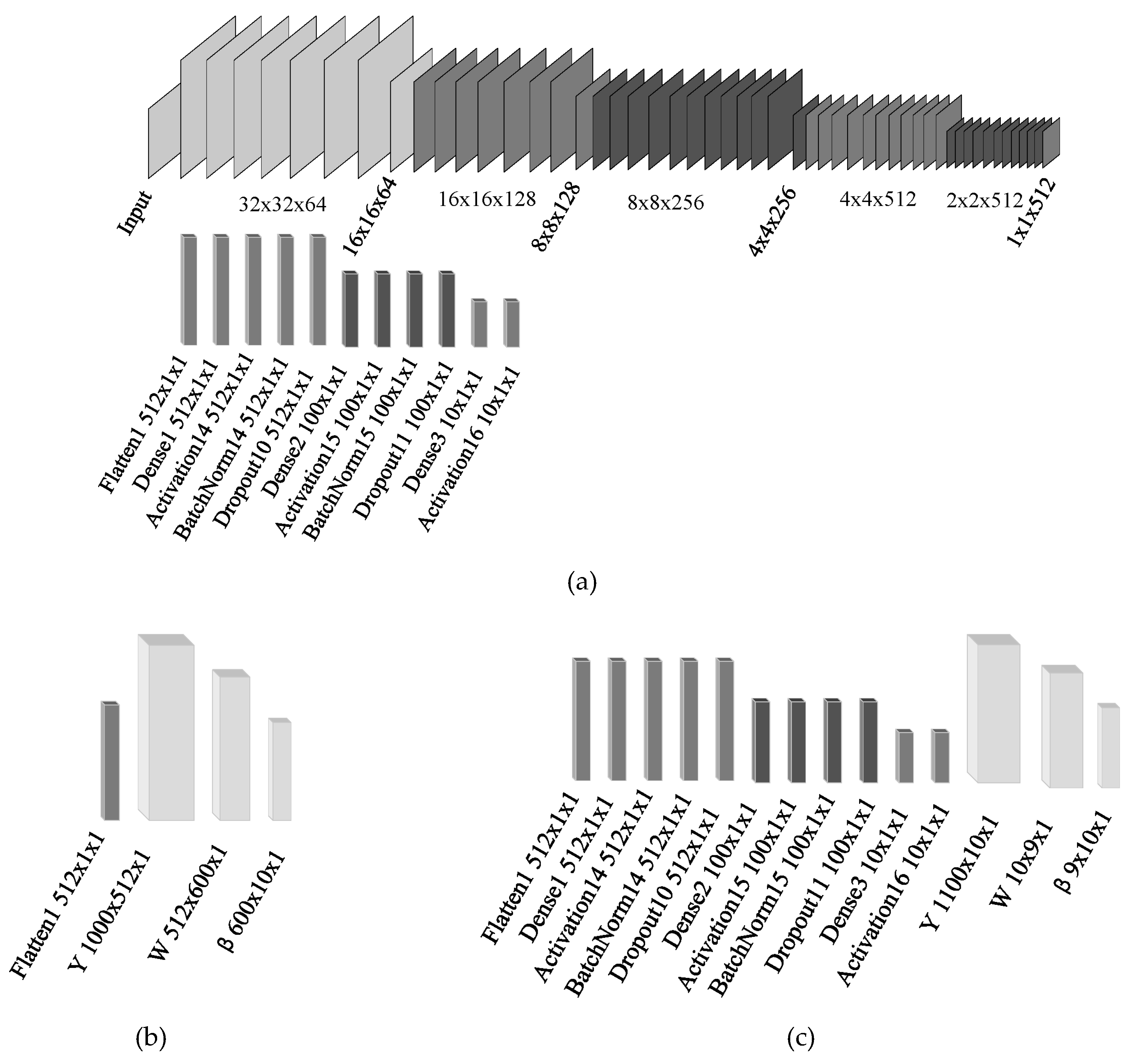

3.2. Model Structure

3.3. Training Process

Algorithm 1. Online sequential learning
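The online sequential learning step can be illustrated with a minimal NumPy sketch of the standard OS-ELM procedure of Liang et al. (2006). It is not necessarily identical to the authors' Algorithm 1. Several choices are assumptions made only for illustration: sigmoid additive hidden nodes, input weights and biases drawn uniformly from [-1, 1], a small ridge term `reg` added to the initial inverse (the original OS-ELM uses a plain inverse and requires at least as many initial samples as hidden nodes), and the hypothetical names `OSELM`, `init_fit`, and `partial_fit`. In a mixed model, the inputs `X` would presumably be features produced by a CNN or RNN; here random data stand in for them.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class OSELM:
    """Hypothetical minimal OS-ELM: random fixed hidden layer, output weights by recursive least squares."""

    def __init__(self, n_inputs, n_hidden, n_outputs, reg=1e-2, seed=0):
        rng = np.random.default_rng(seed)
        # Input weights and biases are random and never trained (assumed uniform in [-1, 1]).
        self.W = rng.uniform(-1.0, 1.0, size=(n_inputs, n_hidden))
        self.b = rng.uniform(-1.0, 1.0, size=n_hidden)
        self.reg = reg            # assumed ridge term for the initial inverse
        self.n_outputs = n_outputs
        self.P = None             # running inverse of (H^T H + reg * I)
        self.beta = None          # output weights, shape (n_hidden, n_outputs)

    def _hidden(self, X):
        # Hidden-layer output matrix H, shape (n_samples, n_hidden).
        return sigmoid(X @ self.W + self.b)

    def init_fit(self, X0, T0):
        """Initialization phase: P0 = (H0^T H0 + reg*I)^-1, beta0 = P0 H0^T T0."""
        H0 = self._hidden(X0)
        self.P = np.linalg.inv(H0.T @ H0 + self.reg * np.eye(H0.shape[1]))
        self.beta = self.P @ H0.T @ T0

    def partial_fit(self, Xk, Tk):
        """Sequential phase for one new chunk (Xk, Tk)."""
        H = self._hidden(Xk)
        # P_{k+1} = P_k - P_k H^T (I + H P_k H^T)^-1 H P_k
        K = np.linalg.inv(np.eye(H.shape[0]) + H @ self.P @ H.T)
        self.P = self.P - self.P @ H.T @ K @ H @ self.P
        # beta_{k+1} = beta_k + P_{k+1} H^T (T_k - H beta_k)
        self.beta = self.beta + self.P @ H.T @ (Tk - H @ self.beta)

    def predict(self, X):
        # Network outputs; for classification take argmax over columns.
        return self._hidden(X) @ self.beta


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.normal(size=(300, 4))                   # stand-in for extracted features
    y = (X[:, 0] + X[:, 1] > 0).astype(int)         # toy binary labels
    T = np.eye(2)[y]                                # one-hot targets
    model = OSELM(n_inputs=4, n_hidden=20, n_outputs=2, reg=0.1)
    model.init_fit(X[:60], T[:60])                  # initialization chunk
    for start in range(60, 300, 40):                # sequential chunks of 40 samples
        model.partial_fit(X[start:start + 40], T[start:start + 40])
    acc = np.mean(model.predict(X).argmax(axis=1) == y)
    print(f"training accuracy: {acc:.3f}")
```

Because the sequential phase is exact recursive least squares, the output weights after all chunks equal a single batch ridge solve over the full data with the same `reg`, which makes a convenient correctness check; only the hidden-layer output matrix for each new chunk has to be kept in memory.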

4. Experimental Design and Result Analysis

4.1. Dataset

- (1) The Iris dataset is a widely used classification benchmark for multivariate analysis and for testing the performance of linear classifiers. SVM and LR are known to perform well on linearly separable problems; in particular, SVMs made a breakthrough in binary and generalized linear classification [44]. We therefore use Iris to compare fnnmOS-ELM with these methods on a linear classification task.

- (2) IMDb is a dataset of 1000 popular movies from the last 10 years. It is often used in natural language processing for short-text sentiment analysis. LSTM [45], RNN, and other models have performed well on this task, so we test fnnmOS-ELM on the same dataset.

- (3) CIFAR-10 and CIFAR-100 have become two of the most popular image recognition benchmarks in recent years. Using them allows us to verify the performance of fnnmOS-ELM on multi-class classification with deep networks.

4.2. OS-ELM Mixed with Simple Neural Networks

4.3. OS-ELM Mixed with RNN

4.4. OS-ELM Mixed with CNN

4.5. Hyper-Parameters on CIFAR-10 Dataset

4.5.1. Impact of Batch Size D on Performance

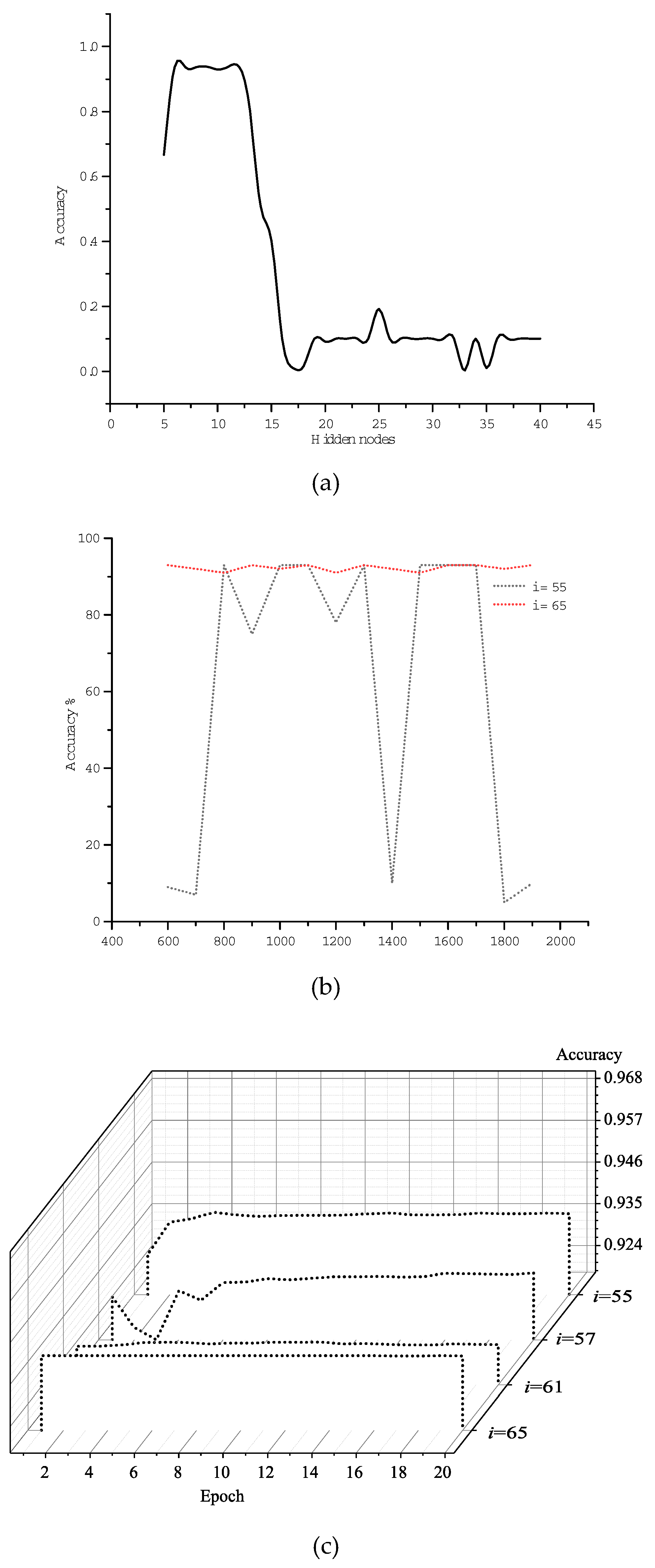

4.5.2. Influence of i on Performance

4.5.3. Influence of Hidden Nodes S on Performance

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

- Zheng, S.; Jayasumana, S.; Romera-Paredes, B.; Vineet, V.; Su, Z.; Du, D.; Huang, C.; Torr, P.H.S. Conditional random fields as recurrent neural networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1529–1537.

- Sermanet, P.; Eigen, D.; Zhang, X.; Mathieu, M.; Fergus, R.; LeCun, Y. OverFeat: Integrated recognition, localization and detection using convolutional networks. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014.

- Taigman, Y.; Yang, M.; Ranzato, M.; Wolf, L. DeepFace: Closing the gap to human-level performance in face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 1701–1708.

- Jozefowicz, R.; Zaremba, W.; Sutskever, I. An empirical exploration of recurrent network architectures. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2342–2350.

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.-r.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.; et al. Deep neural networks for acoustic modeling in speech recognition. IEEE Signal Process. Mag. 2012, 29, 82–97.

- LeCun, Y.; Bottou, L.; Orr, G.B.; Müller, K.-R. Efficient backprop. In Neural Networks: Tricks of the Trade; Lecture Notes in Computer Science; Montavon, G., Orr, G., Müller, K.R., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; Volume 1524, pp. 9–50.

- Svozil, D.; Kvasnicka, V.; Pospichal, J. Introduction to multi-layer feed-forward neural networks. Chemom. Intell. Lab. Syst. 1997, 39, 43–62.

- Huang, G.-B.; Zhu, Q.-Y.; Siew, C.-K. Extreme learning machine: A new learning scheme of feedforward neural networks. In Proceedings of the 2004 IEEE International Joint Conference on Neural Networks, Budapest, Hungary, 25–29 July 2004; IEEE: Budapest, Hungary, 2004; pp. 985–990.

- Huang, G.-B.; Siew, C.K. Extreme learning machine: RBF network case. In Proceedings of the 8th Control, Automation, Robotics and Vision Conference (ICARCV), Kunming, China, 6–9 December 2004; pp. 1029–1036.

- Huang, G.-B.; Zhu, Q.-Y.; Mao, K.; Siew, C.K.; Saratchandran, P.; Sundararajan, N. Can threshold networks be trained directly? IEEE Trans. Circuits Syst. II Express Br. 2006, 53, 187–191.

- Huang, G.-B.; Zhu, Q.-Y.; Siew, C.K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

- Huang, G.-B.; Chen, L.; Siew, C.K. Universal approximation using incremental constructive feedforward networks with random hidden nodes. IEEE Trans. Neural Netw. 2006, 17, 879–892.

- Liang, N.-Y.; Huang, G.-B.; Saratchandran, P.; Sundararajan, N. A fast and accurate online sequential learning algorithm for feedforward networks. IEEE Trans. Neural Netw. 2006, 17, 1411–1423.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Xu, R.; Tao, Y.; Lu, Z.; Zhong, Y. Attention-mechanism-containing neural networks for high-resolution remote sensing image classification. Remote Sens. 2018, 10, 1602.

- Siniscalchi, S.M.; Salerno, V.M. Adaptation to new microphones using artificial neural networks with trainable activation functions. IEEE Trans. Neural Netw. 2017, 28, 1959–1965.

- Chae, S.; Kwon, S.; Lee, D. Predicting infectious disease using deep learning and big data. Int. J. Environ. Res. Public Health 2018, 15, 1596.

- Ferrari, S.; Stengel, R.F. Smooth function approximation using neural networks. IEEE Trans. Neural Netw. 2005, 16, 24–38.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Neurocomputing: Foundations of Research. In Learning Internal Representations by Error Propagation; MIT Press: Cambridge, MA, USA, 1988.

- Xiang, C.; Ding, S.Q.; Lee, T.H. Geometrical interpretation and architecture selection of MLP. IEEE Trans. Neural Netw. 2005, 16, 84–96.

- Huang, G.-B.; Chen, Y.Q.; Babri, H.A. Classification ability of single hidden layer feedforward neural networks. IEEE Trans. Neural Netw. 2000, 11, 799–801.

- Wang, N.; Er, M.J.; Han, M. Generalized single-hidden layer feedforward networks for regression problems. IEEE Trans. Neural Netw. 2015, 26, 1161–1176.

- Gopal, S.; Fischer, M.M. Learning in single hidden-layer feedforward network models: Backpropagation in a spatial interaction modeling context. Geogr. Anal. 2010, 28, 38–55.

- Ngia, L.S.H.; Sjoberg, J.; Viberg, M. Adaptive neural nets filter using a recursive Levenberg-Marquardt search direction. In Proceedings of the Conference Record of the Thirty-Second Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 1–4 November 1998; pp. 697–701.

- Caruana, R.; Lawrence, S.; Giles, L. Overfitting in neural nets: Backpropagation, conjugate gradient, and early stopping. In Proceedings of the 13th International Conference on Neural Information Processing Systems, Denver, CO, USA, 27 November–2 December 2000; pp. 381–387.

- Huang, G.-B. Learning capability and storage capacity of two-hidden-layer feedforward networks. IEEE Trans. Neural Netw. 2003, 14, 274–281.

- Tamura, S.; Tateishi, M. Capabilities of a four-layered feedforward neural network: Four layers versus three. IEEE Trans. Neural Netw. 1997, 8, 251–255.

- Banerjee, K.S. Generalized inverse of matrices and its applications. Technometrics 1973, 15, 197.

- Bai, Z.; Huang, G.-B.; Wang, D.; Wang, H.; Westover, M.B. Sparse extreme learning machine for classification. IEEE Trans. Syst. Man Cybern. 2014, 44, 1858–1870.

- Yang, Y.; Wu, Q.M.J.; Wang, Y.; Zeeshan, K.M.; Lin, X.; Yuan, X. Data partition learning with multiple extreme learning machines. IEEE Trans. Syst. Man Cybern. 2015, 45, 1463–1475.

- Luo, J.; Vong, C.-M.; Wong, P.-K. Sparse Bayesian extreme learning machine for multi-classification. IEEE Trans. Neural Netw. 2014, 25, 836–843.

- Huang, G.-B.; Zhou, H.; Ding, X.; Zhang, R. Extreme learning machine for regression and multiclass classification. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2012, 42, 513–529.

- Liu, X.; Wang, L.; Huang, G.-B.; Zhang, J.; Yin, J. Multiple kernel extreme learning machine. Neurocomputing 2015, 149, 253–264.

- Huang, G.-B.; Bai, Z.; Kasun, L.L.C.; Vong, C.-M. Local receptive fields based extreme learning machine. IEEE Comput. Intell. Mag. 2015, 10, 18–29.

- Duan, M.; Li, K.; Yang, C.; Li, K. A hybrid deep learning CNN-ELM for age and gender classification. Neurocomputing 2018, 275, 448–461.

- Srivastava, N.; Hinton, G.E.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Blake, C.L. UCI Repository of Machine Learning Databases. Available online: http://archive.ics.uci.edu/ml/index.php (accessed on 25 June 2019).

- Daugman, J. New methods in iris recognition. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2007, 37, 1167–1175.

- Ahmed, A.; Batagelj, V.; Fu, X.; Hong, S.-H.; Merrick, D.; Mrvar, A. Visualisation and analysis of the internet movie database. In Proceedings of the 6th International Asia-Pacific Symposium on Visualization, Sydney, Australia, 5–7 February 2007; pp. 17–24.

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images; Tech. Rep. 001; Department of Computer Science, University of Toronto: Toronto, Canada, 2009.

- Ben-Hur, A.; Horn, D.; Siegelmann, H.T.; Vapnik, V. A support vector method for clustering. In Proceedings of the 13th International Conference on Neural Information Processing Systems, Barcelona, Spain, 3–7 September 2000; pp. 367–373.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2015; pp. 802–810.

- Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. In Proceedings of the 14th International Conference on Artificial Intelligence and Statistics, Ft. Lauderdale, FL, USA, 11–13 April 2011; pp. 315–323.

- Salton, G.; Buckley, C. Term-weighting approaches in automatic text retrieval. Inf. Process. Manag. 1988, 24, 513–523.

- Tang, J.; Qu, M.; Wang, M.; Zhang, M.; Yan, J.; Mei, Q. LINE: Large-scale information network embedding. In Proceedings of the 24th International Conference on World Wide Web, Florence, Italy, 18–22 May 2015; pp. 1067–1077.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 630–645.

- Clevert, D.-A.; Unterthiner, T.; Hochreiter, S. Fast and accurate deep network learning by exponential linear units (ELUs). In Proceedings of the International Conference on Learning Representations (ICLR), San Juan, Puerto Rico, 2–4 May 2016; pp. 1–14.

- Liang, M.; Hu, X. Recurrent convolutional neural network for object recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3367–3375.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Agostinelli, F.; Hoffman, M.D.; Sadowski, P.J.; Baldi, P. Learning activation functions to improve deep neural networks. arXiv 2014, arXiv:1412.6830.

- Keskar, N.S.; Mudigere, D.; Nocedal, J.; Smelyanskiy, M.; Tang, P.T.P. On large-batch training for deep learning: Generalization gap and sharp minima. arXiv 2016, arXiv:1609.04836.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

| Dataset | Classes | Training Data | Testing Data |
|---|---|---|---|
| Iris | 3 | 90 | 60 |
| IMDb | 2 | 25,000 | 25,000 |
| CIFAR-10 | 10 | 50,000 | 10,000 |
| CIFAR-100 | 100 | 50,000 | 10,000 |

| Dataset | Method | Training Time (s) | Trainable Parameters |
|---|---|---|---|
| Iris | SVM | <0.1 | - |
| | LR | <0.1 | - |
| | Decision Tree | <0.1 | - |
| | KNN | <0.1 | - |
| | Simple NN | 16.7213 | 131 |
| | fnnmOS-ELM (i = 3) | 0.5004 | 15 |
| | fnnmOS-ELM (i = 4) | 0.5084 | 15 |

| Dataset | Method | Training Time (s) | Trainable Parameters |
|---|---|---|---|
| IMDb | TF-IDF + LR | <0.1 | - |
| | TF-IDF + Multinomial NB | <0.1 | - |
| | TF-IDF + SGD | <0.1 | - |
| | LSTM | 1178 | 438,045 |
| | RNN | 16.72 | 126,993 |
| | fnnmOS-ELM (i = 4) | 1.15 | 10 |

| Dataset | Method | Trainable Parameters | Test Accuracy (%) |
|---|---|---|---|
| CIFAR-10 | ResNet-100 | 1.7 M | 93.57 |
| | ELU | >1 M | 93.45 |
| | RCNN | 0.67 M | 92.91 |
| | VGG16 | 15 M | 93.01 (0.3) |
| | ELM-LRF | >10,000 | <85.30 |
| | CNN(VGG16)-ELM | >10,000 | <90.73 |
| | fnnmOS-ELM (i = 55) | 6000 | 93.97 |
| | fnnmOS-ELM (i = 65) | 90 | 93.80 (0.1) |
| CIFAR-100 | ELU | >1 M | 75.72 |
| | RCNN | 1.87 M | 68.25 |
| | NIN+APL | 0.67 M | 69.17 |
| | VGG16 | 15 M | 69.73 (0.59) |
| | ELM-LRF | 0.1 M | <60.31 |
| | CNN(VGG16)-ELM | 0.1 M | <67.77 |
| | fnnmOS-ELM (i = 55) | 85,000 | 70.64 |
| | fnnmOS-ELM (i = 65) | 7000 | 70.67 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, X.; He, S.; Yu, J.; Wu, L.; Yue, Z. FnnmOS-ELM: A Flexible Neural Network Mixed Online Sequential Elm. Appl. Sci. 2019, 9, 3772. https://doi.org/10.3390/app9183772
