A Vision-Based Method Utilizing Deep Convolutional Neural Networks for Fruit Variety Classification in Uncertainty Conditions of Retail Sales

Abstract

1. Introduction

2. Related Work

3. Application of the Proposed Method to Fruit Variety Classification

3.1. Problem Statement

3.2. Research Method

3.2.1. CNN for Fruit Classification

3.2.2. You Only Look Once for Fruit Detection

3.2.3. Proposed Fruit Classification Method Using the Certainty Factor

3.3. Datasets

3.4. Results and Discussion

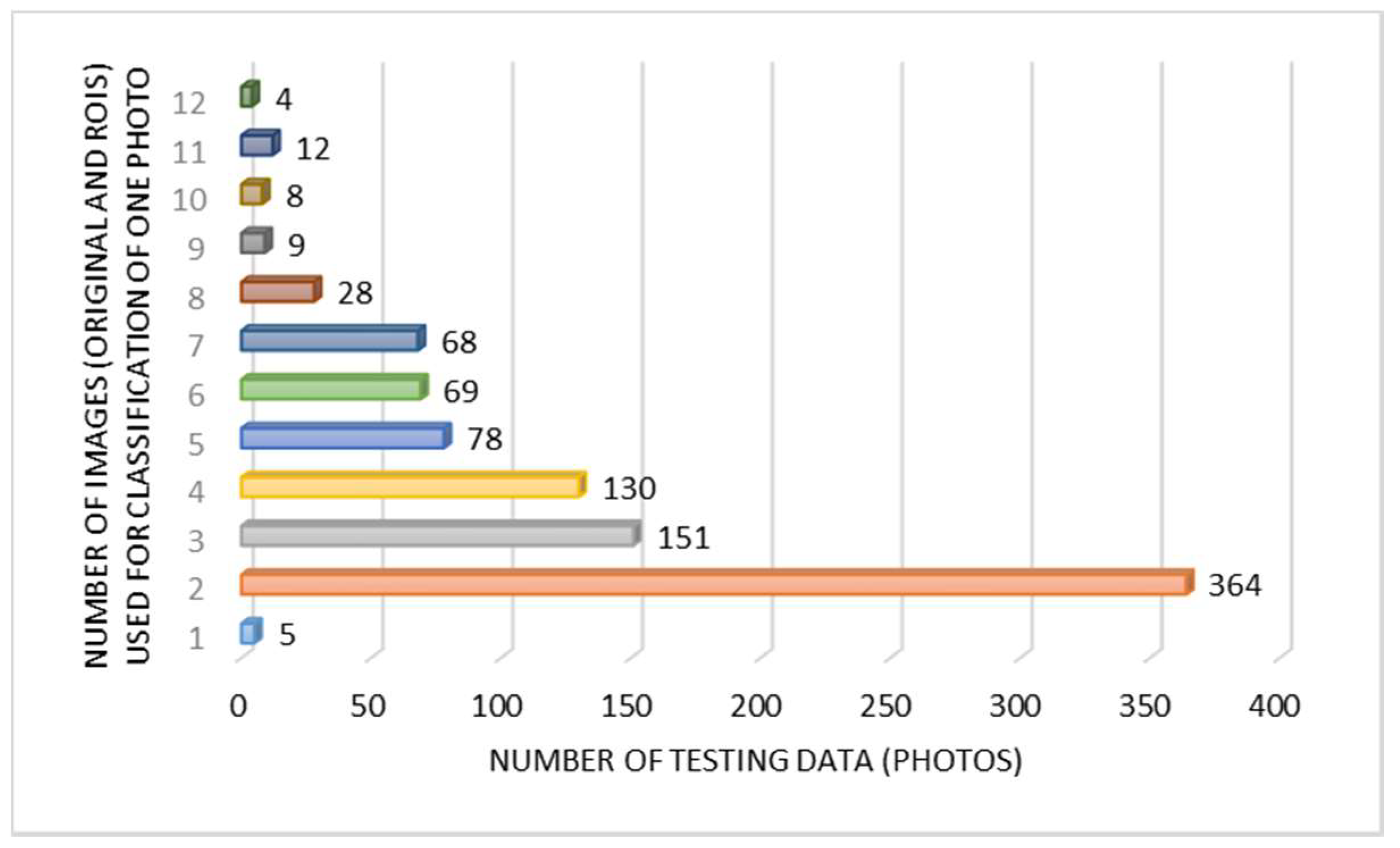

3.4.1. Original versus Region of Interest Images

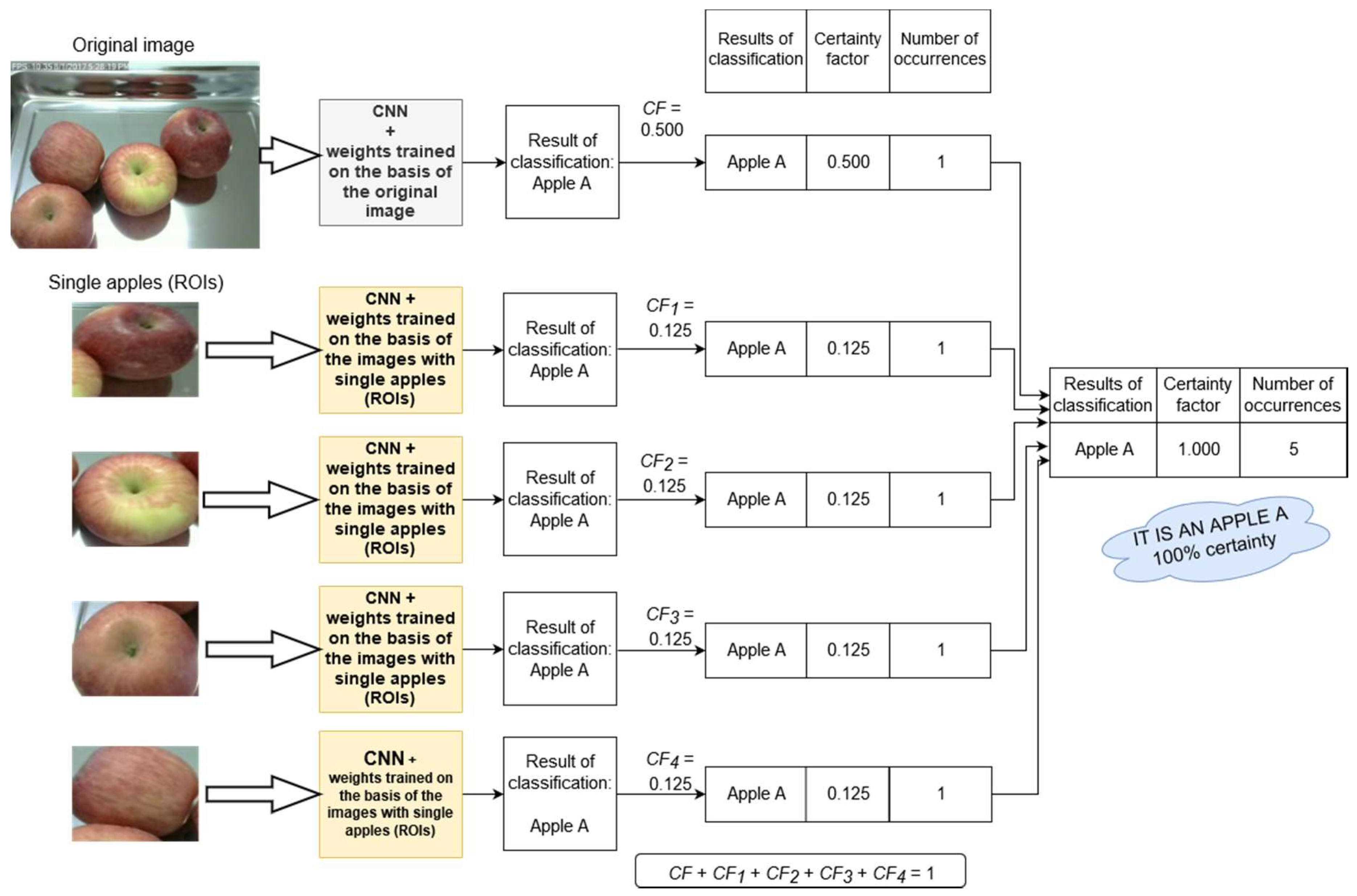

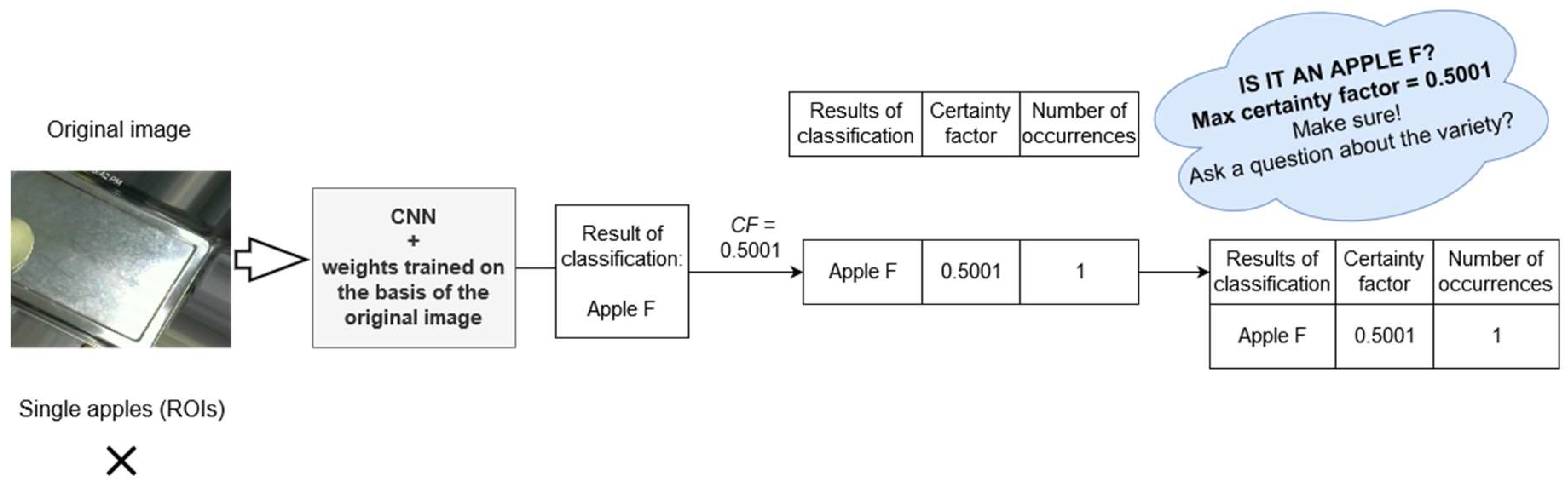

3.4.2. Proposed Fruit Classification Method Using the Certainty Factor

- an unambiguous classification (where CFmax > 0.7501, 97.95% of cases);

- an ambiguous classification (where CFmax ≤ 0.7501 and the classification is based on the original image and at least one ROI image, 1.51% of cases);

- an uncertain classification (where CFmax ≤ 0.7501 and the classification is based only on the original image, 0.54% of cases).
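The three categories above can be sketched as a small decision rule. This is a minimal sketch under stated assumptions: the section does not give the certainty-factor formula, so here the CF of each variety is *assumed* to be the mean CNN probability over the original image and all of its ROI crops. With near one-hot CNN outputs this reduces to vote fractions such as 1/3 and 2/3, which matches the CF values in the worked examples. The 0.7501 boundary comes from the certainty-factor table; `CF_THRESHOLD`, `certainty_factors`, `categorize` and `one_hot` are hypothetical names.

```python
from typing import List, Sequence, Tuple

# Boundary taken from the certainty-factor table: CFmax > 0.7501 is unambiguous.
CF_THRESHOLD = 0.7501
VARIETIES = "ABCDEF"


def certainty_factors(prob_vectors: Sequence[Sequence[float]]) -> List[float]:
    """ASSUMED definition (not given in the text): the CF of each variety is the
    mean CNN probability over the original image and all of its ROI crops."""
    n_images = len(prob_vectors)
    return [sum(p[k] for p in prob_vectors) / n_images for k in range(len(VARIETIES))]


def categorize(prob_vectors: Sequence[Sequence[float]]) -> Tuple[str, float, str]:
    """Return (predicted variety, CFmax, category). prob_vectors[0] belongs to
    the original image; any further vectors come from ROI crops."""
    cf = certainty_factors(prob_vectors)
    cf_max = max(cf)
    variety = VARIETIES[cf.index(cf_max)]
    if cf_max > CF_THRESHOLD:
        category = "unambiguous"
    elif len(prob_vectors) > 1:  # original image plus at least one ROI image
        category = "ambiguous"
    else:  # only the original image was classified
        category = "uncertain"
    return variety, cf_max, category


def one_hot(variety: str) -> List[float]:
    """Idealised CNN output that puts all probability on one variety (demo only)."""
    return [1.0 if v == variety else 0.0 for v in VARIETIES]


if __name__ == "__main__":
    # Three images split A/B/B, the pattern of example 1 in the CF results table.
    print(categorize([one_hot("A"), one_hot("B"), one_hot("B")]))
    # Four images split C/E/E/E, as in example 2: CFmax = 0.75 does not exceed 0.7501.
    print(categorize([one_hot("C"), one_hot("E"), one_hot("E"), one_hot("E")]))
```

With these idealised inputs both examples land in the ambiguous category. This appears to be why the boundary is 0.7501 rather than 0.75: a three-out-of-four split is not counted as unambiguous.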

3.4.3. Comparison of the Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Hussain, I.; He, Q.; Chen, Z. Automatic Fruit Recognition Based on DCNN for Commercial Source Trace System. Int. J. Comput. Sci. Appl. 2018, 8.

2. Hameed, K.; Chai, D.; Rassau, A. A comprehensive review of fruit and vegetable classification techniques. Image Vis. Comput. 2018, 80, 24–44.

3. Srivalli, D.S.; Geetha, A. Fruits, Vegetable and Plants Category Recognition Systems Using Convolutional Neural Networks: A Review. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2019, 5, 452–462.

4. Bolle, R.M.; Connell, J.H.; Haas, N.; Mohan, R.; Taubin, G. Veggievision: A produce recognition system. In Proceedings of the Third IEEE Workshop on Applications of Computer Vision, WACV’96, Sarasota, FL, USA, 2–4 December 1996; pp. 244–251.

5. Rachmawati, E.; Supriana, I.; Khodra, M.L. Toward a new approach in fruit recognition using hybrid RGBD features and fruit hierarchy property. In Proceedings of the 2017 4th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Yogyakarta, Indonesia, 19–21 September 2017; pp. 1–6.

6. Zhou, H.; Chen, X.; Wang, X.; Wang, L. Design of fruits and vegetables online inspection system based on vision. J. Phys. Conf. Ser. 2018, 1074, 012160.

7. Garcia, F.; Cervantes, J.; Lopez, A.; Alvarado, M. Fruit Classification by Extracting Color Chromaticity, Shape and Texture Features: Towards an Application for Supermarkets. IEEE Lat. Am. Trans. 2016, 14, 3434–3443.

8. Yang, M.-M.; Kichida, R. A study on classification of fruit type and fruit disease. In Proceedings of the 13th Annual International Conference on Electronics, Electrical Engineering and Information Science (EEEIS 2017), Advances in Engineering Research (AER), Guangzhou, Guangdong, China, 8–10 September 2017; pp. 496–500.

9. Zhang, Y.-D.; Wang, S.; Ji, G.; Phillips, P. Fruit classification using computer vision and feedforward neural network. J. Food Eng. 2014, 143, 167–177.

10. Wang, S.; Zhang, Y.; Ji, G.; Yang, J.; Wu, J.; Wei, L. Fruit Classification by Wavelet-Entropy and Feedforward Neural Network Trained by Fitness-Scaled Chaotic ABC and Biogeography-Based Optimization. Entropy 2015, 17, 5711–5728.

11. Zhang, Y.; Phillips, P.; Wang, S.; Ji, G.; Yang, J.; Wu, J. Fruit classification by biogeography-based optimization and feedforward neural network. Expert Syst. 2016, 33, 239–253.

12. Sakai, Y.; Oda, T.; Ikeda, M.; Barolli, L. A Vegetable Category Recognition System Using Deep Neural Network. In Proceedings of the 2016 10th International Conference on Innovative Mobile and Internet Services in Ubiquitous Computing (IMIS), Fukuoka, Japan, 6–8 July 2016; pp. 189–192.

13. Femling, F.; Olsson, A.; Alonso-Fernandez, F. Fruit and Vegetable Identification Using Machine Learning for Retail Applications. In Proceedings of the 14th International Conference on Signal Image Technology and Internet Based Systems, SITIS 2018, Gran Canaria, Spain, 26–29 November 2018; pp. 9–15.

14. Hamid, N.N.A.A.; Rabiatul, A.R.; Zaidah, I. Comparing bags of features, conventional convolutional neural network and AlexNet for fruit recognition. Indones. J. Electr. Eng. Comput. Sci. 2019, 14, 333–339.

15. Hossain, M.S.; Al-Hammadi, M.; Muhammad, G. Automatic Fruit Classification Using Deep Learning for Industrial Applications. IEEE Trans. Ind. Inform. 2019, 15, 1027–1034.

16. Muresan, H.; Oltean, M. Fruit recognition from images using deep learning. Acta Univ. Sapientiae 2018, 10, 26–42.

17. Zhang, Y.-D.; Dong, Z.; Chen, X.; Jia, W.; Du, S.; Muhammad, K.; Wang, S.-H. Image based fruit category classification by 13-layer deep convolutional neural network and data augmentation. Multimed. Tools Appl. 2017, 78, 3613–3632.

18. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

19. Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

20. Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Detection of Apple Lesions in Orchards Based on Deep Learning Methods of CycleGAN and YOLOV3-Dense. J. Sens. 2019, 2019.

21. Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497v3.

22. Khan, R.; Debnath, R. Multi Class Fruit Classification Using Efficient Object Detection and Recognition Techniques. Int. J. Image Graph. Signal Process. 2019, 8, 1–18.

23. Hussain, I.; Wu, W.L.; Hua, H.Q.; Hussain, N. Intra-Class Recognition of Fruits Using DCNN for Commercial Trace Back-System. In Proceedings of the International Conference on Multimedia Systems and Signal Processing (ICMSSP 2019), Guangzhou, China, 10–12 May 2019.

24. Jarrett, K.; Kavukcuoglu, K.; Ranzato, M.; LeCun, Y. What is the best multi-stage architecture for object recognition? In Proceedings of the International Conference on Computer Vision (ICCV’09), Kyoto, Japan, 29 September–2 October 2009.

25. Qian, R.; Yue, Y.; Coenen, F.; Zhang, B. Traffic sign recognition with convolutional neural network based on max pooling positions. In Proceedings of the 12th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Changsha, China, 13–15 August 2016; pp. 578–582.

26. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

27. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

28. Hussain, I.; He, Q.; Chen, Z.; Xie, W. Fruit Recognition dataset. Zenodo 2018.

29. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

30. Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region based fully convolutional networks. arXiv 2016, arXiv:1605.06409.

31. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

| Layer | Purpose | Filter | No. of Filters | Weights | Bias | Activations (Output Size) |
|---|---|---|---|---|---|---|
| 1 | Image input layer | | | | | 150 × 150 × 3 |
| 2 | Convolution + ReLU | 3 × 3 | 32 | 3 × 3 × 3 × 32 | 1 × 1 × 32 | 148 × 148 × 32 |
| 3 | Max pooling | 2 × 2 | | | | 74 × 74 × 32 |
| 4 | Convolution + ReLU | 3 × 3 | 64 | 3 × 3 × 32 × 64 | 1 × 1 × 64 | 72 × 72 × 64 |
| 5 | Max pooling | 2 × 2 | | | | 36 × 36 × 64 |
| 6 | Flatten | | | | | 1 × 1 × 82,944 |
| 7 | Dropout | | | | | 1 × 1 × 82,944 |
| 8 | Fully connected + ReLU | | | 64 × 82,944 | 64 × 1 | 1 × 1 × 64 |
| 9 | Fully connected + Softmax | | | 6 × 64 | 6 × 1 | 1 × 1 × 6 |
| Output layer | | | | | | 1 × 1 × 6 |
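The output sizes and weight shapes in the architecture table follow from unpadded ("valid") 3 × 3 convolutions with stride 1 and non-overlapping 2 × 2 max pooling. That padding and stride are inferred from the 150 → 148 → 74 size progression rather than stated in the text; in particular, the 148 × 148 output of the first convolution implies a 150 × 150 × 3 input. The plain-Python sketch below recomputes each layer's output shape and trainable-parameter count; `LAYERS` and `trace` are illustrative names, not the authors' code.

```python
from typing import List, Optional, Tuple

# (layer name, kind, argument): conv -> filters, pool -> window, dense -> units.
LAYERS: List[Tuple[str, str, Optional[int]]] = [
    ("conv1", "conv", 32),
    ("pool1", "pool", 2),
    ("conv2", "conv", 64),
    ("pool2", "pool", 2),
    ("flatten", "flatten", None),
    ("dropout", "dropout", None),  # no weights, shape unchanged
    ("fc1", "dense", 64),
    ("fc2", "dense", 6),  # softmax over the six apple varieties
]


def trace(input_shape: Tuple[int, ...] = (150, 150, 3),
          kernel: int = 3) -> List[Tuple[str, Tuple[int, ...], int]]:
    """Return (layer, output shape, trainable parameters) for each layer,
    assuming unpadded stride-1 convolutions and non-overlapping max pooling."""
    shape = input_shape
    rows = []
    for name, kind, arg in LAYERS:
        params = 0
        if kind == "conv":
            h, w, c = shape
            params = kernel * kernel * c * arg + arg  # weights + one bias per filter
            shape = (h - kernel + 1, w - kernel + 1, arg)
        elif kind == "pool":
            h, w, c = shape
            shape = (h // arg, w // arg, c)
        elif kind == "flatten":
            size = 1
            for d in shape:
                size *= d
            shape = (size,)
        elif kind == "dense":
            params = shape[0] * arg + arg  # weight matrix + bias vector
            shape = (arg,)
        rows.append((name, shape, params))
    return rows


if __name__ == "__main__":
    for name, shape, params in trace():
        print(f"{name:8s} {str(shape):16s} {params:>10,d}")
    print("total trainable parameters:", sum(p for _, _, p in trace()))
```

Run as a script, it prints one row per layer. The first fully connected layer holds about 5.31 million of the roughly 5.33 million trainable parameters.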

| Apple Varieties | Number of Images |
|---|---|
| Apple A | 692 |
| Apple B | 740 |
| Apple C | 1002 |
| Apple D | 1033 |
| Apple E | 664 |
| Apple F | 2030 |

| Type of Data | Variety A | Variety B | Variety C | Variety D | Variety E | Variety F | All Varieties |
|---|---|---|---|---|---|---|---|
| Training data | | | | | | | |
| Number of images with apples (ROIs) | 1359 | 1862 | 1992 | 2290 | 1698 | 3088 | 12,289 |
| Number of original images | 484 | 518 | 701 | 723 | 464 | 1421 | 4311 |
| Total number of images | 1843 | 2380 | 2693 | 3013 | 2162 | 4509 | 16,600 |
| Validation data | | | | | | | |
| Number of images with apples (ROIs) | 351 | 382 | 411 | 502 | 332 | 609 | 2587 |
| Number of original images | 104 | 111 | 150 | 155 | 100 | 304 | 924 |
| Total number of images | 455 | 493 | 561 | 657 | 432 | 913 | 3511 |
| Testing data | | | | | | | |
| Number of images with apples (ROIs) | 307 | 416 | 413 | 494 | 351 | 644 | 2625 |
| Number of original images | 104 | 111 | 151 | 155 | 100 | 305 | 926 |
| Total number of images | 411 | 527 | 564 | 649 | 451 | 949 | 3551 |
| Total number of images (all sets) | | | | | | | 23,662 |
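The two dataset tables are mutually consistent. For each variety, the original images in the three splits add up to the count in the first table (6161 photographs in total), and roughly 70%, 15% and 15% of the originals went into training, validation and testing. The check below recomputes these figures from the tables. The 70/15/15 reading is derived from the counts rather than stated by the authors, and the dictionary layout and function names are illustrative only.

```python
from typing import Dict

# Per-variety counts (varieties A..F) copied from the dataset tables.
SPLITS = {
    "training": {"rois": [1359, 1862, 1992, 2290, 1698, 3088],
                 "original": [484, 518, 701, 723, 464, 1421]},
    "validation": {"rois": [351, 382, 411, 502, 332, 609],
                   "original": [104, 111, 150, 155, 100, 304]},
    "testing": {"rois": [307, 416, 413, 494, 351, 644],
                "original": [104, 111, 151, 155, 100, 305]},
}
ORIGINALS_PER_VARIETY = [692, 740, 1002, 1033, 664, 2030]


def split_totals() -> Dict[str, int]:
    """Total images (original photographs + ROI crops) in each split."""
    return {name: sum(d["rois"]) + sum(d["original"]) for name, d in SPLITS.items()}


def original_share() -> Dict[str, float]:
    """Fraction of all original photographs assigned to each split."""
    total = sum(ORIGINALS_PER_VARIETY)
    return {name: sum(d["original"]) / total for name, d in SPLITS.items()}


def originals_consistent() -> bool:
    """Do the per-split original counts add up to each variety's image count?"""
    return all(
        sum(d["original"][i] for d in SPLITS.values()) == n
        for i, n in enumerate(ORIGINALS_PER_VARIETY)
    )


if __name__ == "__main__":
    totals = split_totals()
    print(totals, "overall:", sum(totals.values()))
    print({k: round(v, 3) for k, v in original_share().items()})
    print("per-variety originals consistent:", originals_consistent())
```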

| Training and Validation Data | Training Images (Original / ROIs) | Validation Images (Original / ROIs) | Test Subset | Test Images | Correct Classifications | Wrong Classifications | Accuracy |
|---|---|---|---|---|---|---|---|
| CNN, original images as training and validation data | 4311 / 0 | 924 / 0 | Original images | 926 | 924 | 2 | 99.78% |
| | | | Images with apples (ROIs) | 2625 | 1883 | 742 | 71.73% |
| | | | All images | 3551 | 2807 | 744 | 79.05% |
| CNN, images with apples as training and validation data | 0 / 12,289 | 0 / 2587 | Original images | 926 | 317 | 609 | 34.23% |
| | | | Images with apples (ROIs) | 2625 | 2561 | 64 | 97.56% |
| | | | All images | 3551 | 2878 | 673 | 81.05% |
| CNN, original images and images with apples as training and validation data | 4311 / 12,289 | 924 / 2587 | Original images | 926 | 908 | 18 | 98.06% |
| | | | Images with apples (ROIs) | 2625 | 2512 | 113 | 95.70% |
| | | | All images | 3551 | 3420 | 131 | 96.31% |
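Every accuracy in the results table is the number of correct classifications divided by the number of test images. The "All images" rows pool the 926 original and 2625 ROI test images, so they are weighted toward the larger ROI subset. For the CNN trained on original images, the pooled 79.05% is well below the 85.76% that a plain average of 99.78% and 71.73% would give. The sketch below recomputes the table from its counts; `accuracy`, `pooled_and_mean` and `RESULTS` are illustrative names.

```python
from typing import Tuple

Counts = Tuple[int, int]  # (correct, wrong)


def accuracy(correct: int, wrong: int) -> float:
    """Accuracy in percent: correct classifications over all test images."""
    return 100.0 * correct / (correct + wrong)


def pooled_and_mean(original: Counts, rois: Counts) -> Tuple[float, float]:
    """Pooled accuracy over both test subsets vs. the plain mean of the two."""
    pooled = accuracy(original[0] + rois[0], original[1] + rois[1])
    mean_of_two = (accuracy(*original) + accuracy(*rois)) / 2
    return pooled, mean_of_two


# (correct, wrong) on the 926 original and 2625 ROI test images, from the table.
RESULTS = {
    "trained on original images": ((924, 2), (1883, 742)),
    "trained on images with apples": ((317, 609), (2561, 64)),
    "trained on both": ((908, 18), (2512, 113)),
}

if __name__ == "__main__":
    for model, (orig, rois) in RESULTS.items():
        pooled, mean_of_two = pooled_and_mean(orig, rois)
        print(f"{model:30s} original {accuracy(*orig):6.2f}%  ROIs {accuracy(*rois):6.2f}%  "
              f"all (pooled) {pooled:6.2f}%  mean of two {mean_of_two:6.2f}%")
```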

| No. | Result Obtained | Correct Result |
|---|---|---|
| 1 | variety B (probability of the sample belonging to this variety: 0.8393) | variety A |
| 2 | variety E (probability of the sample belonging to this variety: 0.9999) | variety C |

| No. | Result Obtained | Correct Result |
|---|---|---|
| 1 | variety A (CF: 0.3333); variety B (CF: 0.6667); varieties C, D, E, F (CF: 0) | variety A |
| 2 | varieties A, B (CF: 0); variety C (CF: 0.2500); variety D (CF: 0); variety E (CF: 0.7500); variety F (CF: 0) | variety C |

| Value of Max Certainty Factor | Number of Correct Classifications | Number of Incorrect Classifications | Number of Classifications Obtained Based on One Image | Total Number of Classifications | Number of Unambiguous Classifications | Number of Ambiguous Classifications | Number of Uncertain Classifications |
|---|---|---|---|---|---|---|---|
| (1) | (2) | (3) | (4) | (5) = (2) + (3) | (6) | (7) | (8) |
| (0, 0.5000] | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| (0.5000, 0.5500] | 7 | 0 | 5 | 7 | 0 | 2 | 5 |
| (0.5500, 0.6000] | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| (0.6000, 0.6500] | 1 | 0 | 0 | 1 | 0 | 1 | 0 |
| (0.6500, 0.7000] | 2 | 1 | 0 | 3 | 0 | 3 | 0 |
| (0.7000, 0.7501] | 7 | 1 | 0 | 8 | 0 | 8 | 0 |
| (0.7501, 0.8000] | 2 | 0 | 0 | 2 | 2 | 0 | 0 |
| (0.8000, 0.8500] | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| (0.8500, 0.9000] | 15 | 0 | 0 | 15 | 15 | 0 | 0 |
| (0.9000, 0.9500] | 15 | 0 | 0 | 15 | 15 | 0 | 0 |
| (0.9500, 1] | 875 | 0 | 0 | 875 | 875 | 0 | 0 |
| Sum | 924 | 2 | 5 | 926 | 907 | 14 | 5 |
| Sum (%) | | | | | 97.95% | 1.51% | 0.54% |
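The certainty-factor table can be checked row by row. In every row, correct plus incorrect classifications equal the total in column (5), and that total in turn splits into unambiguous, ambiguous and uncertain classifications. Column (4) is therefore a subset count (it coincides with the uncertain column) rather than a third addend. The percentages in the last row follow from dividing the column sums by the 926 test images. The sketch below performs these checks; `ROWS` and the helper names are illustrative.

```python
from typing import Dict

# Rows of the certainty-factor table:
# (CFmax interval, correct, incorrect, one image, unambiguous, ambiguous, uncertain)
ROWS = [
    ("(0, 0.5000]", 0, 0, 0, 0, 0, 0),
    ("(0.5000, 0.5500]", 7, 0, 5, 0, 2, 5),
    ("(0.5500, 0.6000]", 0, 0, 0, 0, 0, 0),
    ("(0.6000, 0.6500]", 1, 0, 0, 0, 1, 0),
    ("(0.6500, 0.7000]", 2, 1, 0, 0, 3, 0),
    ("(0.7000, 0.7501]", 7, 1, 0, 0, 8, 0),
    ("(0.7501, 0.8000]", 2, 0, 0, 2, 0, 0),
    ("(0.8000, 0.8500]", 0, 0, 0, 0, 0, 0),
    ("(0.8500, 0.9000]", 15, 0, 0, 15, 0, 0),
    ("(0.9000, 0.9500]", 15, 0, 0, 15, 0, 0),
    ("(0.9500, 1]", 875, 0, 0, 875, 0, 0),
]


def column_sum(index: int) -> int:
    """Sum of one numeric column (1 = correct, ..., 6 = uncertain)."""
    return sum(row[index] for row in ROWS)


def rows_consistent() -> bool:
    """Correct + incorrect equals unambiguous + ambiguous + uncertain in every row."""
    return all(r[1] + r[2] == r[4] + r[5] + r[6] for r in ROWS)


def category_shares() -> Dict[str, float]:
    """Percentage of all classifications falling into each category."""
    total = column_sum(1) + column_sum(2)
    return {
        "unambiguous": 100.0 * column_sum(4) / total,
        "ambiguous": 100.0 * column_sum(5) / total,
        "uncertain": 100.0 * column_sum(6) / total,
    }


if __name__ == "__main__":
    print("rows consistent:", rows_consistent())
    print({k: round(v, 2) for k, v in category_shares().items()})
```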

| Predicted Apple Variety | |||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| A | B | C | D | E | F | ||||||||||||||

| CFmax = 1 | CFmax ∈ (0.7501, 1) | CFmax ≤ 0.7501 | CFmax = 1 | CFmax ∈ (0.7501, 1) | CFmax ≤ 0.7501 | CFmax = 1 | CFmax ∈ (0.7501, 1) | CFmax ≤ 0.7501 | CFmax = 1 | CFmax ∈ (0.7501, 1) | CFmax ≤ 0.7501 | CFmax = 1 | CFmax ∈ (0.7501, 1) | CFmax ≤ 0.7501 | CFmax = 1 | CFmax ∈ (0.7501, 1) | CFmax ≤ 0.7501 | ||

| Actual Apple Variety | A | 97 | 1 | 5 | 1 | ||||||||||||||

| B | 102 | 7 | 2 | ||||||||||||||||

| C | 144 | 4 | 2 | 1 | |||||||||||||||

| D | 148 | 4 | 3 | ||||||||||||||||

| E | 90 | 8 | 2 | ||||||||||||||||

| F | 302 | 0 | 3 | ||||||||||||||||

| correct classification | |||||||||||||||||||

| incorrect classification | |||||||||||||||||||

| Number of Apples in the Image | YOLO V3, Average Execution Time (ms) | 9-Layer CNN for Original Image, Average Execution Time (ms) | 9-Layer CNN for ROIs, Average Execution Time (ms) | Presented Method, Average Execution Time (ms) |
|---|---|---|---|---|
| (1) | (2) | (3) | (4) | (5) = (2) + (3) + (4) |
| 1 | 200.97 | 96.96 | 92.97 | 390.90 |
| 2 | 209.90 | 116.96 | 146.95 | 473.82 |
| 3 | 208.96 | 115.00 | 159.95 | 483.91 |
| 4 | 204.96 | 100.97 | 167.96 | 473.89 |
| 5 | 208.92 | 94.95 | 182.94 | 486.81 |
| 6 | 199.91 | 85.97 | 188.90 | 474.79 |
| 7 | 211.90 | 92.00 | 242.90 | 546.80 |
| 8 | 195.96 | 108.96 | 281.88 | 586.81 |
| 9 | 216.93 | 107.97 | 302.86 | 627.76 |
| 10 | 201.91 | 78.00 | 329.86 | 609.77 |
| 11 | 215.96 | 90.00 | 329.97 | 635.92 |
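In the execution-time table, only the ROI stage grows with the number of apples. The YOLO V3 and original-image CNN times stay roughly flat, while the CNN time for ROIs rises from about 93 ms to 330 ms. A least-squares line through the published averages puts the marginal cost at roughly 24 ms per additional apple. This fit is an illustration computed from the table, not a figure reported by the authors. The sketch also confirms that column (5) matches (2) + (3) + (4) to within 0.01 ms of rounding; the names are illustrative.

```python
from typing import Sequence, Tuple

# Average execution times (ms) from the table, for images with 1..11 apples.
APPLES = list(range(1, 12))
YOLO = [200.97, 209.90, 208.96, 204.96, 208.92, 199.91, 211.90, 195.96, 216.93, 201.91, 215.96]
CNN_ORIGINAL = [96.96, 116.96, 115.00, 100.97, 94.95, 85.97, 92.00, 108.96, 107.97, 78.00, 90.00]
CNN_ROIS = [92.97, 146.95, 159.95, 167.96, 182.94, 188.90, 242.90, 281.88, 302.86, 329.86, 329.97]
TOTAL = [390.90, 473.82, 483.91, 473.89, 486.81, 474.79, 546.80, 586.81, 627.76, 609.77, 635.92]


def least_squares(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def max_total_mismatch() -> float:
    """Largest gap between column (5) and (2) + (3) + (4); nonzero only from rounding."""
    return max(abs(y + o + r - t) for y, o, r, t in zip(YOLO, CNN_ORIGINAL, CNN_ROIS, TOTAL))


if __name__ == "__main__":
    for label, ys in [("YOLO V3", YOLO), ("CNN, original", CNN_ORIGINAL), ("CNN, ROIs", CNN_ROIS)]:
        slope, intercept = least_squares(APPLES, ys)
        print(f"{label:14s} {slope:+7.2f} ms per extra apple (intercept {intercept:.1f} ms)")
    print(f"largest rounding gap in column (5): {max_total_mismatch():.2f} ms")
```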

| Architecture | Number of Apple Class Detections | Average Processing Time per Image (ms) |
|---|---|---|
| YOLO V3 [18] | 2735 | 182.17 |
| Faster R-CNN Inception v2 [21] | 1168 | 96.15 |
| SSD Inception v2 [29] | 1006 | 46.61 |
| R-FCN ResNet101 [30] | 2321 | 190.13 |
| MobileNetV2 + SSDLite [31] | 1265 | 42.53 |

| Apple Variety | Number of Test Images | Accuracy (%), DCNN Based on [23] | Accuracy (%), Proposed Method |
|---|---|---|---|
| Apple A | 104 | 98.08 | 99.04 |
| Apple B | 111 | 99.10 | 100.00 |
| Apple C | 151 | 100.00 | 99.34 |
| Apple D | 155 | 100.00 | 100.00 |
| Apple E | 100 | 100.00 | 100.00 |
| Apple F | 305 | 100.00 | 100.00 |
| Total / Average (unweighted over varieties) | 926 | 99.53 | 99.73 |
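The last row of the comparison table averages the six per-variety accuracies without weighting them by the number of test images: for example, (98.08 + 99.10 + 4 × 100) / 6 = 99.53. Pooling over all 926 test images instead gives 99.68% (923 correct) for the DCNN based on [23] and 99.78% (924 correct) for the proposed method. The latter agrees with the 924 correct classifications in the certainty-factor table. In the sketch, the correct counts are recovered by rounding accuracy × number of test images, which is unambiguous at two-decimal precision for these sample sizes; the names are illustrative.

```python
from typing import Dict

# Test images per variety and per-variety accuracy (%) from the comparison table.
TEST_COUNTS = {"A": 104, "B": 111, "C": 151, "D": 155, "E": 100, "F": 305}
ACCURACY = {
    "DCNN based on [23]": {"A": 98.08, "B": 99.10, "C": 100.0, "D": 100.0, "E": 100.0, "F": 100.0},
    "proposed method": {"A": 99.04, "B": 100.0, "C": 99.34, "D": 100.0, "E": 100.0, "F": 100.0},
}


def macro_average(acc: Dict[str, float]) -> float:
    """Unweighted mean of per-variety accuracies (how the table's last row is formed)."""
    return sum(acc.values()) / len(acc)


def correct_count(acc: Dict[str, float]) -> int:
    """Recover correct classifications by rounding accuracy x test count per variety."""
    return sum(round(acc[v] / 100.0 * n) for v, n in TEST_COUNTS.items())


def micro_average(acc: Dict[str, float]) -> float:
    """Pooled accuracy over all 926 test images."""
    return 100.0 * correct_count(acc) / sum(TEST_COUNTS.values())


if __name__ == "__main__":
    for method, acc in ACCURACY.items():
        print(f"{method:20s} macro {macro_average(acc):.2f}%  "
              f"pooled {micro_average(acc):.2f}% ({correct_count(acc)}/926)")
```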

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Rudnik, K.; Michalski, P. A Vision-Based Method Utilizing Deep Convolutional Neural Networks for Fruit Variety Classification in Uncertainty Conditions of Retail Sales. Appl. Sci. 2019, 9, 3971. https://doi.org/10.3390/app9193971
