The Design of an Automated System for the Analysis of the Activity and Emotional Patterns of Dogs with Wearable Sensors Using Machine Learning

Abstract

1. Introduction

2. Related Work

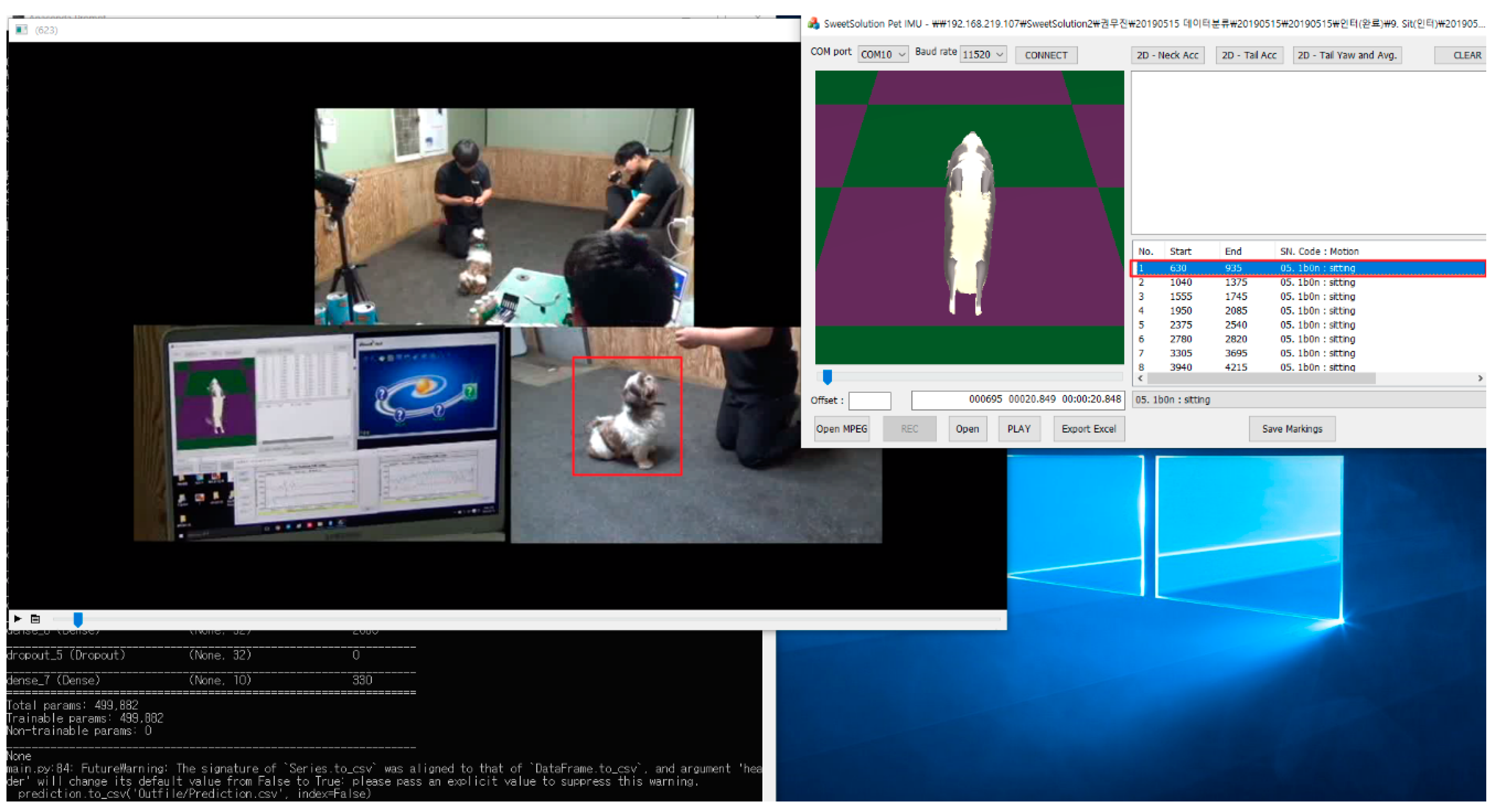

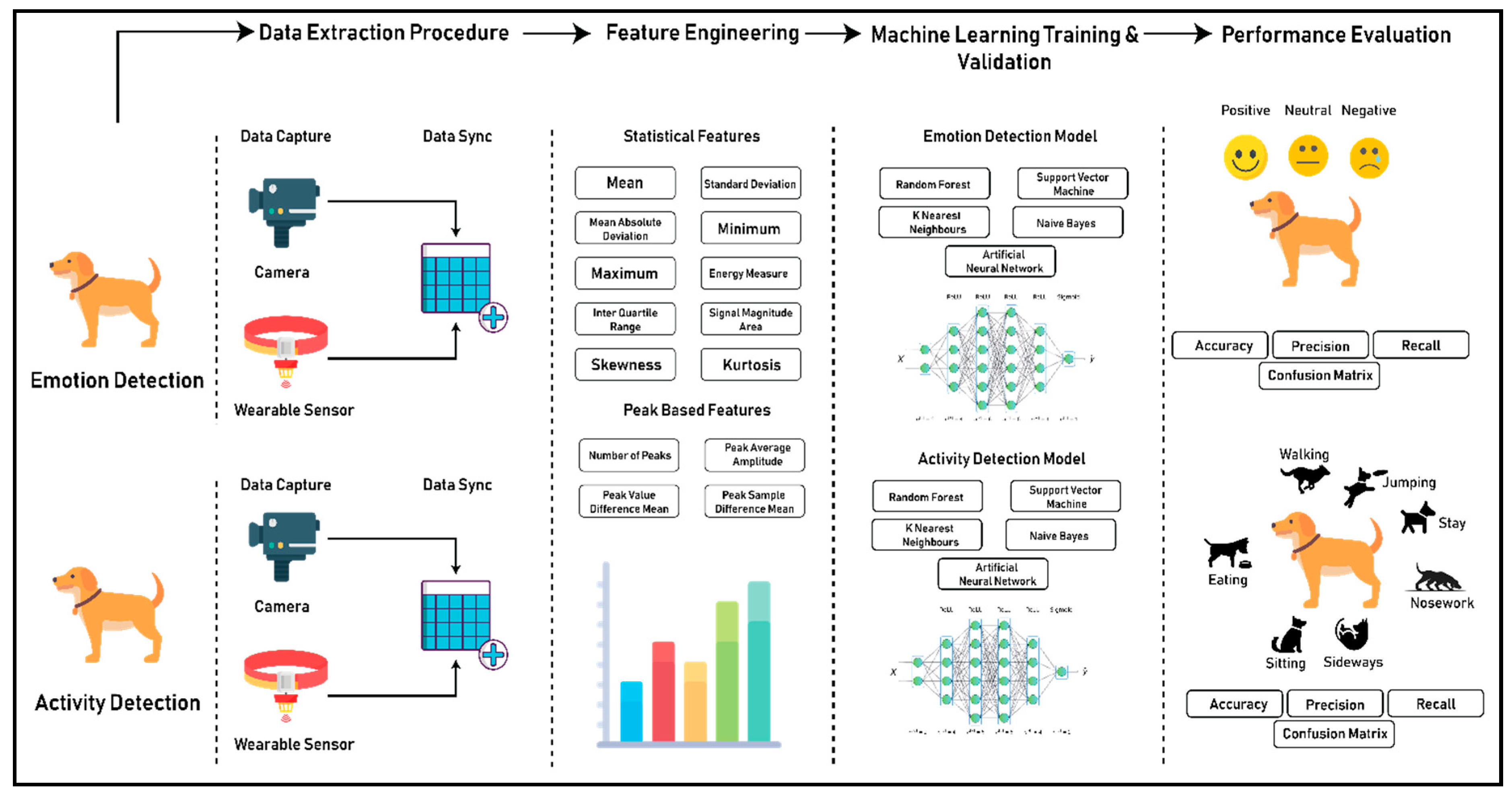

3. Data Collection and Experimental Procedure

4. Methodology

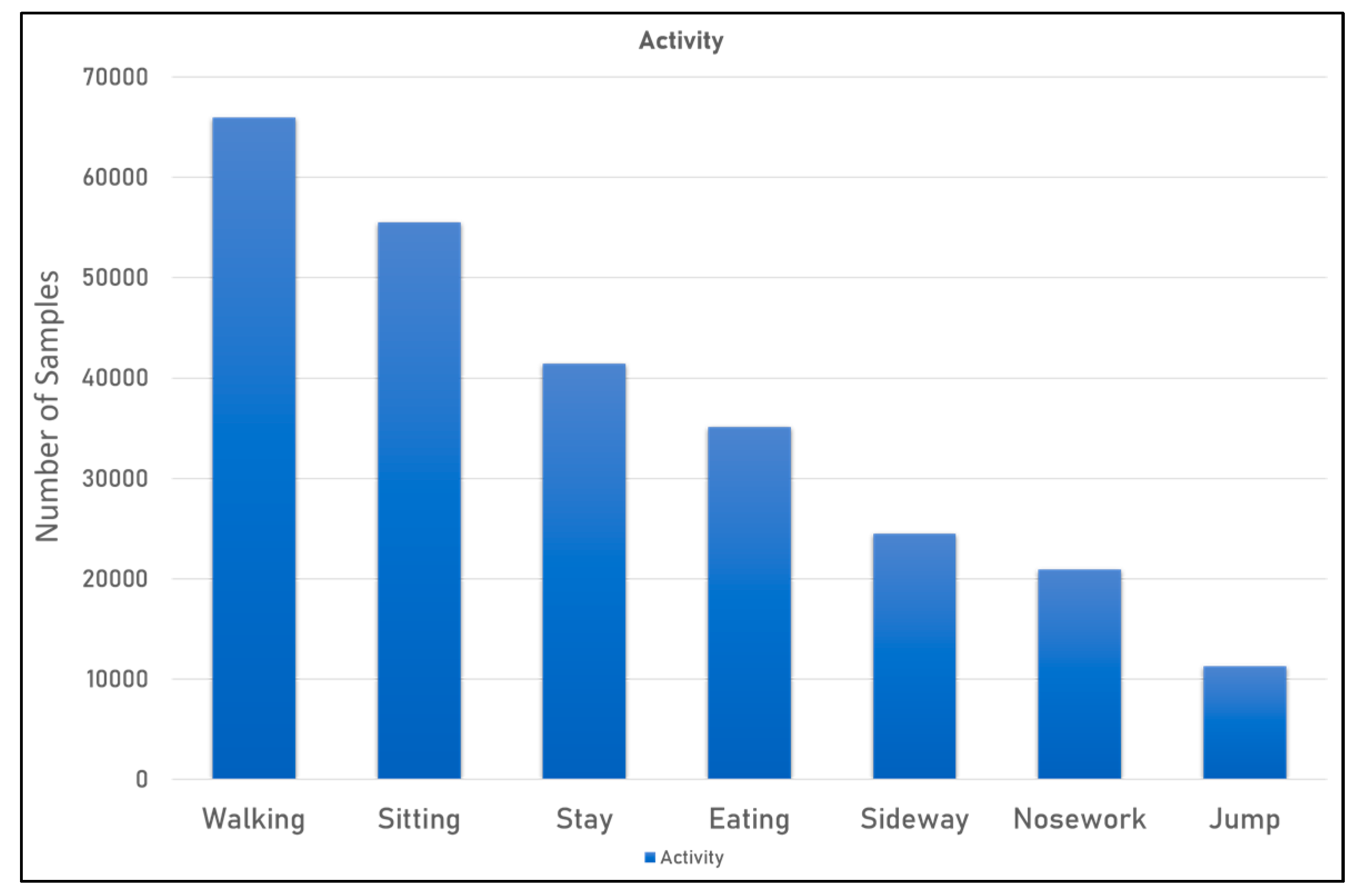

4.1. Activity Detection

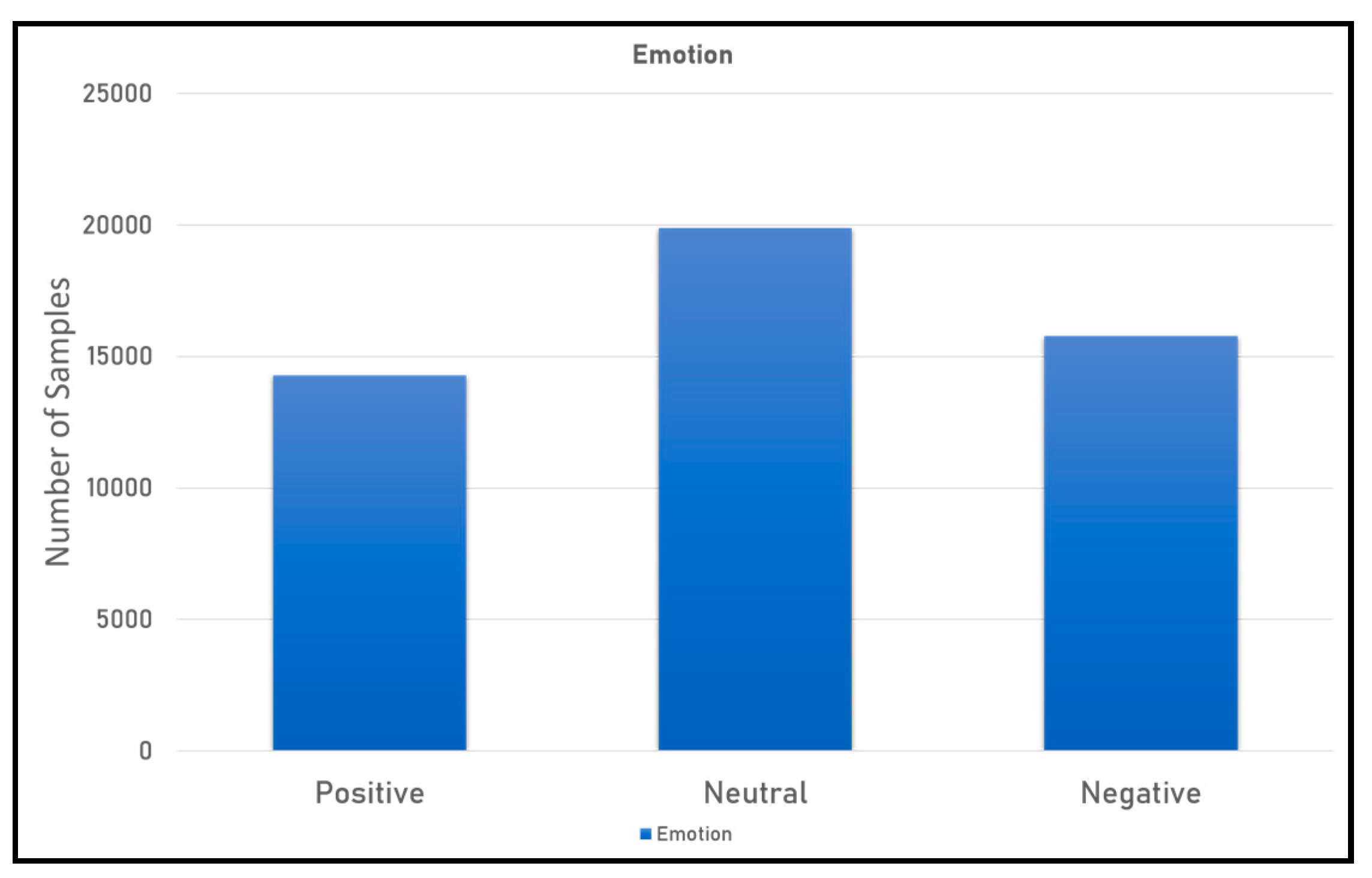

4.2. Emotion Detection

4.3. Feature Engineering

4.4. Machine Learning Algorithm and Evaluation Metrics

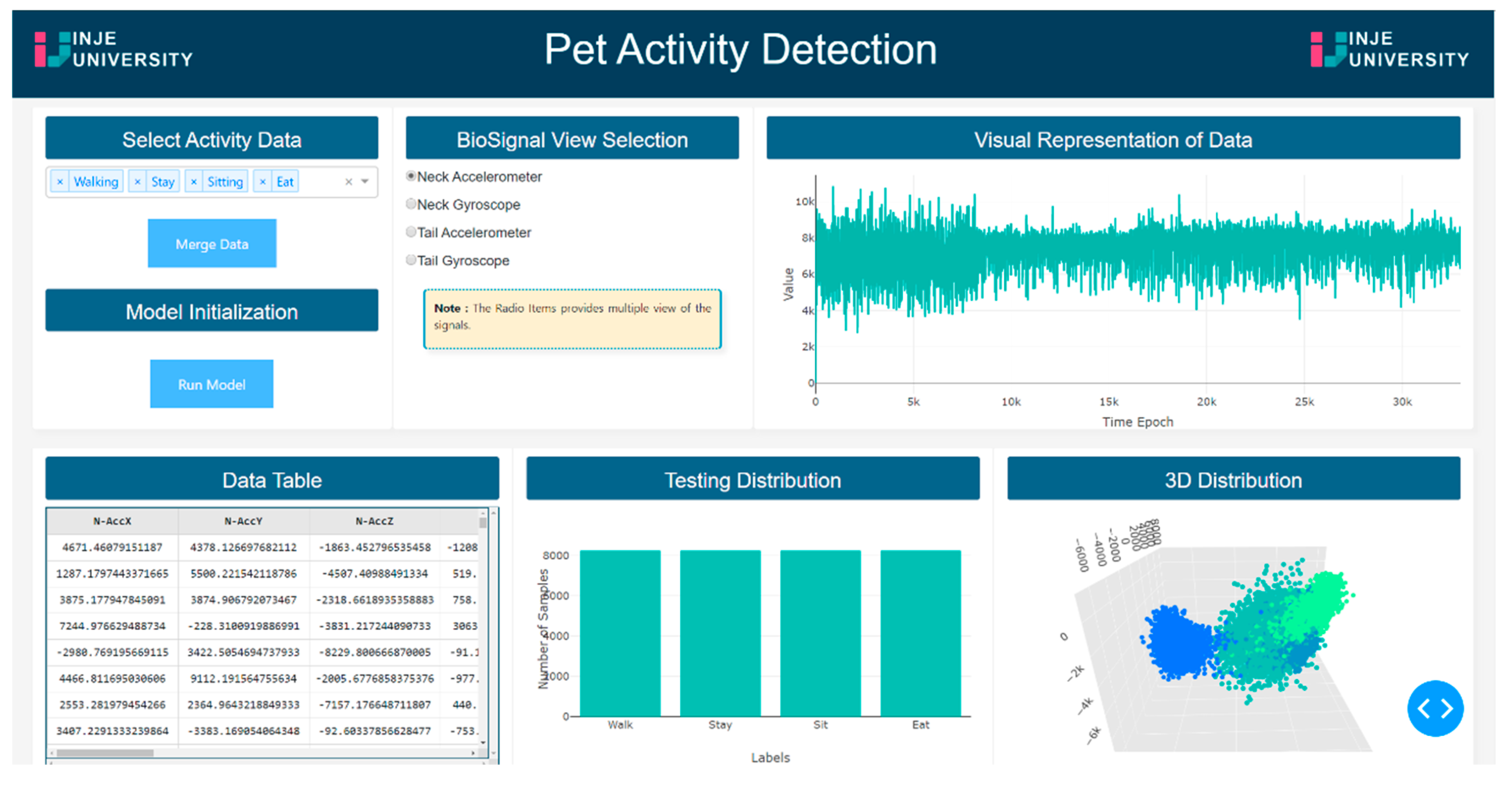

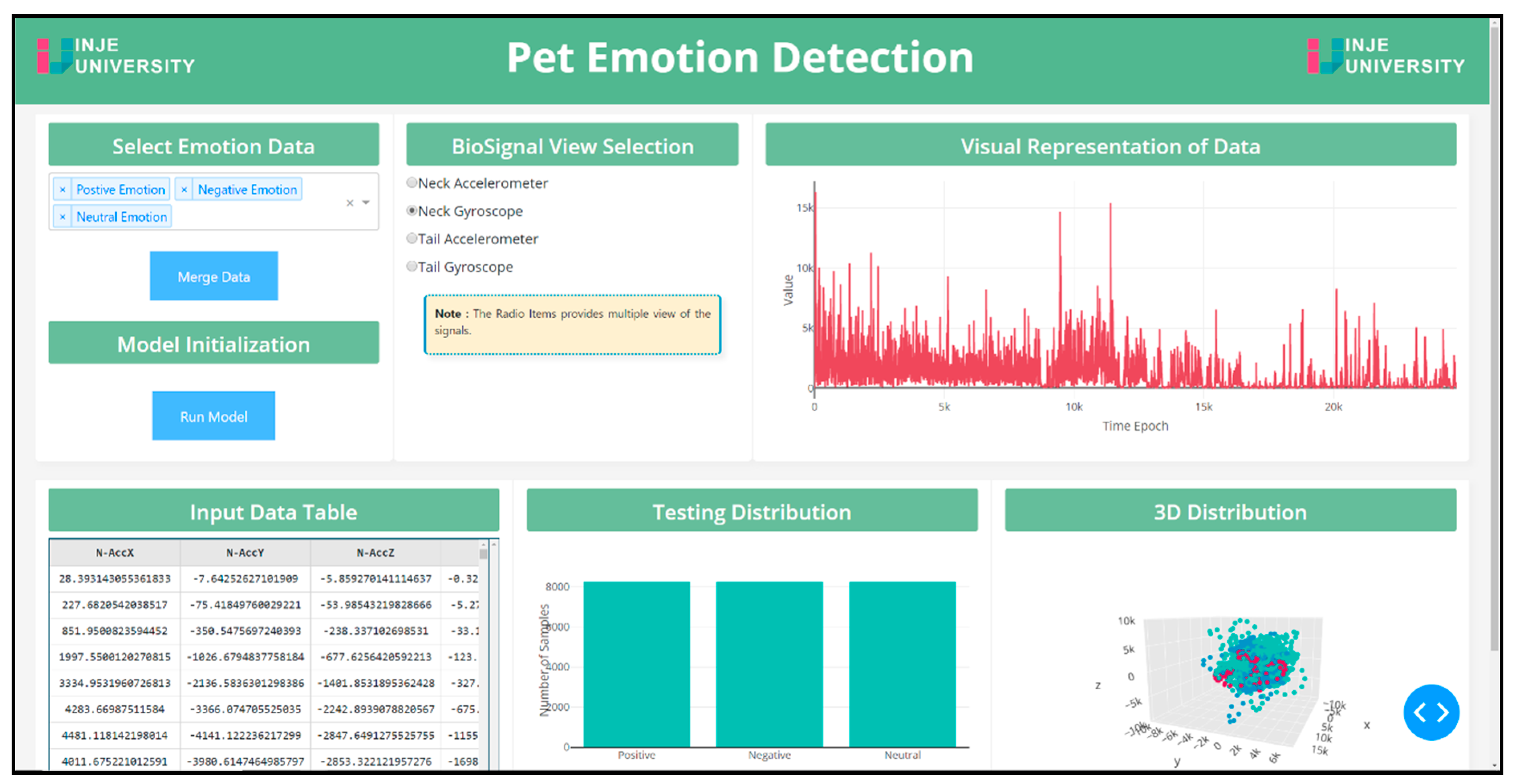

4.5. Web Application

5. Results

5.1. Activity Detection

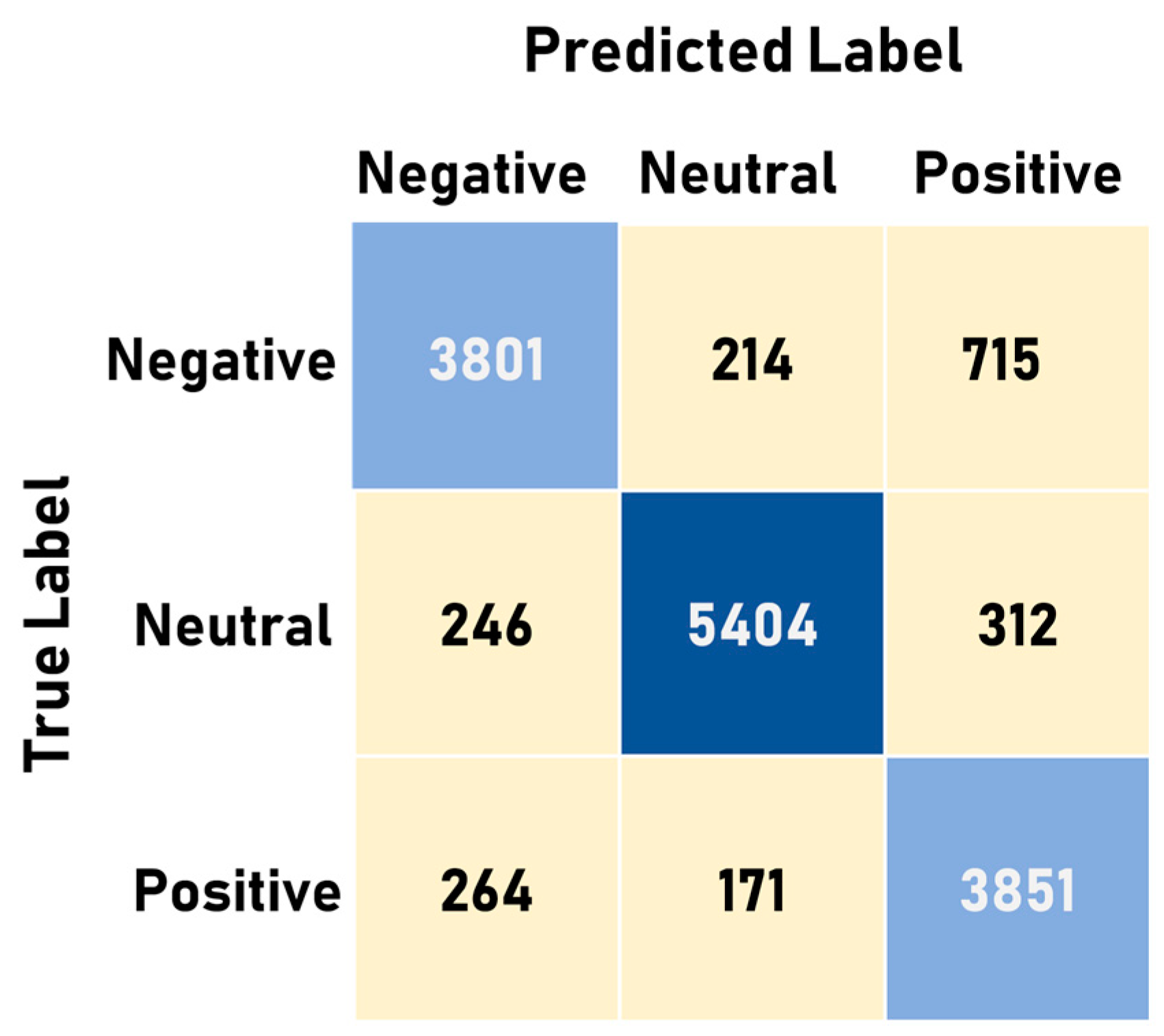

5.2. Emotion Detection

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Watanabe, S.; Izawa, M.; Kato, A.; Ropert-Coudert, Y.; Naito, Y. A new technique for monitoring the detailed behavior of terrestrial animals: A case study with the domestic cat. Appl. Anim. Behav. Sci. 2005, 94, 117–131.

- Hooker, S.K.; Biuw, M.; McConnell, B.J.; Miller, P.J.; Sparling, C.E. Bio-logging science: Logging and relaying physical and biological data using animal-attached tags. Deep Sea Res. 2007, 3, 177–182.

- Bidder, O.R.; Soresina, M.; Shepard, E.L.; Halsey, L.G.; Quintana, F.; Gómez-Laich, A.; Wilson, R.P. The need for speed: Testing acceleration for estimating animal travel rates in terrestrial dead-reckoning systems. Zoology 2012, 115, 58–64.

- Baratchi, M.; Meratnia, N.; Havinga, P.; Skidmore, A.; Toxopeus, B. Sensing solutions for collecting spatio-temporal data for wildlife monitoring applications: A review. Sensors 2013, 13, 6054–6088.

- Gravina, R.; Alinia, P.; Ghasemzadeh, H.; Fortino, G. Multi-sensor fusion in body sensor networks: State-of-the-art and research challenges. Inf. Fusion 2017, 35, 68–80.

- Fortino, G.; Galzarano, S.; Gravina, R.; Li, W. A framework for collaborative computing and multi-sensor data fusion in body sensor networks. Inf. Fusion 2015, 22, 50–70.

- Chakraborty, S.; Aich, S.; Joo, M.I.; Sain, M.; Kim, H.C. A Multichannel Convolutional Neural Network Architecture for the Detection of the State of Mind Using Physiological Signals from Wearable Devices. J. Healthc. Eng. 2019, 2019, 5397814.

- Fortino, G.; Giannantonio, R.; Gravina, R.; Kuryloski, P.; Jafari, R. Enabling effective programming and flexible management of efficient body sensor network applications. IEEE Trans. Hum. Mach. Syst. 2012, 43, 115–133.

- Fortino, G.; Parisi, D.; Pirrone, V.; Di Fatta, G. BodyCloud: A SaaS approach for community body sensor networks. Future Gener. Comput. Syst. 2014, 35, 62–79.

- Kooyman, G.L. Genesis and evolution of bio-logging devices. Natl. Polar Res. Inst. 2004, 58, 15–22.

- Yoda, K.; Naito, Y.; Sato, K.; Takahashi, A.; Nishikawa, J.; Ropert-Coudert, Y.; Kurita, M.; Le Maho, Y. A new technique for monitoring the behavior of free-ranging Adelie penguins. J. Exp. Biol. 2001, 204, 685–690.

- Wilson, R.P.; Shepard, E.L.C.; Liebsch, N. Prying into the intimate details of animal lives: Use of a daily diary on animals. Endanger. Species Res. 2008, 4, 123–137.

- Britt, W.R.; Miller, J.; Waggoner, P.; Bevly, D.M.; Hamilton, J.A., Jr. An embedded system for real-time navigation and remote command of a trained canine. Pers. Ubiquitous Comput. 2011, 15, 61–74.

- Cooper, C. How to Listen to Your Dog: The Complete Guide to Communicating with Man’s Best Friend; Atlantic Publishing Company: London, UK, 2015.

- Siniscalchi, M.; Lusito, R.; Vallortigara, G.; Quaranta, A. Seeing left-or right-asymmetric tail wagging produces different emotional responses in dogs. Curr. Biol. 2013, 23, 2279–2282.

- Mather, M.; Thayer, J.F. How heart rate variability affects emotion regulation brain networks. Curr. Opin. Behav. Sci. 2018, 19, 98–104.

- Liu, X.; Wang, Q.; Liu, D.; Wang, Y.; Zhang, Y.; Bai, O.; Sun, J. Human emotion classification based on multiple physiological signals by wearable system. Technol. Health Care 2018, 26, 459–469.

- De Seabra, J.; Rybarczyk, Y.; Batista, A.; Rybarczyk, P.; Lebret, M.C.; Vernay, D. Development of a Wearable Monitoring System for Service Dogs. 2014. Available online: https://docentes.fct.unl.pt/agb/files/service_dogs.pdf (accessed on 18 September 2019).

- Ladha, C.; Hammerla, N.; Hughes, E.; Olivier, P.; Ploetz, T. Dog’s life: Wearable activity recognition for dogs. In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Zurich, Switzerland, 8–12 September 2013; pp. 415–418.

- Den Uijl, I.; Alvarez, C.B.G.; Bartram, D.; Dror, Y.; Holland, R.; Cook, A. External validation of a collar-mounted triaxial accelerometer for second-by-second monitoring of eight behavioral states in dogs. PLoS ONE 2017, 12, e0188481.

- Massawe, E.A.; Kisangiri, M.; Kaijage, S.; Seshaiyer, P. Design and Analysis of Smart Sensing System for Animal Emotions Recognition. Int. J. Comput. Appl. 2017, 169, 8887.

- Wernimont, S.; Thompson, R.; Mickelsen, S.; Smith, S.; Alvarenga, I.; Gross, K. Use of accelerometer activity monitors to detect changes in pruritic behaviors: Interim clinical data on 6 dogs. Sensors 2018, 18, 249.

- Gerencsér, L.; Vásárhelyi, G.; Nagy, M.; Vicsek, T.; Miklósi, A. Identification of behavior in freely moving dogs (Canis familiaris) using inertial sensors. PLoS ONE 2013, 8, e77814.

- Rahman, A.; Smith, D.V.; Little, B.; Ingham, A.B.; Greenwood, P.L.; Bishop-Hurley, G.J. Cattle behavior classification from collar, halter, and ear tag sensors. Inf. Process. Agric. 2018, 5, 124–133.

- Decandia, M.; Giovanetti, V.; Molle, G.; Acciaro, M.; Mameli, M.; Cabiddu, A.; Cossu, R.; Serra, M.G.; Manca, C.; Rassu, S.P.G.; et al. The effect of different time epoch settings on the classification of sheep behavior using tri-axial accelerometry. Comput. Electron. Agric. 2018, 154, 112–119.

- Hammond, T.T.; Springthorpe, D.; Walsh, R.E.; Berg-Kirkpatrick, T. Using accelerometers to remotely and automatically characterize behavior in small animals. J. Exp. Biol. 2016, 219, 1618–1624.

- Chakravarty, P.; Cozzi, G.; Ozgul, A.; Aminian, K. A novel biomechanical approach for animal behavior recognition using accelerometers. Methods Ecol. Evol. 2019, 10, 802–814.

- Brugarolas, R.; Latif, T.; Dieffenderfer, J.; Walker, K.; Yuschak, S.; Sherman, B.L.; Roberts, D.L.; Bozkurt, A. Wearable heart rate sensor systems for wireless canine health monitoring. IEEE Sens. J. 2015, 16, 3454–3464.

- Venkatraman, S.; Long, J.D.; Pister, K.S.; Carmena, J.M. Wireless inertial sensors for monitoring animal behavior. In Proceedings of the 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Lyon, France, 23–26 August 2007; pp. 378–381.

- Hansen, B.D.; Lascelles, B.D.X.; Keene, B.W.; Adams, A.K.; Thomson, A.E. Evaluation of an accelerometer for at-home monitoring of spontaneous activity in dogs. Am. J. Vet. Res. 2007, 68, 468–475.

- Grünewälder, S.; Broekhuis, F.; Macdonald, D.W.; Wilson, A.M.; McNutt, J.W.; Shawe-Taylor, J.; Hailes, S. Movement activity based classification of animal behavior with an application to data from cheetah (Acinonyx jubatus). PLoS ONE 2012, 7, e49120.

- McClune, D.W.; Marks, N.J.; Wilson, R.P.; Houghton, J.D.; Montgomery, I.W.; McGowan, N.E.; Gormley, E.; Scantlebury, M. Tri-axial accelerometers quantify behavior in the Eurasian badger (Meles meles): Towards an automated interpretation of field data. Anim. Biotelemetry 2014, 2.

- Nazmi, N.; Abdul Rahman, M.; Yamamoto, S.I.; Ahmad, S.; Zamzuri, H.; Mazlan, S. A review of classification techniques of EMG signals during isotonic and isometric contractions. Sensors 2016, 16, 1304.

- Rosati, S.; Balestra, G.; Knaflitz, M. Comparison of Different Sets of Features for Human Activity Recognition by Wearable Sensors. Sensors 2018, 18, 4189.

- Rawassizadeh, R.; Tomitsch, M.; Nourizadeh, M.; Momeni, E.; Peery, A.; Ulanova, L.; Pazzani, M. Energy-efficient integration of continuous context sensing and prediction into smartwatches. Sensors 2015, 15, 22616–22645.

- Rehman, M.; Liew, C.; Wah, T.; Shuja, J.; Daghighi, B. Mining personal data using smartphones and wearable devices: A survey. Sensors 2015, 15, 4430–4469.

- Banaee, H.; Ahmed, M.; Loutfi, A. Data mining for wearable sensors in health monitoring systems: A review of recent trends and challenges. Sensors 2013, 13, 17472–17500.

- Castro, D.; Coral, W.; Rodriguez, C.; Cabra, J.; Colorado, J. Wearable-based human activity recognition using an IoT approach. J. Sens. Actuator Netw. 2017, 6, 28.

- Yang, X.; Lee, J.; Jung, H. Fault Diagnosis Management Model using Machine Learning. J. Inf. Commun. Converg. Eng. 2019, 17, 128–134.

- Aich, S.; Pradhan, P.; Park, J.; Sethi, N.; Vathsa, V.; Kim, H.C. A validation study of freezing of gait (FoG) detection and machine-learning-based FoG prediction using estimated gait characteristics with a wearable accelerometer. Sensors 2018, 18, 3287.

- Krawczyk, B. Learning from imbalanced data: Open challenges and future directions. Prog. Artif. Intell. 2016, 5, 221–232.

- Wu, D.; Wang, Z.; Chen, Y.; Zhao, H. Mixed-kernel based weighted extreme learning machine for inertial sensor based human activity recognition with imbalanced dataset. Neurocomputing 2016, 190, 35–49.

- M’hamed Abidine, B.; Fergani, B.; Oussalah, M.; Fergani, L. A new classification strategy for human activity recognition using cost sensitive support vector machines for imbalanced data. Kybernetes 2014, 43, 1150–1164.

- Fergani, B.; Clavier, L. Importance-weighted the imbalanced data for C-SVM classifier to human activity recognition. In Proceedings of the 2013 8th International Workshop on Systems, Signal Processing and their Applications (WoSSPA), Algeria, Zalalada, 12–15 May 2013; pp. 330–335.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Zhao, B.; Wang, Z.; Yu, Z.; Guo, B. EmotionSense: Emotion Recognition Based on Wearable Wristband. In Proceedings of the 2018 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), Guangzhou, China, 8–12 October 2018; pp. 346–355.

- Dobbins, C.; Fairclough, S. Detecting negative emotions during real-life driving via dynamically labelled physiological data. In Proceedings of the 2018 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Athens, Greece, 19–23 March 2018; pp. 830–835.

- Behoora, I.; Tucker, C.S. Machine learning classification of design team members’ body language patterns for real time emotional state detection. Des. Stud. 2015, 39, 100–127.

- Hasegawa, M.; Ohtani, N.; Ohta, M. Dogs’ body language relevant to learning achievement. Animals 2014, 4, 45–58.

| Ref. | Sensors | Location | Key Results | Limitations | Subjects |
|---|---|---|---|---|---|
| [18] | Tri-axial accelerometer and gyroscope | Back | Accelerometer data were analyzed against simultaneous video recordings of different activities and emotions. | A preliminary study of the feasibility of wearable devices for activity and emotion detection; not validated in real-life situations. | Dog |
| [19] | Tri-axial accelerometer | Collar | The accelerometer-based model classified different activities in natural settings with an accuracy close to 70%. | Classification accuracy depends entirely on the owners' recordings, which were used for annotation and are prone to unavoidable manual error. No controlled environment was prepared for the experiment. | Dog |
| [20] | Tri-axial accelerometer | Collar | Accelerometer data were used to classify different activities, and the model was externally validated to check its robustness. | Only accelerometer data were used for activity classification; emotion detection was not explored. | Dog |
| [21] | Heart rate, galvanic skin resistance, and body temperature | Collar | The proposed system would be able to recognize animal emotions based on physiological signals. | The model was not implemented with real data, so the results cannot be validated for real-life situations. | Dog and Cow |
| [22] | Tri-axial accelerometer | Collar | A model was developed to monitor pruritic behavior; the results obtained with the wearable device were reported to match veterinarian reports. | Only accelerometer data were used to predict behavior changes. | Dog |
| [23] | Tri-axial accelerometer and gyroscope | Withers | Accelerometer and gyroscope data were used to detect activity patterns, and machine learning was used to automate the system, with an accuracy of 91.3%. | Only one machine learning technique (SVM) was used to evaluate the system; other models were not explored to check its robustness. Emotion pattern detection was not explored either. | Dog |
| [24] | Tri-axial accelerometer | Collar, halter, and ear tag sensors | Behavior was monitored using accelerometer data from different sensor positions, and the system was automated with machine learning, achieving good classification accuracy. | Only accelerometer data were used for behavior detection, and only one machine learning model was used to evaluate the system. | Sheep |
| [25] | Tri-axial accelerometer | Back | Acceleration data were used to detect the behavior patterns of chipmunk species, and machine learning techniques were used to automate the system. | The model was developed using only two chipmunk species, which limits its validation, and the system used only acceleration data to detect patterns. | Chipmunk |
| [26] | Tri-axial accelerometer | Collar | Acceleration data were used to detect meerkat behaviors, and a hybrid technique (biomechanical principles combined with machine learning) was used for classification and performed well. | Only accelerometer data were used to develop the model, and its performance was not compared with others owing to the lack of a state-of-the-art model. | Meerkat |
| [27] | Tri-axial accelerometer | Back | Acceleration and GPS data were used to detect the behavioral modes of vultures, and machine learning techniques were used to classify the modes. | Such analysis is challenging because vultures are free-ranging animals, which makes real-time validation of the model difficult. | Vulture |
| [28] | ECG, PPG, Inertial Measurement Unit (IMU) | Back | The physiological signals collected with these sensors help detect the dog's emotional response; a strong correlation was found between the physiological signals and emotional state. | No information was provided on tail-movement behavior in relation to the emotional response, and no machine learning was used to automate the system. | Dog |
| [29] | Tri-axial accelerometer | Back | Acceleration data were used to develop an algorithm that detects the activity patterns of rats and also provides information about neural behavior that can help detect emotions. | Only acceleration data were used to detect behavior, and performance comparison across different machine learning algorithms was not studied. | Rat |
| [30] | Tri-axial accelerometer | Collar, vest, and forelimb stocking locations | Acceleration data were used to detect activities, and the best sensor locations were also analyzed. | No machine learning techniques were used to automate the system, and the dog's emotional state was not discussed. | Dog |
| [31] | GPS radio collar, bi-axial accelerometer | Collar | An algorithm based on acceleration data was used to detect cheetah activities, and the activities were classified using machine learning techniques. | Only acceleration data were used to detect activities; emotional behavior was not studied. | Cheetah |
| [32] | Tri-axial accelerometer | Neck | Acceleration data were used to detect different activities, and classification was performed using a machine learning technique. | Only acceleration data and one conventional machine learning technique were used, and performance across different machine learning algorithms was not compared. | Eurasian badger |

| Serial | Size | Name | Breed | Age (years) | Gender |
|---|---|---|---|---|---|
| 1 | Large | Mihokeu | Border Collie | 2 | Male |
| 2 | Large | Ringo | Border Collie | 6 | Female |
| 3 | Small | Deogu | French Bulldog | 6 | Male |
| 4 | Small | Inter | Shih Tzu | 5 | Male |
| 5 | Medium | Barley | Golden Retriever | 3 | Male |
| 6 | Large | Cannes | Cane Corso | 4 | Male |
| 7 | Large | Sohee | Rottweiler | 2 | Female |
| 8 | Large | Saaldog | Dalmatian | 1 | Male |
| 9 | Small | Choi | Beagle | 5 | Female |
| 10 | Medium | Simba | Rhodesian Ridgeback | 7 | Male |

| Feature | Equation | Description |
|---|---|---|
| Mean | | The mean of the signal, calculated for each subject over all samples in each fold (i.e., 33 samples). |
| Standard Deviation | | The standard deviation of the signal, calculated for each subject over all samples in each fold (i.e., 33 samples). |
| Median Absolute Deviation | median(\|X - median(X)\|) | The median absolute deviation, calculated for each subject over all samples in each fold (i.e., 33 samples). |
| Minimum | min(X) | The minimum of the signal, calculated for each subject over all samples in each fold (i.e., 33 samples). |
| Maximum | max(X) | The maximum of the signal, calculated for each subject over all samples in each fold (i.e., 33 samples). |
| Energy Measure | | The energy measure of the signal, calculated for each subject over all samples in each fold (i.e., 33 samples). |
| Interquartile Range | | The interquartile range, calculated for each subject with each 33-sample fold treated as the complete data set. |
| Signal Magnitude Area | | The signal magnitude area, calculated over all axes of the accelerometer and gyroscope for each subject, using the samples in each fold. |
| Skewness | | The skewness of all samples in a fold for a particular subject, based on the Fisher-Pearson standardized moment coefficient. |
| Kurtosis | | The kurtosis (peakedness), calculated for individual signals. |
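The statistical features in the table above can be illustrated with a short, dependency-free Python sketch. It is not the authors' implementation: because the original equations are not recoverable, the non-overlapping 33-sample windowing, the population standard deviation, the energy measure (taken here as the mean of squared samples), the signal magnitude area (the per-sample sum of absolute axis values, averaged over the window), and excess kurtosis are all assumptions. Skewness uses the Fisher-Pearson coefficient named in the table, g1 = m3 / m2^(3/2), where m_k is the k-th central moment.

```python
import math
import statistics
from typing import Dict, List, Sequence

WINDOW = 33  # samples per segment (a "fold" in the table above)


def segment(signal: Sequence[float], window: int = WINDOW) -> List[List[float]]:
    """Split a 1-D signal into non-overlapping windows; a trailing partial window is dropped (assumption)."""
    return [list(signal[i:i + window]) for i in range(0, len(signal) - window + 1, window)]


def _percentile(sorted_x: List[float], q: float) -> float:
    """Percentile by linear interpolation between the two nearest ranks."""
    pos = q * (len(sorted_x) - 1)
    lo, hi = math.floor(pos), math.ceil(pos)
    return sorted_x[lo] + (sorted_x[hi] - sorted_x[lo]) * (pos - lo)


def stat_features(x: Sequence[float]) -> Dict[str, float]:
    """Per-window statistical features for one sensor axis."""
    n = len(x)
    mu = statistics.fmean(x)
    med = statistics.median(x)
    s = sorted(x)
    m2 = sum((v - mu) ** 2 for v in x) / n  # central moments
    m3 = sum((v - mu) ** 3 for v in x) / n
    m4 = sum((v - mu) ** 4 for v in x) / n
    return {
        "mean": mu,
        "std": statistics.pstdev(x),  # population SD (assumption)
        "mad": statistics.median(abs(v - med) for v in x),
        "min": s[0],
        "max": s[-1],
        "energy": sum(v * v for v in x) / n,  # mean of squares (assumption)
        "iqr": _percentile(s, 0.75) - _percentile(s, 0.25),
        # Fisher-Pearson coefficient g1; undefined for a constant window
        "skewness": m3 / m2 ** 1.5 if m2 > 0 else math.nan,
        # excess (Fisher) kurtosis, i.e. 0 for a normal distribution (assumption)
        "kurtosis": m4 / m2 ** 2 - 3.0 if m2 > 0 else math.nan,
    }


def signal_magnitude_area(axes: Sequence[Sequence[float]]) -> float:
    """Mean over samples of the summed absolute values across all axes (assumed SMA definition)."""
    n = len(axes[0])
    return sum(sum(abs(axis[i]) for axis in axes) for i in range(n)) / n
```

Under these assumptions, each function would be applied to every sensor axis of every window, and the results concatenated into one feature vector per window.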

| Feature | Description |
|---|---|
| Number of Peaks | Number of peaks for a subject in a specific data fold of 33 samples |
| Peak Average Amplitude | Average amplitude of the peaks for a subject in a specific data fold of 33 samples |
| Peak Value Difference Mean | Mean difference in amplitude between consecutive detected peaks for a subject in a specific data fold of 33 samples |
| Peak Sample Difference Mean | Mean time difference between consecutive detected peaks for a subject in a specific data fold of 33 samples |
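The peak features can be sketched in the same way. The peak detector and the exact conventions used in the study are not stated, so this Python sketch makes hypothetical choices: a peak is a strict local maximum (a sample larger than both neighbors), amplitude differences between consecutive peaks are taken in absolute value, peak spacing is measured in samples, and a mean over an empty list is reported as 0.0.

```python
from typing import Dict, List, Sequence


def find_peaks_simple(x: Sequence[float]) -> List[int]:
    """Indices of strict local maxima; a simplified stand-in for the study's (unstated) detector."""
    return [i for i in range(1, len(x) - 1) if x[i - 1] < x[i] > x[i + 1]]


def _mean(values: List[float]) -> float:
    """Arithmetic mean, or 0.0 when there is nothing to average (assumption)."""
    return sum(values) / len(values) if values else 0.0


def peak_features(x: Sequence[float]) -> Dict[str, float]:
    """Peak-based features for one 33-sample window of one sensor axis."""
    idx = find_peaks_simple(x)
    amps = [x[i] for i in idx]
    return {
        "num_peaks": len(idx),
        "peak_avg_amplitude": _mean(amps),
        # mean absolute amplitude change between consecutive peaks
        "peak_value_diff_mean": _mean([abs(b - a) for a, b in zip(amps, amps[1:])]),
        # mean spacing, in samples, between consecutive peaks
        "peak_sample_diff_mean": _mean([b - a for a, b in zip(idx, idx[1:])]),
    }
```

Dividing the sample spacing by the sensor's sampling rate would convert it to a time difference in seconds.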

| Classifier | Specifications |
|---|---|
| Random Forest | n_estimators = 500, criterion = 'gini', max_depth = 8, min_samples_split = 8, min_samples_leaf = 10 |
| Support Vector Machine | kernel = 'rbf', degree = 3, gamma = 'auto_deprecated', C = 1.0, tol = 0.001, cache_size = 200 |
| K-Nearest Neighbors | n_neighbors = 50, weights = 'uniform', algorithm = 'auto', leaf_size = 40, p = 2, metric = 'minkowski' |
| Naïve Bayes | Gaussian |
| Artificial Neural Network (Multi-Layer Perceptron) | Layers: [64, 128, 256, 32, 16, 7], Loss: Categorical Cross-Entropy, Optimizer: AdaGrad |

| Classifier | Specifications |
|---|---|
| Random Forest | n_estimators = 200, criterion = 'gini', max_depth = 4, min_samples_split = 4, min_samples_leaf = 5 |
| Support Vector Machine | kernel = 'rbf', degree = 2, gamma = 'auto_deprecated', C = 1.0, tol = 0.001, cache_size = 200 |
| K-Nearest Neighbors | n_neighbors = 10, weights = 'uniform', algorithm = 'auto', leaf_size = 40, p = 2, metric = 'minkowski' |
| Naïve Bayes | Gaussian |
| Artificial Neural Network (Multi-Layer Perceptron) | Layers: [64, 128, 256, 512, 32, 3], Loss: Categorical Cross-Entropy, Optimizer: Stochastic Gradient Descent |
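To make the K-nearest-neighbor settings in the two classifier tables concrete (metric = 'minkowski' with p = 2, weights = 'uniform', and n_neighbors = 50 or 10), the brute-force Python sketch below shows what they compute: the Minkowski distance with p = 2 is the Euclidean distance, and uniform weights mean each of the k nearest training samples casts one equal vote. It is an illustration, not the scikit-learn implementation. The algorithm and leaf_size settings mainly affect search speed and are not modeled, and vote ties here go to the label that appears first among the distance-sorted neighbors, which may differ from scikit-learn.

```python
from collections import Counter
from typing import Sequence


def minkowski(a: Sequence[float], b: Sequence[float], p: float = 2.0) -> float:
    """Minkowski distance; p = 2 gives the Euclidean distance, p = 1 the Manhattan distance."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)


def knn_predict(train_X: Sequence[Sequence[float]], train_y: Sequence[int],
                query: Sequence[float], n_neighbors: int = 10, p: float = 2.0) -> int:
    """Majority vote among the n_neighbors closest training samples ('uniform' weights)."""
    # Distance from the query to every training sample, nearest first.
    ranked = sorted(
        ((minkowski(x, query, p), label) for x, label in zip(train_X, train_y)),
        key=lambda pair: pair[0],
    )
    # Each of the k nearest neighbors contributes exactly one vote.
    votes = Counter(label for _, label in ranked[:n_neighbors])
    return votes.most_common(1)[0][0]
```

With uniform weights, a larger n_neighbors (50 in the first table versus 10 in the second) averages over more neighbors and gives smoother, less local decision boundaries.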

| Class | Weight |
|---|---|
| Walking (0) | 1.0 |
| Sitting (1) | 1.0 |
| Stay (2) | 1.0 |
| Eating (3) | 1.012589412 |
| Sideway (4) | 1.156774801 |
| Nosework (5) | 1.203320 |
| Jump (6) | 1.216978244 |
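One common way such class weights enter training is as per-sample multipliers on the loss, so that errors on classes with larger weights (here sideway, nosework, and jump) cost more. How the study derived these weights and how its framework applied them are not stated here, so the Python sketch below is an assumed scheme for illustration only: a weighted categorical cross-entropy, averaged over samples, using the tabulated weights.

```python
import math
from typing import Dict, Sequence

# Class weights copied from the table above (label -> weight).
CLASS_WEIGHTS: Dict[int, float] = {
    0: 1.0, 1: 1.0, 2: 1.0, 3: 1.012589412,
    4: 1.156774801, 5: 1.203320, 6: 1.216978244,
}


def weighted_cross_entropy(probs: Sequence[Sequence[float]], labels: Sequence[int],
                           weights: Dict[int, float] = CLASS_WEIGHTS) -> float:
    """Mean categorical cross-entropy, each sample's loss scaled by its true class's weight.

    probs[i] is a predicted probability distribution over the 7 classes;
    labels[i] is the true class index of sample i.
    """
    eps = 1e-12  # avoids log(0) for a zero predicted probability
    losses = [-weights[y] * math.log(max(p[y], eps)) for p, y in zip(probs, labels)]
    return sum(losses) / len(losses)
```

For the same predicted probability on the true class, a "Jump" sample therefore contributes about 22% more loss than a "Walking" sample.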

| Metric | Random Forest | SVM | KNN | Naïve Bayes | ANN |
|---|---|---|---|---|---|
| Accuracy | 0.9215 | 0.8452 | 0.8672 | 0.8844 | 0.9658 |
| Average Recall | 0.9136 | 0.8242 | 0.8425 | 0.8667 | 0.9469 |
| Average Precision | 0.9022 | 0.8222 | 0.8556 | 0.8533 | 0.9187 |
| Average F1 Score | 0.9089 | 0.8281 | 0.8502 | 0.8621 | 0.9365 |

| Metric | Random Forest | SVM | KNN | Naïve Bayes | ANN |
|---|---|---|---|---|---|
| Accuracy | 0.9675 | 0.7852 | 0.8123 | 0.8996 | 0.9287 |
| Average Recall | 0.9436 | 0.7765 | 0.8255 | 0.8874 | 0.9134 |
| Average Precision | 0.9291 | 0.8523 | 0.8321 | 0.90 | 0.9469 |
| Average F1 Score | 0.9326 | 0.8126 | 0.8226 | 0.8942 | 0.9289 |
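The results tables report accuracy together with average recall, precision, and F1 score. The averaging scheme is not stated in this section; the Python sketch below assumes macro averages (unweighted means of the per-class scores) computed from a confusion matrix. Under that assumption the average F1 is the mean of the per-class F1 scores, which in general is not the harmonic mean of the average precision and average recall.

```python
from typing import Dict, Sequence


def macro_metrics(cm: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1 from a confusion matrix.

    cm[i][j] counts samples whose true class is i and whose predicted class is j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    precisions, recalls, f1s = [], [], []
    for c in range(k):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(k))  # column sum: predicted as c
        actual = sum(cm[c])  # row sum: truly c
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "accuracy": correct / total,
        "avg_precision": sum(precisions) / k,
        "avg_recall": sum(recalls) / k,
        "avg_f1": sum(f1s) / k,
    }
```

For example, `macro_metrics([[8, 2], [1, 9]])` gives an accuracy of 0.85 and an average recall of 0.85, while the average precision is (8/9 + 9/11) / 2.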

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Aich, S.; Chakraborty, S.; Sim, J.-S.; Jang, D.-J.; Kim, H.-C. The Design of an Automated System for the Analysis of the Activity and Emotional Patterns of Dogs with Wearable Sensors Using Machine Learning. Appl. Sci. 2019, 9, 4938. https://doi.org/10.3390/app9224938
